Applies to: Word for Microsoft 365, Word 2021, Word 2019, Word 2016, Word 2013, Word 2010

The Insert key on your keyboard allows you to replace text as you type. You can set up the function in Word Options.

Turn on Overtype mode

When you edit text in Overtype mode, you type over text to the right of the insertion point.

- In Word, choose File > Options.

- In the Word Options dialog box, choose Advanced.

- Under Editing options, do one of the following:

  - To use the Insert key to control Overtype mode, select the Use Insert key to control overtype check box.

  - To keep Overtype mode always enabled, select the Use overtype mode check box.



Contents

- 1 How do you type over an existing text in Word?

- 2 How do you type over a document?

- 3 How do I toggle between insert and overtype mode?

- 4 How do you type the symbol for over?

- 5 How do you change overwrite text?

- 6 What do you mean by over typing text?

- 7 How do you put a symbol above a letter in Word?

- 8 How do I insert text without box in Word?

- 9 How do I turn on the Insert key on my keyboard?

- 10 How do you type plus or minus on a keyboard?

- 11 How do I put a line over text in Word 2016?

- 12 What is colon key?

- 13 Where is the INS key?

- 14 What is insertion mode?

- 15 Why does text disappear in Word?

- 16 How do you put a line above and below text in Word?

- 17 How do I type the dots above a letter?

- 18 How do we wrap text around the object?

- 19 How do you type over on a Mac?

- 20 How do you turn on inserts?

How do you type over an existing text in Word?

Word Options

- Click “File,” “Options” and then the “Advanced” tab.

- Check “Use Overtype Mode” in the Editing Options section.

- Click “OK” to enable Overtype and close the Word Options window.

- Click anywhere in the document and start typing to overwrite text to the right of the cursor.

How do you type over a document?

If you have the free Adobe Acrobat Reader app installed, you can type on PDFs on your Android device.

- Open the Adobe Acrobat reader app and select Files in the bottom toolbar.

- Find the PDF you want to type on and tap to select it.

How do I toggle between insert and overtype mode?

One way to switch between insert mode and overtype mode is to double-click on the OVR letters on the status bar. Overtype mode becomes active, the OVR letters become bold, and you can proceed to make any edits you desire.

How do you type the symbol for over?

These keyboard shortcuts will help you display text more accurately in your business documents.

- Open a document in Microsoft Word.

- Press “Ctrl-Shift” and the caret (“^”) key, and then the letter, to insert a circumflex accent.

- Press “Ctrl-Shift” and the tilde (“~”) key, and then the letter, to insert a tilde accent.

How do you change overwrite text?

Press the “Ins” key to toggle overtype mode off. Depending on your keyboard model, this key may also be labeled “Insert.” If you simply want to disable overtype mode but keep the ability to toggle it back on, you are done.

What do you mean by over typing text?

overtype. (ˌəʊvəˈtaɪp) vb (tr) to replace (typed text) by typing new text in the same place.

How do you put a symbol above a letter in Word?

You’ll use the Ctrl or Shift key along with the accent key on your keyboard, followed by a quick press of the letter. For example, to get the á character, you’d press Ctrl+’ (apostrophe), release those keys, and then quickly press the A key.

How do I insert text without box in Word?

Removing the Box from a Text Box

- Either click on the border of the text box or position the insertion point within the text box.

- Select the Text Box option from the Format menu.

- Click on the Colors and Lines tab, if necessary.

- In the Color drop-down list, select No Line.

- Click on OK.

How do I turn on the Insert key on my keyboard?

How to Enable the Insert key in Microsoft Word:

- Go to File > Word Options > Advanced > Editing options.

- Check the box that says “Use the Insert key to control overtype mode.”

- The Insert key now toggles Overtype mode.

How do you type plus or minus on a keyboard?

Using a shortcut key: Microsoft Word offers predefined shortcuts for some symbols, such as the plus-minus sign and the minus-plus sign:

- Type 00b1 or 00B1 (uppercase or lowercase does not matter) and immediately press Alt+X to insert the plus-minus symbol: ±

- Type 2213 and press Alt+X to insert the minus-plus symbol: ∓
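The values typed before Alt+X are Unicode code points written in hexadecimal. As a small illustration outside of Word, the following Python sketch maps the same codes to the same characters. The helper name `char_from_hex` is made up for this example and is not part of Word or any library.

```python
import unicodedata


def char_from_hex(code: str) -> str:
    """Return the character for a hexadecimal Unicode code point such as '00B1'."""
    # int(..., 16) accepts upper- and lowercase hex digits alike
    return chr(int(code, 16))


for code in ("00b1", "00B1", "2213"):
    ch = char_from_hex(code)
    # Print the code, the resulting character, and its official Unicode name
    print(code, ch, unicodedata.name(ch))
```

Because the hexadecimal digits are case-insensitive, 00b1 and 00B1 produce the same character, which matches the behavior described for Alt+X.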

How do I put a line over text in Word 2016?

Type the text you want to overline into your Word document and make sure the “Home” tab is active on the ribbon bar. Click the down arrow on the “Borders” button in the “Paragraph” section of the “Home” tab. Select “Top Border” from the drop-down menu.

What is colon key?

A colon is a symbol that resembles two vertically stacked periods ( : ) and is found on the same key as the semicolon on standard United States keyboards.

Where is the INS key?

Sometimes displayed as Ins, the Insert key is a key on most computer keyboards near or next to the backspace key. The Insert key toggles how text is inserted by inserting or adding text in front of other text or overwriting text after the cursor as you type.

What is insertion mode?

Updated: 08/02/2020 by Computer Hope. Insert mode is a mechanism that allows users to insert text without overwriting other text. This mode, if it’s supported, is entered and exited by pressing the Insert key on a keyboard.

Why does text disappear in Word?

Turn off overtype mode: Click File > Options. Click Advanced. Under Editing options, clear both the Use the Insert key to control overtype mode and the Use overtype mode check boxes.

How do you put a line above and below text in Word?

To insert a line in Word above and / or below a paragraph using the Borders button:

- Select the paragraph(s) to which you want to add a line.

- Click the Home tab in the Ribbon.

- Click Borders in the Paragraph group. A drop-down menu appears.

- Select the line you want to use.

How do I type the dots above a letter?

Hold down the “Ctrl” and “Shift” keys, and then press the colon key. Release the keys, and then type a vowel in upper or lower case. Use Office’s Unicode shortcut combination to put an umlaut over a non-vowel character.

How do we wrap text around the object?

Wrap text around simple objects

- To display the Text Wrap panel, choose Window > Text Wrap.

- Using the Selection tool or Direct Selection tool , select the object you want to wrap text around.

- In the Text Wrap panel, click the desired wrap shape:

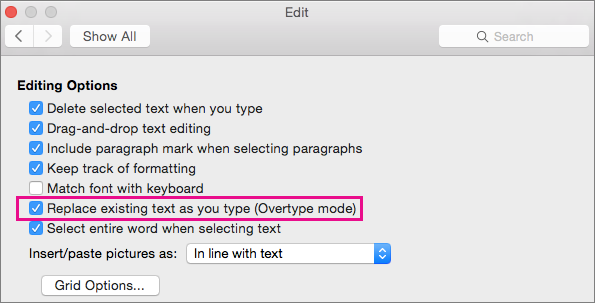

How do you type over on a Mac?

On the Word menu, click Preferences. Under Authoring and Proofing Tools, click Edit, and then in the Edit dialog box, select Replace existing text as you type (Overtype mode). Note: To turn off overtype mode, clear the check box next to Replace existing text as you type (Overtype mode).

How do you turn on inserts?

Enabling overtype mode in the options

- In Microsoft Word 2010, 2013, and later, click File and then Options.

- In the Word Options, click Advanced.

- Check the box for Use the Insert key to control overtype mode to allow the Insert key to control the Overtype mode.

- Click Ok.

Using Overtype and Insert Modes in Microsoft Word

What’s the difference? We’ll explain

Updated on November 4, 2019


Microsoft Word has two text entry modes: Insert and Overtype. These modes each describe how text behaves as it’s added to a document with pre-existing text. Here’s how these two modes work and how to use them.

Instructions in this article apply to Word for Microsoft 365, Word 2019, Word 2016, Word 2013, and Word 2010.

Insert Mode Definition

While in Insert mode, the new text that is added to a document moves the current text forward, to the right of the cursor, to accommodate the new text as it’s typed or pasted into the document. It’s the default mode for text entry in Microsoft Word.

Overtype Mode Definition

In Overtype mode, when text is added to a document where there is existing text, the existing text is replaced by the newly added text as it’s entered, character by character.
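The difference between the two modes can be sketched on a plain string. This is a simplified model for illustration, not how Word is implemented; the function name `type_text` and its parameters are assumptions made for this example.

```python
def type_text(buffer: str, cursor: int, new_text: str, overtype: bool = False) -> str:
    """Return the buffer after typing new_text at the cursor position.

    Insert mode pushes the existing text to the right.
    Overtype mode replaces one existing character per typed character.
    """
    if overtype:
        # Skip as many existing characters as were typed
        return buffer[:cursor] + new_text + buffer[cursor + len(new_text):]
    # Keep everything after the cursor and shift it right
    return buffer[:cursor] + new_text + buffer[cursor:]


line = "Hello world"
print(type_text(line, 6, "brave "))                # Insert mode
print(type_text(line, 6, "there", overtype=True))  # Overtype mode
```

In Insert mode the word "world" survives and moves right; in Overtype mode it is replaced character by character.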

If you want to switch from the default Insert mode to Overtype mode so you can type over the current text, there are two ways to do this. The simplest is to press the Insert key, which toggles Overtype mode on and off once the Insert key has been set to control it. The other is to turn Overtype mode on directly in Word Options.

To change the settings for Overtype mode:

- Go to File > Options.

- In the Word Options dialog box, choose Advanced.

- In the Editing options section, choose one of the following:

  - To use the Insert key to control Overtype mode, select the Use Insert key to control overtype check box.

  - To permanently enable Overtype mode, select the Use overtype mode check box.

- Select OK.


I hope that this is the correct place to ask this question.

I have some PDFs that have been converted into Word and I am now trying to tidy them up so that they match the original as much as possible. These PDFs are from scans from hard copy documents that were originally created via a typewriter. As such there are areas where text has been written over the top of other text. For example there might be a row of dots for a form type layout and then the value typed over the top. Or in some cases existing options have been covered with x’s to indicate that it is not used.

I have looked at underlining but the styles available aren’t that similar and are too close to the text bottom. I also tried to create a row of dots on the line below and then adjusted the spacing which looks much more like the original but then the dots are too far from the text.

These also don’t cover how to overlay existing text in the case of the x’s that are in use.

Any thoughts on how to accomplish this would be appreciated. I am using Word 2007 and there is no scope to use anything else.

Thanks

Type over text in Word 2016 for Mac

When you are editing text in overtype mode in a Word document, typing new characters replaces any existing characters to the right side of the insertion point.

Turn on overtype mode

- On the Word menu, click Preferences.

- Under Authoring and Proofing Tools, click Edit, and then in the Edit dialog box, select Replace existing text as you type (Overtype mode).

Note: To turn off overtype mode, clear the check box next to Replace existing text as you type (Overtype mode).

Type over existing text

- In your document, click where you want to type over the existing text and begin typing. The new characters you type replace the characters to the right.


Overtype is a feature of Microsoft Word that allows you to type over existing words, rather than just inserting characters behind them. This feature is especially helpful when filling in business forms, because example text is overwritten as you type. In many applications, this feature is enabled simply by pressing the “Insert” key on the keyboard, but Microsoft disables this option by default in Word 2007. However, you can enable the Overtype mode through the Word Options dialog and also choose to re-enable the “Insert” key functionality. You can also place a button on the Status Bar to toggle Overtype mode via your mouse.

Word Options

- Click “File,” “Options” and then the “Advanced” tab.

- Check “Use Overtype Mode” in the Editing Options section. Optionally, check “Use the Insert Key to Control Overtype Mode” if you want quick access to this feature via the keyboard.

- Click “OK” to enable Overtype and close the Word Options window.

- Click anywhere in the document and start typing to overwrite text to the right of the cursor. If you enabled the “Insert” key, press it to toggle Overtype mode off when you no longer need it.

Status Bar

- Right-click the Status Bar to bring up the Customize Status Bar menu.

- Click “Overtype” to add a check mark next to the selection.

- Click “Insert” on the Status Bar to enable Overtype Mode or “Overtype” to turn the feature off. The button name indicates the current typing mode.

This article shows you how to extract the meaningful bits of information from raw text and how to identify their roles. Let’s first look into why identifying roles is important.

Take 40% off Getting Started with Natural Language Processing by entering fcckochmar into the discount code box at checkout at manning.com.

Understanding word types

The first fact to notice is that there’s a conceptual difference between the bits of the expression like “[Harry] [met] [Sally]”: “Harry” and “Sally” both refer to people participating in the event, and “met” represents an action. When we humans read text like this, we subconsciously determine the roles each word or expression plays along those lines: to us, words like “Harry” and “Sally” can only represent participants of an action but can’t denote an action itself, and words like “met” can only denote an action. This helps us get at the essence of the message quickly: we read “Harry met Sally” and we understand [Harry: WHO] [met: DID_WHAT] [Sally: WHOM].

This recognition of word types has two major effects: the first effect is that the straightforward unambiguous use of words in their traditional functions helps us interpret the message. Funnily enough, this applies even when we don’t know the meaning of the words. Our expectations about how words are combined in sentences and what roles they play are strong, and when we don’t know what a word means such expectations readily suggest what it might mean: e.g., we might not be able to exactly pin it down, but we can still say that an unknown word means some sort of an object or some sort of an action. This “guessing game” is familiar to anyone who has ever tried learning a foreign language and had to interpret a few unknown words based on other, familiar words in the context. Even if you are a native speaker of English and never tried learning a different language, you can still try playing a guessing game, for example, with nonsensical poetry. Here’s an excerpt from “Jabberwocky”, a famous nonsensical poem by Lewis Carroll:[1]

Figure 1. An example of text where the word meaning can only be guessed

Some of the words here are familiar to anyone, but what do “Jabberwock”, “Bandersnatch” and “frumious” mean? It’s impossible to give a precise definition for any of them because these words don’t exist in English or any other language, and their meaning is anybody’s guess. One can say with high certainty that “Jabberwock” and “Bandersnatch” are some sort of creatures, and “frumious” is some sort of quality.[2] How do we make such guesses? You might notice that the context for these words gives us some clues: for example, we know what “beware” means. It’s an action, and as an action it requires some participants: one doesn’t normally “beware”, one needs to beware of someone or something. We expect to see this someone or something, and here comes “Jabberwock”. Another clue is given away by the word “the” which normally attaches itself to objects (like “the car”) or creatures (like “the dog”), and we arrive at an interpretation of “Jabberwock” and “Bandersnatch” being creatures. Finally, in “the frumious Bandersnatch” the only possible role for “frumious” is some quality because this is how it typically works in language: e.g. “the red car” or “the big dog”.

The second effect that the expectations about the roles that words play have on our interpretation is that we tend to notice when these roles are ambiguous or somehow violated, because such violations create a discordance. This is why ambiguity in language is a rich source of jokes and puns, intentional or not. Here’s one expressed in a news headline:

Figure 2. An example of ambiguity in action

What is the first reading that you get? You wouldn’t be the only one if you read this as “Police help a dog to bite a victim”, but common sense suggests that the intended meaning is probably “Police help a victim with a dog bite (or, that was bitten by a dog)”. News headlines are rich in ambiguities like that because they use a specific format aimed at packing the maximum amount of information into the shortest possible expression. This sometimes comes at a price, as both “Police help a dog to bite a victim” and “Police help a victim with a dog bite (that was bitten by a dog)” are clearer but longer than the “Police help dog bite victim” that a newspaper might prefer to use. This ambiguity isn’t necessarily intentional, but it’s easy to see how this can be used to make endless jokes.

What exactly causes confusion here? It’s clear that “police” denotes a participant in an event, and “help” denotes the action. “Dog” and “victim” also seem to unambiguously be participants of an action, but things are less clear with “bite”. “Bite” can denote an action as in “Dog bites a victim” or a result of an action as in “He has mosquito bites.” In both cases, what we read is a word “bites”, and it doesn’t give away any further clues as to what it means, but in “Dog bites a victim” it answers the question “What does the dog do?” and in “He has mosquito bites” it answers the question “What does he have?”. Now, when you see a headline like “Police help dog bite victim”, your brain doesn’t know straight away which path to follow:

- Path 1: “bite” is an action answering the question “what does one do?” → “Police help dog [bite: DO_WHAT] victim”

- Path 2: “bite” is the result of an action answering the question “what happened?” → “Police help dog [bite: WHAT] victim”.

Apart from the humorous effect of such confusions, ambiguity may also slow the information processing down and lead to misinterpretations. Try solving Exercise 1 to see how the same expression may lead to completely different readings.

Solution: These are quite well-known examples that are widely used in NLP courses to exemplify ambiguity in language and its effect on interpretation.

In (1), “I” certainly denotes a person, and “can” certainly denotes an action, but “can” as an action has two potential meanings: it can denote ability “I can” = “I am able to” or the action of putting something in cans.[3] “Fish” can denote an animal as in “freshwater fish” (or a product as in “fish and chips”), or it can denote an action as in “learn to fish”. In combination with the two meanings of “can” these can produce two completely different readings of the same sentence: either “I can fish” means “I am able / I know how to fish” or “I put fish in cans”.

In (2), “I” is a person and “saw” is an action, but “duck” may mean an animal or an action of ducking. In the first case, the sentence means that I saw a duck that belongs to her, and in the second it means that I witnessed how she ducked – once again, completely different meanings of what seems to be the same sentence!

Figure 3. Ambiguity might result in some serious misunderstanding[4]
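The way ambiguous words multiply the number of possible readings in “I can fish” and “I saw her duck” can be sketched in a few lines of Python. The mini-lexicon below is hypothetical and hand-written for these two sentences; the role labels (PERSON, ABILITY, ACTION, THING) are informal names chosen for this example, not a standard tag set.

```python
from itertools import product

# Hypothetical mini-lexicon: each word maps to the roles it can play.
LEXICON = {
    "i": ["PERSON"],
    "can": ["ABILITY", "ACTION"],  # "am able to" vs. "put into cans"
    "fish": ["THING", "ACTION"],   # the animal vs. the activity
    "saw": ["ACTION"],
    "her": ["PERSON"],
    "duck": ["THING", "ACTION"],   # the bird vs. the act of ducking
}


def candidate_readings(sentence: str) -> list:
    """List every combination of roles the lexicon allows for the sentence."""
    words = sentence.lower().split()
    return list(product(*(LEXICON[w] for w in words)))


for sentence in ("I can fish", "I saw her duck"):
    readings = candidate_readings(sentence)
    print(sentence, "->", len(readings), "candidate role sequences")
    for reading in readings:
        print("   ", " ".join(reading))
```

Not every candidate sequence is a sensible reading: for “I can fish”, only ABILITY followed by ACTION (“I am able to fish”) and ACTION followed by THING (“I put fish in cans”) correspond to the interpretations discussed above. Ruling out the rest requires looking at the words in context.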

So far, we’ve been using the terminology quite loosely: we’ve been defining words as denoting actions or people or qualities, but in fact there are more standard terms for that. The types of words defined by the different functions that words might fulfill are called parts-of-speech, and we distinguish between a number of such types:

- words that denote objects, animals, people, places and concepts are called nouns;

- words that denote states, actions and occurrences are called verbs;

- words that denote qualities of objects, animals, people, places and concepts are called adjectives;

- those for qualities of actions, states and occurrences are called adverbs.

Table 1 provides some examples and descriptions of different parts-of-speech:

Table 1. Examples of words of different parts-of-speech

| Part-of-speech | What it denotes | Examples |
|---|---|---|
| Nouns | Objects, people, animals, places, concepts, time references | car, Einstein, dog, Paris, calculation, Friday |
| Verbs | Actions, states, occurrences | meet, stay, become, happen |
| Adjectives | Qualities of objects, people, animals, places, concepts | red car, clever man, big dog, beautiful city, fast calculation |
| Adverbs | Qualities of actions, states, occurrences | meet recently, stay longer, just become, happen suddenly |
| Articles | Don’t have a precise meaning of their own, but show whether the noun they are attached to is identifiable in context (it is clear what / who the noun is referring to) or not (the noun hasn’t been mentioned before) | I saw a man = This man is mentioned for the first time (“a” is an indefinite article); The man is clever = This suggests that it should be clear from the context which particular man we are talking about (“the” is a definite article) |
| Prepositions | Don’t have a precise meaning of their own, but serve as a link between two words or groups of words: for example, linking a verb denoting action with nouns denoting participants, or a noun to its attributes | meet on Friday – links action to time; meet with administration – links action to participants; meet at house – links action to location; a man with a hat – links a noun to its attribute |

This isn’t a comprehensive account of all parts-of-speech in English, but with this brief guide you should be able to recognize the roles of the most frequent words in text and this suite of word types should provide you with the necessary basis for implementation of your own information extractor.

Why do we care about the identification of word types in the context of information extraction and other tasks? You’ve seen above that correct and straightforward identification of types helps information processing, whereas ambiguities lead to misunderstandings. This is precisely what happens with automated language processing: machines, like humans, can extract information from text better and more efficiently if they can recognize the roles played by different words, whereas misidentification of these roles may lead to mistakes of various kinds. For instance, having identified that “Jabberwock” is a noun and some sort of a creature, a machine might be able to answer a question like “Who is Jabberwock?” (e.g., “Someone / Something with jaws that bite and claws that catch”), but if a machine processed “I can fish” as “I know how to fish”, it wouldn’t be able to answer the question “What did you put in cans?”

Luckily, there are NLP algorithms that can detect word types in text, and such algorithms are called part-of-speech taggers (or POS taggers). Figure 4 presents a mental model to help you put POS taggers into the context of other NLP techniques:

Figure 4. Mental Model that visualizes the flow of information between different NLP components

As POS tagging is an essential part of many tasks in language processing, most NLP toolkits contain a tagger, and often you need to include it in your processing pipeline to get at the essence of the message. Let’s now look into how this works in practice.

Part-of-speech tagging with spaCy

I want to introduce spaCy[5] – a useful NLP library that you can put under your belt. A number of reasons to look into spaCy in this book are:

- NLTK and spaCy have their complementary strengths, and it’s good to know how to use both;

- spaCy is an actively supported and fast-developing library that keeps up-to-date with the advances in NLP algorithms and models;

- A large community of people works with this library, and you can find code examples of various applications implemented with or for spaCy on their webpage,[6] as well as answers to your questions on their GitHub;

- spaCy is actively used in industry; and

- It includes a powerful set of tools particularly applicable to large-scale information extraction.

Unlike NLTK, which treats different components of language analysis as separate steps, spaCy builds an analysis pipeline from the beginning and applies this pipeline to text. Under the hood, the pipeline already includes a number of useful NLP tools that are run on input text without you needing to call them separately. These tools include, among others, a tokenizer and a POS tagger. You apply the whole set of tools with a single line of code that calls the spaCy processing pipeline, and then your program stores the result in a convenient format until you need it. This also ensures that the information is passed between the tools without you having to take care of the input-output formats. Figure 5 visualizes spaCy’s NLP pipeline, which we’re going to discuss in more detail next:

Figure 5. spaCy’s processing pipeline with some intermediate results[7]

Machines, unlike humans, don’t treat input text as a sequence of sentences or words – for machines, text is a sequence of symbols. The first step that we applied before was splitting text into words – this step is performed by a tool called a tokenizer. A tokenizer takes raw text as input and returns a list of words as output. For example, if you pass it a sequence of symbols like “Harry, who Sally met”, it returns a list of tokens [“Harry”, “,”, “who”, …]. Next, we apply a stemmer that converts each word to some general form: this tool takes a word as input and returns its stem as output. For instance, a stemmer returns a generic, base form “meet” for both “meeting” and “meets”. A stemmer can be run on a list of words, where it treats each word separately and returns a list of corresponding stems. Other tools require an ordered sequence of words from the original text: for example, we’ve seen that it’s easier to figure out that Jabberwock is a noun if we know that it follows a word like “the”; order matters for POS tagging. This means that each of the three tools – tokenizer, stemmer, POS tagger – requires a different type of input and produces a different type of output, and in order to apply them in sequence we need to know how to represent information for each of them. This is what spaCy’s processing pipeline does for you: it runs a sequence of tools and connects their outputs together.
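To make the different input and output types concrete, here is a toy sketch of the three kinds of tools in plain Python. None of this is spaCy or NLTK code: `tokenize`, `stem`, and `guess_tags` are deliberately naive stand-ins written for this example, and the tag names they return are made up.

```python
import re


def tokenize(text: str) -> list:
    """Raw string in, list of word and punctuation tokens out."""
    return re.findall(r"\w+|[^\w\s]", text)


def stem(word: str) -> str:
    """Single word in, stem out. Naive: strips only the suffixes 'ing' and 's'."""
    for suffix in ("ing", "s"):
        if word.endswith(suffix) and len(word) > len(suffix) + 2:
            return word[: -len(suffix)]
    return word


def guess_tags(tokens: list) -> list:
    """Ordered token list in, one tag per token out.

    Order-sensitive toy rule: a word right after 'the' is guessed to be a noun.
    """
    tags = []
    for i, tok in enumerate(tokens):
        if tok.lower() == "the":
            tags.append("ARTICLE")
        elif i > 0 and tokens[i - 1].lower() == "the":
            tags.append("NOUN?")
        else:
            tags.append("UNKNOWN")
    return tags


print(tokenize("Harry, who Sally met"))
print([stem(w) for w in ("meeting", "meets")])
print(guess_tags(tokenize("Beware the Jabberwock")))
```

The stemmer can process words in any order, while the tagging rule only works because the token list preserves the original word order. A real pipeline has to pass data between such tools in the shape each one expects.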

For information retrieval we opted for stemming, which converts different forms of a word to a common core. We said that it’s useful because it helps connect words together on a larger scale, but it also produces non-words: you won’t always be able to find the stems of words (e.g. something like “retriev”, the common stem of retrieval and retrieve) in a normal dictionary. An alternative to this tool is a lemmatizer, which aims at converting different forms of a word to a base form that can be found in a dictionary: for instance, it returns a lemma like retrieval, which is a dictionary word, rather than the stem “retriev”. Such a base form is called a lemma. In its processing pipeline, spaCy uses a lemmatizer.
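The contrast between a stem and a lemma can be illustrated with two toy functions. Both are assumptions made for this sketch: `toy_stem` strips a hand-picked list of suffixes, and `toy_lemma` looks words up in a small hypothetical table. Real stemmers and lemmatizers, including spaCy’s, are considerably more sophisticated.

```python
# Hypothetical lookup table standing in for a real lemmatizer's dictionary.
LEMMA_TABLE = {
    "retrieves": "retrieve",
    "retrieved": "retrieve",
    "retrieving": "retrieve",
    "retrievals": "retrieval",
}


def toy_stem(word: str) -> str:
    """Crude suffix stripping; the result need not be a dictionary word."""
    for suffix in ("als", "ing", "al", "es", "ed", "e", "s"):
        if word.endswith(suffix):
            return word[: -len(suffix)]
    return word


def toy_lemma(word: str) -> str:
    """Dictionary lookup; words not in the table are returned unchanged."""
    return LEMMA_TABLE.get(word, word)


for word in ("retrieve", "retrieves", "retrieval", "retrievals"):
    print(f"{word:11} stem={toy_stem(word):8} lemma={toy_lemma(word)}")
```

All four words collapse to the non-word “retriev” under the toy stemmer, while the toy lemmatizer keeps the verb “retrieve” and the noun “retrieval” apart as two dictionary entries.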

The starting point for spaCy’s processing pipeline is, as before, raw text: for example, “On Friday board members meet with senior managers to discuss future development of the company.” The processing pipeline applies tokenization to this text to extract individual words: [“On”, “Friday”, “board”, …]. The words are then passed to a POS tagger that assigns parts-of-speech (or POS) tags like [“ADP”, “PROPN”,[8] “NOUN”, …], to a lemmatizer that produces output like [“On”, “Friday”, …, “member”, …, “manager”, …], and to a bunch of other tools.

You may notice that the processing tools in Figure 5 are contained within a pipeline called nlp. As you’ll shortly see in the code, calling the nlp pipeline makes the program first invoke all the pre-trained tools and then apply them to the input text. The output of all the steps gets stored in a “container” called Doc – it contains a sequence of tokens extracted from the input text and processed with the tools. Here’s where spaCy’s implementation comes close to object-oriented programming: the tokens are represented as Token objects with a specific set of attributes. If you’ve done object-oriented programming before, you’ll hopefully see the connection soon. If not, here’s a brief explanation: imagine you want to describe a set of cars. All cars share the same list of attributes: with respect to cars, you may want to talk about the car model, size, color, year of production, body style (e.g. saloon, convertible), type of engine, etc. At the same time, such attributes as wingspan or wing area won’t be applicable to cars – they rather relate to planes. You can define a class of objects called Car and require that each object car of this class should have the same information fields. For instance, calling car.model should return the name of the model of the car, for example car.model=“Volkswagen Beetle”, and car.production_year should return the year the car was made, for example car.production_year=“2003”, etc.
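The car analogy translates almost directly into Python. The sketch below uses a dataclass; the `model` and `production_year` fields and their values come from the example above, while `body_style` and `color` and their values are filled in here purely for illustration.

```python
from dataclasses import dataclass


@dataclass
class Car:
    """Every Car object carries the same set of information fields."""
    model: str
    production_year: str
    body_style: str  # illustrative field
    color: str       # illustrative field


car = Car(model="Volkswagen Beetle", production_year="2003",
          body_style="convertible", color="red")

print(car.model)
print(car.production_year)
# Attributes that belong to planes are simply not defined for cars
print(hasattr(car, "wingspan"))
```

Each object of the class answers the same questions (`car.model`, `car.production_year`), and asking for an attribute the class does not define, such as a wingspan, has no answer.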

This is the approach taken by spaCy to represent tokens in text: after tokenization, each token (word) is packed up in an object Token that has a number of attributes. For instance:

- token.text contains the original word itself;

- token.lemma_ stores the lemma (base form) of the word;[9]

- token.pos_ stores its part-of-speech tag;

- token.i stores the index position of the word in text;

- token.lower_ stores the lowercase form of the word;

and so on.

The nlp pipeline aims to fill in the information fields like lemma, pos and others with the values specific for each particular token. Because different tools within the pipeline provide different bits of information, the values for the attributes are added on the go. Figure 6 visualizes this process for the words “on” and “members” in the text “On Friday board members meet with senior managers to discuss future development of the company”:

Figure 6. Processing of words “On” and “members” within the nlp pipeline
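The idea of attributes being filled in step by step can be sketched without spaCy. In the following toy example, `ToyToken`, the step functions, and the two lookup tables are all hypothetical stand-ins; spaCy’s own Doc and Token classes are implemented differently, and its tagger and lemmatizer are trained components rather than dictionaries.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ToyToken:
    text: str
    i: int
    lower_: Optional[str] = None
    lemma_: Optional[str] = None
    pos_: Optional[str] = None


# Hand-written tables standing in for trained components.
TOY_POS = {"on": "ADP", "members": "NOUN"}
TOY_LEMMAS = {"members": "member"}


def tokenizer_step(text: str) -> list:
    """Create tokens; only text, position and lowercase form are known so far."""
    return [ToyToken(text=w, i=idx, lower_=w.lower())
            for idx, w in enumerate(text.split())]


def tagger_step(tokens: list) -> list:
    """Fill in pos_ where the toy table has an entry."""
    for t in tokens:
        t.pos_ = TOY_POS.get(t.lower_)
    return tokens


def lemmatizer_step(tokens: list) -> list:
    """Fill in lemma_, falling back to the lowercase form."""
    for t in tokens:
        t.lemma_ = TOY_LEMMAS.get(t.lower_, t.lower_)
    return tokens


tokens = tokenizer_step("On Friday board members meet")
for step in (tagger_step, lemmatizer_step):
    tokens = step(tokens)
    print(step.__name__,
          [(t.text, t.pos_, t.lemma_) for t in tokens if t.text in ("On", "members")])
```

After the tagging step the lemma fields are still empty; they are only populated once the lemmatizer step has run, which mirrors how each tool contributes its own piece of information to the same token objects.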

Now, let’s see how this is implemented in Python code. Listing 1 provides you with an example.

Listing 1. Code exemplifying how to run spaCy’s processing pipeline

    import spacy  #A

    nlp = spacy.load("en_core_web_sm")  #B
    doc = nlp("On Friday board members meet with senior managers " +
              "to discuss future development of the company.")  #C

    rows = []
    rows.append(["Word", "Position", "Lowercase", "Lemma", "POS", "Alphanumeric", "Stopword"])  #D
    for token in doc:
        rows.append([token.text, str(token.i), token.lower_, token.lemma_,
                     token.pos_, str(token.is_alpha), str(token.is_stop)])  #E

    columns = zip(*rows)  #F
    column_widths = [max(len(item) for item in col) for col in columns]  #G
    for row in rows:
        print(''.join(' {:{width}} '.format(row[i], width=column_widths[i])
                      for i in range(0, len(row))))  #H

#A Start by importing spaCy library

#B spacy.load command initializes the nlp pipeline. The input to the command is a particular type of data (model) that the language tools were trained on. All models use the same naming conventions (en_core_web_), which means that it’s a set of tools trained on English Web data; the last bit denotes the size of data the model was trained on, where sm stands for ‘small’[10]

#C Provide the nlp pipeline with input text

#D Let’s print the output in a tabular format. For clarity, add a header to the printout

#E Add the attributes of each token in the processed text to the output for printing

#F Python’s zip function[11] allows you to reformat input from row-wise representation to column-wise

#G As each column contains strings of variable lengths, calculate the maximum length of strings in each column to allow enough space in the printout

#H Use format functionality to adjust the width of each column in each row as you print out the results[12]
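The transposition and padding steps (#F to #H) can be tried in isolation on a small hand-made table, without loading spaCy. The following is a minimal sketch: the sample rows and the helper name format_table are illustrative and not part of Listing 1.

```python
def format_table(rows):
    """Return the rows as text lines, each column padded to its widest cell."""
    # zip(*rows) regroups the cells column by column
    columns = zip(*rows)
    # the widest cell in a column determines that column's width
    widths = [max(len(cell) for cell in column) for column in columns]
    lines = []
    for row in rows:
        lines.append("".join(" {:{width}} ".format(cell, width=width)
                             for cell, width in zip(row, widths)))
    return lines


# hand-made sample rows (illustrative values only)
sample_rows = [
    ["Word", "POS"],
    ["members", "NOUN"],
    ["On", "ADP"],
]

for line in format_table(sample_rows):
    print(line)
```

Every printed line has the same length, because each cell is padded to the width of the longest string in its column.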

Here’s the output that this code returns for some selected words from the input text:

Word     Position  Lowercase  Lemma    POS    Alphanumeric  Stopword
On       0         on         on       ADP    True          False
Friday   1         friday     friday   PROPN  True          False
...
members  3         members    member   NOUN   True          False
...
to       8         to         to       PART   True          True
discuss  9         discuss    discuss  VERB   True          False
...
.        15        .          .        PUNCT  False         False

This output tells you:

- The first item in each line is the original word from text – it’s returned by token.text;

- The second is the position in text, which starts as all other indexing in Python from zero – this is identified by token.i;

- The third item is the lowercase version of the original word. You may notice that it changes the forms of “On” and “Friday”. This is returned by token.lower_;

- The fourth item is the lemma of the word, which returns “member” for “members” and “manager” for “managers”. Lemma is identified by token.lemma_;

- The fifth item is the part-of-speech tag. Most of the tags should be familiar to you by now. The new tags in this piece of text are PART, which stands for “particle” and is assigned to particle “to” in “to discuss”, and PUNCT for punctuation marks. POS tags are returned by token.pos_;

- The sixth item is a True/False value returned by token.is_alpha, which checks whether a word contains alphabetic characters only. This attribute is False for punctuation marks and some other sequences that don’t consist of letters only, and it’s useful for identifying and filtering out punctuation marks and other non-words;

- Finally, the last, seventh item in the output is a True/False value returned by token.is_stop, which checks whether a word is in a stopwords list – a list of highly frequent words in language that you might want to filter out in many NLP applications, as they aren’t likely to be informative. For example, articles, prepositions and particles typically have their is_stop values set to True, as the particle “to” shows in the output above.
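The last two attributes are typically used as filters. Here is a minimal sketch that uses values copied by hand from the output above in place of live Token objects; the tuple layout and the name content_words are assumptions made for this illustration.

```python
# (word, is_alpha, is_stop) values copied from the output shown above
rows = [
    ("On", True, False),
    ("Friday", True, False),
    ("members", True, False),
    ("to", True, True),
    ("discuss", True, False),
    (".", False, False),
]

# keep only words that consist of letters and are not in the stopword list
content_words = [word for word, is_alpha, is_stop in rows
                 if is_alpha and not is_stop]

print(content_words)
```

With a processed doc, the same filter would test token.is_alpha and token.is_stop on each token directly.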

Solution: Despite the fact that a text like “Jabberwocky” contains non-English words, or possibly non-words at all, this Python code is able to tell that “Jabberwock” and “Bandersnatch” are some creatures that have specific names (it assigns a tag PROPN, proper noun to both of them), and that “frumious” is an adjective. How does it do that? Here’s a glimpse under the hood of a typical POS tagging algorithm (see Figure 7):

Figure 7. A glimpse under the hood of a typical POS tagging algorithm

We’ve said earlier that when we try to figure out what type of a word something like “Jabberwock” is, we rely on the context. In particular, the previous words are important to take into account: if we see “the”, the chances that the next word is a noun or an adjective are high, but the chance that we see a verb next is minimal – verbs shouldn’t follow articles in grammatically correct English. Technically, we rely on two types of intuitions: we use our expectations about what types of words typically follow other types of words, and we also rely on our knowledge that words like “fish” can be nouns or verbs but hardly anything else.

We perform the task of word type identification in sequence. For instance, in the example from Figure 7, when the sentence begins, we already have certain expectations about what type of a word we may see first – quite often, it’s a noun or a pronoun (like “I”). Once we’ve established that it’s likely for a pronoun to start a sentence, we also rely on our intuitions about how likely it is that such a pronoun will be exactly “I”. Then we move on and expect to see a particular range of word types after a pronoun – almost certainly it should be a normal verb or a modal verb (as verbs denoting obligations like “should” and “must” or abilities like “can” and “may” are technically called). More rarely, it may be a noun (like “I, Jabberwock”), an adjective (“I, frumious Bandersnatch”), or some other part of speech.

Once we’ve decided that it’s a verb, we assess how likely it is that this verb is “can”; if we’ve decided that it’s a modal verb, we assess how likely it is that this modal verb is “can”, etc. We proceed like that until we reach the end of the sentence, and this is where we assess which interpretation we find more likely. This is one possible step-wise explanation of how our brain processes information, on which part-of-speech tagging is based.

The POS tagging algorithm takes into account two types of expectations: an expectation that a certain type of a word (like modal verb) may follow a certain other type of a word (like pronoun), and an expectation that if it’s a modal verb such a verb may be “can”. These “expectations” are calculated using the data: for example, to find out how likely it is that a modal verb follows a pronoun, we calculate the proportion of times we see a modal verb following a pronoun in data among all the cases where we saw a pronoun. For instance, if we saw ten pronouns like “I” and “we” in data before, and five times out of those ten these pronouns were followed by a modal verb like “can” or “may” (as in “I can” and “we may”), what would the likelihood, or probability, of seeing a modal verb following a pronoun be? Figure 8 gives a hint on how probability can be estimated:

Figure 8. If modal verb follows pronoun 5 out of 10 times, the probability is 5/10

We can calculate it as:

Probability(modal verb follows pronoun) = 5 / 10

or in general case:

Probability(modal verb follows pronoun) = How_often(pronoun is followed by a modal verb) / How_often(pronoun is followed by any type of word, modal verb or not)
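The same ratio can be computed by counting pairs of neighbouring tags. This is a minimal sketch on made-up data chosen to match the example (ten pronouns, five of them followed by a modal verb); the tag names and the function name transition_probability are assumptions for illustration.

```python
from collections import Counter

# Made-up (previous tag, next tag) pairs that match the example in the text:
# ten pronouns, five of which are followed by a modal verb
tag_pairs = ([("PRONOUN", "MODAL")] * 5
             + [("PRONOUN", "VERB")] * 4
             + [("PRONOUN", "NOUN")] * 1)

pair_counts = Counter(tag_pairs)
previous_counts = Counter(previous for previous, _ in tag_pairs)


def transition_probability(previous, following):
    """Share of occurrences of `previous` that are followed by `following`."""
    return pair_counts[(previous, following)] / previous_counts[previous]


print(transition_probability("PRONOUN", "MODAL"))  # 0.5
```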

To estimate how likely (or how probable) it is that the pronoun is “I”, we need to take the number of times we’ve seen a pronoun “I” and divide it by the number of times we’ve seen any pronouns in the data. If among those ten pronouns that we’ve seen in the data before seven were “I” and three were “we”, the probability of seeing a pronoun “I” is estimated as Figure 9 illustrates:

Figure 9. If 7 times out of 10 the pronoun is “I”, the probability of a word being “I” given that we know the POS of such a word is pronoun is 7/10

Probability(pronoun being “I”) = 7 / 10

or in general case:

Probability(pronoun being “I”) = How_often(we’ve seen a pronoun “I”) / How_often(we’ve seen any pronoun, “I” or other)
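The word-level estimate follows the same counting pattern. Here is a minimal sketch on made-up observations matching the example (seven “I” and three “we”); the function name word_probability is an assumption for illustration.

```python
from collections import Counter

# Made-up (word, tag) observations that match the example in the text:
# ten pronouns, seven of them "I" and three of them "we"
tagged_words = [("I", "PRONOUN")] * 7 + [("we", "PRONOUN")] * 3

word_tag_counts = Counter(tagged_words)
tag_counts = Counter(tag for _, tag in tagged_words)


def word_probability(word, tag):
    """Share of words carrying `tag` that are exactly `word`."""
    return word_tag_counts[(word, tag)] / tag_counts[tag]


print(word_probability("I", "PRONOUN"))  # 0.7
```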

In the end, the algorithm goes through the sequence of tags and words one by one, and takes all the probabilities into account. Because the probability of each decision, each tag and each word is a separate component in the process, these individual probabilities are multiplied. To find out how probable it is that “I can fish” means “I am able / know how to fish”, the algorithm calculates:

Probability(“I can fish” is “pronoun modal_verb verb”) = probability(a pronoun starts a sentence) * probability(this pronoun is “I”) * probability(a pronoun is followed by a modal verb) * probability(this modal verb is “can”) * … * probability(a verb finishes a sentence)

This probability gets compared with the probabilities of all the alternative interpretations, like “I can fish” = “I put fish in cans”:

Probability(“I can fish” is “pronoun verb noun”) = probability(a pronoun starts a sentence) * probability(this pronoun is “I”) * probability(a pronoun is followed by a verb) * probability(this verb is “can”) * … * probability(a noun finishes a sentence)

In the end, the algorithm compares the calculated probabilities for the possible interpretations and chooses the one which is more likely, i.e. has higher probability.
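The comparison of interpretations can be sketched as follows. All probability values in this example are invented for illustration and are not estimated from any data; the tag names, the <START> and <END> markers, and the function name sequence_probability are likewise assumptions.

```python
# All probabilities below are invented for illustration only
transition = {
    ("<START>", "PRONOUN"): 0.4,
    ("PRONOUN", "MODAL"): 0.5,
    ("PRONOUN", "VERB"): 0.4,
    ("MODAL", "VERB"): 0.8,
    ("VERB", "NOUN"): 0.3,
    ("VERB", "<END>"): 0.2,
    ("NOUN", "<END>"): 0.4,
}
emission = {
    ("I", "PRONOUN"): 0.7,
    ("can", "MODAL"): 0.3,
    ("can", "VERB"): 0.01,
    ("fish", "VERB"): 0.02,
    ("fish", "NOUN"): 0.05,
}


def sequence_probability(words, tags):
    """Multiply tag-to-tag and word-given-tag probabilities along the sentence."""
    probability = 1.0
    previous = "<START>"
    for word, tag in zip(words, tags):
        probability *= transition[(previous, tag)] * emission[(word, tag)]
        previous = tag
    # account for the last tag finishing the sentence
    return probability * transition[(previous, "<END>")]


words = ["I", "can", "fish"]
candidates = [
    ["PRONOUN", "MODAL", "VERB"],  # "I am able to fish"
    ["PRONOUN", "VERB", "NOUN"],   # "I put fish in cans"
]

# choose the interpretation with the highest probability
best = max(candidates, key=lambda tags: sequence_probability(words, tags))
print(best)
```

Scoring every candidate separately, as done here, only works for short sentences, because the number of possible tag sequences grows quickly with sentence length.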

That’s all for this article. We’re going to move onto syntactic parsing in part 2.

If you want to learn more about the book, you can preview its contents on our browser-based liveBook platform here.

[2] A blend of “fuming” and “furious”, according to Lewis Carroll himself.

[3] Formally, when a word has several meanings this is called lexical ambiguity.

[5] To get more information on the library, check https://spacy.io. Installation instructions walk you through the installation process depending on the operating system you’re using: https://spacy.io/usage#quickstart.

[8] In the scheme used by spaCy, prepositions are referred to as “adposition” and use a tag ADP. Words like “Friday” or “Obama” are tagged with PROPN, which stands for “proper nouns” reserved for names of known individuals, places, time references, organizations, events and such. For more information on the tags, see documentation here: https://spacy.io/api/annotation.

[9] You may notice that some attributes are called on using an underscore, like token.lemma_. This is applicable when spaCy has two versions for the same attribute: for example, token.lemma returns an integer version of the lemma, which represents a unique identifier of the lemma in the vocabulary of all lemmas existing in English, and token.lemma_ returns a Unicode (plain text) version of the same thing – see the description of the attributes on https://spacy.io/api/token.

[10] Check out the different language models available for use with spaCy: https://spacy.io/models/en. The small model (en_core_web_sm) is suitable for most purposes and is more efficient to load and use, but larger models like en_core_web_md (medium) and en_core_web_lg (large) are more powerful, and some NLP tasks require the use of such larger models.

Tomh4530

-

#1

Hi, when I type in the middle of a word it types over the following text instead of just adding or deleting. This has only just started happening. How can I stop it? Thanks


Suzanne S. Barnhill

-

#2

Press the Insert key again.

—

Suzanne S. Barnhill

Microsoft MVP (Word)

Words into Type

Fairhope, Alabama USA

http://word.mvps.org


Gordon Bentley-Mix

-

#3

Or click Tools | Options… and clear the ‘Overtype mode’ checkbox on the Edit tab, or double-click OVR in the status bar at the bottom of the document window, although just pressing the Insert key as Suzanne has instructed will have the same effect.

Note, however, that the status bar does provide a useful visual cue: OVR will be enabled (the letters will be dark) in overtype mode.
