I had a long list (57 pages!) of Latin species names, sorted into alphabetical order. I’d separated the words so that there was only one word on each line. My next task was to go through and remove all the duplicates (i.e. a word immediately followed by the same word) so I could add the final list to my custom dictionary for species in Microsoft Word. I started doing it manually—it’s easy enough to find duplicates when the words are familiar, but for Latin words, my brain just wasn’t coping well and I was missing subtle differences like a single or double ‘i’ at the end of a word. There had to be a better way…

And there is! Good old Dr Google came to the rescue, and with a bit of fiddling to suit my circumstances (one word on each line), I got a wildcard find and replace routine to find the duplicates.

NOTE: DO NOT do a ‘replace all’ with this, in case Word makes unwanted changes. In my case it didn’t treat the second word as a whole word for matching purposes (e.g. it thought banksi, banksia, and banksii were duplicates). Even though I had to skip some of these, it was still worth it to automate much of the process. Another caveat—if you have several lines of the same word, each pair will be found, but you’ll have to run the find several times to get them all. Much better to move your cursor into Word and delete the excess multiple duplicates when you find them. You may still have to do a couple of passes over the document, but the heavy lifting will have been done for you.

Here’s what I did to get it to work:

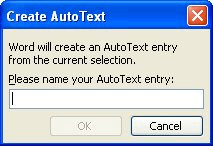

- Press Ctrl+H to open the Find and Replace window.

- Click More, then select the Use Wildcards checkbox.

- In the Find What field, type (<*>)^013\1 (there are no spaces in this string).

- In the Replace With field, type \1 (there are no spaces in this string either).

- Click Find Next.

- When a pair of matching whole words is found, click Replace. NOTE: If the second word is only a partial match for the first word, click Find Next.

- Repeat the previous two steps (Find Next, then Replace or skip) until you’re satisfied you’ve found them all.

How this works:

- (<*>) is the first element (later represented by \1) of the find. The angle brackets specify the start and end of a word, and the ‘word’ is anything (represented by the *). In other words, you’re looking for a whole ‘word’ of any length and made up of any characters (including numbers).

- ^013 is the paragraph marker at the end of the line. In my situation, each word was on its own line with a paragraph mark at the end of the line. If you don’t have this situation, leave this out and replace it with a space (two repeated words in the same line are separated by a space). NOTE: Normally you can find a paragraph mark in a Find with ^p, but not with a wildcard Find—you have to use ^013.

- \1 is the first element. In the Find, it means the duplicate of whatever was found by (<*>); in the Replace, it means replace the duplicated word with the first word found.
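If you'd rather not click through every pair, the same clean-up can be sketched outside Word. This is a minimal Python sketch, not part of the Word routine above. It assumes the list has been saved as plain text with one word per line, and the function name `drop_adjacent_duplicates` is made up for illustration. Because it compares whole lines, near-misses such as banksi, banksia, and banksii are kept apart, and a run of three or more identical lines collapses in a single pass.

```python
from itertools import groupby

def drop_adjacent_duplicates(lines):
    """Collapse each run of identical consecutive lines into a single line."""
    # Whole lines are compared after trimming whitespace, so partial matches
    # such as "banksi" and "banksia" are never treated as duplicates.
    stripped = [line.strip() for line in lines]
    return [word for word, _run in groupby(stripped)]

words = ["banksi", "banksia", "banksia", "banksia", "banksii", "banksii"]
print(drop_adjacent_duplicates(words))
```

Only immediately repeated lines are removed, which matches the sorted-list situation described above.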

The word that comes closest to describing this sort of behavior (repetition of the same word in a sentence) is: Epizeuxis

According to Wikipedia:

In rhetoric, an epizeuxis is the repetition of a word or phrase in immediate succession, for vehemence or emphasis.

Some examples provided (among others):

«Never give in — never, never, never, never, in nothing great or small, large or petty, never give in except to convictions of honour and good sense. Never yield to force; never yield to the apparently overwhelming might of the enemy.» —Winston Churchill

«O horror, horror, horror.» —Macbeth

«Words, words, words.» —Hamlet

«Rain, rain, rain, rain, rain.» —Guy Gavriel Kay

«Developers, developers, developers, developers, developers, developers. Developers, developers, developers, developers, developers, developers, developers, developers!» —Steve Ballmer

«Never, never, never, never, never!» —King Lear

«But you never know now do you now do you now do you.» —David Foster Wallace, Brief Interviews with Hideous Men

However, do note this in the definition of Epizeuxis:

for vehemence or emphasis

It appears this sort of repetition is usually done to emphasize some meaning. Accordingly, I’m not sure if a sentence like:

That that exists exists in that that that that exists exists in.

would classify, without any further context.

But then again, upon reading the answers in your cited EL&U questions, it looks like these sentences do make sense. In that case, I would say «Epizeuxis» is indeed the word you’re looking for.

Also take a look at Repetition as defined on Wikipedia. There seem to be some other types of repetitions which you might be interested in:

Conduplicatio is the repetition of a word in various places throughout a paragraph.

«And the world said, ‘Disarm, disclose, or face serious consequences’—and therefore, we worked with the world, we worked to make sure that Saddam Hussein heard the message of the world.» (George W. Bush)

Mesodiplosis is the repetition of a word or phrase at the middle of every clause.

«We are troubled on every side, yet not distressed; we are perplexed, but not in despair; persecuted, but not forsaken; cast down, but not destroyed…» (Second Epistle to the Corinthians)

Diacope is a rhetorical term meaning uninterrupted repetition of a word, or repetition with only one or two words between each repeated phrase.

The article is an abstract of my book [1] based on previously presented publications [2], [3], [4], [5].

1. Coordinating texts

1.1. Rules

1.1.1. Texts and algebra

Numbers and letters are two kinds of ideal objects-signs for studying relations of real objects. Understanding (interpretation) of texts is personified — it depends on a person’s genotype and phenotype. Also, the meaning of words can change over time. All words of contextual language are homonyms. A word has as many properties (relationships between words) as there are contexts in the entire corpus of natural language.

People understand numbers in roughly the same way, regardless of where and when they are used. The language of numbers is universal and eternal.

Algebra (a symbolic generalization of arithmetic) and text (a sequence of symbols) are so far two very different tools of cognition.

1.1.2. Coordination

The application of mathematical methods in any subject area is preceded by coordinatization, which begins with digitization. Coordinatization is the replacement (modeling) of the object of research by its digital copy. This is followed by the correct replacement of the numbers of the model with symbols and the determination of the properties and regularities of combinations of these symbols.

If correct coordinatization is applied to the text, the text can be reduced to algebra.

For successful algebraization, it is extremely important to describe the coordinatization rules and the properties of the coordinating objects in such a way as to reduce the variability of their choices.

1.1.3. Purpose of Algebraization

The goal of text algebraization is to compute, from solutions of systems of algebraic equations, the meaning of a text, variants of its structuring, its vocabularies, summaries, and versions of the text for a given target function.

Texts are understood as sequences of signs (letters, words, notes, etc.). There are five types of sign systems: natural, figurative, linguistic, records, and codes.

1.1.4. Carrier and context dependence

The carrier of a character sequence is all its characters without repetition. A carrier may be called an alphabet or a dictionary of a sign sequence.

Words are sequences of letters or elementary phonemes. The meaning of a letter is only in its form or sound. There is no contextual dependence of the letters of the alphabet.

Context dependence reaches its limit in the homonyms of natural language. For example, the Russian word «kosa» has four different meanings. Homonyms are believed to make up a fifth of the vocabulary of the English language.

1.1.5. Repetition

Text is a sign sequence with at least one repetition. Vocabulary is a sign sequence without repetition. The presence of repetitions allows one to reduce the number of sign-words used (to reduce the vocabulary). But then the repeated signs can differ in meaning. The meaning of a word depends on the words around it. Problems of understanding words and text arise because some part of the meaning is determined or guessed subjectively and ambiguously. Different readers and listeners understand different meanings for the same word.

When words are repeated in a text, there are preferable, in the opinion of the author of the text, connections-relationships of the repeated word with other words. These relationships are recorded as the new meaning of the repeated word.

1.1.6. Semantic markup

If a particular text does not have explicit repetitions, it does not mean that they are not hidden in semantic (contextual) form. The meaning can be repeated, not only the character-sign (word) denoting it. The context here is a fragment of text between repeating words. If the contexts of different words are similar, then different words are similar in the sense of their common contexts, the word-signs of those contexts. Contexts are similar if they have at least one common sign-word.

The context is not only for two words repeated in a row. The meaning or context of a word can be pointed to by referring to any suitable fragment of text, not necessarily located in the immediate vicinity of the repeated word. In this case, the text loses its linear order, like making words out of letters. If there are no such fragments, the meaning of the word is borrowed by reference to a suitable context from another corpus text (library).

Common words in contexts, in turn, also have their contexts — the notion of a refined word context arises.

1.1.7. Coordination rules

In a finite character sequence, each character has a unique number that determines the place of the character in the sequence. No two characters can be in the same place. But the text requires another index to indicate the repetition of a sign in the text. This second index creates equivalence relations on a finite set of words. It is reasonable to match the sign with some two-index object (for example, some matrix). The first index of the matrix indicates the number of the sign in the sequence. The second index indicates the number of this sign when it was first encountered in the sequence. The carrier (dictionary) of a character sequence (text) is the part of its characters whose two indices are equal. Gaps in the numbering of words in the dictionary can be eliminated by continuous numbering.

Text coordinating rules:

The first index of the coordinating text (matrix) is the ordinal number of the word in the text, the second index is the ordinal number of the same word first encountered in the text. If the word has not been previously encountered, the second index is equal to the first index.

The dictionary is the original text with deleted repetitions. It is possible to order the dictionary with exclusion of gaps in word numbering.

For two or more texts that are not a single text, the word order in each text is independent. In two texts, the initial words are equally first. Just as in two books, the beginning pages begin with one.

The common dictionary of a set of texts is the dictionary of all texts after their concatenation. It is possible to order the dictionary with deletion of gaps in the numbering of words.
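The first two rules can be illustrated by a minimal Python sketch. The function names `coordinate`, `dictionary`, and `renumber` are hypothetical and chosen only for this illustration; words are compared as exact strings, which is an assumption about what counts as "the same word".

```python
def coordinate(words):
    """Return (i, j) pairs: i is the position of the word in the text,
    j is the position at which the same word was first encountered."""
    first_seen = {}
    pairs = []
    for i, word in enumerate(words, start=1):
        j = first_seen.setdefault(word, i)  # j == i for a new word
        pairs.append((i, j))
    return pairs

def dictionary(words):
    """The text with repetitions deleted: words whose two indices are equal."""
    return [w for w, (i, j) in zip(words, coordinate(words)) if i == j]

def renumber(words):
    """Second indices replaced by continuous dictionary numbers (no gaps)."""
    order = {w: k for k, w in enumerate(dictionary(words), start=1)}
    return [(i, order[w]) for i, w in enumerate(words, start=1)]

text = "a set is an object that is a set of objects".split()
print(coordinate(text))
print(dictionary(text))
print(renumber(text))
```

For independent texts, the same functions would be applied to each text separately, so that numbering starts from one in each; for a common dictionary they would be applied to the concatenation.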

1.2. Examples

1.2.1. Similarity and sameness

According to G. Frege any object having relations with other objects and their combinations has as many properties (meanings) as these relations of similarity and sameness (tolerance and equivalence). The part of the values taken into account is called the sense by which the object is represented in a given situation. The naming of an object by a number, a symbol, a word, a picture, a sound, a gesture to describe it briefly is called the sign of the object (this is one of the meanings).

Each possible subset of an object’s meanings (an element of the power set) corresponds to one sign. This is the main problem of meaning recognition, but it is also what makes it possible to make do with minimal sets of signs. It is impossible to assign a unique sign to each subset of meanings. The objects of information exchange are minimal sets of signs (notes, alphabet, language dictionary). The meaning of signs is usually not calculated; so far it is determined intuitively by the contexts (neighborhoods) of the sign.

1.2.2. Example on the abacus

A solution to the problem of sign ambiguity is the semantic markup of text. Semantic markup can be explained by an example of extreme unambiguity. On a Russian abacus the text is a sequence of identical signs (beads). The vocabulary of such a text consists of a single word. This is even more extreme than in Morse code, where the dictionary consists of two words. Without semantic markup, it is impossible to use such texts. Therefore, the vocabulary changes and the signs are divided into groups: ones, tens, hundreds, etc. These group names (numbers) become unique word numbers. The vocabulary consists of the numbers from zero to nine. Each bead, too, can be represented by an as yet undefined matrix on such a Cartesian abacus.

The transformation of identical objects into similar ones has taken place. The measure of similarity is the coordinate values of the words. In addition to positional repetitions, repetitions of dictionary digits occur when arithmetic operations are performed. Equivalence relations are established: if after an arithmetic operation the number 9+1 is obtained, then 0 appears in that position and 1 is added to the next digit. On the abacus, all the beads are shifted to the original (zero) position, and one is added in the next digit (wire). Some matrix transformation is performed on the matrix abacus.

If one sets a measure of the sameness of signs, then the ratio of tolerance (similarity) can be transformed again into the ratio of equivalence (sameness) by this measure. For example, by rounding numbers. One can recognize the difference between tolerance and equivalence by the violation of transitivity. For tolerance relations it can be violated. For example, let an element A be similar to B in one sense. If the sense of B does not coincide with the sense of element C, then A can be similar to C only in the part of intersection of their senses (part of properties). The transitivity of relations is restored (closed), but only for this common part of sense. After the sameness is achieved, A will be equivalent to C. For example, the above transformation (closure) on some coordinates provides arithmetic operations on a matrix abacus.
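The difference between tolerance and equivalence can be seen on numbers. The following Python sketch is only an illustration under stated assumptions: similarity is taken to mean "differs by at most 0.5" and sameness is taken to mean "rounds to the same integer"; both choices and the function names are hypothetical, not the author's definitions.

```python
def similar(a, b, tol=0.5):
    """Tolerance relation: reflexive and symmetric, not necessarily transitive."""
    return abs(a - b) <= tol

def same(a, b):
    """Equivalence relation obtained from a measure of sameness (rounding)."""
    return round(a) == round(b)

a, b, c = 1.0, 1.4, 1.8

# a is similar to b and b is similar to c, yet a is not similar to c.
print(similar(a, b), similar(b, c), similar(a, c))

# After rounding, the relation is transitive: a and b fall into one class, c into another.
print(same(a, b), same(b, c), same(a, c))
```

Rounding replaces a chain of overlapping similarities by disjoint classes, which is the transition from tolerance to equivalence described above.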

1.2.3. Chess example

For chess, the vocabulary of their matrix text of the game is the numbers of one of the pieces of each color and the move separator (from 1 to 11). The word of the chess text is also a kind of matrix. The first coordinate i is unique and is the cell number on the chessboard (from 1 to 64). The second coordinate j is a number from the dictionary. The chess matrix text at any moment of the game is the sum of matrices, each showing a piece on the corresponding place on the chessboard. The repetitions in the text appear both because of duplication of pieces and because of constant transitions during the game from similarity to sameness and vice versa for all pieces except the king. The game consists in implementing the most effective such transitions and the actual classification of the pieces. Pawns that are identical in the beginning then become similar only by the move rule, and sometimes a pawn becomes identical with a queen.

The tool of matrix text analysis is a transitivity control to check the difference between similarity and sameness. Lack of transitivity control is an algebraic explication of misunderstanding for language texts, loss in chess, or errors in numerical calculations.

Relational transitivity is a condition for transforming a set of objects into a mathematical category. The semantic markup of a text can become the computation of its categories by means of transitive closure. The category objects are the contexts of matrix words, the morphisms are the transformation matrices of these contexts.

1.2.4. Example of a language text

Example text:

A set is an object that is a set of objects. A polynomial is a set of monomial objects which are a set of objects-somnomials.

Text in normal form is coordinated according to the above rules. The vocabulary of a text is the text itself, but without repetitions. Text coordination is its indexing and matching of indexed matrix words.

1.2.5. Example of a mathematical text

As an example of a mathematical text, the formulas for the volume of the cone, cylinder, and torus are selected. The formulas are treated as texts. This means that the signs included in the texts are not mathematical objects and there are no algebraic operations for them.

For the semiotic analysis of formulas as texts, the repetition of signs is important. The repetitions determine the patterns.

Formulas are presented according to the rules of coordinating in index form in a single numbering, as if they were not three texts, but one. The coordinated text is written through matrices in tabular form.

1.2.6. Example of a Morse-Vail-Gerke code

This example is chosen because of the extreme brevity of the dictionary. In Morse code, the character sequences of the 26 Latin letters can be considered as texts consisting of words: dots and dashes. The order of words (dots and dashes) is extremely important in each individual text (alphabet letter). In linguistic texts the order is also important («mom’s dad» is not «dad’s mom»), but there are exceptions («languid evening» and «evening languid»).

The dictionary and carrier of Morse code is a sequence of two signs («dot» and «dash») that coincides with the letter A. The order of the signs in the dictionary or the carrier is no longer important. Therefore, the carrier may also be the letter N. One letter is the carrier (dictionary), the remaining 25 letters are code texts. Defining the 26 letters of Morse code as texts of words is unusual for linguistic texts. In linguistic texts, words are composed of letters. But for codes, as relations of signs, the composition of letters (cipher) from words is natural.

Each code word (of dots and dashes), as some object, has two coordinates. The first coordinate is the number of the word in this letter (from one to four). The second coordinate is the number in the dictionary (1 or 2). The dictionary is the same for all 26 texts.

All the 26 texts (Latin letters) are independent of each other: the presence of dots or dashes in one text (letter) and their order have no effect on the composition of another text (another letter). Therefore the numbering of the first sign in all letters of Morse code begins with one, according to the third coordinating rule.

Each dot or dash of which a letter consists is assigned, taking their order into account and according to the coordinating rule, a coordinating object: a matrix, the choice of which must satisfy certain requirements.
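The two coordinates of each code word can be sketched in Python as follows. Only a small subset of letters is listed, the dictionary order (dot = 1, dash = 2) is taken from the letter A as described above, and the names `MORSE`, `DICTIONARY`, and `coordinate_letter` are hypothetical.

```python
# A few Morse letters treated as independent texts of dots and dashes.
MORSE = {"A": ".-", "N": "-.", "S": "...", "O": "---", "B": "-..."}

# Common dictionary for all letters, ordered as in the letter A.
DICTIONARY = {".": 1, "-": 2}

def coordinate_letter(code):
    """(i, j) pairs: i is the place of the sign in the letter (numbering
    restarts from one in every letter), j is its number in the dictionary."""
    return [(i, DICTIONARY[sign]) for i, sign in enumerate(code, start=1)]

for letter, code in MORSE.items():
    print(letter, coordinate_letter(code))
```

Each pair (i, j) is the index pair that a coordinating object, such as a matrix, would carry for that dot or dash.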

1.3. Requirements for coordinate objects

Coordination for texts consists in matching the words of the text with some «number-like objects» that satisfy three general requirements:

- The objects must be individual like numbers;

- The objects must be abstract (the volume of the concept is maximal, the content of the concept is minimal);

- Algebraic operations (addition, multiplication, comparison) can be performed over the objects.

The text-appropriate objects in algebra are two-index matrix units:

- They are individual: all matrix units are different as matrices.

- An arbitrary n-order matrix can be represented through a decomposition by matrix units. Matrix units are the basis of a complete matrix algebra and a matrix ring. This means that the maximum concept volume requirement is satisfied. Matrix units contain only one unit, so the content is minimal.

- All algebraic operations necessary for the coordinate object can be performed with matrices.

2. Matrix units

In this section, the algebraic systems needed to transform coordinated texts into matrix texts are constructed and investigated on the basis of matrix units (hyperbinary numbers). The matrix representation of texts allows one to recognize and create the meaning of texts by means of mathematical methods.

2.1. Definition

Matrix units are matrices with a single unit (one) at the intersection of the row given by the first index and the column given by the second index; all other elements are zero. In the following, only square matrix units are considered.

The number of all square matrix units (full set) is equal to the total number of elements of a square matrix.

Hereinafter matrix units are considered as a matrix generalization of integers 0 and 1. The main difference between such hyperbinary numbers and integers is the noncommutativity of their product.

2.2. Product

2.2.1. Defining relation

The product of matrix units is different from zero (zero matrix) only if the internal indices of the product matrices are equal. Then the product is a matrix unit with the first index of the first factor and the second index of the second factor.
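In index form the relation reads: E(i, j) · E(k, l) = E(i, l) if j = k, and the zero matrix otherwise, where E(i, j) is a notation assumed here for the matrix unit with first index i and second index j. A minimal Python sketch with plain nested lists (the helper names `unit`, `matmul`, and `zero` are hypothetical) checks the relation and the noncommutativity of the product:

```python
def unit(i, j, n):
    """n x n matrix unit E(i, j): a single 1 in row i, column j (1-based)."""
    return [[int((r, c) == (i, j)) for c in range(1, n + 1)]
            for r in range(1, n + 1)]

def zero(n):
    """n x n zero matrix."""
    return [[0] * n for _ in range(n)]

def matmul(a, b):
    """Ordinary product of two square matrices of the same size."""
    n = len(a)
    return [[sum(a[r][k] * b[k][c] for k in range(n)) for c in range(n)]
            for r in range(n)]

n = 3
# Internal indices equal (2 and 2): the product is E(1, 3).
print(matmul(unit(1, 2, n), unit(2, 3, n)) == unit(1, 3, n))
# Same factors in the opposite order: internal indices 3 and 1 differ.
print(matmul(unit(2, 3, n), unit(1, 2, n)) == zero(n))
```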

Some matrix units can be called simple matrix units by analogy with prime numbers, and others can be called composite matrix units because they are products of simple ones.

A complete set of matrix units can be obtained from simple matrix units, which are called the formants of the complete set.

Matrix units are treated precisely as a matrix generalization of integers. Left and right divisors of noncommutative hyperbinary numbers can be different, and there are divisors of zero: each factor is different from zero, but their product is the zero matrix. This property essentially distinguishes matrices from integers, which have no divisors of zero. Nevertheless, many concepts of modular arithmetic (congruences of integers modulo a number) remain valid for hyperbinary numbers, but only because of their matrix form. The elements of such matrices (zero and one) have no such properties.

Simple matrix units (the analog of prime numbers) in the full set are recognized by the ratio of indices.

2.2.2. Indexes

The indices of simple matrix units are of two kinds: the units in such binary matrices are immediately above or below the main diagonal of the square matrix. The elements with the same indices (diagonal matrix units) are located on the main diagonal, and they are not simple.

In composite matrix units, the difference of the first and second indices is either zero (diagonal matrix units), or the difference of the indices is greater than one in absolute value. In composite matrix units their units are outside the two diagonals where the units of simple matrix units are located.

The indices of composite matrix units are all pairs of indices of elements of a square matrix of dimension n except for pairs of indices of simple matrix units.

The ratio of indices determines the value of the product of two identical matrix units. Unlike integers, the square of any hyperbinary number is either zero (nilpotent numbers) or the same number (idempotent hyperbinary numbers).

2.2.3. Idempotent and nilpotent

Idempotency is a property of an operation applied to an object: repeated application produces the same result as the first application. Examples are adding zero to a number, multiplying by one, or raising to the power of one.

Diagonal matrix units are idempotent: the square of a diagonal matrix unit is the unit itself, because its internal indices are equal. The product of diagonal matrix units with different indices is zero. Such algebraic objects are known as orthogonal projectors.

A nilpotent element is an element of an algebraic structure some power of which equals zero. All matrix units (hyperbinary numbers) except the idempotent ones are nilpotent: their square is zero. A pair of identical nilpotent matrix units (hyperbinary numbers) are divisors of zero.
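These properties follow directly from the product rule and can be checked on index pairs. In the sketch below a matrix unit is represented only by its pair of indices and `None` stands for the zero matrix; this representation and the name `product` are assumptions made for brevity.

```python
def product(a, b):
    """Product of two matrix units given as index pairs (i, j) and (k, l).
    Returns (i, l) if the internal indices are equal, else None (zero)."""
    (i, j), (k, l) = a, b
    return (i, l) if j == k else None

n = 3
units = [(i, j) for i in range(1, n + 1) for j in range(1, n + 1)]

# Units whose square is the unit itself, and units whose square is zero.
idempotent = [u for u in units if product(u, u) == u]
nilpotent = [u for u in units if product(u, u) is None]

print(idempotent)   # the diagonal units
print(nilpotent)    # all remaining units
```

Every unit falls into exactly one of the two lists, and the product of two different diagonal units is zero, as expected of orthogonal projectors.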

The ratio (distribution) of prime and composite hyperbinary numbers in the full set is determined by their dimension n (the corresponding dimension of matrix units).

2.2.4. Distribution

The distribution of simple and composite matrix units is as follows. The matrix units whose unit lies immediately above or below the main diagonal are simple; for dimension n there are 2(n − 1) of them out of the n² matrix units of the complete set. The remaining n² − 2(n − 1) matrix units are composite (products of simple ones).
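The counts can be checked by enumerating index pairs. The sketch assumes, as stated in the section on indexes, that a matrix unit is simple exactly when its indices differ by one; the function name `classify` is hypothetical.

```python
def classify(n):
    """Split the index pairs of all n x n matrix units into simple
    (|i - j| == 1) and composite (all others, including diagonal units)."""
    pairs = [(i, j) for i in range(1, n + 1) for j in range(1, n + 1)]
    simple = [p for p in pairs if abs(p[0] - p[1]) == 1]
    composite = [p for p in pairs if abs(p[0] - p[1]) != 1]
    return simple, composite

for n in (2, 3, 4, 10):
    simple, composite = classify(n)
    print(n, len(simple), len(composite))
```

The share of simple units, 2(n − 1) out of n², decreases as the dimension grows.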

The peculiarity of the system of hyperbinary numbers is that they have left and right multipliers (phantom), multiplication by which does not lead to their change.

2.2.5. Phantomness

Phantom units are multipliers of matrix units that leave the matrix units unchanged under multiplication. Phantomness is a generalization of unipotency. Matrix units have a countable set (an infinite set that can be numbered by the natural numbers) of phantom left and right factors. The phantom multipliers are analogous to the unit for integers.

In contrast to integers, with their trivial multiplication by one, the phantom multipliers of matrix units are countably many. If the occurrence of a particular phantom multiplier has signs of a pattern, then matrix units can be compared by their phantom multipliers. Phantom multipliers are some free index (coordinate) parameters of hyperbinary numbers.

The motivation for using phantom multipliers is that the relations between matrix units can be extended by corresponding equivalence and similarity relations between their phantom multipliers. Different matrix units with the same or similar phantom multipliers can be compared modulo this phantom multiplier. Conversely, identical matrix units may differ by their phantom multipliers.

If a one-to-one correspondence between a matrix unit and its phantom multiplier is defined, then this multiplier can be the module of comparison of matrix units.

The presence of such phantom multipliers will further be used to compare words by their contexts and to compose systems of matrix text equations. Contexts for words will be their corresponding phantom multipliers.

The uniqueness of the decomposition of integers into prime factors (factoriality) is generalized to matrix units, taking into account their noncommutativity and the need to restrict the ambiguity of decompositions.

2.2.6. Hyperbinary factoriality

Matrix units have a countable set of decompositions into factors. This means that the factorization of matrix units is not unique. This property will prove useful for comparisons of text fragments at any distance from each other.

Among the decompositions of matrix units it is possible to define some canonical decompositions generalizing the decompositions of integers into prime factors. Such decompositions are algebraically richer than decompositions of integers due to noncommutativity of hyperbinary numbers.

There is the following classification of canonical decompositions.

2.2.7. Classification

There are three classes of canonical decompositions of matrix units. A decomposition is called canonical if its factors are simple matrix units. The defining property of canonical decompositions is that the factors into which a composite hyperbinary number is decomposed are as close as possible in coordinates.

In the general case there are three classes of canonical decompositions of arbitrary matrix units, depending on the ratio of indices:

2.2.7.1. The first index is greater than the second.

This is the first class of decomposition into simple matrix units: in each factor the first index is greater than the second by exactly one. The difference cannot be smaller.

2.2.7.2. The first index is less than the second.

This is the second class of decomposition into simple matrix units: in each factor the first index is less than the second by exactly one.

2.2.7.3. The indices are equal

This is the third class of decomposition into simple matrix units — the first index in the first factor is less than the second one strictly by one, and in the second factor the first index is greater than the second one by one and equal to the second index of the first factor.

The decomposition is unique and therefore it is canonical.
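The three classes can be sketched on index pairs as follows. Matrix units are again represented by their index pairs, with `None` for the zero matrix. The function name `canonical_factors` is hypothetical, and the treatment of the last diagonal unit (i = j = n, where the factor with indices (n, n + 1) does not exist) is an assumption of this sketch, not something stated in the text.

```python
from functools import reduce

def product(a, b):
    """Product of matrix units as index pairs; None stands for zero."""
    if a is None or b is None:
        return None
    (i, j), (k, l) = a, b
    return (i, l) if j == k else None

def canonical_factors(i, j, n):
    """Simple factors whose ordered product is the matrix unit (i, j)."""
    if i > j:   # class 1: every factor has the form (k, k - 1)
        return [(k, k - 1) for k in range(i, j, -1)]
    if i < j:   # class 2: every factor has the form (k, k + 1)
        return [(k, k + 1) for k in range(i, j)]
    if i < n:   # class 3: diagonal unit as (i, i + 1)(i + 1, i)
        return [(i, i + 1), (i + 1, i)]
    # Assumption: for i == j == n the mirrored pair of factors is used.
    return [(i, i - 1), (i - 1, i)]

n = 4
print(canonical_factors(4, 1, n))
print(canonical_factors(1, 3, n))
print(canonical_factors(2, 2, n))
print(reduce(product, canonical_factors(4, 1, n)))
```

For a simple matrix unit the list consists of the unit itself, by analogy with a prime number.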

All simple matrix units are the complete system of formants of the complete set of matrix units.

2.2.8. Formants

A comparatively small number of simple matrix units makes it possible to write any text using only these formants, which generate all matrix units of the complete set. The formants are the alphabet of matrix texts, and monomials, as products of formants, are the words.

A complete system of formants consists of simple matrix units. Compound matrix units (monomials) are called basis units of a complete set in systems of hypercomplex numbers, e.g. alternions.

These formants, like basis elements, are linearly independent: no set of coefficients, not all equal to zero, makes a partial sum or the sum of all formants and basis elements equal to the zero matrix. This follows from the fact that the units of different matrix units are in different places in the matrix, so a zero matrix sum cannot be achieved by using numbers (integers or real numbers) as coefficients of the summands.

The divisibility relation of integers (one number being a multiple of another) is inherited by hyperbinary numbers as similarity.

2.2.9. Similarity on the left

Matrix units having the same second indexes are multiples (similar) from the left.

2.2.10. Similarity on the right

Matrix units having the same first indexes are multiples from the right.

The transitivity of multiplicity for triples of integers is inherited for triples of hyperbinary numbers.

2.2.11. Left similarity transitivity

The similarity relations of matrix units on the left are transitive: if the first matrix unit is similar to the second matrix unit on the left and the second matrix unit is similar to the third matrix unit on the left, then the first matrix unit is similar to the third matrix unit on the left.

2.2.12. Transitivity of similarity to the right

If the first matrix unit is similar to the second matrix unit on the right and the second matrix unit is similar to the third matrix unit on the right, then the first matrix unit is similar to the third matrix unit on the right. The similarity relations on the right are transitive.
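Both similarity relations and their transitivity can be checked on index pairs. The sketch below also shows why similar units can be called multiples: for two units with the same second index there is a matrix unit that turns one into the other by multiplication from the left, and symmetrically for the right. All function names are hypothetical, and `None` stands for the zero matrix.

```python
def product(a, b):
    """Product of matrix units as index pairs; None stands for zero."""
    (i, j), (k, l) = a, b
    return (i, l) if j == k else None

def similar_left(a, b):
    """Similarity on the left: the second indices are equal."""
    return a[1] == b[1]

def similar_right(a, b):
    """Similarity on the right: the first indices are equal."""
    return a[0] == b[0]

def left_multiplier(a, b):
    """Unit x with x * a == b, for a and b similar on the left."""
    return (b[0], a[0])

def right_multiplier(a, b):
    """Unit x with a * x == b, for a and b similar on the right."""
    return (a[1], b[1])

n = 3
units = [(i, j) for i in range(1, n + 1) for j in range(1, n + 1)]

def is_transitive(rel):
    """Check transitivity of a relation on all triples of units."""
    return all(rel(a, c) for a in units for b in units for c in units
               if rel(a, b) and rel(b, c))

print(is_transitive(similar_left), is_transitive(similar_right))
print(product(left_multiplier((1, 3), (2, 3)), (1, 3)))
print(product((3, 1), right_multiplier((3, 1), (3, 2))))
```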

The transitivity property of similarity of matrix units will be used further in the construction of the category of context.

2.3. Addition

2.3.1. Problem

The product of two matrix units is a matrix unit or the zero matrix, so matrix units (together with the zero matrix) are closed with respect to the multiplication operation. Therefore the algebraic system of matrix units by multiplication is a monoid of matrix units or a semigroup with a unit matrix (a common neutral element).

The result of adding matrix units will no longer be a matrix unit in the general case.

Matrix units are matrix monomials. They are either simple matrix units or their products. A matrix polynomial is the sum of matrix monomials.

Any n×n binary matrix (basis element) can be represented as a polynomial with respect to simple matrix units (formants). Matrices over the ring of integers and the field of real numbers are not considered here. But the binary matrices (consisting of 0 and 1) needed to create matrix texts should also have appropriate constraints.

Text binary matrices should not have more than one unit in a row in accordance with the rules of text coordinating. The first coordinate of words is unique — it is the first index of matrix units and the number of the row where the unit is located. When adding identical matrix units, for example, the result ceases to be a binary matrix at all. Therefore, the addition of textual matrix units must be defined in a special way.

There are different rules for adding binary numbers.

2.3.2. Known Binary Additions

When multiplying square binary matrices of the same dimensionality, the result will always be binary matrices. The analogue of the binary multiplication operation in the language of logical functions is logical multiplication. The truth table for this operation coincides with the usual 0 and 1 multiplication.

Logical addition can be considered as a candidate for the rule of addition of text binary matrices. In this case the result of their element-by-element addition and multiplication will again be a binary matrix.

In turn, logical addition (disjunction) is of two kinds: strict disjunction (addition modulo 2) and non-strict (weak) disjunction.

Their truth tables differ only in the result of adding two ones. In set theory, addition modulo 2 corresponds to the symmetric difference of two sets. Strict disjunction has the meaning «either this or that». Non-strict disjunction has the meaning «either this, or that, or both at once». In terms of set theory, a non-strict disjunction is analogous to a union of sets.

Using logical addition instead of ordinary addition when adding matrix units solves the problem of elements greater than one in absolute value, but as a result of matrix addition ones may appear in several places in a row of the sum matrix. According to the text coordinate rule, this would mean that two or more words occupy the same place in the text (defined by the first coordinate).

An addition rule devoid of these disadvantages is required.
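The difficulty described in this subsection can be checked on small matrices. The following Python sketch is only an illustration: the helper names (`matrix_unit`, `combine`, `is_binary`, `is_text_matrix`) and the 0-based indices are assumptions introduced here, not notation from the text.

```python
# Illustrative sketch; all helper names are hypothetical.

def matrix_unit(n, i, j):
    """n x n binary matrix with a single 1 in row i, column j (0-based)."""
    return [[1 if (r, c) == (i, j) else 0 for c in range(n)] for r in range(n)]

def combine(a, b, op):
    """Element-by-element combination of two matrices of equal size."""
    return [[op(x, y) for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def is_binary(m):
    """True if every element is 0 or 1."""
    return all(x in (0, 1) for row in m for x in row)

def is_text_matrix(m):
    """Binary with at most one 1 per row (the text coordinate rule)."""
    return is_binary(m) and all(sum(row) <= 1 for row in m)

e01 = matrix_unit(3, 0, 1)
e02 = matrix_unit(3, 0, 2)

# Ordinary addition of identical matrix units produces an element equal to 2.
ordinary = combine(e01, e01, lambda x, y: x + y)

# Strict disjunction (addition modulo 2) and non-strict disjunction stay
# binary, but put two ones into row 0 when the units share a first index.
strict = combine(e01, e02, lambda x, y: x ^ y)
weak = combine(e01, e02, lambda x, y: x | y)

print(is_binary(ordinary))     # False
print(is_text_matrix(strict))  # False
print(is_text_matrix(weak))    # False
```

In this sketch both logical additions keep the result binary, yet neither preserves the "one unit per row" constraint, which is the motivation for a separate addition rule.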

2.3.3. Addition by matching (concordance)

The three types of addition operations differ in the rule by which one summand is matched with the other. Once such a matching rule (concordance) is accepted, the addition rule is uniquely defined.

The three types of addition can be combined into one concordance addition (concordant addition). On the basis of concordant addition it is possible to define matrix unit concordance addition. Such multiplication must be closed by such addition.

The sum by concordance of two text binary matrices is a text binary matrix. The result of concordance addition is the usual addition of matrices.

An algebraic system of matrix units by addition is a monoid of matrix units or a semigroup with zero matrix (a common neutral element).

Before investigating the division operation of hyperbinary numbers, it is necessary to determine the order relation for them. Division of hyperbinary numbers, as with integers, is generally possible, but only as a division with a remainder, which by definition must be less than the divisor. The property of hyperbinary numbers to be smaller or larger needs to be determined.

2.4. Order

On the set of hyperbinary numbers it is possible to define an order relation through the relation of membership, as for integers. Any integer is a sum of units. One number is greater than another if the units of the latter form a part of the units of the former and belong to it. The same approach is used for hyperbinary numbers with phantom multipliers, which is an example of their usefulness.

2.4.1. Magnitude

The value on the left of a hyperbinary number is the trace of its left phantom multiplier matrix. The value on the right of a hyperbinary number is the trace of its right phantom multiplier matrix. A hyperbinary number is then larger (smaller) on the left or on the right if the corresponding trace of its phantom multiplier matrix is larger (smaller).

The scalar measure of the value µ is a necessary feature of ordering of hyperbinary numbers, but not sufficient.

2.4.2. Noetherian chains

The value µ does not distinguish the distribution of units on the diagonal of the matrix. As already mentioned, the hyperbinary number is similar on the left and right of its phantom multipliers. This means that the phantom multipliers generate sets of hyperbinary numbers, which differ from each other by corresponding similarity coefficients. These similarity coefficients are themselves hyperbinary numbers.

There arise sets generated on the left or on the right by the corresponding phantom hyperbinary numbers. The sets of all such subsets (power sets) are arranged in chains according to their generating diagonal elements: one element, the sum of two, the sum of three, and so on. These chains are increasing in the number of generating elements. For textual hyperbinary numbers the chains terminate, because their dictionaries (phantom multipliers) are finite.

Chains with such properties are Noetherian chains.

There is a simple method for constructing such Noetherian chains for hyperbinary numbers:

- The product of the generating phantom multipliers of neighboring links is different from the zero matrix.

- The value µ of the link must be smaller than µ of the next link.

The increasing chains of Noetherian power sets generated by the left and right phantom multipliers are a sufficient criterion for the ordering of hyperbinary numbers.

2.5. Subtraction and division

2.5.1. Subtraction

The operation of subtracting text hyperbinary numbers is not defined in general, just as it is not for positive integers: the result can be a negative number. But subtraction of identical positive integers is always defined.

The same is true for hyperbinary numbers. The difference matrix of different matrix units will generally contain negative numbers and then it is not a binary matrix. But the result of subtracting the same matrix units is a zero matrix. It is a binary matrix.

2.5.2. Division

The division operation for hyperbinary numbers, as for integers, is not defined in general. For integers, division is replaced by an operation expressed through multiplication and addition, which is called division with a remainder.

Square matrix units are singular (have no inverse matrices). For hyperbinary numbers, there is a matrix counterpart to division with a remainder of integers.

2.6. Congruences modulo phantom multipliers

Congruences of integers are generalized to the case of hyperbinary numbers.

A diagonal hyperbinary number is called the modulus of a congruence of two hyperbinary numbers if the difference of the right (left) diagonal phantom multipliers of those two hyperbinary numbers is divisible without remainder by that modulus.

The set of all hyperbinary numbers congruent modulo a given modulus is called a residue class modulo that modulus. Thus, a congruence is equivalent to the equality of residue classes.

Any hyperbinary number of the class is called a residue modulo the modulus. The residue equal to the remainder from division of any member of the chosen class by the modulus is called the least nonnegative residue, and the residue smallest in µ is called the absolutely least residue.

Since congruence modulo a given modulus is an equivalence relation, as on the set of integers, the residue classes are equivalence classes; their number is equal to the measure µ of their phantom multipliers.

2.7. Transformations and equations

2.7.1. Transformations

There is always a quadruple of such hyperbinary numbers that the product of three numbers equals the fourth hyperbinary number. Such equality is a general formula for transforming any number from this quadruple.

2.7.2. Equations

The formula for converting hyperbinary numbers is an equality on the four numbers. It can be thought of as an equation where each set of hyperbinary numbers and their components can be an unknown matrix number and the remaining set can be a given number. A system of linear or nonlinear equations can be made on different words (matrix units), their place in the text, phantom multipliers and summands, if on the set of equations the equivalence relations of hyperbinary numbers on their phantom multipliers and summands are given or defined. In this case, different words are considered equal if their phantom elements (phantoms) are similar, and vice versa, if words are similar (repeated in different places in the text), then their phantoms may differ. The same applies not only to words, but also to text fragments. For example, a phantom (hyperbinary number) may be common to all fragments and words of a text, such as a text abstract as its invariant in transformations and the meaning of the text. In turn, this common phantom can be an unknown for the corresponding system of equations.

In the case of such equivalence classes of textual hyperbinary numbers, the equations become entangled on equivalent hyperbinary numbers.

Unlike polynomial systems of equations over a field of numbers, in systems of hyperbinary equations the given and unknown variables are noncommutative. A method for solving such systems of equations will be proposed in the following.

3. Matrix Texts

3.1. The hyperbinary coordinate formula

In accordance with the rules of coordinatization texts are transformed into matrix texts by the following formula. Each text word with some ordinal number corresponds to a square matrix unit with two indices, where the second index is a function of the first index, and this first index is the word number. The function takes two values: if the word has not occurred earlier in the text, the second index is assigned a value equal to the word number in the text; if the word has occurred earlier in the text under some number, the second index is equal to this number.
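The coordinate formula above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: the function name `coordinates`, the 1-based numbering and the sample sentence are chosen here for the example and do not come from the text.

```python
# Illustrative sketch; the function name and sample sentence are hypothetical.

def coordinates(words):
    """Return (first index, second index) pairs, numbered from 1.

    The first index is the word number in the text. The second index is
    the number under which the same word first occurred, which equals the
    word number itself if the word has not occurred earlier.
    """
    first_seen = {}
    pairs = []
    for number, word in enumerate(words, start=1):
        first_seen.setdefault(word, number)
        pairs.append((number, first_seen[word]))
    return pairs

words = "a set of objects is a set".split()
pairs = coordinates(words)
print(pairs)
# [(1, 1), (2, 2), (3, 3), (4, 4), (5, 5), (6, 1), (7, 2)]
```

Each pair corresponds to one matrix unit of the matrix text; repeated words are visible as pairs whose two indices differ.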

A matrix text is a special matrix polynomial — a special case of a hyperbinary number. The sum of monomials in this polynomial should be treated as a concordance summation. After matching, this hyperbinary number acquires the properties corresponding to the rules of coordinating texts.

A matrix text consists of the sum of matrix words (monomials), in part of which a second index (repetition of words in the text) may be repeated. This sum is a matrix polynomial and a hyperbinary number (after coordination), since each of its summands is a matrix monomial, which may be a simple matrix unit or their product (composite matrix unit). In this case the monomials must correspond to the coordinate formula.

The right matrix dictionary is a matrix text with excluded monomials with different indices and consists of matrix units with the same indices. The left matrix dictionary is the full sum of matrix units with the same indices, each of which is a word number in the text. The dimensionality of the square matrix units of the text and dictionaries is equal to the maximum dimensionality of any of them.

There is not more than one unit in each row of the text matrices and dictionaries, the remaining elements are equal to zero. This property is a consequence of the uniqueness of the first index in all matrix words of the text in accordance with the coordinate rules and formula. In which place of the matrix row one is located is determined by the corresponding second index.

In the matrices of the dictionaries, the ones corresponding to the words of the text lie on the main diagonal. The remaining elements of the diagonal and of the matrix are zero. In the matrix of the left dictionary there is a one at every place of the main diagonal, so this matrix is the unit (identity) matrix. The right dictionary is not a unit matrix.

The separator (space) between words in ordinary texts turns into the matrix addition operation. Conversely, the original text is reconstructed from the matrix text by its indices, «forgetting» the algebraic properties (by turning the addition operation back into a separating space).

The order of elements in matrix texts is no longer essential, unlike regular texts. The summands can be swapped, but without changing their indices. Consequently, algebraic transformations can be performed with matrix texts (e.g., similarity reduction) as in the case of numerical polynomials.

3.2. Properties

The product on the left of the full left dictionary by the whole matrix text is, of course, the whole matrix text, because the full left dictionary is a unit matrix (phantom multiplier). The part of the left dictionary is a projector. Multiplication of this projector by the text on the left will extract from the whole matrix text the part of the text corresponding to this projector.

The product on the right of the full right dictionary by the whole matrix text is the whole matrix text, since this dictionary contains all the second indices present in the text, and there are no other second indices in the text monomials that would not be present in the right dictionary. The right dictionary is the right phantom multiplier for the matrix text as a hyperbinary number. At that, the right dictionary, unlike the left dictionary, is not a unit matrix.

The squares of the matrix text and of the dictionaries are the text itself and the dictionaries themselves (they are idempotent). The product of the right dictionary by the left one is the right dictionary. The product of the left dictionary by the right one is the right dictionary.
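The properties listed in this subsection can be verified on a small example. The sketch below is an assumption-laden illustration: the helpers `text_matrix`, `matmul` and `diagonal`, the 0-based indices and the sample sentence are hypothetical, and the right dictionary is taken to be the diagonal part of the text matrix, as described in 3.1.

```python
# Illustrative sketch; helper names and the sample sentence are hypothetical.

def text_matrix(words):
    """Square binary matrix with a 1 at (i, first occurrence of word i)."""
    n = len(words)
    first_seen = {}
    t = [[0] * n for _ in range(n)]
    for i, word in enumerate(words):
        t[i][first_seen.setdefault(word, i)] = 1
    return t

def matmul(a, b):
    """Ordinary product of two square matrices of the same size."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def diagonal(n, positions):
    """Diagonal binary matrix with ones at the given diagonal positions."""
    return [[1 if i == j and i in positions else 0 for j in range(n)]
            for i in range(n)]

words = "a set of objects is a set".split()
n = len(words)
T = text_matrix(words)
D_left = diagonal(n, set(range(n)))                         # unit matrix
D_right = diagonal(n, {i for i in range(n) if T[i][i] == 1})

assert matmul(D_left, T) == T               # left dictionary times text
assert matmul(T, D_right) == T              # text times right dictionary
assert matmul(T, T) == T                    # the text is idempotent
assert matmul(D_right, D_left) == D_right
assert matmul(D_left, D_right) == D_right

# A part of the left dictionary acts as a projector: it extracts the
# words at positions 5 and 6 (the repeated "a" and "set").
P = diagonal(n, {5, 6})
fragment = matmul(P, T)
```

Here `fragment` keeps only rows 5 and 6 of the text matrix, which is one way to read the statement that a projector extracts the corresponding part of the text.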

3.3. Fragments

Each word of a matrix text is its minimal fragment. The sum of all minimal fragments is the text itself. In general, the fragments of a matrix text are the polynomials resulting from the product of the left-hand part of the full left-hand dictionary by the whole matrix text. For the sum of any text fragments to be this text it is necessary to understand addition as a matching addition. After such a concordance the intersection of fragments will be excluded.

The algebraic goal of transformations of matrix texts is a reasonable (with the help of phantom multipliers) fragmentation of the original text with a significant reduction of the number of fragments used compared to the combinatorial evaluation.

3.4. Example of a linguistic text

3.5. Example of matrix mathematical text

3.6. Example of matrix Morse code

4. Algebra of text

4.1. Definitions of algebraic systems

A semigroup is a non-empty set in which any pair of elements taken in a certain order has a product that is again an element of the set, and for any three elements the product is associative. Matrix units form a semigroup under multiplication. For matrix units the condition of associativity is satisfied because they are square matrices of the same dimensionality. Matrix units have no inverses (they are singular). The presence of a neutral element (unit matrix) and of inverse elements is not required for a semigroup (unlike a group). A semigroup with a neutral element is called a monoid. Any semigroup that does not contain a neutral element can be turned into a monoid by adjoining to it an element that commutes with all elements of the semigroup and acts as a neutral element, for example a unit matrix of the same dimension for a semigroup of matrix units.

A ring is an algebraic structure: a set on whose elements an invertible addition operation and a multiplication operation, similar in properties to the corresponding operations on numbers, are defined. The result of these operations must belong to the same set. The integers form a ring. Integers can be multiplied and added, and the result is an integer. For integers there are opposite numbers with respect to addition (negative integers), so addition is invertible. The integers are an infinite commutative ring with identity and without zero divisors (an integral domain). In such a ring, the first element (the divisor) divides the second if and only if there exists a third element such that the product of the first by the third is equal to the second.

The ring of integers is a Euclidean ring. A Euclidean ring is a ring for whose elements an analogue of the Euclidean algorithm, based on division with a remainder, is defined. The Euclidean algorithm is an efficient algorithm for computing the greatest common divisor of two integers (or the common measure of two segments).
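For reference, the Euclidean algorithm for integers mentioned above can be written as repeated division with a remainder. This is the standard textbook form; the function name `gcd` is chosen here for illustration.

```python
def gcd(a, b):
    """Greatest common divisor by repeated division with a remainder."""
    a, b = abs(a), abs(b)
    while b:
        # Replace the pair (a, b) by (b, remainder of a divided by b).
        a, b = b, a % b
    return a

print(gcd(1071, 462))  # 21
```

The loop terminates because the remainder is strictly smaller than the divisor at every step, which is the property a Euclidean ring is required to generalize.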

For a ring, an ideal is a subring closed with respect to multiplication by the elements of the ring. An ideal is called left (respectively right) if it is closed with respect to multiplication from the left (respectively right) by the elements of the whole ring. A finitely generated ideal of an associative ring is an ideal that is generated by a finite number of its elements. The simplest example of an ideal is the subring of even numbers in the ring of integers.

Rings are distinguished by their characteristic: the smallest positive integer k such that the product of each element by k (the sum of k instances of this element) equals the zero element of the ring. If no such k exists, then the ring has characteristic zero. For example, the finite field consisting of the two elements 0 and 1 has characteristic 2: the sum of two ones here is zero.

A semi-ring consists of two semigroups (additive and multiplicative) connected by the law of distributivity of multiplication with respect to addition on both sides. For example, the natural numbers form a semi-ring. The result of multiplication of natural numbers is a natural number. But because there are no negative numbers, there are no elements opposite to natural numbers with respect to addition.

An algebra is a ring whose elements can also be multiplied by the elements of some field. A field is also a ring, but one whose elements commute under multiplication and whose nonzero elements are invertible (the product of an element by its inverse is the unit element).

A module over a ring is one of the basic concepts of general algebra; it generalizes the vector space (a module over a field) and the abelian group (a module over the ring of integers). In a vector space, the field is a set of numbers, such as the real numbers, by which vectors can be multiplied. This operation satisfies the corresponding axioms, such as distributivity of multiplication. A module, on the other hand, only requires that the elements by which vectors are multiplied form a ring (associative with unity), such as a ring of matrices, not necessarily a field of real numbers.

An ideal (right or left) can be defined as a submodule of a ring considered as a right or left module over itself.

A semi-module is similar to a module, but it is defined over a semi-ring (no inverse elements).

A module over a ring is free if it has a generating set of linearly independent formants generating that module. The term free means that this set remains generating after linear transformations of the formants. Every vector space, for example, is a free module.

A free semi-module is a free module over a semi-ring.

Algebraic systems are an inverted hierarchical system of concepts (an inverted pyramid), where natural numbers are at the base and various number-like objects on top, with their properties defined by axioms and correspondences («forgetting» some part of properties) between them. For example, complex numbers turn into real numbers by forgetting the imaginary unit, hypercomplex numbers turn into complex numbers by forgetting their matrix nature. Free semi-modules turn into vector spaces when vector coordinates are real numbers, not hypercomplex matrix numbers, and they have no inverse in addition and multiplication (hyperbinary numbers).

4.2. Free semi-module

A text algebra is a free noncommutative semi-module (an associative algebra with a unit) whose elements (matrix units of the text) are commutative in addition and noncommutative in multiplication, and satisfy two relations. The first relation determines the multiplication of matrix units (semigroup by multiplication). The second relation determines the addition of matrix units by concordance. The result of such addition satisfies the rules and formula for text coordination (semigroup by addition). The sum of the text matrix units will be the text matrix unit.

A semi-ring consists of two semigroups. The multiplicative semigroup and the additive semigroup are related by the law of distributivity of multiplication with respect to addition on both sides, since their elements are square binary matrices which are distributive with respect to their joint multiplication and addition.

4.3. Fragment algebra

Matrix text fragments have the following algebraic properties:

1. The dividend, divisor and quotient are defined for any matrix text fragments in almost the same way as for integers. The fragments are hyperbinary numbers. Each fragment has a corresponding left and right phantom multiplier.

2. The relation of divisibility (or multiplicity) of fragments is reflexive, as for integers (a fragment is divisible by itself). However, the quotient matrix is not always unique. Uniqueness and diagonality of the quotient matrix are restored by using concordance addition. The non-uniqueness is caused by possible repetitions of indices in the matrix units of text fragments.

3. The divisibility (multiplicity) relation is transitive.

4-7. These properties describe the multiplication and addition of divisibility relations, similar to those for integers.

7. Properties of right and left multiplication of multiples by combinations of matrix units (matrix polynomials) distinguish divisibility (multiplicity) of integers from divisibility (multiplicity) of number-like elements (hyperbinary numbers) as polynomials of matrix units. For integers, the product of a multiple by an integer on the left or on the right always exists. In the case of hyperbinary numbers it does not always exist.

8, 9. The criterion of divisibility of matrix text fragments is the divisibility (multiplicity) of their right and left dictionaries.

10-18. Definitions and criteria of the common divisor, the greatest common divisor (GCD), coprimality, and the common multiple.

19, 20. The left ideal of a matrix text is the corpus of all texts (all possible first coordinates) which can be composed from the words of a given right dictionary (second coordinates). Indeed, the left ideal is the set of all matrix polynomials, which are multiplied from the left by the right dictionary. The multiplication results in polynomials whose second coordinates are only those available in the dictionary. Also, when any polynomial is multiplied on the left by another polynomial, all second indices of the resulting matrix polynomial form a subset of the second indices of the polynomial being multiplied. Any matrix polynomial generates a left ideal of polynomials that have the same right dictionary or a smaller one. When textual matrix polynomials are added by concordance, the result is a textual polynomial: the polynomial matrix is binary and there is at most one unit in each row of the matrix.

If a textual hyperbinary number (after adding the monomials that make it up) is multiplied on the left or right by any element of a matrix semi-ring, this hyperbinary number generates a left or right ideal: all matrix units that are multiples on the left or right of the given matrix unit. This is analogous to the fact that multiplying an even number by any integer results in an even number.

The principal left and right ideals are generated by each matrix unit of the dictionaries. The left and right ideals of a matrix semi-ring are generated by the sum of the generating elements of the principal ideals.

21. Ideals of matrix texts, by analogy with ideals of integers, allow to investigate not only specific texts and fragments, but also their sets (classes). The theorems for ideals of texts are the same as for ideals of integers, but taking into account that matrix words are noncommutative and some of them are divisors of zero.

22. The notion of divisibility of matrix texts is generalized to the divisibility of ideals of matrix texts. The properties of divisibility of matrix fragments of the text also hold for the division of ideals. The notions of GCD and LCM are also generalized to the case of ideals of matrix texts.

23. Congruences of integers are also generalized to the case of matrix texts. Fragments of matrix texts are congruent modulo (by the measure of) some fragment if the remainders from dividing these fragments by the given fragment are multiples. If the remainders are multiples, then they have the same dictionaries. Therefore fragments are congruent modulo a given fragment if the remainders from dividing the fragments by the given fragment have the same dictionaries. Congruence of texts modulo some text can be interpreted as follows. Let there be a corpus of English. Six books are chosen which most closely correspond to the six basic plots of Shakespeare. The matrix text of these six books is the common fragment. Then the books whose remainders from dividing their matrix texts by the common fragment are multiples are congruent. This means that it is possible to make a catalog of books for those who are interested not only in Shakespearean plots. The multiplicity of remainders is the classifying feature for this catalog. There are six residue classes in this example. By taking only three books, for example, one can compare the entire corpus of English with only three plots out of six. If one has ten favorite books or authors, one can classify the corpus of the language in terms of differences from this top ten.

24. For residue classes of matrix texts, operations of modular arithmetic are performed, taking into account that, as for ideals, matrix words and fragments are noncommutative and some of them can be divisors of zero.

25. The notion of solving congruences also generalizes to matrix texts. To solve a system of congruences modulo some fragment means to find all residue classes such that any combinations of matrix fragment units from these classes satisfy the congruence equation.

The unknowns in the congruence equation are the coordinates of the matrix units in the text fragments. The result of solving a system of congruences is such a replacement of words and/or places of words in the text that the congruence equation is satisfied. For example, if a person has read ten books, then the remaining books are edited into the vocabulary and phrases of those ten books by the solution of the congruence. If there is a particular solution of the congruence, then the general solution is the residue class of which the particular solution (e.g., the working version of the text) is a representative. Then the current version of the matrix text corresponds to the set of possible matrix texts corresponding to the solutions of the system of congruences. This property of matrix texts can be used in the creation of texts by predicting a variant continuation of a fragment of text (auto-author).

26. Euclid’s algorithm for polynomials of matrix units is simpler than for integers. The incomplete quotient is found in one step and depends on the number of common second coordinates. These common coordinates are defined as the incomplete quotient of the dictionaries of the polynomials being divided. The incomplete quotient of the fragment dictionaries is found uniquely, in contrast to the incomplete quotient of the fragments themselves, because there are no repetitions in each of the fragment dictionaries.

The ring of integers is Euclidean. The free noncommutative semi-module of hyperbinary numbers is Euclidean.
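The closure statement in properties 19 and 20 (second indices of a left product form a subset of the second indices of the right factor) can be checked on an example. The following Python sketch is illustrative only: the helper names, the 0-based indices and both sample texts are assumptions made for the example.

```python
# Illustrative sketch; helper names and sample texts are hypothetical.

def text_matrix(words):
    """Square binary matrix with a 1 at (i, first occurrence of word i)."""
    n = len(words)
    first_seen = {}
    t = [[0] * n for _ in range(n)]
    for i, word in enumerate(words):
        t[i][first_seen.setdefault(word, i)] = 1
    return t

def matmul(a, b):
    """Ordinary product of two square matrices of the same size."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def second_indices(m):
    """Set of column numbers (second indices) that contain a nonzero element."""
    return {j for row in m for j, x in enumerate(row) if x}

T = text_matrix("a set of objects is a set".split())
P = text_matrix("x y x y x y x".split())  # another polynomial of the same size

product = matmul(P, T)

print(sorted(second_indices(T)))        # [0, 1, 2, 3, 4]
print(sorted(second_indices(product)))  # [0, 1]
```

In this example the product uses only second indices already present in `T`, so it stays inside the set of polynomials whose right dictionary is the same as, or smaller than, that of `T`.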

5. Algebraic structurization

5.1. Structurization

Structure is the totality and arrangement of the links between the parts of the whole. The signs of a structured text are: headings of different levels of fragments (paragraphs, chapters, volumes, the whole text); summaries (preface, introduction, conclusion, annotation, abstract as an extended annotation); context and frequency dictionaries; dictionaries of synonyms, antonyms and homonyms; marking of text-forming fragments with separators (commas, periods, marks of paragraphs and chapters).

The listed structural features are the corresponding parts (fragments) of the text. For the polynomial representation of a matrix text, some such parts are the corresponding noncommutative Gröbner-Shirshov bases of the free noncommutative semi-module of hyperbinary numbers (text algebra).

5.2. Example of a linguistic text

Algebraic structuring of the example text is done by transforming, using properties of matrix units, the original matrix text in additive form into multiplicative form (similar to long division of ordinary polynomials). The corresponding commutators are the noncommutative analog of the Gröbner-Shirshov basis for commutative polynomials. The diamond lemma is satisfied: the summands have overlaps to the right of the second index, but they are resolvable.

During the transformation (reduction) the vocabulary of the text is also transformed. In the new vocabulary (the basis of the ideal) there are new words. The words as signs are the same, but the meaning of repeated words in the text changes. Words are defined by contexts. Words are close if their contexts contain at least one word in common. Contexts are closer the more common words from the corresponding dictionary (common second indices) they contain.

In natural languages, the multiplicity of word contexts is the cause of ambiguity in understanding the meaning of words. The meaning according to Frege is the corresponding part of the meanings of the sign (word). The meanings of a word are all its contexts (properties).

The right dictionary in the beginning of structurization was a dictionary of signs-words. In the process of structurization it is converted into context-dependent matrix constructions of n-grams (combinations of word-signs, taking into account their mutual order and distance in the text). The semantic partitioning of the text is based on extending the original vocabulary of the text with homonyms (the signs are the same, the context meaning is different), and the text itself is already constructed using such an extended vocabulary from the noncommutative Gröbner-Shirshov basis.

The marked text, after the first separation of homonyms and their introduction into the extended dictionary, can be algebraically structured again for a finer semantic partitioning.

The extended dictionary (Gröbner-Shirshov basis) together with the contexts of repeated words is called the matrix context dictionary of the text.

The matrix synonym dictionary is a fragment of the context dictionary for words that have contexts close in semantic distance but that differ as signs in the right dictionary. Semantic distance is a measure of synonymy.

The matrix dictionary of homonyms is a fragment of the context dictionary for words that are the same as signs, but with zero semantic distance.

A matrix dictionary of antonyms is a fragment of the context dictionary for words with opposite contexts. A sign of opposites in linguistic texts is the presence of negative words (particles, pronouns, and adverbs) in contexts.

The hierarchical headings of the matrix text are fragments of the Gröbner-Shirshov basis which have the corresponding frequency of words of the synonym dictionary. For example, for the example of a linguistic text, the highest heading is the two bigrams «set object» «object set».

The preface, the introduction, the conclusion, the annotation and the abstract are the headings supplemented with elements of the Gröbner-Shirshov basis of lower frequency and with the residues included in the basis (as in the Buchberger algorithm).

The repetitive words are defined by the frequency matrix dictionary of the text, which is equal to the product of the transposed text by the matrix text itself.

The list of contexts is defined by the context matrix dictionary, which is equal to the product of the matrix text by the transposed text. The context matrix dictionary is a dictionary of intervals between repeating words of the text. The context of non-repeating words is the whole character sequence containing them. The context of the dictionary is the vocabulary.
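The two matrix products named above can be computed directly for a short text. The sketch below is an illustration under stated assumptions: the helper names, the 0-based indices and the sample sentence are hypothetical, and the reading of the results (frequencies on the diagonal, marks at pairs of positions holding the same word) is what this particular construction yields, not a formula quoted from the text.

```python
# Illustrative sketch; helper names and the sample sentence are hypothetical.

def text_matrix(words):
    """Square binary matrix with a 1 at (i, first occurrence of word i)."""
    n = len(words)
    first_seen = {}
    t = [[0] * n for _ in range(n)]
    for i, word in enumerate(words):
        t[i][first_seen.setdefault(word, i)] = 1
    return t

def matmul(a, b):
    """Ordinary product of two square matrices of the same size."""
    n = len(a)
    return [[sum(a[i][k] * b[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]

def transpose(m):
    return [list(column) for column in zip(*m)]

words = "a set of objects is a set".split()
T = text_matrix(words)

# Transposed text times text: a diagonal matrix of word frequencies.
frequency = matmul(transpose(T), T)
print([frequency[j][j] for j in range(len(words))])
# [2, 2, 1, 1, 1, 0, 0]

# Text times transposed text: a 1 at (i, k) when positions i and k
# hold the same word, which marks the ends of a repetition interval.
context = matmul(T, transpose(T))
print(context[0][5], context[1][6], context[0][1])
# 1 1 0
```

The positions of the off-diagonal ones in `context` give the pairs of places between which a word repeats, which is the information needed to list the intervals between repeating words.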

The text can be restructured with a fragment of the basis. For example, the novel War and Peace can be restructured into a medical theme by using a dictionary fragment related to the scene of field surgery, and decomposing the entire text modulo this fragment of the general Gröbner-Shirshov basis. In doing so, the supreme title may change. The existing title of the novel (the supreme title) is considered controversial. The Russian word «мир» has two different meanings (an antonym of «war» and a synonym for «society»). In 1918, Russian orthography was reformed. The letters «ъ» and «і» disappeared. The two words «миръ» (peace) and «міръ» (world, society) became one word, possibly changing the author’s meaning of the novel’s title. Using algebraic structurization, it is possible to calculate the text title as a function of the text, using the two texts (and the two calculated context dictionaries of the novel) before and after the spelling reform.

Two texts under algebraic structurization turn into one text with a unique first coordinate of matrix words as follows. Let each unique first coordinate of a word turn into two indexes. The first is the number of the text, the second is the number of the word in this text. Then pairs of indexes of two texts are numbered with one index and turned into one character sequence with unique numbers (concatenation of texts).
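The renumbering step can be sketched as follows. The function name `concatenate` and the placeholder words are hypothetical; the sketch assumes 1-based numbering of texts and of words within each text.

```python
def concatenate(texts):
    """Renumber (text number, word number) index pairs with one running index.

    Returns the mapping from index pairs to the new unique index, together
    with the single concatenated word sequence.
    """
    numbering = {}
    sequence = []
    for text_number, words in enumerate(texts, start=1):
        for word_number, word in enumerate(words, start=1):
            sequence.append(word)
            numbering[(text_number, word_number)] = len(sequence)
    return numbering, sequence


first_text = ["a", "b", "a"]   # placeholder words
second_text = ["b", "c"]
numbering, sequence = concatenate([first_text, second_text])

print(numbering[(2, 1)])  # 4: the first word of the second text
print(sequence)           # ['a', 'b', 'a', 'b', 'c']
```

Every first coordinate stays unique after the renumbering, so the two texts can be treated as one character sequence.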

The meaning of a text and its understanding are determined by the motivation and personal context vocabulary of the reader. If these are known, the author’s text, presented in matrix form, can be restructured into a text that is as understandable to the reader as possible (in the reader’s personal Gröbner-Shirshov basis), while keeping elements of the unknown, stated in the reader’s personal language, and adding to or clarifying the reader’s personal context vocabulary.

Personal adaptation of texts through their restructuring is possible. To understand a text is to put it into one’s own words, which is the basic technique of semantic reading. For texts in matrix form, to understand a text means to decompose and restructure the author’s text over one’s own Gröbner-Shirshov basis.

Restructuring requires an algebraic structuring of the corpus of texts in order to compose the above vocabularies of the language corpus. In this case the ideals and classes of deductions of the matrix ring of the corpus of matrix texts should be constructed and investigated beforehand. In the Bergman-Cohn structure theory, free (finitely generated) matrix rings are related to rings of noncommutative polynomials over skew fields in the same way that commutative principal ideal domains are related to rings of polynomials in one variable over a field.

In a free semiring, the polynomials of a text are connected by relations defined by the interval and semantic extended vocabularies of the corpus of texts. A particular matrix text can be defined by a system of polynomial equations on text coordinates (the unknowns in the equations are monomials with unknown indices; the noncommutative coefficients are monomials with known indices). Some fragments will be given by extended dictionaries or by inequalities, and some will be unknowns. In this case it is possible to set headings and summaries by equations and to compute a draft text from systems of polynomial equations (the inverse problem of structurization, that is, restructuring). It is also possible to find the necessary redistribution of text-forming fragments, to replace some dictionaries with others, to change the significance of repeated words, and to define neologisms.

5.3. An example of a mathematical text

The method of algebraic structuring of texts allows us to find appropriate classifiers and dictionaries for texts of different kinds, that is, to classify texts without setting the classification features or naming the classes a priori. Such classification is called categorization, or a posteriori classification. Using the example of a mathematical text, five classification attributes, their combinations, and the corresponding classes are calculated. The names of the classes coincide with the names of the features and their combinations.

5.4. Example of Morse code

Morse code is algebraically structured into three ideals (classes) by the corresponding noncommutative Gröbner-Shirshov bases.

The title of those letters that have a «dash» sign in the first place of the 4-character sequence pattern:

_BCD__G___K_MNO_Q__T___XYZ (13 letters)

The title of those letters that have a «dot» sign on the second place of the 4-character sequence pattern:

_BCD_F_HI_K__N____S_UV_XY_ (13 letters)

The title of those letters that have a «dash» sign in the third place of the 4-character sequence pattern:

__C__F___JK___OP____U_W_Y_ (9 letters)
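The three titles can be recomputed from the standard International Morse alphabet. The sketch below is only a direct check of the letter masks, not the Gröbner-Shirshov computation itself; the function name `title` is a hypothetical helper.

```python
MORSE = {
    "A": ".-",   "B": "-...", "C": "-.-.", "D": "-..",  "E": ".",
    "F": "..-.", "G": "--.",  "H": "....", "I": "..",   "J": ".---",
    "K": "-.-",  "L": ".-..", "M": "--",   "N": "-.",   "O": "---",
    "P": ".--.", "Q": "--.-", "R": ".-.",  "S": "...",  "T": "-",
    "U": "..-",  "V": "...-", "W": ".--",  "X": "-..-", "Y": "-.--",
    "Z": "--..",
}


def title(place, sign):
    """Return the 26-character mask of letters whose code has `sign`
    at the given 1-based `place`; other letters are shown as '_'."""
    return "".join(
        letter if len(code) >= place and code[place - 1] == sign else "_"
        for letter, code in sorted(MORSE.items())
    )


print(title(1, "-"))  # _BCD__G___K_MNO_Q__T___XYZ
print(title(2, "."))  # _BCD_F_HI_K__N____S_UV_XY_
print(title(3, "-"))  # __C__F___JK___OP____U_W_Y_
```

Letters whose code is shorter than the requested place simply fall outside the class, which is why short codes such as E and T are absent from the second and third masks.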

6. Context category

6.1. Definitions.

The context of a word in a matrix text is a fragment of that text: the sum of matrix units (words) between two repeated matrix words. In other words, a context is all the words of a matrix text between repeated signs of the dictionary, for example between repeated words, periods, or marks of paragraphs, chapters, and volumes in language texts, or between marks of phrases, periods, and parts in musical works.

The signs of text fragments look the same, but they are homonymous marks: their contexts are the corresponding fragments. The context of a linguistic fragment (an explication or explanation) can be not only a linguistic text but also audio (for example, music), an image (a photo), or a combination (video). The context of a musical text can be a linguistic text (e.g., a libretto).

Matrix words correspond to their matrix contexts, represented as algebraic objects. All possible relations between these objects are the subject of analysis in determining the meaning of words. Category theory is useful for the study of such constructions because it is based on the notion of transitivity.

The category of the text sign context is defined as follows:

- Category objects are pairwise multiple contexts.

- For each pair of multiple objects there exists a set of morphisms (right and left parts); each morphism corresponds to a single context.

- For a pair of morphisms there is a composition (the product of the square matrices of two quotients): given the quotient of the first and second contexts and the quotient of the second and third contexts, the quotient of the first and third contexts equals the product of the matrices of these two quotients (taking right and left products into account). This is the transitivity condition.

- A unit matrix is defined for each object as the identity morphism. Categorical associativity follows from the associativity of matrix multiplication.

The intersection (common words) of matrix dictionaries is their product. The proof follows from the defining property of matrix units and the definition of dictionaries. When the matrix units of dictionaries are multiplied (the lower indices are the same in each unit), the product of their matrix words (units) with different indices is equal to zero. In the product there remain only common words with matching indices of all the multipliers.

The union of any pair of dictionaries is their sum minus the intersection (deleted repetitions of matrix units).
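The two identities can be checked on small diagonal matrices. This is a sketch under an assumption: a dictionary is represented as a diagonal 0/1 matrix, that is, a sum of matrix units with equal indices. The helper names are hypothetical.

```python
def dictionary(indices, size):
    """Diagonal 0/1 matrix: the sum of matrix units E_jj for j in indices."""
    return [[1 if i == j and i in indices else 0 for j in range(size)]
            for i in range(size)]


def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, column)) for column in zip(*b)]
            for row in a]


def add(a, b):
    return [[x + y for x, y in zip(r, s)] for r, s in zip(a, b)]


def sub(a, b):
    return [[x - y for x, y in zip(r, s)] for r, s in zip(a, b)]


def words_of(matrix):
    """Indices of the matrix units present on the diagonal."""
    return {i for i in range(len(matrix)) if matrix[i][i]}


first = dictionary({0, 1, 2}, 4)
second = dictionary({1, 2, 3}, 4)

intersection = matmul(first, second)                # product keeps common words
union = sub(add(first, second), intersection)       # sum minus repetitions

print(sorted(words_of(intersection)))  # [1, 2]
print(sorted(words_of(union)))         # [0, 1, 2, 3]
```

Units with different indices multiply to zero, so only the words shared by both dictionaries survive in the product, and subtracting the product from the sum removes the double-counted units.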

The minimal right dictionary of a matrix text fragment is a dictionary of the text such that the dictionary and the fragment are mutual multiples. For a text and a right dictionary that are mutual multiples, nonzero matrices of their quotients exist. The quotients exist if the matrix units of the text and of the right dictionary contain the same second indices (coordinates) and no other second indices.

Minimal dictionaries do not contain matrix words (second indices of matrix units) that are absent in the corresponding text fragment.

The equivalence classes of contexts are defined by the common minimal right dictionaries. If a pair of contexts has a common minimum dictionary, then these contexts are mutually multiple. Hence, there are their mutual transformations (matrices).

If the sign-word contexts have a minimum common right vocabulary, then they are multiples of each other. Hereinafter, the dictionaries of text fragments mean their minimal dictionaries.

If the given contexts are multiplied by the right dictionary such that each resulting context has a right dictionary (minimum), they are called reduced contexts. During the reduction (multiplication by the dictionary on the right) the part of matrix units with second indices, which are not in the corresponding dictionary, is removed in each of the given fragments. If any of the obtained fragments lacks at least one of the dictionary indices, it should not get into the reduced set.
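The reduction step can be sketched with small matrices. The representation of a fragment as a 0/1 matrix built from (first index, second index) pairs, the toy fragments, and the function names are all assumptions made for illustration.

```python
def fragment(units, size):
    """0/1 matrix that is the sum of matrix units E_ij for (i, j) in units."""
    return [[1 if (i, j) in units else 0 for j in range(size)]
            for i in range(size)]


def dictionary(indices, size):
    return fragment({(j, j) for j in indices}, size)


def matmul(a, b):
    return [[sum(x * y for x, y in zip(row, column)) for column in zip(*b)]
            for row in a]


def second_indices(matrix):
    return {j for row in matrix for j, value in enumerate(row) if value}


def reduce_contexts(contexts, indices, size):
    """Multiply each context by the dictionary on the right and keep only
    the results that still contain every index of the dictionary."""
    right = dictionary(indices, size)
    reduced = []
    for context in contexts:
        product = matmul(context, right)  # drops units with other second indices
        if second_indices(product) == set(indices):
            reduced.append(product)
    return reduced


contexts = [
    fragment({(0, 0), (1, 1), (2, 2)}, 4),
    fragment({(0, 1), (1, 2), (2, 3)}, 4),
    fragment({(0, 0), (1, 3)}, 4),       # shares no word with the dictionary
]
reduced = reduce_contexts(contexts, {1, 2}, 4)

print(len(reduced))                                # 2
print([sorted(second_indices(r)) for r in reduced])  # [[1, 2], [1, 2]]
```

The third toy fragment lacks both dictionary indices, so it does not enter the reduced set, while the first two are cut down to the shared vocabulary.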

Contexts with shared vocabularies, for example, after reduction of some word-sign from the dictionary, are objects of the category of that sign.

A transitive closure can be defined for any set of fragments by specifying for them a common vocabulary that is less than or equal to any fragment in the order of the corresponding Noetherian chain.

6.2. Example

The same example of a linguistic text is used, in which there are four occurrences of the word «set» that are identical as signs. These four signs, in turn, have four contexts and four corresponding vocabularies.

The problem is to calculate the sameness and difference of the four words «set» depending on the sameness and difference, in some measure (modulo), of their contexts. The sameness of contexts is determined by the presence of common vocabularies, which are used as a module for comparing contexts. The difference is determined by the deductions of the contexts by the same module. The deductions define their own equivalence classes (classes of deductions) and categories of deductions, since transitive closure can also occur for them.

The general vocabulary of the four contexts is constructed as their product. Transitive closure on the common vocabulary-module leads to the removal of «superfluous» words.

Thus, the reduced contexts of the sign-word «set» are the four corresponding matrix words. These words have the same matrix unit of the sign-word «object» in the unified matrix dictionary (see Dictionary Unification). The category of this sign is computed: the four matrix morphisms and their composition. The composition expresses, in the language of category theory, the interval partitioning of the word «set» (see the chapter on algebraic structurization), and the reduction is an example of solving a system of congruences modulo a minimal dictionary. Category theory is useful here because its approach is more general and allows the use of methods from different branches of algebra.

Thus, all four fragments of the text are the same (equivalent) in the sense of the matrix-word sign «set» (comparable modulo this word). There are four matrix morphisms transforming these texts into each other. By analogy with a library catalog, all four texts (objects of the category of the matrix word «set») are in the same catalog box, labeled with the matrix word «set». This is an example of a crude keyword classification of texts. The contextual meaning of words is not taken into account: all such words are the same as signs, and all their occurrences in the text can be added up to calculate the significance of keywords by frequency of use.

The obtained result means that, to a first approximation, all four words «set» are contextually related to the word «object». The words «set» are the same or different according to whether their reduced contexts are the same or different.

For matrix texts, comparisons are performed modulo a fragment. Dividing fragments of matrix texts by other fragments (modules) can leave residues (deductions), which, like the modules, are classifying features.

The sign of divisibility (multiplicity) of fragments of matrix texts is the divisibility (multiplicity) of their right dictionaries. The residues of division of the dictionaries (deductions of dictionaries) of fragments are the dictionaries of the residues of division of these fragments.

To calculate the similarities and differences of the words, the corresponding four contexts must be compared modulo the matrix word «object». The deduction of each of the four contexts modulo the matrix word «object» is calculated.

It follows from the result that all four contexts are incomparable modulo matrix word «object». The deductions are not pairwise multiples and do not form any class of deductions in pairs. This means that all «set» words are different in sense (context).

The similarity is found in the next step of calculations (for deductions) by calculating common vocabularies for pairs of deductions and performing the reduction. There is no common dictionary for all deductions. This is the reason why there is no common class of deductions and no corresponding category of the matrix word «object». But three pairs of deductions have three corresponding common vocabularies. After reduction, these pairs of deductions form classes and categories of deductions with the matrix word names «this», «being» and «point». The catalog with the matrix name «this» contains the first and second fragments, the catalog with the matrix name «being» contains the first and third fragments, and the catalog with the matrix name «point» contains the second and fourth fragments.
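The pairwise step can be illustrated with plain sets. The four vocabularies below are hypothetical toy data, chosen only so that the outcome matches the result stated in this example; they are not the actual deductions computed from the example text.

```python
from itertools import combinations

# Hypothetical vocabularies of the four deductions (toy data, not the
# real ones): each set lists the words assumed to remain in a deduction.
deductions = {
    1: {"this", "being"},
    2: {"this", "point", "polynomial"},
    3: {"being", "monom"},
    4: {"point", "coinvariant"},
}

# No word is common to all four deductions, so there is no common class.
common_to_all = set.intersection(*deductions.values())

# Pairs of deductions that share a vocabulary form the catalogs.
catalogs = {}
for (i, first), (j, second) in combinations(sorted(deductions.items()), 2):
    shared = first & second
    if shared:
        catalogs[(i, j)] = shared

print(common_to_all)  # set()
print(catalogs)       # {(1, 2): {'this'}, (1, 3): {'being'}, (2, 4): {'point'}}
```

Set intersection here plays the role of the product of dictionaries: a word survives only if it occurs in both deductions of the pair.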

The matrix word «polynomial» is an annihilator (zero divisor) of the deductions of the first, third, and fourth fragments.

The matrix word «monom» is an annihilator (zero divisor) of the deductions of the first, second, and fourth fragments.

The matrix word «coinvariant» is an annihilator (zero divisor) of the deductions of the first, second, and third fragments.

These are context-free matrix words (the last three summands in the context dictionary of the chapter on algebraic structurization): when a deduction is multiplied by an annihilator, the product is different from zero if the deduction contains this annihilator.

So the problem statement of the above example was to calculate the sameness and difference of the four matrix words «set» depending on the sameness and difference of their four contexts (fragments) by some measure (modulo).