Word Processing

Andrew Prestage, in Encyclopedia of Information Systems, 2003

I. An Introduction to Word Processing

Word processing is the act of using a computer to transform written, verbal, or recorded information into typewritten or printed form. This chapter will discuss the history of word processing, identify several popular word processing applications, and define the capabilities of word processors.

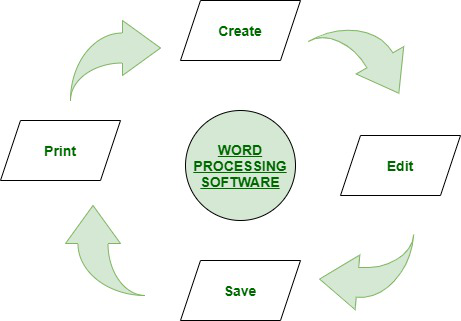

Of all the computer applications in use, word processing is by far the most common. The ability to perform word processing requires a computer and a special type of computer software called a word processor. A word processor is a program designed to assist with the production of a wide variety of documents, including letters, memoranda, and manuals, rapidly and at relatively low cost. A typical word processor enables the user to create documents, edit them using the keyboard and mouse, store them for later retrieval, and print them to a printer. Common word processing applications include Microsoft Notepad, Microsoft Word, and Corel WordPerfect.

Word processing technology allows human beings to freely and efficiently share ideas, thoughts, feelings, sentiments, facts, and other information in written form. Throughout history, the written word has provided mankind with the ability to transform thoughts into printed words for distribution to hundreds, thousands, or possibly millions of readers around the world. The power of the written word to transcend verbal communications is best exemplified by the ability of writers to share information and express ideas with far larger audiences and the permanency of the written word.

The increasingly large collective body of knowledge is one outcome of the permanency of the written word, including both historical and current works. Powered by decreasing prices, increasing sophistication, and widespread availability of technology, the word processing revolution changed the landscape of communications by giving people hitherto unavailable power to make or break reputations, to win or lose elections, and to inspire or mislead through the printed word.

URL:

https://www.sciencedirect.com/science/article/pii/B0122272404001982

Computers and Effective Security Management

Charles A. Sennewald, Curtis Baillie, in Effective Security Management (Sixth Edition), 2016

Word Processing

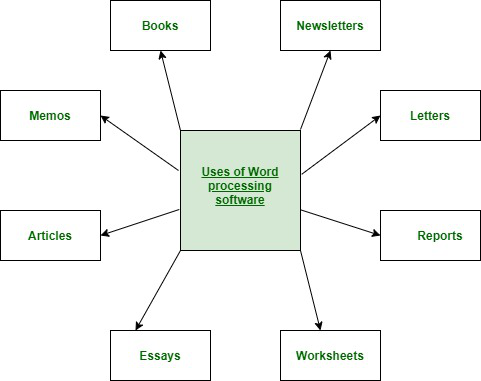

Word processing software can easily create, edit, store, and print text documents such as letters, memoranda, forms, employee performance evaluations (such as those in Appendix A), proposals, reports, security surveys (such as those in Appendix B), general security checklists, security manuals, books, articles, press releases, and speeches. A professional-looking document can be easily created and readily updated when necessary.

The length of created documents is limited only by the storage capabilities of the computer, which are enormous. Also, if multiple copies of a working document exist, changes to it should be promptly communicated to all persons who use the document. Specialized software, using network features, can be programmed to automatically route changes to those who need to know about updates.

URL:

https://www.sciencedirect.com/science/article/pii/B9780128027745000241

Globalization

Jennifer DeCamp, in Encyclopedia of Information Systems, 2003

II.D.2.c. Rendering Systems

Special word processing software is usually required to correctly display languages that are substantially different from English, for example:

1. Connecting characters, as in Arabic, Persian, Urdu, Hindi, and Hebrew

2. Different text direction, as in the right-to-left capability required in Arabic, Persian, Urdu, and Hebrew, or the right-to-left and top-to-bottom capability in formal Chinese

3. Multiple accents or diacritics, such as in Vietnamese or in fully vowelled Arabic

4. Nonlinear text entry, as in Hindi, where a vowel may be typed after the consonant but appears before the consonant.

Alternatives to providing software with appropriate character rendering systems include providing graphic files or elaborate formatting (e.g., backwards typing of Arabic and/or typing of Arabic with hard line breaks). However, graphic files are cumbersome to download and use, are space consuming, and cannot be electronically searched except by metadata. The second option of elaborate formatting often does not look as culturally appropriate as properly rendered text, and usually loses its special formatting when text is added or is upgraded to a new system. It is also difficult and time consuming to produce. Note that Microsoft Word 2000 and Office XP support the above rendering systems; Java 1.4 supports the above rendering systems except for vertical text.
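Several of the rendering challenges listed above can be observed in the Unicode character data itself. The following is a minimal sketch using Python's standard `unicodedata` module; the sample characters are chosen for illustration and are not taken from the source.

```python
import unicodedata

# Nonlinear entry: in Hindi "ki", the consonant is stored first and the
# vowel sign second, although the sign is drawn to the left of the consonant.
ki = "\u0915\u093f"
stored_order = [unicodedata.name(ch) for ch in ki]

# Text direction: each character carries a bidirectional class that a
# rendering system consults ("L" left-to-right, "R"/"AL" right-to-left).
bidi_classes = {
    "Latin a": unicodedata.bidirectional("a"),
    "Hebrew alef": unicodedata.bidirectional("\u05d0"),
    "Arabic alef": unicodedata.bidirectional("\u0627"),
}

# Multiple diacritics: Vietnamese "e with circumflex and dot below" decomposes
# into a base letter plus two combining marks that the renderer must stack.
decomposed = unicodedata.normalize("NFD", "\u1ec7")
mark_count = sum(1 for ch in decomposed if unicodedata.combining(ch))

print(stored_order)
print(bidi_classes)
print(len(decomposed), mark_count)
```

The sketch only inspects stored character properties; actually shaping and positioning the glyphs is the job of the rendering system the text describes.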

URL:

https://www.sciencedirect.com/science/article/pii/B0122272404000800

Text Entry When Movement is Impaired

Shari Trewin, John Arnott, in Text Entry Systems, 2007

15.3.2 Abbreviation Expansion

Popular word processing programs often include abbreviation expansion capabilities. Abbreviations for commonly used text can be defined, allowing a long sequence such as an address to be entered with just a few keystrokes. With a little investment of setup time, those who are able to remember the abbreviations they have defined can find this a useful technique. Abbreviation expansion schemes have also been developed specifically for people with disabilities (Moulton et al., 1999; Vanderheiden, 1984).
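As a rough illustration of how such a feature might work, the sketch below replaces user-defined abbreviations with their stored expansions. The abbreviation table and the function name `expand_abbreviations` are hypothetical and do not describe any particular word processor.

```python
import re

# Hypothetical user-defined abbreviations (not from the source).
ABBREVIATIONS = {
    "addr": "12 Example Street, Springfield",
    "br": "Best regards",
}

def expand_abbreviations(text: str, table: dict[str, str]) -> str:
    """Replace each whole-word abbreviation with its stored expansion."""
    if not table:
        return text
    # Word boundaries keep "br" from matching inside a longer word like "abbr".
    pattern = re.compile(r"\b(" + "|".join(map(re.escape, table)) + r")\b")
    return pattern.sub(lambda m: table[m.group(1)], text)

print(expand_abbreviations("Send it to addr. br, Sam", ABBREVIATIONS))
```

Matching on whole words is one possible design choice; real products typically expand as the user types and may require a trigger key.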

Automatic abbreviation expansion at phrase/sentence level has also been investigated: the Compansion (Demasco & McCoy, 1992; McCoy et al., 1998) system was designed to process and expand spontaneous language constructions, using Natural Language Processing to convert groups of uninflected content words automatically into full phrases or sentences. For example, the output sentence “John breaks the window with the hammer” might derive from the user input text “John break window hammer” using such an approach.

With the rise of text messaging on mobile devices such as mobile (cell) phones, abbreviations are increasingly commonplace in text communications. Automatic expansion of many abbreviations may not be necessary, however, depending on the context in which the text is being used. Frequent users of text messaging can learn to recognize a large number of abbreviations without assistance.

URL:

https://www.sciencedirect.com/science/article/pii/B9780123735911500152

Case Studies

Brett Shavers, in Placing the Suspect Behind the Keyboard, 2013

Altered evidence and spoliation

Electronic evidence in the form of word processing documents which were submitted by a party in litigation is alleged to have been altered. Altered electronic evidence has become a common claim with the ability to determine the changes becoming more difficult. How do you know if an email has been altered? What about a text document?

Case in Point

Odom v Microsoft and Best Buy, 2006

The Odom v Microsoft and Best Buy litigation primarily focused on Internet access offered to customers in which the customers were automatically billed for Internet service without their consent. One of the most surprising aspects of this case involved the altering of electronic evidence by an attorney for Best Buy. The attorney, Timothy Block, admitted to altering documents prior to producing the documents in discovery to benefit Best Buy.

Investigative Tips: All evidence needs to be validated for authenticity. The weight given in legal hearings depends upon the veracity of the evidence. Many electronic files can be quickly validated through hash comparisons. An example seen in Figure 11.4 shows two files with different file names, yet their hash values are identical. If one file is known to be valid, perhaps an original evidence file, any file matching the hash values would also be a valid and unaltered copy of the original file.

Figure 11.4. Two files with different file names, but having the same hash value, indicating the contents of the files are identical.

Alternatively, Figure 11.5 shows two files with the same file name but having different hash values. If there were a claim that both of these files are the same original files, it would be apparent that one of the files has been modified.

Figure 11.5. Two files with the same file names, but having different hash values, indicating the contents are not identical.
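The comparison illustrated by the two figures can be sketched with Python's standard `hashlib` module. This is a minimal sketch, not a forensic tool: the file names and contents are invented for the demonstration, and SHA-256 is used by default as an assumption (pass `"md5"` to match the hash type the text mentions).

```python
import hashlib
import os
import tempfile

def file_hash(path: str, algorithm: str = "sha256") -> str:
    """Return the hex digest of a file, read in chunks to handle large files."""
    digest = hashlib.new(algorithm)
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()

def same_contents(path_a: str, path_b: str) -> bool:
    """True when both files hash to the same value; file names are ignored."""
    return file_hash(path_a) == file_hash(path_b)

# Demonstration with throwaway files (hypothetical names and contents).
with tempfile.TemporaryDirectory() as folder:
    original = os.path.join(folder, "contract.doc")
    renamed_copy = os.path.join(folder, "copy_of_contract.doc")
    edited = os.path.join(folder, "contract_v2.doc")
    for path, data in [(original, b"Payment due in 30 days"),
                       (renamed_copy, b"Payment due in 30 days"),
                       (edited, b"Payment due in 90 days")]:
        with open(path, "wb") as handle:
            handle.write(data)
    copy_matches = same_contents(original, renamed_copy)
    edit_matches = same_contents(original, edited)

print(copy_matches, edit_matches)
```

A match shows only that the contents are identical to the reference file; it says nothing about whether the reference file itself is the true original.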

Finding the discrepancies or modifications of an electronic file can only be accomplished if there is a comparison to be made with the original file. Using Figure 11.5 as an example, given that the file having the MD5 hash value of d41d8cd98f00b204e9800998ecf8427e is the original, and where the second file is the alleged altered file, a visual inspection of both files should be able to determine the modifications. However, when only one file exists, proving the file to be unaltered is more than problematic; it is virtually impossible.

In this situation of having a single file to verify as original and unaltered evidence, an analysis would only be able to show when the file was modified over time; the actual modifications would not be known. Even if the document has “track changes” enabled, which logs changes to a document, that would only capture the changes that were tracked, and there may be more untracked and unknown changes.

As a side note to hash values, in Figure 11.5, the hash values are completely different, even though the only difference between the two sample files is a single period added to the text. Any modification, no matter how minor, results in a drastically different hash value.
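The effect of a single added period can be reproduced in a few lines. The sample sentence below is an assumption chosen for illustration; it is not the text used in the book's figure.

```python
import hashlib

text = "The quick brown fox"
with_period = text + "."

hash_a = hashlib.md5(text.encode("utf-8")).hexdigest()
hash_b = hashlib.md5(with_period.encode("utf-8")).hexdigest()

# Count how many of the 32 hex positions hold the same digit in both hashes.
matching_positions = sum(a == b for a, b in zip(hash_a, hash_b))

print(hash_a)
print(hash_b)
print(f"{matching_positions} of {len(hash_a)} hex digits match")
```

Only a handful of positions usually coincide, which is what chance alone would produce for two unrelated values.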

Validating files matters for identifying a suspect who may have altered a file because the embedded metadata will be a key point of focus and an avenue for case leads. As a file is created, copied, modified, and otherwise touched, the file and system metadata will generally be updated.

The dates and times of these updates indicate that the updates occurred on some computer system. This may be on one or more computers even if the file existed on a flash drive. At some point, the flash drive was connected to a computer system, where evidence on the system may show link files pointing to the file. Each of these instances of access to the file is an opportunity to create a list of possible suspects who had access to the systems in use when each metadata field was updated.

In the Microsoft Windows operating systems, Volume Shadow Copies may provide an examiner with a string of previous versions of a document, in which the modifications between each version can be determined. Although not every change may have been incrementally saved by the Volume Shadow Copy Service, such as if the file was saved to a flash drive, any previous versions that can be found will allow the examiner to find some of the modifications made.

Where a single file will determine the outcome of an investigation or have a dramatic effect on the case, the importance of ‘getting it right’ cannot be overstated. Such would be the case of a single file, modified by someone in a business office, where many persons had common access to the evidence file before it was known to be evidence. Finding the suspect that altered the evidence file may be simple if you were at the location close to the time of occurrence. Interviews of the employees would be easier as most would remember their whereabouts in the office within the last few days. Some may be able to tell you exactly where other employees were in the office, even point the suspect out directly.

But what if you are called in a year later? How about 2 or more years later? What would be the odds of employees remembering their whereabouts on a Monday in July 2 years earlier? To identify a suspect at this point requires more than a forensic analysis of a computer. It will probably require an investigation into work schedules, lunch schedules, backup tapes, phone call logs, and anything else to place everyone somewhere during the time of the file being altered.

Potentially you may even need to examine the hard drive of a copy machine and maybe place a person at the copy machine based on what was copied at the time the evidence file was being modified. When a company’s livelihood is at stake or a person’s career is at risk, leave no stone unturned. If you can’t place a suspect at the scene, you might be able to place everyone else at a location, and those you can’t place have just made your list of possible suspects.

URL:

https://www.sciencedirect.com/science/article/pii/B9781597499859000113

When, How, and Why Do We Trust Technology Too Much?

Patricia L. Hardré, in Emotions, Technology, and Behaviors, 2016

Trusting Spelling and Grammar Checkers

We often see evidence that users of word processing systems trust absolutely in spelling and grammar checkers. From errors in business letters and on resumes to uncorrected word usage in academic papers, this nonstrategy emerges as epidemic. It underscores a pattern of implicit trust that if a word is not flagged as incorrect in a word processing system, then it must be not only spelled correctly but also used correctly. The overarching error is trusting the digital checking system too much, while the underlying functional problem is that such software identifies gross errors (such as nonwords) but cannot discriminate finer nuances of language requiring judgment (like real words used incorrectly). Users from average citizens to business executives have become absolutely comfortable with depending on embedded spelling and grammar checkers that are supposed to find errors automatically, trusting the technology so much that they often do not even proofread. Like overtrust of security monitoring, these personal examples are instances of reduced vigilance due to users' implicit belief that the technology is functionally flawless, that if the technology has not found an error, then an error must not exist.
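The gap between nonwords and misused real words can be shown with a deliberately simple dictionary-lookup checker. This is a sketch under the assumption that a checker only tests membership in a word list; the word list and the function name `flag_nonwords` are hypothetical, and commercial checkers are considerably more elaborate.

```python
import re

# Tiny stand-in dictionary (hypothetical; real checkers use far larger lists).
KNOWN_WORDS = {"please", "send", "me", "their", "there", "report", "by", "friday"}

def flag_nonwords(sentence: str) -> list[str]:
    """Return words absent from the dictionary, which is all a basic lookup sees."""
    words = re.findall(r"[a-z']+", sentence.lower())
    return [word for word in words if word not in KNOWN_WORDS]

# A nonword is caught.
print(flag_nonwords("Please send me thier report by Friday"))
# A real word used incorrectly passes without any flag.
print(flag_nonwords("Please send me there report by Friday"))
```

The second sentence comes back clean even though it is wrong, which is the situation in which an unproofread document goes out with an error.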

URL:

https://www.sciencedirect.com/science/article/pii/B9780128018736000054

Establishing a C&A Program

Laura Taylor, Matthew Shepherd Technical Editor, in FISMA Certification and Accreditation Handbook, 2007

Template Development

Certification Packages consist of a set of documents that all go together and complement one another. A Certification Package is voluminous, and without standardization, it takes an inordinate amount of time to evaluate it to make sure all the right information is included. Therefore, agencies should have templates for all the documents that they require in their Certification Packages. Agencies without templates should work on creating them. If an agency does not have the resources in-house to develop these templates, they should consider outsourcing this initiative to outside consultants.

A template should be developed using the word processing application that is the standard within the agency. All of the relevant sections that the evaluation team will be looking for within each document should be included. Text that will remain constant for a particular document type also should be included. An efficient and effective C&A program will have templates for the following types of C&A documents:

- Categorization and Certification Level Recommendation

- Hardware and Software Inventory

- Self-Assessment

- Security Awareness and Training Plan

- End-User Rules of Behavior

- Incident Response Plan

- Security Test and Evaluation Plan

- Privacy Impact Assessment

- Business Risk Assessment

- Business Impact Assessment

- Contingency Plan

- Configuration Management Plan

- System Risk Assessment

- System Security Plan

- Security Assessment Report

The later chapters in this book will help you understand what should be included in each of these types of documents. Some agencies may require other types of documents, depending on their information security program and policies.

Templates should include guidelines for what type of content should be included, and also should have built-in formatting. The templates should be as complete as possible, and any text that should remain consistent and exactly the same in like document types should be included. Though it may seem redundant to have the exact same verbatim text at the beginning of, say, each Business Risk Assessment from a particular agency, each document needs to be able to stand alone and make sense if it is pulled out of the Certification Package for review. Having similar wording in like documents also shows that the packages were developed consistently using the same methodology and criteria.

With established templates in hand, it is much easier for the C&A review team to understand what they need to document. Even expert C&A consultants need and appreciate document templates. Finding the right information to include in the C&A documents can by itself be extremely difficult without first having to figure out what it is that you are supposed to find, which is why the templates are so important. It’s often the case that a large complex application is distributed and managed throughout multiple departments or divisions, and it can take a long time to figure out not just what questions to ask, but who the right people are who will know the answers.

URL:

https://www.sciencedirect.com/science/article/pii/B9781597491167500093

Speech Recognition

John-Paul Hosom, in Encyclopedia of Information Systems, 2003

I.B. Capabilities and Limitations of Automatic Speech Recognition

ASR is currently used for dictation into word processing software, or in a “command-and-control” framework in which the computer recognizes and acts on certain key words. Dictation systems are available for general use, as well as for specialized fields such as medicine and law. General dictation systems now cost under $100 and have speaker-dependent word-recognition accuracy from 93% to as high as 98%. Command-and-control systems are more often used over the telephone for automatically dialing telephone numbers or for requesting specific services before (or without) speaking to a human operator. Telephone companies use ASR to allow customers to automatically place calls even from a rotary telephone, and airlines now utilize telephone-based ASR systems to help passengers locate and reclaim lost luggage. Research is currently being conducted on systems that allow the user to interact naturally with an ASR system for goals such as making airline or hotel reservations.

Despite these successes, the performance of ASR is often about an order of magnitude worse than human-level performance, even with superior hardware and long processing delays. For example, recognition of the digits “zero” through “nine” over the telephone has word-level accuracy of about 98% to 99% using ASR, but nearly perfect recognition by humans. Transcription of radio broadcasts by world-class ASR systems has accuracy of less than 87%. This relatively low accuracy of current ASR systems has limited its use; it is not yet possible to reliably and consistently recognize and act on a wide variety of commands from different users.
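To see why a word-level accuracy of 98% to 99% can still be limiting, it helps to convert accuracy into error rate and to consider whole sequences. The sketch below assumes that errors on individual words are independent, which is a simplification not stated in the source; the function names are illustrative.

```python
def error_rate(accuracy: float) -> float:
    """Word-level error rate implied by a word-level accuracy."""
    return 1.0 - accuracy

def sequence_accuracy(word_accuracy: float, length: int) -> float:
    """Chance that every word in a sequence is right, assuming independent errors."""
    return word_accuracy ** length

for accuracy in (0.98, 0.99):
    print(f"word accuracy {accuracy:.0%}: "
          f"error rate {error_rate(accuracy):.0%}, "
          f"10-digit string fully correct {sequence_accuracy(accuracy, 10):.1%} of the time")
```

Under that independence assumption, a ten-digit number is recognized without any error only about 82% of the time at 98% word accuracy, and about 90% of the time at 99%.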

URL:

https://www.sciencedirect.com/science/article/pii/B0122272404001647

Prototyping

Rex Hartson, Pardha Pyla, in The UX Book (Second Edition), 2019

20.7 Software Tools for Making Wireframes

Wireframes can be sketched using any drawing or word processing software package that supports creating and manipulating shapes. While many applications suffice for simple wireframing, we recommend tools designed specifically for this purpose. We use Sketch, a drawing app, to do all the drawing. Craft is a plug-in to Sketch that connects it to InVision, allowing you to export Sketch screen designs to InVision to incorporate hotspots as working links.

In the “Build mode” of InVision, you work on one screen at a time, adding rectangular overlays that are the hotspots. For each hotspot, you specify what other screen you go to when someone clicks on that hotspot in “Preview mode.” You get a nice bonus using InVision: In the “operate” mode, you, or the user, can click anywhere in an open space in the prototype and it highlights all the available links. These tools are available only on Mac computers, but similar tools are available under Windows.
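The hotspot idea described above amounts to a small data structure: each screen holds rectangular regions, and each region names the screen to show next. The sketch below is a hypothetical model for illustration only; the class names are invented and it does not represent InVision's actual data format or API. For simplicity, a click in open space just stays on the current screen instead of highlighting the available links.

```python
from dataclasses import dataclass, field

@dataclass
class Hotspot:
    """A rectangular overlay on a screen that links to another screen."""
    x: int
    y: int
    width: int
    height: int
    target: str  # name of the screen shown after a click

    def contains(self, px: int, py: int) -> bool:
        return (self.x <= px < self.x + self.width
                and self.y <= py < self.y + self.height)

@dataclass
class Screen:
    name: str
    hotspots: list[Hotspot] = field(default_factory=list)

    def click(self, px: int, py: int) -> str:
        """Return the next screen's name; stay on this screen for open space."""
        for spot in self.hotspots:
            if spot.contains(px, py):
                return spot.target
        return self.name

home = Screen("home", [Hotspot(10, 10, 100, 40, target="gallery")])
print(home.click(20, 20))    # inside the hotspot
print(home.click(300, 300))  # open space
```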

Beyond this discussion, it’s not wise to try to cover software tools for making prototypes in this kind of textbook. The field is changing fast and whatever we could say here would be out of date by the time you read this. Plus, it wouldn’t be fair to the numerous other perfectly good tools that didn’t get cited. To get the latest on software tools for prototyping, it’s better to ask an experienced UX professional or to do your research online.

URL:

https://www.sciencedirect.com/science/article/pii/B9780128053423000205

Design Production

Rex Hartson, Partha S. Pyla, in The UX Book, 2012

9.5.3 How to Build Wireframes?

Wireframes can be built using any drawing or word processing software package that supports creating and manipulating shapes, such as iWork Pages, Keynote, Microsoft PowerPoint, or Word. While such applications suffice for simple wireframing, we recommend tools designed specifically for this purpose, such as OmniGraffle (for Mac), Microsoft Visio (for PC), and Adobe InDesign.

Many tools and templates for making wireframes are used in combination—truly an invent-as-you-go approach serving the specific needs of prototyping. For example, some tools are available to combine the generic-looking placeholders in wireframes with more detailed mockups of some screens or parts of screens. In essence they allow you to add color, graphics, and real fonts, as well as representations of real content, to the wireframe scaffolding structure.

In early stages of design, during ideation and sketching, you started with thinking about the high-level conceptual design. It makes sense to start with that here, too, first by wireframing the design concept and then by going top down to address major parts of the concept. Identify the interaction conceptual design using boxes with labels, as shown in Figure 9-4.

Take each box and start fleshing out the design details. What are the different kinds of interaction needed to support each part of the design, and what kinds of widgets work best in each case? What are the best ways to lay them out? Think about relationships among the widgets and any data that need to go with them. Leverage design patterns, metaphors, and other ideas and concepts from the work domain ontology. Do not spend too much time with exact locations of these widgets or on their alignment yet. Such refinement will come in later iterations after all the key elements of the design are represented.

As you flesh out all the major areas in the design, be mindful of the information architecture on the screen. Make sure the wireframes convey that inherent information architecture. For example, do elements on the screen follow a logical information hierarchy? Are related elements on the screen positioned in such a way that those relationships are evident? Are content areas indented appropriately? Are margins and indents communicating the hierarchy of the content in the screen?

Next it is time to think about sequencing. If you are representing a workflow, start with the “wake-up” state for that workflow. Then make a wireframe representing the next state, for example, to show the result of a user action such as clicking on a button. In Figure 9-6 we showed what happens when a user clicks on the “Related information” expander widget. In Figure 9-7 we showed what happens if the user clicks on the “One-up” view switcher button.

Once you create the key screens to depict the workflow, it is time to review and refine each screen. Start by specifying all the options that go on the screen (even those not related to this workflow). For example, if you have a toolbar, what are all the options that go into that toolbar? What are all the buttons, view switchers, window controllers (e.g., scrollbars), and so on that need to go on the screen? At this time you are looking at scalability of your design. Is the design pattern and layout still working after you add all the widgets that need to go on this screen?

Think of cases when the windows or other container elements such as navigation bars in the design are resized or when different data elements that need to be supported are larger than shown in the wireframe. For example, in Figures 9-5 and 9-6, what must happen if the number of photo collections is greater than what fits in the default size of that container? Should the entire page scroll or should new scrollbars appear on the left-hand navigation bar alone? How about situations where the number of people identified in a collection is large? Should we show the first few (perhaps the ones with the greatest number of associated photos) with a “more” option, should we use an independent scrollbar for that pane, or should we scroll the entire page? You may want to make wireframes for such edge cases; remember they are less expensive and easier to do using boxes and lines than in code.

As you iterate your wireframes, refine them further, increasing the fidelity of the deck. Think about proportions, alignments, spacing, and so on for all the widgets. Refine the wording and language aspects of the design. Get the wireframe as close to the envisioned design as possible within the constraints of using boxes and lines.

URL:

https://www.sciencedirect.com/science/article/pii/B9780123852410000099

Word Processing Software :

The term “word processor” refers to something that processes words into pages and paragraphs. Word processors are of three types: electronic, mechanical, and software.

Word processing software is used for basic editing and design and helps in manipulating the text on your pages, whereas a word processor is a device that provides input, editing, formatting, and output of text, along with some additional features.

A word processor can be either a computer software application or an electronic device. Today, the term usually means word processing software running on a general-purpose computer.

Examples or Applications of a Word Processing Software :

- WordPad

- Microsoft Word

- Lotus Word Pro

- Notepad

- WordPerfect (Windows only)

- AppleWorks (Mac only)

- iWork Pages

- OpenOffice Writer

Features :

- Some are stand-alone devices dedicated to word processing.

- Others are programs that run on general-purpose computers.

- They are easy to use.

- They help change the shape and style of characters and paragraphs.

- They perform basic editing such as headers and footers, bullets, and numbering.

- They provide facilities for mail merge and preview.
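The mail merge feature listed above can be sketched with Python's standard `string.Template`: one letter template is combined with a list of recipient records to produce one letter per record. The template text, field names, and records are hypothetical.

```python
from string import Template

# Hypothetical letter template and recipient records.
LETTER = Template("Dear $name,\nYour order $order_id ships on $ship_date.\n")

RECIPIENTS = [
    {"name": "Ana", "order_id": "A-101", "ship_date": "3 May"},
    {"name": "Ben", "order_id": "B-202", "ship_date": "4 May"},
]

def mail_merge(template: Template, records: list[dict[str, str]]) -> list[str]:
    """Produce one filled-in letter per record; a missing field raises KeyError."""
    return [template.substitute(record) for record in records]

letters = mail_merge(LETTER, RECIPIENTS)
for letter in letters:  # "preview" each merged letter before printing
    print(letter)
```

Using `substitute` instead of `safe_substitute` is a deliberate choice here, so that a record with a missing field fails loudly instead of producing a letter with an unfilled placeholder.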

Functions :

- Correcting the grammar and spelling of sentences

- Creating and storing typed documents

- Creating documents with basic editing, saving, and printing

- Copying, moving, deleting, and pasting text within a document

- Formatting text, such as bold, underlining, and font type

- Creating and editing tables

- Inserting elements from other types of software

Advantages :

- It benefits the environment by reducing the amount of paperwork.

- It reduces the cost of paper and postage.

- It is used to manipulate the text of documents such as reports.

- It provides tools such as copying, deleting, and formatting.

- It helps in recognizing the user interface feature.

- It applies the basic design to your pages.

- It makes it easier for you to perform repetitive tasks.

- It is a fully functioned desktop publishing program.

- It is time-saving.

- It is dynamic in nature for exchanging data.

- It produces error-free documents.

- It provides security for documents.

Disadvantages :

- It does not give you complete control over the look and feel of your document.

- It did not develop out of computer technology.

From Wikipedia, the free encyclopedia

The following outline is provided as an overview of and topical guide to natural-language processing:

Natural-language processing – computer activity in which computers are tasked to analyze, understand, alter, or generate natural language. This includes the automation of any or all linguistic forms, activities, or methods of communication, such as conversation, correspondence, reading, written composition, dictation, publishing, translation, lip reading, and so on. Natural-language processing is also the name of the branch of computer science, artificial intelligence, and linguistics concerned with enabling computers to engage in communication using natural language(s) in all forms, including but not limited to speech, print, writing, and signing.

Natural-language processing

Natural-language processing can be described as all of the following:

- A field of science – systematic enterprise that builds and organizes knowledge in the form of testable explanations and predictions about the universe.[1]

- An applied science – field that applies human knowledge to build or design useful things.

- A field of computer science – scientific and practical approach to computation and its applications.

- A branch of artificial intelligence – intelligence of machines and robots and the branch of computer science that aims to create it.

- A subfield of computational linguistics – interdisciplinary field dealing with the statistical or rule-based modeling of natural language from a computational perspective.

- An application of engineering – science, skill, and profession of acquiring and applying scientific, economic, social, and practical knowledge, in order to design and also build structures, machines, devices, systems, materials and processes.

- An application of software engineering – application of a systematic, disciplined, quantifiable approach to the design, development, operation, and maintenance of software, and the study of these approaches; that is, the application of engineering to software.[2][3][4]

- A subfield of computer programming – process of designing, writing, testing, debugging, and maintaining the source code of computer programs. This source code is written in one or more programming languages (such as Java, C++, C#, Python, etc.). The purpose of programming is to create a set of instructions that computers use to perform specific operations or to exhibit desired behaviors.

- A subfield of artificial intelligence programming –

- A type of system – set of interacting or interdependent components forming an integrated whole or a set of elements (often called ‘components’) and relationships which are different from relationships of the set or its elements to other elements or sets.

- A system that includes software – software is a collection of computer programs and related data that provides the instructions for telling a computer what to do and how to do it. Software refers to one or more computer programs and data held in the storage of the computer. In other words, software is a set of programs, procedures, algorithms and its documentation concerned with the operation of a data processing system.

- A type of technology – making, modification, usage, and knowledge of tools, machines, techniques, crafts, systems, methods of organization, in order to solve a problem, improve a preexisting solution to a problem, achieve a goal, handle an applied input/output relation or perform a specific function. It can also refer to the collection of such tools, machinery, modifications, arrangements and procedures. Technologies significantly affect human as well as other animal species’ ability to control and adapt to their natural environments.

- A form of computer technology – computers and their application. NLP makes use of computers, image scanners, microphones, and many types of software programs.

- Language technology – consists of natural-language processing (NLP) and computational linguistics (CL) on the one hand, and speech technology on the other. It also includes many application oriented aspects of these. It is often called human language technology (HLT).

Prerequisite technologies

The following technologies make natural-language processing possible:

- Communication – the activity of a source sending a message to a receiver

- Language –

- Speech –

- Writing –

- Computing –

- Computers –

- Computer programming –

- Information extraction –

- User interface –

- Software –

- Text editing – program used to edit plain text files

- Word processing – piece of software used for composing, editing, formatting, printing documents

- Input devices – pieces of hardware for sending data to a computer to be processed[5]

- Computer keyboard – typewriter style input device whose input is converted into various data depending on the circumstances

- Image scanners –

Subfields of natural-language processing

- Information extraction (IE) – field concerned in general with the extraction of semantic information from text. This covers tasks such as named-entity recognition, coreference resolution, relationship extraction, etc.

- Ontology engineering – field that studies the methods and methodologies for building ontologies, which are formal representations of a set of concepts within a domain and the relationships between those concepts.

- Speech processing – field that covers speech recognition, text-to-speech and related tasks.

- Statistical natural-language processing –

- Statistical semantics – a subfield of computational semantics that establishes semantic relations between words by examining the contexts in which they occur.

- Distributional semantics – a subfield of statistical semantics that examines the semantic relationships of words across corpora or in large samples of data.

Related fields

Natural-language processing contributes to, and makes use of (the theories, tools, and methodologies from), the following fields:

- Automated reasoning – area of computer science and mathematical logic dedicated to understanding various aspects of reasoning, and producing software which allows computers to reason completely, or nearly completely, automatically. A sub-field of artificial intelligence, automated reasoning is also grounded in theoretical computer science and philosophy of mind.

- Linguistics – scientific study of human language. Natural-language processing requires understanding of the structure and application of language, and therefore it draws heavily from linguistics.

- Applied linguistics – interdisciplinary field of study that identifies, investigates, and offers solutions to language-related real-life problems. Some of the academic fields related to applied linguistics are education, linguistics, psychology, computer science, anthropology, and sociology. Some of the subfields of applied linguistics relevant to natural-language processing are:

- Bilingualism / Multilingualism –

- Computer-mediated communication (CMC) – any communicative transaction that occurs through the use of two or more networked computers.[6] Research on CMC focuses largely on the social effects of different computer-supported communication technologies. Many recent studies involve Internet-based social networking supported by social software.

- Contrastive linguistics – practice-oriented linguistic approach that seeks to describe the differences and similarities between a pair of languages.

- Conversation analysis (CA) – approach to the study of social interaction, embracing both verbal and non-verbal conduct, in situations of everyday life. Turn-taking is one aspect of language use that is studied by CA.

- Discourse analysis – various approaches to analyzing written, vocal, or sign language use or any significant semiotic event.

- Forensic linguistics – application of linguistic knowledge, methods and insights to the forensic context of law, language, crime investigation, trial, and judicial procedure.

- Interlinguistics – study of improving communications between people of different first languages with the use of ethnic and auxiliary languages (lingua franca), for instance through intentional international auxiliary languages, such as Esperanto or Interlingua, or through spontaneous interlanguages known as pidgin languages.

- Language assessment – assessment of first, second or other language in the school, college, or university context; assessment of language use in the workplace; and assessment of language in the immigration, citizenship, and asylum contexts. The assessment may include analyses of listening, speaking, reading, writing or cultural understanding, with respect to understanding how the language works theoretically and the ability to use the language practically.

- Language pedagogy – science and art of language education, including approaches and methods of language teaching and study. Natural-language processing is used in programs designed to teach language, including first- and second-language training.

- Language planning –

- Language policy –

- Lexicography –

- Literacies –

- Pragmatics –

- Second-language acquisition –

- Stylistics –

- Translation –

- Computational linguistics – interdisciplinary field dealing with the statistical or rule-based modeling of natural language from a computational perspective. The models and tools of computational linguistics are used extensively in the field of natural-language processing, and vice versa.

- Computational semantics –

- Corpus linguistics – study of language as expressed in samples (corpora) of "real world" text. Corpora is the plural of corpus, and a corpus is a specifically selected collection of texts (or speech segments) composed of natural language. After it is constructed (gathered or composed), a corpus is analyzed with the methods of computational linguistics to infer the meaning and context of its components (words, phrases, and sentences), and the relationships between them. Optionally, a corpus can be annotated ("tagged") with data (manually or automatically) to make the corpus easier to understand (e.g., part-of-speech tagging). This data is then applied to make sense of user input, for example, to make better (automated) guesses of what people are talking about or saying, perhaps to achieve more narrowly focused web searches, or for speech recognition.

- Metalinguistics –

- Sign linguistics – scientific study and analysis of natural sign languages, their features, their structure (phonology, morphology, syntax, and semantics), their acquisition (as a primary or secondary language), how they develop independently of other languages, their application in communication, their relationships to other languages (including spoken languages), and many other aspects.

- Human–computer interaction – field at the intersection of computer science and the behavioral sciences that involves the study, planning, and design of the interaction between people (users) and computers. Attention to human-machine interaction is important, because poorly designed human-machine interfaces can lead to many unexpected problems. A classic example of this is the Three Mile Island accident, where investigations concluded that the design of the human–machine interface was at least partially responsible for the disaster.

- Information retrieval (IR) – field concerned with storing, searching and retrieving information. It is a separate field within computer science (closer to databases), but IR relies on some NLP methods (for example, stemming). Some current research and applications seek to bridge the gap between IR and NLP.

- Knowledge representation (KR) – area of artificial intelligence research aimed at representing knowledge in symbols to facilitate inferencing from those knowledge elements, creating new elements of knowledge. Knowledge Representation research involves analysis of how to reason accurately and effectively and how best to use a set of symbols to represent a set of facts within a knowledge domain.

- Semantic network – study of semantic relations between concepts.

- Semantic Web –

- Machine learning – subfield of computer science that examines pattern recognition and computational learning theory in artificial intelligence. There are three broad approaches to machine learning. Supervised learning occurs when the machine is given example inputs and outputs by a teacher so that it can learn a rule that maps inputs to outputs. Unsupervised learning occurs when the machine must discover structure in its inputs without being given labelled example outputs. Reinforcement learning occurs when a machine must learn to achieve a goal from reward or penalty signals received as it acts, without a teacher supplying the correct outputs.

- Pattern recognition – branch of machine learning that examines how machines recognize regularities in data. As with machine learning, teachers can train machines to recognize patterns by providing them with example inputs and outputs (i.e. supervised learning), or the machines can recognize patterns without being given labelled example outputs (i.e. unsupervised learning).

- Statistical classification –

Structures used in natural-language processing

- Anaphora – type of expression whose reference depends upon another referential element. E.g., in the sentence ‘Sally preferred the company of herself’, ‘herself’ is an anaphoric expression in that it is coreferential with ‘Sally’, the sentence’s subject.

- Context-free language –

- Controlled natural language – a natural language with a restriction introduced on its grammar and vocabulary in order to eliminate ambiguity and complexity

- Corpus – body of data, optionally tagged (for example, through part-of-speech tagging), providing real world samples for analysis and comparison.

- Text corpus – large and structured set of texts, nowadays usually electronically stored and processed. They are used to do statistical analysis and hypothesis testing, checking occurrences or validating linguistic rules within a specific subject (or domain).

- Speech corpus – database of speech audio files and text transcriptions. In speech technology, speech corpora are used, among other things, to create acoustic models (which can then be used with a speech recognition engine). In linguistics, spoken corpora are used to do research into phonetics, conversation analysis, dialectology and other fields.

- Grammar –

- Context-free grammar (CFG) –

- Constraint grammar (CG) –

- Definite clause grammar (DCG) –

- Functional unification grammar (FUG) –

- Generalized phrase structure grammar (GPSG) –

- Head-driven phrase structure grammar (HPSG) –

- Lexical functional grammar (LFG) –

- Probabilistic context-free grammar (PCFG) – another name for stochastic context-free grammar.

- Stochastic context-free grammar (SCFG) –

- Systemic functional grammar (SFG) –

- Tree-adjoining grammar (TAG) –

- Natural language –

- n-gram – sequence of n tokens, where a "token" is a character, syllable, or word. The n is replaced by a number. Therefore, a 5-gram is an n-gram of 5 letters, syllables, or words. "Eat this" is a 2-gram (also known as a bigram).

- Bigram – n-gram of 2 tokens. Every sequence of 2 adjacent elements in a string of tokens is a bigram. Bigrams are used for speech recognition and can be used to solve cryptograms, and bigram frequency is one approach to statistical language identification.

- Trigram – special case of the n-gram, where n is 3.

- Ontology – formal representation of a set of concepts within a domain and the relationships between those concepts.

- Taxonomy – practice and science of classification, including the principles underlying classification, and the methods of classifying things or concepts.

- Hyponymy and hypernymy – the linguistics of hyponyms and hypernyms. A hyponym shares a type-of relationship with its hypernym. For example, pigeon, crow, eagle and seagull are all hyponyms of bird (their hypernym); which, in turn, is a hyponym of animal.

- Taxonomy for search engines – typically called a "taxonomy of entities". It is a tree in which nodes are labelled with entities which are expected to occur in a web search query. These trees are used to match keywords from a search query with the keywords from relevant answers (or snippets).

- Textual entailment – directional relation between text fragments. The relation holds whenever the truth of one text fragment follows from another text. In the TE framework, the entailing and entailed texts are termed text (t) and hypothesis (h), respectively. The relation is directional because even if "t entails h", the reverse "h entails t" is much less certain.

- Triphone – sequence of three phonemes. Triphones are useful in models of natural-language processing where they are used to establish the various contexts in which a phoneme can occur in a particular natural language.
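
The n-gram, bigram, and trigram entries above can be illustrated with a minimal Python sketch. The helper name `ngrams` and the sample sentence are assumptions made for illustration; they do not come from any particular library.

```python
from collections import Counter
from typing import List, Sequence, Tuple


def ngrams(tokens: Sequence[str], n: int) -> List[Tuple[str, ...]]:
    """Return every run of n adjacent tokens, in order of appearance."""
    if n < 1:
        raise ValueError("n must be at least 1")
    # A sequence shorter than n yields an empty list.
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


# Word-level tokens; characters or syllables work the same way.
words = "eat this and eat that".split()

bigrams = ngrams(words, 2)       # n-grams with n = 2
trigrams = ngrams(words, 3)      # n-grams with n = 3
bigram_counts = Counter(bigrams)  # frequency of each bigram

# Character-level bigrams, the kind of unit counted for language identification.
char_bigrams = ngrams(list("this"), 2)

print(bigrams)
print(char_bigrams)
```

Counting the resulting tuples, as `bigram_counts` does, gives the bigram frequencies that the Bigram entry mentions as one approach to statistical language identification.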

Processes of NLP

Applications

- Automated essay scoring (AES) – the use of specialized computer programs to assign grades to essays written in an educational setting. It is a method of educational assessment and an application of natural-language processing. Its objective is to classify a large set of textual entities into a small number of discrete categories, corresponding to the possible grades—for example, the numbers 1 to 6. Therefore, it can be considered a problem of statistical classification.

- Automatic image annotation – process by which a computer system automatically assigns textual metadata in the form of captioning or keywords to a digital image. The annotations are used in image retrieval systems to organize and locate images of interest from a database.

- Automatic summarization – process of reducing a text document with a computer program in order to create a summary that retains the most important points of the original document. Often used to provide summaries of text of a known type, such as articles in the financial section of a newspaper.

- Types

- Keyphrase extraction –

- Document summarization –

- Multi-document summarization –

- Methods and techniques

- Extraction-based summarization –

- Abstraction-based summarization –

- Maximum entropy-based summarization –

- Sentence extraction –

- Aided summarization –

- Human aided machine summarization (HAMS) –

- Machine aided human summarization (MAHS) –

- Automatic taxonomy induction – automated construction of tree structures from a corpus. This may be applied to building taxonomical classification systems for reading by end users, such as web directories or subject outlines.

- Coreference resolution – in order to derive the correct interpretation of text, or even to estimate the relative importance of various mentioned subjects, pronouns and other referring expressions need to be connected to the right individuals or objects. Given a sentence or larger chunk of text, coreference resolution determines which words ("mentions") refer to which objects ("entities") included in the text.

- Anaphora resolution – concerned with matching up pronouns with the nouns or names that they refer to. The broader task of coreference resolution also covers so-called bridging relationships involving referring expressions. For example, in a sentence such as "He entered John’s house through the front door", "the front door" is a referring expression, and the bridging relationship to be identified is the fact that the door being referred to is the front door of John’s house (rather than of some other structure that might also be referred to).

- Dialog system –

- Foreign-language reading aid – computer program that assists a non-native language user in reading properly in their target language. Reading properly here means pronouncing words correctly and placing stress on the right parts of each word.

- Foreign-language writing aid – computer program or any other instrument that assists a non-native language user (also referred to as a foreign-language learner) in writing well in their target language. Assistive operations can be classified into two categories: on-the-fly prompts and post-writing checks.

- Grammar checking – the act of verifying the grammatical correctness of written text, especially if this act is performed by a computer program.

- Information retrieval –

- Cross-language information retrieval –

- Machine translation (MT) – aims to automatically translate text from one human language to another. This is one of the most difficult problems, and is a member of a class of problems colloquially termed "AI-complete", i.e. requiring all of the different types of knowledge that humans possess (grammar, semantics, facts about the real world, etc.) in order to solve properly.

- Classical approach of machine translation – rules-based machine translation.

- Computer-assisted translation –

- Interactive machine translation –

- Translation memory – database that stores so-called "segments", which can be sentences, paragraphs or sentence-like units (headings, titles or elements in a list) that have previously been translated, in order to aid human translators.

- Example-based machine translation –

- Rule-based machine translation –

- Natural-language programming – interpreting and compiling instructions communicated in natural language into computer instructions (machine code).

- Natural-language search –

- Optical character recognition (OCR) – given an image representing printed text, determine the corresponding text.

- Question answering – given a human-language question, determine its answer. Typical questions have a specific right answer (such as "What is the capital of Canada?"), but sometimes open-ended questions are also considered (such as "What is the meaning of life?").

- Open domain question answering –

- Spam filtering –

- Sentiment analysis – extracts subjective information, usually from a set of documents, often using online reviews to determine "polarity" about specific objects. It is especially useful for identifying trends of public opinion on social media, for the purpose of marketing.

- Speech recognition – given a sound clip of a person or people speaking, determine the textual representation of the speech. This is the reverse of text-to-speech and is one of the extremely difficult problems colloquially termed "AI-complete" (see above). In natural speech there are hardly any pauses between successive words, and thus speech segmentation is a necessary subtask of speech recognition (see below). In most spoken languages, the sounds representing successive letters blend into each other in a process termed coarticulation, so the conversion of the analog signal to discrete characters can be a very difficult process.

- Speech synthesis (Text-to-speech) –

- Text-proofing –

- Text simplification – automated editing of a document to include fewer words, or use easier words, while retaining its underlying meaning and information.
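
The translation memory entry in the list above describes a database of previously translated segments. A minimal sketch of that idea in Python follows; the class name `TranslationMemory`, its methods, the 0.8 similarity cutoff, and the sample English–French segments are all assumptions made for illustration, not the design of any real translation tool. Fuzzy matching uses the standard library's `difflib.get_close_matches`.

```python
import difflib
from typing import Dict, Optional


class TranslationMemory:
    """Hypothetical in-memory store of previously translated segments."""

    def __init__(self) -> None:
        self._segments: Dict[str, str] = {}

    @staticmethod
    def _normalize(segment: str) -> str:
        # Lowercase and collapse whitespace so trivial differences still match.
        return " ".join(segment.lower().split())

    def add(self, source: str, target: str) -> None:
        """Record a source segment together with its human translation."""
        self._segments[self._normalize(source)] = target

    def lookup(self, source: str, cutoff: float = 0.8) -> Optional[str]:
        """Return the stored translation of the most similar segment, if any."""
        key = self._normalize(source)
        if key in self._segments:  # exact match after normalization
            return self._segments[key]
        close = difflib.get_close_matches(
            key, list(self._segments), n=1, cutoff=cutoff
        )
        return self._segments[close[0]] if close else None


tm = TranslationMemory()
tm.add("Save the file.", "Enregistrez le fichier.")
tm.add("Open the document.", "Ouvrez le document.")

exact = tm.lookup("save the  file.")      # matches after normalization
fuzzy = tm.lookup("Save the file")        # near match, missing the period
missing = tm.lookup("Print two copies.")  # nothing similar is stored
print(exact, fuzzy, missing)
```

In this sketch a near match simply returns the stored translation; a real tool would more plausibly present it to the human translator as a suggestion to review.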

Component processes

- Natural-language understanding – converts chunks of text into more formal representations, such as first-order logic structures, that are easier for computer programs to manipulate. Natural-language understanding involves identifying the intended meaning from among the multiple possible meanings that can be derived from a natural-language expression, which usually takes the form of organized notations of natural-language concepts. Introducing and creating a language metamodel and ontology are efficient, though empirical, solutions. Building a basis for semantic formalization is expected to require an explicit formalization of natural-language semantics that does not confuse implicit assumptions, such as the closed-world assumption (CWA) versus the open-world assumption, or subjective Yes/No versus objective True/False.[7]

- Natural-language generation – task of converting information from computer databases into readable human language.

Component processes of natural-language understanding

- Automatic document classification (text categorization) –

- Automatic language identification –

- Compound term processing – category of techniques that identify compound terms and match them to their definitions. Compound terms are built by combining two (or more) simple terms; for example, "triple" is a single-word term but "triple heart bypass" is a compound term.

- Automatic taxonomy induction –

- Corpus processing –

- Automatic acquisition of lexicon –

- Text normalization –

- Text simplification –

- Deep linguistic processing –

- Discourse analysis – includes a number of related tasks. One task is identifying the discourse structure of connected text, i.e. the nature of the discourse relationships between sentences (e.g. elaboration, explanation, contrast). Another possible task is recognizing and classifying the speech acts in a chunk of text (e.g. yes-no questions, content questions, statements, assertions, orders, suggestions, etc.).

- Information extraction –

- Text mining – process of deriving high-quality information from text. High-quality information is typically derived through the devising of patterns and trends through means such as statistical pattern learning.

- Biomedical text mining – (also known as BioNLP), this is text mining applied to texts and literature of the biomedical and molecular biology domain. It is a rather recent research field drawing elements from natural-language processing, bioinformatics, medical informatics and computational linguistics. There is an increasing interest in text mining and information extraction strategies applied to the biomedical and molecular biology literature due to the increasing number of electronically available publications stored in databases such as PubMed.

- Decision tree learning –

- Sentence extraction –

- Terminology extraction –

- Latent semantic indexing –

- Lemmatisation – groups together all like terms that share the same lemma such that they are classified as a single item.

- Morphological segmentation – separates words into individual morphemes and identifies the class of the morphemes. The difficulty of this task depends greatly on the complexity of the morphology (i.e. the structure of words) of the language being considered. English has fairly simple morphology, especially inflectional morphology, and thus it is often possible to ignore this task entirely and simply model all possible forms of a word (e.g. "open, opens, opened, opening") as separate words. In languages such as Turkish, however, such an approach is not possible, as each dictionary entry has thousands of possible word forms.

- Named-entity recognition (NER) – given a stream of text, determines which items in the text map to proper names, such as people or places, and what the type of each such name is (e.g. person, location, organization). Although capitalization can aid in recognizing named entities in languages such as English, this information cannot aid in determining the type of named entity, and in any case is often inaccurate or insufficient. For example, the first word of a sentence is also capitalized, and named entities often span several words, only some of which are capitalized. Furthermore, many other languages in non-Western scripts (e.g. Chinese or Arabic) do not have any capitalization at all, and even languages with capitalization may not consistently use it to distinguish names. For example, German capitalizes all nouns, regardless of whether they refer to names, and French and Spanish do not capitalize names that serve as adjectives.

- Ontology learning – automatic or semi-automatic creation of ontologies, including extracting the corresponding domain’s terms and the relationships between those concepts from a corpus of natural-language text, and encoding them with an ontology language for easy retrieval. Also called "ontology extraction", "ontology generation", and "ontology acquisition".

- Parsing – determines the parse tree (grammatical analysis) of a given sentence. The grammar for natural languages is ambiguous and typical sentences have multiple possible analyses. In fact, perhaps surprisingly, for a typical sentence there may be thousands of potential parses (most of which will seem completely nonsensical to a human).

- Shallow parsing –

- Part-of-speech tagging – given a sentence, determines the part of speech for each word. Many words, especially common ones, can serve as multiple parts of speech. For example, "book" can be a noun ("the book on the table") or verb ("to book a flight"); "set" can be a noun, verb or adjective; and "out" can be any of at least five different parts of speech. Some languages have more such ambiguity than others. Languages with little inflectional morphology, such as English, are particularly prone to such ambiguity. Chinese is also prone to such ambiguity because it is a tonal language when spoken, and tonal distinctions are not readily conveyed by the characters of its orthography.

- Query expansion –

- Relationship extraction – given a chunk of text, identifies the relationships among named entities (e.g. who is the wife of whom).

- Semantic analysis (computational) – formal analysis of meaning, where "computational" refers to approaches that in principle support effective implementation.

- Explicit semantic analysis –

- Latent semantic analysis –

- Semantic analytics –

- Sentence breaking (also known as sentence boundary disambiguation and sentence detection) – given a chunk of text, finds the sentence boundaries. Sentence boundaries are often marked by periods or other punctuation marks, but these same characters can serve other purposes (e.g. marking abbreviations).

- Speech segmentation – given a sound clip of a person or people speaking, separates it into words. A subtask of speech recognition and typically grouped with it.

- Stemming – reduces an inflected or derived word into its word stem, base, or root form.

- Text chunking –

- Tokenization – given a chunk of text, separates it into distinct words, symbols, sentences, or other units.

- Topic segmentation and recognition – given a chunk of text, separates it into segments each of which is devoted to a topic, and identifies the topic of the segment.

- Truecasing –

- Word segmentation – separates a chunk of continuous text into separate words. For a language like English, this is fairly trivial, since words are usually separated by spaces. However, some written languages like Chinese, Japanese and Thai do not mark word boundaries in such a fashion, and in those languages text segmentation is a significant task requiring knowledge of the vocabulary and morphology of words in the language.

- Word-sense disambiguation (WSD) – because many words have more than one meaning, word-sense disambiguation is used to select the meaning which makes the most sense in context. For this problem, we are typically given a list of words and associated word senses, e.g. from a dictionary or from an online resource such as WordNet.

- Word-sense induction – open problem of natural-language processing, which concerns the automatic identification of the senses of a word (i.e. meanings). Given that the output of word-sense induction is a set of senses for the target word (sense inventory), this task is strictly related to that of word-sense disambiguation (WSD), which relies on a predefined sense inventory and aims to solve the ambiguity of words in context.

- Automatic acquisition of sense-tagged corpora –

- W-shingling – set of unique "shingles" (contiguous subsequences of tokens in a document) that can be used to gauge the similarity of two documents. The w denotes the number of tokens in each shingle in the set.
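
The tokenization and w-shingling entries in the list above can be combined into a short Python sketch. The function names, the simple regular-expression tokenizer, and the sample sentences are assumptions for illustration. The list only says that shingles can be used to gauge similarity; the Jaccard coefficient used here is one common choice of measure, not the only one.

```python
import re
from typing import List, Set, Tuple

Shingle = Tuple[str, ...]


def tokenize(text: str) -> List[str]:
    """Lowercase the text and keep runs of letters, digits, and apostrophes."""
    return re.findall(r"[a-z0-9']+", text.lower())


def shingles(text: str, w: int) -> Set[Shingle]:
    """Return the set of unique w-token shingles in the text."""
    tokens = tokenize(text)
    return {tuple(tokens[i:i + w]) for i in range(len(tokens) - w + 1)}


def jaccard(a: Set[Shingle], b: Set[Shingle]) -> float:
    """Size of the intersection divided by size of the union."""
    if not a and not b:
        return 1.0  # two empty documents are treated as identical
    return len(a & b) / len(a | b)


doc_a = "A rose is a rose is a rose"
doc_b = "A rose is a flower"

set_a = shingles(doc_a, 4)  # repeated shingles collapse into one set member
set_b = shingles(doc_b, 4)
similarity = jaccard(set_a, set_b)
print(len(set_a), len(set_b), similarity)
```

Because shingles form a set, the repetition in the first sentence contributes each distinct 4-token window only once, which is what makes the comparison insensitive to how often a passage is repeated.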

Component processes of natural-language generation

Natural-language generation – task of converting information from computer databases into readable human language.

- Automatic taxonomy induction (ATI) – automated building of tree structures from a corpus. While ATI is used to construct the core of ontologies (and doing so makes it a component process of natural-language understanding), when the ontologies being constructed are end user readable (such as a subject outline), and these are used for the construction of further documentation (such as using an outline as the basis to construct a report or treatise) this also becomes a component process of natural-language generation.

- Document structuring –

History of natural-language processing

History of natural-language processing

- History of machine translation

- History of automated essay scoring

- History of natural-language user interface

- History of natural-language understanding

- History of optical character recognition

- History of question answering

- History of speech synthesis

- Turing test – test of a machine’s ability to exhibit intelligent behavior, equivalent to or indistinguishable from, that of an actual human. In the original illustrative example, a human judge engages in a natural-language conversation with a human and a machine designed to generate performance indistinguishable from that of a human being. All participants are separated from one another. If the judge cannot reliably tell the machine from the human, the machine is said to have passed the test. The test was introduced by Alan Turing in his 1950 paper "Computing Machinery and Intelligence", which opens with the words: "I propose to consider the question, ‘Can machines think?’"

- Universal grammar – theory in linguistics, usually credited to Noam Chomsky, proposing that the ability to learn grammar is hard-wired into the brain.[8] The theory suggests that linguistic ability manifests itself without being taught (see poverty of the stimulus), and that there are properties that all natural human languages share. It is a matter of observation and experimentation to determine precisely what abilities are innate and what properties are shared by all languages.

- ALPAC – committee of seven scientists led by John R. Pierce, established in 1964 by the U.S. government to evaluate progress in computational linguistics in general and machine translation in particular. Its report, issued in 1966, gained notoriety for being very skeptical of the machine translation research done up to that point and for emphasizing the need for basic research in computational linguistics; this eventually caused the U.S. government to reduce its funding of the topic dramatically.

- Conceptual dependency theory – a model of natural-language understanding used in artificial intelligence systems. Roger Schank at Stanford University introduced the model in 1969, in the early days of artificial intelligence.[9] This model was extensively used by Schank’s students at Yale University such as Robert Wilensky, Wendy Lehnert, and Janet Kolodner.

- Augmented transition network – type of graph-theoretic structure used in the operational definition of formal languages, especially in parsing relatively complex natural languages, with wide application in artificial intelligence. Introduced by William A. Woods in 1970.

- Distributed Language Translation (project) –

Timeline of NLP software

| Software | Year | Creator | Description | Reference |
|---|---|---|---|---|
| Georgetown experiment | 1954 | Georgetown University and IBM | involved fully automatic translation of more than sixty Russian sentences into English. | |
| STUDENT | 1964 | Daniel Bobrow | could solve high school algebra word problems.[10] | |
| ELIZA | 1964 | Joseph Weizenbaum | a simulation of a Rogerian psychotherapist that produced her responses (ELIZA is referred to as "her", not "it") by rephrasing the user's input with a few grammar rules.[11] | |
| SHRDLU | 1970 | Terry Winograd | a natural-language system working in restricted "blocks worlds" with restricted vocabularies; worked extremely well. | |
| PARRY | 1972 | Kenneth Colby | a chatterbot. | |
| KL-ONE | 1974 | Sondheimer et al. | a knowledge representation system in the tradition of semantic networks and frames; it is a frame language. | |
| MARGIE | 1975 | Roger Schank | | |
| TaleSpin (software) | 1976 | Meehan | | |
| QUALM | | Lehnert | | |
| LIFER/LADDER | 1978 | Hendrix | a natural-language interface to a database of information about US Navy ships. | |
| SAM (software) | 1978 | Cullingford | | |
| PAM (software) | 1978 | Robert Wilensky | | |
| Politics (software) | 1979 | Carbonell | | |
| Plot Units (software) | 1981 | Lehnert | | |
| Jabberwacky | 1982 | Rollo Carpenter | chatterbot with stated aim to "simulate natural human chat in an interesting, entertaining and humorous manner". | |
| MUMBLE (software) | 1982 | McDonald | | |
| Racter | 1983 | William Chamberlain and Thomas Etter | chatterbot that generated English language prose at random. | |
| MOPTRANS | 1984 | Lytinen | | |
| KODIAK (software) | 1986 | Wilensky | | |
| Absity (software) | 1987 | Hirst | | |
| AeroText | 1999 | Lockheed Martin | originally developed for the U.S. intelligence community (Department of Defense) for information extraction & relational link analysis. | |
| Watson | 2006 | IBM | a question answering system that won the Jeopardy! contest, defeating the best human players in February 2011. | |
| MeTA | 2014 | Sean Massung, Chase Geigle, ChengXiang Zhai | a modern C++ data sciences toolkit featuring text tokenization, including deep semantic features like parse trees; inverted and forward indexes with compression and various caching strategies; a collection of ranking functions for searching the indexes; topic models; classification algorithms; graph algorithms; language models; CRF implementation (POS-tagging, shallow parsing); wrappers for liblinear and libsvm (including libsvm dataset parsers); UTF8 support for analysis on various languages; multithreaded algorithms. | |
| Tay | 2016 | Microsoft | an artificial intelligence chatterbot that caused controversy on Twitter by releasing inflammatory tweets and was taken offline shortly after. | |

General natural-language processing concepts

- Sukhotin’s algorithm – statistical classification algorithm for classifying characters in a text as vowels or consonants. It was initially created by Boris V. Sukhotin.

- T9 (predictive text) – stands for "Text on 9 keys"; a US-patented predictive text technology for mobile phones (specifically those with a 3×4 numeric keypad), originally developed by Tegic Communications, now part of Nuance Communications.

- Tatoeba – free collaborative online database of example sentences geared towards foreign-language learners.

- Teragram Corporation – wholly owned subsidiary of SAS Institute, a major producer of statistical analysis software headquartered in Cary, North Carolina, USA. Teragram is based in Cambridge, Massachusetts, and specializes in the application of computational linguistics to multilingual natural-language processing.

- TipTop Technologies – company that developed TipTop Search, a real-time web, social search engine with a unique platform for semantic analysis of natural language. TipTop Search provides results capturing individual and group sentiment, opinions, and experiences from content of various sorts including real-time messages from Twitter or consumer product reviews on Amazon.com.

- Transderivational search – search conducted for a fuzzy match across a broad field. In computing, the equivalent function can be performed using content-addressable memory.

- Vocabulary mismatch – common phenomenon in the usage of natural languages, occurring when different people name the same thing or concept differently.

- LRE Map –

- Reification (linguistics) –

- Semantic Web –

- Metadata –

- Spoken dialogue system –

- Affix grammar over a finite lattice –

- Aggregation (linguistics) –

- Bag-of-words model – model that represents a text as a bag (multiset) of its words, disregarding grammar and word order but keeping multiplicity. This model is commonly used to train document classifiers.

- Brill tagger –

- Cache language model –

- ChaSen, MeCab – provide morphological analysis and word splitting for Japanese

- Classic monolingual WSD –

- ClearForest –

- CMU Pronouncing Dictionary – also known as cmudict, a public domain pronouncing dictionary created by Carnegie Mellon University (CMU) for use in speech technology. It defines a mapping from English words to their North American pronunciations, and is commonly used in speech processing applications such as the Festival Speech Synthesis System and the CMU Sphinx speech recognition system.

- Concept mining –

- Content determination –

- DATR –

- DBpedia Spotlight –

- Deep linguistic processing –

- Discourse relation –

- Document-term matrix –

- Dragomir R. Radev –

- ETBLAST –

- Filtered-popping recursive transition network –

- Robby Garner –

- GeneRIF –

- Gorn address –

- Grammar induction –

- Grammatik –

- Hashing-Trick –

- Hidden Markov model –

- Human language technology –

- Information extraction –

- International Conference on Language Resources and Evaluation –

- Kleene star –

- Language Computer Corporation –

- Language model –

- LanguageWare –

- Latent semantic mapping –

- Legal information retrieval –

- Lesk algorithm –

- Lessac Technologies –

- Lexalytics –

- Lexical choice –

- Lexical Markup Framework –

- Lexical substitution –

- LKB –

- Logic form –

- Machine translation software usability –

- MAREC –

- Maximum entropy –

- Message Understanding Conference –

- METEOR –

- Minimal recursion semantics –

- Morphological pattern –

- Multi-document summarization –

- Multilingual notation –

- Naive semantics –

- Natural language –

- Natural-language interface –

- Natural-language user interface –

- News analytics –

- Nondeterministic polynomial –

- Open domain question answering –

- Optimality theory –

- Paco Nathan –

- Phrase structure grammar –

- Powerset (company) –

- Production (computer science) –

- PropBank –

- Question answering –

- Realization (linguistics) –

- Recursive transition network –

- Referring expression generation –

- Rewrite rule –

- Semantic compression –

- Semantic neural network –

- SemEval –

- SPL notation –

- Stemming – reduces an inflected or derived word into its word stem, base, or root form.

- String kernel –
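
The bag-of-words model listed above can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function name `bag_of_words`, the lowercasing step, and the regular expression used to split text into words are assumptions chosen for the example.

```python
import re
from collections import Counter


def bag_of_words(text):
    """Map each lowercased word in `text` to the number of times it occurs.

    The tokenizer (runs of letters, digits, and apostrophes) is an
    assumption of this sketch; real systems tokenize in many ways.
    """
    tokens = re.findall(r"[a-z0-9']+", text.lower())
    return Counter(tokens)


# Two documents containing the same sentences in a different order.
doc_a = "John likes to watch movies. Mary likes movies too."
doc_b = "Mary likes movies too. John likes to watch movies."

bag_a = bag_of_words(doc_a)
bag_b = bag_of_words(doc_b)

print(bag_a["likes"], bag_a["movies"])  # multiplicity is kept per word
print(bag_a == bag_b)                   # sentence order does not affect the bag
```

Because a `Counter` is a multiset, the two documents compare equal even though their sentences are swapped, which is exactly the information the model discards. A document classifier would typically turn such counts into a fixed-length vector over a shared vocabulary.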

Natural-language processing tools

- Google Ngram Viewer – graphs n-gram usage from a corpus of more than 5.2 million books

Corpora

- Text corpus (see list) – large and structured set of texts (nowadays usually electronically stored and processed). Corpora are used for statistical analysis and hypothesis testing, checking occurrences, or validating linguistic rules within a specific language territory.

- Bank of English

- British National Corpus

- Corpus of Contemporary American English (COCA)

- Oxford English Corpus

Natural-language processing toolkits

The following natural-language processing toolkits are notable collections of natural-language processing software. They are suites of libraries, frameworks, and applications for symbolic and statistical natural-language and speech processing.

| Name | Language | License | Creators |
|---|---|---|---|
| Apertium | C++, Java | GPL | (various) |
| ChatScript | C++ | GPL | Bruce Wilcox |
| Deeplearning4j | Java, Scala | Apache 2.0 | Adam Gibson, Skymind |
| DELPH-IN | LISP, C++ | LGPL, MIT, … | Deep Linguistic Processing with HPSG Initiative |
| Distinguo | C++ | Commercial | Ultralingua Inc. |
| DKPro Core | Java | Apache 2.0 / Varying for individual modules | Technische Universität Darmstadt / Online community |
| General Architecture for Text Engineering (GATE) | Java | LGPL | GATE open source community |
| Gensim | Python | LGPL | Radim Řehůřek |
| LinguaStream | Java | Free for research | University of Caen, France |
| Mallet | Java | Common Public License | University of Massachusetts Amherst |
| Modular Audio Recognition Framework | Java | BSD | The MARF Research and Development Group, Concordia University |
| MontyLingua | Python, Java | Free for research | MIT |
| Natural Language Toolkit (NLTK) | Python | Apache 2.0 | |
| Apache OpenNLP | Java | Apache License 2.0 | Online community |
| spaCy | Python, Cython | MIT | Matthew Honnibal, Explosion AI |
| UIMA | Java / C++ | Apache 2.0 | Apache |

Named-entity recognizers

- ABNER (A Biomedical Named-Entity Recognizer) – open source text mining program that uses linear-chain conditional random field sequence models. It automatically tags genes, proteins and other entity names in text. Written by Burr Settles of the University of Wisconsin-Madison.

- Stanford NER (Named-Entity Recognizer) – Java implementation of a named-entity recognizer that uses linear-chain conditional random field sequence models. It automatically tags persons, organizations, and locations in text in English, German, Chinese, and Spanish. Written by Jenny Finkel and other members of the Stanford NLP Group at Stanford University.

Translation software

- Comparison of machine translation applications

- Machine translation applications

- Google Translate

- DeepL

- Linguee – web service that provides an online dictionary for a number of language pairs. Unlike similar services, such as LEO, Linguee incorporates a search engine that provides access to large amounts of bilingual, translated sentence pairs, which come from the World Wide Web. As a translation aid, Linguee therefore differs from machine translation services like Babelfish and is more similar in function to a translation memory.

- UNL Universal Networking Language

- Yahoo! Babel Fish

- Reverso

Other software

- cTAKES – open-source natural-language processing system for information extraction from the clinical free text of electronic medical records. It processes clinical notes, identifying types of clinical named entities: drugs, diseases/disorders, signs/symptoms, anatomical sites, and procedures. Each named entity has attributes for the text span, the ontology mapping code, context (family history of, current, unrelated to patient), and negated/not negated. Also known as Apache cTAKES.

- DMAP –

- ETAP-3 – proprietary linguistic processing system focusing on English and Russian.[12] It is a rule-based system which uses the Meaning-Text Theory as its theoretical foundation.