Where can I search for words using descriptive sentences? For example, now I’m looking for a word meaning «to purify (by hand) a quantity of grain and take away anything that isn’t good grain.»

How could I get words like that? I know it in my first-language, but I don’t have a classic version of it to use in automatic translators.

Maybe a search engine that uses content words from my description and offers words that could mean the same with their dictionary definitions. Nothing I’d tried in (translate.)google.com gave me the desired result.

Update:

An example dictionary that I use is (Oxford’s Word-Power Dictionary). It’s not for the purpose of the question but for clarification.

Oxford’s has a 3,000 word list of ‘bare-bone-essentials.’ It contains the most-used words in English and all of the grammatical operators. Ideally, these are the words necessary for a learner to use an English-English dictionary such as Oxford’s. The descriptions of words in Oxford’s rely on this list for definitions.

An electronic (web-based) dictionary for word-meaning-search should have a larger list (maybe 10,000) and use a thesaurus to down-level difficult words a user may use in the search then, using all content words in the search phrase, present a list of words whose definitions seem appropriate. Another regular dictionary may be used from there.

-

Example:

Search query: «to use hands to purify a quantity of grain taking away anything that isn’t good grain.»

Content Words: «use-hands; purify; quantity-of-grain; taking-away; anything; isn’t; good-grain»

Filtered: «use-hands; clean; quantity-of-seeds; take-away;anything; not-good-grain»

Words: «1; 2; 3; 4» that use the content words to varying degrees.

And that’s a five minutes’ work!

PS. Sorry about the delay; connection problems…

: computer software used to search data (such as text or a database) for specified information

also

: a site on the World Wide Web that uses such software to locate key words in other sites

Example Sentences

Recent Examples on the Web

Microsoft’s Bing search engine now uses ChatGPT. Lee, a computer scientist who runs Microsoft research around the world, is particularly interested in the medical and scientific potential of ChatGPT.

—

Enlarge Microsoft Microsoft has spent a lot of time and energy over the last few months adding generative AI features to all its products, particularly its long-standing, long-struggling Bing search engine.

—

The news is the culmination of a years-long effort by Microsoft to reinvent its search engine, which has long played second fiddle to Google before getting a boost from OpenAI’s GPT-4.

—

In 2010, Google Inc. stopped censoring the internet for China by shifting its search engine off the mainland to Hong Kong.

—

Google is opening public access to the conversational computer program Bard, its answer to the viral chatbot ChatGPT, while stopping short of integrating the new tool into its flagship search engine.

—

The technology already drives the chatbot available to a limited number of people using Microsoft’s Bing search engine.

—

Microsoft’s Bing search engine has passed the 100 million daily active users milestone just weeks after the software maker launched its AI-powered Bing Chat feature.

—

On Twitter, Musk has been calling out the flaws of ChatGPT, which is being integrated into Microsoft’s Bing search engine to enhance the experience.

—

See More

These examples are programmatically compiled from various online sources to illustrate current usage of the word ‘search engine.’ Any opinions expressed in the examples do not represent those of Merriam-Webster or its editors. Send us feedback about these examples.

Word History

First Known Use

1984, in the meaning defined above

Time Traveler

The first known use of search engine was

in 1984

Dictionary Entries Near search engine

Cite this Entry

“Search engine.” Merriam-Webster.com Dictionary, Merriam-Webster, https://www.merriam-webster.com/dictionary/search%20engine. Accessed 14 Apr. 2023.

Share

More from Merriam-Webster on search engine

Last Updated:

8 Apr 2023

— Updated example sentences

Subscribe to America’s largest dictionary and get thousands more definitions and advanced search—ad free!

Merriam-Webster unabridged

4 Easy Ways to Find Meaning of Any Word with Google

Ofter while reading online, we need to find simple meaning of some complex word. For example, while reading about unfamiliar topics or in-depth article from McKinsey Quarterly, we may find some unfamiliar words in course and then we leave/misunderstand them due to lack of time. This is not at all advisable as a little misunderstanding with a word can change the complete meaning of the sentence.

Being a Google oriented blog, we will solve with this concern with available Google tools. Here are 4 easiest ways to find meaning of any word:

1. The Easiest Way – Using Google Dictionary

For any word, the simplest tool to use Google Dictionary. It is a dedicated site for finding word meanings, definitions, related phrases, synonyms and pronunciations. It also offers word bookmarking for remembering complex words and translations for better understanding.

Note: Dictionary may not work in all countries. In that case try – http://www.google.ca/dictionary

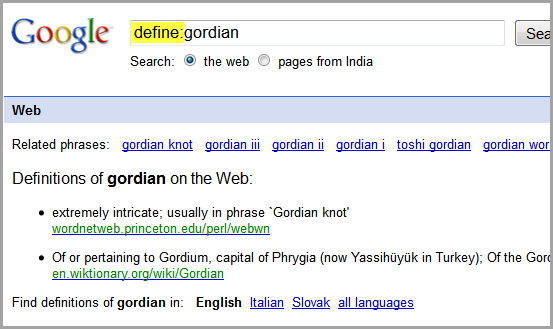

2. Using define Operator in Google Search

Do you know that you instantaneously find the web meaning of any word by searching it on Google with define prefix. For example, try define:endogamy .

To use this method, just write define: before your search word in Firefox Search Box or Chrome Omnibar (Address bar)and press Enter. You will be surprised to see its simplicity and usefulness.

Related: Google Search Prefixes for better redefined results.

3. Use Browser Addon or Extension

This method is quite preferable as you don’t have to leave the page to check any word meaning. You can keep on reading and to find any word definition, just double click on it for fancy in-page popup.

For Chrome, here is the official extension available from the Google team which works perfectly.

For Firefox, try “Google Dictionary and Google Translate” addon. Though it is a third party addon, but words equally great.

4. Hack Firefox Address Bar Default Behavior

Previously, we have written about turning Firefox address bar into word lookup dictionary. You can use this hack to directly jump to result page of Google Dictionary.

Mostly I use define: prefix in Google. Its easy, convenient and fast. Which method will you use? Tell us in your comments.

- Top Definitions

- Quiz

- Related Content

- Examples

- British

- Cultural

This shows grade level based on the word’s complexity.

[ surch-en-juhn ]

/ ˈsɜrtʃ ˌɛn dʒən /

This shows grade level based on the word’s complexity.

noun Digital Technology.

a computer program that searches documents, especially on the World Wide Web, for a specified word or words and provides a list of documents in which they are found.

QUIZ

CAN YOU ANSWER THESE COMMON GRAMMAR DEBATES?

There are grammar debates that never die; and the ones highlighted in the questions in this quiz are sure to rile everyone up once again. Do you know how to answer the questions that cause some of the greatest grammar debates?

Which sentence is correct?

Also called re·triev·al en·gine [ri-tree-vuhl en-juhn] /rɪˈtri vəl ˌɛn dʒən/ .

Origin of search engine

First recorded in 1990–95

Words nearby search engine

sear, sea ranger, sea raven, search, search dog, search engine, search engine optimization, searching, searchless, searchlight, search me

Dictionary.com Unabridged

Based on the Random House Unabridged Dictionary, © Random House, Inc. 2023

Words related to search engine

How to use search engine in a sentence

-

Google’s John Mueller posted 12 tips and more on how to optimize your images for search engines.

-

So while you may have assiduously compiled a list of the most relevant keywords, it’s unlikely that they’ll dominate search engines within a few months.

-

If you take the time and put a little extra effort into properly optimizing your site’s images for both users and search engines every time you add an image to your website, you can give your pages a little extra edge in the search engines.

-

An external link is a source of free traffic for your website and is a crucial element of Google’s search engine algorithm.

-

This needs to change, and in order for that to happen, we need to stop thinking of Google as a search engine.

-

They not only disrupted service in China, they apparently crashed the search engine worldwide.

-

But what started out as a search engine has evolved into a search-and-destroy machine.

-

Revenge, it seems, is a dish best served through search engine optimization.

-

We make things easier for the people as well as the search engine.

-

I doubt the company wants to risk millions of people taking their search engine relationship elsewhere.

-

He did not need an Intel inside computer or search engine to find what he wanted.

British Dictionary definitions for search engine

noun

computing a service provided on the internet enabling users to search for items of interest

Collins English Dictionary — Complete & Unabridged 2012 Digital Edition

© William Collins Sons & Co. Ltd. 1979, 1986 © HarperCollins

Publishers 1998, 2000, 2003, 2005, 2006, 2007, 2009, 2012

Cultural definitions for search engine

Web sites or software that search the Internet for documents that contain a key word, phrase, or subject that is specified by the user to the search engine. Each engine has its own method of searching for information.

The New Dictionary of Cultural Literacy, Third Edition

Copyright © 2005 by Houghton Mifflin Harcourt Publishing Company. Published by Houghton Mifflin Harcourt Publishing Company. All rights reserved.

How search engines understand human language

«Rose is a rose is a rose is a rose.»

Gertrude Stein

The famous quote by Gertrude Stein is a fine example that shows how complicated human language can be. The phrase is ambiguous and allows for multiple interpretations. Stein explained the meaning as follows «the poet could use the name of the thing and the thing was really there.» A curious human reader can come to a similar conclusion. But can we create a smart algorithm which is really capable of understanding the meaning of human language?

In this article, I will try to shed some light on the way search engines find documents related to a user’s query. I will also show some approaches that can be used to extract semantic meaning from a proposition.

Before Google introduced RankBrain, semantic search and all things machine learning, the life of SEOs seemed to be easier. Now we are exposed to a flood of speculative information from industry influencers. To make things worse, Google’s spokespersons share obscure bits of information about the quality signals and repeat the «Content is king» mantra. Is there a way to tell assumptions from real facts about the algorithms? The answer is «yes». There are two fields of computer science, which are natural language processing and information retrieval, that address a huge set of problems related to SEO. There exist well-documented algorithms for text classification and retrieval of relevant documents in response to a user query. No guess-work – just pure science.

If you are interested to learn slightly more academic concepts behind «semantic search», «keyword research», «content optimization», and other SEO buzzwords – read on! However, be aware that this article is not going to give you any magic recipe for on-page SEO but rather answer some of the «why» questions you probably have.

Note: I deliberately avoid quoting mathematical formulas or using excessive mathematical terminology in this article. Those of you who lack this knowledge can refer to Wikipedia links that I provide underway for further reading.

What is a word

Word is the smallest building block of meaning and a logical starting point of our journey. Let’s try to find out how words are represented in computer programs. Along the way, I am going to highlight some strengths and weaknesses of these approaches.

In the simplest case, a computer program sees a text as a sequence of alphanumeric characters and punctuation. This is a so-called raw representation .

"The programmer's programs had been programmed."

Separate words can be delimited by spaces or punctuation. As a result, we get a list of tokens . Note that at this point we consider punctuation characters as distinct tokens as well.

The very first token in the list below poses a dilemma. How should we treat capitalized characters? Should we change all the characters to lowercase? It seems reasonable to go for this choice. After all, «The» and «the» obviously represent the same word, namely, the definite article. But what about «Smith» and «smith»? It can be a proper name, a noun with a meaning «someone who makes and repairs things made of iron», or the same noun at the beginning of a sentence.

Unprocessed tokens preserve all the linguistic information, but simultaneously we get more noise in the input. Further post-processing can be used to get rid of unnecessary information.

the programmer 's programs had been programmed.

Words may have different forms. For example, the token «programs» from our example is actually a plural form of the noun «program». «Programmed» is the past simple of the verb «program». The initial forms of words or the forms that represent words in a dictionary are called lemmas . And a further logical step is to represent words by their respective lemmas.

the programmer 's program have be program.

So far, so good. You have probably heard that search engines use the lists of stop words in order to preprocess input texts. A stop word list is a set of tokens that are removed from a text. Stop words may include functional words and punctuation. Functional words are words that do not have independent meaning, for example, auxiliary verbs or pronouns.

Let’s strip off functional words from the sentence. As a result, the initial utterance includes only content words (the words that have semantic meaning). However, there is no way to tell that the program in question is somehow related to the programmer.

programmer program program

I can imagine you are getting bored. But wait for it! We have not finished yet! A word can be represented by its stem . A stem is a part of a word to which we normally attach affixes. For example, we can attach the suffix «-er» (with a meaning of someone performing an action) to the stem «program».

Have a look at the input sentence after replacing the lemmas with the stems.

program program program

We managed to truncate the initial input into a not so informative sequence. The lesson here is: there are 3 typical ways to represent words:

- Tokens

- Lemmas

- Stems

Additionally, we can strip off functional words or convert words to lowercase.

These representations and their combinations are used depending on the actual language processing task. For example, it is unreasonable to strip off functional words if we need to differentiate texts in English and French. If we need to detect proper names in a text, it is reasonable to preserve the original character case and so on.

Note: there are more ways to segment a sentence. One can split it into N-grams, constituents, chunks , etc. These concepts are out of the scope of this article. But it is helpful to know that there are some bigger units than a word but smaller than a sentence.

The linguistic units discussed above are the building blocks for bigger structures such as documents that we will discuss later.

SEO take-aways

- It is important to understand how and why one splits a sentence into units because these units are a part of a metric that all SEOs use or are aware of, namely, «keyword density». Although reputable SEOs argue against it rather categorically «Keyword Density is Not Used — How Many Times Do We Have to Say It». And they proposed TF-IDF as a better alternative since it is related to semantic search. I will show further in the article that both raw word counts and weighted word counts (TF-IDF) can be used for lexical as well as semantic search.

- Keyword density is a handy and simple metric that has the right to existence. Just do not get obsessed with it.

- It is also worth keeping in mind that grammatical word forms are most likely treated by search engines as the same word type, thus it might be of little use trying to «optimize» a web page, say, for singular and plural forms of the same keyword.

The bag of words

The bag-of-words is a model used in natural language processing to represent a text (anything from a search query to a full-scale book). Although the concept dates back to the 1950s, it is still used for text classification and information retrieval (i.e. search engines).

I will use it in order to show how search engines can find a relevant document from a collection in response to a search query.

If we want to represent a text as the bag-of-words, we just count the number of times each distinct word appears in the text and list these counts (in mathematical terms, it is a vector). Before counting, one can apply preprocessing techniques described in the previous part of the article.

As a result, one loses all the information about text structure, syntax, and grammar of the text.

the programmer ‘s programs had been programmed

{the: 1, programmer: 1, ‘s: 1, programs: 1, had: 1, been: 1, programmed: 1 } or

[1, 1, 1, 1, 1, 1, 1]

programmer program program

{programmer: 1, program: 2} or

[1, 2]

There is not much practical use in representing a separate text as a list of digits. However, if we have a collection of documents (e.g. all the webpages indexed by a certain search engine), we can build a so-called vector space model out of the available texts.

The term may sound scary but in fact, the idea is rather simple. Imagine a spreadsheet where each column represents the bag-of-words of a text (text vector), and each row represents a word from the collection of these texts (word vector). The number of columns equals the number of documents in the collection. The number of rows equals the number of unique words that are found across the whole collection of documents.

The value in the intersection of each row and column is the number of times the corresponding word appears in the corresponding text. The spreadsheet below represents vector space for some plays by Shakespeare. For the sake of simplicity, we are using just four words.

| As You Like It | Twelfth Night | Julius Caesar | Henry V | |

|---|---|---|---|---|

| battle | 1 | 0 | 7 | 13 |

| good | 114 | 80 | 62 | 89 |

| fool | 36 | 58 | 1 | 4 |

| wit | 20 | 15 | 2 | 3 |

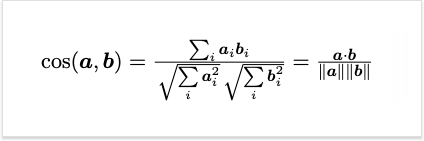

Remember, I’ve mentioned earlier that the bag-of-words is actually a vector. A cool thing about vectors is that we can measure the distance or angle between them. The smaller the distance or angle – the more «similar» are vectors and the documents that they represent. This can be achieved using cosine similarity metric. The resulting value ranges from 0 to 1. The higher the value, the more similar documents are.

Finding a relevant document: how-to

Now we have enough information to explain how one can find a document in the collection that is relevant to a search query.

Let’s say a user is searching for «battle of Agincourt». This is a short document that can be embedded in the vector space from the example above. The respective vector is [1, 0, 0, 0]. «good’, «fool», and «wit» have zero counts. We can then calculate the similarity of the search query to each document in the collection. The results are in the table below. One can notice that Henry V is the best match for the query. It’s no surprise since the word «battle» appears in this text most often and thus the document can be considered more relevant to the query. Also, note that it is not necessary that all the words in a search query are present in the text.

| Similarity | |

|---|---|

| As You Like It | 0.008249825 |

| Twelfth Night | 0 |

| Julius Caesar | 0.11211846 |

| Henry V | 0.144310885 |

There are several obvious drawbacks of such an over-simplistic approach:

- It is vulnerable to keyword stuffing. One can substantially increase the relevance of a document to a search query by simply repeating the required word as many times as necessary in order to beat the competing documents in the collection.

- The bag-of-words representation for the following document

I was impressed, this was not bad!

I was not impressed, this was bad!will be absolutely the same, though they have different meanings. Remember, that the bag-of-words model removes all the structure of the underlying documents.

- The bag-of-words model with word frequency is not the best measure. It is skewed towards very frequent words and is not discriminative. In the following subsection, you are going to learn why.

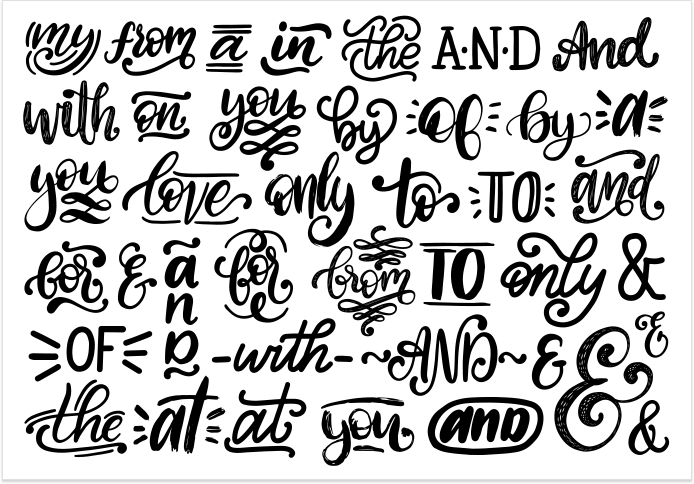

TF-IDF and Zipf’s Law

Zipf’s Law states that given a large sample of words used, the frequency of any word is inversely proportional to its rank in the frequency table. So the word number N has a frequency proportional to 1/N. As a result, there holds an interesting pattern in natural languages. The most frequently used 18% (approximately) of words account for over 80% of word occurrences. It means that a few words are used often and many words are used rarely.

Frequent words will show up in many texts across a collection. As a result, such words make it more difficult to differentiate texts represented as the bag-of-words. Furthermore, the most frequent words are often functional words with no semantic meaning. They cannot describe the topic of a text.

These are the top 10 most frequent words in English.

- the

- be

- to

- of

- and

- a

- in

- that

- have

- I

We can apply the TF-IDF (term frequency – inverse document frequency) weighting in order to decrease the weight of words that are used frequently across the collection of texts. The TF-IDF score is calculated using the following formula:

Where the first component is the term frequency — the frequency of the word in the document. And the second component is the inverse document frequency. It is the number of documents in a collection divided by the document frequency of a word, which is the total number of documents the word appears in. The second component is used to give a higher weight to words that occur only in a few documents across the collection.

In the table below are some IDF values for some words in the Shakespeare plays, ranging from extremely informative words which occur in only one play like Romeo, to those which are so common as to be completely non-discriminative since they occur in all 37 plays like «good» or «sweet».

| Word | DF | IDF |

|---|---|---|

| Romeo | 1 | 1.57 |

| salad | 2 | 1.27 |

| Falstaff | 4 | 0.967 |

| forest | 12 | 0.489 |

| battle | 21 | 0.074 |

| fool | 36 | 0.012 |

| good | 37 | 0 |

| sweet | 37 | 0 |

IDF of very common words equals 0, as a result, their frequencies in the bag-of-words model will also equal 0. The frequencies of rare words will be increased.

SEO take-aways

- I doubt that the bag-of-words model is used nowadays in commercial search engines. There are models that are better at capturing the structure of text and take into account more linguistic features, but the basic idea remains the same. Documents and search queries are transformed into vectors, and the similarity or distance between the vectors is used as a measure of relevance.

- This model gives an understanding of how lexical search works as opposed to semantic search. It is essential for lexical search that a document contains words mentioned in a search query. While this is not necessary for semantic search.

- Zipf’s Law demonstrates that there exist predictable proportions in a text written in natural language. The deviations from the typical proportions are easy to detect. Thus, it is not a difficult task to flag overoptimized text that is «unnatural».

- The TF-IDF weighting scheme is not a key into the magic world of semantic search. With TF-IDF applied to a model, the document features labeled by content words get more weight in the document vector. And it is tempting to interpret the phenomenon as something related to «semantics».

TF-IDF weighting is integrated into the content optimization module of Website Auditor. You can learn more about the feature from this blog post.

Word semantics

Semantic search has been a buzzword in the SEO community since 2013. Semantic search denotes search with meaning, as distinguished from lexical search where the search engine looks for literal matches of the query words or variants of them, without understanding the overall meaning of the query.

Note: The bag-of-words model is an example of lexical search.

But how can a search engine learn the meaning of a word? There is even a more difficult high-level problem. How should we represent the meaning so that a computer program can understand and practically use it?

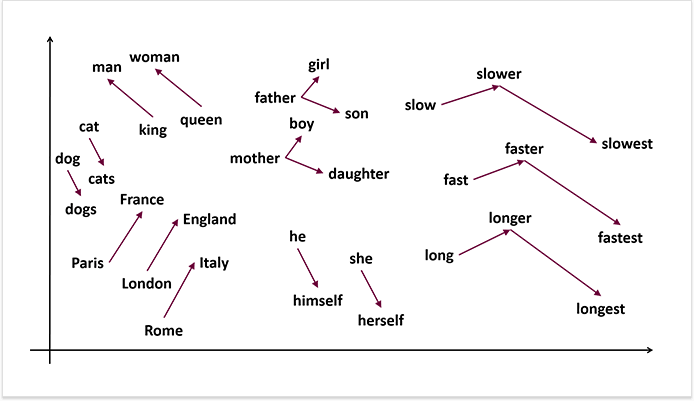

The key concept that helps answer these questions is the distributional hypothesis that was first formulated as early as in the 1950s. Linguists noticed that words with similar meaning tended to occur in the same environment (i.e. near the same words) with the amount of difference in the meaning between two words corresponding roughly to the amount of difference in their environments.

Here is a simple example. Let’s say you encounter the following sentences and you have no idea what a langoustine is:

- Langoustines are considered a delicacy.

- Langoustines have white fleshy meat in the tail and body that is juicy, slightly sweet and lean.

- When choosing langoustines we pay attention to a clear orange color.

And you also encounter the following. I assume that most of the readers know what a shrimp is:

- Shrimps are a delicacy that matches well with white wine and butter sauce.

- Tender shrimp meat can be added to pasta.

- When boiled, shrimps change their color to red.

The fact that langoustine occurs with words like delicacy, meat, and pasta might suggest that langoustine is a sort of edible crustacean similar in some way to a shrimp. Thus one can define a word by the environment it occurs in language use, such as the set of contexts in which it occurs, the neighboring words.

How can we transform these observations into something meaningful for a computer program? We can build a model similar to the bag-of-words. But instead of documents, we are going to label the columns by words. It is common to use small contexts about four words around a target word. In this case, each cell in the model represents the number of times the column word occurs in a context window (e.g. plus-minus four words) around the row word. Let’s consider these four-word windows (the example from the book Jurafsky & Martin, Speech and Language Processing):

| Context | Target | Context |

|---|---|---|

| sugar, a sliced lemon, a tablespoonful of | apricot | jam, a pinch each of, |

| their enjoyment. Cautiously she sampled her first | pineapple | and another fruit whose taste she likened |

| well suited to programming on the digital | computer | In finding the optimal R-stage policy from |

| for the purpose of gathering data and | information | necessary for the study authorized in the |

For each word, we collect the counts (from the windows around each occurrence) of the occurrences of context words. And we get the word-word co-occurrence matrix similar to the one we have already seen previously. You can notice that «digital» and «information» are more similar to each other than to «apricot». Note that word counts can be substituted by other metrics such as pointwise mutual information.

| aardvark | … | computer | data | pinch | result | sugar | … | |

|---|---|---|---|---|---|---|---|---|

| apricot | 0 | … | 0 | 0 | 1 | 0 | 1 | … |

| pineapple | 0 | … | 0 | 0 | 1 | 0 | 1 | … |

| digital | 0 | … | 2 | 1 | 0 | 1 | 0 | … |

| information | 0 | … | 1 | 6 | 0 | 4 | 0 | … |

Each word and its semantics are represented with a vector. The semantic properties of each word are determined by its neighbors, i.e. by the typical contexts the word appears in. Such a model can easily capture synonymy and word relatedness. The vectors of two similar words will appear near each other. And the vectors of the words that appear in the same topic field will form clusters in space.

There is no magic behind semantic search. The conceptual difference is that words are represented as vector embeddings but not as lexical items (strings of characters).

SEO take-aways

- Semantic models are good at capturing synonyms, related words, and semantic frames. A semantic frame is a set of words that denote perspectives or participants in a particular type of event. For example, the «5 o’clock tea» semantic frame can include tradition, tea, cup, kettle, spoon, sugar, drink, poor, brew, etc.

When creating fresh content it might be helpful to think in the dimension of semantic frames. I.e. keep in mind the semantic frame you want your page to rank for, not a particular keyword.

- Content spinning is likely to have little to no effect. Synonymous words like «flat» and «apartment» will have very similar vectors. Spinning the synonyms will produce a text with a footprint that is very close to the initial variant.

- Search engines have become better at extracting information from webpages, but it is always a good idea to give them hints using structured data markup.

Round-up

Computational linguistics is an exciting and fast evolving science. The concepts presented in this article are not new or revolutionary. But they are rather simple and they help to get the basic high-level view over the problem field. If you are willing to learn more about how search engines actually work, I would recommend reading a book Introduction to Information Retrieval by Manning, Raghavan, & Schütze. It is also officially available online.

As always, questions, suggestions and critical views are very welcome in the comments section.

By: Yauhen Khutarniuk