Word Meaning and Similarity Word Senses and Word Relations

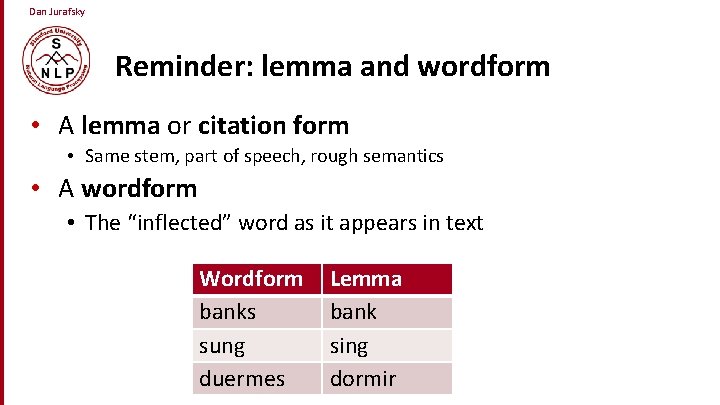

Dan Jurafsky Reminder: lemma and wordform • A lemma or citation form • Same stem, part of speech, rough semantics • A wordform • The “inflected” word as it appears in text

| Wordform | Lemma |
|---|---|
| banks | bank |
| sung | sing |
| duermes | dormir |

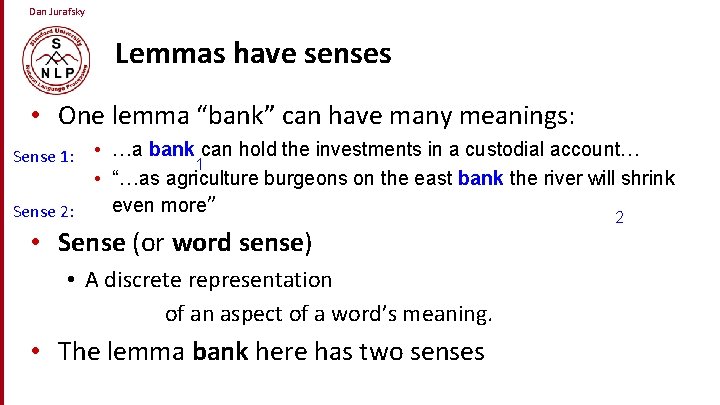

Lemmas have senses • One lemma “bank” can have many meanings: • Sense 1: “…a bank¹ can hold the investments in a custodial account…” • Sense 2: “…as agriculture burgeons on the east bank² the river will shrink even more” • Sense (or word sense) • A discrete representation of an aspect of a word’s meaning. • The lemma bank here has two senses

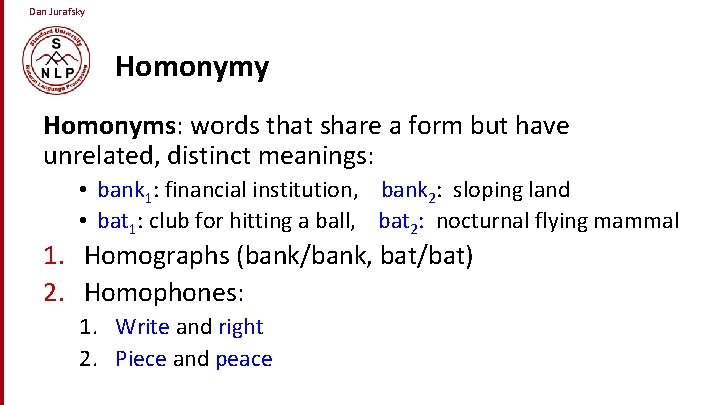

Dan Jurafsky Homonyms: words that share a form but have unrelated, distinct meanings: • bank 1: financial institution, bank 2: sloping land • bat 1: club for hitting a ball, bat 2: nocturnal flying mammal 1. Homographs (bank/bank, bat/bat) 2. Homophones: 1. Write and right 2. Piece and peace

Dan Jurafsky Homonymy causes problems for NLP applications • Information retrieval • “bat care” • Machine Translation • bat: murciélago (animal) or bate (for baseball) • Text-to-Speech • bass (stringed instrument) vs. bass (fish)

Dan Jurafsky Polysemy • 1. The bank was constructed in 1875 out of local red brick. • 2. I withdrew the money from the bank • Are those the same sense? • Sense 2: “A financial institution” • Sense 1: “The building belonging to a financial institution” • A polysemous word has related meanings • Most non-rare words have multiple meanings

Metonymy or Systematic Polysemy: A systematic relationship between senses • Lots of types of polysemy are systematic • School, university, hospital • All can mean the institution or the building. • A systematic relationship: • Building ↔ Organization • Other such kinds of systematic polysemy: • Author (Jane Austen wrote Emma) ↔ Works of Author (I love Jane Austen) • Tree (Plums have beautiful blossoms) ↔ Fruit (I ate a preserved plum)

Dan Jurafsky How do we know when a word has more than one sense? • The “zeugma” test: Two senses of serve? • Which flights serve breakfast? • Does Lufthansa serve Philadelphia? • ? Does Lufthansa serve breakfast and San Jose? • Since this conjunction sounds weird, • we say that these are two different senses of “serve”

Synonyms • Words that have the same meaning in some or all contexts. • filbert / hazelnut • couch / sofa • big / large • automobile / car • vomit / throw up • water / H₂O • Two lexemes are synonyms • if they can be substituted for each other in all situations • If so they have the same propositional meaning

Synonyms • But there are few (or no) examples of perfect synonymy. • Even if many aspects of meaning are identical • Still may not preserve the acceptability based on notions of politeness, slang, register, genre, etc. • Example: • Water/H₂O • Big/large • Brave/courageous

Dan Jurafsky Synonymy is a relation between senses rather than words • Consider the words big and large • Are they synonyms? • How big is that plane? • Would I be flying on a large or small plane? • How about here: • Miss Nelson became a kind of big sister to Benjamin. • ? Miss Nelson became a kind of large sister to Benjamin. • Why? • big has a sense that means being older, or grown up • large lacks this sense

Antonyms • Senses that are opposites with respect to one feature of meaning • Otherwise, they are very similar! • dark/light, short/long, hot/cold, up/down, fast/slow, in/out • More formally: antonyms can • define a binary opposition or be at opposite ends of a scale • long/short, fast/slow • Be reversives: • rise/fall, up/down

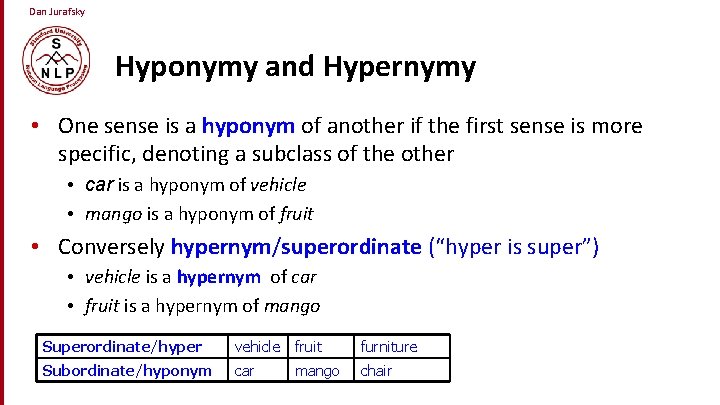

Hyponymy and Hypernymy • One sense is a hyponym of another if the first sense is more specific, denoting a subclass of the other • car is a hyponym of vehicle • mango is a hyponym of fruit • Conversely hypernym/superordinate (“hyper is super”) • vehicle is a hypernym of car • fruit is a hypernym of mango

| Superordinate/hypernym | vehicle | fruit | furniture |
|---|---|---|---|
| Subordinate/hyponym | car | mango | chair |

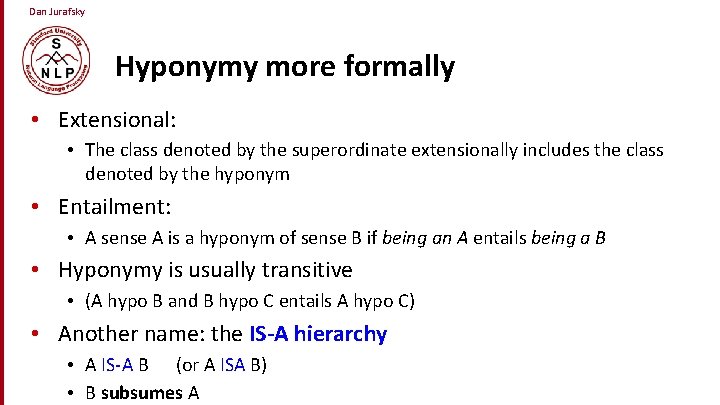

Dan Jurafsky Hyponymy more formally • Extensional: • The class denoted by the superordinate extensionally includes the class denoted by the hyponym • Entailment: • A sense A is a hyponym of sense B if being an A entails being a B • Hyponymy is usually transitive • (A hypo B and B hypo C entails A hypo C) • Another name: the IS-A hierarchy • A IS-A B (or A ISA B) • B subsumes A
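The entailment and transitivity properties above can be sketched with a tiny IS-A table. This is only an illustration: the node "motor vehicle" is a hypothetical intermediate level added to show a two-step chain, and each sense is given a single hypernym, which a real thesaurus does not guarantee.

```python
# Toy IS-A (hypernym) links: hyponym -> hypernym.
# "motor vehicle" is a hypothetical intermediate node, added only to
# demonstrate transitivity; it does not come from the slides.
HYPERNYM = {
    "car": "motor vehicle",
    "motor vehicle": "vehicle",
    "mango": "fruit",
    "chair": "furniture",
}

def hypernym_chain(sense):
    """Return every ancestor of `sense`, nearest first."""
    chain = []
    while sense in HYPERNYM:
        sense = HYPERNYM[sense]
        chain.append(sense)
    return chain

def is_a(a, b):
    """True if a is a (possibly indirect) hyponym of b, i.e. b subsumes a."""
    return b in hypernym_chain(a)

print(hypernym_chain("car"))   # ['motor vehicle', 'vehicle']
print(is_a("car", "vehicle"))  # True: car ISA motor vehicle ISA vehicle
print(is_a("vehicle", "car"))  # False: the relation is not symmetric
```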

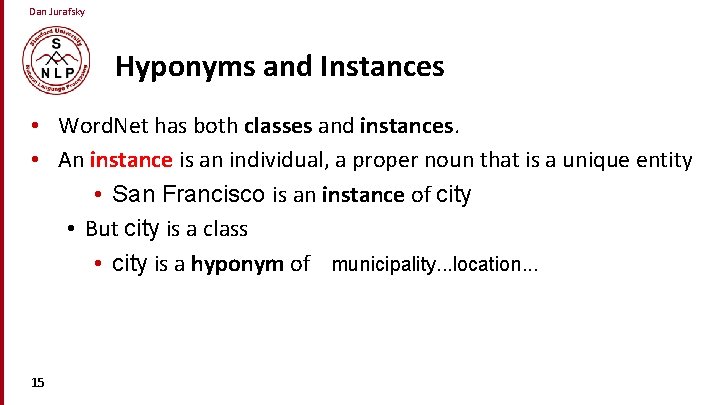

Hyponyms and Instances • WordNet has both classes and instances. • An instance is an individual, a proper noun that is a unique entity • San Francisco is an instance of city • But city is a class • city is a hyponym of municipality ... location ...


Word Meaning and Similarity WordNet and other Online Thesauri

Applications of Thesauri and Ontologies • Information Extraction • Information Retrieval • Question Answering • Bioinformatics and Medical Informatics • Machine Translation

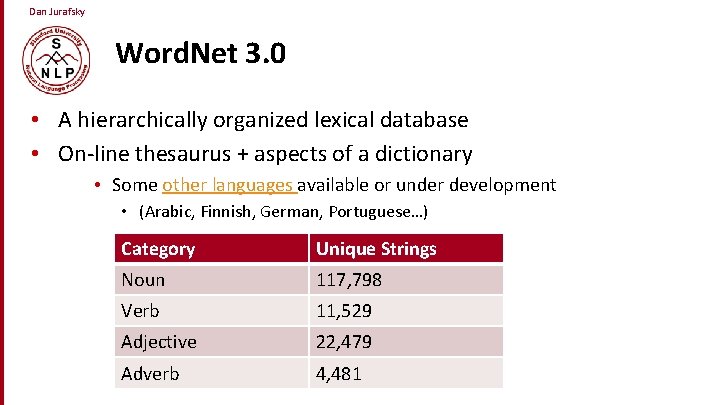

WordNet 3.0 • A hierarchically organized lexical database • On-line thesaurus + aspects of a dictionary • Some other languages available or under development • (Arabic, Finnish, German, Portuguese…)

| Category | Unique Strings |
|---|---|
| Noun | 117,798 |
| Verb | 11,529 |
| Adjective | 22,479 |
| Adverb | 4,481 |

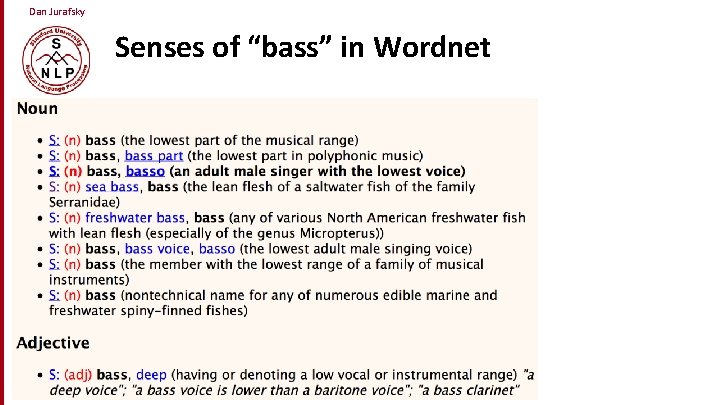

Senses of “bass” in WordNet

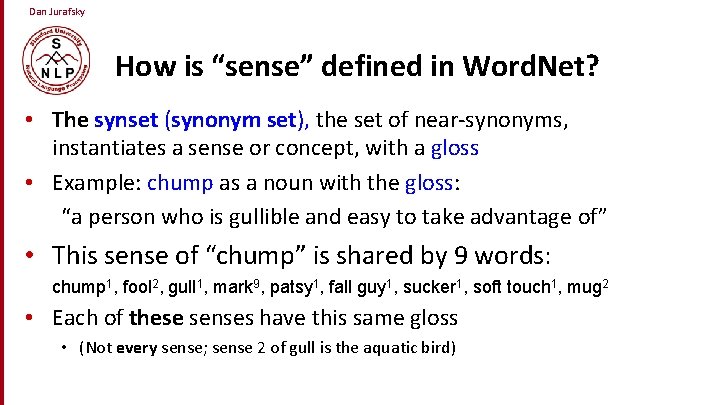

How is “sense” defined in WordNet? • The synset (synonym set), the set of near-synonyms, instantiates a sense or concept, with a gloss • Example: chump as a noun with the gloss: “a person who is gullible and easy to take advantage of” • This sense of “chump” is shared by 9 words: chump¹, fool², gull¹, mark⁹, patsy¹, fall guy¹, sucker¹, soft touch¹, mug² • Each of these senses has this same gloss • (Not every sense; sense 2 of gull is the aquatic bird)

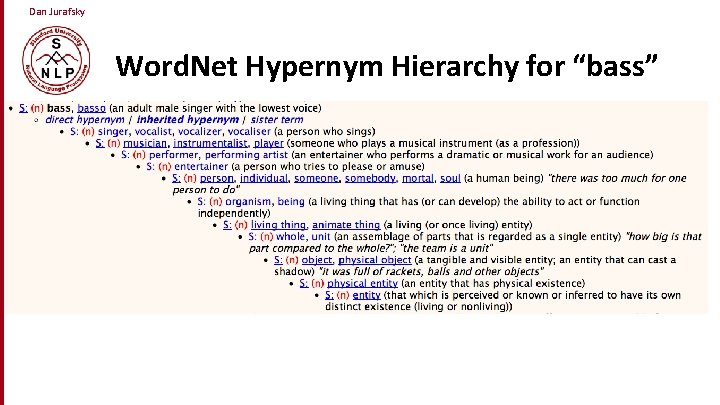

WordNet Hypernym Hierarchy for “bass”

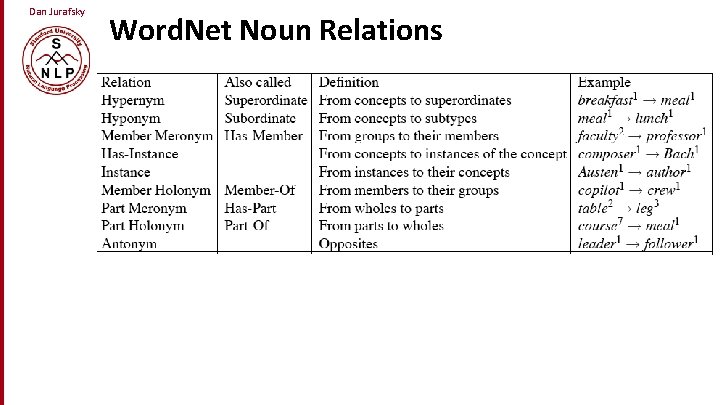

WordNet Noun Relations

WordNet 3.0 • Where it is: • http://wordnetweb.princeton.edu/perl/webwn • Libraries • Python: WordNet from NLTK • http://www.nltk.org/Home • Java: • JWNL, extJWNL on sourceforge

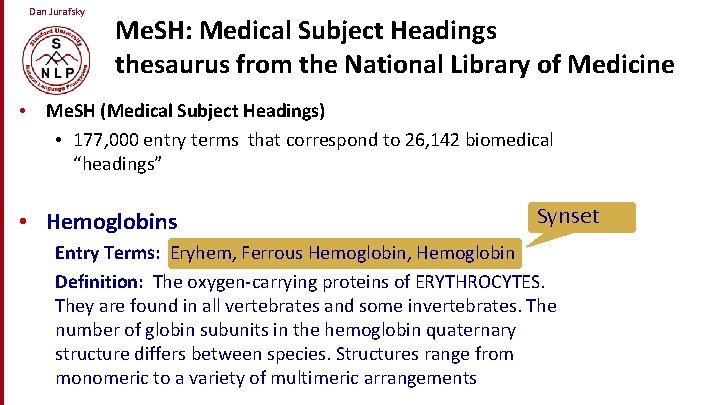

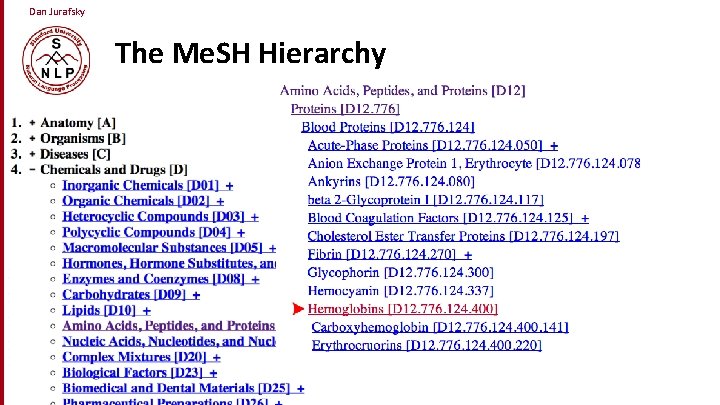

MeSH: Medical Subject Headings thesaurus from the National Library of Medicine • MeSH (Medical Subject Headings) • 177,000 entry terms that correspond to 26,142 biomedical “headings” • Hemoglobins • Entry Terms (the synset): Eryhem, Ferrous Hemoglobin, Hemoglobin • Definition: The oxygen-carrying proteins of ERYTHROCYTES. They are found in all vertebrates and some invertebrates. The number of globin subunits in the hemoglobin quaternary structure differs between species. Structures range from monomeric to a variety of multimeric arrangements

The MeSH Hierarchy

Uses of the MeSH Ontology • Provide synonyms (“entry terms”) • E.g., glucose and dextrose • Provide hypernyms (from the hierarchy) • E.g., glucose ISA monosaccharide • Indexing in MEDLINE/PubMED database • NLM’s bibliographic database: • 20 million journal articles • Each article hand-assigned 10-20 MeSH terms


Word Meaning and Similarity Word Similarity: Thesaurus Methods

Word Similarity • Synonymy: a binary relation • Two words are either synonymous or not • Similarity (or distance): a looser metric • Two words are more similar if they share more features of meaning • Similarity is properly a relation between senses • The word “bank” is not similar to the word “slope” • bank¹ is similar to fund³ • bank² is similar to slope⁵ • But we’ll compute similarity over both words and senses

Why word similarity • Information retrieval • Question answering • Machine translation • Natural language generation • Language modeling • Automatic essay grading • Plagiarism detection • Document clustering

Dan Jurafsky Word similarity and word relatedness • We often distinguish word similarity from word relatedness • Similar words: near-synonyms • Related words: can be related any way • car, bicycle: similar • car, gasoline: related, not similar

Dan Jurafsky Two classes of similarity algorithms • Thesaurus-based algorithms • Are words “nearby” in hypernym hierarchy? • Do words have similar glosses (definitions)? • Distributional algorithms • Do words have similar distributional contexts?

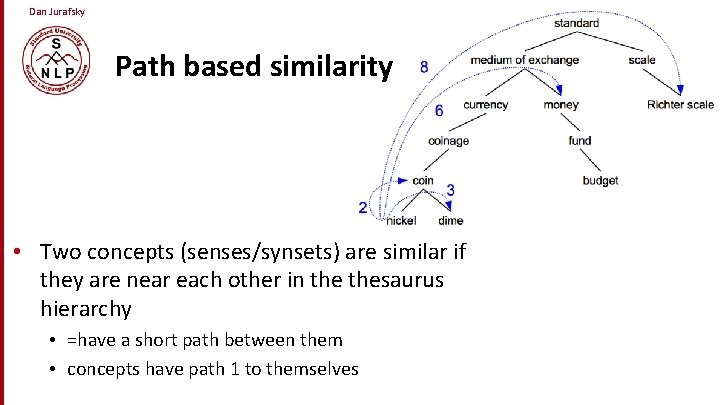

Dan Jurafsky Path based similarity • Two concepts (senses/synsets) are similar if they are near each other in thesaurus hierarchy • =have a short path between them • concepts have path 1 to themselves

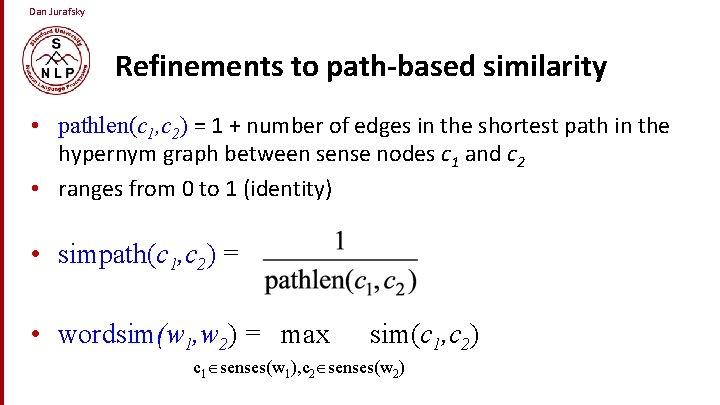

Refinements to path-based similarity • $\text{pathlen}(c_1, c_2)$ = 1 + number of edges in the shortest path in the hypernym graph between sense nodes $c_1$ and $c_2$ • $\text{sim}_{\text{path}}(c_1, c_2) = \dfrac{1}{\text{pathlen}(c_1, c_2)}$ • ranges from 0 to 1 (identity) • $\text{wordsim}(w_1, w_2) = \max\limits_{c_1 \in \text{senses}(w_1),\; c_2 \in \text{senses}(w_2)} \text{sim}(c_1, c_2)$

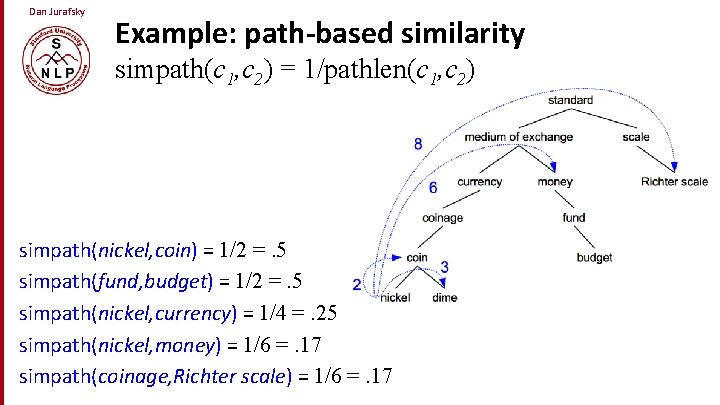

Example: path-based similarity • $\text{sim}_{\text{path}}(c_1, c_2) = 1/\text{pathlen}(c_1, c_2)$ • simpath(nickel, coin) = 1/2 = .5 • simpath(fund, budget) = 1/2 = .5 • simpath(nickel, currency) = 1/4 = .25 • simpath(nickel, money) = 1/6 = .17 • simpath(coinage, Richter scale) = 1/6 = .17
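The path-based measure can be sketched in a few lines. The hierarchy below is an assumption: it is a toy tree reconstructed so that it reproduces the example values quoted above, since the original figure is not part of the text. The function names are illustrative, not a library API.

```python
from collections import deque

# Hypernym links (child -> parent). Assumed toy hierarchy, chosen so that it
# reproduces the example similarities quoted in the text.
PARENT = {
    "medium of exchange": "standard",
    "scale": "standard",
    "currency": "medium of exchange",
    "money": "medium of exchange",
    "coinage": "currency",
    "coin": "coinage",
    "nickel": "coin",
    "dime": "coin",
    "fund": "money",
    "budget": "fund",
    "Richter scale": "scale",
}

def neighbors(node):
    """Nodes exactly one hypernym/hyponym edge away from `node`."""
    out = [child for child, parent in PARENT.items() if parent == node]
    if node in PARENT:
        out.append(PARENT[node])
    return out

def pathlen(c1, c2):
    """1 + number of edges on the shortest path between c1 and c2 (BFS)."""
    seen, queue = {c1}, deque([(c1, 0)])
    while queue:
        node, edges = queue.popleft()
        if node == c2:
            return 1 + edges
        for nxt in neighbors(node):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, edges + 1))
    raise ValueError("no path between the two nodes")

def sim_path(c1, c2):
    return 1 / pathlen(c1, c2)

def word_sim(senses1, senses2):
    """Word-level similarity: the best score over all pairs of senses."""
    return max(sim_path(a, b) for a in senses1 for b in senses2)

for pair in [("nickel", "coin"), ("nickel", "currency"),
             ("nickel", "money"), ("coinage", "Richter scale")]:
    print(pair, round(sim_path(*pair), 2))   # 0.5, 0.25, 0.17, 0.17
```

Because a concept has pathlen 1 to itself, `sim_path(c, c)` is 1, the top of the range.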

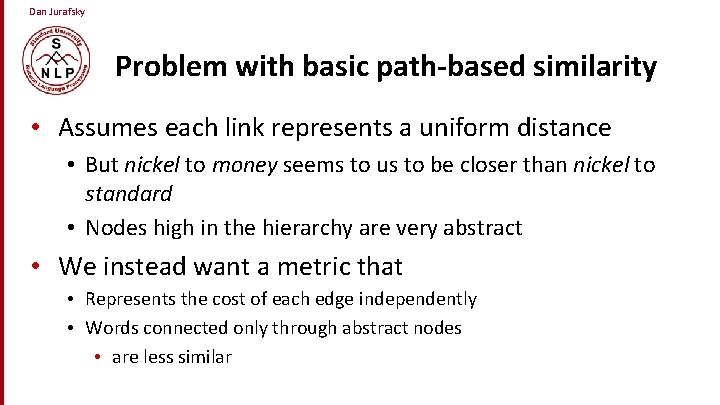

Dan Jurafsky Problem with basic path-based similarity • Assumes each link represents a uniform distance • But nickel to money seems to us to be closer than nickel to standard • Nodes high in the hierarchy are very abstract • We instead want a metric that • Represents the cost of each edge independently • Words connected only through abstract nodes • are less similar

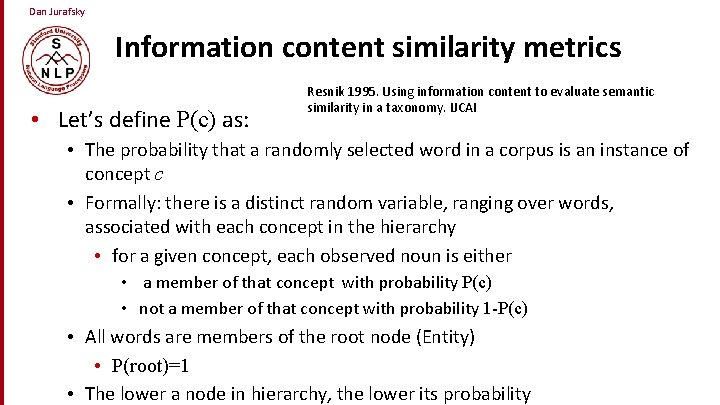

Information content similarity metrics (Resnik 1995. Using information content to evaluate semantic similarity in a taxonomy. IJCAI) • Let’s define P(c) as: • The probability that a randomly selected word in a corpus is an instance of concept c • Formally: there is a distinct random variable, ranging over words, associated with each concept in the hierarchy • for a given concept, each observed noun is either • a member of that concept with probability P(c) • not a member of that concept with probability 1-P(c) • All words are members of the root node (Entity) • P(root)=1 • The lower a node in hierarchy, the lower its probability

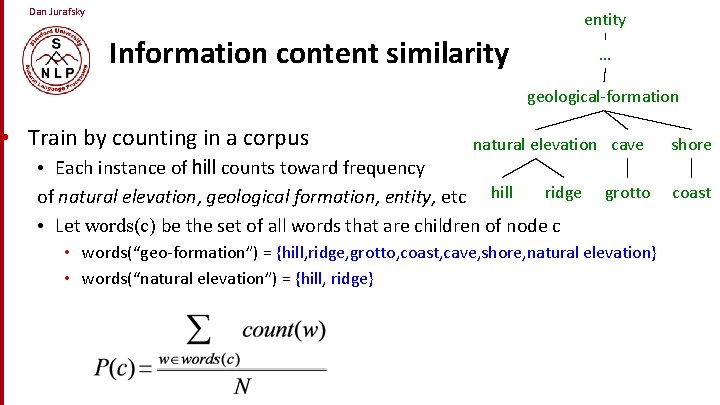

Information content similarity • Train by counting in a corpus • Each instance of hill counts toward the frequency of natural elevation, geological formation, entity, etc. • Let words(c) be the set of all words that are children of node c • words(“geo-formation”) = {hill, ridge, grotto, coast, cave, shore, natural elevation} • words(“natural elevation”) = {hill, ridge} • [Figure: fragment of the hierarchy with the nodes entity, …, geological-formation, natural elevation, cave, shore, hill, ridge, grotto, coast]
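The counting step is usually written as $P(c) = \frac{\sum_{w \in \text{words}(c)} \text{count}(w)}{N}$, where N is the number of noun tokens in the corpus. A minimal sketch follows. The tree shape and every count are hypothetical, and the sketch also credits a node with its own word so that leaves receive a nonzero probability, which is a design choice rather than something stated above.

```python
# Assumed toy taxonomy (child -> parent); the levels between "entity" and
# "geological-formation" are left out.
PARENT = {
    "geological-formation": "entity",
    "natural elevation": "geological-formation",
    "cave": "geological-formation",
    "shore": "geological-formation",
    "hill": "natural elevation",
    "ridge": "natural elevation",
    "grotto": "cave",
    "coast": "shore",
}

# Hypothetical corpus counts; "(other nouns)" stands for the rest of the corpus.
COUNTS = {"hill": 40, "ridge": 10, "grotto": 2, "coast": 30,
          "cave": 8, "shore": 10, "(other nouns)": 900}

def concept_probabilities(parent, counts, root="entity"):
    """P(c): share of corpus tokens that fall at or below concept c."""
    freq = {c: 0 for c in set(parent) | set(parent.values())}
    for word, n in counts.items():
        # Words outside the toy taxonomy are counted for the root only.
        node = word if word in freq else root
        while True:
            freq[node] += n          # credit the node and all its ancestors
            if node == root:
                break
            node = parent[node]
    total = sum(counts.values())
    return {c: f / total for c, f in freq.items()}

P = concept_probabilities(PARENT, COUNTS)
print(P["entity"])                # 1.0
print(P["geological-formation"])  # 0.1
print(P["natural elevation"])     # 0.05
```

The probabilities shrink on the way down the tree, matching the observation that lower nodes are less probable.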

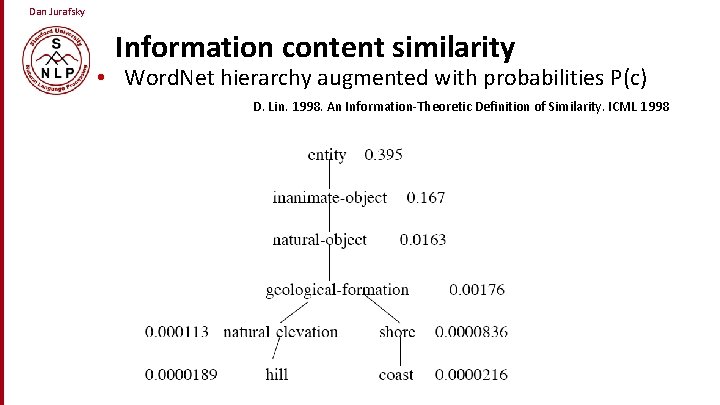

Information content similarity • WordNet hierarchy augmented with probabilities P(c) (D. Lin. 1998. An Information-Theoretic Definition of Similarity. ICML 1998)

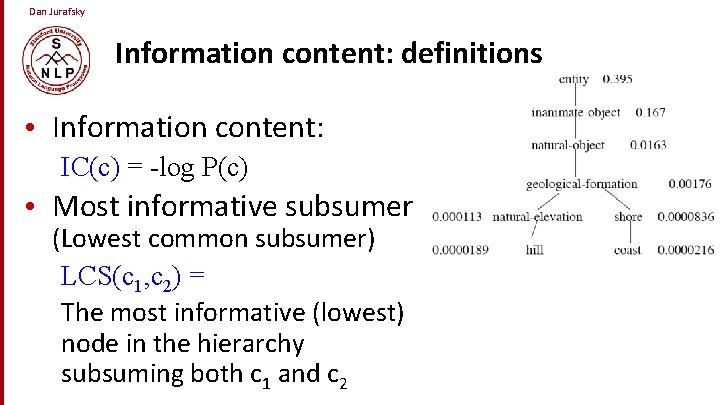

Information content: definitions • Information content: $\text{IC}(c) = -\log P(c)$ • Most informative subsumer (lowest common subsumer): $\text{LCS}(c_1, c_2)$ = the most informative (lowest) node in the hierarchy subsuming both $c_1$ and $c_2$

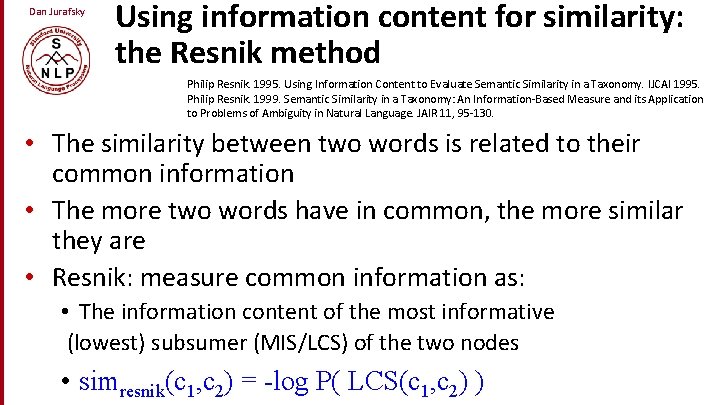

Using information content for similarity: the Resnik method • The similarity between two words is related to their common information • The more two words have in common, the more similar they are • Resnik: measure common information as: • The information content of the most informative (lowest) subsumer (MIS/LCS) of the two nodes • $\text{sim}_{\text{resnik}}(c_1, c_2) = -\log P(\text{LCS}(c_1, c_2))$ • References: Philip Resnik. 1995. Using Information Content to Evaluate Semantic Similarity in a Taxonomy. IJCAI 1995. Philip Resnik. 1999. Semantic Similarity in a Taxonomy: An Information-Based Measure and its Application to Problems of Ambiguity in Natural Language. JAIR 11, 95-130.
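A minimal sketch of the Resnik measure, assuming a single-parent toy taxonomy and hypothetical concept probabilities (neither comes from a real corpus). In a tree whose probabilities grow toward the root, the lowest common subsumer is also the most informative one.

```python
import math

# Assumed toy taxonomy (child -> parent).
PARENT = {
    "geological-formation": "entity",
    "natural elevation": "geological-formation",
    "cave": "geological-formation",
    "shore": "geological-formation",
    "hill": "natural elevation",
    "ridge": "natural elevation",
    "grotto": "cave",
    "coast": "shore",
}

# Hypothetical concept probabilities P(c); they shrink going down the tree.
P = {"entity": 1.0, "geological-formation": 0.1, "natural elevation": 0.05,
     "cave": 0.01, "shore": 0.04, "hill": 0.04, "ridge": 0.01,
     "grotto": 0.002, "coast": 0.03}

def ancestors(c):
    """c itself followed by its hypernyms up to the root."""
    chain = [c]
    while c in PARENT:
        c = PARENT[c]
        chain.append(c)
    return chain

def lcs(c1, c2):
    """Lowest common subsumer: first node above c1 that also subsumes c2."""
    above_c2 = set(ancestors(c2))
    return next(a for a in ancestors(c1) if a in above_c2)

def ic(c):
    """Information content, IC(c) = -log P(c)."""
    return -math.log(P[c])

def sim_resnik(c1, c2):
    return ic(lcs(c1, c2))

print(lcs("hill", "ridge"), round(sim_resnik("hill", "ridge"), 2))
print(lcs("hill", "coast"), round(sim_resnik("hill", "coast"), 2))
```

With these made-up numbers, hill and ridge score higher than hill and coast because their shared subsumer (natural elevation) is rarer than geological-formation.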

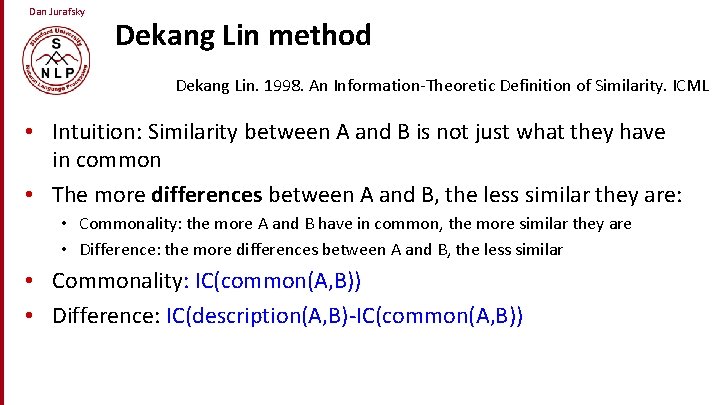

Dekang Lin method (Dekang Lin. 1998. An Information-Theoretic Definition of Similarity. ICML) • Intuition: Similarity between A and B is not just what they have in common • The more differences between A and B, the less similar they are: • Commonality: the more A and B have in common, the more similar they are • Difference: the more differences between A and B, the less similar • Commonality: IC(common(A, B)) • Difference: IC(description(A, B)) - IC(common(A, B))

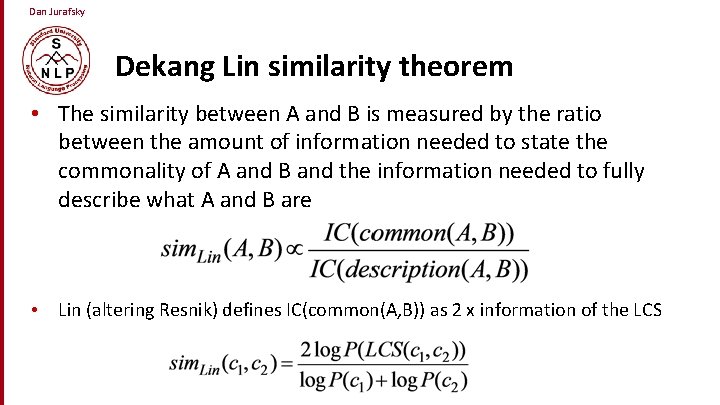

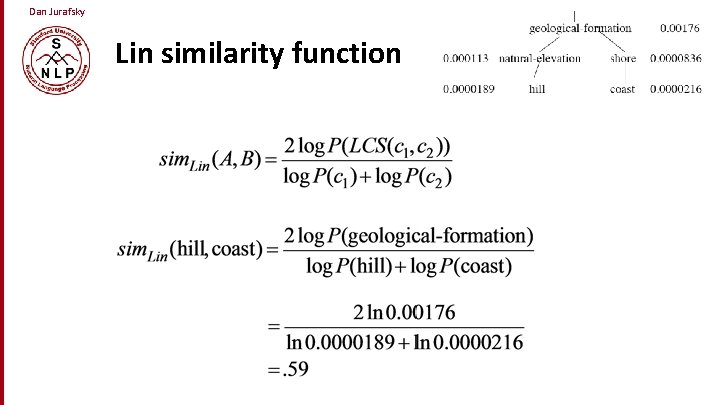

Dekang Lin similarity theorem • The similarity between A and B is measured by the ratio between the amount of information needed to state the commonality of A and B and the information needed to fully describe what A and B are • Lin (altering Resnik) defines IC(common(A, B)) as 2 × the information content of the LCS

Lin similarity function
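Taking the description of a pair to cost IC(c₁) + IC(c₂), the ratio described above is usually written as $\text{sim}_{\text{Lin}}(c_1, c_2) = \dfrac{2 \log P(\text{LCS}(c_1, c_2))}{\log P(c_1) + \log P(c_2)}$. The sketch below evaluates it; the three probabilities are illustrative values assumed for the example, not figures taken from the text above.

```python
import math

def sim_lin(p_c1, p_c2, p_lcs):
    """Lin similarity from the probabilities of two concepts and of their LCS."""
    return 2 * math.log(p_lcs) / (math.log(p_c1) + math.log(p_c2))

# Illustrative probabilities (assumed values for this sketch).
p_hill = 0.0000189
p_coast = 0.0000216
p_geological_formation = 0.00176   # taken here as the LCS of hill and coast

print(round(sim_lin(p_hill, p_coast, p_geological_formation), 2))  # 0.59
```

Unlike the Resnik score, this ratio is normalized: a concept compared with itself scores 1.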

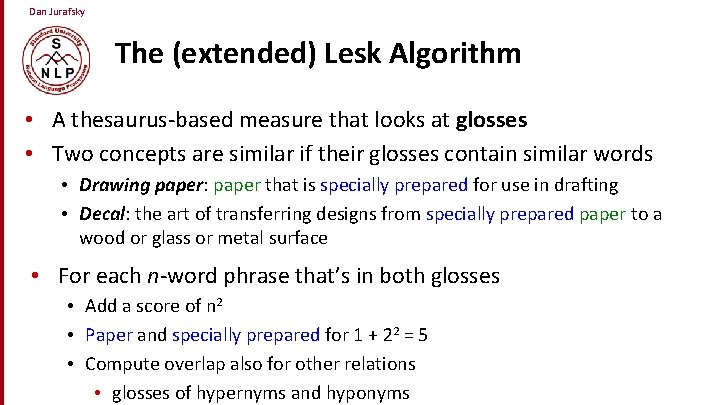

The (extended) Lesk Algorithm • A thesaurus-based measure that looks at glosses • Two concepts are similar if their glosses contain similar words • Drawing paper: paper that is specially prepared for use in drafting • Decal: the art of transferring designs from specially prepared paper to a wood or glass or metal surface • For each n-word phrase that’s in both glosses • Add a score of n² • “paper” and “specially prepared” give 1 + 2² = 5 • Compute overlap also for other relations • glosses of hypernyms and hyponyms
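The gloss-overlap score can be sketched as follows: repeatedly take the longest phrase shared by both glosses, add the square of its length, and blank it out so it is not counted twice. This is a simplified illustration under stated assumptions: whitespace tokenization, no stop-word filtering, and only the two glosses themselves (not those of hypernyms or hyponyms).

```python
def longest_common_phrase(a, b):
    """Return (length, start_in_a, start_in_b) of the longest shared word run."""
    best = (0, 0, 0)
    for i in range(len(a)):
        for j in range(len(b)):
            k = 0
            # None marks words already consumed by an earlier overlap.
            while (i + k < len(a) and j + k < len(b)
                   and a[i + k] is not None and a[i + k] == b[j + k]):
                k += 1
            if k > best[0]:
                best = (k, i, j)
    return best

def overlap_score(gloss1, gloss2):
    """Sum of n**2 over the non-overlapping shared phrases of two glosses."""
    a, b = gloss1.lower().split(), gloss2.lower().split()
    score = 0
    while True:
        n, i, j = longest_common_phrase(a, b)
        if n == 0:
            return score
        score += n * n
        # Replace the matched run with one marker so its neighbours cannot
        # join up into a phrase that never occurred in the gloss.
        a[i:i + n] = [None]
        b[j:j + n] = [None]

drawing_paper = "paper that is specially prepared for use in drafting"
decal = ("the art of transferring designs from specially prepared paper "
         "to a wood or glass or metal surface")
print(overlap_score(drawing_paper, decal))  # 5  (2**2 for "specially prepared" + 1 for "paper")
```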

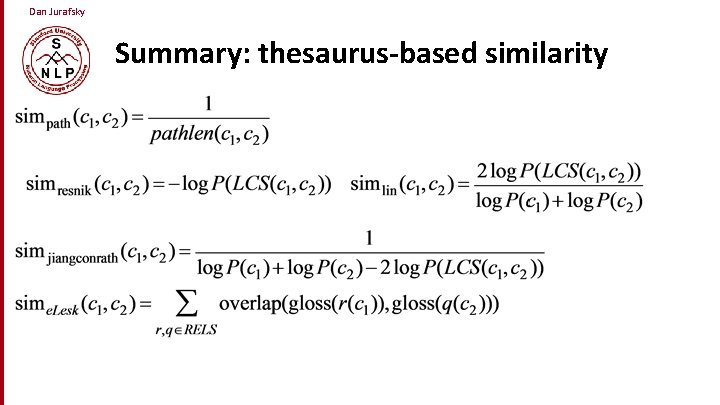

Dan Jurafsky Summary: thesaurus-based similarity

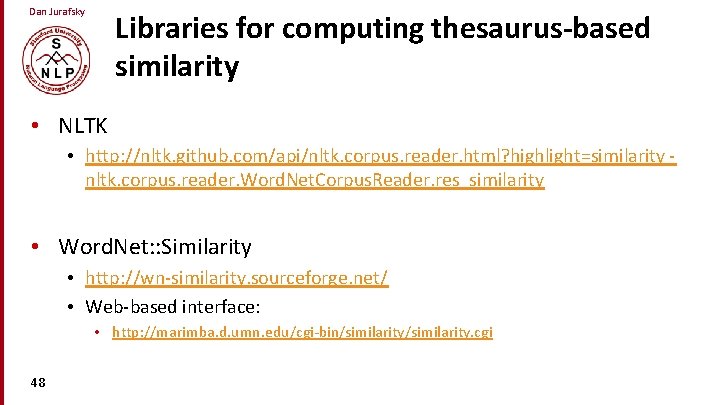

Libraries for computing thesaurus-based similarity • NLTK • http://nltk.github.com/api/nltk.corpus.reader.html?highlight=similarity • nltk.corpus.reader.WordNetCorpusReader.res_similarity • WordNet::Similarity • http://wn-similarity.sourceforge.net/ • Web-based interface: • http://marimba.d.umn.edu/cgi-bin/similarity.cgi

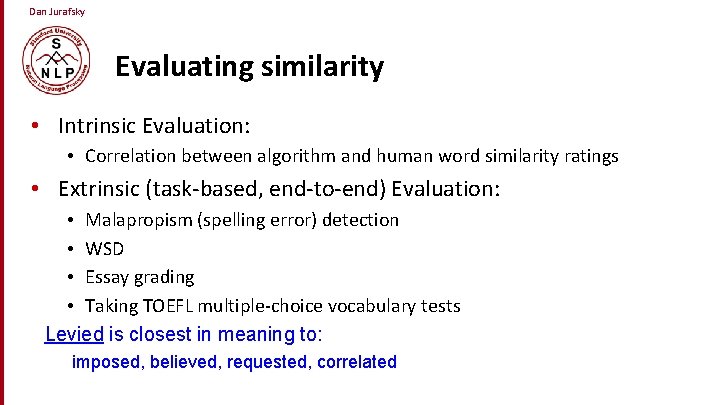

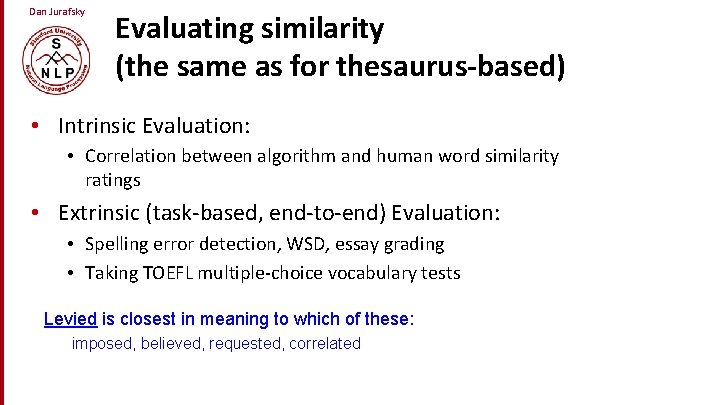

Dan Jurafsky Evaluating similarity • Intrinsic Evaluation: • Correlation between algorithm and human word similarity ratings • Extrinsic (task-based, end-to-end) Evaluation: • Malapropism (spelling error) detection • WSD • Essay grading • Taking TOEFL multiple-choice vocabulary tests Levied is closest in meaning to: imposed, believed, requested, correlated


Word Meaning and Similarity Word Similarity: Distributional Similarity (I)

Problems with thesaurus-based meaning • We don’t have a thesaurus for every language • Even if we do, they have problems with recall • Many words are missing • Most (if not all) phrases are missing • Some connections between senses are missing • Thesauri work less well for verbs, adjectives • Adjectives and verbs have less structured hyponymy relations

Distributional models of meaning • Also called vector-space models of meaning • Offer much higher recall than hand-built thesauri • Although they tend to have lower precision • Zellig Harris (1954): “oculist and eye-doctor … occur in almost the same environments…. If A and B have almost identical environments we say that they are synonyms.” • Firth (1957): “You shall know a word by the company it keeps!”

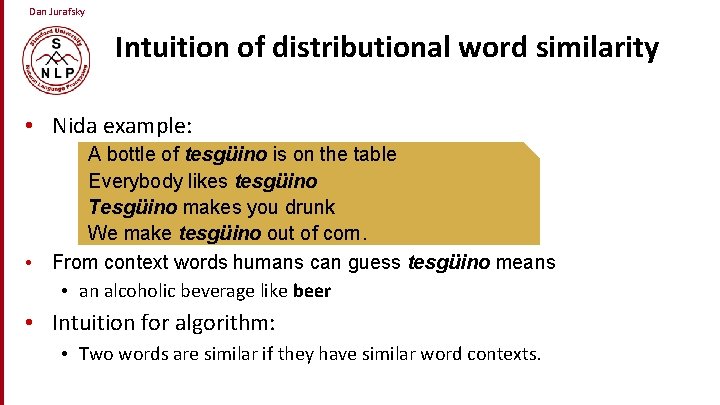

Dan Jurafsky Intuition of distributional word similarity • Nida example: A bottle of tesgüino is on the table Everybody likes tesgüino Tesgüino makes you drunk We make tesgüino out of corn. • From context words humans can guess tesgüino means • an alcoholic beverage like beer • Intuition for algorithm: • Two words are similar if they have similar word contexts.

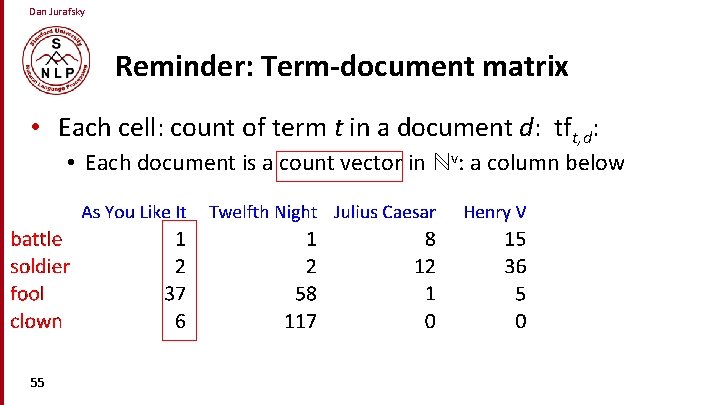

Reminder: Term-document matrix • Each cell: count of term t in a document d: $tf_{t,d}$ • Each document is a count vector in $\mathbb{N}^{v}$: a column below

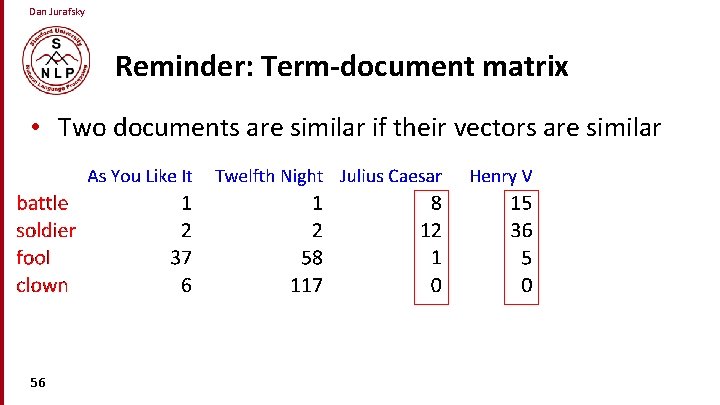

Reminder: Term-document matrix • Two documents are similar if their vectors are similar

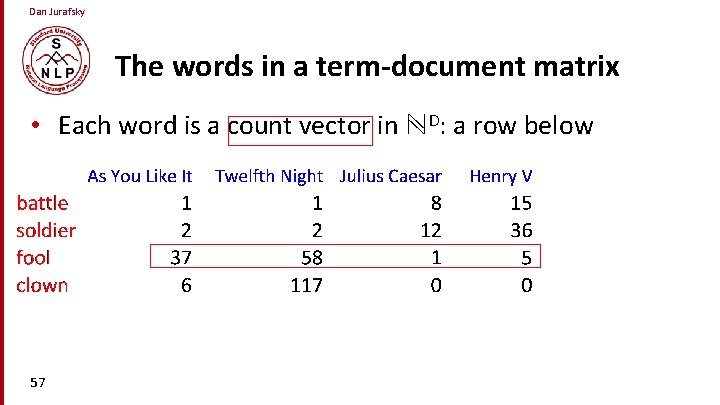

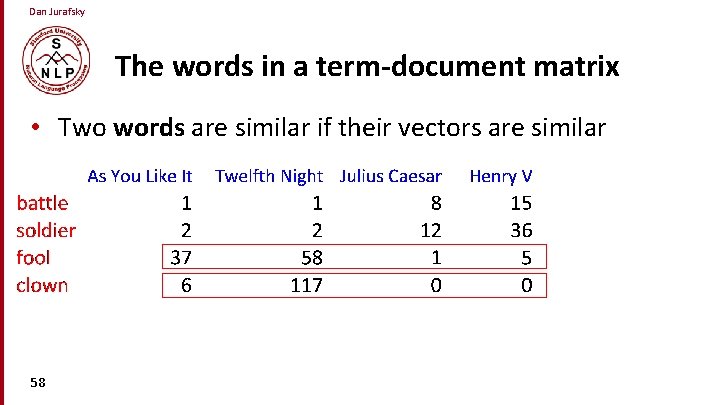

The words in a term-document matrix • Each word is a count vector in $\mathbb{N}^{D}$: a row below

The words in a term-document matrix • Two words are similar if their vectors are similar

The Term-Context matrix • Instead of using entire documents, use smaller contexts • Paragraph • Window of 10 words • A word is now defined by a vector over counts of context words
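A term-context matrix can be built by sliding a window over the token stream and counting neighbours. The sketch below is an illustration only: the one-sentence corpus is hypothetical, and the window of 2 tokens on each side is chosen to keep the output small.

```python
from collections import Counter, defaultdict

def term_context_counts(tokens, window=2):
    """Map each word to a Counter of the words seen within +/- `window` tokens."""
    vectors = defaultdict(Counter)
    for i, word in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:                      # a token is not its own context
                vectors[word][tokens[j]] += 1
    return vectors

text = "a pinch of sugar and a pinch of salt"   # hypothetical toy corpus
vectors = term_context_counts(text.split(), window=2)
print(dict(vectors["pinch"]))
print(dict(vectors["sugar"]))
```

Each `Counter` is one sparse row of the matrix; the columns are the context words.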

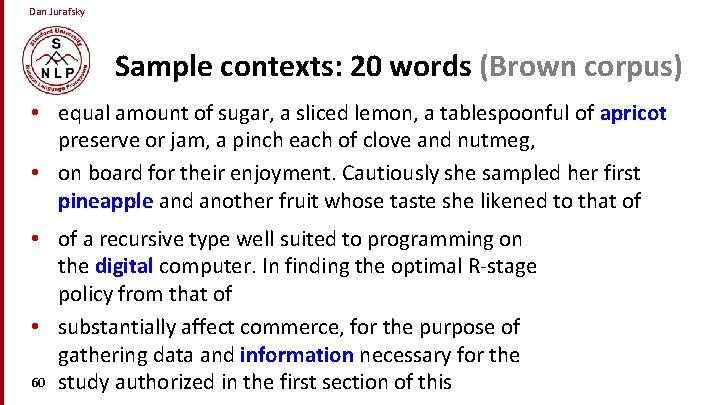

Sample contexts: 20 words (Brown corpus) • equal amount of sugar, a sliced lemon, a tablespoonful of apricot preserve or jam, a pinch each of clove and nutmeg, • on board for their enjoyment. Cautiously she sampled her first pineapple and another fruit whose taste she likened to that of • of a recursive type well suited to programming on the digital computer. In finding the optimal R-stage policy from that of • substantially affect commerce, for the purpose of gathering data and information necessary for the study authorized in the first section of this

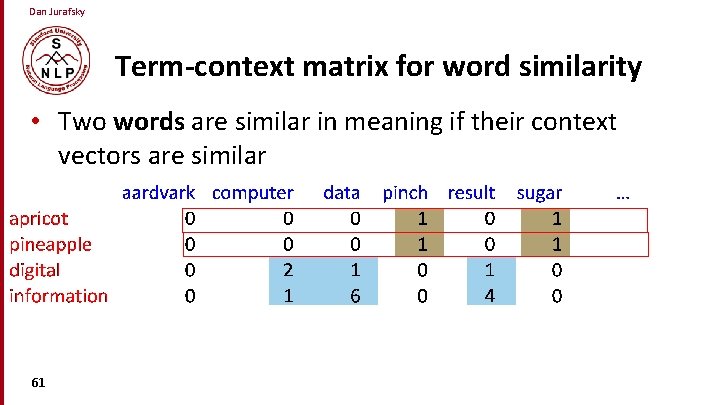

Term-context matrix for word similarity • Two words are similar in meaning if their context vectors are similar

Should we use raw counts? • For the term-document matrix • We used tf-idf instead of raw term counts • For the term-context matrix • Positive Pointwise Mutual Information (PPMI) is common

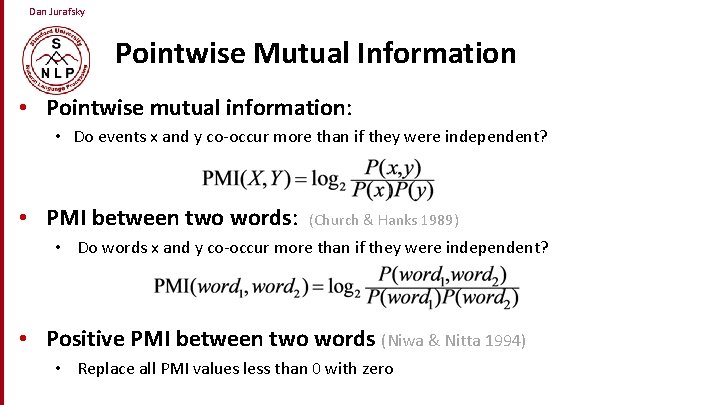

Dan Jurafsky Pointwise Mutual Information • Pointwise mutual information: • Do events x and y co-occur more than if they were independent? • PMI between two words: (Church & Hanks 1989) • Do words x and y co-occur more than if they were independent? • Positive PMI between two words (Niwa & Nitta 1994) • Replace all PMI values less than 0 with zero

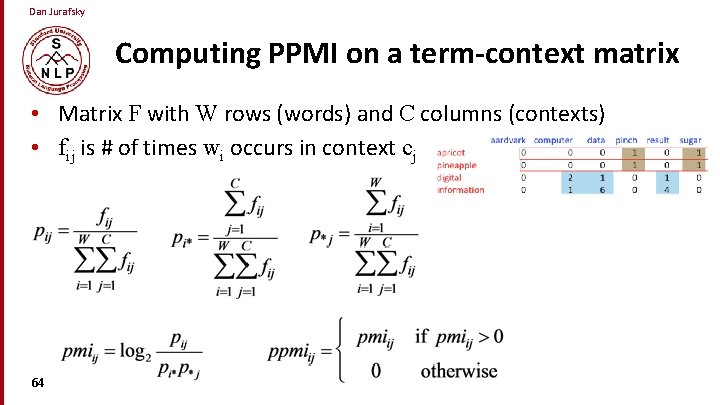

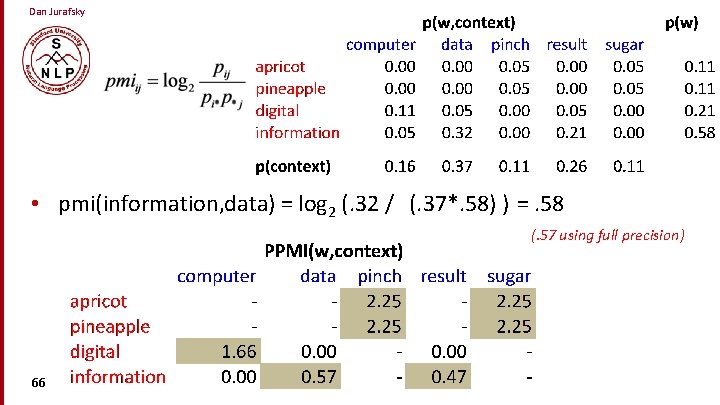

Computing PPMI on a term-context matrix • Matrix F with W rows (words) and C columns (contexts) • $f_{ij}$ is the number of times $w_i$ occurs in context $c_j$

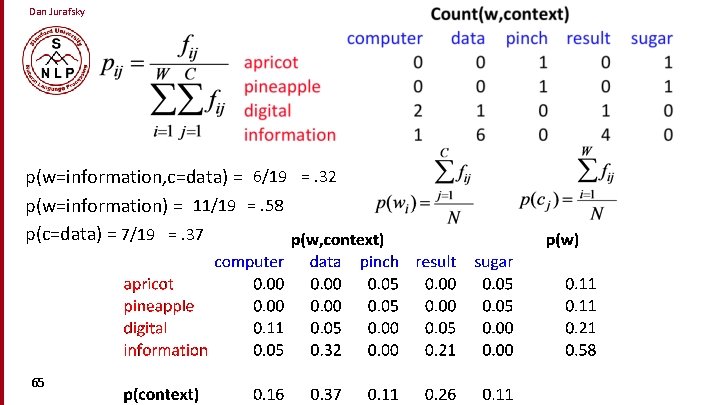

• p(w=information, c=data) = 6/19 = .32 • p(w=information) = 11/19 = .58 • p(c=data) = 7/19 = .37

• pmi(information, data) = $\log_2\big(.32 / (.37 \times .58)\big)$ = .58 (.57 using full precision)
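The calculation above generalizes to every cell, using $\text{PMI}(w, c) = \log_2 \dfrac{p(w, c)}{p(w)\,p(c)}$ and clipping negative values to zero. The count matrix in this sketch is an assumption: it was chosen to be consistent with the totals quoted above (19 co-occurrences overall, 11 for information, 7 for data, 6 for the pair), because the original figure is not reproduced in the text.

```python
import math

contexts = ["computer", "data", "pinch", "result", "sugar"]
# Assumed co-occurrence counts, consistent with the totals quoted in the text.
counts = {
    "apricot":     [0, 0, 1, 0, 1],
    "pineapple":   [0, 0, 1, 0, 1],
    "digital":     [2, 1, 0, 1, 0],
    "information": [1, 6, 0, 4, 0],
}

def ppmi_matrix(counts):
    """Positive PMI for every (word, context) cell of a count matrix."""
    total = sum(sum(row) for row in counts.values())
    p_word = {w: sum(row) / total for w, row in counts.items()}
    n_ctx = len(next(iter(counts.values())))
    p_ctx = [sum(row[j] for row in counts.values()) / total
             for j in range(n_ctx)]
    ppmi = {}
    for w, row in counts.items():
        ppmi[w] = []
        for j, f in enumerate(row):
            if f == 0:
                ppmi[w].append(0.0)   # log of 0 is undefined; PPMI uses 0
            else:
                pmi = math.log2((f / total) / (p_word[w] * p_ctx[j]))
                ppmi[w].append(max(pmi, 0.0))   # negative PMI is clipped to 0
    return ppmi

ppmi = ppmi_matrix(counts)
print(round(ppmi["information"][contexts.index("data")], 2))  # 0.57
```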

Weighting PMI • PMI is biased toward infrequent events • Various weighting schemes help alleviate this • See Turney and Pantel (2010) • Add-one smoothing can also help

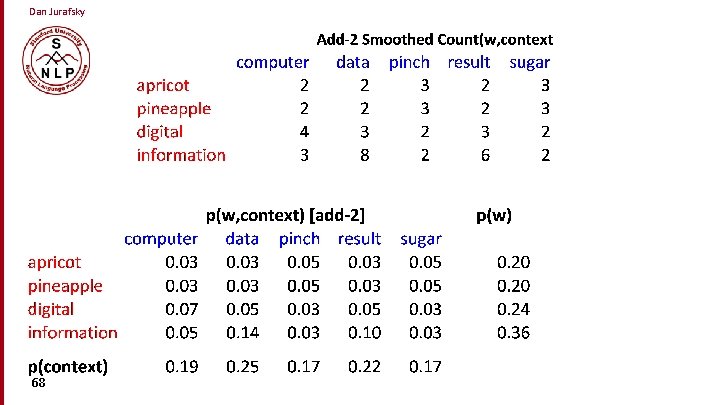

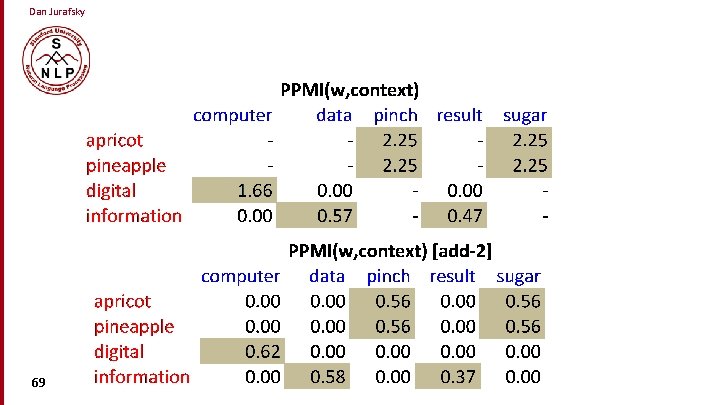



Word Meaning and Similarity Word Similarity: Distributional Similarity (II)

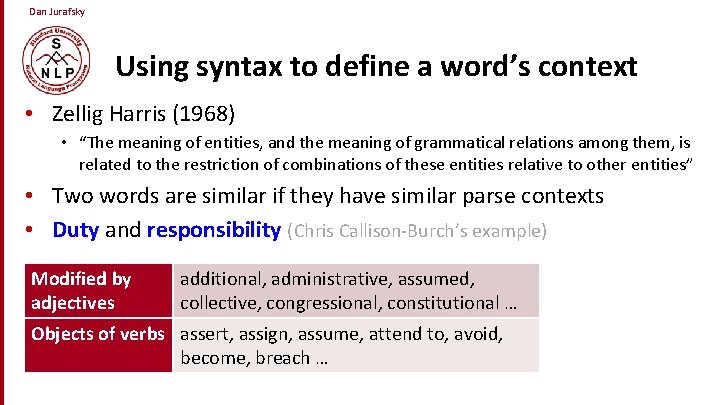

Using syntax to define a word’s context • Zellig Harris (1968) • “The meaning of entities, and the meaning of grammatical relations among them, is related to the restriction of combinations of these entities relative to other entities” • Two words are similar if they have similar parse contexts • Duty and responsibility (Chris Callison-Burch’s example) • Modified by adjectives: additional, administrative, assumed, collective, congressional, constitutional … • Objects of verbs: assert, assign, assume, attend to, avoid, become, breach …

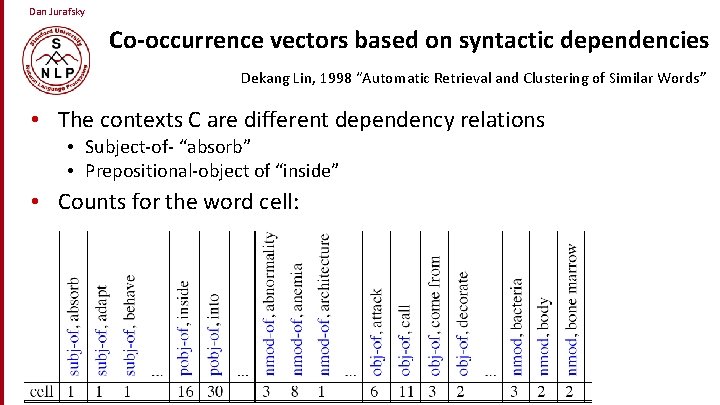

Co-occurrence vectors based on syntactic dependencies (Dekang Lin, 1998. “Automatic Retrieval and Clustering of Similar Words”) • The contexts C are different dependency relations • Subject-of “absorb” • Prepositional-object of “inside” • Counts for the word cell:

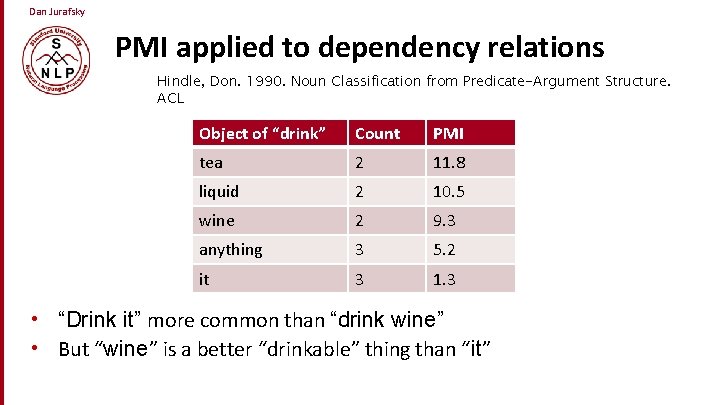

PMI applied to dependency relations (Hindle, Don. 1990. Noun Classification from Predicate-Argument Structure. ACL)

| Object of “drink” | Count | PMI |
|---|---|---|
| tea | 2 | 11.8 |
| liquid | 2 | 10.5 |
| wine | 2 | 9.3 |
| anything | 3 | 5.2 |
| it | 3 | 1.3 |

• “Drink it” more common than “drink wine” • But “wine” is a better “drinkable” thing than “it”

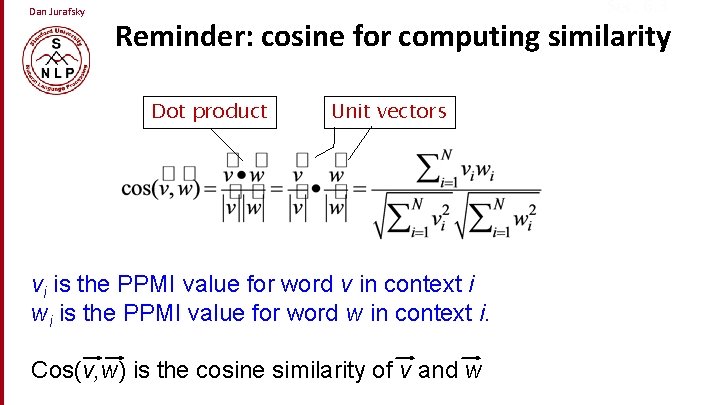

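Sec. 6.3 Reminder: cosine for computing similarity • $\cos(\vec v, \vec w) = \dfrac{\vec v \cdot \vec w}{|\vec v|\,|\vec w|} = \dfrac{\vec v}{|\vec v|} \cdot \dfrac{\vec w}{|\vec w|} = \dfrac{\sum_{i=1}^{N} v_i w_i}{\sqrt{\sum_{i=1}^{N} v_i^2}\,\sqrt{\sum_{i=1}^{N} w_i^2}}$, that is, the dot product of the two unit vectors • $v_i$ is the PPMI value for word v in context i • $w_i$ is the PPMI value for word w in context i • cos(v, w) is the cosine similarity of v and w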

Cosine as a similarity metric • -1: vectors point in opposite directions • +1: vectors point in the same direction • 0: vectors are orthogonal • Raw frequency and PPMI values are nonnegative, so the cosine ranges from 0 to 1

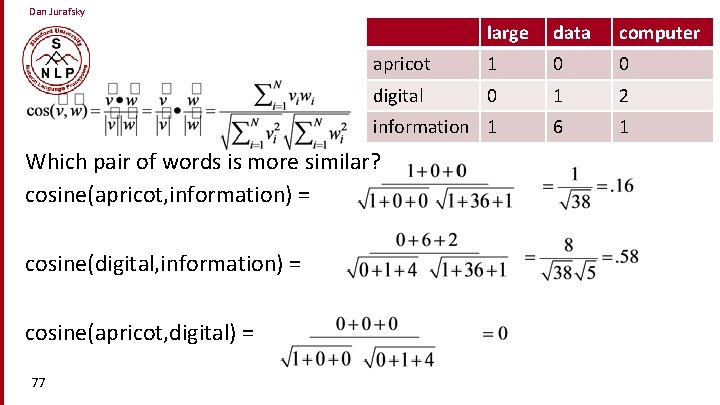

Which pair of words is more similar?

| | large | data | computer |
|---|---|---|---|
| apricot | 1 | 0 | 0 |
| digital | 0 | 1 | 2 |
| information | 1 | 6 | 1 |

• cosine(apricot, information) = 1/√38 ≈ .16 • cosine(digital, information) = 8/(√5 · √38) ≈ .58 • cosine(apricot, digital) = 0
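The three cosines can be checked with a short script. It uses the count vectors from the table above; the helper name `cosine` is illustrative and not taken from any library.

```python
import math

# Rows of the table above, over the contexts (large, data, computer).
vectors = {
    "apricot":     [1, 0, 0],
    "digital":     [0, 1, 2],
    "information": [1, 6, 1],
}

def cosine(v, w):
    """Dot product of v and w divided by the product of their lengths."""
    dot = sum(a * b for a, b in zip(v, w))
    norm_v = math.sqrt(sum(a * a for a in v))
    norm_w = math.sqrt(sum(b * b for b in w))
    return dot / (norm_v * norm_w)

for a, b in [("apricot", "information"),
             ("digital", "information"),
             ("apricot", "digital")]:
    print(a, b, round(cosine(vectors[a], vectors[b]), 2))  # 0.16, 0.58, 0.0
```

On these counts, digital and information are the most similar pair, and apricot and digital share no context at all.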

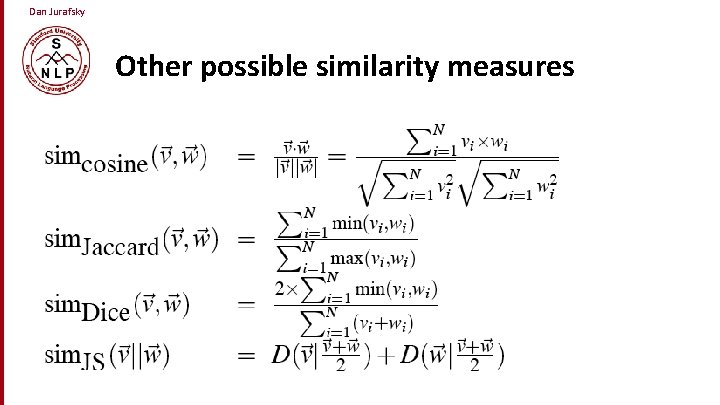

Dan Jurafsky Other possible similarity measures

Dan Jurafsky Evaluating similarity (the same as for thesaurus-based) • Intrinsic Evaluation: • Correlation between algorithm and human word similarity ratings • Extrinsic (task-based, end-to-end) Evaluation: • Spelling error detection, WSD, essay grading • Taking TOEFL multiple-choice vocabulary tests Levied is closest in meaning to which of these: imposed, believed, requested, correlated


From Wikipedia, the free encyclopedia

In linguistics, a word sense is one of the meanings of a word. For example, a dictionary may have over 50 different senses of the word «play», each of these having a different meaning based on the context of the word’s usage in a sentence, as follows:

We went to see the play Romeo and Juliet at the theater.

The coach devised a great play that put the visiting team on the defensive.

The children went out to play in the park.

In each sentence different collocates of «play» signal its different meanings.

People and computers, as they read words, must use a process called word-sense disambiguation[1][2] to reconstruct the likely intended meaning of a word. This process uses context to narrow the possible senses down to the probable ones. The context includes such things as the ideas conveyed by adjacent words and nearby phrases, the known or probable purpose and register of the conversation or document, and the orientation (time and place) implied or expressed. The disambiguation is thus context-sensitive.

Advanced semantic analysis has resulted in a sub-distinction. A word sense corresponds either neatly to a seme (the smallest possible unit of meaning) or a sememe (larger unit of meaning), and polysemy of a word or phrase is the property of having multiple semes or sememes and thus multiple senses.

Relations between senses

Often the senses of a word are related to each other within a semantic field. A common pattern is that one sense is broader and another narrower. This is often the case in technical jargon, where the target audience uses a narrower sense of a word that a general audience would tend to take in its broader sense. For example, in casual use «orthography» will often be glossed for a lay audience as «spelling», but in linguistic usage «orthography» (comprising spelling, casing, spacing, hyphenation, and other punctuation) is a hypernym of «spelling». Besides jargon, however, the pattern is common even in general vocabulary. Examples are the variation in senses of the term «wood wool» and in those of the word «bean». This pattern entails that natural language can often lack explicitness about hyponymy and hypernymy. Much more than programming languages do, it relies on context instead of explicitness; meaning is implicit within a context. Common examples are as follows:

- The word «diabetes» without further specification usually refers to diabetes mellitus.

- The word «angina» without further specification usually refers to angina pectoris.

- The word «tuberculosis» without further specification usually refers to pulmonary tuberculosis.

- The word «emphysema» without further specification usually refers to pulmonary emphysema.

- The word «cervix» without further specification usually refers to the uterine cervix.

Usage labels of «sensu» plus a qualifier, such as «sensu stricto» («in the strict sense») or «sensu lato» («in the broad sense») are sometimes used to clarify what is meant by a text.

Relation to etymology

Polysemy entails a common historic root to a word or phrase. Broad medical terms usually followed by qualifiers, such as those in relation to certain conditions or types of anatomical locations are polysemic, and older conceptual words are with few exceptions highly polysemic (and usually beyond shades of similar meaning into the realms of being ambiguous).

Homonymy is where two separate-root words (lexemes) happen to have the same spelling and pronunciation.

See also

- denotation

- semantics – study of meaning

- lexical semantics – the study of what the words of a language denote and how it is that they do this

- word-sense induction – the task of automatically acquiring the senses of a target word

- word-sense disambiguation – the task of automatically associating a sense with a word in context

- lexical substitution – the task of replacing a word in context with a lexical substitute

- sememe – unit of meaning

- linguistics – the scientific study of language, which can be theoretical or applied.

- sense and reference

- functor – a mathematical term which is the overarching generalization of the intentionality behind the class of transfers of intelligibility at two different levels of analysis.

References

- N. Ide and J. Véronis (1998). «Word Sense Disambiguation: The State of the Art». Computational Linguistics, 24: 1–40.

- R. Navigli (2009). «Word Sense Disambiguation: A Survey». ACM Computing Surveys, 41(2), pp. 1–69.

External links

- «I don’t believe in word senses» – Adam Kilgarriff (1997) – archive

- WordNet(R) – a large lexical database of English words and their meanings maintained by the Princeton Cognitive Science Laboratory

Meaning and Sense: what is the difference between them?

Dear all,

According to a document that I found on the Web, “the term of semantics has the following linked sense in linguistics: the study of the meaning of words and sentences, their denotations, connotations, implications, and ambiguities”. As indicated in the title of this thread, my question is what the difference is between the two words, meaning and sense. What are the meaning and sense of the term “meaning”, and what are the meaning and sense of the term “sense”?

The background of this question: I am reading a book about the theories of political economy, in particular, embracing the so-called linguistic turn in social sciences. And, with no specific explanations of the words, authors use the terms of sense-making and meaning-making. It seems to me that there is a difference between them, but I cannot grasp the difference exactly.

Now, I understand that, in linguistics, there are a lot of “meanings” such as denotative meaning (conceptual, referential meaning, cognitive meaning), connotative meaning (associative meanings of words), affective meaning (emotional meaning), reflective meaning, collocated meaning (combinational meaning), thematic meaning, stylistic meaning. But, I still have no idea of the difference between sense and meaning.

Thank you very much for your replies and discussions.

Sociologist

Presentation on theme: «Word Meaning and Similarity Word Senses and Word Relations.»— Presentation transcript:

1

Word Meaning and Similarity Word Senses and Word Relations

2

Reminder: lemma and wordform • A lemma or citation form • Same stem, part of speech, rough semantics • A wordform • The “inflected” word as it appears in text

| Wordform | Lemma |
|---|---|
| banks | bank |
| sung | sing |
| duermes | dormir |

3

Lemmas have senses • One lemma “bank” can have many meanings: • Sense 1: “…a bank¹ can hold the investments in a custodial account…” • Sense 2: “…as agriculture burgeons on the east bank² the river will shrink even more” • Sense (or word sense) • A discrete representation of an aspect of a word’s meaning. • The lemma bank here has two senses

4

Homonymy • Homonyms: words that share a form but have unrelated, distinct meanings: • 1. Homographs (bank/bank, bat/bat): same spelling, same or different pronunciation, different meaning • bank¹: financial institution, bank²: sloping land • bat¹: club for hitting a ball, bat²: nocturnal flying mammal • bass¹ ( بيس ): stringed instrument, bass² ( باس ): fish • 2. Homophones: different spelling, same pronunciation, different meaning • write and right • piece and peace

5

Homonymy causes problems for NLP applications • 1. Information retrieval • If a user searches for “bat care”, we do not know whether the user is looking for bat (mammal) or bat (for baseball) • 2. Machine Translation (MT) • bat: translate to (animal) or translate to (baseball) • 3. Text-to-Speech • bass (stringed instrument) vs. bass (fish)

6

Polysemy • 1. I withdrew the money from the bank • 2. The bank was constructed in 1875 out of local red brick. • Are those the same sense? • Sense 1: “A financial institution” • Sense 2: “The building belonging to a financial institution” • A polysemous word has related meanings • bank is a polysemous word • Most non-rare words have multiple meanings

7

Metonymy or Systematic Polysemy: A systematic relationship between senses • Lots of types of polysemy are systematic • School, university, hospital • All can mean the institution or the building. • A systematic relationship: • Building ↔ Institution • Other such kinds of systematic polysemy: • Author (Jane Austen wrote Emma) ↔ Works of Author (I love Jane Austen) • Tree (Plums have beautiful blossoms) ↔ Fruit (I ate a preserved plum)

8

How do we know when a word has more than one sense? • The “zeugma” test: Two senses of serve? • Which flights serve breakfast? • Does Lufthansa serve Philadelphia? • The “zeugma” test says: form a sentence that uses both senses of “serve” above and see how bad it sounds. So let us write the following sentence: • ? Does Lufthansa serve breakfast and Philadelphia? • Since this conjunction sounds weird, we say that these are two different senses of “serve”

9

Synonyms • Words that have the same meaning in some or all contexts. • couch / sofa • big / large • automobile / car • vomit / throw up • Two lexemes are perfect synonyms if they can be substituted for each other in all situations

10

Synonyms • But there are few (or no) examples of perfect synonymy. • Even if many aspects of meaning are identical • Still may not preserve the acceptability based on notions of politeness, slang, register, genre, etc. • Example: • Water/H₂O • Big/large (let us see whether they are perfect synonyms or not) • Brave/courageous

11

Synonymy is a relation between senses rather than words • Consider the words big and large • Are they synonyms? Yes: • How big is that plane? • How large is that plane? • How about here? No: • Miss Nelson became a kind of big sister to Benjamin. • ? Miss Nelson became a kind of large sister to Benjamin. • They are synonyms but not perfect synonyms • Why not perfect synonyms? • big has a sense that means being older, or grown up • large lacks this sense

12

Antonyms • Senses that are opposites with respect to one feature of meaning • dark/light, short/long, fast/slow, rise/fall, hot/cold, up/down • More formally: antonyms can • be at opposite ends of a scale: long/short, fast/slow • be reversives (opposite directions): rise/fall, up/down

13

Hyponymy and Hypernymy • One sense is a hyponym (“hypo is sub”) of another if the first sense is more specific, denoting a subclass of the other. • Examples: car is a hyponym of vehicle • mango is a hyponym of fruit • Conversely hypernym/superordinate (“hyper is super”) • Examples: vehicle is a hypernym of car • fruit is a hypernym of mango

| Superordinate/hypernym | vehicle | fruit | furniture |
|---|---|---|---|
| Subordinate/hyponym | car | mango | chair |

14

Dan Jurafsky Hyponymy more formally Extensional: The class denoted by the superordinate extensionally includes the class denoted by the hyponym Entailment: A sense A is a hyponym of sense B if being an A entails being a B Hyponymy is usually transitive (A hypo B and B hypo C entails A hypo C) Another name: the IS-A hierarchy A IS-A B (or A ISA B) B subsumes A

15

Hyponyms and Instances • WordNet has both classes and instances. • An instance is an individual, a proper noun that is a unique entity • San Francisco is an instance of city • But city is a class • city is a hyponym of … location …

16

Word Meaning and Similarity Word Similarity: Thesaurus Methods

17

Dan Jurafsky Word Similarity Synonymy: a binary relation Two words are either synonymous or not Similarity (or distance): a looser metric Two words are more similar if they share more features of meaning Similarity is properly a relation between senses The word “ bank ” is not similar to the word “ slope ” Bank 1 is similar to fund 3 Bank 2 is similar to slope 5 But we’ll compute similarity over both words and senses

18

Dan Jurafsky Why word similarity Information retrieval Question answering Machine translation Natural language generation Language modeling Automatic essay grading Plagiarism detection Document clustering

19

Dan Jurafsky Word similarity and word relatedness We often distinguish word similarity from word relatedness Similar words: near-synonyms Related words: can be related any way car, bicycle : similar car, gasoline : related, not similar

20

Dan Jurafsky Two classes of similarity algorithms Thesaurus-based algorithms Are words “nearby” in hypernym hierarchy? Do words have similar glosses (definitions)? Distributional algorithms Do words have similar distributional contexts?

21

Dan Jurafsky Path based similarity Two concepts (senses/synsets) are similar if they are near each other in the thesaurus hierarchy = have a short path between them concepts have path 1 to themselves

22

Refinements to path-based similarity • $\text{pathlen}(c_1, c_2)$ = 1 + number of edges in the shortest path in the hypernym graph between sense nodes $c_1$ and $c_2$ • $\text{sim}_{\text{path}}(c_1, c_2) = \dfrac{1}{\text{pathlen}(c_1, c_2)}$ • ranges from 0 to 1 (identity) • $\text{wordsim}(w_1, w_2) = \max\limits_{c_1 \in \text{senses}(w_1),\; c_2 \in \text{senses}(w_2)} \text{sim}(c_1, c_2)$

23

Example: path-based similarity • $\text{sim}_{\text{path}}(c_1, c_2) = 1/\text{pathlen}(c_1, c_2)$ • simpath(nickel, coin) = 1/2 = .5 • simpath(fund, budget) = 1/2 = .5 • simpath(nickel, currency) = 1/4 = .25 • simpath(nickel, money) = 1/6 = .17 • simpath(coinage, Richter scale) = 1/6 = .17

Word meaning

Word meaning noun — The accepted meaning of a word.

Word sense

Word sense noun — The accepted meaning of a word.

Mutual synonyms
