The Word Error Rate (short: WER) is a way to measure performance of an ASR. It compares a reference to an hypothesis and is defined like this:

$$mathit{WER} = frac{S+D+I}{N}$$

where

- S is the number of substitutions,

- D is the number of deletions,

- I is the number of insertions and

- N is the number of words in the reference

Examples

REF: What a bright day HYP: What a day

In this case, a deletion happened. «Bright» was deleted by the ASR.

REF: What a day HYP: What a bright day

In this case, an insertion happened. «Bright» was inserted by the ASR.

REF: What a bright day HYP: What a light day

In this case, an substitution happened. «Bright» was substituted by «light» by

the ASR.

Range of values

As only addition and division with non-negative

numbers happen, WER cannot get negativ. It is 0 exactly when the hypothesis is

the same as the reference.

WER can get arbitrary large, because the ASR can insert an arbitrary amount of

words.

Interestingly, the WER is just the Levenshtein distance for words.

I’ve understood it after I saw this on the German Wikipedia:

begin{align}

m &= |r|\

n &= |h|\

end{align}

begin{align}

D_{0, 0} &= 0\

D_{i, 0} &= i, 1 leq i leq m\

D_{0, j} &= j, 1 leq j leq n

end{align}

$$

text{For } 1 leq ileq m, 1leq j leq n\

D_{i, j} = min begin{cases}

D_{i — 1, j — 1}&+ 0 {rm if} u_i = v_j\

D_{i — 1, j — 1}&+ 1 {rm(Replacement)} \

D_{i, j — 1}&+ 1 {rm(Insertion)} \

D_{i — 1, j}&+ 1 {rm(Deletion)}

end{cases}

$$

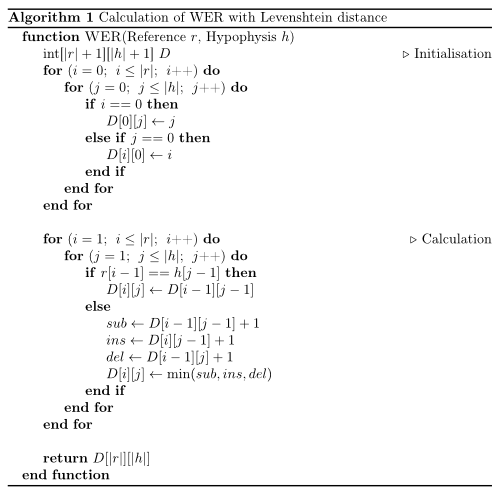

But I have written a piece of pseudocode to make it even easier to code this algorithm:

Python

#!/usr/bin/env python

def wer(r, h):

"""

Calculation of WER with Levenshtein distance.

Works only for iterables up to 254 elements (uint8).

O(nm) time ans space complexity.

Parameters

----------

r : list

h : list

Returns

-------

int

Examples

--------

>>> wer("who is there".split(), "is there".split())

1

>>> wer("who is there".split(), "".split())

3

>>> wer("".split(), "who is there".split())

3

"""

# initialisation

import numpy

d = numpy.zeros((len(r) + 1) * (len(h) + 1), dtype=numpy.uint8)

d = d.reshape((len(r) + 1, len(h) + 1))

for i in range(len(r) + 1):

for j in range(len(h) + 1):

if i == 0:

d[0][j] = j

elif j == 0:

d[i][0] = i

# computation

for i in range(1, len(r) + 1):

for j in range(1, len(h) + 1):

if r[i - 1] == h[j - 1]:

d[i][j] = d[i - 1][j - 1]

else:

substitution = d[i - 1][j - 1] + 1

insertion = d[i][j - 1] + 1

deletion = d[i - 1][j] + 1

d[i][j] = min(substitution, insertion, deletion)

return d[len(r)][len(h)]

if __name__ == "__main__":

import doctest

doctest.testmod()

Explanation

No matter at what stage of the code you are, the following is always true:

- If

r[i]equalsh[j]you don’t have to change anything. The error will be the same as it was forr[:i-1]andh[:j-1] - If its a substitution, you have the same number of errors as you had before when comparing the

r[:i-1]andh[:j-1] - If it was an insertion, then the hypothesis will be longer than the reference. So you can delete one from the hypothesis and compare the rest. As this is the other way around for deletion, you don’t have to worry when you have to delete something.

Word Error Rate (WER) and Word Recognition Rate (WRR) with Python

“WAcc(WRR) and WER as defined above are, the de facto standard most often used in speech recognition.”

WER has been developed and is used to check a speech recognition’s engine accuracy. It works by calculating the distance between the engine’s reults — called the hypothesis — and the real text — called the reference.

The distance function is based on the Levenshtein Distance (for finding the edit distance between words). The WER, like the Levenshtein distance, defines the distance by the amount of minimum operations that has to been done for getting from the reference to the hypothesis. Unlike the Levenshtein distance, however, the operations are on words and not on individual characters. The possible operations are:

Deletion: A word was deleted. A word was deleted from the reference.

Insertion: A word was added. An aligned word from the hypothesis was added.

Substitution: A word was substituted. A word from the reference was substituted with an aligned word from the hypothesis.

Also unlike the Levenshtein distance, the WER counts the deletions, insertion and substitutions done, instead of just summing up the penalties. To do that, we’ll have to first create the table for the Levenshtein distance algorithm, and then backtrace in it through the shortest route to [0,0], counting the operations on the way.

Then, we’ll use the formula to calculate the WER:

From this, the code is self explanatory:

def wer(ref, hyp ,debug=False):

r = ref.split()

h = hyp.split()

#costs will holds the costs, like in the Levenshtein distance algorithm

costs = [[0 for inner in range(len(h)+1)] for outer in range(len(r)+1)]

# backtrace will hold the operations we've done.

# so we could later backtrace, like the WER algorithm requires us to.

backtrace = [[0 for inner in range(len(h)+1)] for outer in range(len(r)+1)]

OP_OK = 0

OP_SUB = 1

OP_INS = 2

OP_DEL = 3

DEL_PENALTY=1 # Tact

INS_PENALTY=1 # Tact

SUB_PENALTY=1 # Tact

# First column represents the case where we achieve zero

# hypothesis words by deleting all reference words.

for i in range(1, len(r)+1):

costs[i][0] = DEL_PENALTY*i

backtrace[i][0] = OP_DEL

# First row represents the case where we achieve the hypothesis

# by inserting all hypothesis words into a zero-length reference.

for j in range(1, len(h) + 1):

costs[0][j] = INS_PENALTY * j

backtrace[0][j] = OP_INS

# computation

for i in range(1, len(r)+1):

for j in range(1, len(h)+1):

if r[i-1] == h[j-1]:

costs[i][j] = costs[i-1][j-1]

backtrace[i][j] = OP_OK

else:

substitutionCost = costs[i-1][j-1] + SUB_PENALTY # penalty is always 1

insertionCost = costs[i][j-1] + INS_PENALTY # penalty is always 1

deletionCost = costs[i-1][j] + DEL_PENALTY # penalty is always 1

costs[i][j] = min(substitutionCost, insertionCost, deletionCost)

if costs[i][j] == substitutionCost:

backtrace[i][j] = OP_SUB

elif costs[i][j] == insertionCost:

backtrace[i][j] = OP_INS

else:

backtrace[i][j] = OP_DEL

# back trace though the best route:

i = len(r)

j = len(h)

numSub = 0

numDel = 0

numIns = 0

numCor = 0

if debug:

print("OPtREFtHYP")

lines = []

while i > 0 or j > 0:

if backtrace[i][j] == OP_OK:

numCor += 1

i-=1

j-=1

if debug:

lines.append("OKt" + r[i]+"t"+h[j])

elif backtrace[i][j] == OP_SUB:

numSub +=1

i-=1

j-=1

if debug:

lines.append("SUBt" + r[i]+"t"+h[j])

elif backtrace[i][j] == OP_INS:

numIns += 1

j-=1

if debug:

lines.append("INSt" + "****" + "t" + h[j])

elif backtrace[i][j] == OP_DEL:

numDel += 1

i-=1

if debug:

lines.append("DELt" + r[i]+"t"+"****")

if debug:

lines = reversed(lines)

for line in lines:

print(line)

print("Ncor " + str(numCor))

print("Nsub " + str(numSub))

print("Ndel " + str(numDel))

print("Nins " + str(numIns))

return (numSub + numDel + numIns) / (float) (len(r))

wer_result = round( (numSub + numDel + numIns) / (float) (len(r)), 3)

return {'WER':wer_result, 'Cor':numCor, 'Sub':numSub, 'Ins':numIns, 'Del':numDel}

# Run:

ref='Tuan anh mot ha chin'

hyp='tuan anh mot hai ba bon chin'

wer(ref, hyp ,debug=True)

OP REF HYP

SUB Tuan tuan

OK anh anh

OK mot mot

INS **** hai

INS **** ba

SUB ha bon

OK chin chin

Ncor 3

Nsub 2

Ndel 0

Nins 2

0.8

Ref

- https://martin-thoma.com/word-error-rate-calculation/

WER

Bản gốc tại đây

#!/usr/bin/env python

def wer(r, h):

"""

Calculation of WER with Levenshtein distance.

Works only for iterables up to 254 elements (uint8).

O(nm) time ans space complexity.

Parameters

----------

r : list

h : list

Returns

-------

int

Examples

--------

>>> wer("who is there".split(), "is there".split())

1

>>> wer("who is there".split(), "".split())

3

>>> wer("".split(), "who is there".split())

3

"""

# initialisation

import numpy

d = numpy.zeros((len(r)+1)*(len(h)+1), dtype=numpy.uint8)

d = d.reshape((len(r)+1, len(h)+1))

for i in range(len(r)+1):

for j in range(len(h)+1):

if i == 0:

d[0][j] = j

elif j == 0:

d[i][0] = i

# computation

for i in range(1, len(r)+1):

for j in range(1, len(h)+1):

if r[i-1] == h[j-1]:

d[i][j] = d[i-1][j-1]

else:

substitution = d[i-1][j-1] + 1

insertion = d[i][j-1] + 1

deletion = d[i-1][j] + 1

d[i][j] = min(substitution, insertion, deletion)

return d[len(r)][len(h)]

if __name__ == "__main__":

import doctest

doctest.testmod()

Module Interface¶

- class torchmetrics.WordErrorRate(**kwargs)[source]

-

Word error rate (WordErrorRate) is a common metric of the performance of an automatic speech recognition

system. This value indicates the percentage of words that were incorrectly predicted. The lower the value, the

better the performance of the ASR system with a WER of 0 being a perfect score. Word error rate can then be

computed as:where:

—is the number of substitutions,

—is the number of deletions,

—is the number of insertions,

—is the number of correct words,

—is the number of words in the reference (

).

Compute WER score of transcribed segments against references.

As input to

forwardandupdatethe metric accepts the following input:-

preds(List): Transcription(s) to score as a string or list of strings -

target(List): Reference(s) for each speech input as a string or list of strings

As output of

forwardandcomputethe metric returns the following output:-

wer(Tensor): A tensor with the Word Error Rate score

- Parameters

-

kwargs¶ (

Any) – Additional keyword arguments, see Advanced metric settings for more info.

Examples

>>> preds = ["this is the prediction", "there is an other sample"] >>> target = ["this is the reference", "there is another one"] >>> wer = WordErrorRate() >>> wer(preds, target) tensor(0.5000)

Initializes internal Module state, shared by both nn.Module and ScriptModule.

-

Functional Interface¶

- torchmetrics.functional.word_error_rate(preds, target)[source]

-

Word error rate (WordErrorRate) is a common metric of the performance of an automatic speech recognition

system. This value indicates the percentage of words that were incorrectly predicted. The lower the value, the

better the performance of the ASR system with a WER of 0 being a perfect score.- Parameters

-

-

preds¶ (

Union[str,List[str]]) – Transcription(s) to score as a string or list of strings -

target¶ (

Union[str,List[str]]) – Reference(s) for each speech input as a string or list of strings

-

- Return type

-

Tensor - Returns

-

Word error rate score

Examples

>>> preds = ["this is the prediction", "there is an other sample"] >>> target = ["this is the reference", "there is another one"] >>> word_error_rate(preds=preds, target=target) tensor(0.5000)

Project description

JiWER is a simple and fast python package to evaluate an automatic speech recognition system.

It supports the following measures:

- word error rate (WER)

- match error rate (MER)

- word information lost (WIL)

- word information preserved (WIP)

- character error rate (CER)

These measures are computed with the use of the minimum-edit distance between one or more reference and hypothesis sentences.

The minimum-edit distance is calculated using RapidFuzz, which uses C++ under the hood, and is therefore faster than a pure python implementation.

Documentation

For further info, see the documentation at jitsi.github.io/jiwer.

Installation

You should be able to install this package using poetry:

$ poetry add jiwer

Or, if you prefer old-fashioned pip and you’re using Python >= 3.7:

$ pip install jiwer

Usage

The most simple use-case is computing the word error rate between two strings:

from jiwer import wer reference = "hello world" hypothesis = "hello duck" error = wer(reference, hypothesis)

Licence

The jiwer package is released under the Apache License, Version 2.0 licence by 8×8.

For further information, see LICENCE.

Reference

For a comparison between WER, MER and WIL, see:

Morris, Andrew & Maier, Viktoria & Green, Phil. (2004). From WER and RIL to MER and WIL: improved evaluation measures for connected speech recognition.

Download files

Download the file for your platform. If you’re not sure which to choose, learn more about installing packages.

Source Distribution

Built Distribution

I was given this dummy code for Microsoft Speech Recognition Lab.

I am trying to find word error rate(individually as well as sum) of all the sentences stored in the file.

I have loaded the files in memory using Numpy arrays now I am struggling to find the sentence error rate for each sentence present in the file. There are a total of three sentences and I want my program to go through each sentence and compute the word error rate. My loop runs thrice yet the result is only being accumulated for the first sentence. Have a look at my code and guide me where am I going wrong. Thanks.

Provided Code:

def string_edit_distance(ref="ref_data", hyp="hyp_data"):

if ref is None or hyp is None:

RuntimeError("ref and hyp are required, cannot be None")

x = ref

y = hyp

tokens = len(x)

if (len(hyp)==0):

return (tokens, tokens, tokens, 0, 0)

# p[ix,iy] consumed ix tokens from x, iy tokens from y

p = np.PINF * np.ones((len(x) + 1, len(y) + 1)) # track total errors

e = np.zeros((len(x)+1, len(y) + 1, 3), dtype=np.int) # track deletions, insertions, substitutions

p[0] = 0

for ix in range(len(x) + 1):

for iy in range(len(y) + 1):

cst = np.PINF*np.ones([3])

s = 0

if ix > 0:

cst[0] = p[ix - 1, iy] + 1 # deletion cost

if iy > 0:

cst[1] = p[ix, iy - 1] + 1 # insertion cost

if ix > 0 and iy > 0:

s = (1 if x[ix - 1] != y[iy -1] else 0)

cst[2] = p[ix - 1, iy - 1] + s # substitution cost

if ix > 0 or iy > 0:

idx = np.argmin(cst) # if tied, one that occurs first wins

p[ix, iy] = cst[idx]

if (idx==0): # deletion

e[ix, iy, :] = e[ix - 1, iy, :]

e[ix, iy, 0] += 1

elif (idx==1): # insertion

e[ix, iy, :] = e[ix, iy - 1, :]

e[ix, iy, 1] += 1

elif (idx==2): # substitution

e[ix, iy, :] = e[ix - 1, iy - 1, :]

e[ix, iy, 2] += s

edits = int(p[-1,-1])

deletions, insertions, substitutions = e[-1, -1, :]

What I have Tried Till Now:

with open("misc/hyp.trn") as f:

hyp_data = f.readlines()

with open("misc/ref.trn") as f:

ref_data = f.readlines()

hypData = []

refData = []

for lines in hyp_data:

hypData.append(lines[:][:-20])

for line in ref_data:

refData.append(line[:][:-20])

for i in range(len(hypData)):

print("Line Number: ",i, refData[i], hypData[i])

print("Total number of reference sentences in the test set: ", len(refData))

print("Number of sentences with an error", len(hypData))

print("Total number of reference words", tokens)

print("Total number of word substitutions, insertions, and deletions: ")

print("----------------------------------------------------------------")

print("Scores: N="+str(tokens)+", S="+str(substitutions)+", D= "+str(deletions)+",

I="+str(insertions))

print("The percentage of total errors (WER) and percentage of substitutions, insertions, and

deletions")

wer = (deletions+insertions+substitutions)/tokens

print("The percentage of total errors (WER): ", int((wer*100)*10 + 0.5)/10)

print("Percentage of substitutions: ", int((substitutions*100 + 0.5)/10))

print("Percentage of insertions: ", int((insertions*100 + 0.5)/10))

print("Percentage of deletions: ",int((deletions*100 + 0.5)/10))

string_edit_distance()

is the number of substitutions,

is the number of substitutions, is the number of deletions,

is the number of deletions, is the number of insertions,

is the number of insertions, is the number of correct words,

is the number of correct words, is the number of words in the reference (

is the number of words in the reference ( ).

).