This is my first question so be nice lol…

Think of it this way. Python is written in C, which is written in an older C compiler, which is written in an even older C compiler, which is written in B, which is written in (I think) BCPL. I am not sure what BCPL is written in, but it seems that there must be an original language somewhere?

In other words, every programming language is written in an older programming language. So what came first, and what was that coded in?

asked Oct 14, 2020 at 23:31


What are programming languages written in?

Programming language compilers and runtimes are written in programming languages — not necessarily languages that are older or are different than the one they take as input. Some of the runtime code will drop into assembly to access certain hardware instructions or code sequences not easily obtained through the compiler.

Once bootstrapped, programming languages can self-host, so they are often written in the same language they compile. For example, C compilers are written in C or C++, and C#’s Roslyn compiler is written in C#.

When the Roslyn team adds a new language feature, they won’t use it in the source code for the compiler until it is debugged and working (e.g. released). This is akin to the bootstrapping exercise (limited to a new feature rather than the whole language).

But to be clear, a language implementation can be written in the latest version of its own input language, and often is.

So what came first, and what was that coded in?

Machine code came first. The first assemblers were very simple, because early assembly languages were easy to parse and translate into machine code. They were written in machine code until they could be bootstrapped and become self-hosting.

answered Oct 15, 2020 at 1:07

Erik Eidt

Think of it this way. Python is written in C,

No, it is not.

You seem to be confusing a Programming Language like Python or C with a Programming Language Implementation (e.g. a Compiler or Interpreter) like PyPy or Clang.

A Programming Language is a set of semantic and syntactic rules and restrictions. It is just an idea. A piece of paper. It isn’t «written in» anything (in the sense that e.g. Linux is «written in» C). At most, we can say it is written in English, or more precisely, in a specific jargon of English, a semi-formal subset of English extended with logic notation.

Different specifications are written in different styles. Here are some examples:

- The Java Language Specification

- The Scala Language Specification

- The Haskell 2010 Language Report

- The Revised⁷ Report on the Algorithmic Language Scheme

- The ECMA-262 ECMAScript® Language Specification

- Python does not really have a single Language Specification like many other languages do. The information is splintered between the Python Language Reference, the Python Enhancement Proposals, and a lot of implicit institutional knowledge that only exists in the collective heads of the Python community

There are multiple Python implementations in common use today, and only one of them is written in C:

- Brython is written in ECMAScript

- IronPython is written in C#

- Jython is written in Java

- GraalPython is written in Java, using the Truffle Language Implementation Framework

- PyPy is written in the RPython Programming Language (a statically typed language roughly at the abstraction level of Java, roughly with the performance of C, with syntax and runtime semantics that are a proper subset of Python) using the RPython Language Implementation Framework

- CPython is written in C

In other words, every programming language is written in an older programming language. So what came first, and what was that coded in?

Again, you are confusing Programming Languages and Programming Language Implementations.

Programming Languages are written in English. Programming Language Implementations are written in Programming Languages. They can be written in any Programming Language. For example, Jython is a Python implementation written in Java. GHC is a Haskell implementation written in Haskell. GCC is a C compiler written in C. tsc is a TypeScript compiler written in TypeScript. rustc is a Rust compiler written in Rust. NSC is a Scala compiler written in Scala. javac is a Java compiler written in Java. Roslyn is a C# compiler written in C#.

And so on and so forth, there really is no restriction on the language used to implement a compiler or interpreter. (There is a theoretical limitation in that an interpreter for a Turing-complete language must also be written in a Turing-complete language.)
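As a minimal sketch of what "an implementation written in a programming language" means, here is an interpreter for a tiny postfix calculator language, written in Python. The language and the `run` function are made up for this illustration; any other host language would work just as well.

```python
# A made-up postfix ("reverse Polish") calculator language, interpreted in Python.
# The language is just a set of rules; this function is one implementation of it.
import operator

OPERATORS = {
    "+": operator.add,
    "-": operator.sub,
    "*": operator.mul,
    "/": operator.truediv,
}

def run(program: str) -> float:
    """Evaluate a whitespace-separated postfix expression such as '2 3 + 4 *'."""
    stack = []
    for token in program.split():
        if token in OPERATORS:
            right = stack.pop()
            left = stack.pop()
            stack.append(OPERATORS[token](left, right))
        else:
            stack.append(float(token))  # anything else is treated as a number
    if len(stack) != 1:
        raise ValueError("malformed program")
    return stack[0]

print(run("2 3 + 4 *"))  # (2 + 3) * 4 = 20.0
```

The same rules could be implemented again in Java, C, or the toy language itself (if it were expressive enough), which is the distinction between a language and its implementations.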

answered Oct 15, 2020 at 7:55

Jörg W Mittag


Each machine has an instruction set it natively executes.

That instruction set is the first language.

The first higher-level language was assembly, literally allowing the programmer to write a readable expression like mov ax, bx instead of the corresponding binary word.

The first compiler was written in machine language, though by today’s standards it would more accurately be called an assembler. It would have taken the assembly language and translated it to the binary encoding.
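To make that translation step concrete, here is a minimal sketch of what an assembler does. It is written in Python for readability (the first assemblers were, of course, written in machine code). The instruction set, mnemonics, and opcode numbers are invented for illustration and do not correspond to any real machine.

```python
# Toy assembler for a made-up 8-bit machine (hypothetical opcodes, not a real CPU).
# Each instruction becomes one byte: high 4 bits = opcode, low 4 bits = operand.
OPCODES = {"LOAD": 0x1, "ADD": 0x2, "STORE": 0x3, "HALT": 0xF}

def assemble(source: str) -> bytes:
    """Translate assembly text into machine-code bytes, one line at a time."""
    program = bytearray()
    for line in source.splitlines():
        line = line.split(";")[0].strip()  # drop comments and surrounding whitespace
        if not line:
            continue
        parts = line.split()
        mnemonic = parts[0].upper()
        operand = int(parts[1]) if len(parts) > 1 else 0
        if mnemonic not in OPCODES:
            raise ValueError(f"unknown mnemonic: {mnemonic}")
        if not 0 <= operand <= 0xF:
            raise ValueError(f"operand out of range: {operand}")
        program.append((OPCODES[mnemonic] << 4) | operand)
    return bytes(program)

machine_code = assemble("""
    LOAD 5     ; put 5 in the accumulator
    ADD 3      ; add 3 to it
    STORE 0    ; write the result to address 0
    HALT
""")
print(machine_code.hex(" "))  # 15 23 30 f0
```

Because each line maps directly to one machine word, the translation is little more than a table lookup, which is why such a tool was feasible to write by hand in machine code.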

This has happened many times over for many different machines until the first cross-compilers were developed that could rewrite a program into another machine language.

Even now, though, there are still languages that are first implemented in terms of a machine language.

answered Oct 15, 2020 at 0:32

Kain0_0


Welcome to the amazing world of programming. This is one of the most useful and powerful skills that you can learn and use to make your visions come true.

In this handbook, we will dive into why programming is important, its applications, its basic concepts, and the skills you need to become a successful programmer.

You will learn:

- What programming is and why it is important.

- What a programming language is and why it is important.

- How programming is related to binary numbers.

- Real-world applications of programming.

- Skills you need to succeed as a programmer.

- Tips for learning how to code.

- Basic programming concepts.

- Types of programming languages.

- How to contribute to open source projects.

- And more…

Are you ready? Let’s begin! ✨

Did you know that computer programming is already a fundamental part of your everyday life? Let’s see why. I’m sure that you will be greatly surprised.

Every time you turn on your smartphone, laptop, tablet, smart TV, or any other electronic device, you are running code that was planned, developed, and written by developers. This code creates the final and interactive result that you can see on your screen.

That is exactly what programming is all about. It is the process of writing code to solve a particular problem or to implement a particular task.

Programming is what allows your computer to run the programs you use every day and your smartphone to run the apps that you love. It is an essential part of our world as we know it.

Whenever you check your calendar, attend virtual conferences, browse the web, or edit a document, you are using code that has been written by developers.

«And what is code?» you may ask.

Code is a sequence of instructions that a programmer writes to tell a device (like a computer) what to do.

The device cannot know by itself how to handle a particular situation or how to perform a task. So developers are in charge of analyzing the situation and writing explicit instructions to implement what is needed.

To do this, they follow a particular syntax (a set of rules for writing the code).

A developer (or programmer) is the person who analyzes a problem and implements a solution in code.

Sounds amazing, right? It’s very powerful and you can be part of this wonderful world too by learning how to code. Let’s see how.

You, as a developer.

Let’s put you in a developer’s shoes for a moment. Imagine that you are developing a mobile app, like the ones that you probably have installed on your smartphone right now.

What is the first thing that you would do?

Think about this for a moment.

The answer is…

Analyzing the problem. What are you trying to build?

As a developer, you would start by designing the layout of the app, how it will work, its different screens and functionality, and all the small details that will make your app an awesome tool for users around the world.

Only after you have everything carefully planned out can you start to write your code. To do that, you will need to choose a programming language to work with. Let’s see what a programming language is and why programming languages are super important.

🔸 What is a Programming Language?

A programming language is a language that computers can understand.

We cannot just write English words in our program like this:

«Computer, solve this task!»

and hope that our computer can understand what we mean. We need to follow certain rules to write the instructions.

Every programming language has its own set of rules that determine if a line of code is valid or not. Because of this, the code you write in one programming language will be slightly different from others.
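To see these rules in action, here is a small sketch that asks Python whether a line of text follows its syntax. The helper name `is_valid_python` is made up for this example; `ast.parse` is part of Python's standard library.

```python
import ast

def is_valid_python(line: str) -> bool:
    """Return True if the line follows Python's syntax rules."""
    try:
        ast.parse(line)
        return True
    except SyntaxError:
        return False

print(is_valid_python('print("Hello, World!")'))      # True
print(is_valid_python("Computer, solve this task!"))  # False
```

Note that this only checks the syntax (the shape of the code). A line can follow the rules and still fail when it runs, for example if it uses a name that does not exist.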

💡 Tip: Some programming languages are more complex than others but most of them share core concepts and functionality. If you learn how to code in one programming language, you will likely be able to learn another one faster.

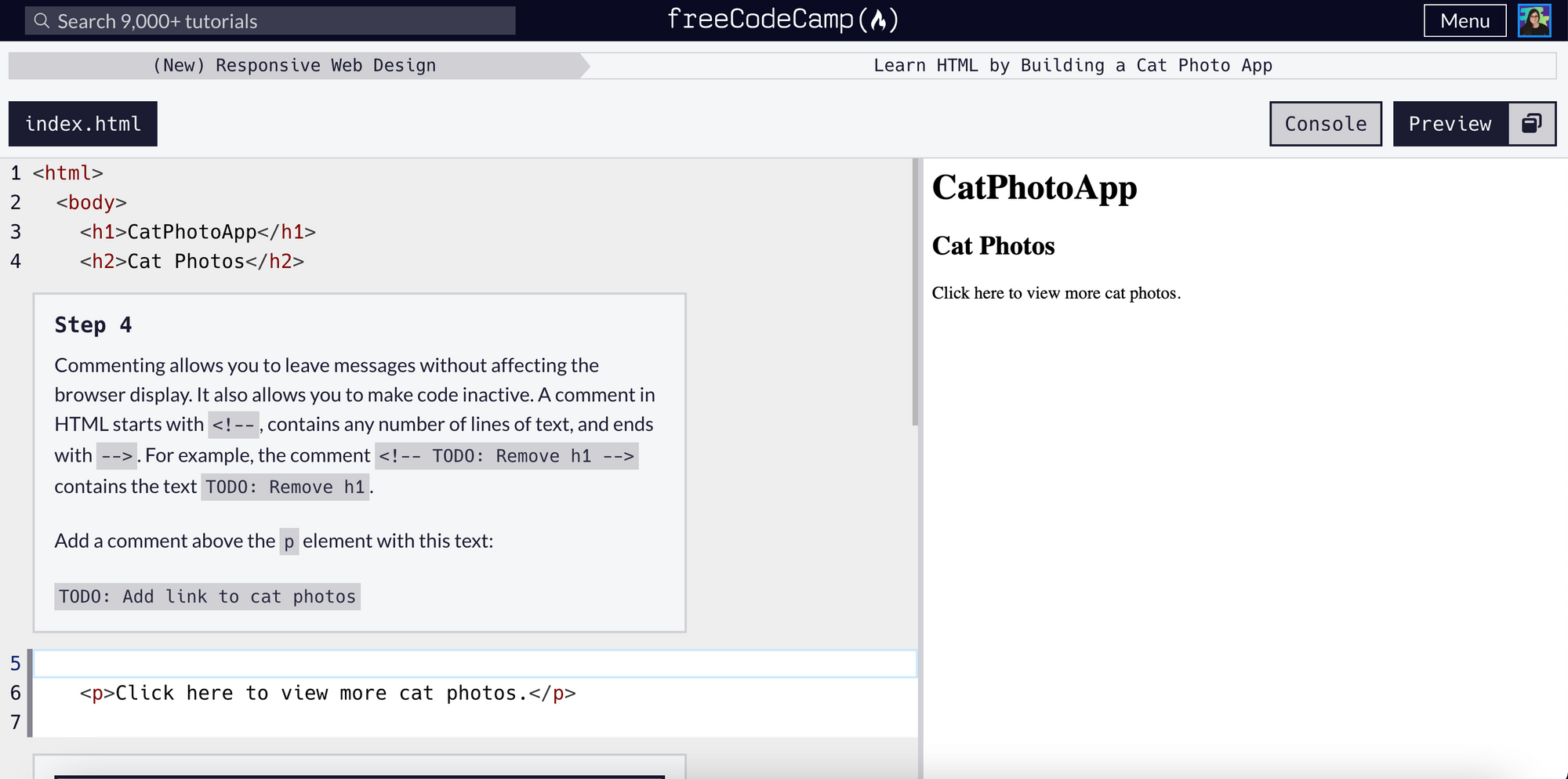

Before you can start writing awesome programs and apps, you need to learn the basic rules of the programming language you chose for the task.

💡 Tip: a program is a set of instructions written in a programming language for the computer to execute. We usually write the code for our program in one or multiple files.

For example, this is a line of code in Python (a very popular programming language) that shows the message "Hello, World!":

print("Hello, World!")

But if we write the same line of code in JavaScript (a programming language mainly used for web development), we will get an error because it will not be valid.

To do something very similar in JavaScript, we would write this line of code instead:

console.log("Hello, World!");

Visually, they look very different, right? This is because Python and JavaScript have a different syntax and a different set of built-in functions.

💡 Tip: built-in functions are basically tasks that are already defined in the programming language. This lets us use them directly in our code by writing their names and by specifying the values they need.

In our examples, print() is a built-in function in Python while console.log() is a function that we can use in JavaScript to see the message in the console (an interactive tool) if we run our code in the browser.
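Here is a short sketch with a few more of Python's built-in functions. The list of scores is made-up sample data.

```python
# A few of Python's built-in functions, usable without importing anything.
scores = [72, 95, 88]  # made-up sample data

print(len(scores))     # number of items: 3
print(max(scores))     # largest value: 95
print(sum(scores))     # total: 255
print(sorted(scores))  # new sorted list: [72, 88, 95]
```

In each case we write the function's name and pass it the value it needs, just like we did with print().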

Examples of programming languages include Python, JavaScript, TypeScript, Java, C, C#, C++, PHP, Go, Swift, SQL, and R. There are many programming languages and most of them can be used for many different purposes.

💡 Tip: These were the most popular programming languages on the Stack Overflow Developer Survey 2022:

There are many other programming languages (hundreds or even thousands!) but usually, you will learn and work with some of the most popular ones. Some of them have broader applications like Python and JavaScript while others (like R) have more specific (and even scientific) purposes.

This sounds very interesting, right? And we are only starting to talk about programming languages. There is a lot to learn about them and I promise you that if you dive deeper into programming, your time and effort will be totally worth it.

Awesome! Now that you know what programming is and what programming languages are all about, let’s see how programming is related to binary numbers.

🔹 Programming and Binary Numbers

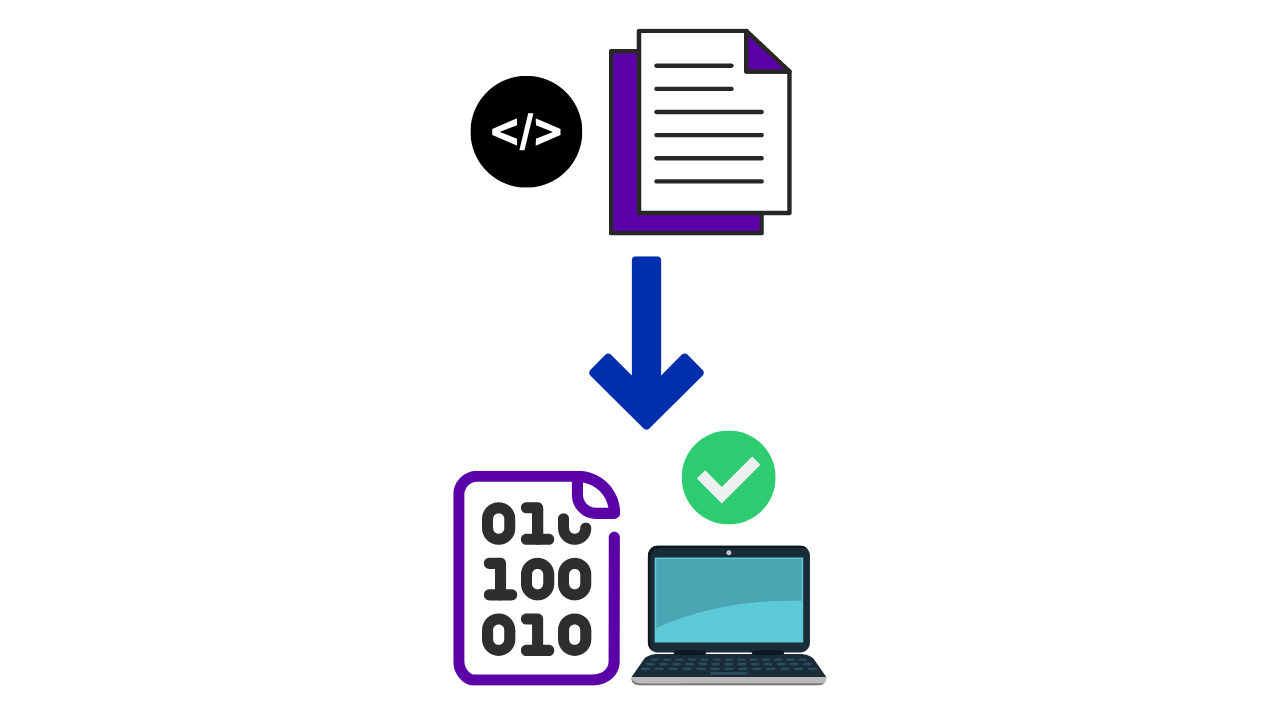

When you think about programming, perhaps the first thing that comes to your mind is something like the image above, right? A sequence of 0s and 1s on your computer.

Programming is indeed related to binary numbers (0 and 1) but in an indirect way. Developers do not actually write their code using zeros and ones.
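If you are curious what those zeros and ones look like, here is a small sketch that uses Python's built-in conversion functions to move between decimal and binary. The values are arbitrary examples.

```python
number = 10

binary_text = bin(number)           # decimal to binary text
print(binary_text)                  # 0b1010

print(int("1010", 2))               # binary text back to decimal: 10

# Characters are stored as numbers too: "A" is 65, which is 01000001 in binary.
print(format(ord("A"), "08b"))      # 01000001
```

The "0b" prefix is just Python's way of marking that the digits that follow are binary.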

We usually write programs in a high-level programming language, a programming language with a syntax that recognizes specific words (called keywords), symbols, and values of different data types.

Basically, we write code in a way that humans can understand.

For example, these are the keywords that we can use in Python:

False class from or

None continue global pass

True def if raise

and del import return

as elif in try

assert else is while

async except lambda with

await finally nonlocal yield

break for not

Every programming language has its own set of keywords (words written in English). These keywords are part of the syntax and core functionality of the programming language.
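You do not have to memorize this list. As a quick sketch, Python's standard `keyword` module can tell you whether a word is a keyword:

```python
import keyword

print(keyword.iskeyword("while"))  # True
print(keyword.iskeyword("hello"))  # False

# The full list lives in keyword.kwlist; its length depends on your Python version.
print(len(keyword.kwlist))
```

This is handy when you are choosing names for your own variables, because keywords are reserved and cannot be used as names.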

But keywords are just common words in English, almost like the ones that we would find in a book.

That leads us to two very important questions:

- How does the computer understand and interpret what we are trying to say?

- Where does the binary number system come into play here?

The computer does not understand these words, symbols, or values directly.

When a program runs, the code that we write in a high-level programming language that humans can understand is automatically transformed into binary code that the computer can understand.

This transformation of source code that humans can understand into binary code that the computer can understand is called compilation.

According to Britannica, a compiler is defined as:

Computer software that translates (compiles) source code written in a high-level language (e.g., C++) into a set of machine-language instructions that can be understood by a digital computer’s CPU.

Britannica also mentions that:

The term compiler was coined by American computer scientist Grace Hopper, who designed one of the first compilers in the early 1950s.

Some programming languages can be classified as compiled programming languages while others can be classified as interpreted programming languages based on how they are transformed into machine-language instructions.

However, they all have to go through a process that converts them into instructions that the computer can understand.
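You can peek at one step of this process from inside Python itself. The sketch below assumes CPython (the standard Python implementation), which first translates source code into bytecode for its own virtual machine rather than directly into CPU instructions. The one-line program is made up for the example.

```python
import dis

source = "total = 2 + 3"
code_object = compile(source, "<example>", "exec")  # source text to a code object

print(type(code_object.co_code))  # the raw bytecode is stored as bytes
dis.dis(code_object)              # readable listing; details vary by Python version

namespace = {}
exec(code_object, namespace)      # run the compiled code
print(namespace["total"])         # 5
```

The listing printed by dis.dis() is not meant to be written by hand. It simply shows that the friendly line we wrote has been converted into lower-level instructions.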

Awesome. Now you know why binary code is so important for computer science. Without it, programming basically would not exist because computers would not be able to understand our instructions.

Now let’s dive into the applications of programming and the different areas that you can explore.

🔸 Real-World Applications of Programming

Programming has many different applications in many different industries. This is truly amazing because you can apply your knowledge in virtually any industry that you are interested in.

From engineering to farming, from game development to physics, the possibilities are endless if you learn how to code.

Let’s see some of them. (I promise you. They are amazing! ⭐)

Front-End Web Development

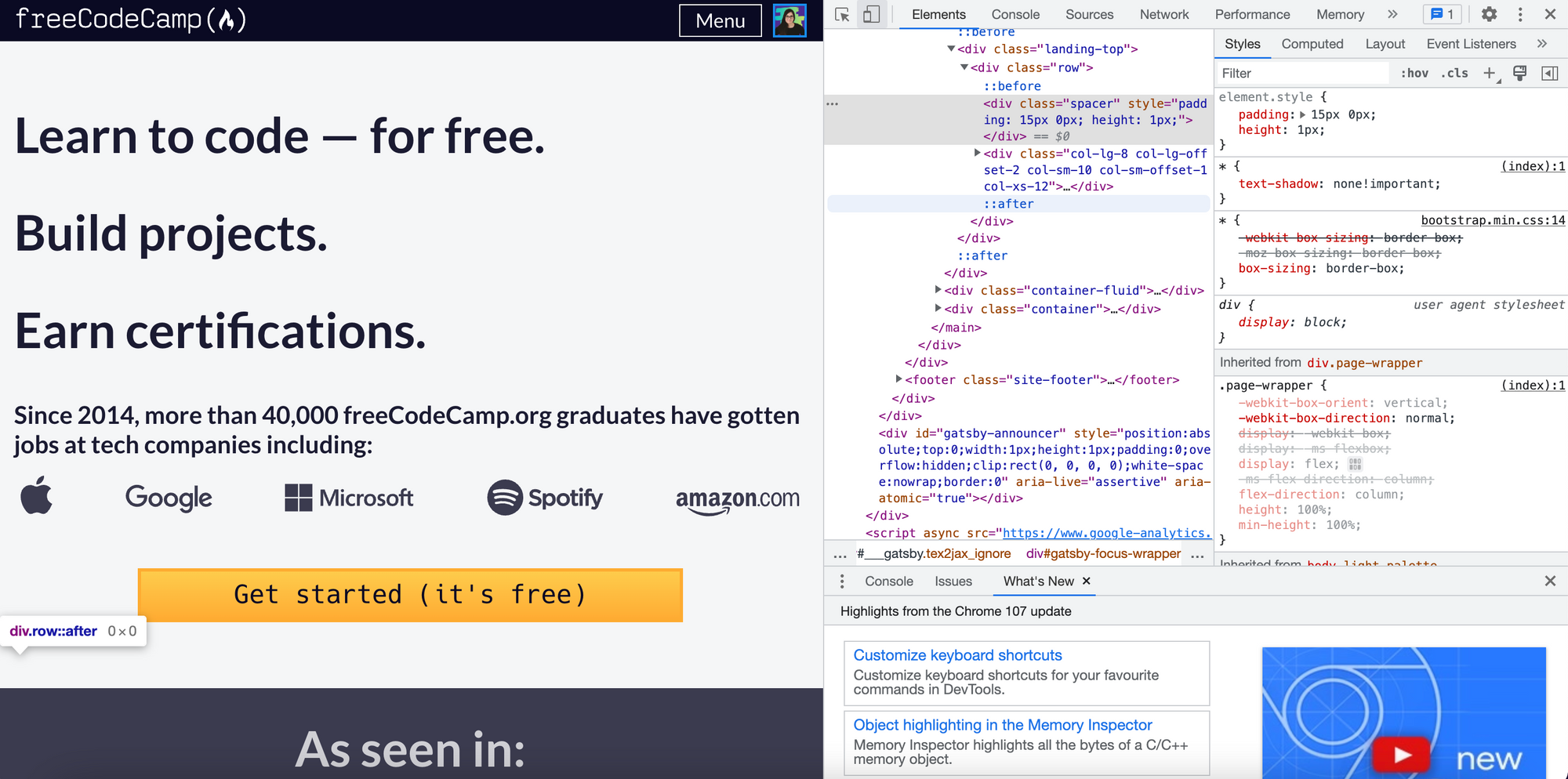

If you learn how to code, you can use your programming skills to design and develop websites and online platforms. Front-End Web Developers create the parts of the websites that users can see and interact with directly.

For example, right now you are reading an article on freeCodeCamp’s publication. The publication looks like this and it works like this thanks to code that front-end web developers wrote line by line.

💡 Tip: If you learn front-end web development, you can do this too.

Front-End Web Developers use HTML (a markup language) to create the structure of the website and CSS (a style sheet language) to control how it looks, and they write JavaScript code to add functionality and interactivity.

If you are interested in learning front-end web development, you can learn HTML and CSS with these free courses on freeCodeCamp’s YouTube Channel:

- Learn HTML5 and CSS3 From Scratch — Full Course

- Learn HTML & CSS – Full Course for Beginners

- Frontend Web Development Bootcamp Course (JavaScript, HTML, CSS)

- Introduction To Responsive Web Design — HTML & CSS Tutorial

You can also learn JavaScript for free with these free online courses:

- Learn JavaScript — Full Course for Beginners

- JavaScript Programming — Full Course

- JavaScript DOM Manipulation – Full Course for Beginners

- Learn JavaScript by Building 7 Games — Full Course

💡 Tip: You can also earn a Responsive Web Design Certification while you learn with interactive exercises on freeCodeCamp.

Back-End Web Development

More complex and dynamic web applications that work with user data also require a server. This is a computer program that receives requests and sends appropriate responses. They also need a database, a collection of values stored in a structured way.

Back-End Web Developers are in charge of developing the code for these servers. They decide how to handle the different requests, how to send appropriate resources, how to store the information, and basically how to make everything that runs behind the scenes work smoothly and efficiently.
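Here is a minimal sketch of that decision-making, with no real network involved. The `handle_request` function and the `PAGES` dictionary are made up for illustration; a real server would use a web framework or library to receive requests.

```python
# Hypothetical routes and content, for illustration only.
PAGES = {"/": "Welcome!", "/about": "About this site"}

def handle_request(method: str, path: str) -> tuple[int, str]:
    """Return an (HTTP status code, response body) pair for a request."""
    if method != "GET":
        return 405, "Method Not Allowed"
    if path in PAGES:
        return 200, PAGES[path]
    return 404, "Not Found"

print(handle_request("GET", "/about"))    # (200, 'About this site')
print(handle_request("GET", "/missing"))  # (404, 'Not Found')
```

The numbers 200, 404, and 405 are standard HTTP status codes that tell the browser whether the request succeeded.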

A real-world example of back-end web development is what happens when you create an account on freeCodeCamp and complete a challenge. Your information is stored on a database and you can access it later when you sign in with your email and password.
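To give you a feel for storing and retrieving information, here is a small sketch using SQLite, a database that ships with Python. The table, column names, and email address are hypothetical, and this is not how freeCodeCamp actually stores its data.

```python
import sqlite3

# In-memory database with a hypothetical schema (illustration only).
connection = sqlite3.connect(":memory:")
connection.execute(
    "CREATE TABLE users (email TEXT PRIMARY KEY, challenges_completed INTEGER)"
)

def complete_challenge(email: str) -> None:
    """Create the user if needed, then record one more completed challenge."""
    connection.execute(
        "INSERT OR IGNORE INTO users (email, challenges_completed) VALUES (?, 0)",
        (email,),
    )
    connection.execute(
        "UPDATE users SET challenges_completed = challenges_completed + 1 WHERE email = ?",
        (email,),
    )
    connection.commit()

def challenges_for(email: str) -> int:
    """Look up the stored progress for a user (0 if the user is unknown)."""
    row = connection.execute(
        "SELECT challenges_completed FROM users WHERE email = ?", (email,)
    ).fetchone()
    return row[0] if row else 0

complete_challenge("ada@example.com")
complete_challenge("ada@example.com")
print(challenges_for("ada@example.com"))  # 2
```

The question marks are placeholders that let the database library insert values safely instead of pasting them into the query text.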

This amazing interactive functionality was implemented by back-end web developers.

💡 Tip: Full-stack Web Developers are in charge of both Front-End and Back-End Web Development. They have specialized knowledge in both areas.

All the complex platforms that you use every day, like social media platforms, online shopping platforms, and educational platforms, use servers and back-end web development to power their amazing functionality.

Python is an example of a powerful programming language used for this purpose. This is one of the most popular programming languages out there, and its popularity continues to rise every year. This is partly because it is simple and easy to learn and yet powerful and versatile enough to be used in real-world applications.

💡 Tip: if you are curious about the specific applications of Python, this is an article I wrote on this topic.

JavaScript can also be used for back-end web development thanks to Node.js.

Other programming languages used to develop web servers are PHP, Ruby, C#, and Java.

If you would like to learn Back-End Web Development, these are free courses on freeCodeCamp’s YouTube channel:

- Python Backend Web Development Course (with Django)

- Node.js and Express.js — Full Course

- Full Stack Web Development for Beginners (Full Course on HTML, CSS, JavaScript, Node.js, MongoDB)

- Node.js / Express Course — Build 4 Projects

💡 Tip: freeCodeCamp also has a free Back End Development and APIs certification.

Mobile App Development

Mobile apps have become part of our everyday lives. I’m sure that you could not imagine life without them.

Think about your favorite mobile app. What do you love about it?

Our favorite apps help us with our daily tasks, they entertain us, they solve a problem, and they help us to achieve our goals. They are always there for us.

That is the power of mobile apps and you can be part of this amazing world too if you learn mobile app development.

Developers focused on mobile app development are in charge of planning, designing, and developing the user interface and functionality of these apps. They identify a gap in the existing apps and they try to create a working product to make people’s lives better.

💡 Tip: regardless of the field you choose, your goal as a developer should always be making people’s lives better. Apps are not just apps, they have the potential to change our lives. You should always remember this when you are planning your projects. Your code can make someone’s life better and that is a very important responsibility.

Mobile app developers use programming languages like JavaScript, Java, Swift, Kotlin, and Dart. Frameworks like Flutter and React Native are super helpful to build cross-platform mobile apps (that is, apps that run smoothly on multiple different operating systems like Android and iOS).

According to Flutter’s official documentation:

Flutter is an open source framework by Google for building beautiful, natively compiled, multi-platform applications from a single codebase.

If you would like to learn mobile app development, these are free courses that you can take on freeCodeCamp’s YouTube channel:

- Flutter Course for Beginners – 37-hour Cross Platform App Development Tutorial

- Flutter Course — Full Tutorial for Beginners (Build iOS and Android Apps)

- React Native — Intro Course for Beginners

- Learn React Native Gestures and Animations — Tutorial

Game Development

Games create long-lasting memories. I’m sure that you still remember your favorite games and why you love (or loved) them so much. Being a game developer means having the opportunity of bringing joy and entertainment to players around the world.

Game developers envision, design, plan, and implement the functionality of a game. They also need to find or create assets such as characters, obstacles, backgrounds, music, sound effects, and more.

💡 Tip: if you learn how to code, you can create your own games. Imagine creating an awesome and engaging game that users around the world will love. That is what I personally love about programming. You only need your computer, your knowledge, and some basic tools to create something amazing.

Popular programming languages used for game development include JavaScript, C++, Python, and C#.

If you are interested in learning game development, you can take these free courses on freeCodeCamp’s YouTube channel:

- JavaScript Game Development Course for Beginners

- Learn JavaScript by Building 7 Games — Full Course

- Learn Unity — Beginner’s Game Development Tutorial

- Learn Python by Building Five Games — Full Course

- Code a 2D Game Using JavaScript, HTML, and CSS (w/ Free Game Assets) – Tutorial

- 2D Game Development with GDevelop — Crash Course

- Pokémon Coding Tutorial — CS50’s Intro to Game Development

Biology, Physics, and Chemistry

Programming can be applied in every scientific field that you can imagine, including biology, physics, chemistry, and even astronomy. Yes! Scientists use programming all the time to collect and analyze data. They can even run simulations to test hypotheses.

Biology

In biology, computer programs can simulate population genetics and population dynamics. There is even an entire field called bioinformatics.
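As a taste of what such a simulation can look like, here is a minimal sketch of logistic population growth, a classic textbook model in which a population grows quickly at first and then levels off near the maximum the environment can support. The function name and all parameter values are made up for illustration.

```python
def simulate_population(initial: float, growth_rate: float,
                        carrying_capacity: float, generations: int) -> list[float]:
    """Simulate discrete logistic growth and return the size at each generation."""
    population = [initial]
    for _ in range(generations):
        current = population[-1]
        # Growth slows down as the population approaches the carrying capacity.
        growth = growth_rate * current * (1 - current / carrying_capacity)
        population.append(current + growth)
    return population

history = simulate_population(initial=10, growth_rate=0.5,
                              carrying_capacity=1000, generations=30)
for generation in (0, 5, 10, 20, 30):
    print(generation, round(history[generation]))
```

Changing the growth rate or the carrying capacity and running the program again is much faster than observing a real population for thirty generations.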

According to this article «Bioinformatics» by Ardeshir Bayat, member of the Centre for Integrated Genomic Medical Research at the University of Manchester:

Bioinformatics is defined as the application of tools of computation and analysis to the capture and interpretation of biological data.

Dr. Bayat mentions that bioinformatics can be used for genome sequencing. He also mentions that its discoveries may lead to drug discoveries and individualized therapies.

Frequently used programming languages for bioinformatics include Python, R, PHP, Perl, and Java.

💡 Tip: R is a programming «language and environment for statistical computing and graphics» (source).

An example of a great tool that scientists can use for biology is Biopython. This is a Python framework with «freely available tools for biological computation.»

If you would like to learn more about how you can apply your programming skills in science, these are free courses that you can take on freeCodeCamp’s YouTube channel:

- Python for Bioinformatics — Drug Discovery Using Machine Learning and Data Analysis

- R Programming Tutorial — Learn the Basics of Statistical Computing

- Learn Python — Full Course for Beginners [Tutorial]

Physics

Physics requires running many simulations and programming is perfect for doing exactly that. With programming, scientists can program and run simulations based on specific scenarios that would be hard to replicate in real life. This is much more efficient.
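Here is a minimal sketch of a physics simulation: an object dropped from rest, with air resistance ignored. It advances time in tiny steps (a simple technique known as Euler integration). The function name and values are made up for illustration.

```python
def simulate_fall(height_m: float, time_step_s: float = 0.001,
                  gravity: float = 9.81) -> float:
    """Return the approximate time in seconds for a dropped object to reach the ground."""
    position = height_m
    velocity = 0.0
    elapsed = 0.0
    while position > 0:
        velocity += gravity * time_step_s   # gravity speeds the object up
        position -= velocity * time_step_s  # the object moves downward
        elapsed += time_step_s
    return elapsed

print(round(simulate_fall(20.0), 2))
```

For a 20 m drop, the exact formula √(2h/g) gives about 2.02 seconds, so you can check the simulation against it. The advantage of the step-by-step approach is that it still works when you add effects (like air resistance) that have no simple formula.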

Programming languages that are commonly used for physics simulations include C, Java, Python, MATLAB, and JavaScript.

Chemistry

Chemistry also relies on simulations and data analysis, so it’s a field where programming can be a very helpful tool.

In this scientific article by Dr. Ivar Ugi and his colleagues from Organisch-chemisches Institut der Technischen Universität München, they mention that:

The design of entirely new syntheses, and the classification and documentation of structures, substructures, and reactions are examples of new applications of computers to chemistry.

Scientific experiments also generate detailed data and results that can be analyzed with computer programs developed by scientists.

Think about it: writing a program to generate a box plot or a scatter plot or any other type of plot to visualize trends in thousands of measurements can save researchers a lot of time and effort. This lets them focus on the most important part of their work: analyzing the results.
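As a small sketch of this idea, the program below computes the five numbers that a box plot draws (minimum, first quartile, median, third quartile, and maximum) using only Python's standard library. The measurements are synthetic, generated with a fixed random seed, and a plotting library would be needed to actually draw the chart.

```python
import random
import statistics

random.seed(42)  # fixed seed so the made-up data is reproducible
measurements = [random.gauss(20.0, 2.0) for _ in range(5000)]  # synthetic data

def five_number_summary(values):
    """Return the values a box plot is built from."""
    q1, median, q3 = statistics.quantiles(values, n=4)
    return {"min": min(values), "q1": q1, "median": median,
            "q3": q3, "max": max(values)}

summary = five_number_summary(measurements)
for name, value in summary.items():
    print(f"{name:>6}: {value:.2f}")
```

Summarizing five thousand values takes a fraction of a second, and the same code works unchanged on five million.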

💡 Tip: if you are interested in diving deeper into this, this is a list of chemistry simulations by the American Chemical Society. These simulations were programmed by developers and they are helping thousands of students and teachers around the world.

Think about it…You could build the next great simulation. If you are interested in a scientific field, I totally recommend learning how to code. Your work will be much more productive and your results will be easier to analyze.

If you are interested in learning programming for scientific applications, these are free courses on freeCodeCamp’s YouTube channel:

- Python for Bioinformatics — Drug Discovery Using Machine Learning and Data Analysis

- Python for Data Science — Course for Beginners (Learn Python, Pandas, NumPy, Matplotlib)

Data Science and Engineering

Talking about data…programming is also essential for a field called Data Science. If you are interested in answering questions through data and statistics, this field might be exactly what you are looking for and having programming skills will help you to achieve your goals.

Data scientists collect and analyze data in order to answer questions in many different fields. According to UC Berkeley in the article «What is Data Science?»:

Effective data scientists are able to identify relevant questions, collect data from a multitude of different data sources, organize the information, translate results into solutions, and communicate their findings in a way that positively affects business decisions.

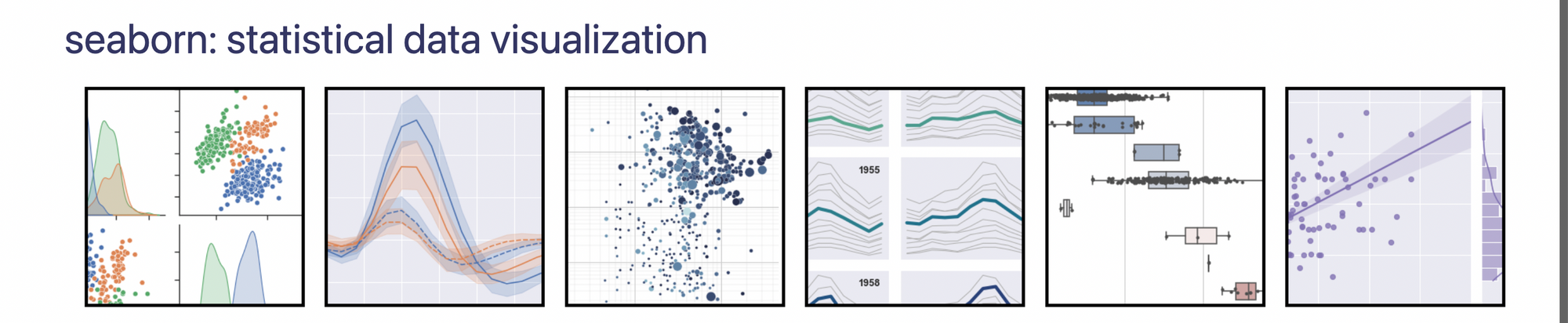

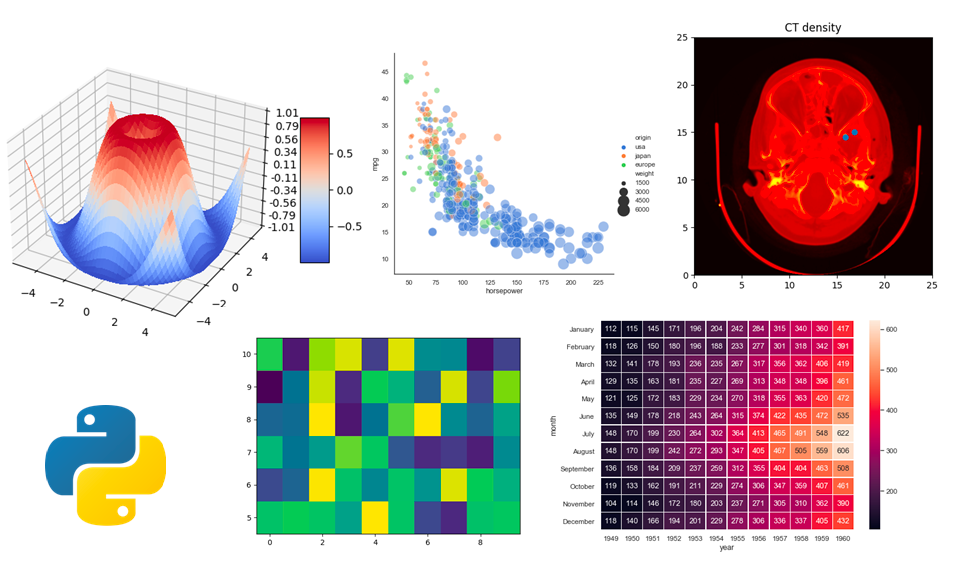

There are many powerful programming languages for analyzing and visualizing data, but perhaps one of the most frequently used ones for this purpose is Python.

This is an example of the type of data visualizations that you can create with Python. They are very helpful to analyze data visually and you can customize them to fit your needs.

If you are interested in learning programming for data science, these are free courses on freeCodeCamp’s YouTube channel:

- Learn Data Science Tutorial — Full Course for Beginners

- Intro to Data Science — Crash Course for Beginners

- Python for Data Science — Course for Beginners (Learn Python, Pandas, NumPy, Matplotlib)

- Build 12 Data Science Apps with Python and Streamlit — Full Course

- Data Analysis with Python — Full Course for Beginners (Numpy, Pandas, Matplotlib, Seaborn)

💡 Tip: you can also earn these free certifications on freeCodeCamp:

- Data Visualization

- Data Analysis with Python

Engineering

Engineering is another field where programming can help you to succeed. Being able to write your own computer programs can make your work much more efficient.

There are many tools created specifically for engineers. For example, the R programming language is specialized in statistical applications and Python is very popular in this field too.

Another great tool for programming in engineering is MATLAB. According to its official website:

MATLAB is a programming and numeric computing platform used by millions of engineers and scientists to analyze data, develop algorithms, and create models.

Really, the possibilities are endless.

You can learn MATLAB with this crash course on the freeCodeCamp YouTube channel.

If you are interested in learning engineering tools related to programming, this is a free course on freeCodeCamp’s YouTube channel that covers AutoCAD, a 2D and 3D computer-aided design software used by engineers:

- AutoCAD for Beginners — Full University Course

Medicine and Pharmacology

Medicine and pharmacology are constantly evolving by finding new treatments and procedures. Let’s see how you can apply your programming skills in these fields.

Medicine

Programming is really everywhere. If you are interested in the field of medicine, learning how to code can be very helpful for you too. Even if you would like to focus on computer science and software development, you can apply your knowledge in both fields.

Specialized developers are in charge of developing and writing the code that powers and controls the devices and machines that are used by modern medicine.

Think about it…all these machines and devices are controlled by software and someone has to write that software. Medical records are also stored and tracked by specialized systems created by developers. That could be you if you decide to follow this path. Sounds exciting, right?

According to the scientific article Application of Computer Techniques in Medicine:

Major uses of computers in medicine include hospital information system, data analysis in medicine, medical imaging laboratory computing, computer assisted medical decision making, care of critically ill patients, computer assisted therapy and so on.

Pharmacology

Programming and computer science can also be applied to develop new drugs in the field of pharmacology.

A remarkable example of what you can achieve in this field by learning how to code is presented in this article by MIT News. It describes how an MIT senior, Kristy Carpenter, was using computer science in 2019 to develop «new, more affordable drugs.» Kristy mentions that:

Artificial intelligence, which can help compute the combinations of compounds that would be better for a particular drug, can reduce trial-and-error time and ideally quicken the process of designing new medicines.

Another example of a real-world application of programming in pharmacology is related to Python (yes, Python has many applications!). Among its success stories, we find that Python was selected by AstraZeneca to develop techniques and programs that can help scientists to discover new drugs faster and more efficiently.

The documentation explains that:

To save time and money on laboratory work, experimental chemists use computational models to narrow the field of good drug candidates, while also verifying that the candidates to be tested are not simple variations of each other’s basic chemical structure.

If you are interested in learning programming for medicine or health-related fields, this is a free course on freeCodeCamp’s YouTube channel on programming for healthcare imaging:

- PyTorch and Monai for AI Healthcare Imaging — Python Machine Learning Course

Education

Have you ever thought that programming could be helpful for education? Well, let me tell you that it is and it is very important. Why? Because the digital learning tools that students and teachers use nowadays are programmed by developers.

Every time a student opens an educational app, browses an educational platform like freeCodeCamp, writes on a digital whiteboard, or attends a class through an online meeting platform, programming is making that possible.

As a programmer or as a teacher who knows how to code, you can create the next great app that will enhance the learning experience of students around the world.

Perhaps it will be a note-taking app, an online learning platform, a presentation app, an educational game, or any other app that could be helpful for students.

The important thing is to create it with students in mind if your goal is to make something amazing that will create long-lasting memories.

If you envision it, then you can create it with code.

Teachers can also teach their students how to code to develop their problem-solving skills and to teach them important skills for their future.

💡 Tip: if you are teaching students how to code, Scratch is a great programming language to teach the basics of programming. It is particularly focused on teaching children how to code in an interactive way.

According to the official Scratch website:

Scratch is the world’s largest coding community for children and a coding language with a simple visual interface that allows young people to create digital stories, games, and animations.

If you are interested in learning how to code for educational purposes, these are courses that you may find helpful on freeCodeCamp’s YouTube channel:

- Scratch Tutorial for Beginners — Make a Flappy Bird Game

- Computational Thinking & Scratch — Intro to Computer Science — Harvard’s CS50 (2018)

- Android Development for Beginners — Full Course

- Flutter Course for Beginners – 37-hour Cross Platform App Development Tutorial

- Learn Unity — Beginner’s Game Development Tutorial

Machine Learning, Artificial Intelligence, and Robotics

Some of the most amazing fields that are directly related to programming are Machine Learning, Artificial Intelligence, and Robotics. Let’s see why.

Artificial Intelligence is defined by Britannica as:

The project of developing systems endowed with the intellectual processes characteristic of humans, such as the ability to reason, discover meaning, generalize, or learn from past experience.

Machine learning is a branch or a subset of the field of Artificial Intelligence in which systems can learn on their own based on data. The goal of this learning process is to predict the expected output. These models continuously learn how to «think» and how to analyze situations based on their previous training.
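As a minimal sketch of what "learning from data" can mean, the example below fits a straight line to a handful of points with ordinary least squares and then predicts a value it has not seen. The data and the names `fit_line` and `predict` are made up for illustration and do not come from any course or library mentioned here.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Covariance of x and y divided by the variance of x gives the slope.
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict(model, x):
    """Use the fitted parameters to estimate the output for a new input."""
    slope, intercept = model
    return slope * x + intercept


# Hypothetical training data: hours studied -> exam score.
hours = [1, 2, 3, 4, 5]
scores = [52, 54, 56, 58, 60]

model = fit_line(hours, scores)   # the "training" step
print(predict(model, 6))          # prediction for an unseen input
```

Real machine learning models have many more parameters, but the pattern is the same: estimate parameters from examples, then use them to predict.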

The most commonly used programming languages in these fields are Python, C, C#, C++, and MATLAB.

Artificial intelligence and Machine Learning have amazing applications in various industries, such as:

- Image and object detection.

- Making predictions based on patterns.

- Text recognition.

- Recommendation engines (like when an online shopping platform shows you products that you may like or when YouTube shows you videos that you may like).

- Spam detection for emails.

- Fraud detection.

- Social media features like personalized feeds.

- Many more… there are literally millions of applications in virtually every industry.

If you are interested in learning how to code for Artificial Intelligence and Machine Learning, these are free courses on freeCodeCamp’s YouTube channel:

- Machine Learning for Everybody – Full Course

- Machine Learning Course for Beginners

- PyTorch for Deep Learning & Machine Learning – Full Course

- TensorFlow 2.0 Complete Course — Python Neural Networks for Beginners Tutorial

- Self-Driving Car with JavaScript Course – Neural Networks and Machine Learning

- Python TensorFlow for Machine Learning – Neural Network Text Classification Tutorial

- Practical Deep Learning for Coders — Full Course from fast.ai and Jeremy Howard

- Deep Learning Crash Course for Beginners

- Advanced Computer Vision with Python — Full Course

💡 Tip: you can also earn a Machine Learning with Python Certification on freeCodeCamp.

Robotics

Programming is also very important for robotics. Yes, robots are programmed too!

Robotics is defined by Britannica as the:

Design, construction, and use of machines (robots) to perform tasks done traditionally by human beings.

Robots are just like computers. They do not know what to do until you tell them what to do by writing instructions in your programs. If you learn how to code, you can program robots and industrial machinery found in manufacturing facilities.

If you are interested in learning how to code for robotics, electronics, and related fields, this is a free course on Arduino on freeCodeCamp’s YouTube channel:

- Arduino Course for Beginners — Open-Source Electronics Platform

Other Applications

There are many other fascinating applications of programming in almost every field. These are some highlights:

- Agriculture: in this article by MIT News, a farmer developed an autonomous tractor app after learning how to code.

- Self-driving cars: autonomous cars rely on software to analyze their surroundings and to make quick and accurate decisions on the road. If you are interested in this area, this is a course on this topic on freeCodeCamp’s YouTube channel.

- Finance: programming can also be helpful to develop programs and models that predict financial indicators and trends. For example, this is a course on algorithmic trading on freeCodeCamp’s YouTube channel.

The possibilities are endless. I hope that this section will give you a notion of why learning how to code is so important for your present and for your future. It will be a valuable skill to have in any field you choose.

Awesome. Now let’s dive into the soft skills that you need to become a successful programmer.

🔹 Skills of a Successful Programmer

After going through the diverse range of applications of programming, you must be curious to know what skills are needed to succeed in this field.

Curiosity

A programmer should be curious. Whether you are just starting to learn how to code or you already have 20 years of experience, coding projects will always present you with new challenges and learning opportunities. If you take these opportunities, you will continuously improve your skills and succeed.

Enthusiasm

Enthusiasm is a key trait of a successful programmer, and it matters in any field where you want to succeed. Enthusiasm will keep you happy and curious about what you are creating and learning.

💡 Tip: If you ever feel like you are not as enthusiastic as you used to be, it’s time to find or learn something new that can light the spark in you again and fill you with hope and dreams.

Patience

A programmer must be patient because transforming an initial idea into a working product can take time, effort, and many different steps. Patience will keep you focused on your final goal.

Resilience

Programming can be challenging. That is true. But what defines you is not how many challenges you face, it’s how you face them. If you thrive despite these challenges, you will become a better programmer and you could create something that could change the world.

Creativity

Programmers must be creative because even though every programming language has a particular set of rules for writing the code, coding is like using LEGOs. You have the building-blocks but you need to decide what to create and how to create it. The process of writing the code requires creativity while following the established best practices.

Problem-solving and Analysis

Programming is basically analyzing and solving problems with code. Depending on your field of choice, those problems will be simpler or more complex but they will all require some level of problem-solving skills and a thorough analysis of the situation.

Questions like:

- What should I build?

- How can I build it?

- What is the best way to build this?

These questions are part of the everyday routine of a programmer.

Ability to Focus for Long Periods of Time

When you are working on a coding project, you will need to focus on a task for long periods of time. From creating the design, to planning and writing the code, to testing the result, and to fixing bugs (issues with the code), you will dedicate many hours to a particular task. This is why it’s essential to be able to focus and to keep your final goal in mind.

Taking Detailed Notes

This skill is very important for programmers, particularly when you are learning how to code. Taking detailed notes can help you to understand and remember the concepts and tools you learn. This also applies to experienced programmers, since being a programmer involves life-long learning.

Communication

Initially, you might think that programming is a solitary activity and imagine that a programmer spends hundreds of hours alone sitting at a desk.

But the reality is that when you find your first job, you will see that communication is super important to coordinate tasks with other team members and to exchange ideas and feedback.

Open to Feedback

In programming, there is usually more than one way to implement the same functionality. Different alternatives may work similarly, but some may be easier to read or more efficient in terms of time or resource consumption.

When you are learning how to code, you should always take constructive feedback as a tool for learning. Similarly, when you are working on a team, take your colleagues’ feedback positively and always try to improve.

Life-long Learning

Programming equals life-long learning. If you are interested in learning how to code, you must know that you will always need to be learning new things as new technologies emerge and existing technologies are updated. Think about it… that is great because there is always something interesting and new to learn!

Open to Trying New Things

Finally, an essential skill to be a successful programmer is to be open to trying new things. Step out of your comfort zone and be open to new technologies and products. In the technology industry, things evolve very quickly and adapting to change is essential.

🔸 Tips for Learning How to Code

Now that you know more about programming, programming languages, and the skills you need to be a successful programmer, let’s see some tips for learning how to code.

💡 Tip: these tips are based on my personal experience and opinions.

- Choose one programming language to learn first. When you are learning how to code, it’s easy to feel overwhelmed with the number of options and entry paths. My advice would be to focus on understanding the essential computer science concepts and one programming language first. Python and JavaScript are great options to start learning the fundamentals.

- Take detailed notes. Note-taking skills are essential to record and to analyze the topics you are learning. You can add custom comments and annotations to explain what you are learning.

- Practice constantly. You can only improve your problem-solving skills by practicing and by learning new techniques and tools. Try to practice every day.

💡 Tip: There is a challenge called the #100DaysOfCode challenge that you can join to practice every day.

- Always try again. If you can’t solve a problem on your first try, take a break and come back again and again until you solve it. That is the only way to learn. Learn from your mistakes and learn new approaches.

- Learn how to research and how to find answers. Programming languages, libraries, and frameworks usually have official documentation that explains their built-in elements and tools and how you can use them. This is a precious resource that you should definitely refer to.

- Browse Stack Overflow. This is an amazing platform. It is like an online encyclopedia of answers to common programming questions. You can find answers to existing questions and ask new questions to get help from the community.

- Set goals. Motivation is one of the most important factors for success. Setting goals is very important to keep you focused, motivated, and enthusiastic. Once you reach your goals, set new ones that you find challenging and exciting.

- Create projects. When you are learning how to code, applying your skills will help you to expand your knowledge and remember things better. Creating projects is the perfect way to practice and to create a portfolio that you can show to potential employers.

🔹 Basic Programming Concepts

Great. If reading this article has helped you confirm that you want to learn programming, let’s take your first steps.

These are some basic programming concepts that you should know:

- Variable: a variable is a name that we assign to a value in a computer program. When we define a variable, we assign a value to a name and we allocate a space in memory to store that value. The value of a variable can be updated during the program.

- Constant: a constant is similar to a variable. It stores a value but it cannot be modified. Once you assign a value to a constant, you cannot change it during the entire program.

- Conditional: a conditional is a programming structure that lets developers choose what the computer should do based on a condition. If the condition is True, something will happen but if the condition is False, something different can happen.

- Loop: a loop is a programming structure that lets us run a code block (a sequence of instructions) multiple times. Loops are super helpful to avoid code repetition and to implement more complex functionality.

- Function: a function helps us to avoid code repetition and to reuse our code. It is like a code block to which we assign a name but it also has some special characteristics. We can write the name of the function to run that sequence of instructions without writing them again.

💡 Tip: Functions can communicate with main programs and main programs can communicate with functions through parameters, arguments, and return statements.

- Class: a class is used as a blueprint to define the characteristics and functionality of a type of object. Just like we have objects in our real world, we can represent objects in our programs.

- Bug: a bug is an error in the logic or implementation of a program that results in an unexpected or incorrect output.

- Debugging: debugging is the process of finding and fixing bugs in a program.

- IDE: this acronym stands for Integrated Development Environment. It is a software development environment that has the most helpful tools that you will need to write computer programs such as a file editor, an explorer, a terminal, and helpful menu options.

💡 Tip: a commonly used and free IDE is Visual Studio Code, created by Microsoft.

Awesome! Now you know some of the fundamental concepts in programming. Like you learned, each programming language has a different syntax, but they all share most of these programming structures and concepts.
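Several of these concepts can be seen together in a few lines of Python. This is only an illustrative sketch: the names (`PASSING_SCORE`, `average`, `Student`) and the numbers are made up for the example.

```python
PASSING_SCORE = 60  # Constant by convention only: Python does not enforce it.


def average(values):
    """Function: a named, reusable block of instructions."""
    total = 0                     # Variable: its value is updated below.
    for value in values:          # Loop: runs the body once per item.
        total += value
    return total / len(values)


class Student:
    """Class: a blueprint for objects that hold a name and scores."""

    def __init__(self, name, scores):
        self.name = name
        self.scores = scores

    def has_passed(self):
        # Conditional: choose a result based on a condition.
        if average(self.scores) >= PASSING_SCORE:
            return True
        else:
            return False


student = Student("Ada", [70, 80, 90])
print(student.name, average(student.scores), student.has_passed())
```

Other languages express the same ideas with different syntax. For instance, some languages enforce constants, while Python relies on the uppercase naming convention.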

🔸 Types of Programming Languages

Programming languages can be classified based on different criteria. If you want to learn how to code, it’s important for you to learn these basic classifications:

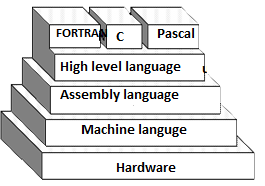

Complexity

- High-level programming languages: they are designed to be understood by humans and they have to be converted into machine code before the computer can understand them. They are the programming languages that we commonly use. For example: JavaScript, Python, Java, C#, C++, and Kotlin.

- Low-level programming languages: they are more difficult to understand because they are not designed for humans. They are designed to be understood and processed efficiently by machines. For example: assembly language and machine code.

Conversion into Machine Code

- Compiled programming languages: programs written with this type of programming language are converted directly into machine code by a compiler. Examples include C, C++, Haskell, and Go.

- Interpreted programming languages: programs written with this type of programming language rely on another program called the interpreter, which is in charge of running the code line by line. Examples include Python, JavaScript, PHP, and Ruby.

💡 Tip: according to this article on freeCodeCamp’s publication:

Most programming languages can have both compiled and interpreted implementations – the language itself is not necessarily compiled or interpreted. However, for simplicity’s sake, they’re typically referred to as such.
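The point in this quote can be observed in Python itself. In CPython, the reference implementation, source code is first compiled to bytecode, and that bytecode is then run by an interpreter. The sketch below uses the built-in `compile` and `exec` functions and the standard `dis` module. The source string and variable names are made up for the example.

```python
import dis

source = "result = (2 + 3) * 4"

# Step 1: compile the source text into a code object (bytecode).
code = compile(source, "<example>", "exec")
print(type(code).__name__)        # the compiled form is a 'code' object

# Step 2: let the interpreter execute the compiled bytecode.
namespace = {}
exec(code, namespace)
print(namespace["result"])

# Optional: list the bytecode instructions (output varies by Python version).
dis.dis(code)
```

So even a language usually described as "interpreted" can involve a compilation step, which is why the classification applies to implementations more than to languages.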

There are other types of programming languages based on different criteria, such as:

- Procedural programming languages

- Functional programming languages

- Object-oriented programming languages

- Scripting languages

- Logic programming languages

And the list of types of programming languages continues. This is very interesting because you can analyze the characteristics of a programming language to help you choose the right one for your project.

🔹 How to Contribute to Open Source Projects

Finally, you might think that coding implies sitting at a desk for many hours looking at your code without any human interaction. But let me tell you that this does not have to be true at all. You can be part of a learning community or a developer community.

Initially, when you are learning how to code, you can participate in a learning community like freeCodeCamp. This way, you will share your journey with others who are learning how to code, just like you.

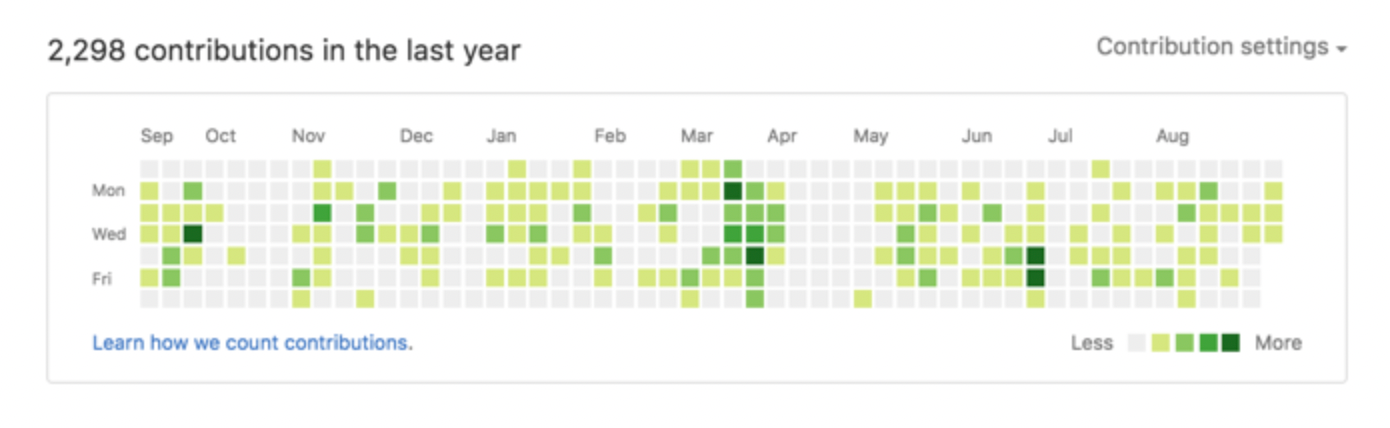

Then, when you have enough skills and confidence in your knowledge, you can practice by contributing to open source projects and join developer communities.

Open source software is defined by Opensource.com as:

Software with source code that anyone can inspect, modify, and enhance.

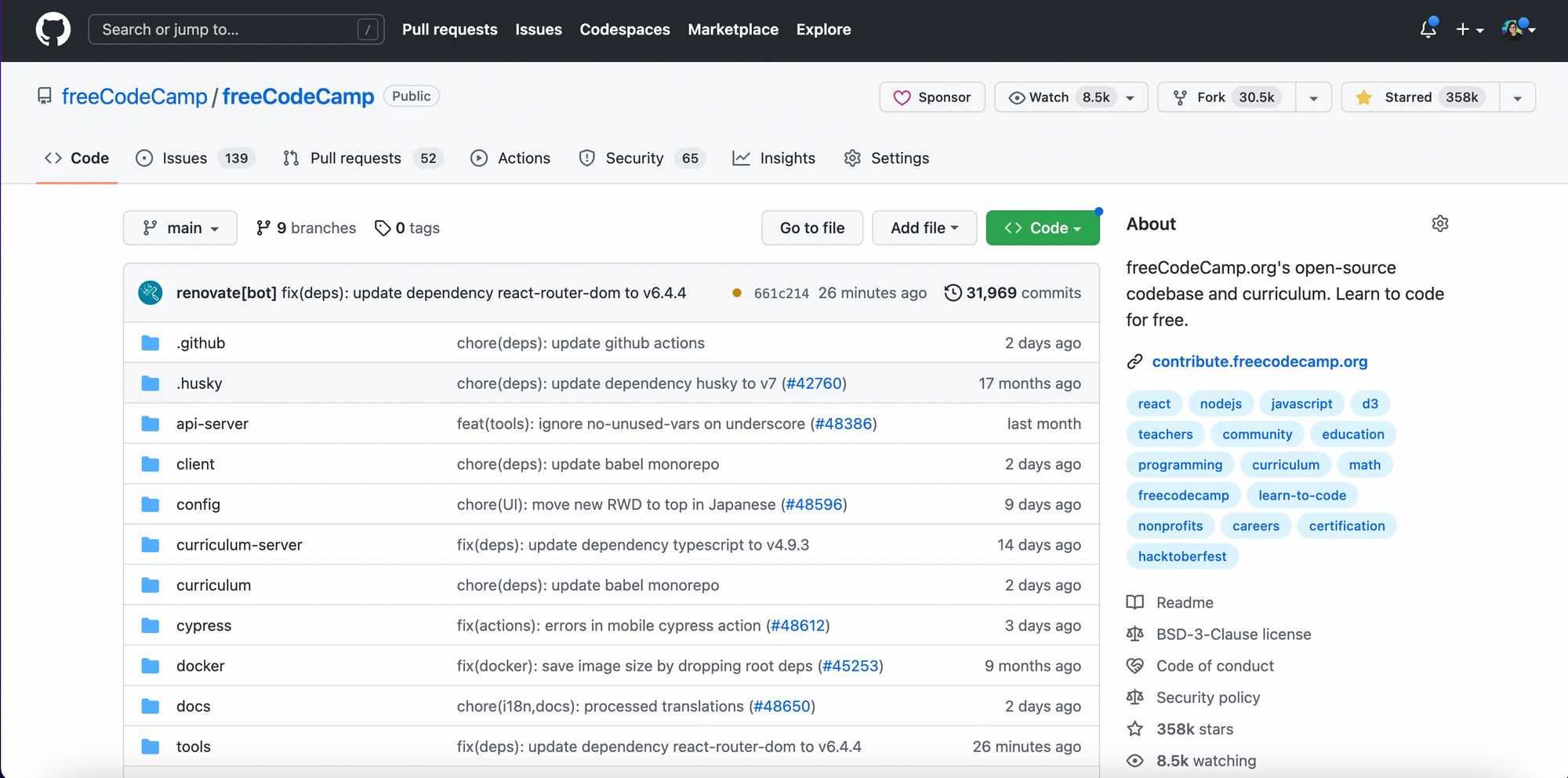

GitHub is an online platform for hosting projects with version control. There, you can find many open source projects (like freeCodeCamp) that you can contribute to and practice your skills.

💡 Tip: many open source projects welcome first-time contributions and contributions from all skill levels. These are great opportunities to practice your skills and to contribute to real-world projects.

Contributing to open source projects on GitHub is great to acquire new experience working and communicating with other developers. This is another important skill for finding a job in this field.

Working on a team is a great experience. I totally recommend it once you feel comfortable enough with your skills and knowledge.

You did it! You reached the end of this article. Great work. Now you know what programming is all about. Let’s see a brief summary.

🔸 In Summary

- Programming is a very powerful skill. If you learn how to code, you can make your vision come true.

- Programming has many different applications in many different fields. You can find an application for programming in basically any field you choose.

- Programming languages can be classified based on different criteria and they share basic concepts such as variables, conditionals, loops, and functions.

- Always set goals and take detailed notes. To succeed as a programmer, you need to be enthusiastic and consistent.

Thank you very much for reading my article. I hope you liked it and found it helpful. Now you know why you should learn how to code.

🔅 I invite you to follow me on Twitter (@EstefaniaCassN) and YouTube (Coding with Estefania) to find coding tutorials.


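Vocabulary

Match the words with their definitions:

| Word | Transcription |
| --- | --- |
| epiphany | [ɪ’pɪfənɪ] |
| constraint | [kənstre͟ɪnt] |
| encumber | [ɪn’kʌmbə] |
| concurrent | [kən’kʌr(ə)nt] |
| prone | [pro͟ʊn] |
| compile | [kəm’paɪl] |
| linker | [‘liŋkər] |
| embody | [ɪm’bɔdɪ] |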

Before you read

- Discuss the following questions with your partner.

  - What do you know about programming languages and paradigms?

  - Is there any difference between them? If so, what is it?

  - What are the reasons for using programming languages and paradigms?

- Skim the text to check your ideas.

READING

What is what?

In this article, we will discuss programming languages and paradigms so that you have a complete understanding. Let us first check whether there is any difference between them.

The difference between programming paradigms and programming languages is that a programming language is an artificial language that has a vocabulary and sets of grammatical rules to instruct a computer to perform specific tasks, whereas a programming paradigm is a particular way (i.e., a ‘school of thought’) of looking at a programming problem.

The term programming language usually refers to high-level languages, such as BASIC, C, C++, COBOL, FORTRAN, Ada, and Pascal. Each language has a unique set of keywords (words that it understands) and a special syntax for organizing program instructions. High-level programming languages, while simple compared to human languages, are more complex than the languages the computer actually understands, called machine languages. Each different type of CPU has its own unique machine language.

Assembly languages lie between machine languages and high-level languages. Assembly languages are similar to machine languages, but they are much easier to program in because they allow a programmer to substitute names for numbers. Machine languages consist of numbers only. Lying above high-level languages are languages called fourth-generation languages (usually abbreviated 4GL). 4GLs are far removed from machine languages and represent the class of computer languages closest to human languages.

Regardless of what language you use, you eventually need to convert your program into machine language so that the computer can understand it. There are two ways to do this: compile the program or interpret the program. An interpreter is a program that executes instructions written in a high-level language.

The most common way to run a program written in a high-level language is to compile it, that is, to transform it from source code into object code. Programmers write programs in a form called source code. Source code must go through several steps before it becomes an executable program. The first step is to pass the source code through a compiler, which translates the high-level language instructions into object code. The final step in producing an executable program, after the compiler has produced object code, is to pass the object code through a linker. The linker combines modules and gives real values to all symbolic addresses, thereby producing machine code.
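To make "substituting names for numbers" and "giving real values to symbolic addresses" concrete, here is a toy two-pass assembler in Python. The instruction set (`HALT`, `LOAD`, `ADD`, `JUMP`), the opcode numbers, and the sample program are all hypothetical. Real assemblers and linkers are far more involved, and resolving a label inside one file only hints at what a linker does across several modules.

```python
# Hypothetical instruction set: mnemonic -> numeric opcode.
OPCODES = {"HALT": 0, "LOAD": 1, "ADD": 2, "JUMP": 3}


def assemble(lines):
    """Translate toy assembly into a flat list of numbers."""
    # Pass 1: record the address each label will have in the output.
    labels = {}
    address = 0
    for line in lines:
        if line.endswith(":"):
            labels[line[:-1]] = address
        else:
            address += len(line.split())  # opcode plus its operands

    # Pass 2: replace mnemonics and label names with numbers.
    machine_code = []
    for line in lines:
        if line.endswith(":"):
            continue
        mnemonic, *operands = line.split()
        machine_code.append(OPCODES[mnemonic])
        for operand in operands:
            if operand in labels:
                machine_code.append(labels[operand])  # symbolic address
            else:
                machine_code.append(int(operand))     # literal number
    return machine_code


program = [
    "start:",
    "LOAD 7",
    "ADD 5",
    "JUMP end",
    "end:",
    "HALT",
]
print(assemble(program))
```

The output consists of numbers only, which is the form the text describes for machine languages. The name `end` has been replaced by the position of the `HALT` instruction.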

The other method is to pass the program through an interpreter. An interpreter translates high-level instructions into an intermediate form, which it then executes. In contrast, a compiler translates high-level instructions directly into machine language. Compiled programs generally run faster than interpreted programs. The advantage of an interpreter, however, is that it does not need to go through the compilation stage during which machine instructions are generated. This process can be time-consuming if the program is long. The interpreter, on the other hand, can immediately execute high-level programs. For this reason, interpreters are sometimes used during the development of a program, when a programmer wants to add small sections at a time and test them quickly. In addition, interpreters are often used in education because they allow students to program interactively. Both interpreters and compilers are available for most high-level languages. However, BASIC and LISP were designed especially to be executed by an interpreter. In addition, page description languages, such as PostScript, use an interpreter. Every PostScript printer, for example, has a built-in interpreter that executes PostScript instructions.

The question of which language is best is one that consumes a lot of time and energy among computer professionals. Every language has its strengths and weaknesses. For example, FORTRAN is a particularly good language for processing numerical data, but it does not lend itself very well to organizing large programs. Pascal is very good for writing well-structured and readable programs, but it is not as flexible as the C programming language. C++ embodies powerful object-oriented features, but it is complex and difficult to learn. The choice of which language to use depends on the type of computer the program is to run on, what sort of program it is, and the expertise of the programmer.

Computer programmers have evolved from the early days of the bit-processing first-generation languages into sophisticated logical designers of complex software applications. Programming is a rich discipline and practical programming languages are usually quite complicated. Fortunately, the important ideas of programming languages are simple.
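The interpreter described in the text, which translates instructions into an intermediate form and then executes that form, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions: it handles only numbers with `+`, `-`, and `*`, it borrows Python's standard `ast` module for parsing instead of implementing its own parser, and the names `translate` and `execute` and the instruction names are made up.

```python
import ast
import operator

# Supported binary operators (a deliberately tiny subset).
BINARY_OPS = {
    ast.Add: ("ADD", operator.add),
    ast.Sub: ("SUB", operator.sub),
    ast.Mult: ("MUL", operator.mul),
}


def translate(expression):
    """Translate source text into a list of stack-machine instructions."""
    instructions = []

    def visit(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            instructions.append(("PUSH", node.value))
        elif isinstance(node, ast.BinOp) and type(node.op) in BINARY_OPS:
            visit(node.left)
            visit(node.right)
            instructions.append((BINARY_OPS[type(node.op)][0], None))
        else:
            raise ValueError("unsupported syntax")

    visit(ast.parse(expression, mode="eval").body)
    return instructions


def execute(instructions):
    """Run the intermediate form on a simple stack."""
    functions = {name: func for name, func in BINARY_OPS.values()}
    stack = []
    for name, argument in instructions:
        if name == "PUSH":
            stack.append(argument)
        else:
            right = stack.pop()
            left = stack.pop()
            stack.append(functions[name](left, right))
    return stack.pop()


program = translate("2 + 3 * 4")
print(program)            # the intermediate form
print(execute(program))   # the result of running it
```

No machine code is generated at any point. The intermediate instructions are executed directly by `execute`, which is why an interpreter can start running a program immediately.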

Adapted from http://www.info.ucl.ac.be/~pvr/paradigms.htm

Usually, the word «paradigm» is used to describe a thought pattern or methodology that exists during a certain period of time. When scientists refer to a scientific paradigm, they are talking about the prevailing system of ideas that was dominant in a scientific field at a point in time. When a person or field has a paradigm shift, it means that they are no longer using the old methods of thought and approach, but have decided on a new approach, often reached through an epiphany.

Programming

paradigm is a framework that defines how

the user conceptualized and interprets complex problems. It is also

is a fundamental style or the logical

approach to programming a computer based on a mathematical theory or

a coherent set of principles used in software engineering to

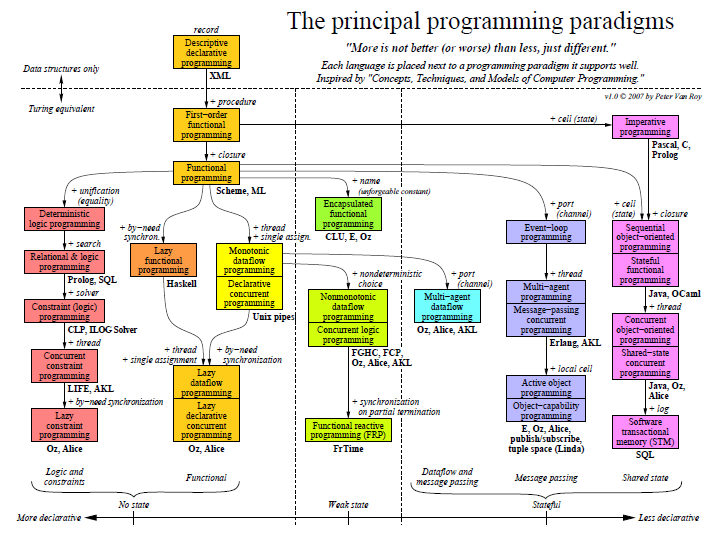

implement a programming language. There

are currently 27 paradigms (see the chart above) exist in the world.

Most of them are of similar concepts extending from the 4 main

programming paradigms.

Programming languages should support many paradigms. Let us name the 4 main programming paradigms: the imperative paradigm, the functional paradigm, the logical paradigm, and the object-oriented paradigm. Other possible programming paradigms are the visual paradigm, the parallel/concurrent paradigms, and the constraint-based paradigm. The paradigms are not exclusive, but reflect the different emphasis of language designers. Most practical languages embody features of more than one paradigm.

Each paradigm supports a set of concepts that makes it the best for a certain kind of problem. For example, object-oriented programming is best for problems with a large number of related data abstractions organized in a hierarchy. Logic programming is best for transforming or navigating complex symbolic structures according to logical rules. Discrete synchronous programming is best for reactive problems, i.e., problems that consist of reactions to sequences of external events.

Programming paradigms are unique to each language within the computer programming domain, and many programming languages utilize multiple paradigms. The term paradigm is best described as a «pattern or model.» Therefore, a programming paradigm can be defined as a pattern or model used within a software programming language to create software applications. Languages that support these three paradigms are given in the classification table below.

| Imperative/Algorithmic | Declarative (Functional) | Declarative (Logic) | Object-Oriented |
| --- | --- | --- | --- |
| Algol, Cobol, PL/1, Ada, C, Modula-3, Esterel | Lisp, Haskell, ML, Miranda, APL | Prolog | Smalltalk, Simula, C++, Java |

Popular mainstream languages such as Java or C++ support just one or two separate paradigms. This is unfortunate, since different programming problems need different programming concepts to solve them cleanly, and those one or two paradigms often do not contain the right concepts. A language should ideally support many concepts in a well-factored way, so that the programmer can choose the right concepts whenever they are needed without being encumbered by the others. This style of programming is sometimes called multiparadigm programming, implying that it is something exotic and out of the ordinary.

Programming languages are extremely logical and follow standard rules of mathematics. Each language has a unique method for applying these rules, especially around the areas of functions, variables, methods, and objects. For example, programs written in C++ or Object Pascal can be purely procedural, or purely object-oriented, or contain elements of both paradigms. Software designers and programmers decide how to use those paradigm elements. In object-oriented programming, programmers can think of a program as a collection of interacting objects, while in functional programming a program can be thought of as a sequence of stateless function evaluations. When programming computers or systems with many processors, process-oriented programming allows programmers to think about applications as sets of concurrent processes acting upon logically shared data structures.

Just as different groups in software engineering advocate different methodologies, different programming languages advocate different programming paradigms. Some languages are designed to support one particular paradigm (Smalltalk supports object-oriented programming, Haskell supports functional programming), while other programming languages support multiple paradigms (such as Object Pascal, C++, C#, Visual Basic, Common Lisp, Scheme, Perl, Python, Ruby, Oz, and F#).
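Because Python is one of the multi-paradigm languages listed above, the same small task can be written in several styles. The sketch below sums the squares of the even numbers in a list three ways. The task, the function names, and the class name are made up for illustration.

```python
from functools import reduce

numbers = [1, 2, 3, 4, 5, 6]


# Procedural style: step-by-step instructions that update state.
def sum_even_squares_procedural(values):
    total = 0
    for value in values:
        if value % 2 == 0:
            total += value * value
    return total


# Functional style: compose stateless function evaluations.
def sum_even_squares_functional(values):
    evens = filter(lambda v: v % 2 == 0, values)
    squares = map(lambda v: v * v, evens)
    return reduce(lambda a, b: a + b, squares, 0)


# Object-oriented style: an object bundles data with its behavior.
class NumberCollection:
    def __init__(self, values):
        self.values = list(values)

    def sum_even_squares(self):
        return sum(v * v for v in self.values if v % 2 == 0)


print(sum_even_squares_procedural(numbers))
print(sum_even_squares_functional(numbers))
print(NumberCollection(numbers).sum_even_squares())
```

All three versions compute the same result. The difference lies in how the problem is organized, which is what the text means by a paradigm.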

It is helpful to understand the history of programming languages and software in general to better grasp the concept of the programming paradigm. In the early days of software development, software engineering was completed by creating binary code or machine code, represented by 1s and 0s. These binary manipulations caused programs to react in a specified manner. This early computer programming is commonly referred to as the «low-level» programming paradigm. This was a tedious and error-prone method for creating programs. Programming languages quickly evolved into the «procedural» paradigm or third-generation languages, including COBOL, Fortran, and BASIC. These procedural programming languages define programs in a step-by-step approach.

The next evolution of programming languages was to create a more logical approach to software development, the «object-oriented» programming paradigm. This approach is used by programming languages such as Java™, Smalltalk, and Eiffel. This paradigm attempts to abstract modules of a program into reusable objects.

In addition to these programming paradigms, there are also the «declarative» paradigm and the «functional» paradigm. While some programming languages strictly enforce the use of a single paradigm, many support multiple paradigms. Examples of such multi-paradigm languages include C++, C#, and Visual Basic®.

Each paradigm has unique requirements on the usage and abstractions of processes within the programming language. Nevertheless, Peter Van Roy says that understanding the right concepts can help improve programming style even in languages that do not directly support them, just as object-oriented programming is possible in C with the right programmer attitude.

By allowing developers flexibility within programming languages, a programming paradigm can be utilized that best meets the business problem to be solved. As the art of computer programming has evolved, so too has the creation of the programming paradigm. By creating a framework of a pattern or model for system development, programmers can create computer programs that are as efficient as possible within the selected paradigm.

LANGUAGE DEVELOPMENT


A programming language is a system of notation for writing computer programs.[1] Most programming languages are text-based formal languages, but they may also be graphical. They are a kind of computer language.

The description of a programming language is usually split into the two components of syntax (form) and semantics (meaning), which are usually defined by a formal language. Some languages are defined by a specification document (for example, the C programming language is specified by an ISO Standard) while other languages (such as Perl) have a dominant implementation that is treated as a reference. Some languages have both, with the basic language defined by a standard and extensions taken from the dominant implementation being common.

Programming language theory is the subfield of computer science that studies the design, implementation, analysis, characterization, and classification of programming languages.

Definitions

There are many considerations when defining what constitutes a programming language.

Computer languages vs programming languages

The term computer language is sometimes used interchangeably with programming language.[2] However, the usage of both terms varies among authors, including the exact scope of each. One usage describes programming languages as a subset of computer languages.[3] Similarly, languages used in computing that have a different goal than expressing computer programs are generically designated computer languages. For instance, markup languages are sometimes referred to as computer languages to emphasize that they are not meant to be used for programming.[4]

One way of classifying computer languages is by the computations they are capable of expressing, as described by the theory of computation. The majority of practical programming languages are Turing complete,[5] and all Turing complete languages can implement the same set of algorithms. ANSI/ISO SQL-92 and Charity are examples of languages that are not Turing complete, yet are often called programming languages.[6][7] However, some authors restrict the term «programming language» to Turing complete languages.[1][8]

Another usage regards programming languages as theoretical constructs for programming abstract machines and computer languages as the subset thereof that runs on physical computers, which have finite hardware resources.[9] John C. Reynolds emphasizes that formal specification languages are just as much programming languages as are the languages intended for execution. He also argues that textual and even graphical input formats that affect the behavior of a computer are programming languages, despite the fact they are commonly not Turing-complete, and remarks that ignorance of programming language concepts is the reason for many flaws in input formats.[10]

Domain and target

In most practical contexts, a programming language involves a computer; consequently, programming languages are usually defined and studied this way.[11] Programming languages differ from natural languages in that natural languages are only used for interaction between people, while programming languages also allow humans to communicate instructions to machines.

The domain of the language is also worth consideration. Markup languages like XML, HTML, or troff, which define structured data, are not usually considered programming languages.[12][13][14] Programming languages may, however, share the syntax with markup languages if a computational semantics is defined. XSLT, for example, is a Turing complete language entirely using XML syntax.[15][16][17] Moreover, LaTeX, which is mostly used for structuring documents, also contains a Turing complete subset.[18][19]

Abstractions

Programming languages usually contain abstractions for defining and manipulating data structures or controlling the flow of execution. The practical necessity that a programming language support adequate abstractions is expressed by the abstraction principle.[20] This principle is sometimes formulated as a recommendation to the programmer to make proper use of such abstractions.[21]

History

Early developments

Very early computers, such as Colossus, were programmed without the help of a stored program, by modifying their circuitry or setting banks of physical controls.

Slightly later, programs could be written in machine language, where the programmer writes each instruction in a numeric form the hardware can execute directly. For example, the instruction to add the value in two memory locations might consist of 3 numbers: an «opcode» that selects the «add» operation, and two memory locations. The programs, in decimal or binary form, were read in from punched cards, paper tape, magnetic tape or toggled in on switches on the front panel of the computer. Machine languages were later termed first-generation programming languages (1GL).
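The idea of an instruction as three numbers can be sketched with a toy interpreter. This is only an illustration: the opcode values, the rule that the sum is stored back into the first location, and the name `run` are all assumptions made for this example and do not describe any historical machine.

```python
# Hypothetical opcodes for a toy machine; real instruction sets differ.
HALT = 0  # stop execution
ADD = 1   # memory[a] = memory[a] + memory[b]  (assumed result location)

def run(program, memory):
    """Execute a flat list of numbers as three-number instructions."""
    pc = 0  # program counter: index of the next instruction
    while True:
        opcode, a, b = program[pc:pc + 3]
        if opcode == HALT:
            return memory
        if opcode == ADD:
            memory[a] = memory[a] + memory[b]
        else:
            raise ValueError(f"unknown opcode {opcode}")
        pc += 3

# The "program" is nothing but numbers: add memory[1] into memory[0], then halt.
program = [ADD, 0, 1,
           HALT, 0, 0]
memory = run(program, [2, 40])
print(memory)  # [42, 40]
```

Writing a program in this form means remembering both the numeric opcodes and the numeric addresses by hand.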

The next step was the development of the so-called second-generation programming languages (2GL) or assembly languages, which were still closely tied to the instruction set architecture of the specific computer. These served to make the program much more human-readable and relieved the programmer of tedious and error-prone address calculations.
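A minimal sketch of what an assembler does is given below, assuming a hypothetical machine whose instructions are one opcode followed by two operands. The mnemonics, the `label:` syntax, and the name `assemble` are invented for illustration. The point is that the programmer writes names, and the tool performs the address calculation.

```python
# Hypothetical mnemonics for a toy machine; not a real instruction set.
OPCODES = {"HALT": 0, "ADD": 1, "JUMP": 2}

def assemble(source):
    """Translate '[label:] MNEMONIC [operand ...]' lines into a flat list of numbers.

    Assumes every label is followed by an instruction on the same line.
    """
    # Pass 1: record the address that each label refers to.
    labels = {}
    instructions = []
    for line in source.strip().splitlines():
        line = line.strip()
        if not line:
            continue
        if ":" in line:
            label, line = line.split(":", 1)
            labels[label.strip()] = len(instructions) * 3  # 3 numbers per instruction
        instructions.append(line.split())

    # Pass 2: replace mnemonics and labels with numbers.
    machine_code = []
    for mnemonic, *operands in instructions:
        words = [labels[op] if op in labels else int(op) for op in operands]
        words += [0] * (2 - len(words))  # pad to two operands
        machine_code += [OPCODES[mnemonic], *words]
    return machine_code

source = """
start: ADD 0 1
       JUMP end
       ADD 0 1
end:   HALT
"""
print(assemble(source))  # [1, 0, 1, 2, 9, 0, 1, 0, 1, 0, 0, 0]
```

The two passes are what allow `JUMP end` to refer to a label defined later in the source: the address 9 is computed by the assembler, not by the programmer.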

The first high-level programming languages, or third-generation programming languages (3GL), were written in the 1950s. An early high-level programming language to be designed for a computer was Plankalkül, developed for the German Z3 by Konrad Zuse between 1943 and 1945. However, it was not implemented until 1998 and 2000.[22]

John Mauchly’s Short Code, proposed in 1949, was one of the first high-level languages ever developed for an electronic computer.[23] Unlike machine code, Short Code statements represented mathematical expressions in an understandable form. However, the program had to be translated into machine code every time it ran, making the process much slower than running the equivalent machine code.

At the University of Manchester, Alick Glennie developed Autocode in the early 1950s. As a programming language, it used a compiler to automatically convert the language into machine code. The first Autocode and its compiler were developed in 1952 for the Mark 1 computer at the University of Manchester; it is considered to be the first compiled high-level programming language.[24][25]

The second autocode was developed for the Mark 1 by R. A. Brooker in 1954 and was called the «Mark 1 Autocode». Brooker also developed an autocode for the Ferranti Mercury in the 1950s in conjunction with the University of Manchester. The version for the EDSAC 2 was devised by D. F. Hartley of the University of Cambridge Mathematical Laboratory in 1961. Known as EDSAC 2 Autocode, it was a straight development from Mercury Autocode adapted for local circumstances and was noted for its object code optimization and source-language diagnostics, which were advanced for the time. A contemporary but separate thread of development, Atlas Autocode, was developed for the University of Manchester Atlas 1 machine.

In 1954, FORTRAN was invented at IBM by John Backus. It was the first widely used high-level general-purpose programming language to have a functional implementation, as opposed to just a design on paper.[26][27] It is still a popular language for high-performance computing[28] and is used for programs that benchmark and rank the world’s fastest supercomputers.[29]

Another early programming language was devised by Grace Hopper in the US, called FLOW-MATIC. It was developed for the UNIVAC I at Remington Rand during the period from 1955 until 1959. Hopper found that business data processing customers were uncomfortable with mathematical notation, and in early 1955, she and her team wrote a specification for an English programming language and implemented a prototype.[30] The FLOW-MATIC compiler became publicly available in early 1958 and was substantially complete in 1959.[31] FLOW-MATIC was a major influence in the design of COBOL, since only it and its direct descendant AIMACO were in actual use at the time.[32]

Refinement

The increased use of high-level languages introduced a requirement for low-level programming languages or system programming languages. These languages, to varying degrees, provide facilities between assembly languages and high-level languages. They can be used to perform tasks that require direct access to hardware facilities but still provide higher-level control structures and error-checking.

The period from the 1960s to the late 1970s brought the development of the major language paradigms now in use:

- APL introduced array programming and influenced functional programming.[33]

- ALGOL refined both structured procedural programming and the discipline of language specification; the «Revised Report on the Algorithmic Language ALGOL 60» became a model for how later language specifications were written.

- Lisp, implemented in 1958, was the first dynamically-typed functional programming language.

- In the 1960s, Simula was the first language designed to support object-oriented programming; in the mid-1970s, Smalltalk followed with the first «purely» object-oriented language.

- C was developed between 1969 and 1973 as a system programming language for the Unix operating system and remains popular.[34]

- Prolog, designed in 1972, was the first logic programming language.

- In 1978, ML built a polymorphic type system on top of Lisp, pioneering statically-typed functional programming languages.

Each of these languages spawned descendants, and most modern programming languages count at least one of them in their ancestry.

The 1960s and 1970s also saw considerable debate over the merits of structured programming, and whether programming languages should be designed to support it.[35] Edsger Dijkstra, in a famous 1968 letter published in the Communications of the ACM, argued that Goto statements should be eliminated from all «higher-level» programming languages.[36]

Consolidation and growth

A small selection of programming language textbooks

The 1980s were years of relative consolidation. C++ combined object-oriented and systems programming. The United States government standardized Ada, a systems programming language derived from Pascal and intended for use by defense contractors. In Japan and elsewhere, vast sums were spent investigating the so-called «fifth-generation» languages that incorporated logic programming constructs.[37] The functional languages community moved to standardize ML and Lisp. Rather than inventing new paradigms, all of these movements elaborated upon the ideas invented in the previous decades.

One important trend in language design for programming large-scale systems during the 1980s was an increased focus on the use of modules or large-scale organizational units of code. Modula-2, Ada, and ML all developed notable module systems in the 1980s, which were often wedded to generic programming constructs.[38]

The rapid growth of the Internet in the mid-1990s created opportunities for new languages. Perl, originally a Unix scripting tool first released in 1987, became common in dynamic websites. Java came to be used for server-side programming, and bytecode virtual machines became popular again in commercial settings with their promise of «Write once, run anywhere» (UCSD Pascal had been popular for a time in the early 1980s). These developments were not fundamentally novel; rather, they were refinements of many existing languages and paradigms (although their syntax was often based on the C family of programming languages).

Programming language evolution continues, in both industry and research. Current directions include security and reliability verification, new kinds of modularity (mixins, delegates, aspects), and database integration such as Microsoft’s LINQ.

Fourth-generation programming languages (4GL) are computer programming languages that aim to provide a higher level of abstraction of the internal computer hardware details than 3GLs. Fifth-generation programming languages (5GL) are programming languages based on solving problems using constraints given to the program, rather than using an algorithm written by a programmer.

Elements

All programming languages have some primitive building blocks for the description of data and the processes or transformations applied to them (like the addition of two numbers or the selection of an item from a collection). These primitives are defined by syntactic and semantic rules which describe their structure and meaning respectively.

Syntax