A perceiver’s job in spoken word recognition is to use the data from their senses to decide which of the hundreds of words they know best fits the context. After 40 years of study, it is generally agreed that we recognize words via an engagement and competition process, with more frequently used terms receiving preference. Modern speech recognition models all use this procedure. However, the specifics may differ.

Explaining Spoken Word Recognition

Listeners with normal hearing can quickly and seemingly effortlessly adjust to various variations in the speech signal and the immediate listening environment. Strong SWR relies on early sensory processing and storage of language into lexical representations. However, the robust character of SWR cannot be fully accounted for by audibility and sensory processing, particularly in compromised listening environments. , researchers provide a background on the subject, cover some key theoretical concerns, and then examine some modern models of SWR. Finally, we highlight some exciting new avenues to pursue and hurdles to overcome, such as the ability of deaf youngsters with cochlear implantation, bilinguals, and elderly persons to understand speech with an accent.

Recently Developed Speech Recognition Models

Word recognition systems function best when they can dependably pick out the word whose lexical representation is most similar to the input representation. Even though this may seem obvious, a recognition system that merely compared the perceptual input to each lexical entry and picked the one with the best fit would be the best way to perform isolated word recognition without the interference of higher-level contextual constraints.

Track Down

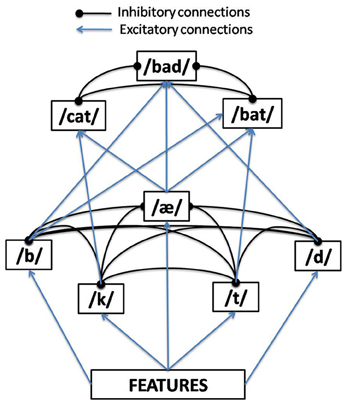

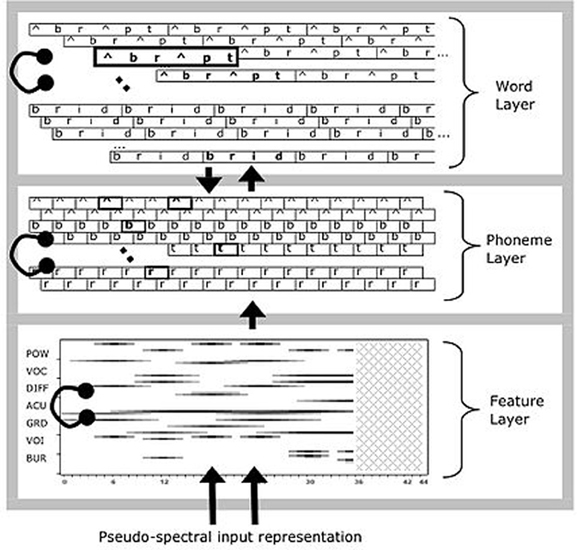

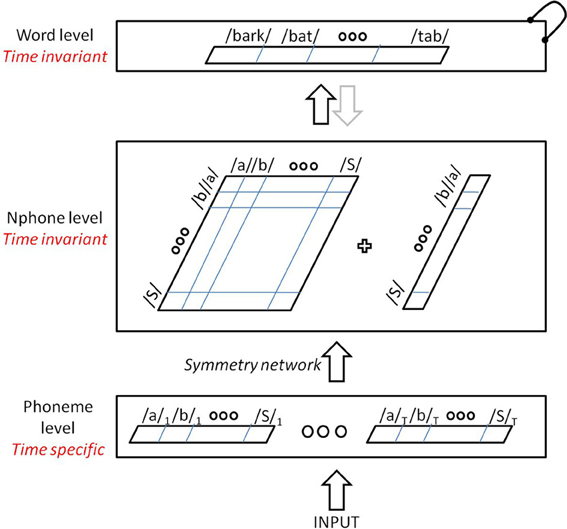

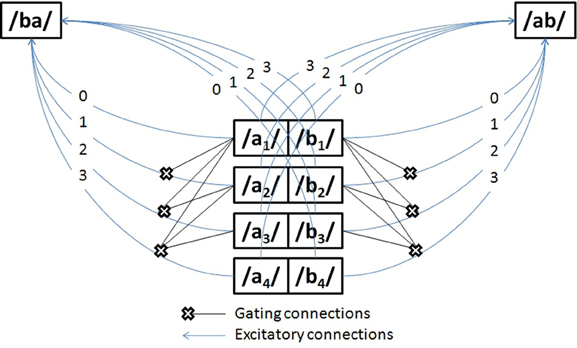

Trace model is a localist fully convolutional model of spoken word recognition based on interactive activation, with three tiers of nodes representing feature representations, phoneme representations, and word representations, respectively. Loyalist versions of word recognition treat allophones, phonemes, and words as discrete units. The processing units in Trace are interconnected by excitatory and inhibitory pathways, respectively, to increase and decrease unit activation in response to incoming stimuli and system activity.

Parsyn

The Parsyn model is a regionalist connectionist architecture with three tiers of linked units: input allophone, pattern allophone, and word. Within a level, connections between units are antagonistic to one another. However, linking respondents’ need to answer units at the design level is helpful in both directions.

Method for Analyzing Cohorts in a Distributed Setting

In the OCM (distributed cohort model), the activation associated with a word is spread among many low-level processors. The speech-based featural input is projected onto the basic semantic and phonological elements. Due to the decentralized nature of the OCM, no intermediary or sublexical representation elements can be found in the OCM. As a bonus, in contrast to the localist models’ reliance on a method of lateral inhibition, the lexical rivalry is depicted as a blending of multiple consistent lexical elements based on bottom-up input.

Activation-Competition Models

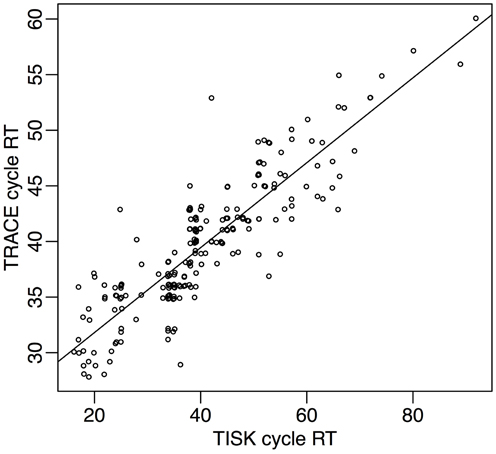

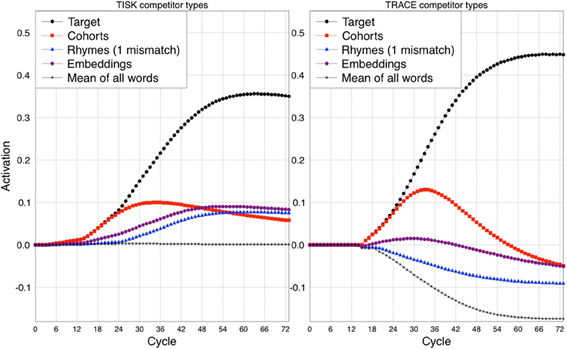

When put into perspective, the new batch of activation-competition systems has rather modest distinctions. According to all, multiple activation and rivalry among form-based lexical components define spoken word recognition. The fundamentals have been established, even though the particulars may differ. Segmentation, vocabulary, the type of lexical feedback, the significance of context, and so on are only a few of the phenomena that the models attempt to explain. Given the fundamental similarities of the existing models, it seems unlikely that these issues would ultimately determine which model should win out.

Referential Variation and Processing

Spoken word processing is significantly affected by subtle differences in the presentation of acoustic stimuli. Pisani (1992) as the first researchers to examine the processing costs associated with talker variability (a kind of indexical variation) Peters examined the differences in the clarity of single-talker and multi-talker transmissions in the presence of background noise. He discovered that one-on-one conversations were consistently easier to understand than group chats.

Phonological Shifts in Allophony

The present state of speech recognition models is inadequate when accounting for individual differences in pronunciation. Scientific studies on how indexical diversity in speech recognition is represented and processed provide weight to our argument. New research on allophonic variance points to gaps in the existing models. Allophonic variance refers to effective passive and acoustic differences among vocal stations belonging to the same phonics category insights into the possible shortcomings of present modeling methodologies have been provided by recent studies of allophonic variance.

Edges Activate Phonetic Counterparts

This finding defies capture by any existing computer model spoken or word recognition. For instance, the discovery that flaps trigger their phonemic counterparts dictates that, at a minimum, both Trace and Shortlist should include an allophonic layer of representation. Allophonic support is unique to PARSYN. On the other hand, PARSYN’s absence of phonemic representations may make it difficult to account for the activation of so. Some mediated access theories may also explain the observation that core representations are engaged. However, these theories need to explain the time course of cognition, specifically why the impacts of representations disappear when answers are fast. Finally, while the DCM may account for cases in which underlying models become deactivated, it will likely need help to emulate cases in which processing is impeded. For the umpteenth time, the current models cannot handle the pressure of variance.

Conclusion

Fundamental complications are presented by variance, requiring a rethinking of our models’ representational systems. New information points to the existence simultaneously as forms that contain both the concrete and the general. Furthermore, we need to imagine systems in which the processing of the particular and the general follows a time course that is predictable and represents the underlying design of the processing system. Last but not least, the next wave of models we develop will need to account for the malleable character of human perception. The adult brain seems capable of fine and frequent tuning in response to external input. Models of recognition that do justice to the subject need to include control conditions that can take into account the ability to adapt to perception, which will undoubtedly have far-reaching consequences for the structure and design of the representational system.

Such accounts of SWR assume that highly detailed stimulus information in the speech signal and listening environment is encoded, processed, and stored by the listener and subsequently becomes an inseparable component of rich and highly detailed lexical representations of spoken words (Port, 2010a, 2010b).

From: Neurobiology of Language, 2016

Spoken Word Recognition

David B. Pisoni, Conor T. McLennan, in Neurobiology of Language, 2016

The most distinctive hallmark of human spoken word recognition (SWR) is its perceptual robustness to the presence of acoustic variability in the transmission and reception of the talker’s linguistic message. Normal-hearing listeners adapt rapidly with little apparent effort to many different sources of variability in the speech signal and their immediate listening environment. Sensory processing and early encoding of speech into lexical representations are critical for robust SWR. However, audibility and sensory processing are not sufficient to account for the robust nature of SWR, especially under degraded listening conditions. In this chapter, we describe the historical roots of the field, present a selective review of the principle theoretical issues, and then consider several contemporary models of SWR. We conclude by identifying promising new directions and future challenges, including the perception of foreign accented speech, SWR by deaf children with cochlear implants, bilinguals, and older adults.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B9780124077942000201

Computational Psycholinguistics

R. Klabunde, in International Encyclopedia of the Social & Behavioral Sciences, 2001

3.2 Computer Models of Language Comprehension

Since comprehension begins with the recognition of words, this section deals first with models of spoken word recognition. In spoken word recognition, the models proposed must answer two main questions. First, they must describe how the sensory input is mapped onto the lexicon, and second, what the processing units are in this process.

The Trace model (McClelland and Elman 1986) is an interactive model that simulates how a word will be identified from a continuous speech signal. The problem with this task is that continuity of the signal does not provide clear hints about where a word begins and where it ends. By means of activation spreading through a network that consists of three layers (features, phonemes, and words), the system generates competitor forms, converging to the ultimately selected word. Competition is realized by inhibitory links between candidates.

The Shortlist model (Norris 1994) is based on a modular architecture with two distinct processing stages. It uses spreading activation as well, but in a strictly bottom-up way. Contrary to Trace, it generates a restricted set of lexical candidates during the first stage. During the second stage, the best fitting words are linked via an activation network.

Both models account for experimental data on the time course of word recognition. Assumptions on the direction of activation flow and its nature lead to several differing predictions, but the main different prediction concerns lexical activation. While Trace assumes that a very large number of items are activated, Shortlist assumes that a much smaller set of candidates is available so that the recognition of words beginning at different time points is explained differently.

The last model is a model of sentence processing. In sentence processing, one of the fundamental questions is why certain sentences receive a preferred syntactic structure and semantic interpretation.

The Sausage Machine (Fodor and Frazier 1980) is a parsing model that assumes two stages in parsing with both stages having a limited working capacity. The original idea behind the model is to explain preferences in syntactic processing solely on the architectural basis by means of the limitations in the working memories.

The Sausage Machine is a quasi-deterministic model, because one syntactic structure for each sentence is generated. Only if the analysis turns out to be wrong, is a reanalysis performed. Since reanalyzing a sentence is a time consuming process, the system tries to avoid this whenever possible. The model accounts for garden path effects and the difficulty in understanding multiple center embedded sentences (like ‘the house the man sold burnt down’). Furthermore, the model explains interpretation preferences by means of the limitation in working memories. However, it is now understood that the architecture of a system cannot be the only factor that is responsible for processing preferences, but additional parsing principles must be assumed (Wanner 1980). Newer computational models of sentence processing show that an explanation of several phenomena in sentence processing requires the early check of partial syntactic structures with lexical and semantic knowledge (Hemforth 1993).

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B0080430767005428

The Temporal Lobe

Delaney M. Ubellacker, Argye E. Hillis, in Handbook of Clinical Neurology, 2022

Conclusions

Lesion studies show that specific regions of the left (or bilateral) temporal cortex are associated with distinct impairments in object recognition, spoken word comprehension, naming, reading, and spelling. These areas are thought to be critical nodes in networks of the brain that link various forms of input to meaning and to output. Although the nodes work together as neural networks, damage to different nodes results in distinct language deficits. Studies of functional and structural imaging are beginning to reveal how these cortical nodes and associated white matter tracts work together to support language functions.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B9780128234938000134

Word Recognition

J. Zevin, in Encyclopedia of Neuroscience, 2009

Superior Temporal Gyrus and Superior Temporal Sulcus

Superior temporal gyrus (STG) is the site of auditory association cortex (and a site of multisensory integration) and thus necessarily plays some role in spoken word recognition. Evidence that its role extends beyond that of higher-level perceptual processing was found as early as 1871 by Karl Wernicke. He discovered that lesions in the posterior portion of the left STG were associated with the loss of the ability to comprehend and produce spoken words. More recently, it has been found that this phenomenon of ‘Wernicke’s aphasia’ is observed to result from brain damage to a broad variety of sites; furthermore, damage to traditional Wernicke’s area does not always result in aphasic symptoms.

Neuroimaging studies of healthy subjects have found evidence for a role in processing of word meanings for both anterior and posterior STG as well as superior temporal sulcus (STS; the sulcus that divides the STG from the middle temporal gyrus). The STS, in particular, responds more strongly to interpretable speech than to a range of stimuli matched on lower level acoustic dimensions. The STS is also a likely site of integration between print and sound in visual word recognition.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B9780080450469018817

The Neurobiology of Lexical Access

Matthew H. Davis, in Neurobiology of Language, 2016

44.5 Conclusion

This chapter has presented a tripartite account of the brain regions that support lexical processing of spoken words. The functional goals of three temporal lobe systems have been introduced in the context of key computational challenges associated with spoken word recognition. First, listeners must integrate current speech sounds with previously heard speech in recognizing words. This motivates a hierarchy of representations that temporally integrate speech signals over time localized to anterior regions of the STG. A second challenge is that for listeners to repeat degraded words correctly requires that they recover the speakers’ intended articulatory gestures. The tripartite account localizes this process to auditory-motor links mediated by TPJ regions and proposes that these links play a key role in supporting robust identification and perceptual learning of degraded spoken words. The third challenge relates to extracting meaning from spoken words, which is proposed to be supported by cortical areas in posterior ITG and surrounding regions (MTG and fusiform). Despite the three-way functional segregation that is at the heart of this triparate account, this chapter also acknowledges that reliable recognition of familiar words, optimally efficient processing of incoming speech, and learning of novel spoken words all depend on combining information between these processing pathways. This is achieved through convergent connectivity within the lateral temporal lobe, in frontal or medial temporal regions, and through top-down predictions mapped back to peri-auditory regions of the STG. A key goal for future research must be to specify these convergent mechanisms in more detail and derive precise computational proposals for how the tripartite lexical system supports the everyday demands of human speech comprehension.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B9780124077942000444

Language in Aged Persons

E.A.L. Stine-Morrow, M.C. Shake, in Encyclopedia of Neuroscience, 2009

Word processing

Vocabulary often shows an increase with age, particularly among those who read regularly. Word recognition appears to be highly resilient through late life. For example, word frequency effects (i.e., faster processing for more familiar words) in reading and word naming are typically at least as large for older adults as they are for young. Sublexical features (e.g., neighborhood density), however, may have a smaller effect on processing time on older readers, suggesting that accumulated experience with literacy may increase the efficiency of orthographic decoding.

By contrast, declines in auditory processing can make spoken word recognition more demanding so that more acoustic information is needed to isolate the lexical item. Such effects may not merely disrupt encoding of the surface form but also tax working memory resources that would otherwise be used to construct a representation of the text’s meaning. For example, when elders with normal or impaired hearing listen to a word list and are interrupted periodically to report the last word heard, they may show negligible differences. However, if asked to report the last three words, hearing-impaired elders will likely show deficits. The explanation for such a provocative finding is that the hearing-impaired elders overcome a sensory loss at some attentional cost so as to exert a toll on semantic and elaborative processes that enhance memory. Presumably, the same mechanisms would operate in ordinary language processing.

At the same time, there is evidence that older adults can take differential advantage of context in the recognition of both spoken and written words, especially in noisy environments. Semantic processes at the lexical level also appear to be largely preserved. Semantic priming effects (i.e., facilitation in word processing by prior exposure to a related word) are typically at least as large among older adults as among the young. Also, in the arena of neurocognitive function, evoked potentials show similar lexical effects for young and old – a reduced N400 component for related words relative to unrelated controls. One area of difficulty that older adults may have in word processing is in deriving the meaning of novel lexical items from context, with research showing that older adults are likely to infer more generalized and imprecise meanings relative to the young – a difference that can be largely accounted for in terms of working memory deficits.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B9780080450469018726

Word Recognition, Cognitive Psychology of

M.A. Moreno, G.C. van Orden, in International Encyclopedia of the Social & Behavioral Sciences, 2001

2 Word Recognition as Information Processing

The logic of the additive factors method also fits the metaphor of cognition as information processing. For the chain of dominoes, substitute the guiding analogy of a flow chart of information processing, like unidirectional flow charts of computer programs. Information flows from input (stimulus) to output (behavior) through a sequence of cognitive components. In word recognition, input representations from a sensory process—visual or auditory features of a word—are transformed into an output representation—the identity of the word—that, in turn, becomes the input representation for a component downstream (i.e., a component of response production or sentence processing). In this tradition, empirical studies of word recognition pertain to the structure and function of the lexicon. The lexicon is a memory component, a mental dictionary, containing representations of the meanings, spellings, pronunciations, and syntactic functions of words. Structure refers to how the word entries are organized, and function refers to how words are accessed in, or retrieved from, the lexicon. Two seminal findings illustrate the distinction: semantic priming effects and word frequency effects. Both effects are found in lexical decision performance.

2.1 The Lexical Decision Task

In the lexical decision task, a person is presented, on each trial, with a target string of letters, and must judge whether the target string is a correctly spelled word in English (or some other reference language). Some trials are catch trials, which present nonwords such as ‘glurp.’ (One may also present words and nonwords auditorally, to examine spoken word recognition.) The participant presses a ‘word’ key to indicate a word and a ‘nonword’ key otherwise. The experimenter takes note of the response time, from when the target stimulus appeared until the response key is pressed, and whether the response was correct. Response time and accuracy are the performance measures.

2.2 Semantic Priming and the Structure of the Lexicon

Word pairs with related meanings, such as ‘doctor’ and ‘nurse’ or ‘bread’ and ‘butter,’ produce semantic priming effects. Semantic priming was discovered by David Meyer and Roger Schvaneveldt, working independently (they chose to report their findings together). Lexical decision performance to a word is improved by prior presentation of its semantically related word. Prior recognition of ‘doctor,’ as a word, facilitates subsequent recognition of ‘nurse’; lexical decisions to ‘nurse’ are faster and more accurate, compared with a control condition. This finding is commonly interpreted to mean that semantically related words are structurally connected in the lexicon, such that retrieval of one inevitably leads to retrieval of the other (in part or in whole).

2.3 Word Frequency and the Function of Lexical Access

Word frequency is estimated using frequency counts. The occurrence of each word, per million, is counted in large samples of text. Lexical decision performance is correlated with word frequency. Words that occur more often in text (or in speech) are recognized faster and more accurately than words that occur infrequently. This finding is interpreted in a variety of ways. The common theme is that lexical access functions in a manner that favors high-frequency words. In one classical account, proposed by John Morton, access to a lexical entry is via a threshold. Word features may sufficiently activate a lexical entry, to cross its activation threshold, and thus make that entry available. Common, high-frequency words have lower threshold values than less common words. In a different classical account, proposed by Kenneth Forster, the lexicon is searched in order of word frequency, beginning with high frequency words.

2.4 Challenges to the Information Processing Approach

Additive interaction effects are almost never observed in word recognition experiments, and, while it is not possible to manipulate all word factors simultaneously in one experiment, it is possible to trace chains of nonadditive interactions across published experiments that preclude the assignment of any factors to distinct components. Moreover, all empirical phenomena of word recognition appear to be conditioned by task, task demands, and even the reference language, as the examples that follow illustrate.

The same set of words, which produce a large word frequency effect in the lexical decision task, produce a reduced or statistically unreliable word frequency effect in naming and semantic categorization tasks. All these tasks would seem to include word recognition, but they do not yield the same word recognition effects. Also, within the lexical decision task, itself, it is possible to modulate the word frequency effect by making the nonwords more or less word-like (and, in turn, to modulate a nonadditive interaction effect between word frequency and semantic priming). Across languages, Hebrew produces a larger word frequency (familiarity) effect than English, and English than Serbo–Croation.

Consider the previous examples together, within the guidelines of additive factors logic. Word recognition factors cannot be individuated from each other, and they cannot be individuated from the context of their occurrence (task, task demands, and language). The limitations of additive factors method are well known. Because additivity is never consistently observed, we have no empirical basis for individualizing cognitive components. The de facto practice in cognitive psychology is to assume that laboratory tasks and manipulations may differ from each other by the causal equivalent of one component (‘one domino’). But how does one know which tasks or manipulations differ by exactly one component? We require a priori knowledge of cognitive components, and which components are involved in which laboratory tasks, to know reliably which or how many components task conditions entail. Notice this circularity, pointed out by Robert Pachella: the goal is to discover cognitive components in observed laboratory performance, but the method requires prior knowledge of the self same components.

Despite these problems, most theorists share the intuition that a hypothetical component of word recognition exists. When intuitions diverge, however, there may be no way to reconcile differences. Theorists who assume that reading is primarily an act of visual perception discover a visual component of word recognition; theorists who assume that reading is primarily a linguistic process discover a linguistic component of word recognition, in the same performance phenomena. Repeated contradictory discoveries, in the empirical literature, have lead to a vast debate concerning which task conditions provide an unambiguous view of word recognition in operation. The debate hinges on exclusionary criteria that may correctly exclude task effects and bring word recognition into clearer focus. Otherwise, inevitably, one laboratory’s word recognition effect is another laboratory’s task artifact.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B0080430767015539

Language and Aging☆

Matthew C. Shake, Elizabeth A.L. Stine-Morrow, in Reference Module in Neuroscience and Biobehavioral Psychology, 2017

Word Processing

Vocabulary often shows an increase with age, particularly among those with well-developed and sustained literacy practices. Word recognition appears to be highly resilient through late life. For example, word frequency effects (i.e., faster processing for more familiar words) in reading and word naming are typically at least as large for older adults as they are for young. Sublexical features (e.g., neighborhood density, a measure of how similar a word is to other words in the language), however, may have a smaller effect on processing time on older readers, suggesting that accumulated experience with literacy may increase the efficiency of orthographic decoding.

By contrast, declines in auditory processing can make spoken word recognition more demanding so that more acoustic information is needed to isolate the lexical item. Such effects may not merely disrupt encoding of the surface form but also tax working memory resources that would otherwise be used to construct a representation of the text’s meaning. For example, when elders with normal or impaired hearing listen to a word list and are interrupted periodically to report the last word heard, they may show negligible differences. However, if asked to report the last three words, hearing-impaired elders will likely show deficits. The explanation for such a provocative finding is that the hearing-impaired elders overcome a sensory loss at some attentional cost so as to exert a toll on semantic and elaborative processes that enhance memory. Presumably, the same mechanisms would operate in ordinary language processing.

At the same time, there is behavioral evidence that older adults can take differential advantage of context in the recognition of both spoken and written words, especially in noisy environments. Semantic processes at the lexical level also appear to be largely preserved. Semantic priming effects (i.e., facilitation in word processing by prior exposure to a related word) are typically at least as large among older adults as among the young. Also, in the arena of neurocognitive function, electrophysiological data show that some kinds of stimulus-evoked changes in brain potentials (known as event-related brain potentials, or ERPs) are similar in young and old for words presented in isolation; for example, a reduced “N400 component” (a negative shift in waveform amplitude occurring approximately 400 ms after word presentation) for predictable relative to unpredictable words. However, older adults tend to show this effect to a much reduced degree, suggesting that activation of meaning features as the text unfolds may be more constrained among older adults relative to the young. Older adults with higher verbal fluency, on the other hand, appear better able to engage in anticipatory language processing as younger adults do. A puzzle that remains is to reconcile the electrophysiological data suggesting that older adults are less likely to use predictive processing to isolate words from context with behavioral data showing enhanced contextual facilitation with age.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B9780128093245018897

Processing Tone Languages

Jackson T. Gandour, Ananthanarayan Krishnan, in Neurobiology of Language, 2016

87.4 Tonal Versus Segmental Units

Linguistic theory informs us that the onset and rime of a syllable contain segmental units. They differ in their duration and the order in which their information unfolds in time over the duration of a syllable. Rimes and tones, however, overlap substantially in the order in which their information unfolds in time. Tones are suprasegmental; they are mapped onto (morpho)syllables.

Depending on task demands, tones elicit effects that differ from those of segments. The time course and amplitude of N400 (a negative component associated with lexical semantic processing that peaks approximately 400 ms after the auditory stimulus) were the same for consonant, rime, and tone violations in Cantonese (12: Schirmer, Tang, Penney, Gunter, & Chen, 2005). Their findings were replicated in Mandarin, but syllable violations elicited an earlier and stronger N400 than tone (17: Malins & Joanisse, 2012; cf. 16: Zhao, Guo, Zhou, & Shu, 2011). This separation of tone from its carrier syllable was also reported in an auditory verbal recognition paradigm in which subjects selectively attended to either the syllable or the tone (10: Li et al., 2003). In a spoken word recognition paradigm, tones elicited larger late positive event-related potential (ERP) component than vowels (19: Hu, Gao, Ma, & Yao, 2012). In a left brain-damaged Chinese aphasic, vowels were spared and tones were severely impaired (11: Liang & van Heuven, 2004). These findings together support a functional dissociation of tonal and segmental information.

It is well-known that hemispheric specialization may be driven by differences in acoustic features associated with segments. The question is whether hemispheric specialization for tone can be dissociated from segments. Tones induce greater activation in the right posterior middle frontal gyrus (MFG) for English speakers when compared with consonants or rimes (9: Gandour et al., 2003). This area has been implicated in pitch perception (Zatorre et al., 2002). Their increased activation is presumably due to their lack of experience with Chinese tones. Using a tone identification task, the right IFG was found to be activated in English learners of Mandarin tone only after training (Wang, Sereno, Jongman, & Hirsch, 2003). This finding demonstrates early cortical effects of learning a second language that involve recruitment of cortical regions implicated in tonal processing. Focusing on hemispheric specialization for tone production (14: Liu et al., 2006), Mandarin tones elicited more activity in the right IFG than vowels. This rightward preference for tonal processing converges more broadly with the role of the RH in mediating speech prosody (Friederici & Alter, 2004; Glasser & Rilling, 2008; Wildgruber, Ackermann, Kreifelts, & Ethofer, 2006).

As measured by the mismatch negativity (MMN), a fronto-centrally distributed cortical ERP that indexes a change in auditory detection, it is well-known that language experience may influence the automatic, involuntary processing of consonants and vowels (Naatanen, 2001, review). Therefore, one would expect language experience to modulate the automatic cortical processing of lexical tones. Tones evoked stronger MMN in the RH relative to the LH, whereas consonants produced the opposite pattern (13: Luo et al., 2006). An fMRI study showed that Mandarin tones, relative to consonants or rimes, elicited increased activation in right frontoparietal areas (15: Li et al., 2010). Taken together, these data suggest the balance of hemispheric specialization may be modulated by distinct acoustic features associated with tonal as compared with segmental units.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B9780124077942000870

Neural Networks and Related Statistical Latent Variable Models

M.A. Tanner, R.A. Jacobs, in International Encyclopedia of the Social & Behavioral Sciences, 2001

1 Introduction

This article presents statistical features associated with artificial neural networks, as well as two other related models, namely mixtures-of-experts and hidden Markov models.

Historically, the study of artificial neural networks was motivated by attempts to understand information processing in biological nervous systems (McCulloch and Pitts 1943). Recognizing that the mammalian brain consists of very complex webs of interconnected neurons, artificial neural networks are built from densely interconnected simple processing units, where each unit receives a number of inputs and produces a single numerical output. However, while artificial neural networks have been motivated by biological systems, there are many aspects of biological systems not adequately modeled by artificial neural networks. Indeed, many aspects of artificial neural networks are inconsistent with biological systems. Though the brain metaphor provides a useful source of inspiration, the viewpoint adopted here is that artificial neural networks are a general class of parameterized statistical models consisting of interconnected processing units.

Artificial neural networks provide a general and practical approach for approximating real-valued (regression problems) and discrete-valued (classification problems) mappings between covariate and response variables. These models, combined with simple methods of estimation (i.e., learning algorithms), have proven highly successful in such diverse areas as handwriting recognition (LeCun et al. 1989), spoken word recognition (Lang et al. 1990), face recognition (Cottrell 1990), text-to-speech translation (Sejnowski and Rosenberg 1986), and autonomous vehicle navigation (Pomerleau 1993).

An artificial neural network consists of multiple sets of units: one set of units corresponds to the covariate variables, a second set of units corresponds to the response variables, and a third set of units, referred to as hidden units, corresponds to latent variables. The hidden units (or, more precisely, their associated hidden or latent variables) mediate the nonlinear mapping from covariate to response variables. Based on observed pairings of covariate and response variables, the back-propagation algorithm is commonly used to estimate the parameters, called weights, of the hidden units.

Some mixture models have statistical structures that resemble those of artificial neural networks. Mixture models are multiple-component models in which each observable data item is generated by one, and only one, component of the model. Like artificial neural networks, mixture models also contain latent or hidden variables. The hidden variables of a mixture model indicate the component that generated each data item.

Mixtures-of-experts (ME) models combine simple conditional probability distributions, such as unimodal distributions, in order to form a complex (e.g., multimodal) conditional distribution of the response variables given the covariate variables. They combine properties of generalized linear models (McCullagh and Nelder 1989) with those of mixture models. Like generalized linear models, they are used to model the relationship between covariate and response variables. Typical applications include nonlinear regression and binary or multiway classification. However, unlike standard generalized linear models they assume that the conditional distribution of the responses is a finite mixture distribution. Mixtures-of-experts are ‘piecewise estimators’ in the sense that different mixture components summarize the relationship between covariate and response variables for different subsets of the observable data items. The subsets do not, however, have hard boundaries; a data item might simultaneously be a member of multiple subsets. Because ME assume that the probability of the response variables given the covariate variables is a finite mixture distribution, they provide a motivated alternative to nonparametric models such as artificial neural networks, and provide a richer class of distributions than standard generalized linear models.

Hidden Markov models (HMMs), in contrast with ME models, do not map covariate to response variables; instead, they are used to summarize time-series data. To motivate HMMs, consider the following scenario (Rabiner 1989). A person has three coins. At each time step, she performs two actions. First, she randomly selects a coin by sampling from a multinomial distribution. The parameters of this distribution are a function of the coin that was selected at the previous time step. Next, the selected coin is flipped in order to produce an outcome. Each coin has a different probability of producing either a head or a tail. A second person only observes the sequence of outcomes. Based on this sequence, the person constructs a statistical model of the underlying process that generated the sequence. A useful statistical model for this scenario is a hidden Markov model.

HMMs are mixture models whose components are typically members of the exponential family of distributions. It is assumed that each data item was generated by one, and only one, of the components. The dependencies among the data items are captured by the fact that HMMs include temporal dynamics which govern the transition from one component to another at successive time steps. More specifically, HMMs differ from conventional mixture models in that the selection of the mixture component at time step t is based upon a multinomial distribution whose parameters depend on the component that was selected at time step t−1. HMMs are useful for modeling time series data because they explicitly model the transitions between mixture components, or states as they are known in the engineering literature. An advantage of HMMs is that they can model data that violate the stationarity assumptions characteristic of many other types of time series models. The states of the HMM would be Bernoulli distributions in the above scenario. More commonly HMMs are used to model sequences of continuous signals, such as speech data. The states of an HMM would be Gaussian distributions in this case.

Artificial neural networks and mixture models, such as mixtures-of-experts and hidden Markov models, can be seen as instances of two ends of a continuum. Artificial neural networks are an example of a ‘multiple-cause’ model. In a multiple-cause model, each data item is a function of multiple hidden variables. For instance, a response variable in a neural network is a function of all hidden variables. An advantage of multiple-cause models is that they are representationally efficient. If each hidden variable has a Bernoulli distribution, for example, and if one seeks N bits of information about the underlying state of a data item, then a model with N latent variables is sufficient. In the case when there is a linear relationship between the latent and observed variables, and when the variables are Gaussian distributed, such as is the case with factor models, then the equations for statistical inference (i.e., the determination of a probability distribution for the hidden variables given the values of the observed variables) are computationally efficient. However, a disadvantage of multiple-cause models is that inference tends to be computationally intractable when there is a nonlinear relationship between latent and observed variables, as is frequently the case with neural networks.

Consequently, researchers also consider ‘single-cause’ models, such as mixture models. Mixture models are regarded as instances of single-cause models due to their assumption that each observable data item was generated by one, and only one, component of the model. An advantage of this assumption is that it leads to a set of equations for performing statistical inference that is computationally efficient. A disadvantage of this assumption is that it makes mixture models inefficient from a representational viewpoint. Consider, for example, a two-component mixture model where each component is a Gaussian distribution. This model provides one bit of information regarding the underlying state of a data item (either the data item was sampled from the first Gaussian distribution or it was sampled from the second Gaussian distribution). Suppose that one seeks N bits of information about the underlying state of a data item. A mixture model requires 2N components in order to provide this amount of information. That is, as the amount of information grows linearly, the number of required components grows exponentially.

As discussed at the end of this article, a recent trend in the research literature is to consider novel statistical models that are not purely single-cause and are not purely multiple-cause, but rather combine aspects of both.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B0080430767004307

What is speech recognition?

Speech recognition, or speech-to-text, is the ability of a machine or program to identify words spoken aloud and convert them into readable text. Rudimentary speech recognition software has a limited vocabulary and may only identify words and phrases when spoken clearly. More sophisticated software can handle natural speech, different accents and various languages.

Speech recognition uses a broad array of research in computer science, linguistics and computer engineering. Many modern devices and text-focused programs have speech recognition functions in them to allow for easier or hands-free use of a device.

Speech recognition and voice recognition are two different technologies and should not be confused:

- Speech recognition is used to identify words in spoken language.

- Voice recognition is a biometric technology for identifying an individual’s voice.

How does speech recognition work?

Speech recognition systems use computer algorithms to process and interpret spoken words and convert them into text. A software program turns the sound a microphone records into written language that computers and humans can understand, following these four steps:

- analyze the audio;

- break it into parts;

- digitize it into a computer-readable format; and

- use an algorithm to match it to the most suitable text representation.

Speech recognition software must adapt to the highly variable and context-specific nature of human speech. The software algorithms that process and organize audio into text are trained on different speech patterns, speaking styles, languages, dialects, accents and phrasings. The software also separates spoken audio from background noise that often accompanies the signal.

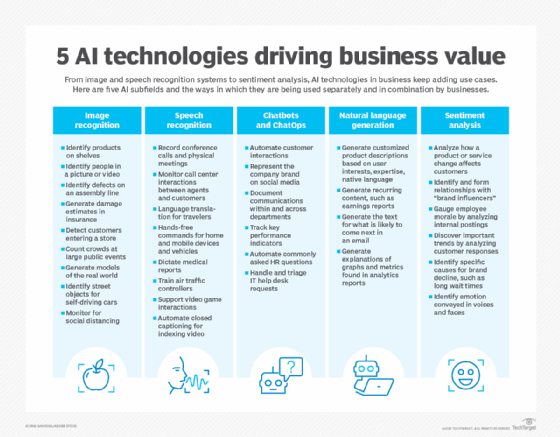

To meet these requirements, speech recognition systems use two types of models:

- Acoustic models. These represent the relationship between linguistic units of speech and audio signals.

- Language models. Here, sounds are matched with word sequences to distinguish between words that sound similar.

What applications is speech recognition used for?

Speech recognition systems have quite a few applications. Here is a sampling of them.

Mobile devices. Smartphones use voice commands for call routing, speech-to-text processing, voice dialing and voice search. Users can respond to a text without looking at their devices. On Apple iPhones, speech recognition powers the keyboard and Siri, the virtual assistant. Functionality is available in secondary languages, too. Speech recognition can also be found in word processing applications like Microsoft Word, where users can dictate words to be turned into text.

Education. Speech recognition software is used in language instruction. The software hears the user’s speech and offers help with pronunciation.

Customer service. Automated voice assistants listen to customer queries and provides helpful resources.

Healthcare applications. Doctors can use speech recognition software to transcribe notes in real time into healthcare records.

Disability assistance. Speech recognition software can translate spoken words into text using closed captions to enable a person with hearing loss to understand what others are saying. Speech recognition can also enable those with limited use of their hands to work with computers, using voice commands instead of typing.

Court reporting. Software can be used to transcribe courtroom proceedings, precluding the need for human transcribers.

Emotion recognition. This technology can analyze certain vocal characteristics to determine what emotion the speaker is feeling. Paired with sentiment analysis, this can reveal how someone feels about a product or service.

Hands-free communication. Drivers use voice control for hands-free communication, controlling phones, radios and global positioning systems, for instance.

What are the features of speech recognition systems?

Good speech recognition programs let users customize them to their needs. The features that enable this include:

- Language weighting. This feature tells the algorithm to give special attention to certain words, such as those spoken frequently or that are unique to the conversation or subject. For example, the software can be trained to listen for specific product references.

- Acoustic training. The software tunes out ambient noise that pollutes spoken audio. Software programs with acoustic training can distinguish speaking style, pace and volume amid the din of many people speaking in an office.

- Speaker labeling. This capability enables a program to label individual participants and identify their specific contributions to a conversation.

- Profanity filtering. Here, the software filters out undesirable words and language.

What are the different speech recognition algorithms?

The power behind speech recognition features comes from a set of algorithms and technologies. They include the following:

- Hidden Markov model. HMMs are used in autonomous systems where a state is partially observable or when all of the information necessary to make a decision is not immediately available to the sensor (in speech recognition’s case, a microphone). An example of this is in acoustic modeling, where a program must match linguistic units to audio signals using statistical probability.

- Natural language processing. NLP eases and accelerates the speech recognition process.

- N-grams. This simple approach to language models creates a probability distribution for a sequence. An example would be an algorithm that looks at the last few words spoken, approximates the history of the sample of speech and uses that to determine the probability of the next word or phrase that will be spoken.

- Artificial intelligence. AI and machine learning methods like deep learning and neural networks are common in advanced speech recognition software. These systems use grammar, structure, syntax and composition of audio and voice signals to process speech. Machine learning systems gain knowledge with each use, making them well suited for nuances like accents.

What are the advantages of speech recognition?

There are several advantages to using speech recognition software, including the following:

- Machine-to-human communication. The technology enables electronic devices to communicate with humans in natural language or conversational speech.

- Readily accessible. This software is frequently installed in computers and mobile devices, making it accessible.

- Easy to use. Well-designed software is straightforward to operate and often runs in the background.

- Continuous, automatic improvement. Speech recognition systems that incorporate AI become more effective and easier to use over time. As systems complete speech recognition tasks, they generate more data about human speech and get better at what they do.

What are the disadvantages of speech recognition?

While convenient, speech recognition technology still has a few issues to work through. Limitations include:

- Inconsistent performance. The systems may be unable to capture words accurately because of variations in pronunciation, lack of support for some languages and inability to sort through background noise. Ambient noise can be especially challenging. Acoustic training can help filter it out, but these programs aren’t perfect. Sometimes it’s impossible to isolate the human voice.

- Speed. Some speech recognition programs take time to deploy and master. The speech processing may feel relatively slow.

- Source file issues. Speech recognition success depends on the recording equipment used, not just the software.

The takeaway

Speech recognition is an evolving technology. It is one of the many ways people can communicate with computers with little or no typing. A variety of communications-based business applications capitalize on the convenience and speed of spoken communication that this technology enables.

Speech recognition programs have advanced greatly over 60 years of development. They are still improving, fueled in particular by AI.

Learn more about the AI-powered business transcription software in this Q&A with Wilfried Schaffner, chief technology officer of Speech Processing Solutions.

This was last updated in September 2021

Continue Reading About speech recognition

- How can speech recognition technology support remote work?

- Automatic speech recognition may be better than you think

- Speech recognition use cases enable touchless collaboration

- Automated speech recognition gives CX vendor an edge

- Speech API from Mozilla’s Web developer platform

Dig Deeper on Customer service and contact center

-

Siri

By: Erica Mixon

-

natural language processing (NLP)

By: Ben Lutkevich

-

voice recognition (speaker recognition)

By: Alexander Gillis

-

interactive voice response (IVR)

By: Karolina Kiwak

Speech recognition is an interdisciplinary subfield of computer science and computational linguistics that develops methodologies and technologies that enable the recognition and translation of spoken language into text by computers with the main benefit of searchability. It is also known as automatic speech recognition (ASR), computer speech recognition or speech to text (STT). It incorporates knowledge and research in the computer science, linguistics and computer engineering fields. The reverse process is speech synthesis.

Some speech recognition systems require «training» (also called «enrollment») where an individual speaker reads text or isolated vocabulary into the system. The system analyzes the person’s specific voice and uses it to fine-tune the recognition of that person’s speech, resulting in increased accuracy. Systems that do not use training are called «speaker-independent»[1] systems. Systems that use training are called «speaker dependent».

Speech recognition applications include voice user interfaces such as voice dialing (e.g. «call home»), call routing (e.g. «I would like to make a collect call»), domotic appliance control, search key words (e.g. find a podcast where particular words were spoken), simple data entry (e.g., entering a credit card number), preparation of structured documents (e.g. a radiology report), determining speaker characteristics,[2] speech-to-text processing (e.g., word processors or emails), and aircraft (usually termed direct voice input).

The term voice recognition[3][4][5] or speaker identification[6][7][8] refers to identifying the speaker, rather than what they are saying. Recognizing the speaker can simplify the task of translating speech in systems that have been trained on a specific person’s voice or it can be used to authenticate or verify the identity of a speaker as part of a security process.

From the technology perspective, speech recognition has a long history with several waves of major innovations. Most recently, the field has benefited from advances in deep learning and big data. The advances are evidenced not only by the surge of academic papers published in the field, but more importantly by the worldwide industry adoption of a variety of deep learning methods in designing and deploying speech recognition systems.

HistoryEdit

The key areas of growth were: vocabulary size, speaker independence, and processing speed.

Pre-1970Edit

- 1952 – Three Bell Labs researchers, Stephen Balashek,[9] R. Biddulph, and K. H. Davis built a system called «Audrey»[10] for single-speaker digit recognition. Their system located the formants in the power spectrum of each utterance.[11]

- 1960 – Gunnar Fant developed and published the source-filter model of speech production.

- 1962 – IBM demonstrated its 16-word «Shoebox» machine’s speech recognition capability at the 1962 World’s Fair.[12]

- 1966 – Linear predictive coding (LPC), a speech coding method, was first proposed by Fumitada Itakura of Nagoya University and Shuzo Saito of Nippon Telegraph and Telephone (NTT), while working on speech recognition.[13]

- 1969 – Funding at Bell Labs dried up for several years when, in 1969, the influential John Pierce wrote an open letter that was critical of and defunded speech recognition research.[14] This defunding lasted until Pierce retired and James L. Flanagan took over.

Raj Reddy was the first person to take on continuous speech recognition as a graduate student at Stanford University in the late 1960s. Previous systems required users to pause after each word. Reddy’s system issued spoken commands for playing chess.

Around this time Soviet researchers invented the dynamic time warping (DTW) algorithm and used it to create a recognizer capable of operating on a 200-word vocabulary.[15] DTW processed speech by dividing it into short frames, e.g. 10ms segments, and processing each frame as a single unit. Although DTW would be superseded by later algorithms, the technique carried on. Achieving speaker independence remained unsolved at this time period.

1970–1990Edit

- 1971 – DARPA funded five years for Speech Understanding Research, speech recognition research seeking a minimum vocabulary size of 1,000 words. They thought speech understanding would be key to making progress in speech recognition, but this later proved untrue.[16] BBN, IBM, Carnegie Mellon and Stanford Research Institute all participated in the program.[17][18] This revived speech recognition research post John Pierce’s letter.

- 1972 – The IEEE Acoustics, Speech, and Signal Processing group held a conference in Newton, Massachusetts.

- 1976 – The first ICASSP was held in Philadelphia, which since then has been a major venue for the publication of research on speech recognition.[19]

During the late 1960s Leonard Baum developed the mathematics of Markov chains at the Institute for Defense Analysis. A decade later, at CMU, Raj Reddy’s students James Baker and Janet M. Baker began using the Hidden Markov Model (HMM) for speech recognition.[20] James Baker had learned about HMMs from a summer job at the Institute of Defense Analysis during his undergraduate education.[21] The use of HMMs allowed researchers to combine different sources of knowledge, such as acoustics, language, and syntax, in a unified probabilistic model.

- By the mid-1980s IBM’s Fred Jelinek’s team created a voice activated typewriter called Tangora, which could handle a 20,000-word vocabulary[22] Jelinek’s statistical approach put less emphasis on emulating the way the human brain processes and understands speech in favor of using statistical modeling techniques like HMMs. (Jelinek’s group independently discovered the application of HMMs to speech.[21]) This was controversial with linguists since HMMs are too simplistic to account for many common features of human languages.[23] However, the HMM proved to be a highly useful way for modeling speech and replaced dynamic time warping to become the dominant speech recognition algorithm in the 1980s.[24]

- 1982 – Dragon Systems, founded by James and Janet M. Baker,[25] was one of IBM’s few competitors.

Practical speech recognitionEdit

The 1980s also saw the introduction of the n-gram language model.

- 1987 – The back-off model allowed language models to use multiple length n-grams, and CSELT[26] used HMM to recognize languages (both in software and in hardware specialized processors, e.g. RIPAC).

Much of the progress in the field is owed to the rapidly increasing capabilities of computers. At the end of the DARPA program in 1976, the best computer available to researchers was the PDP-10 with 4 MB ram.[23] It could take up to 100 minutes to decode just 30 seconds of speech.[27]

Two practical products were:

- 1984 – was released the Apricot Portable with up to 4096 words support, of which only 64 could be held in RAM at a time.[28]

- 1987 – a recognizer from Kurzweil Applied Intelligence

- 1990 – Dragon Dictate, a consumer product released in 1990[29][30] AT&T deployed the Voice Recognition Call Processing service in 1992 to route telephone calls without the use of a human operator.[31] The technology was developed by Lawrence Rabiner and others at Bell Labs.

By this point, the vocabulary of the typical commercial speech recognition system was larger than the average human vocabulary.[23] Raj Reddy’s former student, Xuedong Huang, developed the Sphinx-II system at CMU. The Sphinx-II system was the first to do speaker-independent, large vocabulary, continuous speech recognition and it had the best performance in DARPA’s 1992 evaluation. Handling continuous speech with a large vocabulary was a major milestone in the history of speech recognition. Huang went on to found the speech recognition group at Microsoft in 1993. Raj Reddy’s student Kai-Fu Lee joined Apple where, in 1992, he helped develop a speech interface prototype for the Apple computer known as Casper.

Lernout & Hauspie, a Belgium-based speech recognition company, acquired several other companies, including Kurzweil Applied Intelligence in 1997 and Dragon Systems in 2000. The L&H speech technology was used in the Windows XP operating system. L&H was an industry leader until an accounting scandal brought an end to the company in 2001. The speech technology from L&H was bought by ScanSoft which became Nuance in 2005. Apple originally licensed software from Nuance to provide speech recognition capability to its digital assistant Siri.[32]

2000sEdit

In the 2000s DARPA sponsored two speech recognition programs: Effective Affordable Reusable Speech-to-Text (EARS) in 2002 and Global Autonomous Language Exploitation (GALE). Four teams participated in the EARS program: IBM, a team led by BBN with LIMSI and Univ. of Pittsburgh, Cambridge University, and a team composed of ICSI, SRI and University of Washington. EARS funded the collection of the Switchboard telephone speech corpus containing 260 hours of recorded conversations from over 500 speakers.[33] The GALE program focused on Arabic and Mandarin broadcast news speech. Google’s first effort at speech recognition came in 2007 after hiring some researchers from Nuance.[34] The first product was GOOG-411, a telephone based directory service. The recordings from GOOG-411 produced valuable data that helped Google improve their recognition systems. Google Voice Search is now supported in over 30 languages.

In the United States, the National Security Agency has made use of a type of speech recognition for keyword spotting since at least 2006.[35] This technology allows analysts to search through large volumes of recorded conversations and isolate mentions of keywords. Recordings can be indexed and analysts can run queries over the database to find conversations of interest. Some government research programs focused on intelligence applications of speech recognition, e.g. DARPA’s EARS’s program and IARPA’s Babel program.

In the early 2000s, speech recognition was still dominated by traditional approaches such as Hidden Markov Models combined with feedforward artificial neural networks.[36]

Today, however, many aspects of speech recognition have been taken over by a deep learning method called Long short-term memory (LSTM), a recurrent neural network published by Sepp Hochreiter & Jürgen Schmidhuber in 1997.[37] LSTM RNNs avoid the vanishing gradient problem and can learn «Very Deep Learning» tasks[38] that require memories of events that happened thousands of discrete time steps ago, which is important for speech.

Around 2007, LSTM trained by Connectionist Temporal Classification (CTC)[39] started to outperform traditional speech recognition in certain applications.[40] In 2015, Google’s speech recognition reportedly experienced a dramatic performance jump of 49% through CTC-trained LSTM, which is now available through Google Voice to all smartphone users.[41] Transformers, a type of neural network based on solely on attention, have been widely adopted in computer vision[42][43] and language modeling,[44][45] sparking the interest of adapting such models to new domains, including speech recognition.[46][47][48] Some recent papers reported superior performance levels using transformer models for speech recognition, but these models usually require large scale training datasets to reach high performance levels.

The use of deep feedforward (non-recurrent) networks for acoustic modeling was introduced during the later part of 2009 by Geoffrey Hinton and his students at the University of Toronto and by Li Deng[49] and colleagues at Microsoft Research, initially in the collaborative work between Microsoft and the University of Toronto which was subsequently expanded to include IBM and Google (hence «The shared views of four research groups» subtitle in their 2012 review paper).[50][51][52] A Microsoft research executive called this innovation «the most dramatic change in accuracy since 1979».[53] In contrast to the steady incremental improvements of the past few decades, the application of deep learning decreased word error rate by 30%.[53] This innovation was quickly adopted across the field. Researchers have begun to use deep learning techniques for language modeling as well.

In the long history of speech recognition, both shallow form and deep form (e.g. recurrent nets) of artificial neural networks had been explored for many years during 1980s, 1990s and a few years into the 2000s.[54][55][56]

But these methods never won over the non-uniform internal-handcrafting Gaussian mixture model/Hidden Markov model (GMM-HMM) technology based on generative models of speech trained discriminatively.[57] A number of key difficulties had been methodologically analyzed in the 1990s, including gradient diminishing[58] and weak temporal correlation structure in the neural predictive models.[59][60] All these difficulties were in addition to the lack of big training data and big computing power in these early days. Most speech recognition researchers who understood such barriers hence subsequently moved away from neural nets to pursue generative modeling approaches until the recent resurgence of deep learning starting around 2009–2010 that had overcome all these difficulties. Hinton et al. and Deng et al. reviewed part of this recent history about how their collaboration with each other and then with colleagues across four groups (University of Toronto, Microsoft, Google, and IBM) ignited a renaissance of applications of deep feedforward neural networks to speech recognition.[51][52][61][62]

2010sEdit

By early 2010s speech recognition, also called voice recognition[63][64][65] was clearly differentiated from speaker recognition, and speaker independence was considered a major breakthrough. Until then, systems required a «training» period. A 1987 ad for a doll had carried the tagline «Finally, the doll that understands you.» – despite the fact that it was described as «which children could train to respond to their voice».[12]

In 2017, Microsoft researchers reached a historical human parity milestone of transcribing conversational telephony speech on the widely benchmarked Switchboard task. Multiple deep learning models were used to optimize speech recognition accuracy. The speech recognition word error rate was reported to be as low as 4 professional human transcribers working together on the same benchmark, which was funded by IBM Watson speech team on the same task.[66]

Models, methods, and algorithmsEdit

Both acoustic modeling and language modeling are important parts of modern statistically based speech recognition algorithms. Hidden Markov models (HMMs) are widely used in many systems. Language modeling is also used in many other natural language processing applications such as document classification or statistical machine translation.

Hidden Markov modelsEdit

Modern general-purpose speech recognition systems are based on hidden Markov models. These are statistical models that output a sequence of symbols or quantities. HMMs are used in speech recognition because a speech signal can be viewed as a piecewise stationary signal or a short-time stationary signal. In a short time scale (e.g., 10 milliseconds), speech can be approximated as a stationary process. Speech can be thought of as a Markov model for many stochastic purposes.

Another reason why HMMs are popular is that they can be trained automatically and are simple and computationally feasible to use. In speech recognition, the hidden Markov model would output a sequence of n-dimensional real-valued vectors (with n being a small integer, such as 10), outputting one of these every 10 milliseconds. The vectors would consist of cepstral coefficients, which are obtained by taking a Fourier transform of a short time window of speech and decorrelating the spectrum using a cosine transform, then taking the first (most significant) coefficients. The hidden Markov model will tend to have in each state a statistical distribution that is a mixture of diagonal covariance Gaussians, which will give a likelihood for each observed vector. Each word, or (for more general speech recognition systems), each phoneme, will have a different output distribution; a hidden Markov model for a sequence of words or phonemes is made by concatenating the individual trained hidden Markov models for the separate words and phonemes.

Described above are the core elements of the most common, HMM-based approach to speech recognition. Modern speech recognition systems use various combinations of a number of standard techniques in order to improve results over the basic approach described above. A typical large-vocabulary system would need context dependency for the phonemes (so phonemes with different left and right context have different realizations as HMM states); it would use cepstral normalization to normalize for a different speaker and recording conditions; for further speaker normalization, it might use vocal tract length normalization (VTLN) for male-female normalization and maximum likelihood linear regression (MLLR) for more general speaker adaptation. The features would have so-called delta and delta-delta coefficients to capture speech dynamics and in addition, might use heteroscedastic linear discriminant analysis (HLDA); or might skip the delta and delta-delta coefficients and use splicing and an LDA-based projection followed perhaps by heteroscedastic linear discriminant analysis or a global semi-tied co variance transform (also known as maximum likelihood linear transform, or MLLT). Many systems use so-called discriminative training techniques that dispense with a purely statistical approach to HMM parameter estimation and instead optimize some classification-related measure of the training data. Examples are maximum mutual information (MMI), minimum classification error (MCE), and minimum phone error (MPE).

Decoding of the speech (the term for what happens when the system is presented with a new utterance and must compute the most likely source sentence) would probably use the Viterbi algorithm to find the best path, and here there is a choice between dynamically creating a combination hidden Markov model, which includes both the acoustic and language model information and combining it statically beforehand (the finite state transducer, or FST, approach).

A possible improvement to decoding is to keep a set of good candidates instead of just keeping the best candidate, and to use a better scoring function (re scoring) to rate these good candidates so that we may pick the best one according to this refined score. The set of candidates can be kept either as a list (the N-best list approach) or as a subset of the models (a lattice). Re scoring is usually done by trying to minimize the Bayes risk[67] (or an approximation thereof): Instead of taking the source sentence with maximal probability, we try to take the sentence that minimizes the expectancy of a given loss function with regards to all possible transcriptions (i.e., we take the sentence that minimizes the average distance to other possible sentences weighted by their estimated probability). The loss function is usually the Levenshtein distance, though it can be different distances for specific tasks; the set of possible transcriptions is, of course, pruned to maintain tractability. Efficient algorithms have been devised to re score lattices represented as weighted finite state transducers with edit distances represented themselves as a finite state transducer verifying certain assumptions.[68]

Dynamic time warping (DTW)-based speech recognitionEdit

Dynamic time warping is an approach that was historically used for speech recognition but has now largely been displaced by the more successful HMM-based approach.

Dynamic time warping is an algorithm for measuring similarity between two sequences that may vary in time or speed. For instance, similarities in walking patterns would be detected, even if in one video the person was walking slowly and if in another he or she were walking more quickly, or even if there were accelerations and deceleration during the course of one observation. DTW has been applied to video, audio, and graphics – indeed, any data that can be turned into a linear representation can be analyzed with DTW.

A well-known application has been automatic speech recognition, to cope with different speaking speeds. In general, it is a method that allows a computer to find an optimal match between two given sequences (e.g., time series) with certain restrictions. That is, the sequences are «warped» non-linearly to match each other. This sequence alignment method is often used in the context of hidden Markov models.

Neural networksEdit

Neural networks emerged as an attractive acoustic modeling approach in ASR in the late 1980s. Since then, neural networks have been used in many aspects of speech recognition such as phoneme classification,[69] phoneme classification through multi-objective evolutionary algorithms,[70] isolated word recognition,[71] audiovisual speech recognition, audiovisual speaker recognition and speaker adaptation.

Neural networks make fewer explicit assumptions about feature statistical properties than HMMs and have several qualities making them attractive recognition models for speech recognition. When used to estimate the probabilities of a speech feature segment, neural networks allow discriminative training in a natural and efficient manner. However, in spite of their effectiveness in classifying short-time units such as individual phonemes and isolated words,[72] early neural networks were rarely successful for continuous recognition tasks because of their limited ability to model temporal dependencies.

One approach to this limitation was to use neural networks as a pre-processing, feature transformation or dimensionality reduction,[73] step prior to HMM based recognition. However, more recently, LSTM and related recurrent neural networks (RNNs),[37][41][74][75] Time Delay Neural Networks(TDNN’s),[76] and transformers.[46][47][48] have demonstrated improved performance in this area.

Deep feedforward and recurrent neural networksEdit

Deep Neural Networks and Denoising Autoencoders[77] are also under investigation. A deep feedforward neural network (DNN) is an artificial neural network with multiple hidden layers of units between the input and output layers.[51] Similar to shallow neural networks, DNNs can model complex non-linear relationships. DNN architectures generate compositional models, where extra layers enable composition of features from lower layers, giving a huge learning capacity and thus the potential of modeling complex patterns of speech data.[78]

A success of DNNs in large vocabulary speech recognition occurred in 2010 by industrial researchers, in collaboration with academic researchers, where large output layers of the DNN based on context dependent HMM states constructed by decision trees were adopted.[79][80][81] See comprehensive reviews of this development and of the state of the art as of October 2014 in the recent Springer book from Microsoft Research.[82] See also the related background of automatic speech recognition and the impact of various machine learning paradigms, notably including deep learning, in

recent overview articles.[83][84]

One fundamental principle of deep learning is to do away with hand-crafted feature engineering and to use raw features. This principle was first explored successfully in the architecture of deep autoencoder on the «raw» spectrogram or linear filter-bank features,[85] showing its superiority over the Mel-Cepstral features which contain a few stages of fixed transformation from spectrograms.

The true «raw» features of speech, waveforms, have more recently been shown to produce excellent larger-scale speech recognition results.[86]

End-to-end automatic speech recognitionEdit

Since 2014, there has been much research interest in «end-to-end» ASR. Traditional phonetic-based (i.e., all HMM-based model) approaches required separate components and training for the pronunciation, acoustic, and language model. End-to-end models jointly learn all the components of the speech recognizer. This is valuable since it simplifies the training process and deployment process. For example, a n-gram language model is required for all HMM-based systems, and a typical n-gram language model often takes several gigabytes in memory making them impractical to deploy on mobile devices.[87] Consequently, modern commercial ASR systems from Google and Apple (as of 2017) are deployed on the cloud and require a network connection as opposed to the device locally.

The first attempt at end-to-end ASR was with Connectionist Temporal Classification (CTC)-based systems introduced by Alex Graves of Google DeepMind and Navdeep Jaitly of the University of Toronto in 2014.[88] The model consisted of recurrent neural networks and a CTC layer. Jointly, the RNN-CTC model learns the pronunciation and acoustic model together, however it is incapable of learning the language due to conditional independence assumptions similar to a HMM. Consequently, CTC models can directly learn to map speech acoustics to English characters, but the models make many common spelling mistakes and must rely on a separate language model to clean up the transcripts. Later, Baidu expanded on the work with extremely large datasets and demonstrated some commercial success in Chinese Mandarin and English.[89] In 2016, University of Oxford presented LipNet,[90] the first end-to-end sentence-level lipreading model, using spatiotemporal convolutions coupled with an RNN-CTC architecture, surpassing human-level performance in a restricted grammar dataset.[91] A large-scale CNN-RNN-CTC architecture was presented in 2018 by Google DeepMind achieving 6 times better performance than human experts.[92]