Web severs are everywhere.

Heck you are interacting with one right now!

No matter what type of software engineer you are, at some point in your career you will have to interact with web servers. May be you are building an API server for a backend service. Or may be you are just configuring a web server for your website.

In this article, I will cover how to create the most basic http web server in Python.

But because I want to make sure you understand what we are building, I am going to give an overview first about what web servers are and how they work.

If you already know how web servers work, then you can skip directly to this section.

- What is an HTTP server?

- The TCP socket address

- Create a simple HTTP file

- Create an HTTP web server

What is an HTTP Server?

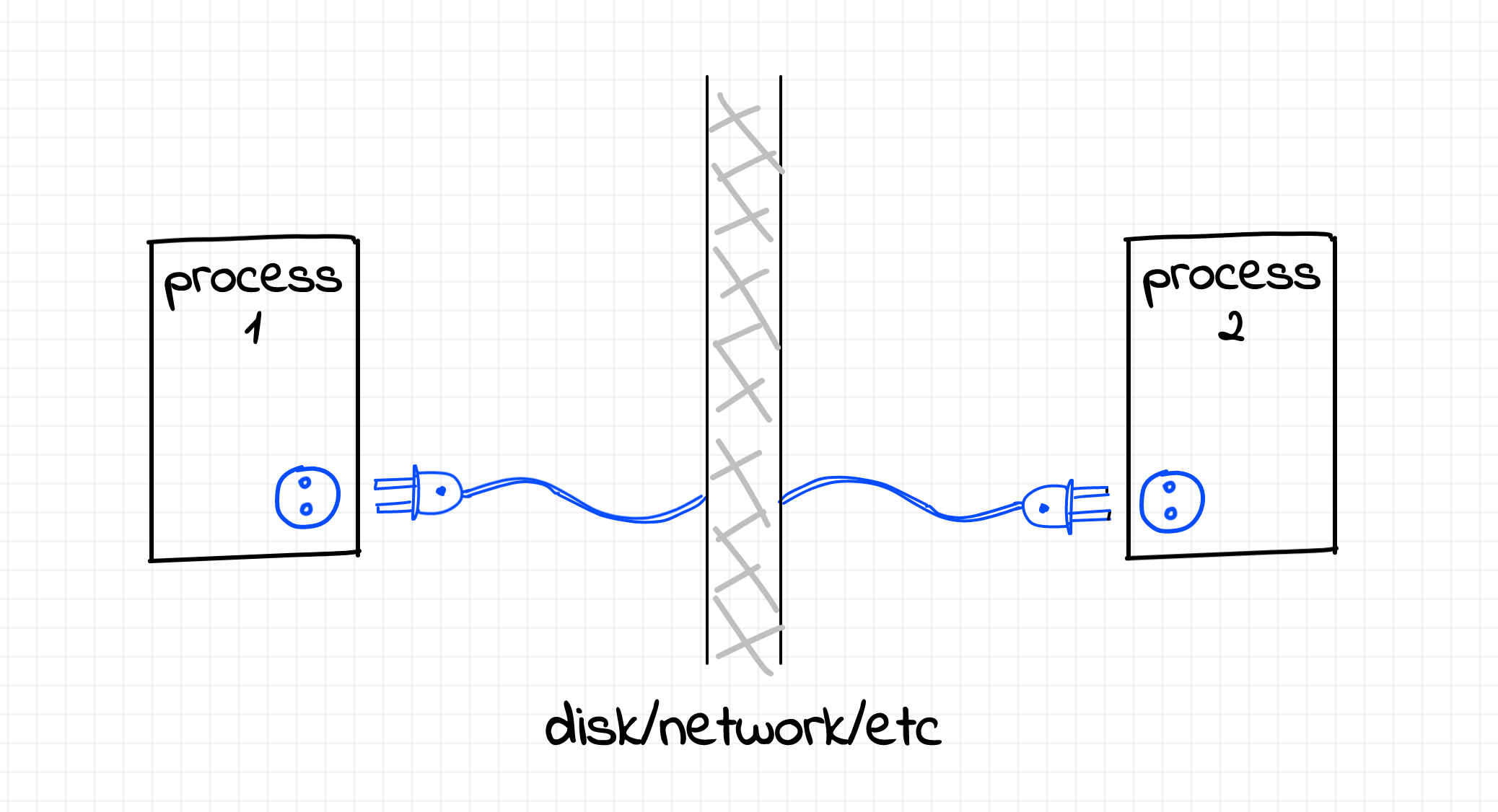

An HTTP web server is nothing but a process that is running on your machine and does exactly two things:

1- Listens for incoming http requests on a specific TCP socket address (IP address and a port number which I will talk about later)

2- Handles this request and sends a response back to the user.

Let me make my point less abstract.

Imagine you pull up your Chrome browser and type www.yahoo.com in the address bar.

Of course you are going to get the Yahoo home page rendered on your browser window.

But what really just happened under the hood?

Actually a lot of things have happened and I might dedicate a whole article to explain the magic behind how this happened.

But for the sake of simplicity, I will abstract away some of the details and talk about this at a very high level.

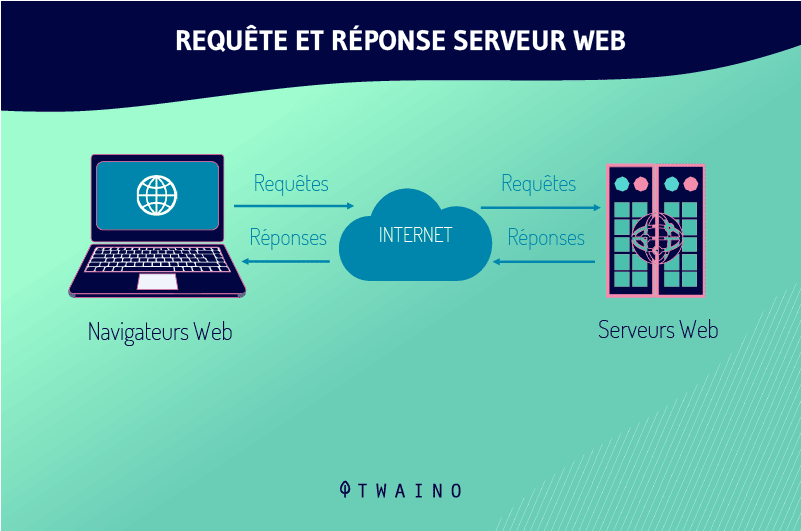

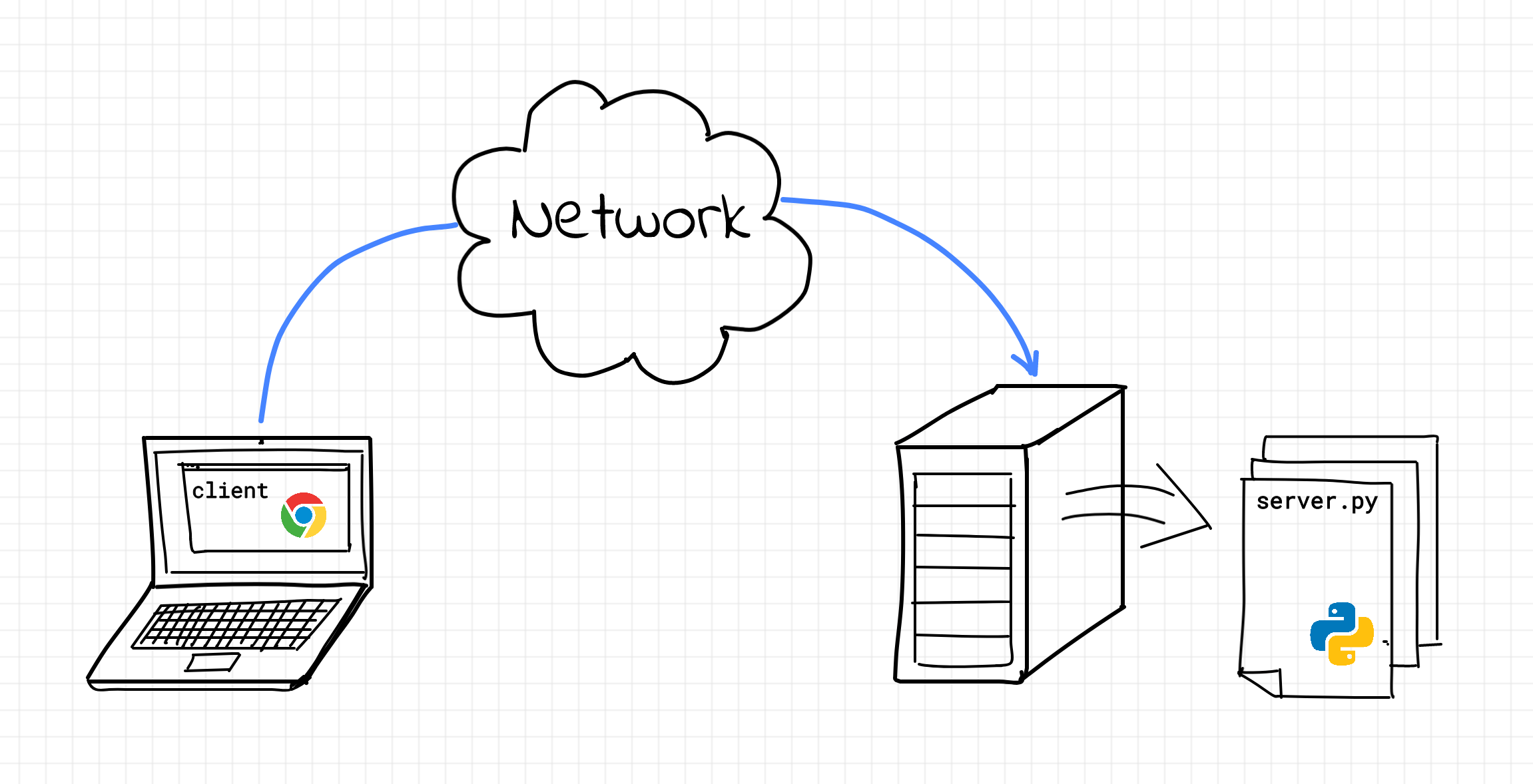

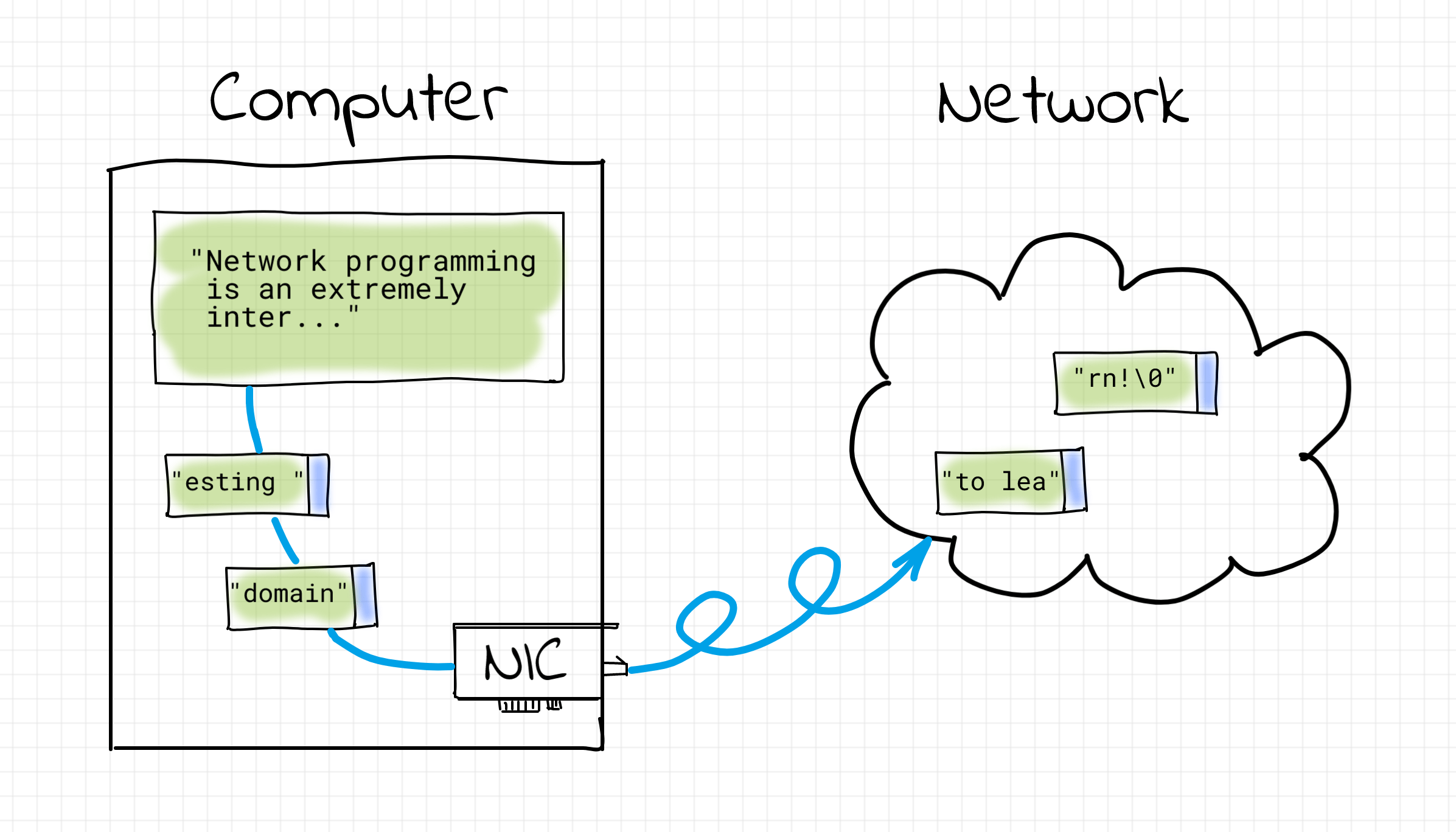

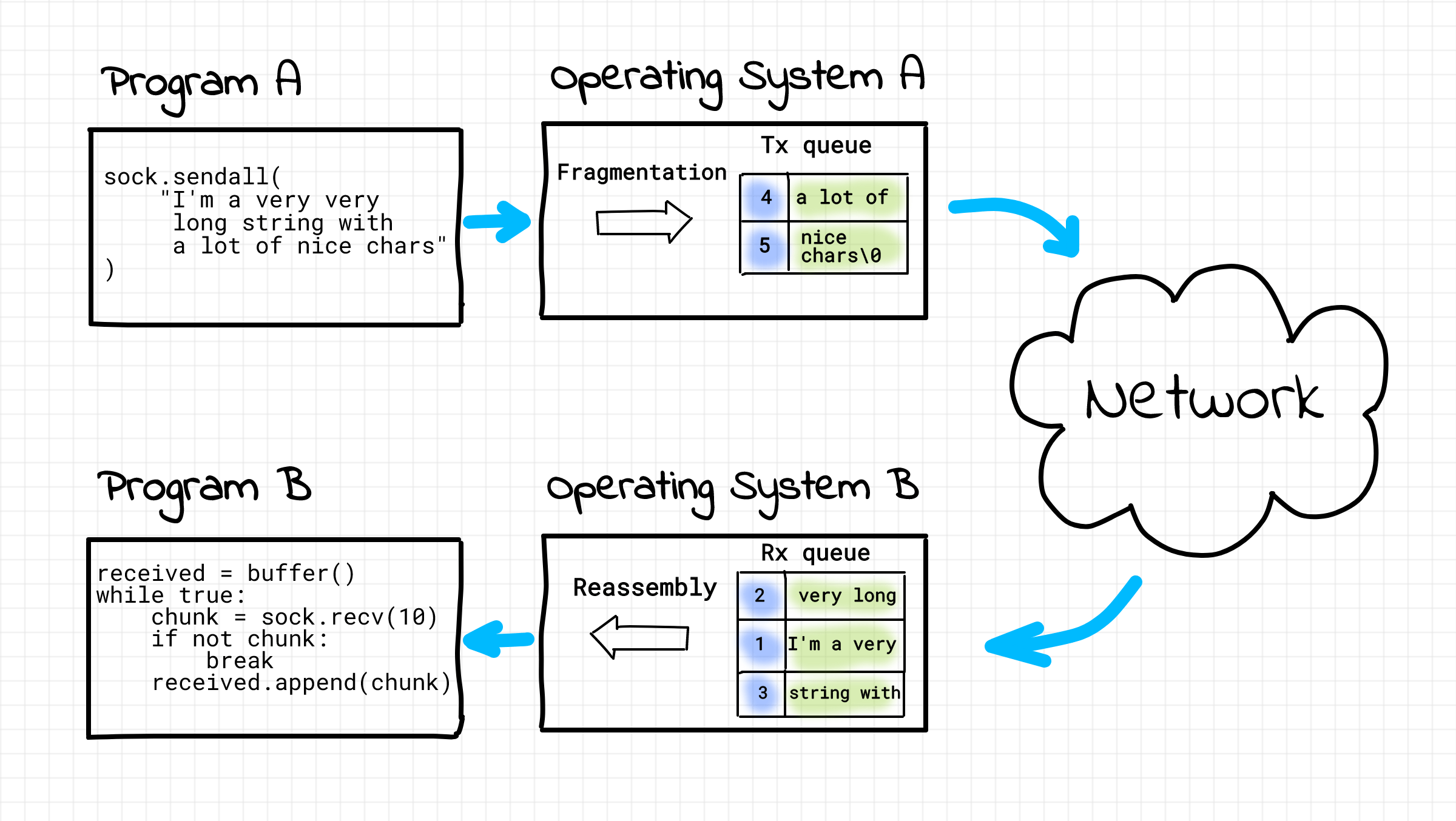

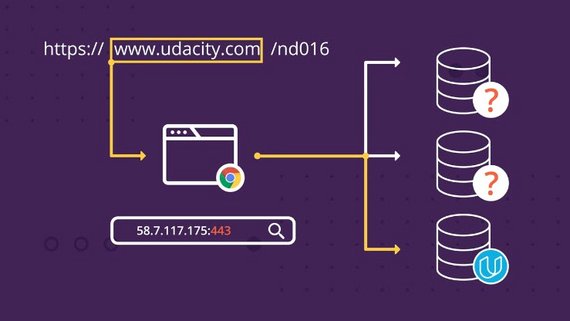

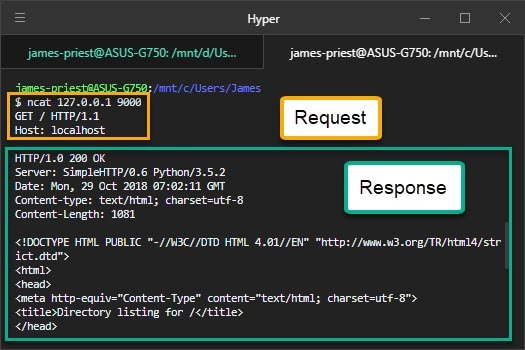

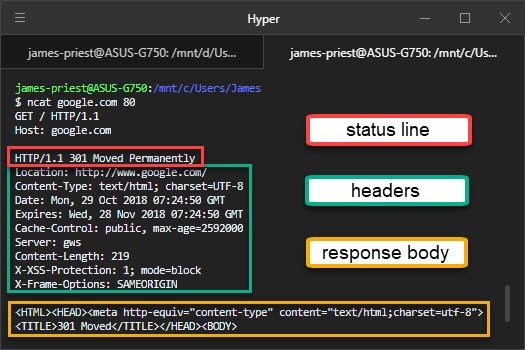

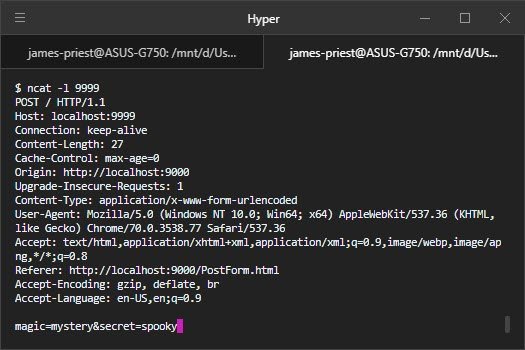

At a high level, when you type www.yahoo.com on your browser, your browser will create a network message called an HTTP request.

This Request will travel all the way to a Yahoo computer that has a web server running on it. This web server will intercept your request, and handle it by responding back with the html of the Yahoo home page.

Finally your browser renders this html on the screen and that’s what you see on your screen.

Every interaction with the Yahoo home page after that (for example, when you click on a link) initiates a new request and response exactly like the first one.

To reiterate, the machine that receives the http request has a software process called a web server running on it. This web server is responsible for intercepting these requests and handling them appropriately.

Alright, now that you know what a web server is and what its function is exactly, you might be wondering how does the request reach that yahoo machine in the first place?

Good question!

In fact this is one of my favorite questions that I ask potential candidates in a coding interview.

Let me explain how, but again….at a high level.

The TCP Socket Address

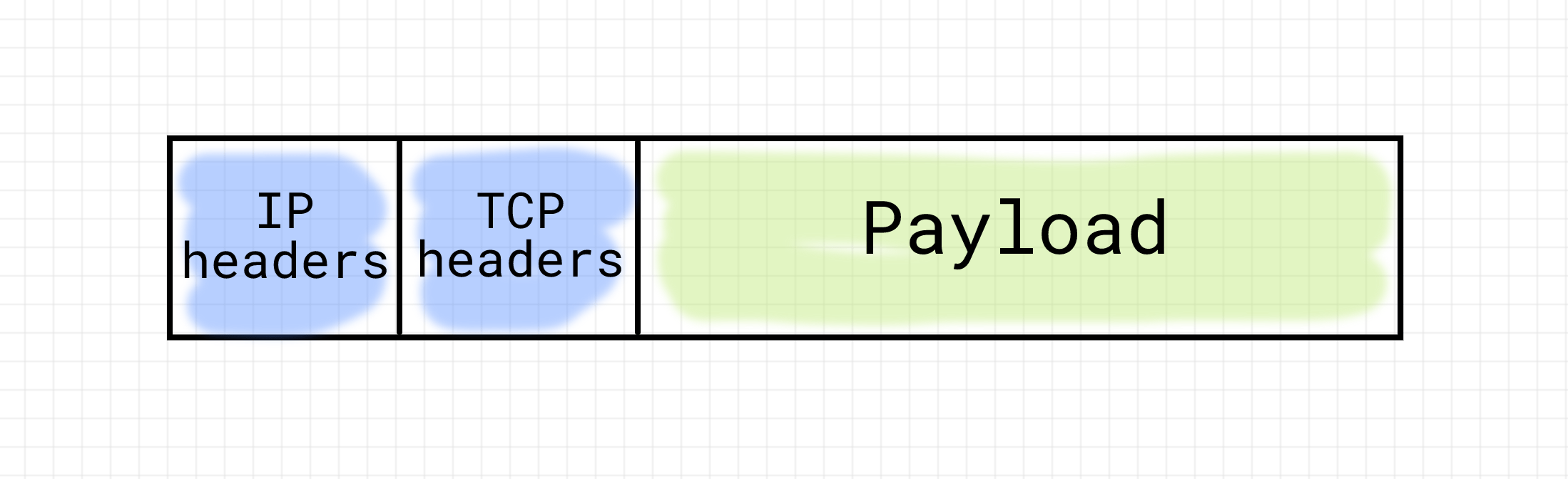

Any http message (whether it is a request or response) needs to know how to reach its destination.

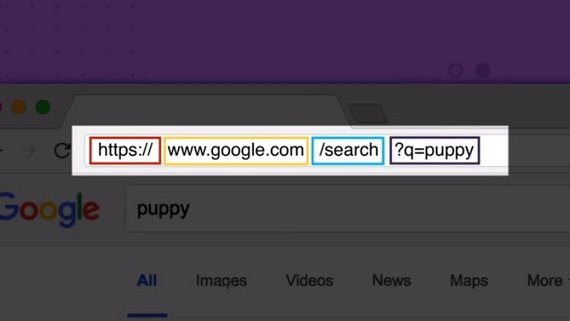

In order to reach its destination, each http message carries an address called the destination TCP address.

And each TCP address is composed of an IP address and a port number.

I know all these acronyms (TCP, IP, etc..) might be overwhelming if your networking concepts are not strong.

I will try to keep it simple but if you are interested in improving your knowledge of networking concepts, I highly recommend this book by Ross and Kurose.

So where is that address when all you did was type www.yahoo.com on your browser?

Well, this domain name is converted into an IP address through a large distributed database called the DNS.

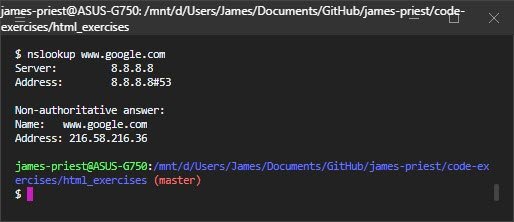

Do you want to check out what this IP address is?

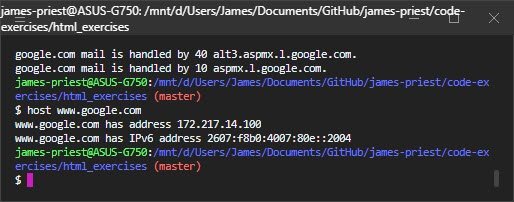

Easy! Head to your terminal and do the following:

$ host yahoo.com

yahoo.com has address 98.138.219.231

yahoo.com has address 98.137.246.8

yahoo.com has address 98.138.219.232

yahoo.com has address 72.30.35.9

yahoo.com has address 98.137.246.7

yahoo.com has address 72.30.35.10

yahoo.com has IPv6 address 2001:4998:44:41d::3

yahoo.com has IPv6 address 2001:4998:c:1023::5

yahoo.com has IPv6 address 2001:4998:c:1023::4

yahoo.com has IPv6 address 2001:4998:58:1836::10

yahoo.com has IPv6 address 2001:4998:58:1836::11

yahoo.com has IPv6 address 2001:4998:44:41d::4

yahoo.com mail is handled by 1 mta5.am0.yahoodns.net.

yahoo.com mail is handled by 1 mta6.am0.yahoodns.net.

yahoo.com mail is handled by 1 mta7.am0.yahoodns.net.As you can see, the DNS will translate yahoo.com to any of the addresses above.

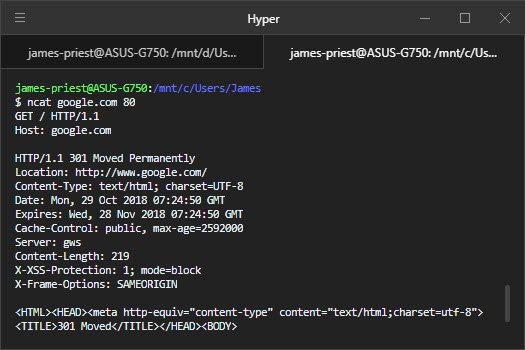

The IP address alone will allow the HTTP message to arrive at the right machine, but you still need the port number in order for the HTTP request to arrive exactly at the web server.

In other words, the web server is a regular network application that is listening on a specific port.

And the http request MUST be addressed to that port.

So where is the port number when you type www.yahoo.com?

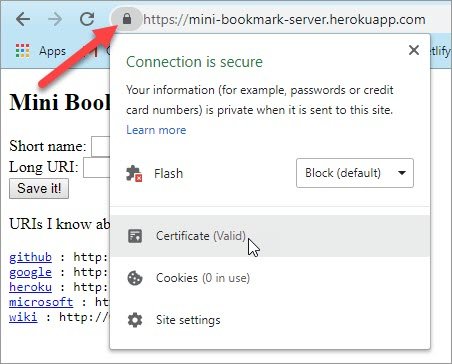

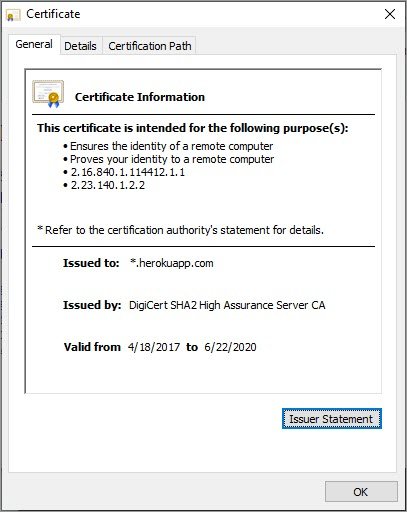

By default, the port number is 80 for http and 443 for https, so even though you haven’t explicitly specified the port number, it is still there.

And if the web server is listening on a non-default port number (neither 80 nor 443), you must explicitly specify the port number like this:

By now you should have all the necessary information to create an http web server in Python.

So without further ado, let’s get started.

Create a simple HTML file

Here is what we want to do.

We want to create a simple http server that serves a static html web page.

Let’s create our html page.

<html>

<head>

<title>Python is awesome!</title>

</head>

<body>

<h1>Afternerd</h1>

<p>Congratulations! The HTTP Server is working!</p>

</body>

</html>Now go ahead and save this file as index.html

With the web page that we want to serve out of the way, the next step is to create a web server that will serve this html page.

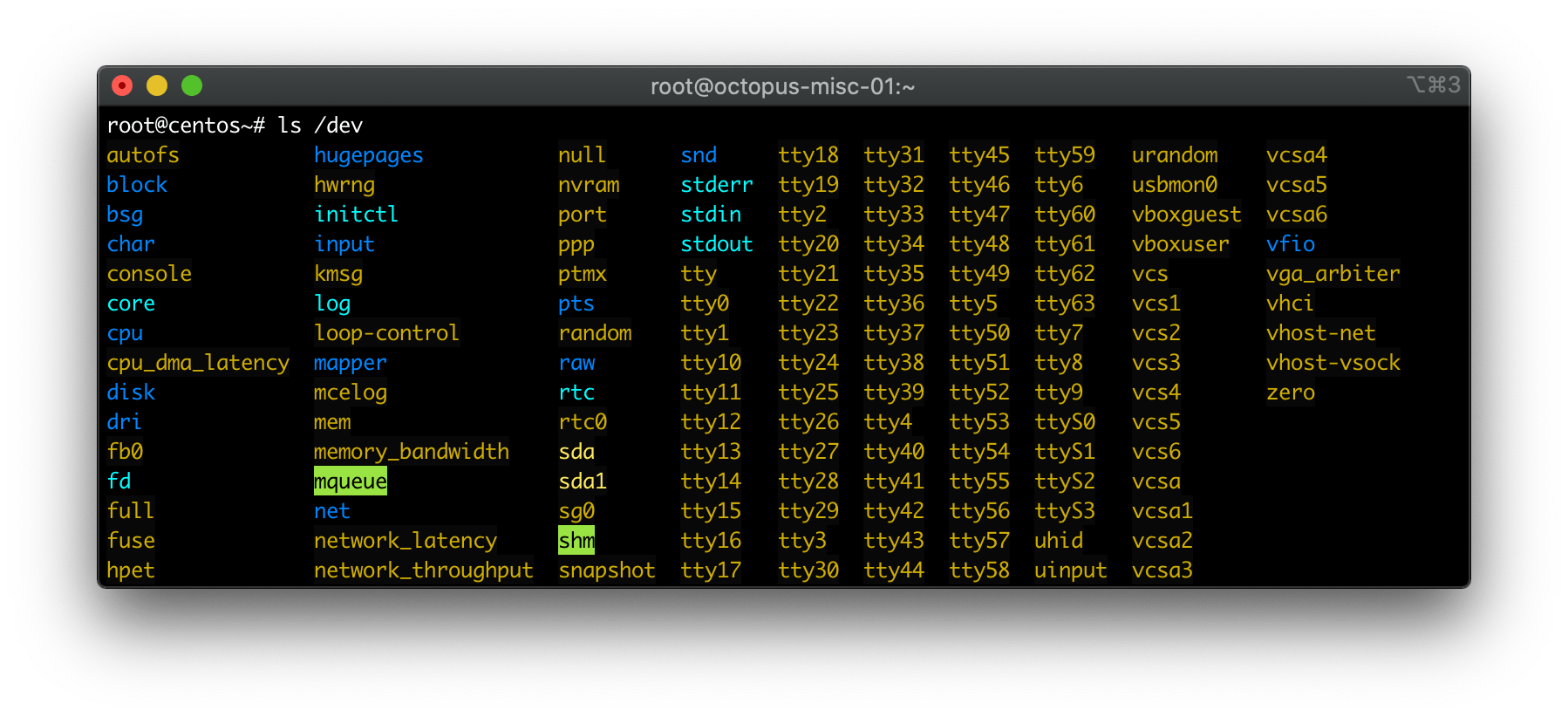

In order to create a web server in Python 3, you will need to import two modules: http.server and socketserver

Notice that in Python 2, there was a module named SimpleHTTPServer. This module has been merged into http.server in Python 3

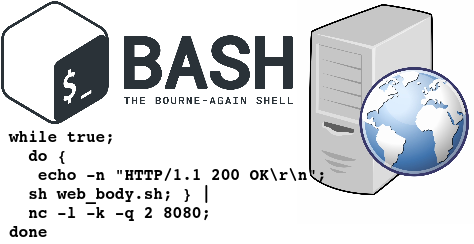

Let’s take a look at the code to create an http server

import http.server

import socketserver

PORT = 8080

Handler = http.server.SimpleHTTPRequestHandler

with socketserver.TCPServer(("", PORT), Handler) as httpd:

print("serving at port", PORT)

httpd.serve_forever()Just like that we have a functional http server.

Now let’s dissect this code line-by-line.

First, as I mentioned earlier, a web server is a process that listens to incoming requests on specific TCP address.

And as you know by now a TCP address is identified by an ip address and a port number.

Second, a web server also needs to be told how to handle incoming requests.

These incoming requests are handled by special handlers. You can think of a web server as a dispatcher, a request comes in, the http server inspects the request and dispatches it to a designated handler.

Of course these handlers can do anything you desire.

But what do you think the most basic handler is?

Well, that would be a handler that just serves a static file.

In other words, when I go to yahoo.com, the web server at the other end sends back a static html file.

This is in fact what we are exactly trying to do.

And that, my friend, is what the http.server.SimpleHTTPRequestHandler is: a simple HTTP request handler that serves files from the current directory and any of its subdirectories.

Now let’s talk about the socketserver.TCPServer class.

An instance of TCPServer describes a server that uses the TCP protocol to send and receive messages (http is an application layer protocol on top of TCP).

To instantiate a TCP Server, we need two things:

1- The TCP address (IP address and a port number)

2- The handler

socketserver.TCPServer(("", PORT), Handler)As you can see, the TCP address is passed as a tuple of (ip address, port number)

Passing an empty string as the ip address means that the server will be listening on any network interface (all available IP addresses).

And since PORT stores the value of 8080, then the server will be listening on incoming requests on that port.

For the handler, we are passing the simple handler that we talked about earlier.

Handler = http.server.SimpleHTTPRequestHandler

Well, how about serve_forever?

serve_forever is a method on the TCPServer instance that starts the server and begins listening and responding to incoming requests.

Cool, let’s save this file as server.py in the same directory as index.html because by default the SimpleHTTPRequestHandler will look for a file named index.html in the current directory.

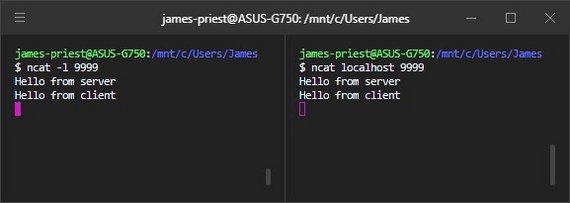

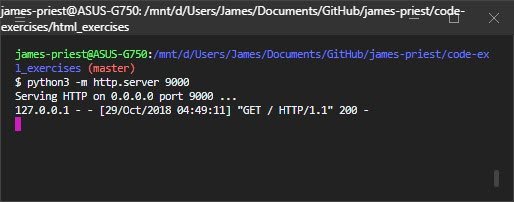

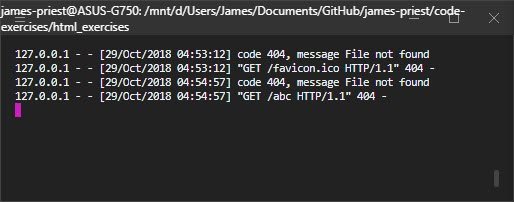

In that directory, start the web server:

$ python server.py

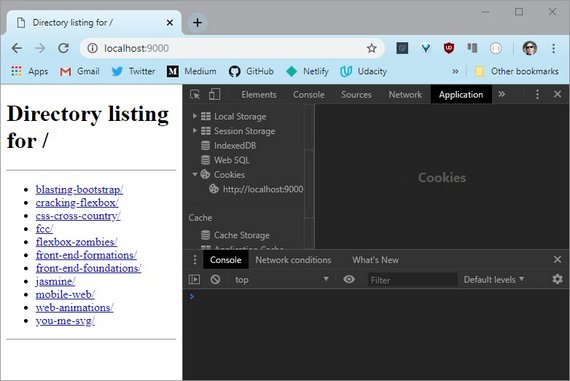

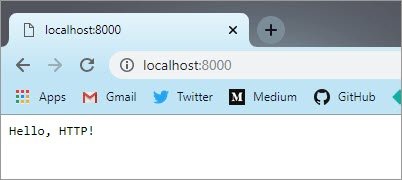

serving at port 8080By doing that, you now have an HTTP server that is listening on any interface at port 8080 waiting for incoming http requests.

It’s time now for the fun stuff!

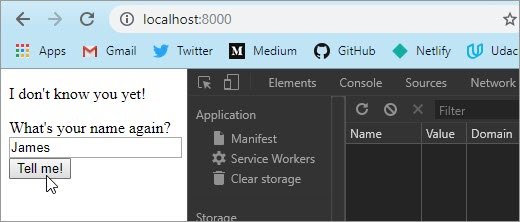

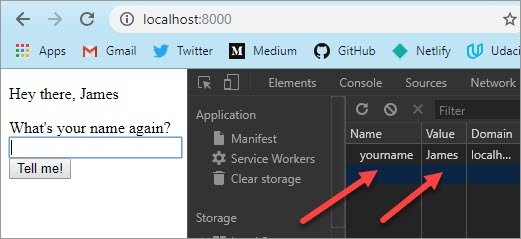

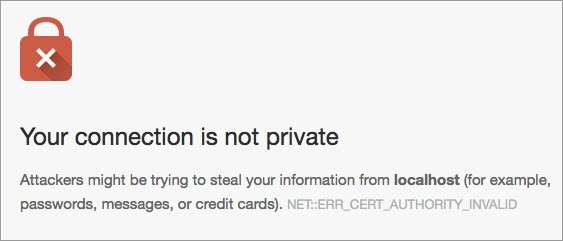

Open your browser and type localhost:8080 in the address bar.

Awesome! Looks like everything is working fine.

But hey what is localhost?

localhost is a host name that means this computer. It is used to access the network services that are running on the host via the loopback network interface.

And since the web server is listening on any interface, it is also listening on the loopback interface.

You want to know what IP address corresponds to localhost?

You got it.

$ host localhost

localhost has address 127.0.0.1

localhost has IPv6 address ::1

Host localhost not found: 3(NXDOMAIN)In fact you can totally replace localhost with 127.0.0.1 in your browser and you would still get the same result.

Try it out 🙂

One Final Word

You can actually start a web server with python without even having to write any scripts.

Just go to your terminal and do the following (but make sure you are on python 3)

python -m http.server 8080By default, this server will be listening on all interfaces and on port 8080.

If you want to listen to a specific interface, do the following:

python -m http.server 8080 --bind 127.0.0.1Also starting from Python 3.7, you can use the –directory flag to serve files from a directory that is not necessarily the current directory.

So the question now is, why would you ever need to write a script when you can just invoke the server easily from the terminal?

Well, remember that you are using the SimpleHTTPRequestHandler. If you want to create your custom handlers (which you will probably want to do) then you won’t be able to do that from the terminal.

Learning Python?

Check out the Courses section!

Featured Posts

- The Python Learning Path (From Beginner to Mastery)

- Learn Computer Science (From Zero to Hero)

- Coding Interview Preparation Guide

- The Programmer’s Guide to Stock Market Investing

- How to Start Your Programming Blog?

Are you Beginning your Programming Career?

I provide my best content for beginners in the newsletter.

- Python tips for beginners, intermediate, and advanced levels.

- CS Career tips and advice.

- Special discounts on my premium courses when they launch.

And so much more…

Subscribe now. It’s Free.

There are several types of web servers on the market and among them is Apache, one of the oldest servers

Although new, more modern and efficient alternatives have emerged in recent years, Apache continues to be a reference server.

Statistically, Apache covers 33.7% of the top 1000 sites, just behind Nginx with its 38.8%

It is widely used in Unix-like operating systems, and can be used on almost all platforms such as Windows, OS X, OS/2, etc

In this detailed guide, we will see:

- What Apache is precisely;

- Its importance;

- How it works;

- Its advantages and disadvantages;

- Difference between it and other Web servers on the market;

- Its installation and configuration ;

- etc.

Let’s start!

Chapter 1: What is the Apache server and how does it work?

1.1) What is Apache and what does it do?

The Apache server is one of the most powerful free Web servers on the market. It was created in 1995 by Rob McCool, then an employee of NCSA

According to the statistics of W3techs, its use is 31.5% of all websites for which the server is known

This is the core technology of theApache Software Foundationit is the core technology of the Apache platform, responsible for more than a dozen projects involving :

- Web transmission technologies

- Data processing;

- And the execution of distributed applications

Why Apache? The word, Apache, was taken from the name of the American Native Tribe ” Apache “, famous for its skills in warfare and strategy.

Initially, it was just a group of patches for the NCSA HTTPd web server, which the author then completely replaced by rewriting all the original code.

In short, Apache is an open source HTTP Web server developed and maintained by a community of users around theApache Software Foundation. It is used to respond to content requests from Web clients (browsers).

Currently and since 1996, it is the most used free Web server in the world because of its security and stability.

In addition, most web hosting companies work with Apache servers.

1.1.1. What is a web server?

A web server is a program that processes and returns information when a user makes a request

In other words, it hosts websites on one or more servers and displays the content (websites) of the server via client software (a browser).

Most servers are Linux-compatible. The most important Linux Web servers are Apache and NGINX.

1.1.2. What is the biggest challenge of a Web server?

The files that servers process are written in different languages

- Python

- Java

- PHP

- Etc.

When a user wants to load a page from a website, his browser sends a request to the server, and Apache returns a response with all the requested files:

- Text

- Images;

- Videos

- Etc.

In short, the server provides the pages requested by different users and guarantees a correct and secure communication. The server and the client communicate via the HTTP protocol

1.2) How does an Apache server work?

The main functionality of this web service is to provide users with all the files needed to view the web. The users’ requests are usually made via a browser:

- Chrome

- Firefox

- Safari;

- Etc.

Apache ensures that the communication between the web server and the web client (user requesting the information) is smooth and consistent.

Thus, when a user makes an HTTP request via the browser to enter a specific website or URL, Apache returns the requested information via the HTTP protocol.

For example, when a user writes twaino.com in his browser, this request will reach our Apache server, which will provide the texts, images, styles… via the HTTP protocol.

In addition, Apache allows you to apply a high level of customization through its modular system.

In fact, the server has a structure module-based that allows you to enable and disable various features like

- security modules like mod_security

- caching modules like Varnish;

- or header customization modules like mod_headers.

These Apache modules should be used with caution as they can affect the security and functionality of the web server. (We’ll see more details on Apache modules below).

Apache also allows you to customize your hosting’s PHP settings through the .htaccess file.

1.3. Some features of Apache

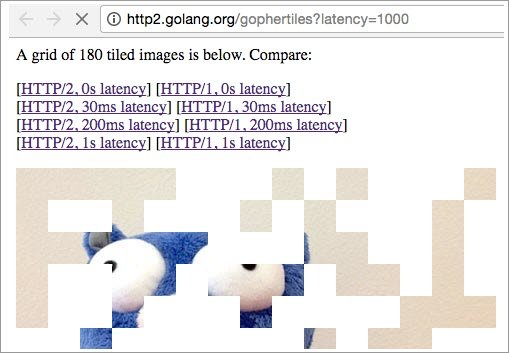

It is a web server with support for HTTP/1.1 and later HTTP/2.0 according to RFC 7540

It was one of the first servers to support VirtualHost (also known as virtual site) for domains and IPs following the normal RFC 2616 standard

This allows multiple domains to be hosted on the same server with the same IP, something that today seems basic but in the 90s was a revolution.

Among the main features of Apache, we find the following:

- It is free and open source;

- Easy to install and configure;

- Highly extensible and adaptable thanks to modules;

- Built-in features for user authentication and validation;

- Support for languages such as Perl, PHP and Python.

Chapter 2: What are the advantages and disadvantages of Apache?

2.1. Advantages of Apache

Some of the advantages that we can find in an Apache server are the following:

- Support: It is one of the most used servers for more than two decades, so behind it there is a very large community accompanied by extensive documentation.

- Cross-platform: It runs on both Microsoft Windows, Unix/Linux and Mac, making it an excellent service for both a server and a development environment on any workstation.

- Features: It contains a large number of official and third-party modules that allow you to extend the functionality of the server.

- Simplicity: It is one of the simplest servers to install and configure, especially in Linux distributions where it is part of the repositories of each distribution. In the case of Windows, it can be found packaged with the MySQL server and PHP in a single installer which makes installation much easier.

- Security: Apache has built-in features to secure the software with Authorization and Authentication modules and Access Control functions, as well as support for SSL/TLS certificate encryption.

- It has a large community of developers around the world, who help improve the software, as the original source code is freely available for viewing;

- Module-based structure;

- It is open source and free;

- High level of security through constant updates;

- Customization via independent .htaccess in each hosting;

- Compatible with the main CMS, online stores and e-learning platforms;

- It is one of the oldest web servers on the market, therefore super reliable;

- Allows you to enable and disable features;

- Easy to use for beginners.

2.2 Disadvantages of Apache

Here are the two disadvantages of Apache:

- Performance can be affected on high traffic pages, especially after 10,000 connections;

- If the modules are not used properly, security holes can be generated.

The only notable drawback is the poor performance compared to other alternatives on the market that are faster and able to handle much more traffic with the same resources.

Chapter 3: Differences Between Apache and Other Major Web Servers

3.1. Apache versus Nginx

Although NGINX is relatively young (born in 2004), it has become popular very quickly because of the advantages it offers in handling high traffic of more than 10,000 connections at a time.

Source: Apache Vs NGINX – Which Is The Best Web Server for You?

It can be defined as an application for Web servers. It was mainly designed to solve the problem that some servers like Apache had: Owners of sites with a lot of traffic were having performance difficulties.

NGINX allows all requests to a Web site to be made in a single process and provides better scalability.

For this reason, it is used by international brands such as Netflix, Airbnb, Pinterest or Wikipedia

In short, for high-traffic websites, NGINX is what you need and for sites with fewer visits, Apache is ideal.

To provide a high-performance web service in terms of connection, some servers have an Apache and Nginx configuration running in parallel.

3.1.1. The differences between Nginx and Apache

The American server Apache and the Russian Ngnix have been fighting and gaining ground in the world of web hosting. They are two of the most used resources for managing thousands of websites on local servers.

And although both servers serve the same purpose of keeping the world connected, it is worth reviewing what their most important differences are and here you will learn more about them.

3.1.1.1 They have a different structure and scope

The Apache web server has a simple software structure, is open source and processes a user request via HTTP or HTTPS, one at a time.

On the other hand, Ngnix is more robust, open source and suitable for all types of websites and web traffic, as it can handle a large number of parallel connections from the work process.

3.1.1.2. Apache utility for shared hosting

One feature of Apache, unlike Nginx, is that the customer can choose the configuration of his web site on the server without affecting the others, or changing the overall server layout in shared hosting.

The above function is possible from .htaccess files, with which each directory of the web page can be configured independently.

Although this is a great advantage, this flexibility in configuration can affect the performance of the server when it receives a large number of requests.

3.1.1.3. Compatibility of the two servers

Apache is highly compatible with Linux, Windows and the most commonly used CMS for creating and managing websites such as WordPress and Joomla.

On the other hand, Nginx still does not achieve ideal synchronization with Windows, but it is also compatible with popular content managers and the Python programming language.

3.1.1.4. Apache and Nginx facilitate the web-user relationship

Web servers have come to us to facilitate the exchange of information

If we look at it from a more specific point of view, they allow pages to achieve their goals by maintaining a loading speed that allows the user to quickly get what he is looking for.

There is no doubt about the usefulness of Web servers, Apache and Nginx are valuable

3.1.1.5. Other differences

- CPU and memory usage is consistent on Nginx even under heavy loads compared to Apache.

- Apache uses conventional file-based methods to handle static content and dynamic content by integrating the language renderer, while Nginx lacks the ability to render dynamic content natively.

- Apache allows additional per-directory configuration by evaluating and interpreting directives in hidden files called .htaccess, while Nginx does not allow additional per-directory configuration by evaluating and interpreting directives.

- Apache interprets .htaccess while Nginx does not interpret .htaccess

- Requests are served faster by Nginx than by Apache.

- The security level is high in Nginx compared to Apache, because Nginx does not allow distributing access to directory-level settings to an individual user.

- Apache was designed as a web server, while Nginx was designed as a web server as well as a proxy server.

- In Apache, modules are loaded and unloaded dynamically, but in Nginx, modules are not loaded dynamically.

- In Nginx, modules are selected and then compiled into the main software, while in Apache, this is done dynamically.

Now that their differences stand out, it’s up to you to decide which one is right for the type of project you want to set up.

3.2. Apache versus Tomcat

Tomcat (Apache tomcat) is a sub-project of the Apache Foundation and is a project at the same level as the Apache server (i.e. httpd)

Tomcat is intended exclusively for Java applications.

In terms of similarity between the two, we have :

- They are developed by the Apache organization;

- They have the function of HTTP service;

- They are open source and free.

In terms of distinction, we have

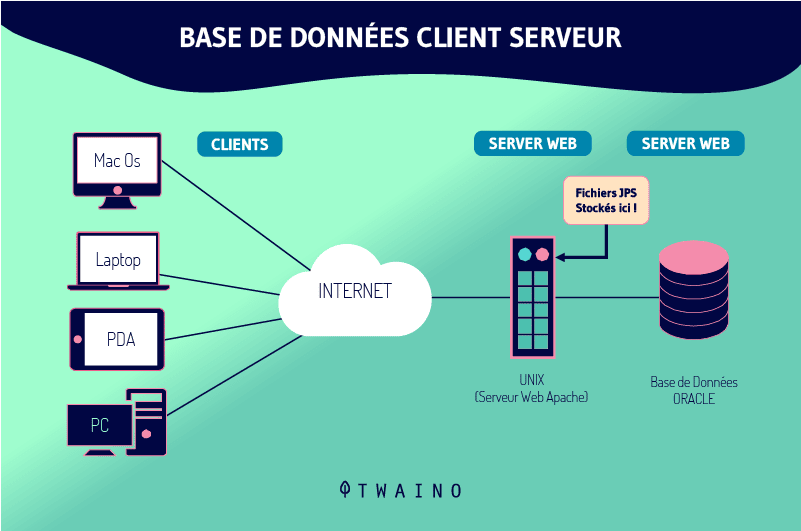

1. Tomcat is special in that it is also a Java web container and can handle JSPs, while Apache usually comes with PHP and cannot handle JSPs.

2. Apache only allows static web pages and requires Tomcat to handle dynamic web pages like Jsp. Tomcat can also be used for static pages but in some cases it is less efficient than Apache .

3. It cannot be configured as simply as Apache.

4. Apache and Tomcat integration:

- – If the client requests a static page, only the Apache server must answer the request;

- – If the client requests a dynamic page, the Tomcat server answers the request.

5. Apache and Tomcat are independent and can be integrated in the same server.

6. Apache is implemented in the C language and supports various features and modules to extend the basic functionality. Tomcat is written in Java and is better suited for Servlet and JSP.

7. Apache is a web server (static parsing, like HTML), tomcat is a Java application server (dynamic parsing, like JSP).

8. Tomcat is just a servlet container, which can be considered as an extension of apache, but can work independently of apache.

9. Apache is an ordinary server, which only supports HTML web pages, i.e. normal web pages. In contrast, Tomcat is a jsp/servlet container, and it also supports HTML, JSP, ASP, PHP, CGI, etc.

10. Apache focuses on the HTTP server and Tomcat on the servlet engine

11. Unlike Tomcat, Apache can run for a year without restarting, and the stability is very good.

3.3. Apache versus LiteSpeed

Many modern server solutions use an existing technology as a base to build on. This is exactly the case with LiteSpeed.

LiteSpeed Web Server (LSWS) was first released in 2003 and was marketed as a replacement for Apache

It has the ability to read Apache configuration files, execute the same commands and fully integrate with all control panels such as cPanel, Plesk and DirectAdmin.

The main improvements were in performance and scalability.

Although LiteSpeed was intended to replace Apache, it does not use the same code base. In fact, its event-driven architecture makes the solution much more similar to Nginx

The software uses predictive mechanisms to handle incoming traffic spikes and lighten the load on the server.

Now it’s time to compare LiteSpeed vs Apache and find out which one is better:

- Popularity – LiteSpeed vs Apache – Winner: Apache

There could be only one winner for this factor. After all, Apache was already conquering the market. When LiteSpeed arrived, its competitor was already powering over 50% of all websites.

In fact, the percentage varies depending on the third-party source you are looking at.

According to Netcraft’s April 2020 report, Apache has already lost the top spot to Nginx, now holding only 25% of the market share

On the other hand, web survey giant W3Techs tells a different story. Their recent report on usage statistics shows that Apache is still king, ruling 39% of the Internet. Nginx is second with 31.9% and LiteSpeed is in 5th place, responsible for 6.4% of online sites.

Therefore, it is clear that the winner here is Apache.

- Performance (static content) – LiteSpeed vs Apache – Winner: LiteSpeed

Due to its asynchronous architecture, LiteSpeed is much more flexible with higher traffic and concurrent connections

It uses an intelligent caching methodology in which the system caches compressed files instead of creating a new request every time the user tries to view them.

In this way, LSWS is able to increase PHP server performance by 50%, eventually making it up to six times faster than Apache for static content.

There is another advantage: LiteSpeed works wonders when it comes to compressing pages. This way, each new request is not only served faster, but also uses less RAM.

Therefore, LiteSpeed lives up to its name.

- Performance (dynamic content) – LiteSpeed vs Apache: Equality

Most websites today are dynamic. They use server-side scripts, which determine how the server handles requests from different users.

Content Management Systems (CMS) are tools often used to create dynamic content: WordPress, Magento, Joomla, Drupal… it is very likely that your site uses a CMS solution

However, there is no clear winner when you put LiteSpeed’s performance to the test against Apache.

You can easily pre-configure it with modules like FastCGI and PHP-FPM. This way it will be able to handle higher loads and speed up your pages in a multi-user PHP environment.

LSWS is just as good, and benchmarks have confirmed this notion. Apache offered slightly better raw speed, but LiteSpeed’s advanced page compression gave it a slight advantage for larger websites.

- Operating System Support – LiteSpeed vs Apache – Winner: Apache

The operating system is another crucial element in the configuration of your website. Linux servers are still the dominant solution in the field of web hosting. Therefore, Unix-like operating systems are a natural choice

In this regard, it wouldn’t make much difference which web server you use.

Apache is compatible with all versions and instances of the Linux operating system

- Fedora;

- Ubuntu;

- RedHat

- Etc

And since LiteSpeed uses Apache as a base… well, you can connect the dots.

For Windows users, things change.

While Apache is fully optimized for Windows instances, LiteSpeed is not operating system friendly. According to the developers, Windows systems are quite different from Unix-like solutions and require many adjustments to the existing configuration.

Here Apache wins the duel.

- Security – LiteSpeed vs Apache – Winner: LiteSpeed

the security aspect should never be neglected. Cybercrime reports show a staggering 600% increase in online attacks since 2017, causing an estimated $500 billion in losses to the global economy.

Apache comes with everything you need to secure a web server. You can apply security rules, block user access or remove unnecessary modules

Moreover, Apache tries to follow the latest server security standards, frequently releasing vulnerability patches and security optimizations.

Nevertheless, you need some knowledge of administration and reconfiguration. LiteSpeed provides high-level protection for the website from the start

It supports Apache’s mod_security rules, so you can expect the same enhanced server defenses.

However, there are some things LiteSpeed excels at, and one of them is certainly protection against brute force and other DDoS attacks

In a simulated test, LSWS easily handled the same number of server requests that previously caused Apache to crash.

Every little bit counts, and LiteSpeed will have to win this one.

- Support – LiteSpeed vs Apache – Winner: LiteSpeed

Comparing LiteSpeed’s web server to Apache’s in terms of support, both solutions maintain detailed documentation for anyone interested in self-help

Naturally, the guides are a bit more technically oriented, but even a novice user can find enough learning material.

Still, LiteSpeed seems to have a better organized community. In addition to dedicated forums, LSWS users have their own groups on Slack and Facebook, where optimization tips are often discussed or customer problems solved.

As for live technical support, Apache simply does not have any. The software is open source, so the community is your best option for live support

In comparison, LiteSpeed is a commercial product, so they duly offer dedicated support. You can easily submit a help ticket from your user area, and operators are generally helpful.

You can even opt for premium support with LiteSpeed and get services such as:

- Installing modules

- Configuration of DDoS packages;

- Or removal of blacklists.

Of course, these services can be quite expensive. For example, $999 per year for server management seems too expensive for a start-up or a small business. But if you don’t have an IT person and can afford it, the experts at LSWS may be just what you’re looking for.

LiteSpeed wins this round because of the wide variety of support options and dedicated assistance it offers.

- Prize – LiteSpeed vs Apache – Winner: Apache

Apache already has the upper hand here. The software has always been distributed for free, which is one of the reasons why it is such a popular solution for web server management.

As a proprietary platform, LiteSpeed is a paid product, with prices ranging from $10/month to $92/month at higher levels. There is also a free version, but it is quite limited: it can only support one domain and gives about 2 GB of RAM.

In the end, if price is your main selling point, free (Apache) will always beat paid (LiteSpeed).

3.4. Apache versus Lighttpd

About 10 years ago (while Nginx was still in its infancy), Lighttpd was a lightweight alternative server to Apache, as it better supported simultaneous connections of thousands of users.

Source: Wikipedia

Lighttpd is a software written in C by Jan Kneschke, distributed under BSD license and available for Unix and Linux.

One of the features of the web server is that it consumes very little RAM and CPU resources. This makes it particularly useful for VPS or Dedicated servers with low resources.

It supports communication and integration with FastCGI, SCGI and CGI. Therefore, it is able to respond to page requests made in any programming language.

One of the disadvantages of lighttpd is that it does not support .htaccess, as well as the fact that it lacks comprehensive documentation and a massively adopted community, unlike its rivals Apache and Nginx.

Here are 7 main differences between Apache and Lighttpd servers:

- Nginx and Lighttpd are probably the two most well-known asynchronous servers, and Apache is by far the most well-known process-based server.

- Most tests show that there is an inherent performance gain in using Lighttpd over Apache.

- Apache supports CGI and FastCGI. It is tested and its implementation has not changed for years. You can find documents that are over ten years old and still valid. Lighttpd supports CGI and FastCGI via the module.

- Lighttpd supports X-Sendfile since version 1.5. Apache 1 does not support X-Sendfile. For Apache 2 there is a third party module.

- URL rewriting in Apache can be handled in a configuration (at site level) or via a replacement in the .htaccess state. In Lighttpd, you are limited to rewriting only via the configuration level, which means that for platforms like WordPress, you’ll have to hard code following the format rewrite rule.

- Lighttpd is distributed under the terms of the revised BSD license. This licensing option greatly reduces collaborative friction between Lighttpd code and code from other projects distributed under other licenses. Apache uses the Apache 2.0 license of the same name, which is sometimes considered “permissive” in the sense that it is an open source license that is not a copyleft license.

- Installing PHP is not as easy in Lighttpd as in Apache because there is no module for it.

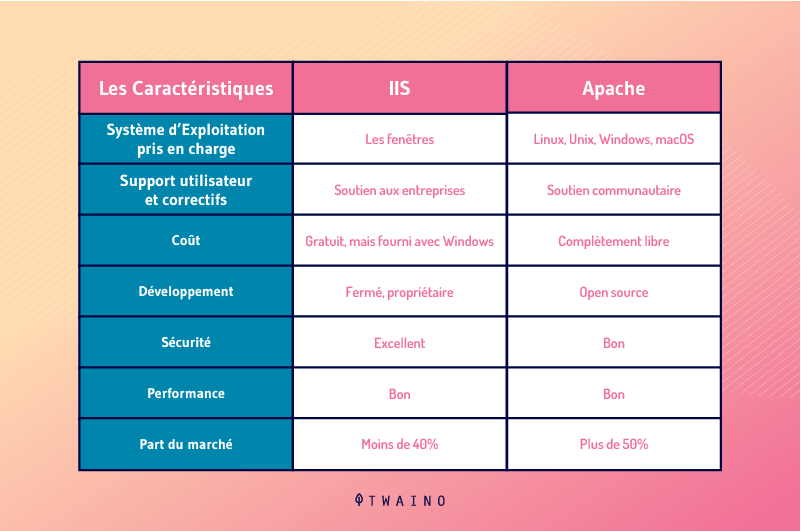

3.5. Apache versus IIS

IIS(Internet Information Services – formerly called Internet Information Server) is a web server created by Microsoft for its server operating systems

Although it is a proprietary system, it relies on several publicly available protocols, including:

- The Hypertext Transfer Protocol (HTTP)

- The Simple Mail Transfer Protocol (SMTP);

- And the file transfer protocol (FTP)

Its first version was introduced with Windows NT Server version 4 and has undergone several updates. Currently, the most recent version is IIS 10 (only available on Windows Server 2019)

After the launch of the .NET platform in 2002, IIS also acquired the role of managingASP.NET

ASP.NET, like its direct competitor JSP, is compiled before execution. This feature has advantages over interpreted options such as ASP and PHP.

Now, which one to choose?

Apache and IIS both have their advantages and disadvantages

- IIS comes with Windows but Apache does not have high profile enterprise support

- Apache has excellent security but does not offer the excellent .NET support of IIS

- Apache is free and IIS is not free;

- Language modules are easier to install on IIS because you just need to run the installer;

- IIS comes with the ASP library, ready to run scripts that use the language, while with Apache, the user has to install the appropriate packages;

- Etc

The final choice may well be defined by the solution that best meets your unavoidable needs

The following summary table can also help you:

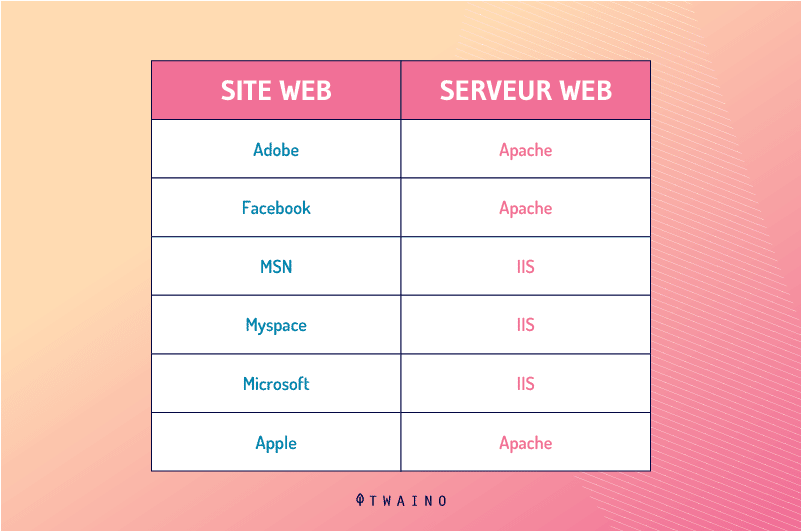

Here is a short list of sites and their web servers:

3.6. Apache versus Caddy

Caddy is an open source web server platform designed to be simple, easy to use and secure

Written in Go with no dependencies, Caddy is easy to download and works on almost any platform that Go compiles on.

By default, Caddy supports automatic HTTPS by provisioning and renewing certificates via Let’s Encrypt. Unlike Apache, Caddy provides these features out of the box, and it also comes with automatic redirection of HTTP traffic to HTTPS.

Compared to Apache, Caddy’s configuration files are much smaller. In addition, Caddy runs on TLS 1.3, the latest standard for transport security.

In terms of performance, Caddy has proven to be competitive with Apache (but behind Nginx) both in terms of requests processed per second and load stability.

Another possible disadvantage of Caddy is that it currently holds a small market share, which may limit resources for troubleshooting

Chapter 4: Apache Installation and Configuration

4.1 Installing and Configuring the Apache Server: Short Video Tutorials

To help you see everything clearly, we have selected a few short, self-explanatory tutorial videos for the installation and configuration of the Apache HTTP Server:

- Tutorial to Install and configure Apache Web Server on Windows 10

- Tutorial to install and configure Apache on Linux:

- Tutorial to install and configure the Apache web server in Ubuntu Linux (for beginners)

4.2. Starting Apache

Use the following commands:

- To start the Apache service

sudo service apache2 start

- To check if the Apache configuration is valid and to reload

sudo apache2ctl configtest

sudo service apache2 reload

- To list the available Apache HTTP Server modules

/etc/init.d/apache2 -l

4.3. Configuring Apache via .htaccess

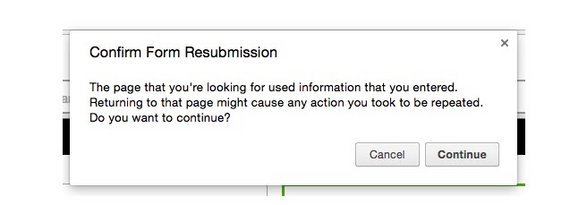

Use the “.htacess” file to configure some of the Apache HTTP behaviors. A major application of this file is to redirect a URL to other URLs.

The following .htacess file redirects http://twaino.com to http://www.twaino.com. It also redirects access to a certain web page to another web page via a 301 redirect

The 301 redirect will tell search engines that this page has been moved and is the recommended method for moving web pages.

Here is an explicit video that can help you with the configuration of Apache via .htacess

4.4. Using modules on Apache Http

Apache Http supports the use of modules. To enable modules, use a2enmod + command. To enable the rewrite module for example, use a2enmod rewrite.

Here is a video that explains how to enable/disable modules in Apache2: https: //youtu.be/YkZC38HfOQU

4.5. Performance – Enable gzip compression

To optimize the download time of your web pages, you can enable gzip compression. This requires the Apache module“mod_deflate” which can be installed with the following command:

a2enmod deflate

sudo /etc/init.d/apache2 restart

Compression can be enabled in the default configuration file for this module located in /etc/apache2/mods-available/deflate.conf or via the file “.htaccess“.

# compress all text & html:

AddOutputFilterByType DEFLATE text/plain

AddOutputFilterByType DEFLATE text/html

AddOutputFilterByType DEFLATE text/xml

AddOutputFilterByType DEFLATE text/css

AddOutputFilterByType DEFLATE application/xml

AddOutputFilterByType DEFLATE application/xhtml+xml

AddOutputFilterByType DEFLATE application/rss+xml

AddOutputFilterByType DEFLATE application/javascript

AddOutputFilterByType DEFLATE application/x-javascript

[[Other Apache modules]] == Support for php and wordpress :

sudo apt-get install libapache2-mod-fcgid

sudo apt-get install php5-cgi

Then activate the corresponding modules:

sudo a2enmod fastcgi

sudo a2enmod proxy

# required for wordpress blog

sudo a2enmod rewrite

Chapter 5: How to secure the Apache Web server?

Securing your web server is very important, it means:

- Prevent your confidential information from being displayed

- Protecting your data;

- And restricting access.

Here are 7 common things that can improve the security of your Apache web servers:

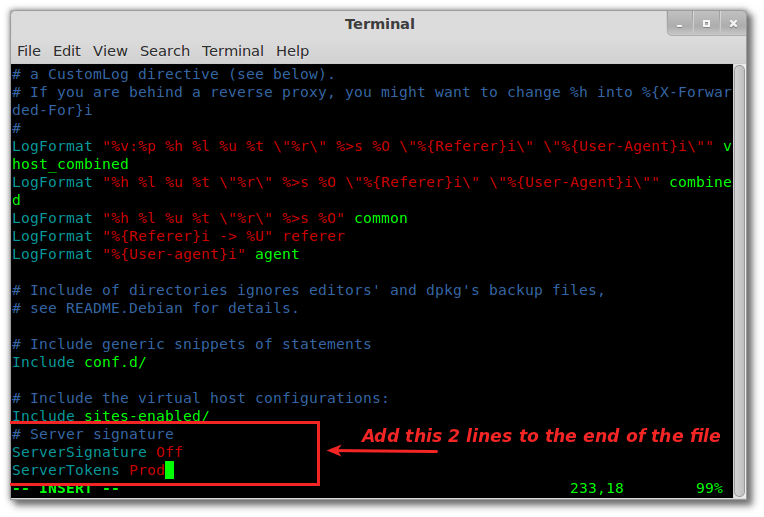

5.1. Hide Apache version and operating system information

Generally, Apache displays its version and operating system name in errors.

An attacker can use this information to launch an attack using publicly available vulnerabilities in the particular server or operating system version.

In order to prevent the Apache web server from displaying this information, you must modify the “ server signature ” option available in the apache configuration file. By default it is“ON“, you need to put it“OFF“.

You can also set“ServerTokens Prod” which tells the web server to return only Apache and to remove major and minor versions of the operating system.

Source : Unixmen

After changing the configuration file, you need to restart/reload your apache web server to make it effective.

5.2. Disable directory listing

If the root directory of your document does not have an index file, by default your apache web server will display the entire contents of the document’s root directory.

This feature can be disabled for a specific directory via the “options directive” option available in the Apache configuration file.

5.3. Disable unnecessary modules

It is recommended to disable all unnecessary modules that are not used. You can see the list of enabled modules available in your apache configuration file.

Many of the modules listed can be disabled like:

- mod_imap

- mod_include

- mod_info

- mod_userdir

- mod_autoindex ;

- etc

They are hardly used by production web servers.

Once you have removed the modules that are not useful, save the file.

Restart the Apache services with the following command:

/etc/init.d/httpd restart

5.4. Restrict access to files outside the Web root directory

If you want to make sure that files outside the web root directory are not accessible, you need to make sure that the directory is restricted with the“Allow” and“Deny” option in your web server configuration file.

Once you have restricted access outside the web root directory, you will not be able to access any files located in any other folder on your web server. You will therefore get a 404 return code.

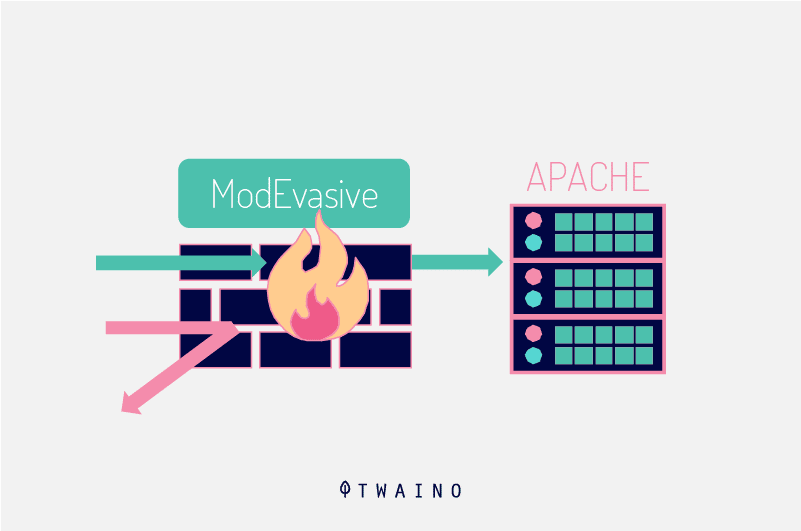

5.5. Use mod_evasive to refute DoS attack

If you want to protect your web server against Dos (i.e. Denial of Service), you should enable the mod_evasive module

This is a third-party module that detects the Dos attack and prevents it from doing as much damage as it would if it were to take its course

It could be downloaded here : Download the file here.

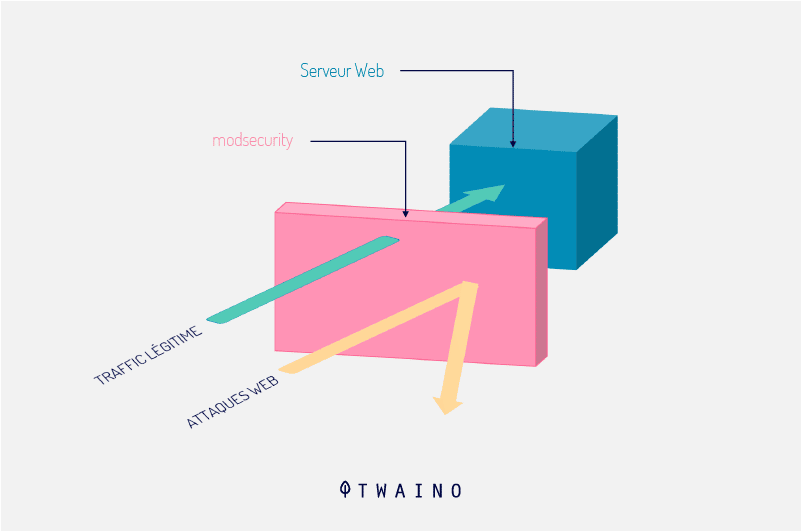

5.6. Use mod_security to improve apache security

This module works as a firewall for Apache and allows you to monitor the traffic in real time. It also prevents the web server from brute force attacks

The mod_security module can be installed with the default package manager of your distribution.

5.7. Limit the size of requests

Apache has no restrictions on the total size of the http request that could lead to a DoS attack. However, you can limit the request size of an Apache “LimitRequestBody” directive with the directory tag

The value can be set between 0 and 2 GB (i.e. 2147483647 bytes) depending on your needs.

Chapter 6: Modular architecture of the HTTP server

The server has a modular architecture consisting of :

- a Core that supports the common basic functions

- then a series of proprietary/third party modules that extend its functionality that can be enabled or disabled in an installation as needed.

Among the best known are auth_basic and mod_rewrite, which are the ones a programmer uses most. The list is actually much longer

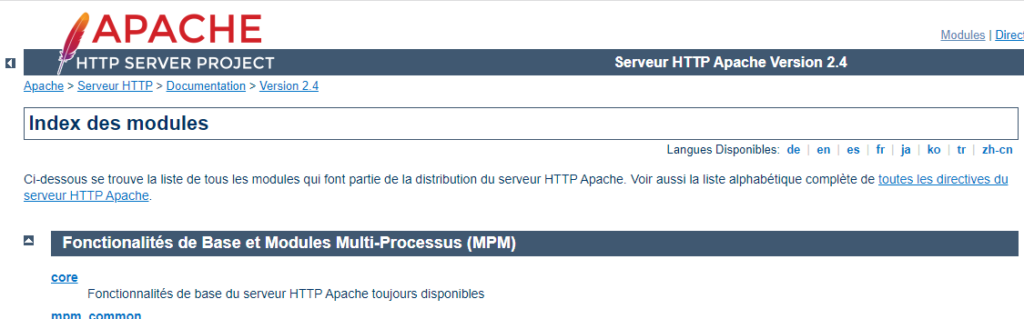

The complete list of modules can be seen in the module index available in the Apache documentation:

Among the most important modules, we can find the MPM ( Multi-Processing-Module ) modules which define the internal architecture

The way the work is distributed can vary depending on the MPM module used:

- Multi-processing prefork (mpm-prefork);

- Multiprocessing worker (mpm-worker);

- Multiprocessing event (mpm-event) ;

- ITK multiprocessing (mpm-itk).

These multi-processing modules (also called MPM) are the ones in charge of processing HTTP requests, managing the processes and the different execution threads of the service.

The choice of the module is a crucial decision since it will determine whether or not the server will function properly, depending on the use you wish to give the server.

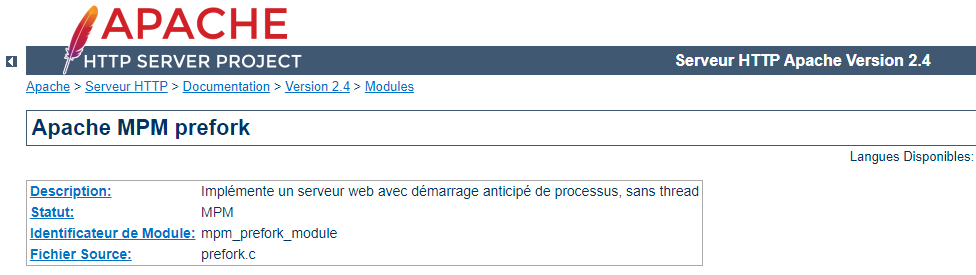

6.1. mpm_prefork

The default module used by the server for processing is mpm-prefork. This module opens different processes to organize the work

It is considered the safest because there are some configurations and modules that are not safe to use with thread processing

Therefore, it is safer to use the server with mpm-prefork which instead of opening threads opens independent processes.

Although security is gained, it is also the one that consumes the most resources since independent processes consume much more CPU and RAM memory than threads.

6.2. mpm_worker

This module has better performance than mpm-prefork. Its function is to open several processes as prefork, and each of them in turn opens different threads to respond to requests.

Basically, it has two drawbacks

- The first is that it has a poorer error handling than prefork and in case of a thread failure, it can lose requests;

- And the second is that not all available modules are safe to use in this mode.

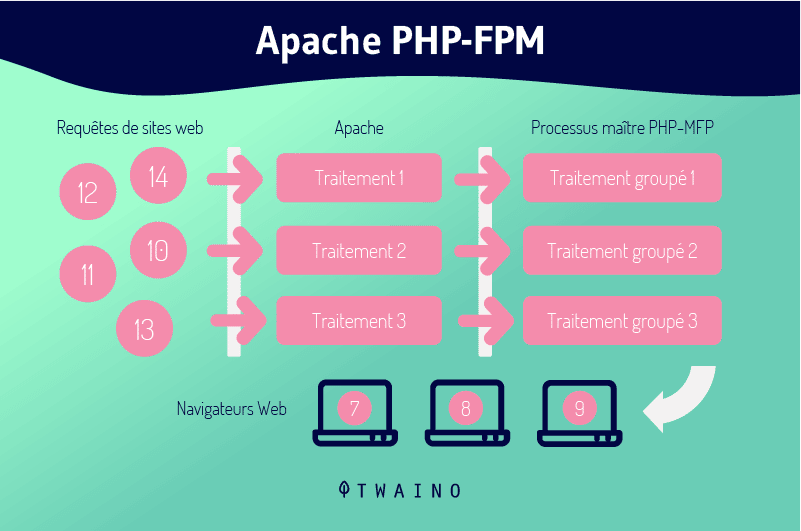

For example, the easiest way to do PHP configuration in Apache is via mod_php, but this module cannot be used with Worker. Therefore, it must be replaced by an example of PHP-FPM

The latter in turn adds an improvement in resource usage, both memory and CPU.

6.3. mpm_event

Event is quite similar to mpm-worker in that it is based on it. It focuses on improving persistent requests for which Worker is not so good.

Normally a connection will be closed if it doesn’t have more information. To re-transmit a new connection, it involves a round trip communication to establish some parameters.

Once the communication is established, it is much more efficient to keep the channel open. This is done throughKeep-Alive requests that facilitate keeping a connection open until the client or server decides to close it.

This is where Event outperforms Worker and for the rest they share the advantages as well as the disadvantages since Event is based on Worker only with the improvement of these requests.

6.4. mpm_itk

This is the most recent module and like Prefork (which works with child processes instead of threads), the main innovation of this module is that it allows you to assign each VirtualHost (each hosted domain) a user.

This way of separating sites with different users allows :

- each site to have its own security permissions ;

- and that the user processes cannot interact with each other, obtaining confidentiality and security of the data.

The same thing can be achieved with PHP-FPM where each site can work with its respective user

However, the configuration of each one is more cumbersome and involves more memory usage since there is a PHP-FPM process for each site.

Chapter 7: Other Questions About the Apache Server

7.1. What is Apache?

Apache2 HTTP Server is a web server that uses the http protocol. It is developed by the Apache Software Foundation (ASF). It is open source, cross-platform (works on different operating systems), free and downloadable. The project website is www.apache.org.

7.2. Why is Apache so popular?

Apache is open source, and as such, it is developed and maintained by a large group of global volunteers. One of the main reasons it is so popular is that the software can be downloaded and used by anyone for free.

In addition, the Apache web server is an easy to customize environment, it is fast, reliable and highly secure. This makes it a common choice for the best companies.

7.3. How do I know if my Apache server is working?

- Access your server using your favorite SSH client.

- Enter the following command: sudo service apache2 status.

- If Apache is running, you will see the following message Apache is running (pid 26874).

7.4. What does Apache include?

Apache has modules for :

- Security ;

- Caching;

- URL rewriting;

- Password authentication;

- And so on

You can also adjust your own server settings via a file called .htaccess, which is an Apache configuration file.

7.5. How is the Apache server installed?

To install apache as a service :

- In the Windows menu, search for: cmd;

- Run cmd with administrator rights;

- Go to the apache : cd c:Apache24bin.

- Install the service with the instruction : httpd.exe -k install.

- Finally, start apache with the instruction : httpd.exe -k start.

7.6. How do I know if I am using Apache?

There are several ways to know the software used by our server, one of them is to use tools such as GTMetrix, Pingdom…

The easiest way would be to analyze the website via Pingdom, from the File Requests section.

7.7. What communication port does the Apache web server use?

By default, the Apache HTTP server is configured to listen on port 80 for insecure web communications and on port 443 for secure web communications.

7.8. What are the versions of Apache?

There are currently three versions of Apache running: versions 2.0, 2.2 and 2.4. Previously, there was version 1.3 which is the best known and the one that meant the big expansion of the server.

7.9. How do I start, restart or stop the Apache server?

To start, stop or restart Apache as a web server, you just need to access the terminal of your server via ssh and execute one of the following commands:

- Start Apache: /etc/init.d/ apache2 start.

- Restart Apache: /etc/init.d/ apache2 restart.

- Stop Apache: /etc/init.d/apache2 stop . 5/5 – (3 votes)

7.10. how does Apache work?

As a web server, Apache is responsible foraccepting directory requests (HTTP) from Internet users and sending them the desired informationin the form of files and web pages

Most web software and code is designed to work with Apache’s functionality.

7.what is MySQL and PHP in Apache?

Apache is the web server that processes requests and serves web resources and content via HTTP

MySQL is the database that stores all your information in an easily searchable format.

PHP is the programming language that works with Apache to help create dynamic web content.

7.12. Can Nginx replace Apache?

Both solutions are capable of handling various workloads. Although Apache and Nginx share many qualities, they should not be considered entirely interchangeable.

7.13. Is Nginx the same as Apache?

The main difference between Apache and NGINX is their design architecture

Apache uses a process-oriented approach and creates a new thread for each request. NGINX, on the other hand, uses an event-driven architecture to handle multiple requests within a single thread.

In summary

As we have seen before, Apache is the Web server that thousands of hosting companies around the world work with.

It is ideal for small and medium-sized businesses that want to be present in the digital world. Very compatible with WordPress that allows you to work in a simple and orderly way.

I hope this guide has helped you weigh the pros and cons to make the right decision for your project!

Thanks for reading and see you soon!

In this article, we explain what web servers are, how web servers work, and why they are important.

| Prerequisites: |

You should already know how the Internet works, and understand the difference between a web page, a website, a web server, and a search engine. |

|---|---|

| Objective: |

You will learn what a web server is and gain a general understanding of how it works. |

Summary

The term web server can refer to hardware or software, or both of them working together.

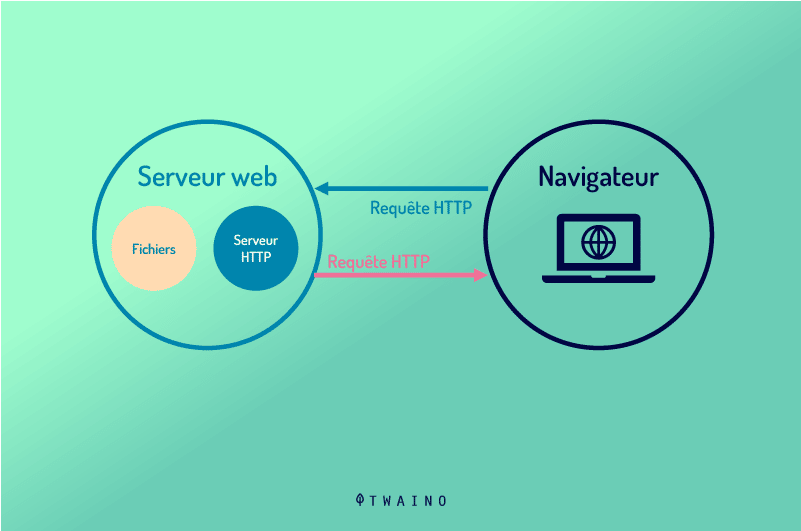

- On the hardware side, a web server is a computer that stores web server software and a website’s component files (for example, HTML documents, images, CSS stylesheets, and JavaScript files). A web server connects to the Internet and supports physical data interchange with other devices connected to the web.

- On the software side, a web server includes several parts that control how web users access hosted files. At a minimum, this is an HTTP server. An HTTP server is software that understands URLs (web addresses) and HTTP (the protocol your browser uses to view webpages). An HTTP server can be accessed through the domain names of the websites it stores, and it delivers the content of these hosted websites to the end user’s device.

At the most basic level, whenever a browser needs a file that is hosted on a web server, the browser requests the file via HTTP. When the request reaches the correct (hardware) web server, the (software) HTTP server accepts the request, finds the requested document, and sends it back to the browser, also through HTTP. (If the server doesn’t find the requested document, it returns a 404 response instead.)

To publish a website, you need either a static or a dynamic web server.

A static web server, or stack, consists of a computer (hardware) with an HTTP server (software). We call it «static» because the server sends its hosted files as-is to your browser.

A dynamic web server consists of a static web server plus extra software, most commonly an application server and a database. We call it «dynamic» because the application server updates the hosted files before sending content to your browser via the HTTP server.

For example, to produce the final webpages you see in the browser, the application server might fill an HTML template with content from a database. Sites like MDN or Wikipedia have thousands of webpages. Typically, these kinds of sites are composed of only a few HTML templates and a giant database, rather than thousands of static HTML documents. This setup makes it easier to maintain and deliver the content.

Deeper dive

To review: to fetch a webpage, your browser sends a request to the web server, which searches for the requested file in its own storage space. Upon finding the file, the server reads it, processes it as needed, and sends it to the browser. Let’s look at those steps in more detail.

Hosting files

First, a web server has to store the website’s files, namely all HTML documents and their related assets, including images, CSS stylesheets, JavaScript files, fonts, and video.

Technically, you could host all those files on your own computer, but it’s far more convenient to store files all on a dedicated web server because:

- A dedicated web server is typically more available (up and running).

- Excluding downtime and system troubles, a dedicated web server is always connected to the Internet.

- A dedicated web server can have the same IP address all the time. This is known as a dedicated IP address. (Not all ISPs provide a fixed IP address for home lines.)

- A dedicated web server is typically maintained by a third party.

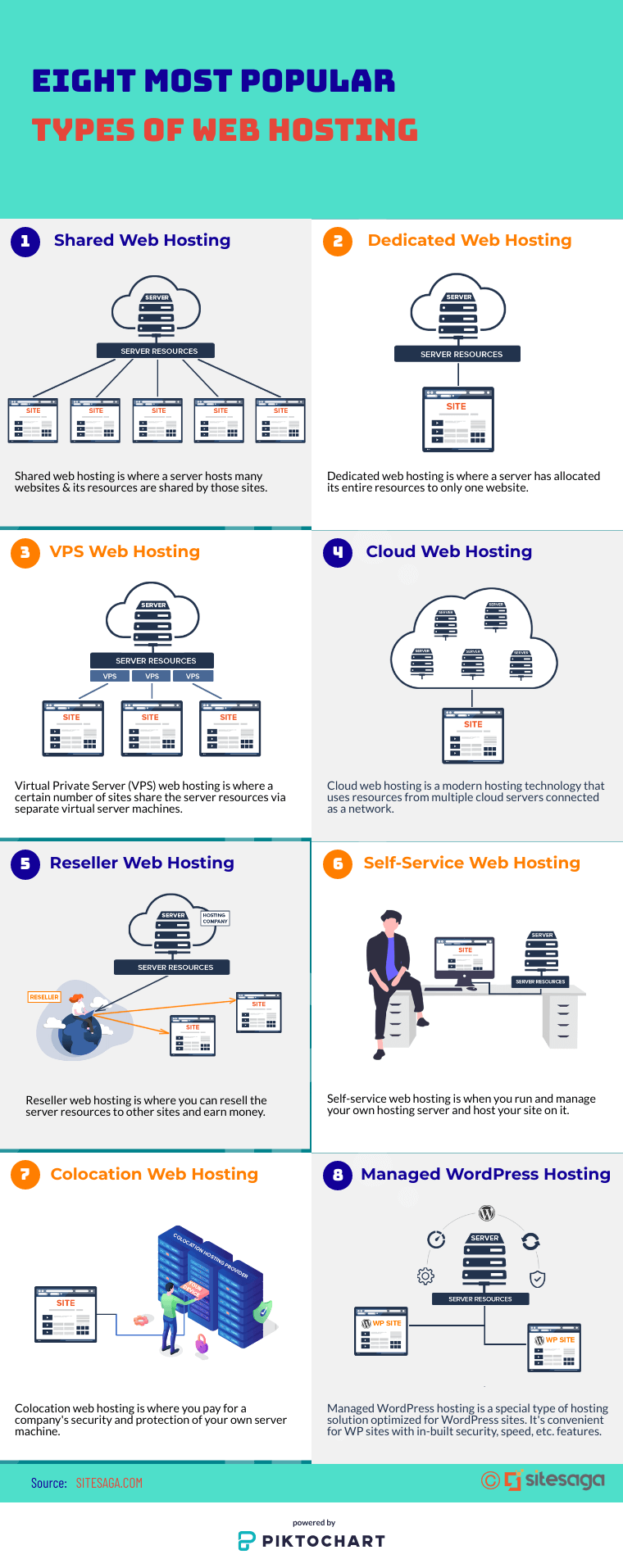

For all these reasons, finding a good hosting provider is a key part of building your website. Examine the various services companies offer. Choose one that fits your needs and budget. (Services range from free to thousands of dollars per month.) You can find more details in this article.

Once you have web hosting service, you must upload your files to your web server.

Communicating through HTTP

Second, a web server provides support for HTTP (Hypertext Transfer Protocol). As its name implies, HTTP specifies how to transfer hypertext (linked web documents) between two computers.

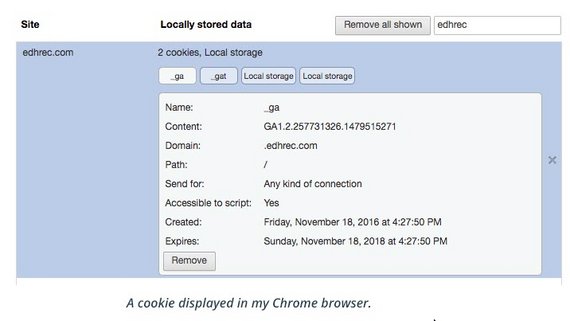

A Protocol is a set of rules for communication between two computers. HTTP is a textual, stateless protocol.

- Textual

-

All commands are plain-text and human-readable.

- Stateless

-

Neither the server nor the client remember previous communications. For example, relying on HTTP alone, a server can’t remember a password you typed or remember your progress on an incomplete transaction. You need an application server for tasks like that. (We’ll cover that sort of technology in other articles.)

HTTP provides clear rules for how a client and server communicate. We’ll cover HTTP itself in a technical article later. For now, just be aware of these things:

- Usually only clients make HTTP requests, and only to servers. Servers respond to a client‘s HTTP request. A server can also populate data into a client cache, in advance of it being requested, through a mechanism called server push.

- When requesting a file via HTTP, clients must provide the file’s URL.

- The web server must answer every HTTP request, at least with an error message.

On a web server, the HTTP server is responsible for processing and answering incoming requests.

- Upon receiving a request, an HTTP server checks if the requested URL matches an existing file.

- If so, the web server sends the file content back to the browser. If not, the server will check if it should generate a file dynamically for the request (see Static vs. dynamic content).

-

If neither of these options are possible, the web server returns an error message to the browser, most commonly

404 Not Found.

The 404 error is so common that some web designers devote considerable time and effort to designing 404 error pages.

Static vs. dynamic content

Roughly speaking, a server can serve either static or dynamic content. Remember that the term static means «served as-is». Static websites are the easiest to set up, so we suggest you make your first site a static site.

The term dynamic means that the server processes the content or even generates it on the fly from a database. This approach provides more flexibility, but the technical stack is more complex, making it dramatically more challenging to build a website.

There are so many application server technologies that it’s difficult to suggest a particular one. Some application servers cater to specific website categories like blogs, wikis, or e-commerce; others are more generic. If you’re building a dynamic website, take the time to choose technology that fits your needs. Unless you want to learn web server programming (which is an exciting area in itself!), you don’t need to create your own application server. That’s just reinventing the wheel.

Next steps

PC clients communicating via the network with a web server serving static content only.

The inside and front of a Dell PowerEdge server, a computer designed to be mounted in a rack mount environment. It is often used as a web server.

Multiple web servers may be used for a high-traffic website.

Web server farm with thousands of web servers used for super-high traffic websites.

A web server is computer software and underlying hardware that accepts requests via HTTP (the network protocol created to distribute web content) or its secure variant HTTPS. A user agent, commonly a web browser or web crawler, initiates communication by making a request for a web page or other resource using HTTP, and the server responds with the content of that resource or an error message. A web server can also accept and store resources sent from the user agent if configured to do so.[1]

[2]

The hardware used to run a web server can vary according to the volume of requests that it needs to handle. At the low end of the range are embedded systems, such as a router that runs a small web server as its configuration interface. A high-traffic Internet website might handle requests with hundreds of servers that run on racks of high-speed computers.

A resource sent from a web server can be a pre-existing file (static content) available to the web server, or it can be generated at the time of the request (dynamic content) by another program that communicates with the server software. The former usually can be served faster and can be more easily cached for repeated requests, while the latter supports a broader range of applications.

Technologies such as REST and SOAP, which use HTTP as a basis for general computer-to-computer communication, as well as support for WebDAV extensions, have extended the application of web servers well beyond their original purpose of serving human-readable pages.

History[edit]

First web proposal (1989) evaluated as «vague but exciting…»

The world’s first web server, a NeXT Computer workstation with Ethernet, 1990. The case label reads: «This machine is a server. DO NOT POWER IT DOWN!!»

This is a very brief history of web server programs, so some information necessarily overlaps with the histories of the web browsers, the World Wide Web and the Internet; therefore, for the sake of clearness and understandability, some key historical information below reported may be similar to that found also in one or more of the above-mentioned history articles.

Initial WWW project (1989-1991)[edit]

In March 1989, Sir Tim Berners-Lee proposed a new project to his employer CERN, with the goal of easing the exchange of information between scientists by using a hypertext system. The proposal titled «HyperText and CERN», asked for comments and it was read by several people. In October 1990 the proposal was reformulated and enriched (having as co-author Robert Cailliau), and finally, it was approved.[3] [4] [5]

Between late 1990 and early 1991 the project resulted in Berners-Lee and his developers writing and testing several software libraries along with three programs, which initially ran on NeXTSTEP OS installed on NeXT workstations:

[6]

[7]

[5]

- a graphical web browser, called WorldWideWeb;

- a portable line mode web browser;

- a web server, later known as CERN httpd.

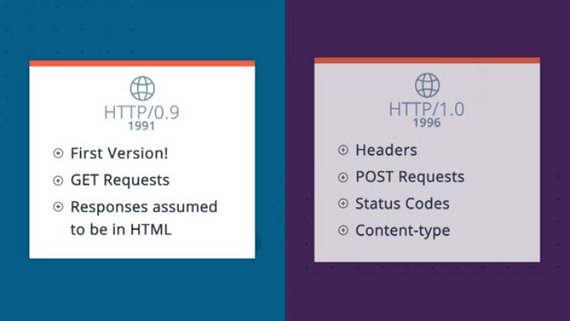

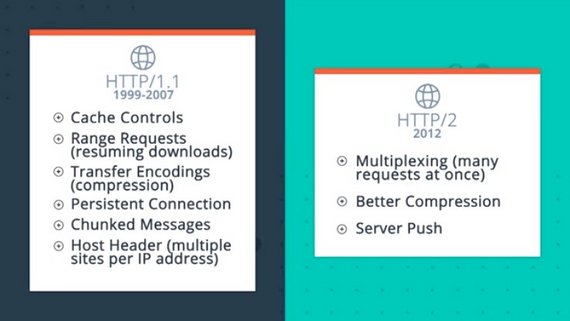

Those early browsers retrieved web pages from web server(s) using a new basic communication protocol that was named HTTP 0.9.

In August 1991 Tim Berner-Lee announced the birth of WWW technology and encouraged scientists to adopt and develop it.[8] Soon after, those programs, along with their source code, were made available to people interested in their usage.[6] In practice CERN informally allowed other people, including developers, etc., to play with and maybe further develop what it has been made till that moment. This was the official birth of CERN httpd. Since then Berner-Lee started promoting the adoption and the usage of those programs along with their porting to other OSs.[5]

Fast and wild development (1991-1995)[edit]

Number of active web sites (1991-1996)[9][10]

In December 1991 the first web server outside Europe was installed at SLAC (U.S.A.).[7] This was a very important event because it started trans-continental web communications between web browsers and web servers.

In 1991-1993 CERN web server program continued to be actively developed by the www group, meanwhile, thanks to the availability of its source code and the public specifications of the HTTP protocol, many other implementations of web servers started to be developed.

In April 1993 CERN issued a public official statement stating that the three components of Web software (the basic line-mode client, the web server and the library of common code), along with their source code, were put in the public domain.[11] This statement freed web server developers from any possible legal issue about the development of derivative work based on that source code (a threat that in practice never existed).

At the beginning of 1994, the most notable among new web servers was NCSA httpd which ran on a variety of Unix-based OSs and could serve dynamically generated content by implementing the POST HTTP method and the CGI to communicate with external programs. These capabilities, along with the multimedia features of NCSA’s Mosaic browser (also able to manage HTML FORMs in order to send data to a web server) highlighted the potential of web technology for publishing and distributed computing applications.

In the second half of 1994, the development of NCSA httpd stalled to the point that a group of external software developers, webmasters and other professional figures interested in that server, started to write and collect patches thanks to the NCSA httpd source code being available to the public domain. At the beginning of 1995 those patches were all applied to the last release of NCSA source code and, after several tests, the Apache HTTP server project was started.[12][13]

At the end of 1994 a new commercial web server, named Netsite, was released with specific features. It was the first one of many other similar products that were developed first by Netscape, then also by Sun Microsystems, and finally by Oracle Corporation.

In mid-1995 the first version of IIS was released, for Windows NT OS, by Microsoft. This marked the entry, in the field of World Wide Web technologies, of a very important commercial developer and vendor that has played and still is playing a key role on both sides (client and server) of the web.

In the second half of 1995 CERN and NCSA web servers started to decline (in global percentage usage) because of the widespread adoption of new web servers which had a much faster development cycle along with more features, more fixes applied, and more performances than the previous ones.

Explosive growth and competition (1996-2014)[edit]

Number of active web sites (1996-2002)[10][14]

At the end of 1996 there were already over fifty known (different) web server software programs that were available to everybody who wanted to own an Internet domain name and/or to host websites.[15] Many of them lived only shortly and were replaced by other web servers.

The publication of RFCs about protocol versions HTTP/1.0 (1996) and HTTP/1.1 (1997, 1999), forced most web servers to comply (not always completely) with those standards. The use of TCP/IP persistent connections (HTTP/1.1) required web servers both to increase a lot the maximum number of concurrent connections allowed and to improve their level of scalability.

Between 1996 and 1999 Netscape Enterprise Server and Microsoft’s IIS emerged among the leading commercial options whereas among the freely available and open-source programs Apache HTTP Server held the lead as the preferred server (because of its reliability and its many features).

In those years there was also another commercial, highly innovative and thus notable web server called Zeus (now discontinued) that was known as one of the fastest and most scalable web servers available on market, at least till the first decade of 2000s, despite its low percentage of usage.

Apache resulted in the most used web server from mid-1996 to the end of 2015 when, after a few years of decline, it was surpassed initially by IIS and then by Nginx. Afterward IIS dropped to much lower percentages of usage than Apache (see also market share).

From 2005-2006 Apache started to improve its speed and its scalability level by introducing new performance features (e.g. event MPM and new content cache).[16][17] As those new performance improvements initially were marked as experimental, they were not enabled by its users for a long time and so Apache suffered, even more, the competition of commercial servers and, above all, of other open-source servers which meanwhile had already achieved far superior performances (mostly when serving static content) since the beginning of their development and at the time of the Apache decline were able to offer also a long enough list of well tested advanced features.

In fact, a few years after 2000 started, not only other commercial and highly competitive web servers, e.g. LiteSpeed, but also many other open-source programs, often of excellent quality and very high performances, among which should be noted Hiawatha, Cherokee HTTP server, Lighttpd, Nginx and other derived/related products also available with commercial support, emerged.

Around 2007-2008 most popular web browsers increased their previous default limit of 2 persistent connections per host-domain (a limit recommended by RFC-2616) [18] to 4, 6 or 8 persistent connections per host-domain, in order to speed up the retrieval of heavy web pages with lots of images, and to mitigate the problem of the shortage of persistent connections dedicated to dynamic objects used for bi-directional notifications of events in web pages.[19] Within a year, these changes, on average, nearly tripled the maximum number of persistent connections that web servers had to manage. This trend (of increasing the number of persistent connections) definitely gave a strong impetus to the adoption of reverse proxies in front of slower web servers and it gave also one more chance to the emerging new web servers that could show all their speed and their capability to handle very high numbers of concurrent connections without requiring too many hardware resources (expensive computers with lots of CPUs, RAM and fast disks).[20]

New challenges (2015 and later years)[edit]

In 2015, RFCs published new protocol version [HTTP/2], and as the implementation of new specifications was not trivial at all, a dilemma arose among developers of less popular web servers (e.g. with a percentage of usage lower than 1% .. 2%), about adding or not adding support for that new protocol version.[21][22]

In fact supporting HTTP/2 often required radical changes to their internal implementation due to many factors (practically always required encrypted connections, capability to distinguish between HTTP/1.x and HTTP/2 connections on the same TCP port, binary representation of HTTP messages, message priority, compression of HTTP headers, use of streams also known as TCP/IP sub-connections and related flow-control, etc.) and so a few developers of those web servers opted for not supporting new HTTP/2 version (at least in the near future) also because of these main reasons:[21][22]

- protocols HTTP/1.x would have been supported anyway by browsers for a very long time (maybe forever) so that there would be no incompatibility between clients and servers in next future;

- implementing HTTP/2 was considered a task of overwhelming complexity that could open the door to a whole new class of bugs that till 2015 did not exist and so it would have required notable investments in developing and testing the implementation of the new protocol;

- adding HTTP/2 support could always be done in future in case the efforts would be justified.

Instead, developers of most popular web servers, rushed to offer the availability of new protocol, not only because they had the work force and the time to do so, but also because usually their previous implementation of SPDY protocol could be reused as a starting point and because most used web browsers implemented it very quickly for the same reason. Another reason that prompted those developers to act quickly was that webmasters felt the pressure of the ever increasing web traffic and they really wanted to install and to try — as soon as possible — something that could drastically lower the number of TCP/IP connections and speedup accesses to hosted websites.[23]

In 2020–2021 the HTTP/2 dynamics about its implementation (by top web servers and popular web browsers) were partly replicated after the publication of advanced drafts of future RFC about HTTP/3 protocol.

Technical overview[edit]

PC clients connected to a web server via Internet

The following technical overview should be considered only as an attempt to give a few very limited examples about some features that may be implemented in a web server and some of the tasks that it may perform in order to have a sufficiently wide scenario about the topic.

A web server program plays the role of a server in a client–server model by implementing one or more versions of HTTP protocol, often including the HTTPS secure variant and other features and extensions that are considered useful for its planned usage.

The complexity and the efficiency of a web server program may vary a lot depending on (e.g.):[1]

- common features implemented;

- common tasks performed;

- performances and scalability level aimed as a goal;

- software model and techniques adopted to achieve wished performance and scalability level;

- target hardware and category of usage, e.g. embedded system, low-medium traffic web server, high traffic Internet web server.

Common features[edit]

Although web server programs differ in how they are implemented, most of them offer the following common features.

These are basic features that most web servers usually have.

- Static content serving: to be able to serve static content (web files) to clients via HTTP protocol.

- HTTP: support for one or more versions of HTTP protocol in order to send versions of HTTP responses compatible with versions of client HTTP requests, e.g. HTTP/1.0, HTTP/1.1 (eventually also with encrypted connections HTTPS), plus, if available, HTTP/2, HTTP/3.

- Logging: usually web servers have also the capability of logging some information, about client requests and server responses, to log files for security and statistical purposes.

A few other more advanced and popular features (only a very short selection) are the following ones.

- Dynamic content serving: to be able to serve dynamic content (generated on the fly) to clients via HTTP protocol.

- Virtual hosting: to be able to serve many websites (domain names) using only one IP address.

- Authorization: to be able to allow, to forbid or to authorize access to portions of website paths (web resources).

- Content cache: to be able to cache static and/or dynamic content in order to speed up server responses;

- Large file support: to be able to serve files whose size is greater than 2 GB on 32 bit OS.

- Bandwidth throttling: to limit the speed of content responses in order to not saturate the network and to be able to serve more clients;

- Rewrite engine: to map parts of clean URLs (found in client requests) to their real names.

- Custom error pages: support for customized HTTP error messages.

Common tasks[edit]

A web server program, when it is running, usually performs several general tasks, (e.g.):[1]

- starts, optionally reads and applies settings found in its configuration file(s) or elsewhere, optionally opens log file, starts listening to client connections / requests;

- optionally tries to adapt its general behavior according to its settings and its current operating conditions;

- manages client connection(s) (accepting new ones or closing the existing ones as required);

- receives client requests (by reading HTTP messages):

- reads and verify each HTTP request message;

- usually performs URL normalization;

- usually performs URL mapping (which may default to URL path translation);

- usually performs URL path translation along with various security checks;

- executes or refuses requested HTTP method:

- optionally manages URL authorizations;

- optionally manages URL redirections;

- optionally manages requests for static resources (file contents):

- optionally manages directory index files;

- optionally manages regular files;

- optionally manages requests for dynamic resources:

- optionally manages directory listings;

- optionally manages program or module processing, checking the availability, the start and eventually the stop of the execution of external programs used to generate dynamic content;

- optionally manages the communications with external programs / internal modules used to generate dynamic content;

- replies to client requests sending proper HTTP responses (e.g. requested resources or error messages) eventually verifying or adding HTTP headers to those sent by dynamic programs / modules;

- optionally logs (partially or totally) client requests and/or its responses to an external user log file or to a system log file by syslog, usually using common log format;

- optionally logs process messages about detected anomalies or other notable events (e.g. in client requests or in its internal functioning) using syslog or some other system facilities; these log messages usually have a debug, warning, error, alert level which can be filtered (not logged) depending on some settings, see also severity level;

- optionally generates statistics about web traffic managed and/or its performances;

- other custom tasks.

Read request message[edit]

Web server programs are able:[24]

[25]

[26]

- to read an HTTP request message;

- to interpret it;

- to verify its syntax;

- to identify known HTTP headers and to extract their values from them.

Once an HTTP request message has been decoded and verified, its values can be used to determine whether that request can be satisfied or not. This requires many other steps, including security checks.

URL normalization[edit]

Web server programs usually perform some type of URL normalization (URL found in most HTTP request messages) in order:

- to make resource path always a clean uniform path from root directory of website;

- to lower security risks (e.g. by intercepting more easily attempts to access static resources outside the root directory of the website or to access to portions of path below website root directory that are forbidden or which require authorization);