
Guide to Extracting Website Data by Using Excel VBA

Microsoft Excel is undoubtedly one of the most widely used software applications around the world and across various disciplines. It not only stores, organizes, and manipulates data using different functions and formulas, but also allows users to access web pages and extract data from them.

In this tutorial, we are going to focus on that last feature by demonstrating how to perform Excel web scraping using VBA. We will briefly go through installing and preparing the environment and then write a VBA macro that fetches data from a web page into Excel. Let’s get started.

What is VBA web scraping?

VBA web scraping is a scraping technique that automatically gathers data from websites into Excel. The scraping itself is made possible by external applications such as the Microsoft Edge browser.

What is VBA?

VBA stands for Visual Basic for Applications. It is a programming language developed by Microsoft that extends the capabilities of Microsoft Office tools and allows users to develop advanced functions and complex automation. VBA can also be used to write macros that pull data from websites into Excel.

Pros and cons of using VBA for scraping

Before we move on to the tutorial, it is worth highlighting some advantages and disadvantages of web scraping to Excel with VBA.

Pros

- Ready to use – VBA is bundled with Microsoft Office, which means that if you already have MS Office installed, you don’t have to install anything else. You can use VBA right away in all the Microsoft Office tools.

- Reliable – Both Microsoft Excel and VBA are developed and maintained by Microsoft. Unlike other development environments, these tools can be upgraded together to the latest version without much hassle.

- Out-of-the-box browser support – VBA web scrapers can take advantage of Microsoft’s latest browser, Microsoft Edge, which makes scraping dynamic websites fairly convenient.

- Complete automation – When running the VBA script, you don’t have to perform any additional tasks or interact with the browser. The VBA script takes care of everything, including logging in, scrolling, and clicking buttons.

Cons

- Only works in Windows – VBA scrapers are not cross-platform; they work only in a Windows environment. While MS Office does support Mac, it is much harder to write a working VBA scraper there. Library support is also limited: for example, you will be unable to use Microsoft Edge.

- Tightly coupled with MS Office – VBA scrapers are highly dependent on MS Office tools, and useful third-party scraping tools are hard to integrate with them.

- Steep learning curve – VBA is less beginner-friendly and a bit harder to learn than modern programming languages such as Python or JavaScript.

Overall, if you are looking to develop a web scraper for the Windows operating system that automatically pulls data from a website, then VBA-based web scraping will be a good choice.

Tutorial

Before we begin, let us make sure we’ve installed all the prerequisites and set up our environment properly so that it will be easier to follow along.

Prerequisites

In this tutorial, we’ll be using Windows 10 and Microsoft Office 10. However, the steps will be the same or similar for other versions of Windows. All you need is a computer running Windows with Microsoft Office installed. If you don’t have Office yet, detailed installation instructions can be found in Microsoft’s official documentation.

Preparing the environment

Now that you’ve installed MS Office, complete the steps below to set up the development environment:

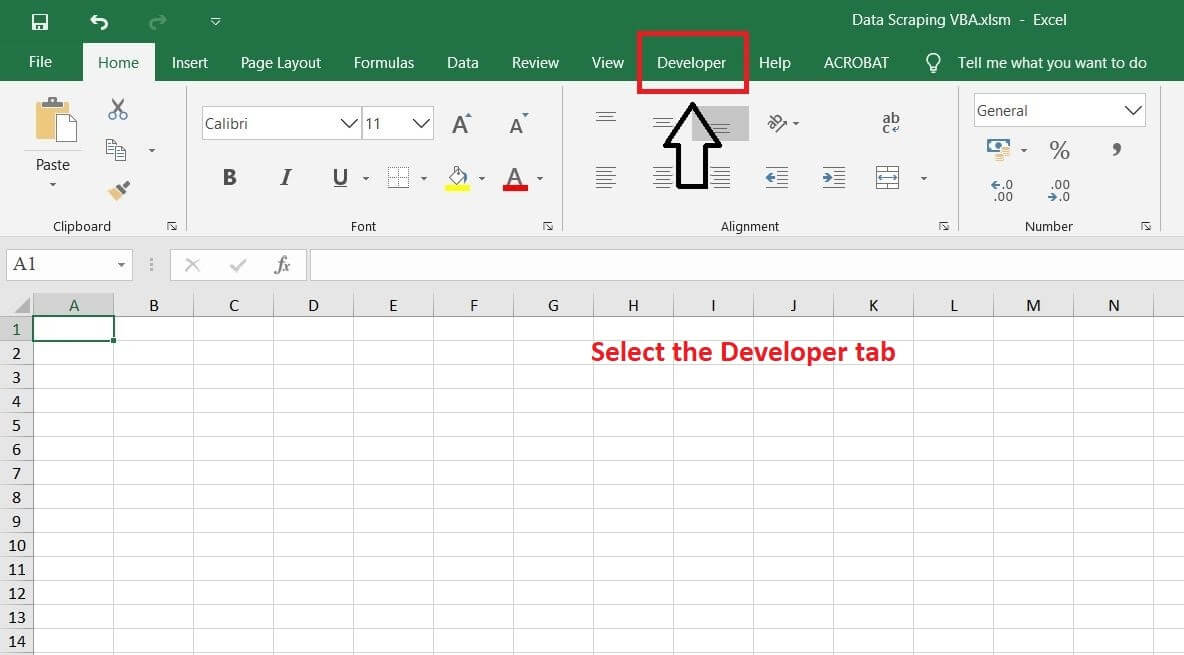

Step 1 — Open Microsoft Excel

From the Start menu or Cortana search, find Microsoft Excel and open the application. You will see an interface similar to the one below:

Click on File

Step 2 — Go to Options to enable developer menu

By default, Excel doesn’t show the Developer tab in the top ribbon. To enable it, we’ll have to go to “Options” from the File menu.

Step 3 — Select Customize Ribbon

Once you click “Options,” a dialog will pop up. From the side menu, select “Customize Ribbon,” tick the check box next to “Developer,” and then click “OK.”

Step 4 — Open Visual Basic application dialog

You’ll now see a new Developer tab on the top ribbon. Click it to expand the developer menu, then select “Visual Basic.”

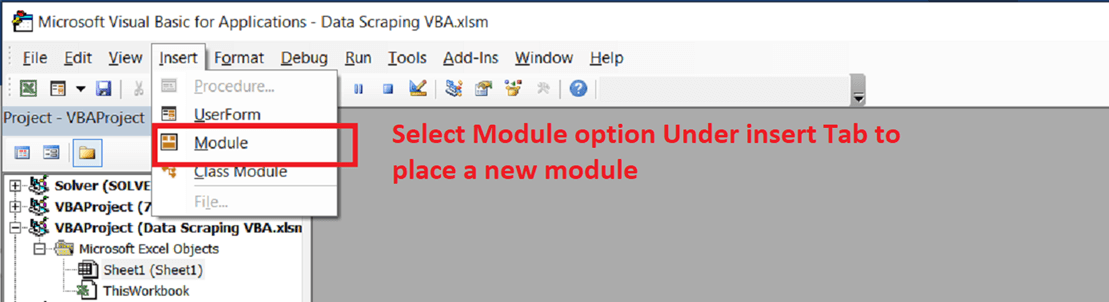

Step 5 — Insert a new Module

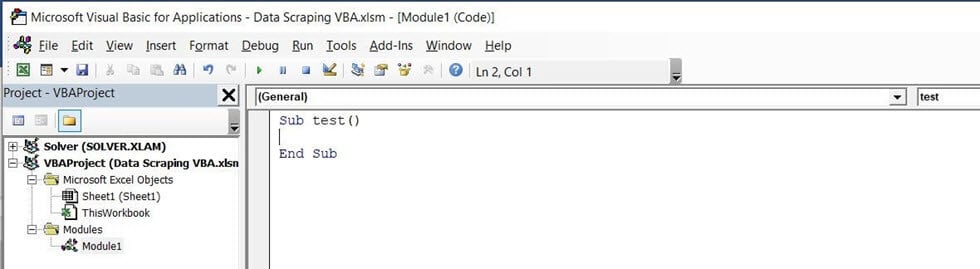

Once you click “Visual Basic,” it will open a new window as demonstrated below:

Click on “Insert” and select “Module” to insert a new module. It will open the module editor.

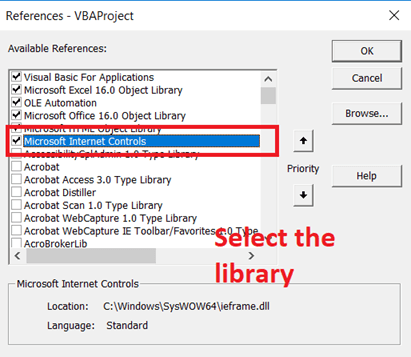

Step 6 — Add new references

From the top menu, select Tools > References…, which will open a new window like the one below. Scroll through the list of available references and find Microsoft HTML Object Library and Microsoft Internet Controls. Tick the check boxes next to both of them to enable these references, then click OK.
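If you would rather not tick these references, for example because the workbook will be shared with colleagues whose machines may not have them set, a late-bound variant should also work. The sketch below is an assumption-based alternative rather than part of this tutorial: the macro name `scrape_quotes_late_bound` is hypothetical, and because the Microsoft Internet Controls reference is absent, the literal value 4 stands in for `READYSTATE_COMPLETE`.

```vba
' Late-bound sketch (hypothetical macro name): no Tools > References needed.
Sub scrape_quotes_late_bound()
    Dim browser As Object   ' InternetExplorer.Application, created at run time
    Dim page As Object      ' HTML document returned by the browser

    Set browser = CreateObject("InternetExplorer.Application")
    browser.Visible = True
    browser.Navigate "https://quotes.toscrape.com"

    ' 4 = READYSTATE_COMPLETE (the named constant needs the Internet Controls reference)
    Do While browser.Busy Or browser.ReadyState <> 4
        DoEvents
    Loop

    Set page = browser.Document
    MsgBox page.Title   ' quick check that the page loaded

    browser.Quit
    Set browser = Nothing
End Sub
```

The trade-off is that late binding gives up IntelliSense and compile-time checks, so a mistyped member name only surfaces when the macro runs.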

That’s it! Our development environment is all set. Let’s write our first Excel VBA scraper.

Step 7 — Automate Microsoft Edge to open a website

Now, it’s time to update our newly created module to open the following website: https://quotes.toscrape.com. In the module editor, insert the following code:

```vba
Sub scrape_quotes()
    Dim browser As InternetExplorer
    Dim page As HTMLDocument

    Set browser = New InternetExplorer
    browser.Visible = True
    browser.navigate "https://quotes.toscrape.com"
End Sub
```

We are defining a subroutine named scrape_quotes(), which runs when we execute the macro. Inside the subroutine, we declare two object variables, “browser” and “page”.

The “browser” object will allow us to interact with Microsoft Edge. We also make the browser visible so that we can see it in action. The browser.navigate method tells the browser object to open the URL. The output will be similar to this:

Note: You might be wondering why we write “InternetExplorer” to interact with Microsoft Edge. VBA originally supported only Internet Explorer-based automation, but once Microsoft discontinued Internet Explorer, it deployed updates so that VBA’s InternetExplorer object can run the Microsoft Edge browser in IE mode. The above code will also work on older Windows versions that still have Internet Explorer instead of Edge.

Step 8 — Scrape data using VBA script and save it to Excel

The next step is to scrape the quotes and authors from the website. For simplicity, we’ll store them in the first sheet of the Excel workbook and grab only the top 5 quotes for now.

Let’s begin by defining two new objects – one for quotes and another for authors.

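```vba
Dim quotes As Object
Dim authors As Object
```

After navigating to the website, we’ll pause until the page has finished loading by looping while the browser is busy or not yet in the complete ready state:

```vba
Do While browser.Busy Or browser.readyState <> READYSTATE_COMPLETE
    DoEvents
Loop
```

Next, grab the quotes and authors from the HTML document. On quotes.toscrape.com, each quote’s text sits in an element with the class “text” and each author’s name in an element with the class “author”:

```vba
Set page = browser.document
Set quotes = page.getElementsByClassName("text")
Set authors = page.getElementsByClassName("author")
```

Use a For loop to populate the Excel rows with the extracted data by calling the Cells property and passing the row and column position. The collections are zero-based, so row num receives item num - 1:

```vba
For num = 1 To 5
    ThisWorkbook.Worksheets(1).Cells(num, 1).Value = quotes.Item(num - 1).innerText
    ThisWorkbook.Worksheets(1).Cells(num, 2).Value = authors.Item(num - 1).innerText
Next num
```

Finally, close the browser by calling the Quit method. The code below closes the browser window:

```vba
browser.Quit
```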

Output

Now, if we run the script, it’ll open Microsoft Edge, browse to the quotes.toscrape.com website, grab the top 5 quotes from the list, and save them to the first sheet of the current Excel file.
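The loop above reads quote texts and authors from two separate collections and stops at five, and the wait loop has no upper bound. A slightly more robust variant, sketched below, assumes that each quote on quotes.toscrape.com sits in a `div` with the class “quote” containing one element with the class “text” and one with the class “author”. It walks every container on the page and reads both values from inside it, so each quote stays paired with its author, and it gives up waiting after a while. The macro name `scrape_all_quotes` and the 30-second limit are illustrative choices, not part of the original tutorial; it needs the same two references as Step 6.

```vba
Sub scrape_all_quotes()
    Dim browser As InternetExplorer
    Dim page As HTMLDocument
    Dim container As Object
    Dim ws As Worksheet
    Dim started As Single
    Dim r As Long

    Set ws = ThisWorkbook.Worksheets(1)
    Set browser = New InternetExplorer
    browser.Visible = True
    browser.navigate "https://quotes.toscrape.com"

    ' Wait for the page, but stop after 30 seconds instead of looping forever
    started = Timer
    Do While browser.Busy Or browser.readyState <> READYSTATE_COMPLETE
        DoEvents
        If Timer - started > 30 Then
            MsgBox "Timed out waiting for the page."
            browser.Quit
            Exit Sub
        End If
    Loop

    Set page = browser.document
    r = 1
    ' One container per quote: read the text and the author from inside it
    For Each container In page.getElementsByClassName("quote")
        ws.Cells(r, 1).Value = container.getElementsByClassName("text").Item(0).innerText
        ws.Cells(r, 2).Value = container.getElementsByClassName("author").Item(0).innerText
        r = r + 1
    Next container

    browser.Quit
End Sub
```

Reading both fields from the same container is the design choice that matters here: if a quote were ever missing an author element, two independent collections would silently drift out of step.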

Source Code

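Below is the full source code:

```vba
Sub scrape_quotes()
    Dim browser As InternetExplorer
    Dim page As HTMLDocument
    Dim quotes As Object
    Dim authors As Object
    Dim num As Long

    Set browser = New InternetExplorer
    browser.Visible = True
    browser.navigate "https://quotes.toscrape.com"

    ' Wait until the page has finished loading
    Do While browser.Busy Or browser.readyState <> READYSTATE_COMPLETE
        DoEvents
    Loop

    Set page = browser.document
    ' Quote texts have the class "text"; author names have the class "author"
    Set quotes = page.getElementsByClassName("text")
    Set authors = page.getElementsByClassName("author")

    ' The collections are zero-based, so item 0 is the first quote
    For num = 1 To 5
        ThisWorkbook.Worksheets(1).Cells(num, 1).Value = quotes.Item(num - 1).innerText
        ThisWorkbook.Worksheets(1).Cells(num, 2).Value = authors.Item(num - 1).innerText
    Next num

    browser.Quit
End Sub
```

Conclusion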

Excel web scraping with VBA is a great choice for Windows automation and web extraction. It’s reliable and ready to use, which means you won’t need to install anything extra or take additional setup steps. For your convenience, you can also access this tutorial in our GitHub repository.

The biggest disadvantage of VBA web scraping that was highlighted in the article is the lack of cross-platform support. However, if you want to develop web scrapers that can be used on multiple operating systems such as Linux or Mac, Excel Web Query can also be an option. Of course, we also recommend exploring web scraping with Python – one of the most popular programming languages that is capable of developing complex network applications while maintaining its simplified syntax.

About the author

Yelyzaveta Nechytailo

Senior Content Manager

Yelyzaveta Nechytailo is a Senior Content Manager at Oxylabs. After working as a writer in fashion, e-commerce, and media, she decided to switch her career path and immerse in the fascinating world of tech. And believe it or not, she absolutely loves it! On weekends, you’ll probably find Yelyzaveta enjoying a cup of matcha at a cozy coffee shop, scrolling through social media, or binge-watching investigative TV series.

All information on Oxylabs Blog is provided on an “as is” basis and for informational purposes only. We make no representation and disclaim all liability with respect to your use of any information contained on Oxylabs Blog or any third-party websites that may be linked therein. Before engaging in scraping activities of any kind you should consult your legal advisors and carefully read the particular website’s terms of service or receive a scraping license.


VBA Code to Extract Data from Website to Excel

To perform HTML screen scraping, or to automatically pull data from a website into Excel with VBA, use the simple code on this page.

Programmers and software testing professionals use this technique when Excel’s web query option cannot fetch the required data from a web page, or when the fetched data has formatting issues. For example, if we need stock quotes in Excel, we can use the Excel web query option, but we cannot hand-pick only the data we need.

Let’s see how to pull data from a website automatically with Excel VBA code.


How to automatically pull data from Website to Excel using VBA?

HTML screen scraping can be done by creating instances of the object “MSXML2.XMLHTTP” or “MSXML2.ServerXMLHTTP”, as explained in the code snippet below.

Use this code in VBA to pull data from a website, i.e. the HTML source of a page, and place the content in an Excel sheet:

- Create a new Excel workbook.

- Press Alt + F11 to open the VB editor.

- Insert a module and paste the code below into it.

- Change the URL in the code.

- Run the code by pressing F5.

```vba
Private Sub HTML_VBA_Pull_Data_From_Website_To_Excel()
    Dim oXMLHTTP As Object
    Dim sPageHTML As String
    Dim sURL As String

    'Change the URL before executing the code. URL to extract data from.
    sURL = "http://WWW.WebSiteName.com"

    'Pull/Extract data from website to Excel using VBA
    Set oXMLHTTP = CreateObject("MSXML2.XMLHTTP")
    oXMLHTTP.Open "GET", sURL, False
    oXMLHTTP.send
    sPageHTML = oXMLHTTP.responseText

    'Get webpage data into Excel (a cell holds at most 32,767 characters)
    ThisWorkbook.Sheets(1).Cells(1, 1) = Left$(sPageHTML, 32767)

    MsgBox "XMLHTTP Fetch Completed"
End Sub
```

Once you run the above code, it will automatically pull the website’s data into Excel as HTML code.

You can then parse the HTML elements of the web page as explained in our other article, How To Extract HTML Table From Website?

It uses the same VBA HTML parsing approach to show how to process each HTML element after the web page content has been extracted.

Clear the Cache Before Every VBA Data Extraction

Run the code below every time before executing the code above, because after each execution the extracted website data may remain in the cache.


If you run the code again without clearing the cache, the old data may be displayed again. To avoid this, use the code below:

```vba
Shell "RunDll32.exe InetCpl.cpl,ClearMyTracksByProcess 11"
```

Note: This command clears Internet Explorer’s browsing data, including its cache and cookies, so please execute it with caution. Your previous sessions and any unfinished work that depends on them will be deleted from the browser cache.
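A less drastic alternative, sketched here rather than taken from the original article, is to ask for a fresh copy on each request instead of wiping the whole cache. The function below (its name and the `nocache` parameter are hypothetical) sends `Cache-Control` and `Pragma` no-cache headers and appends a throwaway timestamp to the URL. Whether the server honours the headers is up to the server, and the extra parameter assumes the site ignores query parameters it does not recognise.

```vba
Function FetchFreshHTML(ByVal sURL As String) As String   ' hypothetical name
    Dim oXMLHTTP As Object
    Dim sSep As String

    ' Append a cache-busting parameter so a cached copy of the URL is not reused
    If InStr(sURL, "?") > 0 Then sSep = "&" Else sSep = "?"
    sURL = sURL & sSep & "nocache=" & Format(Now, "yyyymmddhhnnss")

    Set oXMLHTTP = CreateObject("MSXML2.XMLHTTP")
    oXMLHTTP.Open "GET", sURL, False
    ' Ask any cache along the way for a fresh response
    oXMLHTTP.setRequestHeader "Cache-Control", "no-cache"
    oXMLHTTP.setRequestHeader "Pragma", "no-cache"
    oXMLHTTP.send

    FetchFreshHTML = oXMLHTTP.responseText
End Function
```

It could replace the request in the macro above, for example `ThisWorkbook.Sheets(1).Cells(1, 1) = Left$(FetchFreshHTML(sURL), 32767)`, leaving your browser’s cookies and sessions untouched.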

Detect Broken URL or Dead Links In Webpage

Sometimes we only need to check whether a URL is active. In that case, insert the If condition below right after the .send command.

If the URL is not active, this snippet displays an error message.

```vba
oXMLHTTP.send

If oXMLHTTP.Status <> 200 Then
    MsgBox sURL & ": URL Link is not Active"
End If
```

Search Engines Extract Website Data

Web browsers such as IE, Chrome, and Firefox fetch data from web pages and present it to us in a readable format.

Why would we download website data with a program? Some programmers build such applications and call them spiders or crawlers, because they crawl through the web and extract HTML content from different websites.

Internet search engines: Google, Bing, etc. have crawler programs that download web page content and index it. Presumably nobody is trying to build a search engine with Excel, but you may want to process website content for other purposes, such as counting words or checking specific URLs, descriptions, or the correctness of email IDs.

On-page SEO analysis: Webmasters fetch their own website data for SEO analysis, and marketers do it to extract email IDs for promoting their products.

Excel query fails: Some websites are updated very frequently, such as stock indexes, Facebook timelines, sports updates, commodity prices, and news. In such cases, nobody wants to click ‘Refresh Page’ over and over.

Use this VBA code to extract data from a website and automate content processing.

Additional References

Although this article explained how to fetch web page content, it did not discuss the options available with MSXML2.ServerXMLHTTP. To learn more about these options, visit the MSDN Library.
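As one example of those options, `MSXML2.ServerXMLHTTP` lets you set timeouts with `setTimeouts` (resolve, connect, send, and receive, in milliseconds). The sketch below combines that with the status check from the dead-link section so a whole column of links can be tested. The function and macro names, the timeout values, and the sheet layout (URLs in column A, results in column B) are illustrative assumptions. A host that cannot be reached at all makes `.send` raise a run-time error instead of returning a status, so the error is trapped and treated as a dead link. It sends a HEAD request to avoid downloading page bodies; if a server rejects HEAD, switch it to GET.

```vba
Function IsUrlActive(ByVal sURL As String) As Boolean   ' hypothetical name
    Dim oHTTP As Object

    On Error GoTo Failed
    Set oHTTP = CreateObject("MSXML2.ServerXMLHTTP")
    ' resolve, connect, send, receive timeouts in milliseconds
    oHTTP.setTimeouts 5000, 5000, 10000, 10000
    oHTTP.Open "HEAD", sURL, False
    oHTTP.send
    IsUrlActive = (oHTTP.Status = 200)
    Exit Function

Failed:
    IsUrlActive = False   ' DNS failure, timeout, refused connection, empty URL, etc.
End Function

Sub Check_Links_In_Column_A()   ' hypothetical name
    Dim r As Long
    With ThisWorkbook.Sheets(1)
        ' URLs in column A from row 1 down; result written next to each in column B
        For r = 1 To .Cells(.Rows.Count, "A").End(xlUp).Row
            .Cells(r, 2).Value = IIf(IsUrlActive(.Cells(r, 1).Value), "Active", "Not active")
        Next r
    End With
End Sub
```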

What is Data Scraping?

Data scraping is a technique for extracting the information you need from an HTML web page into a file on your local machine. That file is usually an Excel file, a Word file, or a file of any other Microsoft Office application. It helps channel critical information out of the web page.

Data scraping is especially handy for research-based projects that are carried out daily and depend entirely on the internet and websites. To illustrate, take the example of a day trader who runs an Excel macro that pulls market information from a finance website into an Excel sheet using VBA.

In this tutorial, you will learn:

- What is Data Scraping?

- How to prepare Excel Macro before performing Data Scraping using Internet Explorer?

- How to Open Internet Explorer using Excel VBA?

- How to Open Website in Internet Explorer using VBA?

- How to Scrape information from Website using VBA?

How to prepare Excel Macro before performing Data Scraping using Internet Explorer?

There are certain prerequisites that have to be completed in the Excel macro file before getting into the process of data scraping in Excel.

These prerequisites are as follows:

Step 1) Open a macro-enabled Excel workbook and enable Excel’s Developer tab.

Step 2) Select the Visual Basic option on the Developer ribbon.

Step 3) Insert a new module.

Step 4) Initialize a new subroutine

```vba
Sub test()

End Sub
```

The module should look as follows:

Step 5) Open References from the Tools menu and reference Microsoft HTML Object Library and Microsoft Internet Controls.

These libraries have to be referenced by the module because they allow the macro to open Internet Explorer and work with HTML documents.

Now the Excel file is ready to interact with Internet Explorer. The next step is to add the macro code that performs the data scraping.

How to Open Internet Explorer using Excel VBA?

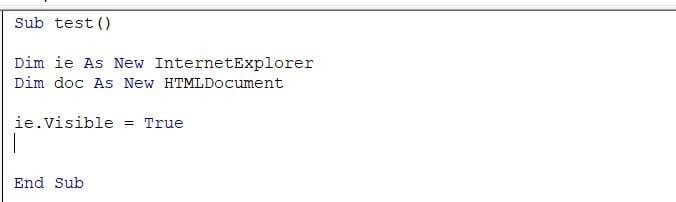

Step 1) Declare the variables in the subroutine as shown below:

```vba
Sub test()
    Dim ie As New InternetExplorer
    Dim doc As New HTMLDocument
End Sub
```

Step 2) To open Internet Explorer using VBA, add ie.Visible = True and press F5.

```vba
Sub test()
    Dim ie As New InternetExplorer
    Dim doc As New HTMLDocument
    ie.Visible = True
End Sub
```

The module would look as follows:

How to Open Website in Internet explorer using VBA?

Here are the steps to open a website in Internet Explorer using VBA:

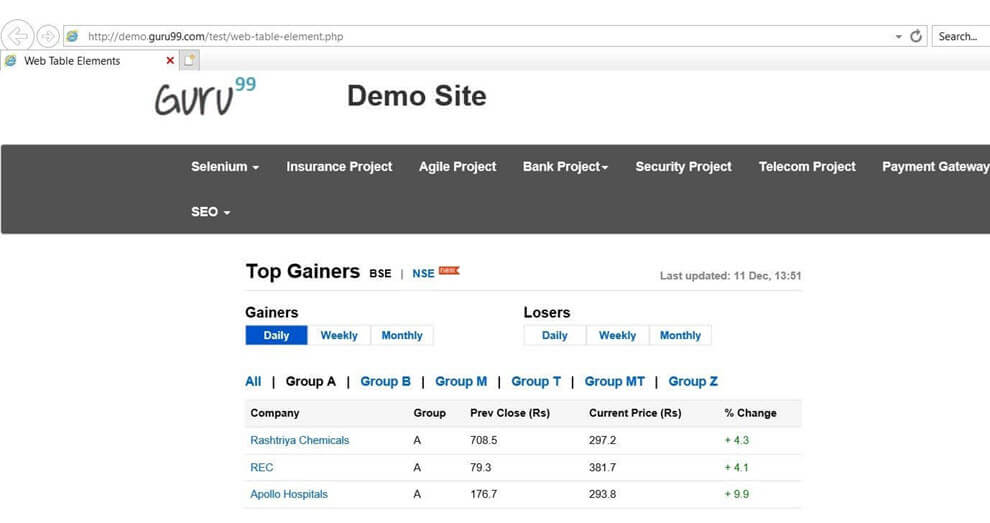

Step 1) Once you are able to open Internet Explorer using Excel VBA, the next step is to open a website with VBA. This is done with the Navigate method, which takes the URL as a string in double quotes. Follow the steps as displayed:

```vba
Sub test()
    Dim ie As New InternetExplorer
    Dim doc As New HTMLDocument
    Dim ecoll As Object

    ie.Visible = True
    ie.navigate "http://demo.guru99.com/test/web-table-element.php"
    ' Wait until the page has finished loading
    Do
        DoEvents
    Loop Until ie.readyState = READYSTATE_COMPLETE
End Sub
```

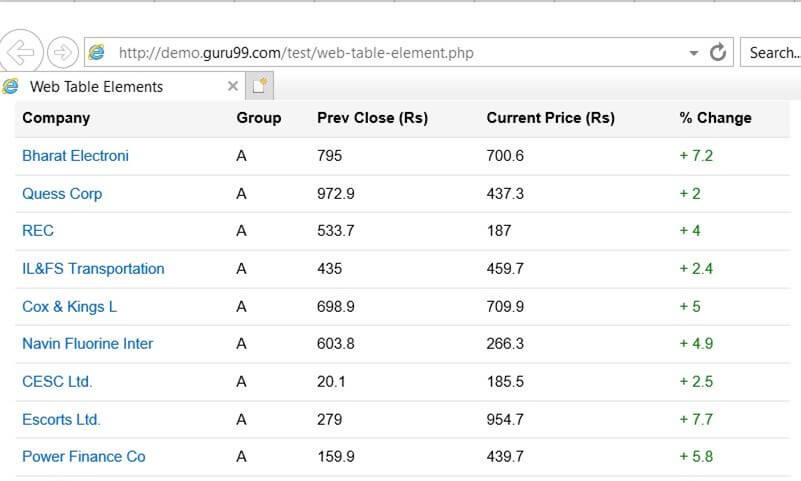

Step 2) Press F5 to execute the macro. The following web page will open:

Now the Excel macro is ready to perform the scraping. The next step shows how the information can be extracted from Internet Explorer using VBA.

How to Scrape information from Website using VBA?

Suppose the day trader wants to access the data from the website daily. Each time the day trader clicks a button, the macro should automatically pull the market data into Excel.

On the above website, we need to inspect an element and observe how the data is structured.

Step 1) View the HTML source below by pressing Ctrl + Shift + I:

```html
<table class="datatable">
  <thead>
    <tr>
      <th>Company</th>
      <th>Group</th>
      <th>Pre Close (Rs)</th>
      <th>Current Price (Rs)</th>
      <th>% Change</th>
    </tr>
```

The source code would be as follows:

```vba
Sub test()
    Dim ie As New InternetExplorer
    Dim doc As New HTMLDocument
    Dim ecoll As Object

    ie.Visible = True
    ie.navigate "http://demo.guru99.com/test/web-table-element.php"
    Do
        DoEvents
    Loop Until ie.readyState = READYSTATE_COMPLETE
    Set doc = ie.document
End Sub
```

As can be seen, the data is structured as a single HTML table. Therefore, to pull all the data from the HTML table, the macro has to collect the data in the form of a collection.

The collection is then pasted into Excel. To achieve the desired results, perform the steps below:

Step 2) Initialize the Html document in the subroutine

The VBA module would look as follows: –

Step 3) Initialize the collection element present in the HTML document

The VBA module would look as follows: –

```vba
Sub test()
    Dim ie As New InternetExplorer
    Dim doc As New HTMLDocument
    Dim ecoll As Object

    ie.Visible = True
    ie.navigate "http://demo.guru99.com/test/web-table-element.php"
    Do
        DoEvents
    Loop Until ie.readyState = READYSTATE_COMPLETE

    Set doc = ie.document
    ' Collection of all <table> elements on the page
    Set ecoll = doc.getElementsByTagName("table")
End Sub
```

Step 4) Initialize the excel sheet cells with the help of nested loop as shown

The VBA module would look as follows: –

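```vba
Sub test()
    Dim ie As New InternetExplorer
    Dim doc As New HTMLDocument
    Dim ecoll As Object
    Dim tr As Object, cell As Object
    Dim r As Long, c As Long

    ie.Visible = True
    ie.navigate "http://demo.guru99.com/test/web-table-element.php"
    Do
        DoEvents
    Loop Until ie.readyState = READYSTATE_COMPLETE

    Set doc = ie.document
    Set ecoll = doc.getElementsByTagName("table")

    ' Nested loop: every row of the first table, then every cell (th or td) in that row
    r = 1
    For Each tr In ecoll.Item(0).getElementsByTagName("tr")
        c = 1
        For Each cell In tr.Children
            ThisWorkbook.Worksheets(1).Cells(r, c).Value = cell.innerText
            c = c + 1
        Next cell
        r = r + 1
    Next tr
End Sub
```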

Worksheet cells can be filled either through the sheet’s Range property or through its Cells property. To keep the VBA script simple, the collection data is written through the Cells property of sheet 1 in the workbook.
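For comparison, the Range-based option might look like the sketch below: the table is first copied into a two-dimensional array and then written to the sheet in a single assignment, which is usually faster than writing cell by cell. It assumes `doc` already holds the loaded page (as after `Set doc = ie.document` above), that the data sits in the first `<table>` on the page, and that no cells span several columns; the procedure name is hypothetical.

```vba
Sub WriteTableWithRange(doc As HTMLDocument)   ' hypothetical name
    Dim tbl As Object
    Dim data() As Variant
    Dim nRows As Long, nCols As Long, i As Long, j As Long

    Set tbl = doc.getElementsByTagName("table").Item(0)
    If tbl Is Nothing Then Exit Sub

    nRows = tbl.Rows.Length
    ' Size the array using the widest row in the table
    For i = 0 To nRows - 1
        If tbl.Rows.Item(i).Cells.Length > nCols Then nCols = tbl.Rows.Item(i).Cells.Length
    Next i
    If nRows = 0 Or nCols = 0 Then Exit Sub

    ' Copy every cell's text into a 1-based array
    ReDim data(1 To nRows, 1 To nCols)
    For i = 0 To nRows - 1
        For j = 0 To tbl.Rows.Item(i).Cells.Length - 1
            data(i + 1, j + 1) = tbl.Rows.Item(i).Cells.Item(j).innerText
        Next j
    Next i

    ' One write for the whole block, starting at A1 of the first sheet
    ThisWorkbook.Worksheets(1).Range("A1").Resize(nRows, nCols).Value = data
End Sub
```

Inside the `test` macro, it would be called as `WriteTableWithRange doc` once the page has loaded.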

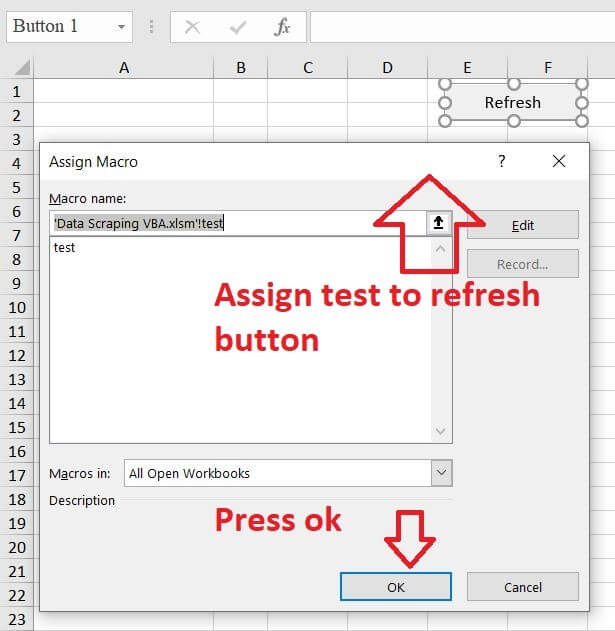

Once the macro script is ready, assign the subroutine to an Excel button and exit the VBA module. Label the button “Refresh” or any other suitable name. In this example, the button is labeled Refresh.
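The button can be drawn by hand from Developer > Insert > Form Controls > Button. If you prefer to create it from code, a minimal sketch might look like this. It assumes the scraping macro is the `test` subroutine from this tutorial; the procedure name, position, size, and caption are arbitrary choices.

```vba
Sub AddRefreshButton()   ' hypothetical name
    Dim btn As Shape
    With ThisWorkbook.Worksheets(1)
        ' Form-control button: left, top, width, height in points (arbitrary values)
        Set btn = .Shapes.AddFormControl(xlButtonControl, 400, 10, 100, 30)
    End With
    btn.OnAction = "test"                       ' macro to run when clicked
    btn.TextFrame.Characters.Text = "Refresh"   ' button caption
End Sub
```

Run it once; each later click on the button runs `test` again and refreshes the data.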

Step 5) Press the Refresh button to get the output below.

Step 6) Compare the results in Excel with the results in Internet Explorer.

Summary:

- Data scraping allows users to extract only the information they want.

- Scraping can be performed using Internet Explorer.

- Scraping is slower with Internet Explorer; however, it delivers the desired results to the user.

- Scraping should be performed with care and caution, as it can harm or crash the system used for scraping.

This blog shows how to go through a website, making sense of its HTML within VBA. We’ll break the problem down into several chunks, to wit:

- Defining what we want to achieve.

- Analysing the target URL (the target website).

- Referencing the required applications.

- Getting at the underlying HTML.

- Parsing the data into Excel.

Don’t forget that websites change all the time, so this code may no longer work when you try it out, as the format of the StackOverflow website may have changed.

The following code also assumes that you have Internet Explorer on your computer (something which will be true of nearly all Windows computers).

Step 1 — Defining the problem

At the time of writing, here is what the above-mentioned StackOverflow website’s home page looks like:

The home page lists out the questions which have been asked most recently.

From this we want to extract the raw questions, with just the votes, views and author information:

What the answer should look like. The list of questions changes by the second, so the data is different!

To do this we need to learn the structure of the HTML behind the page.

To scrape websites you need to know a little HTML, and knowing a lot will help you enormously.

Step 2 — Analysing the target website

In any browser you can right-click and choose to show the underlying HTML for the page:

How to show the HTML for a webpage in Firefox (the Internet Explorer, Chrome, Safari and other browser options will be similar).

The web page begins with Top Questions, so let’s find that:

Press Ctrl + F to find the given text.

Analysing the HTML which follows this shows that the questions are all encased in a div tag with the id question-mini-list:

We’ll loop over all of the HTML elements within this div tag.

Here’s the HTML for a single question:

The question contains all of the data we want — we just have to get at it!

Here’s how we’ll get at the four bits of data we want:

| Data | Method |
|---|---|
| Id | We’ll find the div tag with class question-summary narrow, and extract the question number from its id. |
| Votes | We’ll find the div tag with class name votes, and look at the inner text for this (i.e. the contents of the div tag, ignoring any HTML tags). By stripping out any blanks and the word vote or votes, we’ll end up with the data we want. |
| Views | An identical process, but using views instead of votes. |
| Author | We’ll find the tag with class name started, and look at the inner text of the second tag within this (since there are two hyperlinks, and it’s the second one which contains the author’s name). |

Step 3 — Referencing the required applications

To get this macro to work, we’ll need to:

- Create a new copy of Internet Explorer in memory; then

- Work with the elements on the HTML page we find.

To do this, you’ll need to reference two object libraries:

| Library | Used for |
|---|---|
| Microsoft Internet Controls | Getting at Internet Explorer in VBA |
| Microsoft HTML Object Library | Getting at parts of an HTML page |

To do this, in VBA choose Tools > References from the menu, then tick the two options shown:

You’ll need to scroll down quite a way to find each of these libraries to reference.

Now we can begin writing the VBA to get at our data!

Step 4 — Getting at the underlying HTML

Let’s now show some code for loading up the HTML at a given web page.

The main problem is that we have to wait until the web browser has responded, so we keep “doing any events” until it returns the correct state out of the following choices:

```vba
Enum READYSTATE
    READYSTATE_UNINITIALIZED = 0
    READYSTATE_LOADING = 1
    READYSTATE_LOADED = 2
    READYSTATE_INTERACTIVE = 3
    READYSTATE_COMPLETE = 4
End Enum
```

Here’s a subroutine to get at the text behind a web page:

```vba
Sub ImportStackOverflowData()
    Dim ie As InternetExplorer
    Dim html As HTMLDocument

    ' Open Internet Explorer invisibly in memory and go to the website
    Set ie = New InternetExplorer
    ie.Visible = False
    ie.navigate "http://stackoverflow.com/"

    ' Wait until the page has loaded
    Do While ie.readyState <> READYSTATE_COMPLETE
        Application.StatusBar = "Trying to go to StackOverflow ..."
        DoEvents
    Loop

    ' Show the text of the HTML document returned
    Set html = ie.document
    MsgBox html.DocumentElement.innerHTML

    ' Close Internet Explorer and reset the status bar
    ie.Quit
    Set ie = Nothing
    Application.StatusBar = ""
End Sub
```

What this does is:

- Creates a new copy of Internet Explorer to run invisibly in memory.

- Navigates to the StackOverflow home page.

- Waits until the home page has loaded.

- Loads up an HTML document, and shows its text.

- Closes Internet Explorer.

You could now parse the HTML using the Document Object Model (for those who know this), but we’re going to do it the slightly harder way, by finding tags and then looping over their contents.
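For readers who do want to lean on the DOM, MSHTML documents also expose `querySelectorAll`, which takes a CSS selector and returns a node list you index from 0. The sketch below is not the approach this blog takes. It assumes the class names described in Step 2 (`question-summary`, `votes`) still exist on the page, which may no longer be true, and that the page renders in a standards document mode where `querySelectorAll` is available; the procedure name is hypothetical.

```vba
Sub ListVotesWithSelectors(html As HTMLDocument)   ' hypothetical name
    Dim nodes As Object
    Dim i As Long

    ' Every element with class "votes" inside an element with class "question-summary"
    Set nodes = html.querySelectorAll(".question-summary .votes")
    For i = 0 To nodes.Length - 1
        Debug.Print Trim$(nodes.Item(i).innerText)
    Next i
End Sub
```

It would be called as `ListVotesWithSelectors html` right after `Set html = ie.document`; the results appear in the Immediate window.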

Step 5 — Parsing the HTML

Here’s the entire subroutine, in parts, with comments for the HTML bits.

Start by getting a handle on the HTML document, as above:

```vba
Sub ImportStackOverflowData()
    Dim ie As InternetExplorer
    Dim html As HTMLDocument

    Set ie = New InternetExplorer
    ie.Visible = False
    ie.navigate "http://stackoverflow.com/"
    Do While ie.readyState <> READYSTATE_COMPLETE
        Application.StatusBar = "Trying to go to StackOverflow ..."
        DoEvents
    Loop
    Set html = ie.document
    Set ie = Nothing
    Application.StatusBar = ""
```

Now put titles in row 3 of the spreadsheet:

```vba
    Cells.Clear
    Range("A3").Value = "Question id"
    Range("B3").Value = "Votes"
    Range("C3").Value = "Views"
    Range("D3").Value = "Person"
```

We’re going to need a fair few variables (I don’t guarantee that this is the most efficient solution!):

```vba
    Dim QuestionList As IHTMLElement
    Dim Questions As IHTMLElementCollection
    Dim Question As IHTMLElement
    Dim RowNumber As Long
    Dim QuestionId As String
    Dim QuestionFields As IHTMLElementCollection
    Dim QuestionField As IHTMLElement
    Dim votes As String
    Dim views As String
    Dim QuestionFieldLinks As IHTMLElementCollection
```

Start by getting a reference to the HTML element which contains all of the questions (this also initialises the row number in the spreadsheet to 4, the one after the titles):

```vba
    Set QuestionList = html.getElementById("question-mini-list")
    Set Questions = QuestionList.Children
    RowNumber = 4
```

Now we’ll loop over all of the child elements within this tag, finding each question in turn:

```vba
    For Each Question In Questions
        If Question.className = "question-summary narrow" Then
```

Each question has a tag giving its id, which we can extract:

```vba
            QuestionId = Replace(Question.ID, "question-summary-", "")
            Cells(RowNumber, 1).Value = CLng(QuestionId)
```

Now we’ll loop over all of the child elements within each question’s containing div tag:

```vba
            Set QuestionFields = Question.all
            For Each QuestionField In QuestionFields
```

For each element, extract its details (either the integer number of votes cast, the integer number of views or the name of the author):

```vba
                If QuestionField.className = "votes" Then
                    votes = Replace(QuestionField.innerText, "votes", "")
                    votes = Replace(votes, "vote", "")
                    Cells(RowNumber, 2).Value = Trim(votes)
                End If

                If QuestionField.className = "views" Then
                    views = QuestionField.innerText
                    views = Replace(views, "views", "")
                    views = Replace(views, "view", "")
                    Cells(RowNumber, 3).Value = Trim(views)
                End If

                If QuestionField.className = "started" Then
                    Set QuestionFieldLinks = QuestionField.all
                    Cells(RowNumber, 4).Value = QuestionFieldLinks(2).innerHTML
                End If
            Next QuestionField
```

Time now to finish this question, increase the spreadsheet row count by one and go on to the next question:

```vba
            RowNumber = RowNumber + 1
        End If
    Next
    Set html = Nothing
```

Finally, we’ll tidy up the results and put a title in row one:

```vba
    Range("A3").CurrentRegion.WrapText = False
    Range("A3").CurrentRegion.EntireColumn.AutoFit
    Range("A1:C1").EntireColumn.HorizontalAlignment = xlCenter
    Range("A1:D1").Merge
    Range("A1").Value = "StackOverflow home page questions"
    Range("A1").Font.Bold = True
    Application.StatusBar = ""
    MsgBox "Done!"
End Sub
```

And that’s the complete macro!

As the above shows, website scraping can get quite messy. If you’re going to be doing much of this, I recommend learning about the HTML DOM (Document Object Model), and taking advantage of this in your code.


I want to import MutualFundsPortfolioValues data into Excel, but I don’t know how to import data from a website. What I need is to import web data into Excel for chosen companies between two different dates.

When I enter dates in cells B3 and B4 and click CommandButton1, Excel should import all the data from the web page into my Excel sheet “result”.

For example:

date 1: 04/03/2013 (in sheet “input”, cell B3)

date 2: 11/04/2013 (in sheet “input”, cell B4)

chosen companies: range B7:B17

I have attached a sample Excel worksheet and a screenshot of the web page.

Any ideas?

My web page URL:

http://www.spk.gov.tr/apps/MutualFundsPortfolioValues/FundsInfosFP.aspx?ctype=E&submenuheader=0

Sample Excel and Sample picture of the data:

http://uploading.com/folders/get/b491mfb6/excel-web-query

asked Apr 11, 2013 at 21:16


Here is the code to import data using IE Automation.

Input Parameters (Enter in Sheet1 as per screenshot below)

start date = B3

end date = B4

Şirketler (Companies) = B5 (multiple values are allowed; further companies go in the cells below B5)

View source of the page’s input fields

How the code works:

- The code creates an Internet Explorer object and navigates to the site.

- Waits until the page is completely loaded and ready (IE.readystate).

- Gets the HTML document object.

- Enters the values from Sheet1 into the input fields (txtDateBegin, txtDateEnd, lstCompany).

- Clicks the submit button.

- Iterates through each row of the table dgFunds and dumps it into Sheet2.

Code:

```vba
Dim IE As Object

Sub Website()
    Dim Doc As Object, lastRow As Long, tblTR As Object
    Set IE = CreateObject("internetexplorer.application")
    IE.Visible = True

navigate:
    IE.navigate "http://www.spk.gov.tr/apps/MutualFundsPortfolioValues/FundsInfosFP.aspx?ctype=E&submenuheader=0"
    Do While IE.readystate <> 4: DoEvents: Loop

    Set Doc = CreateObject("htmlfile")
    Set Doc = IE.document
    If Doc Is Nothing Then GoTo navigate

    Set txtDtBegin = Doc.getelementbyid("txtDateBegin")
    txtDtBegin.Value = Format(Sheet1.Range("B3").Value, "dd.MM.yyyy")

    Set txtDtEnd = Doc.getelementbyid("txtDateEnd")
    txtDtEnd.Value = Format(Sheet1.Range("B4").Value, "dd.MM.yyyy")

    lastRow = Sheet1.Range("B65000").End(xlUp).row
    If lastRow < 5 Then Exit Sub

    For i = 5 To lastRow
        Set company = Doc.getelementbyid("lstCompany")
        For x = 0 To company.Options.Length - 1
            If company.Options(x).Text = Sheet1.Range("B" & i) Then
                company.selectedIndex = x
                Set btnCompanyAdd = Doc.getelementbyid("btnCompanyAdd")
                btnCompanyAdd.Click
                Set btnCompanyAdd = Nothing
                wait
                Exit For
            End If
        Next
    Next

    wait
    Set btnSubmit = Doc.getelementbyid("btnSubmit")
    btnSubmit.Click
    wait

    Set tbldgFunds = Doc.getelementbyid("dgFunds")
    Set tblTR = tbldgFunds.getelementsbytagname("tr")

    Dim row As Long, col As Long
    row = 1
    col = 1

    On Error Resume Next
    For Each r In tblTR
        If row = 1 Then
            For Each cell In r.getelementsbytagname("th")
                Sheet2.Cells(row, col) = cell.innerText
                col = col + 1
            Next
            row = row + 1
            col = 1
        Else
            For Each cell In r.getelementsbytagname("td")
                Sheet2.Cells(row, col) = cell.innerText
                col = col + 1
            Next
            row = row + 1
            col = 1
        End If
    Next

    IE.Quit
    Set IE = Nothing
    MsgBox "Done"
End Sub

Sub wait()
    Application.wait Now + TimeSerial(0, 0, 10)
    Do While IE.readystate <> 4: DoEvents: Loop
End Sub
```

Output table in Sheet2

HTH

answered Apr 12, 2013 at 2:22

Santosh
