If you’re looking for a fun word association game or activity, then you’re certainly in the right place. Keep on reading for everything you need to know about fun word association games.

Word Association Activity

This ESL word association activity is an ideal way to help students activate prior knowledge that they might have about a topic. Or, you can use it at the end of a unit to show students how much they have learned!

The key to having a happy ESL classroom is to mix things up in your classes. After all, nobody likes doing the same thing over and over again. Try out some new activities today. Here’s a simple vocabulary one you can start with.

Skills: Reading/writing/speaking

Age: 7+ (must be able to read + write)

Materials Required: Workbook or butcher paper and pens

Word Association is an ESL vocabulary activity that can be used to introduce a new topic, lesson, theme, etc. Write a single relevant word in the middle of the board or paper and have students take turns adding as many related words or images as possible.

For example, the centre word could be “school.” One branch could be school subjects (Math, English, History, Gym, etc.), while another could be school supplies (pen, paper, ruler, etc.). Finally, you might have one about recess or break time (play games, tag, climb, jump, swing set). Keep going with more associations from there.

The subject or topic can be whatever you’re teaching that day. Another topic it works well for is body parts.

For large classes, have students work in groups, with separate pieces of paper taped to the wall or placed on top of the tables or grouped desks. After a given amount of time (3-5 minutes, or when you see no one is adding anything new), discuss their answers.

It’s also a nice activity for teaching English online, so give it a try today!

Teaching Tips for This ESL Vocabulary Activity

For large classes, butcher paper works best, so more students can write at one time. If that isn’t possible, have 5-6 board markers available.

If using butcher paper, prepare it in advance, including taping it to the wall, unless students will be working at their desks. If students will be working at their desks, write the word on each table’s page in advance, but don’t hand the pages out until you have given your instructions.

This activity is often quite a fun way to start off a holiday-themed class.

Procedure for Word Association Game

1. Write a single word relevant to your new topic, lesson, or theme on the white board or butcher paper.

2. Have students take turns adding as many words or images related to that word as possible. My rule is that each student has to add at least one word, no matter how small.

3. After 3-5 minutes (or less, if no one is adding anything new), discuss their answers.

4. For larger classes, put students into groups of 4-6 and let them work together on this. You can choose the best-organized paper to show as an example to the rest of the class, and the papers can then act as resources for everyone.


Follow-Up to this Word Association Activity

You may wish to spend quite a bit of time teaching your students how to do mind-mapping or brainstorming the first time they do it. It is a very useful skill that they can take with them to all of their classes, and it’s often a great first step before almost any kind of writing task or project.

Remember to teach your students not to edit their ideas at this point, but simply to write down anything that comes to mind, and to do this very quickly.

Word Associations Worksheets

Do you want to give your students some extra practice with this kind of thing? The good news is that there are a ton of great word association exercises online that you can just print off and use in your classes. If that’s not some ESL teaching gold, then I’m not sure what is! Here are some of the best options:

ISL Collective

ESL Printables

ESL Galaxy

Did you Like this Word Associations Game?

Do you love this English vocabulary activity? Then you’re going to love this book over on Amazon: 39 ESL Warm-Ups for Kids. It contains almost 40 top-quality ESL warm-ups and icebreakers to help you get your classes started in style. Help ease your students into using English and get them ready for the main part of the lesson ahead.

English warm-up activities and games are the perfect way to review ESL Vocabulary from previous classes. Do it the tricky way—without students even realizing what you’re doing!

The authors of the book have decades of experience teaching kids, teenagers, university students and adults. Learn from their experience about how to help your students actually learn English.

39 ESL Warm-Ups is available in both print and digital formats. The (cheaper!) digital copy can be read on any device by downloading the free Kindle reading app. It’s super easy to have fun, engaging ESL warm-ups at your fingertips anywhere you might plan your lessons.

You can check out the book for yourself over on Amazon:

39 ESL Warm-Ups: For Kids (7+)

Word Association ESL Vocabulary Activity: Join the Conversation

Do you like this activity, or do you have some other favourite word association games? Leave a comment below and let us know your thoughts. We’d love to hear from you about anything related to word association games!

Also be sure to give this article a share on Twitter, Facebook, or Pinterest. It’ll help other busy teachers like yourself find this useful resource.


There are several techniques that can help you learn and remember vocabulary in the language you are learning. In this post, we will explore techniques that help you remember what a word means by associating it with an image in your mind. Association links new information with old information stored in your memory. If you link a word with an image, it can be linked with other information already stored in your memory and so you will remember it better. For example, to remember a person’s name, you can relate it to a feature of their appearance. Here are a few more examples of using images to help you remember vocabulary.

Linkword Technique

The Linkword mnemonic (memory-aid) technique, developed by Michael Gruneberg, uses an image to link a word in one language with a word in another language. Here are some examples from French vocabulary for English speakers. The French word for “rug” or “carpet” is “tapis”. To remember this, the Linkword technique says you should imagine an oriental rug with a picture of a tap woven into it in chrome thread. “Tap” is found at the beginning of “tapis”, so it should help you remember the word when you visualise a rug. Next, the word for “grumpy” is “grognon”, so you should imagine a grumpy man groaning – “groan” sounds like “grognon”, so it should help you remember it. Other examples come from German and Spanish: to remember “Raupe” (German for “caterpillar”), you should imagine a caterpillar with a rope around its middle. To remember the Spanish word for cat, “gato”, you can imagine a cat eating a chocolate cake, or “gateau”.

Visualisation Technique

It is not always necessary to think of words in your own language in the visualisation. It is also possible to learn vocabulary by associating the word with an image. This technique uses the idea that when you hear a word, you visualise things that are associated with it in your mind. For example, when you hear “bird”, you think of what a bird looks like. When you hear “sweet”, you think of things that taste or smell sweet such as desserts or flowers. This is how we understand the word’s meaning, according to this technique.

Language teachers can show students a picture representing the meaning of a word they are trying to teach. Alternatively, they can act out the meaning. They can also ask students to think of things that are associated with the word, such as food if the word is “tasty”, or a successful or hardworking person if the word is “ambitious” (an abstract concept). If you are learning by yourself, you can draw pictures of the words you are learning or think about the images that each word conjures up.

This visualisation technique can also help you learn the connotations of words (ideas or feelings that a word evokes beyond its literal meaning).

The Town Language Mnemonic

An extended example of the visualisation technique is the town language mnemonic developed by Dominic O’Brien. It is based on the idea that the core vocabulary of a language relates to everyday things – which can typically be found in a town or village. To use this technique, you should choose a town you are familiar with and use objects there as cues to recall images that link to words in your new language. Here are some examples:

Nouns in the town

Nouns should be associated with locations where you might find them: the word for “book” should be associated with an image in your mind of a book on a shelf in the library. The word for “bread” should be associated with an image of a loaf in a bakery. Words for vegetables should be associated with a greengrocer’s shop. If there is a farm outside the town it can help you remember the names of animals.

Adjectives in the park

Adjectives should be associated with a park in the town: words like “green”, “small”, “cold”. People in the park can help you remember adjectives for different characteristics or hair colour.

Verbs in the gym

Verbs can be associated with a gym or playing field. This allows you to make associations for “lift”, “run”, “walk”, “hit”, “eat”, “swim”, “drive”, etc.

Try It Yourself

As well as being powerful tools for learning and memorising vocabulary, these techniques can be fun and can keep you interested in learning new words. Lists of words can be useful too, but images can help jog your memory. You may also remember the words better if you write them on a whiteboard – you are active and moving around when you do this, so your brain is stimulated more than when you are sitting at a desk. We hope you find these tips useful. Let us know if they work for you!

Written by Suzannah Young

Review | Open Access | Published: 09 March 2017

Asian-Pacific Journal of Second and Foreign Language Education, volume 2, Article number: 1 (2017)


Abstract

Word Associates Format (WAF) tests are often used to measure second language learners’ vocabulary depth with a focus on their network knowledge. Yet, there were often many variations in the specific forms of the tests and the ways they were used, which tended to affect learners’ response behaviors and, more importantly, the psychometric properties of the tests. This paper reviews the general practices, key issues, and research findings that pertain to WAF tests in four major areas: the design features of WAF tests, conditions for test administration, scoring methods, and test-taker characteristics. In each area, a set of variables is identified and described, and relevant research findings are presented and discussed. Organized around eight topics, the General Discussion section provides some suggestions and directions for the future development of WAF tests and their use as research tools. We hope this paper will help researchers become more aware that the results generated by a WAF test may vary depending on the test’s specific design, how it is administered and scored, and who the learners are, and consequently make better decisions in research that involves a WAF test.

Introduction

Vocabulary knowledge is multi-dimensional and entails different aspects of knowledge about knowing a word (Chapelle 1994; Henriksen 1999; Milton and Fitzpatrick 2014; Nagy and Scott 2000; Nation 1990, 2001; Schmitt 2014). Among the various conceptualizations of the dimensions of vocabulary knowledge, the best-known one is perhaps the differentiation between size or breadth and depth, with the former commonly known as referring to how many words one knows and the latter how well one knows those words (Anderson and Freebody 1981; Schmitt 2014; Wesche and Paribakht 1996). Defining vocabulary size in a numeric sense appears to make it “easily” assessable with learners demonstrating knowledge of form-meaning connections for a selected set of words that represent different frequency bands, such as in the case of the Vocabulary Levels Test (Nation 1990; Schmitt et al. 2001). On the other hand, what depth means has been unclear, and the diverse conceptualizations and discussions have posed a challenge to the assessment of this dimension of knowledge (Chapelle 1994; Henriksen 1999; Nation 2001; *Qian 2002; Read 2000, 2004; Richards 1976; Wesche and Paribakht 1996; see Schmitt 2014 for a recent review).

According to Read (2000), there were two general approaches in the literature to the assessment of second language (L2) vocabulary depth. The “developmental” approach, which reflects the incremental nature of vocabulary acquisition and is represented by the Vocabulary Knowledge Scales (Wesche and Paribakht 1996), describes word mastery as following a continuum from not knowing anything about a word to full mastery, characterized by the ability to use the word correctly across contexts. The “dimensional” approach, on the other hand, contends that vocabulary depth encompasses various aspects of knowledge about words, such as form, meaning, and use, in both receptive and productive senses and in both spoken and written modalities (Nation 2001; Schmitt 2014). Read (2004), for example, distinguished between three separate but related meanings of depth: precision of meaning (“the difference between having a limited unclear idea of what a word means and having much more specific knowledge of its meaning”), comprehensive word knowledge (“knowing the semantic feature of a word and its orthographic, phonological, morphological, syntactic, collocational and pragmatic characteristics”), and network knowledge (“the incorporation of the word into its related words in the schemata, and the ability to distinguish its meaning and use from related words”) (pp. 211–212).

Given the vast scope, it is perhaps unnecessary, and logistically impractical, to assess every aspect of knowledge implied in Read’s (2004) three meanings of depth (Schmitt 2014). As Read (2000) argued, including more and more aspects of word knowledge in an assessment means that fewer and fewer words can be targeted, which is certainly not a desirable direction to follow. Focusing on network knowledge and drawing on the concept of word association, *Read (1993; 1998) pioneered the development of what he called Word Associates Format (WAF) tests for assessing L2 vocabulary depth. As Read (2004) argued,

“as a learner’s vocabulary size increases, newly acquired words need to be accommodated within a network of already known words, and some restructuring of the network may be needed as a result…This means that depth can be understood in terms of learners’ developing ability to distinguish semantically related words and, more generally, their knowledge of the various ways in which individual words are linked to each other.” (p. 219)

Word association tasks have long been used in the literature to examine the developing organization of words in native-speaking (L1) children’s mental lexicon (Aitchison 1994; Entwisle 1966; Nelson 1977). Typically, children are asked to provide a word that has a connection with a stimulus word (i.e., free association), and their responses for a set of stimulus words are then categorized according to different types of association relationships, such as paradigmatic (i.e., an associate of the same word class as the stimulus word and performing the same grammatical function in a sentence, such as a synonym), syntagmatic (i.e., an associate of a different word class from the stimulus word and having a sequential relationship with the stimulus word, such as a collocate), and clang/phonological (i.e., an associate that resembles the stimulus word in sound). By comparing the proportions of the different types of associates in students’ responses (across time), researchers usually draw inferences about how words are organized, and how that organization develops, in the students’ mental lexicon.
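The proportion comparison described above amounts to simple counting once responses have been coded. The Python sketch below assumes that a rater has already assigned each response to one type: paradigmatic, syntagmatic, or a sound-based (clang or phonological) associate. The function name `type_proportions` and the sample codings are hypothetical and are not drawn from any of the studies cited.

```python
from collections import Counter

# Association types, following the three categories described in the text;
# "clang" stands for sound-based (phonological) associates.
ASSOCIATION_TYPES = ("paradigmatic", "syntagmatic", "clang")


def type_proportions(coded_responses):
    """Return the share of each association type across all coded responses.

    `coded_responses` maps each stimulus word to the list of association
    types that a rater assigned to the learner's responses for that word.
    Coding the responses themselves is assumed to have been done already.
    """
    counts = Counter(t for types in coded_responses.values() for t in types)
    total = sum(counts.values())
    if total == 0:
        # No responses coded: report zero for every type instead of dividing by zero.
        return {t: 0.0 for t in ASSOCIATION_TYPES}
    return {t: counts[t] / total for t in ASSOCIATION_TYPES}


# Hypothetical codings for one learner at two points in time.
time_1 = {"sudden": ["clang", "syntagmatic"], "dog": ["syntagmatic", "syntagmatic"]}
time_2 = {"sudden": ["paradigmatic", "syntagmatic"], "dog": ["paradigmatic", "paradigmatic"]}

for label, data in (("time 1", time_1), ("time 2", time_2)):
    print(label, type_proportions(data))
```

In this made-up example, the share of paradigmatic associates rises between the two time points. That is the kind of shift from which the studies cited infer changes in lexical organization, but the sketch does no such interpretation itself.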

The free association paradigm has also been commonly used in the L2 literature to probe into the mental organization of words and the development of that organization in L2 learners (e.g., Fitzpatrick 2013; *Henriksen 2008; Jiang 2002; Nissen and Henriksen 2006; Wolter 2001; Zareva 2007). From the perspective of assessing vocabulary depth, however, the free association paradigm has some notable limitations. Meara (1983; see also Meara 2009) found that L2 learners’ responses through free association differed systematically from native speakers’ and could be very diverse and unstable across test administrations. In addition, it is not always straightforward and easy to score learners’ productions and identify possible individual differences, even though a few ways, typically using native speakers’ canonical responses as a benchmark for scoring/coding to represent the “nativelikeness” of learner responses, have been proposed and validated in the literature (e.g., Fitzpatrick et al. 2015; *Henriksen 2008; Higginbotham 2010; Schmitt 1998; Vermeer 2001; Zareva 2007; Zareva and Wolter 2012).

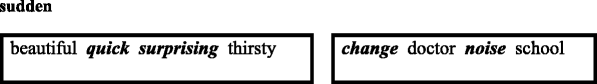

The aforementioned concerns about free association for assessment purposes were a major reason that motivated *Read (1993; 1998) to develop the Word Associates Test (WAT) for assessing university English learners’ vocabulary learning. Unlike a free association task, the WAT takes a controlled, receptive format. In *Read’s (1998) WAT, a target word (i.e., an adjective) is followed by eight other words, half of which are semantically associated with the target word (i.e., associates) while the other half are not (i.e., distractors). The associates have two types of relationship with the target word: paradigmatic and syntagmatic. In the example given in *Read (1998, p. 46), the target word sudden is an adjective followed by two boxes of four words. The four words on the left are all adjectives, with the associates being synonymous with sudden (i.e., quick and surprising), and the four words on the right are all nouns, with the associates being collocates of sudden (i.e., change and noise). The other four words (e.g., thirsty and school) are semantically unrelated. Asking learners to choose the associates of sudden from a given set of choices, rather than eliciting associates through a free association paradigm, thus gives researchers good control over the responses learners can give. It also makes scoring much easier than in the case of free association, although, as the review below shows, how a WAT item is best scored is still under debate.
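The structure of such an item, and one possible way to score it, can be sketched in Python. This is an illustration under stated assumptions and does not reproduce Read's procedure. The class and function names are hypothetical. The two filler distractors (beautiful and doctor) are assumed, not quoted from *Read (1998). The rule of one point per selected associate is just one simple scheme, since how WAT items are best scored is still debated.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class WATItem:
    """A controlled-format item: a target word followed by two boxes of choices."""
    target: str
    left_box: tuple        # same word class as the target (adjectives here)
    right_box: tuple       # candidate collocates of the target (nouns here)
    associates: frozenset  # the keyed associates (four of the eight choices)

    def __post_init__(self):
        # Every keyed associate must actually appear among the choices.
        if not self.associates <= set(self.choices()):
            raise ValueError("every associate must be one of the choices")

    def choices(self):
        return self.left_box + self.right_box


def score_item(item, selected):
    """Hypothetical scheme: one point per associate selected (maximum 4).

    Selected distractors are simply ignored; a selection that is not one
    of the item's choices raises ValueError.
    """
    selected = set(selected)
    unknown = selected - set(item.choices())
    if unknown:
        raise ValueError(f"not a choice for {item.target!r}: {sorted(unknown)}")
    return len(selected & item.associates)


sudden = WATItem(
    target="sudden",
    # "beautiful" and "doctor" are assumed filler distractors for illustration.
    left_box=("beautiful", "quick", "surprising", "thirsty"),
    right_box=("change", "doctor", "noise", "school"),
    associates=frozenset({"quick", "surprising", "change", "noise"}),
)

print(score_item(sudden, {"quick", "surprising", "change", "school"}))  # 3
```

In this example the learner picks quick, surprising, change, and school, so three of the four associates are credited. Under this scheme the wrongly chosen distractor school costs nothing. A different scheme could also credit correctly rejected distractors or penalize selected ones, and this is the kind of design choice over which scoring methods differ.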

Ever since *Read (1993; 1998) developed the WAT, various other WAF tests following the prototype have been developed and validated in English as well as other languages, differing in the specific design features reviewed later in this paper (see also Additional file 1: Appendix B). Those tests have also been used widely in L2 research where learners’ vocabulary depth needs to be assessed and individual differences need to be captured. Despite WAF tests’ generally strong psychometric properties (e.g., reliability and concurrent validity) and their usefulness as research tools (e.g., indexing vocabulary depth and predicting the development of language proficiency, such as reading comprehension), a number of questions about them remain unanswered (Beglar and Nation 2014; Schmitt 2014), given the large variations in what the tests specifically are, how they are used, and with which learners.

The aim of this paper is thus to review current practices, key issues, and research findings about WAF tests and their applications in L2 research, and to provide some suggestions and directions for the future development of WAF tests and their use as research tools. Specifically, this review is guided by the following four questions:

1. What design features of WAF tests have received attention from L2 researchers? Are test responses or performance, and more importantly, the psychometric properties of WAF tests influenced by those design features?

2. Does the way WAF tests are administered have an influence on test responses and the psychometric properties of the tests?

3. How are responses to WAF tests scored? How, if at all, do different scoring methods have an influence on the psychometric properties of WAF tests?

4. Do test-taker characteristics have an influence on WAF tests, or do WAF tests create testing bias among different types of test-takers?

To prepare for this review, we searched two electronic research databases (i.e., MLA International Bibliography and PsycINFO), supplemented by Google Scholar, to locate research outputs using a set of keywords, such as word association/word associates, vocabulary knowledge, depth/deep knowledge, lexical network/semantic network, and second language/foreign language. In addition, we consulted the reference lists of some existing publications, particularly recent reviews that involved vocabulary depth, such as Read (2014) and Schmitt (2014). To meet the inclusion criteria for this review, a publication needed to be published, appear in English, and report empirical research, either on the development and validation of a WAF test or using a WAF test primarily as a research tool. To be considered a WAF test, the focal task/test needed to be based on word association and to assess learners’ lexical network knowledge in a controlled, receptive format, with a target word followed by a number of choices. In total, 29 papers reporting 31 studies met those criteria and were included in this review (see Additional file 1: Appendix A).

The rest of this paper is divided into two major sections: a review section and a general discussion section. The review section consists of four parts that cover the general practices in the literature, and, where applicable, research findings, concerning the design features of WAF tests, test administration, scoring, and test-taker characteristics, corresponding to the four questions above that guided this review. Additional file 1: Appendix A lists all the studies that involved one or more WAF tests, with information about what each test was like and how it was administered and scored. Additional file 1: Appendix B lists the key issues/variables related to the four review areas (i.e., the test itself, administration, scoring, and test-takers) and the studies that directly addressed one or more of those issues. Organized around eight major issues, the General Discussion section provides some suggestions and directions for the future development of WAF tests and their use as research tools.

Review

Design features of WAF tests

There are many issues to consider when developing a WAF test, such as which words to use, which association relationships to address, how many options to include, whether the number of associates should be fixed across items or allowed to vary, how associates are to be distributed among the choices, and what kinds of distractors to use. As the review below shows, these issues are all important, but not all have received (an equal amount of) attention in the literature, and research that directly addresses them to inform WAF test development and use is also very limited.

Word Frequency

Developing a WAF test first requires selecting a certain number of words as target words (and also choices). An immediate consideration closely related to the content and construct validity of a WAF test is whether learners should have at least partial knowledge of a target word (and its choices) or it is acceptable to include low frequency words that may not be known to the learners. Presumably, all words should be known to the target group(s) of learners so that any variance in performance on the test would represent learners’ individual differences in network knowledge rather than how many words (in the test) they actually know, which would be the focus of a vocabulary size test.

Given the aforementioned concern, it is perhaps no surprise that high-frequency words, or words considered the most useful for the target group(s) of learners, were often sampled for developing a WAF test. In developing the first version of his WAT for university ESL learners, *Read (1993), for example, randomly selected the 50 stimulus words from the University Word List (Xue and Nation 1984), a list of 836 words commonly appearing in academic texts. In addition, the choice words were ensured to have similar or higher frequency than the stimulus words. Later, for the revised WAT, *Read (1998) seemed to give more careful consideration to high-frequency words, as the target words were mostly sampled from Bernard’s Second and Third Thousand Word Lists. “Because the purpose of the test was to measure depth of knowledge, the emphasis was on words with which most of the test-takers were likely to have at least some familiarity” (*Read 1998, p. 45). *Schoonen and Verhallen (2008), in their report on the development of a Dutch WAT for young L2 learners, also made it clear that “the starting point was that familiarity with all the words could be taken for granted for nine-year-olds in Grade 3 of primary school (the youngest target group)” (pp. 219–220).

Despite careful consideration of word frequency, learners’ familiarity with the words that developers choose is obviously “an assumption, not a certainty” (*Schoonen and Verhallen 2008, p. 219). One consequence of this uncertainty is that the validity of the test might be threatened. While some learners may choose not to respond to target words that they do not know and are unwilling to guess, others may guess in a similar situation (*Read 1993; 1998; Schmitt 2014). *Read (1993), for example, found from learners’ verbal reports that some higher proficiency learners tended to guess, often successfully, on target words that were unknown or only partially known (e.g., denominator and diffuse).

Although selecting high-frequency words presumably known to all learners appeared to be a general principle, a few studies examined how words from distinct frequency bands may affect WAF tests. In their development of a WAF test for Dutch-speaking university learners of French, *Greidanus and Nienhus (2001) selected the 50 target words and their 300 choices from five distinct frequency bands based on a frequency list of about 5000 words (i.e., 1000, 2000, 3000, 4000, and 5000). In general, learners scored significantly better on more frequent items than on less frequent ones, and this held regardless of how many years they had studied French at university. Yet, for the more proficient group of learners, no significant difference was observed between the two most frequent bands (i.e., 1000 and 2000). A subsequent study (*Greidanus et al. 2004), however, produced mixed findings. The initial version of a Deep Word Knowledge (DWK) test showed word frequency effects very similar to those in *Greidanus and Nienhus (2001). An improved version of the DWK, however, showed significant overall frequency effects only among less proficient learners, not among more proficient learners (or native French speakers). *Greidanus et al. (2005) further addressed the effect of word frequency on test performance by selecting the target (and choice) words from the 5000–10,000 frequency range. Unlike in *Greidanus and Nienhus (2001), no significant difference was found across the frequency levels (i.e., 6000, 7000, 8000, 9000, 10,000) for any group of participants. In *Horiba’s (2012) Japanese WAF test, all the words were selected from the two higher, or more difficult, of the four word levels of the Japanese Language Proficiency Test. Korean-speaking learners performed significantly better on more frequent target words than on less frequent ones; yet such a word frequency effect did not surface for Chinese-speaking learners.

The lack of significant frequency effects in *Greidanus and Nienhus (2001) for more advanced learners, in *Horiba (2012) for Chinese-speaking learners, and in *Greidanus et al. (2005) seems to suggest that there may be a “threshold” word frequency that determines whether a frequency effect occurs among learners at a certain proficiency level. On the one hand, if test words are highly frequent, as in the case of those selected from the 1000 and 2000 frequency bands in *Greidanus and Nienhus (2001), advanced learners might have developed similarly strong network knowledge for all words within those bands, regardless of the words’ actual frequency. On the other hand, if words tend to be low in frequency, as in *Horiba (2012) and *Greidanus et al. (2005), the WAF test might become very similar to a test of vocabulary breadth. In *Horiba’s (2012) study, Korean-speaking learners’ WAF test scores correlated very highly (r about .90) with a measure of their vocabulary breadth. This perhaps explains why depth did not explain any significant additional variance in reading comprehension over and above breadth.

In summary, it seems desirable to ensure that the words of a WAF test are high in frequency, because this would help reduce the kind of guessing effect reported in *Read (1993; 1998) and thus the threat to the validity of the test. However, making the words too frequent might leave the test unable to discriminate the depth knowledge of advanced learners. Conversely, making all words too challenging (or very low in frequency) might also threaten the validity of the test as a depth measure, as the test may end up essentially assessing learners’ vocabulary breadth, in addition to inviting guessing. The intricate issue of word frequency warrants more attention in future research, preferably with psychometric evidence over and beyond test score comparisons.

Word class

In addition to frequency, another consideration for word selection is word class. While all studies used content words (i.e., nouns, adjectives, and verbs) (see Additional file 1: Appendix A), they differed in which of these word classes were included. *Greidanus and Nienhus (2001), for example, included nouns, adjectives, and verbs (but mostly nouns) in their French WAF test. *Henriksen’s (2008) word connection task included an equal number of nouns and adjectives. *Schoonen and Verhallen (2008) included words of all three form classes, but their proportional distributions were unknown. In *Read’s (1993) initial version of the English WAT, target words also included adjectives, nouns, and verbs. However, the heterogeneity in structure across items led him to suggest that “it will be necessary to develop tests that focus on more homogeneous subsets of vocabulary items so that greater consistency can be achieved in the semantic relationships among the words and in the pattern of responses elicited” (p. 369). Consequently, *Read (1998) chose to focus only on adjectives in his revised WAT. Such an approach was also adopted by *Qian and Schedl (2004) when they developed a Depth of Vocabulary Knowledge (DVK) measure for possible inclusion in the TOEFL.

Although the narrower focus on adjectives did not seem to weaken the psychometric properties of WAF tests (*Read 1998; *Qian and Schedl 2004), it is not free from concerns. For example, when the test is used as a research tool for measuring learners’ vocabulary depth (e.g., *Akbarian 2010; *Guo and Roehrig 2011; *Qian 1999; 2002; *Qian and Schedl 2004; *Zhang 2012), the scope of the competence measured is necessarily narrow and cannot capture the full repertoire of learners’ network knowledge; as a result, the predictive effect of vocabulary depth (on reading comprehension) might have been underestimated. In addition, as *Dronjic and Helms-Park (2014) argued, the noun is the head of a noun phrase, as in an adjective-noun collocation like sudden change. In natural speech production of such phrases, nouns govern the choice of their modifiers rather than the other way around, whether the modifier is prenominal or postnominal. In this sense, a WAF test with adjectives as target words and nouns as choices seems to test a process opposite to that of natural speech production, because the test requires learners to begin with a modifier and then search for its potential heads.

The above concerns seem to suggest that it would be desirable to include words from different form classes, and that including adjectives might not even be a good choice. Such a conclusion, however, would be too hasty in view of the heterogeneity of learner responses reported in *Read (1993). A deeper understanding of this issue would require comparing learners’ response patterns across words of different form classes, as well as the psychometric properties of subsets of a WAF test that includes different word classes. So far, there has been little research in this direction. In *Read (1993), where item-wise heterogeneity was discussed, it remains unknown how far that heterogeneity can be attributed specifically to word class, because the author made no direct comparison, either by drawing on learners’ verbal reports or by examining the psychometric properties of the test separately for verbs, adjectives, and nouns. *Greidanus and Nienhus (2001) and *Schoonen and Verhallen (2008), although both included words of different form classes, as mentioned earlier, did not analyze or compare them separately either. Using a free association task (as opposed to a WAF test), Nissen and Henriksen (2006) found that word class tended to moderate the distribution of associates across types of association relationships among Danish-speaking learners of English as a Foreign Language (EFL). The authors suggested that words of different form classes may be organized differently in learners’ mental lexicon. Future research should therefore examine whether verbal, nominal, and adjectival target words involve different thought processes among learners and, more importantly, whether learners’ responses to the subsets for those word classes are unidimensional in assessing their network knowledge.

Association relationships

Another decision to be made for a WAF test is the types of association relationships to be tested for the target words selected. As shown in Additional file 1: Appendix A, there were variations in what association relationships were addressed in previous WAF tests, but most included paradigmatic and syntagmatic association (e.g., *Qian and Schedl 2004; *Read 1998), with some others considering analytic association as well (e.g., *Greidanus and Nienhus 2001; *Greidanus et al. 2005; *Horiba 2012; *Read 1993).

Possibly because paradigmatic associates predominate over other types of association, including syntagmatic association, in L2 learners’ free association responses (e.g., Jiang 2002; Wolter 2001), the WAF literature has shown an interest in comparing learners’ performance across types of association relationships. *Greidanus and Nienhus (2001), for example, found that Dutch-speaking learners of French, regardless of their number of years of university study of French, consistently performed better on paradigmatic (and analytic) association than on syntagmatic association. *Greidanus et al. (2005) largely replicated that finding with a similar test that had lower-frequency target words, among more diverse groups of Dutch-speaking learners of French (as well as native speakers). A similar finding was also observed in *Horiba (2012) among Chinese-speaking learners of Japanese, but not among Korean-speaking learners, for whom there was no significant difference between paradigmatic and syntagmatic association.

*Dronjic and Helms-Park (2014) administered *Qian and Schedl’s (2004) DVK to two comparable groups of native English speakers. Largely corroborating the aforementioned findings, paradigmatic scores were significantly higher than syntagmatic scores, regardless of whether the participants knew the number of associates to be selected and of how the test was scored. In addition, the participants’ responses for syntagmatic association were far more heterogeneous than those for paradigmatic association, which seems to echo a concern that *Read (1998) voiced about his differentiation between the two types of association relationships in his revised WAT. Specifically, there is a question as to “whether the two types of associates represent the same kind of knowledge of the target words, or whether, say, the ‘semantic’ knowledge expressed in the paradigmatic associates is distinct from the ‘collocational’ knowledge tapped by the syntagmatic ones” (p. 57).

An initial understanding of whether paradigmatic and syntagmatic (and other types of) association tap the same kind of network knowledge can be obtained from the correlations reported in some studies. *Greidanus and Nienhus (2001), for example, in addition to comparing learners’ scores, found that the correlations among the three types of relationships themselves (i.e., paradigmatic, syntagmatic, and analytic), and their correlations with learners’ general French proficiency, were all very small and non-significant. *Horiba (2012), in contrast, found strong correlations among the same three types of association relationships for Korean-speaking learners of Japanese (rs about .79–.85). In addition, all three types of association correlated strongly with these learners’ vocabulary breadth (rs about .77–.91), with paradigmatic association showing the highest correlation. Among Chinese-speaking learners, however, the correlations among the three types of association were all weak, albeit significant (rs about .29–.36), and only paradigmatic and syntagmatic association correlated significantly with vocabulary breadth (rs about .34–.60). Notable variations were also observed in the correlations of the three types of association with reading comprehension (and between the two groups of learners). *Qian and Schedl (2004) found that the paradigmatic and syntagmatic sections of the DVK correlated significantly, at about .80, among university ESL learners. Using the same test and the same scoring method, however, *Dronjic and Helms-Park (2014) found that native English speakers’ scores on the two subsets showed much weaker, albeit significant, correlations (.383–.460). Only when a new scoring method that gave credit for selecting associates as well as for rejecting distractors was used did the two types of association show a strong correlation (.78–.88).
The authors thus suggested that “knowledge of collocability and knowledge of semantic relations such as synonymy and polysemy might represent two different dimensions of lexical depth. This finding also reflects the commonsense observation that it is at least theoretically possible to have a speaker who knows a lot about word meanings and little about how these words combine with other words…” (p. 210).

Two studies went beyond simple bivariate correlations to examine the factor structure of association knowledge measured with different types of association. In *Shin’s (2015) study of elementary school EFL learners in Korea, two different sets of items were designed to address paradigmatic and syntagmatic association, respectively. This distinguished the test from *Read’s (1998) WAT and many other WAF tests, in which paradigmatic and syntagmatic association were addressed simultaneously for the same target words. The test as a whole and the two subsets all correlated moderately and significantly with the students’ performance on a standardized reading comprehension test. More importantly, confirmatory factor analysis (CFA) revealed that all test items loaded significantly on the factor of their respective association relationship (factor loadings from .35 to .77). In addition, the two factors were significantly and strongly correlated (r = .83).

While *Shin (2015) concluded from this CFA result that paradigmatic and syntagmatic relationships tap rather different dimensions of deep word knowledge, the strong correlation between the two factors suggests substantial overlap, and the two “factors” might themselves load on a higher-order factor. In this respect, *Batty’s (2012) study provides a more nuanced understanding. Using *Qian’s (2002) DVK administered to Japanese-speaking university EFL learners, *Batty (2012) tested three CFA models: a one-factor model, which hypothesized that all WAT items loaded on a general vocabulary factor; a two-factor model, which hypothesized that the syntagmatic and paradigmatic associates formed two separate but correlated factors (i.e., the model tested in *Shin 2015); and a bifactor model, which hypothesized that all WAT items loaded on a single general factor (of vocabulary depth), while the syntagmatic and paradigmatic associates additionally loaded on two separate, smaller factors. The first two models did not fit satisfactorily, although the two-factor model fit slightly better than the one-factor model. The bifactor model fit best of the three, and items overall loaded most highly on the general factor of vocabulary knowledge/depth, which was particularly true of the syntagmatic associates. Despite this finding, it is difficult to conclude that paradigmatic and syntagmatic association are unidimensional in assessing vocabulary (depth), because, on the one hand, the one-factor model fit poorly and, on the other, the raw correlation between the two types of items was low (r = .61) compared with the correlations within each type of item (rs = .79 and .80 for syntagmatic and paradigmatic association, respectively). In addition, half of the paradigmatic associates actually loaded more highly on the synonym factor than on the general vocabulary factor.

The above studies, which differed in focal languages, learners, and WAF tests, paint a complex picture of whether different types of association tap the same or different aspects of network knowledge. Until more conclusive evidence is available, it does not seem justified to simply aggregate the scores for different types of association into a total score representing learners’ vocabulary depth knowledge, as researchers have often done (see Additional file 1: Appendix A). We suggest that future users of WAF tests at least first report separate scores for the different types of association, along with their interrelationships (e.g., correlations), before a combined score is used to index learners’ vocabulary depth. When vocabulary depth is used to predict other variables, such as lexical inferencing (e.g., *Ehsanzadeh 2012; *Nassaji 2004) or reading comprehension (e.g., *Akbarian 2010; *Guo and Roehrig 2011; *Horiba 2012; *Qian and Schedl 2004; *Zhang 2012), we suggest that separate analyses be conducted for the different association relationships (with or without an analysis of the aggregated score) so that the findings reveal more about the nuanced role of lexical network knowledge in the development of language skills.
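As a rough illustration of the reporting order suggested above, the Python sketch below first sums each learner’s scores separately by association type, then reports the correlation between the two subscores, and only then forms a combined total. It assumes that every item response has already been scored and tagged with an association type; the function names, the tag strings, and the data layout are hypothetical and are not taken from any of the reviewed tests.

```python
from math import sqrt

def subscores(item_scores):
    """Sum one learner's item scores separately for each association type.

    item_scores: list of (association_type, score) pairs (hypothetical layout).
    """
    totals = {}
    for assoc_type, score in item_scores:
        totals[assoc_type] = totals.get(assoc_type, 0) + score
    return totals

def pearson_r(xs, ys):
    """Pearson product-moment correlation between two equal-length lists."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two equal-length lists with at least two scores")
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        # r is undefined when either subscore does not vary across learners
        raise ValueError("a subscore has zero variance")
    return cov / sqrt(var_x * var_y)

def report(learners, types=("paradigmatic", "syntagmatic")):
    """Return per-type subscores, their correlation, and only then totals."""
    per_learner = [subscores(items) for items in learners]
    columns = {t: [s.get(t, 0) for s in per_learner] for t in types}
    r = pearson_r(columns[types[0]], columns[types[1]])
    totals = [sum(s.values()) for s in per_learner]
    return columns, r, totals
```

A Pearson r is only one way to describe how the subscores relate; with adequate samples, factor-analytic comparisons like those in *Shin (2015) and *Batty (2012) would be more informative.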

Number of options

Although a target word could in principle have any number of options, most WAF tests, as shown in Additional file 1: Appendix A, had six (e.g., *Greidanus and Nienhus 2001; *Schoonen and Verhallen 2008) or eight options (e.g., *Read 1993; 1998; *Qian 1999; 2002; *Qian and Schedl 2004), typically with equal numbers of associates and distractors. Two notable exceptions are *Horiba’s (2012) Japanese WAF test, which had seven options with three associates and four distractors, and *Henriksen’s (2008) word connection task, which had ten options with five associates and five distractors.

Despite the variation in number of options, it is unclear whether the choice made for a particular WAF test reflected practical considerations or psychometric advantages. Presumably, having fewer options would give developers more flexibility in finding appropriate associates and distractors within a particular pool (or frequency range) of words, and thus reduce the need to include less frequent words when not enough words at the desired frequency level could be found. This consideration was reflected in *Read’s (1993) decision not to restrict his choice words to the University Word List. In addition, having fewer options may also make the test less challenging for young learners (*Schoonen and Verhallen 2008).
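One trade-off behind the choice of six versus eight options can be made concrete with simple combinatorics. The Python sketch below computes, for a purely blind guesser who knows how many associates to pick, (a) the probability of hitting the complete set of associates and (b) the expected number of true associates among the picks. It assumes random selection with the number of associates known in advance, which real test-takers rarely match, since partial knowledge and patterns among the options also shape their choices; the function names are illustrative only.

```python
from math import comb

def chance_full_set(n_options: int, n_associates: int) -> float:
    """Probability that picking exactly n_associates options at random
    reproduces the full answer key (only one combination is correct)."""
    return 1 / comb(n_options, n_associates)

def expected_hits(n_options: int, n_associates: int) -> float:
    """Expected number of true associates among n_associates random picks.

    Hypergeometric mean: picks * associates / options, where the number
    of picks equals the number of associates in this format.
    """
    return n_associates * n_associates / n_options

for n_options, n_associates in [(6, 3), (8, 4)]:
    print(n_options, n_associates,
          round(chance_full_set(n_options, n_associates), 4),
          expected_hits(n_options, n_associates))
```

Under these assumptions, a blind guesser has a 1 in 20 chance of the full set in the 6-option format versus 1 in 70 in the 8-option format, while in both formats half of the randomly picked options are expected to be associates. Fewer options thus ease item writing but make lucky whole-item guesses more likely.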

So far there has been little research on how different numbers of options may influence, if at all, the psychometric properties of WAF tests. *Schmitt et al. (2011) seems to be the only study that directly compared WAF tests with different numbers of options. In their Study 2, *Schmitt et al. (2011) administered to university ESL learners a WAF test designed following *Read (1998). The test had two versions, one with six options and the other with eight. After taking both versions as paper-and-pencil tests, learners were interviewed to demonstrate their actual knowledge of the words in the test and to share the thought processes behind their responses. Based on the interview responses, learners’ degree of knowledge was coded at three levels: no knowledge, partial knowledge, and full knowledge. With regard to the number of options, the 8-option version appeared to produce a higher proportion of “mismatches” between the knowledge assessed by the paper test and that shown in the interview than did the 6-option version. The authors suggested that the 8-option format tended to overestimate learners’ actual knowledge more seriously than did the 6-option format and thus may be a less desirable choice for assessing depth knowledge. More validation evidence is needed in the future.

Number of associates

Whether a test has six or eight options, two additional issues concerning the number of associates need to be considered. The first is whether the number of associates should be fixed or allowed to vary across items. As shown in Additional file 1: Appendix A, most WAF tests had a fixed number of associates, with equal numbers of associates and distractors. For example, the items of *Read’s (1998) WAT and *Qian and Schedl’s (2004) DVK all had four associates (out of eight choices). The items of *Schoonen and Verhallen’s (2008) Dutch WAT and *Greidanus and Nienhus’s (2001) French test all had three associates (out of six choices). The French tests in *Greidanus et al. (2004; 2005) seem to be the only ones with varied numbers of associates (2–4, but with six choices for all items).

The second issue extends the first: should there be an equal number of associates (and distractors) for different types of association relationships? Many WAF tests did not make clear how many associates there were in total for each type of association relationship. In *Read’s (1998) revised WAT, for example, paradigmatic and syntagmatic associates were distributed across the left and right boxes in three patterns: 1–3, 2–2, and 3–1. The two types of association may therefore not be equally represented in the test, which may pose a challenge when they need to be compared, as in the studies reviewed earlier (footnote 1). This was perhaps why, in the few studies that compared different types of association, each item had one associate for each type, so that the types had equal numbers of associates and the same range of scores. For example, in *Greidanus and Nienhus (2001) and *Horiba (2012), each item had three associates (the other options were all distractors): one for paradigmatic association, one for syntagmatic association, and one for analytic association.

A question to ask about these issues is whether they really matter for WAF tests, for example, by affecting their psychometric properties. If *Greidanus et al.’s (2005) argument holds, that is, “if there were always three correct responses, the participants could make their choice by elimination. With a variable number of correct responses they had to determine each time whether the responses word belonged to the network of the stimulus word” (p. 194), then varying the number of associates across items would mean higher validity for a WAF test. However, no study has yet directly tested this issue (footnote 2). Thus, it cannot yet be determined whether it is preferable to vary the number of associates across items or to keep it fixed. In addition, it is unclear whether the number of items needs to be the same for different association relationships, given the possibility of using the proportion of correct responses (*Qian and Schedl 2004) and/or scoring methods to create a “balance” between them.
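The elimination argument quoted above can be illustrated by counting the answer keys a test-taker must entertain. In the Python sketch below, a six-option item with a fixed, announced three associates admits C(6,3) = 20 possible keys, whereas an item whose number of associates may be anywhere from two to four (as in *Greidanus et al. 2004; 2005) admits 15 + 20 + 15 = 50. The second function shows the elimination shortcut: with a known fixed count, ruling out enough distractors determines the remaining choices. These counts say nothing about validity by themselves, which, as noted above, has not been tested directly; the function names are hypothetical.

```python
from math import comb

def candidate_keys(n_options, possible_counts):
    """Number of distinct answer keys consistent with what the test-taker
    knows: any subset of options whose size is one of possible_counts."""
    return sum(comb(n_options, k) for k in possible_counts)

def solved_by_elimination(n_options, n_associates, n_rejected):
    """True if rejecting n_rejected options as distractors leaves exactly
    n_associates options, so the rest can be selected without knowing them.
    Only meaningful when the number of associates is fixed and announced."""
    return n_options - n_rejected == n_associates

print(candidate_keys(6, [3]))          # fixed, announced count of three
print(candidate_keys(6, range(2, 5)))  # two to four associates, count unknown
```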

Distribution of associates

In *Read’s (1998) revised WAT, where the choices for paradigmatic and syntagmatic association were presented in two separate groups (boxes), the distribution of associates varied, because having the same distribution for all words, such as two associates (and two distractors) in each box, was believed to offer learners a pattern to follow and thus to encourage guessing. Consequently, three distributions of paradigmatic and syntagmatic associates (1–3, 2–2, and 3–1) were adopted to counteract guessing. This design feature appeared to be effective: in *Qian and Schedl (2004), all the learners interviewed reported that guessing was difficult because the number of associates in each box of the DVK, which followed the format of *Read (1998), was not fixed.
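The rationale for varying the split of associates between the two boxes can likewise be expressed as a count of possible answer keys. The sketch below assumes an 8-option item laid out as two boxes of four options with four associates in total, as in the format described above; it compares the keys a test-taker would have to consider if every item used the 2–2 split with those under the 1–3, 2–2, and 3–1 splits adopted by *Read (1998). The function name is hypothetical, and the count models only blind guessing, not guessing based on semantic patterns among the choices.

```python
from math import comb

def keys_for_splits(box_size, splits):
    """Count answer keys for an item with two boxes of box_size options.

    Each (left, right) pair in splits is an allowed number of associates
    in the left and right box; keys for different splits never overlap.
    """
    return sum(comb(box_size, left) * comb(box_size, right)
               for left, right in splits)

fixed = keys_for_splits(4, [(2, 2)])
varied = keys_for_splits(4, [(1, 3), (2, 2), (3, 1)])
print(fixed, varied)
```

Under these assumptions, a learner who could rely on a constant 2–2 pattern would face 36 possible keys, compared with 68 when the split varies, so the varied design roughly halves the odds of a lucky blind guess at the whole item (1/36 versus 1/68).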

On the other hand, *Schmitt et al.’s (2011) Study 2 suggested that the validity of WAF tests could be affected by the distribution of associates. Specifically, items with different distribution patterns of paradigmatic and syntagmatic associates showed correlations of different strengths. Items with the 2–2 distribution, for example, showed the strongest correlation between the paper test scores and the interview scores (r about .871), the latter being believed to better represent learners’ actual depth knowledge. In contrast, items with the 1–3 distribution showed the weakest correlation (r about .736), which, as the authors explained, might be attributable to the semantic relatedness of the three syntagmatic associates among the choices. In other words, like the learners in *Read (1993), these learners might have used patterns in the choices to guess successfully on target words they did not even know.

*Schmitt et al.’s (2011) finding appears to suggest that, compared with the other two distributions, the 1–3 distribution might be the most susceptible to guessing and the most likely to overestimate learners’ actual depth knowledge. Note, however, that the source of guessing (i.e., the semantic relatedness of the syntagmatic associates) was perhaps a result of the difficulty the authors had in finding three collocates with distinct meanings. Thus, it remains an open question whether the 1–3 distribution per se, or an inadequate selection of choice words for the items with that distribution, resulted in the lowest correlation. More research is needed to further our understanding of how learners’ performance, response behaviors, and the psychometric properties of WAF tests may be influenced by the distribution of associates. *Schmitt et al. (2011) may not have examined this issue for the 6-option test because of its simpler pattern of associate distribution (there are only two variations: 1–2 and 2–1). Nevertheless, given that this format is perhaps the most commonly used one in the literature (see Additional file 1: Appendix A), future research should explore it as well and compare the findings with those of *Schmitt et al. (2011) for the 8-option format.

Distractor properties

Another important feature to consider for WAF tests is which distractors to include, notably whether distractors should be semantically related or unrelated to target words. *Read (1993; 1998) argued that distractors should have no semantic links to the stimulus word. This principle was subsequently followed in some other studies, such as *Greidanus et al. (2004), but not universally endorsed (e.g., *Henriksen 2008; *Schoonen and Verhallen 2008). *Schoonen and Verhallen (2008), in their WAF test for young Dutch learners, purposefully used semantically related distractors that were less strongly associated with the target words than the associates were. The authors believed that generalization and abstraction play an important role in students’ word knowledge development, and argued that it is “on the basis of these processes that the attribution of meaning is gradually decontextualized” (p. 157). Thus, WAF tests as a measure of depth knowledge should assess learners’ generalized, decontextualized knowledge of a stimulus word (i.e., “words that always belong to the target word;” p. 157), such as fruit, yellow, and peel for banana, rather than knowledge of incidental, context-dependent meanings (e.g., monkey for banana). In *Henriksen’s (2008) word connection task, each target word was followed by ten words. The five answer words were the most frequent associations in many native-speaker norming lists, whereas the five semantically related distractors were “infrequent responses given by only one native speaker in the norming lists; that is, these words represent potential but clearly more peripheral links in the lexical net” (p. 42).

*Greidanus and Nienhus (2001) explicitly tested how different types of distractors influenced their French WAF test for Dutch-speaking university students. Learners in different proficiency groups consistently scored higher on the items with semantically unrelated distractors than on the same items with semantically related distractors. This score difference is not surprising, given that semantically unrelated distractors are much easier to eliminate than related ones, and that the scoring method rewarded successful elimination (i.e., non-selection) of distractors.

Learners’ better scores on items with semantically unrelated distractors, however, do not mean that such items are necessarily the preferable test design. *Read (1998), when validating the revised WAT, in which distractors were semantically unrelated, found from students’ verbal reports that more proficient learners tended to use the relationships, or the lack thereof, among the options to guess the associates of unknown stimulus words. In this respect, a test with semantically related distractors may make guessing harder and better engage learners’ network knowledge, and thus be preferable. This seems to be partly confirmed by the higher reliability of the items with semantically related distractors (.76, as opposed to .63 for items with semantically unrelated distractors), as well as by the correlations *Greidanus and Nienhus (2001) found between the two sets of items and between the test and learners’ general proficiency. Specifically, regardless of learners’ proficiency level, the two item types did not correlate significantly, suggesting that they may tap network knowledge in distinct ways because of the variation in distractor properties. In addition, in the lower proficiency group, the scores for neither item type correlated significantly with general proficiency; in the higher proficiency group, however, the items with semantically related distractors, unlike those with unrelated distractors, correlated significantly with learners’ general proficiency.

*Schmitt et al.’s (2011) validation study suggests that other features of WAF tests may also need to be considered when evaluating different types of distractors. In Study 2, *Schmitt et al. (2011) correlated university ESL learners’ scores on a paper WAF test, whose items had three types of distractors, with their scores on an interview that elicited their actual depth knowledge. For the 6-option test, items whose distractors had no semantic relationship to the stimulus word correlated notably more weakly with the interview scores (r = .776) than did items with semantically related distractors (r = .910), whereas the 8-option test showed the reverse pattern (rs = .912 and .813, respectively). In both formats, the correlations between the paper test scores and the interview scores were weakest for distractors that resembled the stimulus words orthographically (rs = .636 and .663 for the 6-option and the 8-option format, respectively). The authors thus concluded that “different WAF versions may benefit from different distractor types, with Meaning-based distractors being better for the shorter 6-option version, but with No-relationship distractors being better for the 8-option version,” and “formal distracters should be less frequently used” (p. 121).

Whether distractors should be semantically related or unrelated to the target word certainly deserves more research. On the one hand, it would be helpful to include other types of validation evidence, such as concurrent or predictive validity, when comparing the two item designs; on the other hand, item design would also need to be considered in conjunction with other test-related variables, particularly the scoring method. *Read (1998), for example, used a scoring method that considered only associate selection for his English WAT with semantically unrelated distractors, whereas *Schoonen and Verhallen (2008) used a method that awarded a point only if the response exactly matched the answer key for their Dutch WAF test with semantically related distractors. Yet *Greidanus and Nienhus (2001), who compared the two item designs, used a third method that gave credit for both associate selection and distractor non-selection. Thus, comparing distractor types in isolation from other factors that also affect learners’ thought processes or response behaviors would not seem to provide the best evidence for evaluation.
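To make the contrast between the three scoring methods concrete, the Python sketch below scores a single item under (a) selection-only credit, (b) all-or-nothing credit for an exact match with the answer key, and (c) credit for both selecting associates and leaving distractors unselected. These are simplified readings of the methods as characterized in this paragraph; the reviewed studies may have applied additional rules (e.g., limits on the number of selections) that are not modeled here, and the function names and example words are hypothetical (the words borrow the banana example discussed earlier).

```python
def score_selection_only(selected, associates):
    """One point for each associate the test-taker selected."""
    return len(selected & associates)

def score_exact_match(selected, associates):
    """One point only if the selection matches the answer key exactly."""
    return int(selected == associates)

def score_select_and_reject(selected, associates, options):
    """One point per associate selected plus one per distractor not selected."""
    distractors = options - associates
    return len(selected & associates) + len(distractors - selected)

# Hypothetical 6-option item (target: banana) with three associates.
options = {"fruit", "yellow", "peel", "monkey", "chair", "quickly"}
associates = {"fruit", "yellow", "peel"}
response = {"fruit", "yellow", "monkey"}  # two associates, one distractor

for scorer in (score_selection_only, score_exact_match):
    print(scorer.__name__, scorer(response, associates))
print("score_select_and_reject",
      score_select_and_reject(response, associates, options))
```

The same response earns 2 of 3, 0 of 1, and 4 of 6 points, respectively. In this sketch, selecting every option would also earn full credit under selection-only scoring, which is one way the scoring method interacts with whether learners are told, or limited to, a number of associates.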

Administering WAF tests

A second set of issues concerns how WAF tests should be (better) administered. For example, should the test be conducted in print, or would it make a difference if all the words were read aloud to learners? Should learners be informed of the number of associates, or should they be asked to select as many as they believe to be associates, even though the number of associates is fixed across items? Additionally, given that different scoring methods are often used (see the next section on Scoring WAF Tests), how might learners’ knowledge, or lack thereof, of the method used to score their responses influence their response behaviors and the psychometric properties of a WAF test?

Written vs. aural modality

As Additional file 1: Appendix A shows, WAF tests were almost exclusively administered in written form: learners typically completed a paper-and-pencil test. Only rarely were learners also interviewed, for the purpose of validating a paper test (e.g., *Read 1993; 1998; *Schmitt et al. 2011). The issue of modality in assessing vocabulary knowledge is, of course, not specific to WAF tests. All conceptualizations of what it means to know a word seem to involve word forms, such as sound and orthography (e.g., Nation 2001; Read 2004). This suggests that vocabulary tests all need to assess learners’ ability to identify a word in both aural and written modalities. In the L1 literature, however, this is not necessarily the case, because (young) learners typically acquire many word meanings (and their semantic links) through oral language before they can recognize all those words in print; they need to learn to decode words to access their meanings (and the network of meanings) in the mental lexicon. Thus, oral vocabulary is often distinguished from written vocabulary. This also explains why young children’s (free) word associations were often elicited through oral interviews, and why there are oral vocabulary tests, such as the Peabody Picture Vocabulary Test, that do not require students to decode printed words.

In the L2 literature, which used to be concerned more with foreign language learners (as opposed to learners of a second language), there tended to be an assumption that “if a word is known, then it is likely to be known in both written and aural forms,” because classroom instruction and learning focus concurrently on sound (pronunciation), orthography (spelling), and meaning (Milton 2009, p. 93). Consequently, assessment may have been approached from the view that the ability to recognize words in print is an integral component of vocabulary knowledge and should be tested as such. This view could be legitimate if the assessment focuses on the form (orthography)-meaning connection, as most vocabulary size tests, such as the VLT and Yes/No tests, do. In other words, variance due to individual differences in the ability to process the orthographic forms of target words (to access their meanings) might be considered “construct-relevant.” In the case of WAF tests, however, the legitimacy of this view is questionable, as their primary assessment focus is on meanings and their links (i.e., network knowledge) (Read 2004). In other words, variance induced by orthographic processing may be construct-irrelevant for WAF tests and should be an important factor to consider.

This issue seems particularly salient for learners from an alphabetic background who are learning a non-alphabetic language, such as English-speaking learners of Chinese or Japanese, which use logographic characters. Unlike alphabetic languages such as English, Dutch, and French, which rely on phoneme-letter correspondences and allow readers to use the alphabetic principle to decode words, Chinese characters and Japanese kanji are square-shaped symbols composed of strokes and stroke patterns. Thus, to access the meaning of a printed word, which often consists of multiple characters, the component characters need to be recognized without the kind of immediate phonological cues available for words in alphabetic languages.

Thus, given that the primary assessment focus of WAF tests is network knowledge, any knowledge demonstrated on the tests presumably should not be confounded by learners’ failure to recognize characters or words in print, which would threaten the validity of the tests. This might be why Jiang (2002) adopted the aural modality when administering a free association task to Chinese L2 learners: learners listened to target words and provided associates orally. It also seems to explain why *Horiba (2012) included kana syllabaries together with kanji characters in her Japanese WAF test.

This issue may be particularly important for assessing the depth knowledge of learners of Chinese or Japanese who have substantial aural/oral experience with the language, such as heritage language learners or non-heritage learners who have spent considerable time learning the language in a second language or societal context (as opposed to those who learn it primarily in a foreign language context through classroom instruction; see Footnote 3). Without appropriate control (e.g., adding pinyin to Chinese words and kana to Japanese kanji) or attention to the modality of administration (i.e., administering the test aurally/orally rather than in print), a WAF test might underestimate the association knowledge of these learners, or the test might be biased against heritage learners and others who acquired the language in a societal context, relative to foreign language learners.

Informed vs. uninformed

Previous studies also varied in whether learners were informed of the number of associates to be selected or were asked to select as many associates as they believed appropriate, even when the number of associates was fixed across items. *Read (1993), for example, did not tell learners how many associates they were supposed to select; instead, they were asked to choose as many as possible, even if they were not very sure about an item. This practice was also followed in some studies that used a version of *Read’s (1993; 1998) test (e.g., *Qian 1999; *Zhang 2012). In *Greidanus et al. (2004; 2005), learners were told that the number of associates varied, but not which item had how many associates. The authors believed that this would prevent participants from making their choices by elimination. Many other studies, however, stated the number of associates in their test instructions (e.g., *Qian and Schedl 2004; *Schoonen and Verhallen 2008). As argued by *Schoonen and Verhallen (2008) for their Dutch WAT, which included semantically related distractors, “it is important that the number of required associations is fixed and specified for the test-takers, because association is a relative feature. Depending on a person’s imagination or creativity, he/she could consider all distractors to be related to the target word. A fixed number is helpful in the instruction because it forces test-takers to consider which relations are the strongest or most decontextualized” (p. 218).

From the perspective of learners’ thought processes when working on a WAF test, learners who know the number of associates might stop once they have selected that number, or they might be pushed to engage further with the other choices when they are sure of only one or two associates. On the other hand, learners who are not informed of the number of associates might choose only those they are completely certain of (or, alternatively, use wild imagination to select as many as possible, which was the concern of *Schoonen and Verhallen 2008). Choosing only the certain associates could result in selecting fewer associates than the key contains and in avoiding deeper engagement with the remaining choices. This presumed effect of the administration condition (i.e., informed vs. uninformed) on learners’ test-taking behaviors might affect their test performance and, more importantly, the psychometric properties of the test.

This issue has received little attention in the literature. *Greidanus et al. (2005) administered two parallel DWK tests to Dutch- and English-speaking learners of French (as well as native speakers). As described earlier, both tests had six choices per item. The first had a fixed number of associates (three), and learners were informed of this number; in the second, the number of associates varied, and learners were told that it varied but not which item had how many associates. Learners’ performance did not differ significantly between the two tests. Although the first test appeared slightly more reliable (α = .91) than the second (α = .88), no other information about the psychometric properties of the two tests was reported.
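The α values above are presumably Cronbach's alpha, the usual internal-consistency coefficient; the source does not state this explicitly, so it is an assumption here. As a reminder of what such a coefficient summarizes, the Python sketch below computes alpha from a persons-by-items score matrix with the standard formula α = k/(k − 1) × (1 − Σσᵢ²/σ²_total). The function name and the toy scores are hypothetical and are not data from *Greidanus et al. (2005).

```python
from statistics import pvariance


def cronbach_alpha(scores: list[list[float]]) -> float:
    """Cronbach's alpha for a persons-by-items score matrix (hypothetical helper).

    scores[p][i] is person p's score on item i (e.g., a WAF item score).
    """
    n_items = len(scores[0])
    if n_items < 2:
        raise ValueError("alpha requires at least two items")
    # Variance of each item's scores across persons.
    item_vars = [pvariance([row[i] for row in scores]) for i in range(n_items)]
    # Variance of the persons' total scores.
    total_var = pvariance([sum(row) for row in scores])
    # Summed item variance relative to total variance, rescaled by k / (k - 1).
    return n_items / (n_items - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical scores: four test-takers on three items.
toy_scores = [[3, 2, 3], [4, 4, 5], [1, 2, 1], [2, 3, 2]]
print(round(cronbach_alpha(toy_scores), 3))  # 0.911
```

Higher values indicate that items covary strongly relative to their individual variances, which is what a difference such as .91 vs. .88 summarizes.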

*Dronjic and Helms-Park (2014) specifically manipulated the administration condition by asking two comparable groups of native English speakers to work on *Qian and Schedl’s (2004) DVK. One group took the test under the constrained (informed) condition, knowing that all items had four associates; the other worked under the unconstrained (uninformed) condition and was asked to select as many choices as they deemed appropriate. Among other findings, regardless of scoring method, performance was significantly better in the constrained condition than in the unconstrained condition. In addition, participants’ scores were more homogeneous in the constrained condition than in the unconstrained condition.

*Dronjic and Helms-Park’s (2014) findings seem to favor informing learners of the number of associates. Although more homogeneous test performance may not be conclusive evidence for such a preference, the implication for research practice is clear: researchers need to specify how their WAF test was administered, which has not always been done in the literature (see Additional file 1: Appendix A). Given the findings reviewed above, without knowing how learners were instructed to work on a test, it would be difficult both to compare learners’ test performance across studies and to evaluate the psychometric properties of different WAF tests.

Scoring WAF tests

At least three methods have been used in the literature for scoring WAF tests. The first method, initially adopted by *Read (1993), scores learners’ responses based on associate selection only: a point is awarded for each associate selected, while leaving a distractor unselected earns no point and selecting one incurs no penalty. The second method awards a point for each associate selected as well as for each distractor left unselected. The third method, perhaps the strictest of the three, awards a point for an item only if the response exactly matches the key (i.e., all associates and no distractors are selected).
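The three scoring rules can be made concrete with a minimal Python sketch that scores a single response. The item below (six options, three of them associates) and the function names are hypothetical illustrations, and the first rule is assumed to award one point per associate selected, as described above.

```python
# Hypothetical 6-option item with 3 associates (illustrative only).
OPTIONS = {"quick", "surprising", "change", "thirsty", "doctor", "school"}
KEY = {"quick", "surprising", "change"}  # the associates


def one_point(selected: set[str], key: set[str]) -> int:
    # One point per associate selected; distractors neither earn nor cost points.
    return len(selected & key)


def correct_wrong(selected: set[str], key: set[str], options: set[str]) -> int:
    # One point per associate selected plus one per distractor left unselected.
    distractors = options - key
    return len(selected & key) + len(distractors - selected)


def all_or_nothing(selected: set[str], key: set[str]) -> int:
    # One point only if exactly the associates, and nothing else, are selected.
    return int(selected == key)


response = {"quick", "change", "school"}  # two associates and one distractor
print(one_point(response, KEY))               # 2 (max 3)
print(correct_wrong(response, KEY, OPTIONS))  # 4 (max 6)
print(all_or_nothing(response, KEY))          # 0 (max 1)
```

The same response earns partial credit under the first two rules but nothing under the third, which is the trade-off between a quick overall score and item-internal detail.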

All three methods, which *Schmitt et al. (2011) named the One-Point, Correct-Wrong, and All-or-Nothing methods, respectively, have been adopted in previous studies (see Additional file 1: Appendix A). Yet the extent to which one method might be empirically preferable to another has received little attention in the literature. *Dronjic and Helms-Park (2014) found from native English speakers’ responses to *Qian and Schedl’s (2004) DVK that the scoring method moderated how the type of association relationship (paradigmatic vs. syntagmatic) and the administration condition (constrained vs. unconstrained) affected participants’ test performance. For example, with the One-Point method (i.e., the original scores), an ANOVA revealed significant main effects of both association type and administration condition but no significant interaction; with the Correct-Wrong method (i.e., the revised scores), the main effects remained significant, but a significant interaction also emerged: the difference between the two conditions was notably smaller for paradigmatic associations than for syntagmatic associations.

*Schmitt et al. (2011) found that for the WAF test with six options, the All-or-Nothing method produced the largest correlation (.884) between learners’ paper test and interview scores, with the One-Point and Correct-Wrong methods producing correlations of .871 and .877, respectively. For the test with eight options, the One-Point method produced the strongest correlation (.885), with correlations of .855 and .871 for the Correct-Wrong and All-or-Nothing methods, respectively. *Schmitt et al. (2011) thus concluded that the Correct-Wrong method could be discounted, as it is much more complicated than the other two methods without yielding better results, and that the All-or-Nothing method might be better for the 6-option format and the One-Point method for the 8-option format.

Despite these results, which favored the All-or-Nothing method for the 6-option format, *Schmitt et al. (2011) noted that an evaluation of scoring methods also needs to consider the purpose of the assessment. If the purpose is to determine where a student stands in vocabulary depth knowledge relative to his or her classmates, the All-or-Nothing method would give the quickest result; in research settings, it would perhaps also save time when scoring responses from a large number of learners. If, on the other hand, the purpose is to diagnose which specific associates learners have mastered or where their knowledge is weak, the One-Point or Correct-Wrong method might be a better choice, as these methods provide more specific diagnostic information.

It was indicated at the beginning of the previous section on test administration condition that it may also be important to consider whether learners know how their responses are to be scored. None of the studies listed in Additional file 1: Appendix A reported explicit information on this issue. In language testing, familiarizing test-takers with the test format is critical, and in large-scale testing, test-takers are usually well informed about what and how they will be tested, including how their answers will be scored, notably for performance tasks such as speaking and writing. In the vocabulary assessment literature, many tests, such as the VLT and Yes/No checklists, have a single correct answer per item. Thus, the scoring method (i.e., credit for the one correct response) is immediately clear to learners even if they are not told about it. In WAF tests, by contrast, where multiple selections per item are usually expected, whether learners know which method will be used to score their responses is an important issue to consider.

To illustrate how learners’ test-taking behaviors (and thus the validity of a WAF test) might be influenced by whether they know the scoring method, consider the One-Point method: in an extreme case, if learners know that this method is used, everybody might select all choices to achieve the maximum score, since selecting a distractor costs nothing. If, on the other hand, learners know that their responses will be scored with the Correct-Wrong method, they would presumably refrain from this strategy, because every distractor selected forfeits the point for leaving it unselected. In this regard, the lack of clarity in the literature on this issue is concerning. It is suggested that future studies that use a WAF test specify, among other things, whether their participants knew how their responses would be scored. Research is, of course, also needed to examine how learners’ knowledge of the scoring method, or the lack thereof, might influence their thought processes and test performance and, in turn, the psychometric properties of the test.
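The incentive described above can be checked exhaustively for a single item. The Python sketch below assumes a hypothetical six-option item with three associates (labels a1 to a3) and three distractors (d1 to d3), enumerates all 64 possible responses, and lists those that earn the maximum score under each rule. Under the One-Point rule, every response containing all three associates, including selecting all six options, earns the maximum; under the Correct-Wrong rule, only the exact key does. All names here are illustrative assumptions.

```python
from itertools import combinations

# Hypothetical item: three associates (a1 to a3) and three distractors (d1 to d3).
OPTIONS = frozenset({"a1", "a2", "a3", "d1", "d2", "d3"})
KEY = frozenset({"a1", "a2", "a3"})
DISTRACTORS = OPTIONS - KEY


def one_point(selected: frozenset) -> int:
    # One point per associate selected; distractors cost nothing.
    return len(selected & KEY)


def correct_wrong(selected: frozenset) -> int:
    # One point per associate selected and per distractor left unselected.
    return len(selected & KEY) + len(DISTRACTORS - selected)


def all_responses() -> list[frozenset]:
    # Every possible subset of the options, from selecting none to selecting all.
    return [frozenset(c) for r in range(len(OPTIONS) + 1)
            for c in combinations(sorted(OPTIONS), r)]


def top_responses(score) -> tuple[int, list[frozenset]]:
    # The maximum score under a rule and every response that achieves it.
    responses = all_responses()
    best = max(score(s) for s in responses)
    return best, [s for s in responses if score(s) == best]


best_op, winners_op = top_responses(one_point)
best_cw, winners_cw = top_responses(correct_wrong)
print(best_op, len(winners_op), OPTIONS in winners_op)  # 3 8 True
print(best_cw, len(winners_cw), OPTIONS in winners_cw)  # 6 1 False
print(correct_wrong(OPTIONS))  # 3: selecting everything earns only half the maximum
```

Under Correct-Wrong, selecting every option scores the same as selecting none, so the select-all strategy offers no advantage once learners know this rule is used.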

Test-takers and WAF tests

The impact of test-taker characteristics on test performance and on the psychometric properties of a test has long been acknowledged in language testing (Bachman and Palmer 1996). While some characteristics can be unpredictable and may not be controllable, the effects of others can be avoided or minimized through careful attention to test design and administration. Bachman and Palmer (1996), for example, discussed four major categories of test-taker characteristics: personal characteristics (e.g., age, sex, and native language), the topical knowledge that test-takers bring to the language testing situation, their affective schemata, and their language ability. O’Sullivan (Towards a model of performance in oral language testing, unpublished) classified test-taker characteristics into three major categories: physical/physiological (e.g., age, sex, and disabilities), psychological (e.g., personality, cognitive style, and motivation), and experiential (e.g., education, examination preparedness, and residence in the target language country). Measured against these lists, only a few studies in the WAF literature have directly addressed a small number of test-taker characteristics, such as L2 proficiency (e.g., *Greidanus and Nienhus 2001; *Henriksen 2008; *Schmitt et al. 2011) and L1 background (*Horiba 2012).

L2 proficiency

As reviewed earlier, an immediate concern in developing a WAF test relates to the target group(s) of learners: the target words need to be largely known to the learners so that the test assesses an aspect of knowledge (i.e., depth) distinct from vocabulary size. Learners’ proficiency should therefore be an important consideration; yet only a few studies have specifically compared learners at different proficiency levels to examine its possible impact on WAF tests.

In *Read (1993), ESL learners’ verbal reports indicated that their strategies for dealing with an unknown stimulus word appeared to vary according to proficiency level. While lower-proficiency learners were more inclined to skip such an item, higher-proficiency learners were more willing to guess, for example, by using relational information among the choice words to select associates. Later, however, *Read (1998) found that some high-proficiency learners were also unwilling to guess. Thus, there seems to be no convincing evidence of a direct relationship between learners’ proficiency level and either guessing on the WAT or the validity of the test.

*Greidanus and Nienhus (2001) compared the performance of Dutch-speaking learners at different proficiency levels on a French WAF test. Among other findings, learners with more years of university study of French performed significantly better than those with fewer years, providing strong evidence for the discriminatory power of the test. While the two groups showed consistent word frequency effects, as reviewed earlier, they differed in the extent to which their performance on items with semantically related and unrelated distractors was related to their general language proficiency.