THE EFFICACY of using simultaneous signs and verbal language to facilitate early spoken words in hearing children with language delays has been documented in the literature (Baumann Leech & Cress, 2011; Dunst, Meter, & Hamby, 2011; Robertson, 2004; Wright, Kaiser, Reikowsky, & Roberts, 2013). In their systematic review, Dunst et al. (2011) concluded that using sign as an intervention to promote verbal language is promising, regardless of the population served (e.g., autism spectrum disorder, Down syndrome, developmental delays, physical disabilities) or the type of sign language used (e.g., American Sign Language [ASL], Signed English). Theoretical support comes from developmental research on the gesture–language continuum (Goodwyn, Acredolo, & Brown, 2000; McCune-Nicolich, 1981; McLaughlin, 1998), as well as language-learning theories such as the socially-based transactional model (Sameroff & Chandler, 1975; Yoder & Warren, 1993) and the cognitively-based information processing model (Ellis Weismer, 2000; Just & Carpenter, 1992). In this article, we review the developmental, theoretical, and empirical research that supports using sign language as an intervention in clinical populations. We further apply research to the tasks of choosing early word–sign targets and implementing word–sign intervention.

Guidance on signing with children is readily available for parents and practitioners in popular parenting books (e.g., Acredolo & Goodwyn, 2009), children’s board books (e.g., Acredolo & Goodwyn, 2002), and easily accessed websites (e.g., https://www.babysigns.com/; https://www.babysignlanguage.com/), as well as practitioner websites (e.g., the Center for Early Literacy Learning [CELL], 2010a, 2010b, 2010c, 2010d) and professional magazines (e.g., Seal, 2010). Although these works report translating research to practice, they have not undergone peer review (Nelson, White, & Grewe, 2012). For this reason, additional reflection on this topic is warranted.

Regarding recommendations on selecting a sign system, the aforementioned practice guidelines are in general agreement. Seal (2010) proposes the use of formal sign language signs (e.g., ASL) but accepts child modifications on the basis of motor skill. The Center for Early Literacy Learning (2010a, 2010b, 2010c, 2010d) uses a combination of ASL, ASL-modified, and homemade “baby signs.” Acredolo and Goodwyn (2009), the originators of the Baby Signs® program, added an ASL-only program in response to families who wish to teach universally consistent signs. Although no standard definition exists (Moores, 1978), ASL is a form of gestural communication utilized by individuals with profound hearing impairment (Nicolosi, Harryman, & Kresheck, 1996). American Sign Language is a distinct and formal language with an established system of morphology and syntax, different from that of spoken English. In ASL, some signs are iconic (i.e., the sign visually resembles the concept it is meant to convey; Meuris, Maes, DeMeyer, & Zink, 2014) and motorically easy to produce, whereas others are not.

“Baby signs” are defined as stand-alone gestures made by infants and toddlers to communicate (Acredolo & Goodwyn, 2009; Acredolo, Goodwyn, Horobin, & Emmons, 1999). Baby signs are motorically simple, often generated by the toddler or created by the parent, and most often represent either an object or an activity (e.g., panting to represent “dog,” pulling at lower lip to represent “brush teeth”). Baby signs are also highly iconic. Iconicity has been discussed as a key factor in choosing early signs (Fristoe & Lloyd, 1980). Unlike ASL, baby signs are typically single words with no formal grammar.

Since Dunst et al. (2011) concluded that all sign interventions have value, we advocate here that in teaching isolated vocabulary (i.e., key words), homemade baby signs, formal ASL signs, and ASL-adapted signs all are appropriate as long as signs are iconic and consistent. Hereafter, these signs will be referred to as key word signs (KS) to differentiate them from any trademarked baby signs programs or ASL.

Published practice guidelines have further addressed how to choose word–sign pairs (Acredolo & Goodwyn, 2009; CELL, 2010a, 2010b, 2010c, 2010d; Seal, 2010). Although all agree that targets should be pragmatically functional and developmentally appropriate, none has systematically considered the research on spoken lexical development. Developmental lexical data are available (Fenson et al., 1994; Tardif et al., 2008), as are guidelines for choosing first spoken words (Holland, 1975; Lahey & Bloom, 1977; Lederer, 2002, 2011). These are important resources in choosing first word–sign pairs when the goal is to produce spoken words.

Finally, these research-to-practice guidelines provide useful information for intervention. Tips include the importance of gaining joint attention, pairing signs with spoken words, and the power of repetition within and across contexts, among others (Acredolo & Goodwyn, 2009; CELL, 2010a, 2010b, 2010c, 2010d; Seal, 2010). Collectively, these approaches combine the best of traditional language therapy with sign language intervention.

The purpose of this article is to integrate the aforementioned literature in an effort to guide clinical decision-making for young children with language delays in the absence of hearing loss. Specifically, this article will (a) review the developmental, theoretical, and empirical support for using signs to facilitate spoken words in children with language delays; (b) review guidelines for choosing first word–sign pairs, evaluate specific target recommendations, and offer a sample lexicon; and (c) combine recommended practices in sign intervention and early language intervention.

RESEARCH BASIS

Natural gestures have been defined as actions produced by the whole body, arms, hands, or fingers for the purpose of communicating (Centers for Disease Control and Prevention, 2012; Iverson & Thal, 1998). Natural gestures have been further categorized as either deictic or representational (Capone & McGregor, 2004; Crais, Watson, & Baranek, 2009; Iverson & Thal, 1998). Deictic gestures include pointing, showing, giving, and reaching and emerge between 10 and 13 months (Capone & McGregor, 2004). They are used to gain attention and change on the basis of the context. To illustrate, babies may use pointing for several functions and meanings, based on context. For example, a baby may point to a picture in a book to label a duck and point to a bottle to request it.

Representational gestures are used to express a specific language concept (e.g., nodding to signify agreement, waving to greet, and sniffing to signify “flower”) and, therefore, are not context-dependent. Representational gestures can stand alone. For example, if a child pretends to sniff a flower, and the flower is not present, the listener still knows what the child is attempting to communicate. These gestures begin to appear at 12 months (Bates, Benigni, Bretherton, Camaioni, & Volterra, 1979; Capone & McGregor, 2004).

The gesture–speech continuum

Researchers studying gestures in young children have noted a continuum from prelinguistic gestures to first words (McLaughlin, 1998) to multiword combinations (Goodwyn et al., 2000), as well as concomitant milestones such as first symbolic/pretend play gestures and first words (McCune-Nicolich, 1981). Findings from longitudinal research in this area (Goodwyn et al., 2000; Rowe & Goldin-Meadow, 2009; Watt, Wetherby, & Shumway, 2006) have concluded that development of gesture predicts three critical early language-based domains: (a) lexical development (Acredolo & Goodwyn, 1988; Watt et al., 2006); (b) syntactic development in the transition to two-word utterances (Goodwyn et al., 2000); and (c) vocabulary size in kindergarten (Rowe & Goldin-Meadow, 2009).

Children with language impairments often have delays in gesture development (Luyster, Kadlec, Carter, & Tager-Flusberg, 2008; Sauer, Levine, & Goldin-Meadow, 2010). The nature of their gestural lexicons can be used to reliably predict who will and will not catch up in language development (i.e., late bloomers and late talkers, respectively; Thal, Tobias, & Morrison, 1991) and differentiate among those with various disabilities such as autism (Zwaigenbaum et al., 2005) and Down syndrome (Mundy, Kasari, Sigman, & Ruskin, 1995). In two recent studies, both teaching gestures directly to children (McGregor, 2009) and increasing parent use of gestures (Longobardi, Rossi-Arnaud, & Spataro, 2012) supported verbal word learning.

Theoretical support

Two models of language acquisition, the transactional model (Sameroff & Chandler, 1975; Yoder & Warren, 1993) and the information processing model (Ellis Weismer, 2000; Just & Carpenter, 1992), provide further support for pairing spoken words with representational gestures/signs (i.e., KS). The transactional model (Sameroff & Chandler, 1975; Yoder & Warren, 1993) posits that the language-learning process is reciprocal and dynamic. A child-initiated gesture invites an adult to respond. Children with language delays, who do not initiate or respond (either with gestures or words), risk diminished conversational efforts by adults, further compromising the language-learning experience (Rice, 1993). Kirk, Howlett, Pine, and Fletcher (2013) provided support for this model, reporting that the use of baby signs (vs. words alone) increased parents’ responsiveness to the nonverbal cues of infants who are developing typically.

Information processing is a second model of language acquisition that may support the use of simultaneous speech/sign. This model emphasizes the importance of a child’s cognitive processing abilities in the areas of attention, discrimination, organization, memory, and retrieval (Ellis Weismer, 2000; Just & Carpenter, 1992). Accordingly, deficits in any one process, or task demands that exceed overall processing abilities, will cause the system to break down.

Simultaneous speech/sign intervention can address information processing problems in at least four different ways. First, from a neurological perspective, while verbal language engages only the auditory cortex, sign engages both the visual and auditory cortices (Abrahamsen, Cavallo, & McCluer, 1985; Daniels, 1996). A child who has difficulty processing information by solely listening has the added opportunity to learn through the visual modality. This position is aligned with universal design for learning, in that educators and clinicians afford students multiple means of representation, multiple means of engagement, and multiple means of expression (McGuire, Scott, & Shaw, 2006). Second, words are more fleeting than signs. Although a spoken word quickly fades from a child’s auditory attention, gestures linger longer in the visual domain, thus providing more processing time (Abrahamsen et al., 1985; Gathercole & Baddeley, 1990; Just & Carpenter, 1992; Lahey & Bloom, 1994). Third, visual signs invite joint attention, an important prelinguistic precursor to communication development (Acredolo et al., 1999; Goodwyn et al., 2000; Tomasello & Farrar, 1986). The more signs presented, the more opportunities there are for the child to share attention and intention with the conversational partner. Fourth, both the sign and the word are symbolic. When used together, they essentially cross-train mental representation skills (Goodwyn & Acredolo, 1993; Petitto, 2000).

Empirical support

Inspired by the theoretical and developmental rationales for using signs to facilitate spoken words, researchers have sought to obtain empirical evidence to support use of KS as an intervention strategy in children with language delays (Baumann Leech & Cress, 2011; Dunst et al., 2011; Robertson, 2004; Wright et al., 2013). Dunst et al. (2011) conducted a critical review of 33 studies on the influence of sign/speech intervention on oral language production. Studies included in their review were investigations of clinical populations including autism spectrum disorders, social-emotional disorders, Down syndrome, intellectual disabilities, and physical disabilities. Their review concluded that, regardless of the type of sign system used (e.g., ASL, Signed English), the use of multimodal cues (i.e., sign paired with spoken words) yielded increased verbal communication. It must be noted that a critical review of these studies reveals limited numbers of participants overall (1–21) and primarily single-subject, within-group designs. No randomized, between-group comparisons (the gold standard for empirical research) were identified in this review.

Baumann Leech and Cress (2011) utilized a single-subject, multiple baseline research design to compare two different augmentative and alternative communication (AAC) treatment approaches (i.e., picture symbol exchange vs. [unspecified form of] sign) in one participant diagnosed as a “Late Talker” (i.e., a child with expressive language delays only). The participant learned spoken target words using both methods of AAC and generalized these words to different communicative scenarios. Although no difference was noted between AAC intervention strategies, sign (as one of the two strategies) did facilitate spoken language.

Robertson (2004) reported the results of a single-subject, alternating treatment study in which two late-talking toddlers were presented with 20 novel vocabulary words. Ten spoken words were paired with signs, whereas the remaining 10 served as controls. Both children learned all 10 signed words and carried them over to conversational speech, but they learned only half of the nonsigned words.

Wright et al. (2013) studied the effect of a speech/sign intervention on four toddlers with Down syndrome exposed to enhanced milieu teaching (EMT; Hancock & Kaiser, 2006) blended with joint attention, symbolic play, and emotional regulation (Kasari, Freeman, & Paparella, 2006). After participating in 20 biweekly sessions, all four children increased their use of signs and spoken words. However, without a control group, it is not possible to infer a cause–effect relationship.

Given this promising empirical research base, coupled with developmental and theoretical support, the authors here conclude that use of KS as an intervention strategy is supported. Therefore, two questions remain: (1) How can research guide choosing first word–sign pairs? (2) What evidence-based strategies should be used to facilitate their production?

HOW TO CHOOSE FIRST WORD–SIGN PAIRS

Choosing first signs, similar to choosing first words, must be based on a variety of both context and content concerns (Holland, 1975; Lahey & Bloom, 1977; Lederer, 2001, 2002, 2011, 2013). In relation to context, targeted word–sign pairs should be useful for communicating an array of pragmatic functions (i.e., the reason why we send a message). For example, children can request to have their needs met, protest to express displeasure, comment to express ideas, and ask a question to obtain information, to name a few of the many pragmatic functions possible (Bloom & Lahey, 1978; Lahey, 1988).

Word–sign pairs should be highly motivating and suitable for use during a range of activities and across settings (e.g., home, school; Lahey & Bloom, 1977; Lederer, 2013). Furthermore, they should be easy to both demonstrate and understand (i.e., highly iconic; Fristoe & Lloyd, 1980; Lahey & Bloom, 1977). In terms of content, rationales for choosing individual word–sign targets and a core lexicon should be derived from both general lexical development and child- and family-specific vocabulary needs. Finally, lexical variety, which lays the foundation for syntax, must be considered (Bloom & Lahey, 1978; Lahey, 1988).

Research-to-practice guidelines for choosing word–sign pairs provided by CELL (2010a, 2010b, 2010c, 2010d), Acredolo and Goodwyn (2009), and Seal (2010) place emphasis on the contextually-based aspects of language. Regarding content, Seal (2010) consulted developmental ASL research (Anderson & Reilly, 2002) and considered motor development. Acredolo and Goodwyn (2009) referred to their own research on the natural development of Baby Signs® (Acredolo & Goodwyn, 1988). However, recommendations from the aforementioned experts do not systematically consider developmental spoken lexical research. Because the purpose of using signs with children with language delays is to facilitate first spoken words, the authors here conclude that the logical approach to selecting word–sign pairs is to identify the spoken targets first.

Early lexical development

Early spoken word targets should be drawn largely from developmental lexical research (e.g., Benedict, 1979; Fenson et al., 1994; Nelson, 1973; Tardif et al., 2008). For a child with typical development, a majority of his or her first 20 words will be nouns, greetings, and “no” (Tardif et al., 2008). As a child’s vocabulary approaches or exceeds 50 words, prepositions subsequently emerge (e.g., “up,” “down”), followed by action verbs (e.g., “go,” “eat”) and adjectives/modifiers (e.g., “more,” “all done,” “hot”; Bloom & Lahey, 1978; Fenson et al., 1994; Lahey, 1988). The lexicon at 50 words typically consists of two-thirds substantive words (i.e., objects or classes of objects expressed with nouns and pronouns such as names of people, toys, animals, and foods) and one-third relational words (i.e., words expressing relationships between objects using verbs, prepositions, adjectives, and other modifiers; Nelson, 1973; Owens, 2011). Late-talking toddlers (Rescorla, Alley, & Christine, 2001) and children with Down syndrome (Oliver & Buckley, 1994) have been reported to follow the same order of lexical acquisition as children developing language typically, but do so at a slower pace.

To help clinicians further facilitate semantic variety, Bloom and Lahey (1978) and Lahey (1988) developed a popular taxonomy to code substantive and relational words. They identified nine different early semantic categories of words and their meanings. Substantive words are contained in the category of existence, whereas relational words can be sorted into the following eight categories: nonexistence, recurrence, rejection, action, locative action, attribution, possession, and denial. Definitions and developmentally early verbal exemplars for each category can be found in Table 1.

Table 1. Taxonomy of Content Categories With Definitions and Earliest Exemplars

Recommendations for choosing word–sign targets

To begin, Lederer (2001, 2002, 2011) and others (e.g., Girolametto, Pearce, & Weitzman, 1996) recommend choosing a small set of 10–12 developmentally early targets representing a range of semantic categories to express a variety of pragmatic intentions. All exemplars in Table 1 meet these criteria. For children who have more significant language impairments, fewer targets should be selected.

As mentioned in the introduction of this article, recommended targets can be found in popular and professional publications (Acredolo & Goodwyn, 2002; Seal, 2010) and websites (e.g., https://www.babysignlanguage.com/; CELL, 2010a, 2010b, 2010c, 2010d). Since CELL (2010a, 2010b, 2010c, 2010d) and Seal (2010) chose their word–sign targets for special populations, we will use these to hone clinical decision-making skills. Specifically, we will reflect on their strengths and weaknesses in relation to (a) spoken lexical development, (b) representation of substantive and relational targets, and (c) variety within and across semantic categories. CELL’s (2010a, 2010b, 2010c, 2010d) and Seal’s (2010) targets appear in Table 2. We will conclude with a sample lexicon for clinical intervention.

Table 2. Proposed Word–Sign Targets by Center for Early Literacy Learning (CELL) (2010) and Seal (2010)

Spoken lexical development

The majority of the targets offered by CELL (2010a, 2010b, 2010c, 2010d) and Seal (2010) are words acquired early by children developing spoken language typically (Fenson et al., 1994; Tardif et al., 2008). (These are bolded in Table 2.) However, both lists include some targets that are acquired after 24 months. (These are not bolded in Table 2.) Targets that are starred in Table 2 did not appear in the database generated by Fenson et al. (1994). Finally, an “X” indicates that neither CELL (2010a, 2010b, 2010c, 2010d) nor Seal (2010) accounts for these targets.

In general, the authors here do not recommend choosing words for language intervention that appear developmentally after 24 months. By the age of two years, toddlers who are developing typically have a vocabulary of approximately 200 words and are generating (at least) two-word combinations (Paul & Norbury, 2012). Given that the single-word lexicon consists of approximately 50 words (Nelson, 1973; Owens, 2011), establishing a cutoff at two years of age provides a large enough pool from which to select developmentally early targets.

Substantive–relational representation

Both CELL (2010a, 2010b, 2010c, 2010d) and Seal (2010) provide word lists that contain a majority of relational words. Choosing relational words for children with language delays is highly recommended because they can be used more frequently across activities and settings than substantive words (Lahey & Bloom, 1977). In fact, CELL (2010a, 2010b, 2010c, 2010d) recommends only one substantive word (“book”). Because substantive words are easier to learn than relational words (i.e., they are more easily represented; Bloom & Lahey, 1978; Lahey, 1988), the authors recommend building early lexicons that include both substantive and relational words, with a greater emphasis on the latter, as did Seal (2010).

Semantic variety

Semantic variety refers to considerations both within and across lexical categories. With respect to within-category substantive words, first nouns include names of people, toys, foods, animals, clothes, and body parts (Fenson et al., 1994). Seal (2010) includes a sufficient semantic variety with people, toys, and food.

With regard to within-category relational words, both CELL (2010a, 2010b, 2010c, 2010d) and Seal (2010) include a large number of verbs, similar to those seen in the first 35 ASL signs in young children who are deaf (Anderson & Reilly, 2002) but dissimilar to those of first-word learners (Fenson et al., 1994; Tardif et al., 2008). Anderson and Reilly (2002) explain that these early verb concepts can be easily demonstrated with natural gestures (e.g., “clap,” “hug,” “kiss”). Spoken verbs are among the last categories of single words to be acquired (e.g., the first verb “go” appears at 19 months; the first nouns, “mommy” and “daddy,” appear at 12 months; Fenson et al., 1994). Bloom and Lahey (1978) and Lahey (1988) explain that verbs are harder to learn than nouns because they are not always easily represented, permanent, or perceptually distinct from the noun (e.g., “eat” means someone is eating something). Because verbs are harder to learn, but easier to gesture, the authors here recommend including a minimum of two action verbs when building a KS lexicon.

Finally, with respect to variety of relational words across semantic categories, we need to look for exemplars from each of the nine early categories (Bloom & Lahey, 1978; Lahey, 1988). Inspection of CELL’s (2010a, 2010b, 2010c, 2010d) and Seal’s (2010) recommended targets reveals missing lexical items from certain categories, as identified by an X in Table 2. According to Bloom and Lahey (1978), a first lexicon should include relational words from at least nonexistence, recurrence, rejection, action, and locative action.
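
The selection criteria discussed above (a small set of 10–12 targets, words acquired by 24 months, both substantive and relational words with more of the latter, at least two action verbs, and relational words from at least five categories) can be expressed as a simple checklist. The following Python sketch is one illustrative way to audit a candidate lexicon against them; it is not a tool used by the authors or by the cited sources. The names `Target` and `review_lexicon`, the category assignments, and every age of acquisition other than those reported above for “mommy,” “daddy,” and “go” are hypothetical placeholders and should be replaced with values drawn from normative data (e.g., Fenson et al., 1994).

```python
from dataclasses import dataclass

# Relational categories that, per Bloom and Lahey (1978), a first lexicon
# should cover at a minimum.
REQUIRED_RELATIONAL = {
    "nonexistence", "recurrence", "rejection", "action", "locative action",
}


@dataclass(frozen=True)
class Target:
    word: str
    category: str    # content category; "existence" marks a substantive word
    age_months: int  # age of acquisition, supplied by the user from normative data


def review_lexicon(targets, max_age_months=24, size_range=(10, 12),
                   min_action_verbs=2):
    """Return a list of concerns; an empty list means nothing was flagged."""
    concerns = []
    low, high = size_range
    if not low <= len(targets) <= high:
        concerns.append(f"{len(targets)} targets; expected {low}-{high}")

    late = [t.word for t in targets if t.age_months > max_age_months]
    if late:
        concerns.append("acquired after cutoff: " + ", ".join(late))

    substantive = [t for t in targets if t.category == "existence"]
    relational = [t for t in targets if t.category != "existence"]
    if not substantive:
        concerns.append("no substantive words")
    if len(relational) <= len(substantive):
        concerns.append("relational words should outnumber substantive words")

    missing = REQUIRED_RELATIONAL - {t.category for t in relational}
    if missing:
        concerns.append("missing categories: " + ", ".join(sorted(missing)))

    action_count = sum(1 for t in relational if t.category == "action")
    if action_count < min_action_verbs:
        concerns.append(
            f"{action_count} action verb(s); expected at least {min_action_verbs}"
        )
    return concerns


# Illustrative candidate lexicon. Ages for "mommy", "daddy", and "go" are the
# ones cited in the text; all other ages and all category labels are
# placeholder assumptions, not normative data.
candidate = [
    Target("mommy", "existence", 12),
    Target("daddy", "existence", 12),
    Target("ball", "existence", 14),
    Target("more", "recurrence", 16),
    Target("all done", "nonexistence", 18),
    Target("no", "rejection", 14),
    Target("eat", "action", 20),
    Target("go", "action", 19),
    Target("up", "locative action", 18),
    Target("hot", "attribution", 18),
]

for concern in review_lexicon(candidate):
    print(concern)
```

For a child with a more significant language impairment, the `size_range` argument could be lowered, in keeping with the recommendation to select fewer targets. A checklist of this kind covers only the developmental criteria; child “favorites” and family-specific vocabulary still require clinical judgment and family input.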

Making decisions

Table 1 provides the earliest acquired words in each semantic category. The bolded targets in Table 2, which are not seen in Table 1, provide additional word–sign targets for consideration. In addition to these recommended developmental targets, child “favorites” and family-specific vocabulary must be included. These are obtained through family interviews about child-preferred items (e.g., toys and foods), as well as alternative labels (e.g., people and foods), which have cultural significance to the family and the child. Child-specific targets (e.g., Elmo) are included because they name objects or events that are individually motivating. Including culturally-guided vocabulary (e.g., “ee-mah” for “mommy”) fosters positive rapport and respect (Robertson, 2007).

Taking spoken lexical development, substantive–relational representation, and semantic variety into account, Table 3 provides a sample first lexicon. These targets are adapted from Lederer (2002, 2011). Suggested KS descriptions are provided. The signs are derived from ASL and Baby Signs®. Users should modify as needed.

Table 3. Sample First Word–Sign Lexicon With Sign Instructions

STRATEGIES TO FACILITATE EARLY WORD–SIGN TARGETS

Many strategies that are effective in facilitating early spoken words can be expanded to include word–sign targets. Evidence-based practices for these shared objectives include the following: (a) focused stimulation (Ellis Weismer & Robertson, 2006; Ellis Weismer & Murray-Branch, 1989; Girolametto et al., 1996; Lederer, 2002; Wolfe & Heilmann, 2010); (b) enhanced milieu teaching (EMT; Hancock & Kaiser, 2006; Wright et al., 2013); and (c) embedded learning opportunities (ELOs; Horn & Banerjee, 2009; Lederer, 2013; Noh, Allen, & Squires, 2009). In addition, evidence-based strategies for facilitating sign language also must be considered (Seal, 2010). Regardless of the teaching strategy being used, parents and professionals should always pair the spoken word with the KS in short, grammatically correct phrases or sentences (Bredin-Oja & Fey, 2013). The child’s sign alone should be accepted fully with the assumption that it will fade once the spoken word emerges (Iverson & Goldin-Meadow, 2005).

Focused stimulation

Focused stimulation is a language intervention approach in which a small pool of target words is preselected and each is modeled five to 10 times before another target is modeled (Ellis Weismer & Murray-Branch, 1989; Girolametto et al., 1996; Lederer, 2002; Wolfe & Heilmann, 2010). Repeating a limited set of targets is supported by information processing theories, which suggest minimizing demands on the processing system (Ellis Weismer, 2000; Just & Carpenter, 1992). The target is presented in short but natural phrases/sentences to help build the concept linguistically. Other modes of representation to build the concept, such as pictures, signs, or demonstrations, are also used. In focused stimulation, the KS is repeated each time the target is spoken. No verbal or signed production is expected or overtly elicited from the child in the classic form of focused stimulation. Exposure alone has been shown to be sufficient to facilitate learning, using both parents (Girolametto et al., 1996) and professionals (Ellis Weismer & Robertson, 2006; Wolfe & Heilmann, 2010) as intervention agents. A study by Lederer (2001) demonstrated that parents and professionals collaborating in the use of focused stimulation were effective in facilitating vocabulary development. Table 4 provides a sample focused stimulation dialog for facilitating the word–sign target “eat.”

Table 4. Sample Implementation for Three Treatment Strategies to Facilitate the Word–Sign Target “Eat”

Enhanced milieu teaching

Enhanced milieu teaching is a group of language facilitation strategies combining environmental arrangement to stimulate a child’s initiation, responsive interactions, and milieu teaching. Examples of environmental arrangement include placing desired objects out of reach, providing small portions of preferred foods, giving objects/activities that require assistance (e.g., bubbles with the top sealed very tightly), or doing something silly (e.g., trying to pour juice with the cap still in place). Responsive interaction strategies include “following the child’s lead, responding to the child’s verbal and nonverbal initiations, providing meaningful semantic feedback, expanding the child’s utterances” both semantically and syntactically (Hancock & Kaiser, 2006, p. 209). These strategies are designed to engage the child and scaffold language. Milieu teaching strategies include but are not limited to asking questions, providing fill-ins, offering choices, and modeling word–sign pairs in increasingly more directive styles (Hancock & Kaiser, 2006).

Enhanced milieu teaching’s theoretical basis comes from both behaviorist (Hart & Rogers-Warren, 1978) and social interactionist theories (e.g., transactional; Ellis Weismer, 2000; Just & Carpenter, 1992). Enhanced milieu teaching uses operant conditioning (i.e., antecedent, behavior, consequence; Skinner, 1957) in prearranged but natural contexts (Hart & Rogers-Warren, 1978). The antecedent can be either nonverbal or verbal.

Both parents and professionals have been shown to implement EMT effectively (Hancock & Kaiser, 2006). Similar to focused stimulation, collaborative use of EMT between interventionists and parents has been shown to produce the greatest impact on vocabulary expansion (Kaiser & Roberts, 2013). See Table 4 for a sample EMT interaction to facilitate the word–sign “eat.”

Embedded learning opportunities

Although focused stimulation and EMT have been shown to help children generalize newly acquired vocabulary, they cannot address the issue of generalization alone. To help children both acquire and generalize new vocabulary, they need to be exposed to words and signs across activities and settings. This is made possible through systematic ELOs (Horn & Banerjee, 2009; Lederer, 2013; Noh et al., 2009). To plan for ELOs, professionals and families must work together to identify opportunities across the child’s day in which the intended targets can be facilitated. Parents are made partners in the decision-making process for selecting targets and identifying multiple opportunities to facilitate these targets. See Table 4 for ELO opportunities to facilitate the word–sign target “eat.”

Sign language strategies

In addition to traditional language facilitation strategies, recommended practices in teaching signs should be considered. Many of these practices are adapted from those used by parents of young children who are deaf and learning ASL. Strategies include establishing joint attention, such as by tapping a child who does not respond to his or her name (Clibbens, Powell, & Atkinson, 2002; Waxman & Spencer, 1997), and keeping the sign in front of the child for the duration of the spoken word or phrase (Iverson, Longobardi, Spampinato, & Caselli, 2006; Seal, 2010). In addition, Seal (2010) suggests sitting behind children for hand-over-hand facilitation to help with perspective but also signing face-to-face so that children can see facial expressions and mouth movements. Seal (2010) also encourages both parents and professionals to use “motherese,” as parents of children developing language typically do; that is, to present signs slowly, exaggerate their size, extend their duration, and increase their frequency.

SUMMARY

Regarding recommended practices in implementing a word–sign intervention, this article extends the work of previous guidelines and specific word–sign recommendations (Acredolo & Goodwyn, 2002; Acredolo & Goodwyn, 2009; CELL, 2010a, 2010b, 2010c, 2010d; Seal, 2010). This article more systematically considers the roles of spoken language development, as well as language and sign facilitation strategies, in choosing and facilitating early word–sign targets. In addition to pragmatic context considerations embraced by reviewed researchers, early spoken lexical research must be consulted in terms of acquisition of specific words within and across a variety of semantic categories. This process will ensure creation of a diverse early lexicon necessary for communication in the present and the ultimate transition to syntax.

For children with language delays, combining signs with spoken words to facilitate spoken language has strong developmental and theoretical support. Empirical support is promising, but more controlled studies are needed. Specifically, researchers must study larger numbers of participants and employ between-group designs, ideally using randomization of participants. In addition, the late talker population has received little attention with respect to word–sign interventions. Because research suggests that these children are the mildest of those with language delays and may even “catch up” without intervention (Paul & Norbury, 2012), it is important to ascertain whether a KS intervention program could speed up the process even further than language therapy without signs. Finally, given research that supports the use of parents as language facilitators (Girolametto et al., 1996; Hancock & Kaiser, 2006), an investigation of whether a parent-implemented home program using KS is effective is warranted.

REFERENCES

Abrahamsen A. A., Cavallo M. M., McCluer J. A. (1985). Is the sign advantage a robust phenomenon? From gesture to language in two modalities. Merrill-Palmer Quarterly, 31, 177–209.

Acredolo L., Goodwyn S. (1988). Symbolic gesturing in normal infants. Child Development, 59, 450–466.

Acredolo L., Goodwyn S. (2002). My first baby signs. New York, NY: Harper Festival.

Acredolo L., Goodwyn S. (2009). Baby signs: How to talk with your baby before your baby can talk (3rd ed.). New York, NY: McGraw-Hill.

Acredolo L. P., Goodwyn S. W., Horobin K., Emmons Y. (1999). The signs and sounds of early language development. In Balter L., Tamis-LeMonda C. (Eds.), Child psychology: A handbook of contemporary issues (pp. 116–139). New York, NY: Psychology Press.

Anderson D., Reilly J. (2002). The MacArthur communicative development inventory: Normative data for American sign language. Journal of Deaf Studies and Deaf Education, 7(2), 83–119.

Bates E., Benigni I., Bretherton I., Camaioni L., Volterra V. (1979). The emergence of symbols; cognition and communication in infancy. New York, NY: Academic Press.

Baumann Leech E. R., Cress C. J. (2011). Indirect facilitation of speech in a late talking child by prompted production of picture symbols or signs. Augmentative and Alternative Communication, 27(1), 40–52.

Benedict H. (1979). Early lexical development: Comprehension and production. Journal of Child Language, 6, 183–200.

Bloom L., Lahey M. (1978). Language development and language disorders. New York, NY: Wiley.

Bredin-Oja S. L., Fey M. E. (2013). Children’s responses to telegraphic and grammatically complete prompts to imitate. American Journal of Speech-Language Pathology. Retrieved June 18, 2014, from http://ajslp.asha.org/cgi/content/abstract/1058-0360_2013_12-0155v1

Capone N. C., McGregor K. (2004). Gesture development: A review for clinical and research practices. Journal of Speech, Language, and Hearing Research, 47, 173–186.

Center for Early Literacy Learning. (2010a). Infant gestures. CELL practices. Asheville, NC: Orelena Hawks Puckett Institute. Retrieved June 18, 2014, from www.EarlyLiteracyLearning.org

Center for Early Literacy Learning. (2010b). Joint attention activities. CELL practices. Asheville, NC: Orelena Hawks Puckett Institute. Retrieved June 18, 2014, from www.EarlyLiteracyLearning.org

Center for Early Literacy Learning. (2010c). Sign language activities. CELL practices. Asheville, NC: Orelena Hawks Puckett Institute. Retrieved June 18, 2014, from www.EarlyLiteracyLearning.org

Center for Early Literacy Learning. (2010d). Infant sign language dictionary. CELL practices. Asheville, NC: Orelena Hawks Puckett Institute. Retrieved June 18, 2014, from www.EarlyLiteracyLearning.org

Centers for Disease Control and Prevention. (2012, March 1). National Center on Birth Defects and Developmental Disabilities. Retrieved June 18, 2014, from http://www.cdc.gov/ncbddd/hearingloss/parentsguide/building/natural-gestures.html

Clibbens J., Powell G. G., Atkinson E. (2002). Strategies for achieving joint attention when signing to children with Down’s syndrome. International Journal of Language & Communication Disorders, 37, 309–323.

Crais E. R., Watson L. R., Baranek G. T. (2009). Use of gesture development in profiling children’s prelinguistic communication skills. American Journal of Speech-Language Pathology, 18, 95–108.

Daniels M. (1996). Seeing language: The effect over time of sign language on vocabulary development in early childhood education. Child Study Journal, 26(3), 193–209.

Dunst C. J., Meter D., Hamby D. W. (2011). Influences of sign and oral language interventions on the speech and oral language production of young children with disabilities. CELLpractices. Asheville, NC: Orelena Hawks Puckett Institute. Retrieved June 18, 2014, from www.EarlyLiteracyLearning.org

Ellis Weismer S. (2000). Language intervention for children with developmental language delay. In Bishop D., Leonard L. (Eds.), Speech and language impairments: From theory to practice (pp. 157–176). Philadelphia, PA: Psychology Press.

Ellis Weismer S., Murray-Branch J. (1989). Modeling versus modeling plus evoked production training: A comparison of two language intervention methods. The Journal of Speech and Hearing Disorders, 54, 269–281.

Ellis Weismer S., Robertson S. (2006). Focused stimulation. In McCauley R., Fey M. (Eds.), Treatment of language disorders in children (pp. 175–202). Baltimore, MD: Brookes.

Fenson L., Dale P., Reznick J., Bates E., Thal D., Pethick J. (1994). Variability in early communication development. Monographs of the Society for Research in Child Development, 59 (5, Serial No. 242).

Fristoe M., Lloyd L. (1980). Planning an initial expressive sign lexicon for persons with severe communication impairment. The Journal of Speech and Hearing Disorders, 45, 170–180.

Gathercole S., Baddeley A. (1990). Phonological memory deficits in language disordered children: Is there a causal connection? Journal of Memory and Language, 29, 336–360.

Girolametto L., Pearce P., Weitzman E. (1996). Interactive focused stimulation for toddlers with expressive vocabulary delays. The Journal of Speech and Hearing Research, 39, 1274–1283.

Goodwyn S., Acredolo L. (1993). Symbolic gesture versus word: Is there a modality advantage for onset of symbol use? Child Development, 64, 688–701.

Goodwyn S., Acredolo L., Brown C. (2000). Impact of symbolic gesturing on early language development. Journal of Nonverbal Behavior, 24, 81–103.

Hancock T. B., Kaiser A. P. (2006). Enhanced milieu teaching. In McCauley R., Fey M. (Eds.), Treatment of language disorders in children (pp. 203–236). Baltimore, MD: Brookes.

Hart B., Rogers-Warren A. (1978). A milieu approach to teaching language. In Schiefelbusch R. L. (Ed.), Language intervention strategies (pp. 193–235). Baltimore, MD: University Park Press.

Holland A. L. (1975). Language therapy for children: Some thoughts on context and content. The Journal of Speech and Hearing Disorders, 40, 514–523.

Horn E., Banerjee R. (2009). Understanding curriculum modifications and embedded learning opportunities in the context of supporting all children’s success. Language, Speech, and Hearing Services in Schools, 40, 406–415.

Iverson J. M., Goldin-Meadow S. (2005). Gesture paves the way for language development. Psychological Science, 16, 367–371.

Iverson J. M., Longobardi E., Spampinato K., Caselli M. C. (2006). Gesture and speech in maternal input to children with Down syndrome. International Journal of Language & Communication Disorders, 41, 235–251.

Iverson J. M., Thal D. J. (1998). Communicative transitions: There’s more to the hand than meets the eye. In Wetherby A. M., Warren S. F., Reichle J. (Eds.), Transitions in prelinguistic communication: Preintentional to intentional and presymbolic to symbolic (pp. 59–86). Baltimore, MD: Paul H. Brookes.

Jocelyn M. (2010). Eats. Plattsburg, NY: Tundra Books.

Just M. A., Carpenter P. A. (1992). A capacity theory of comprehension: Individual differences in working memory. Psychological Review, 99(1), 122–149.

Kaiser A. P., Roberts M. Y. (2013). Parent-implemented enhanced milieu teaching with preschool children who have intellectual disabilities. Journal of Speech, Language, and Hearing Research, 56, 295–309.

Kasari C., Freeman S., Paparella T. (2006). Joint attention and symbolic play in young children with autism: A randomized controlled intervention study. Journal of Child Psychology and Psychiatry, and Allied Disciplines, 47(6), 611–620.

Kirk E., Howlett N., Pine K. J., Fletcher B. (2013). To sign or not to sign? The impact of encouraging infants to gesture on infant language and maternal mind-mindedness. Child Development, 84(2), 574–590.

Lahey M. (1988). Language disorders and language development. New York, NY: Macmillan.

Lahey M., Bloom L. (1977). Planning a first lexicon: Which words to teach first. The Journal of Speech and Hearing Disorders, 42, 340–350.

Lahey M., Bloom L. (1994). Variability and language learning disabilities. In Wallach G. P., Butler K. G. (Eds.), Language learning disabilities in school-age children and adolescents. New York, NY: Macmillan.

Lederer S. H. (2001). Efficacy of parent-child language group intervention for late talking toddlers. Infant-Toddler Intervention, 11, 223–235.

Lederer S. H. (2002). Selecting and facilitating the first vocabulary for children with developmental language delays: A focused stimulation approach. Young Exceptional Children, 6, 10–17.

Lederer S. H. (2011). Finding and facilitating early lexical targets. Retrieved June 18, 2014, from http://www.speechpathology.com/slp-ceus/course/finding-and-facilitating-early-lexical-4189

Lederer S. H. (2013). Integrating best practices in language intervention and curriculum design to facilitate first words. Young Exceptional Children. Retrieved June 18, 2014, from http://yec.sagepub.com/content/early/2013/06/18/1096250613493190.citation

Longobardi E., Rossi-Arnaud C., Spataro P. (2012). Individual differences in the prevalence of words and gestures in the second year of life: Developmental trends in Italian children. Infant Behavior and Development, 35(4), 847–859.

Luyster R. J., Kadlec M. B., Carter A., Tager-Flusberg H. (2008). Language assessment and development in toddlers with autism spectrum disorders. Journal of Autism and Developmental Disorders, 38(8), 1426–1438.

McCune-Nicolich L. (1981). Toward symbolic functioning: Structure of early pretend games and potential parallels with language. Child Development, 52, 785–797.

McGregor W. B. (2009). Linguistics: An introduction. New York, NY: Continuum International Publishing Group.

McGuire J. M., Scott S. S., Shaw S. F. (2006). Universal design and its applications in educational environments. Remedial and Special Education, 27(3), 166–175.

McLaughlin R. (1998). Introduction to language development. San Diego, CA: Singular.

Meuris K., Maes B., DeMeyer A. M., Zink I. (2014). Manual signs in adults with intellectual disability: Influence of sign characteristics on functional sign vocabulary. Journal of Speech, Language, and Hearing Research, 57, 990–1010.

Moores D. F. (1978). Educating the deaf. Boston, MA: Houghton Mifflin.

Mundy P., Kasari C., Sigman M., Ruskin E. (1995). Nonverbal-communication and early language-acquisition in children with Down syndrome and in normally developing children. The Journal of Speech and Hearing Research, 38, 157–167.

Nelson K. (1973). Structure and strategy in learning to talk. Monographs of the Society for Research in Child Development, 38 (Serial No. 149).

Nelson L. H., White K. R., Grewe J. (2012). Evidence for website claims about the benefits of teaching sign language to infants and toddlers with normal hearing. Infant and Child Development, 21, 474–502.

Nicolosi L., Harryman E., Kresheck J. (1996). Terminology of communication disorders: Speech-language-hearing (4th ed.). Baltimore, MD: Lippincott Williams & Wilkins.

Noh J., Allen D., Squires J. (2009). Use of embedded learning opportunities within daily routines by early intervention/early childhood special education teachers. International Journal of Special Education, 24, 1–10.

Oliver B., Buckley S. (1994). The language development of children with Down syndrome: First words to two-word phrases. Down Syndrome Research and Practice, 2(2), 71–75.

Owens R. (2011). Language development: An introduction (8th ed.). Boston, MA: Allyn & Bacon.

Paul R., Norbury C. (2012). Language disorders from infancy through adolescence: Listening, speaking, reading, writing, and communicating (4th ed.). St. Louis, MO: Mosby.

Petitto L. A. (2000). On the biological foundations of human language. In Emmorey K., Lane H. (Eds.), The signs of language revisited: An anthology in honor of Ursula Bellugi and Edward Klima. Mahwah, NJ: Lawrence Erlbaum Assoc. Inc.

Rescorla L., Alley A., Christine J. (2001). Word frequencies in toddlers’ lexicons. Journal of Speech, Language, and Hearing Research, 44, 598–609.

Rice M. (1993). “Don’t talk to him, he’s weird.” A social consequences account of language and social interactions. In Kaiser A. P., Gray D. B. (Eds.), Communication and language intervention issues: Volume 2. Enhancing children’s communication: Research foundations for intervention (pp. 139–158). Baltimore, MD: Paul H. Brookes Publishers.

Robertson S. (2004). Proceedings from ASHA convention ‘07: The effects of sign on the oral vocabulary of two late talking toddlers, Indiana, PA.

Robertson S. (2007). Got EQ? Increasing cultural and clinical competence through emotional intelligence. Communication Disorders Quarterly, 29(1), 14–19.

Rowe M. L., Goldin-Meadow S. (2009). Early gesture selectively predicts later language learning. Developmental Science, 12, 182–187.

Sameroff A., Chandler M. (1975). Reproductive risk and the continuum of caretaking casualty. In Horowitz M. F. D., Hetherington E. M., Scarr-Salapatek S., Seigel G. (Eds.), Review of child development research (pp. 187–244). Chicago, IL: University Park Press. Washington, DC: American Psychological Association.

Sauer E., Levine S. C., Goldin-Meadow S. (2010). Early gesture predicts language delay in children with pre- or perinatal brain lesions. Child Development, 81(2), 528–539.

Seal B. (2010). About baby signing. The ASHA Leader. Retrieved June 18, 2014, from http://www.asha.org/publications/leader/2010/101102/about-baby-signing.htm

Skinner B. F. (1957). Verbal behavior. Cambridge, MA: Prentice Hall, Inc.

Tardif T., Liang W., Zhang Z., Fletcher P., Kaciroti N., Marchman V. A. (2008). Baby’s first 10 words. Developmental Psychology, 44, 929–938.

Thal D., Tobias S., Morrison D. (1991). Language and gesture in late talkers: A 1-year follow-up. The Journal of Speech and Hearing Research, 34(3), 604–612.

Tomasello M., Farrar M. (1986). Joint attention and early language. Child Development, 57, 1454–1463.

Watt N., Wetherby A., Shumway S. (2006). Prelinguistic predictors of language outcome at three years of age. Journal of Speech, Language, and Hearing Research, 49, 1224–1237.

Waxman R., Spencer P. (1997). What mothers do to support infant visual attention: Sensitivities to age and hearing status. Journal of Deaf Studies and Deaf Education, 2(2), 104–114.

Wolfe D., Heilmann J. (2010). Simplified and expanded input in a focused stimulation program for a child with expressive language delay (ELD). Child Language Teaching and Therapy, 26, 335–346.

Wright C. A., Kaiser A. P., Reikowsky D. I., Roberts M. Y. (2013). Effects of a naturalistic sign intervention on expressive language of toddlers with Down syndrome. Journal of Speech, Language, and Hearing Research, 56, 994–1008.

Yoder P. J., Warren S. F. (1993). Can developmentally delayed children’s language development be enhanced through prelinguistic intervention? In Kaiser A. P., Gray D. B. (Eds.), Enhancing children’s communication: Research foundations for intervention (pp. 35–62). Baltimore, MD: Brookes.

Zwaigenbaum L., Bryson S., Rogers T., Roberts W., Brian J., Szatmari P. (2005). Behavioral manifestations of autism in the first year of life. International Journal of Developmental Neuroscience, 23(2–3), 143–152.

Keywords:

children with language delays; key word signs; recommended practices; sign language

Search Tips and Pointers

Search/Filter: Enter a keyword in the filter/search box to see a list of available words with the «All» selection. Click on the page number if needed. Click on the blue link to look up the word. For best result, enter a partial word to see variations of the word.

Alphabetical letters: It’s useful for 1) a single-letter word (such as A, B, etc.) and 2) very short words (e.g. «to», «he», etc.) to narrow down the words and pages in the list.

For best results, enter a short word in the search box, then select the alphabetical letter (and page number if needed), and click on the blue link.

Don’t forget to click «All» back when you search another word with a different initial letter.

If you cannot find a word (perhaps you overlooked it) but can still see a list of links, keep looking until the links disappear! Doing so sharpens your eye and may refine your alphabetical index skills.

Add a Word: This dictionary is not exhaustive; ASL signs are constantly added to the dictionary. If you don’t find a word/sign, you can send your request (only if a single link doesn’t show in the result).

Videos: The first video may NOT be the answer you’re looking for. There are several signs for different meanings, contexts, and/or variations. Browsing all the way down to the next search box is highly recommended.

Video speed: Signing too fast in the videos? See HELP in the footer.

ASL has its own grammar and structure in sentences that works differently from English. For plurals, verb inflections, word order, etc., learn grammar in the «ASL Learn» section. For search in the dictionary, use the present-time verbs and base words. If you look for «said», look up the word «say». Likewise, if you look for an adjective word, try the noun or vice versa. E.g. The ASL signs for French and France are the same. If you look for a plural word, use a singular word.

Are you a Deaf artist, author, traveler, etc. etc.?

Some of the word entries in the ASL dictionary feature Deaf stories or anecdotes, arts, photographs, quotes, etc. to educate and to inspire, and to be preserved in Deaf/ASL history, and to expose and recognize Deaf works, talents, experiences, joys and pains, and successes.

If you’re a Deaf artist, book author, or creative and would like your work to be considered for a possible mention on this website/webapp, introduce yourself and your works. Are you a Deaf mother/father, traveler, politician, teacher, etc. etc. and have an inspirational story, anecdote, or bragging rights to share — tiny or big doesn’t matter, you’re welcome to email it. Codas are also welcome.

Hearing ASL students who have stories or anecdotes are also welcome to share them.

ASL to English reverse dictionary

Don’t know what a sign means? Search the ASL to English reverse dictionary to find out what an ASL sign means.

Vocabulary building

Start with the First 100 ASL signs for beginners, continue with the Second 100 ASL signs, and then move on to the Third 100 ASL signs.

Language Building

Learning ASL words does not equate with learning the language. Learn the language beyond sign language words.

Contextual meaning: Some ASL signs in the dictionary may not mean the same in different contexts and/or ASL sentences. A meaning of a word or phrase can change in sentences and contexts. You will see some examples in video sentences.

Grammar: Many ASL words, especially verbs, in the dictionary are a «base»; be aware that many of them can be grammatically inflected within ASL sentences. Some entries have sentence examples.

Sign production (pronunciation): A change or modification of one of the parameters of the sign, such as handshape, movement, palm orientation, location, and non-manual signals (e.g. facial expressions), can change the meaning, produce a subtle variation in meaning, or result in a mispronunciation.

Variation: Some ASL signs have regional (and generational) variations across North America. Some common variations are included as much as possible, but for specifically local variations, interact with your local community to learn their local variations.

Fingerspelling: When a language has no word for something, it may borrow a loanword from another language. In sign language, the manual alphabet is used to represent a word of the spoken/written language.

American Sign Language (ASL) is very much alive and as indefinitely constructible as any spoken language. The best way to use ASL correctly is to immerse yourself in daily language interactions and conversations with Ameslan/Deaf people (or ASLians).

Sentence building

Browse phrases and sentences to learn sign language, specifically vocabulary, grammar, and how its sentence structure works.

Sign Language Dictionary

According to online archives, this dictionary is the oldest sign language dictionary online, dating from 1997 (as DWW, which was renamed Handspeak in 2000).

Spoken word is a form of literary, and sometimes oratorical, art: an artistic performance in which texts, poems, stories, or essays are spoken rather than sung. The term is often used (especially in English-speaking countries) to refer to CD releases of this kind that are not musical.

Spoken word can take the form of literary readings, readings of poems and stories, or lectures, as well as stream of consciousness and the recently popular political and social commentary delivered by artists in an artistic or theatrical form. Spoken word artists are often poets and musicians. The voice is sometimes accompanied by music, but music is entirely optional in this genre.

As with music, spoken word is released on albums and video, and is performed live and on tour.

Among Russian-language artists in this genre, notable examples include Dmitry Gayduk, the album Pozhary by the band Sansara (Yekaterinburg), and the rap group Marselle (L'One and Nel).

Some representatives of the genre

(listed in the alphabetical order of the original Russian spellings)

- Blixa Bargeld

- William Burroughs

- Boyd Rice

- Jello Biafra

- GG Allin

- Dmitry Gayduk

- Allen Ginsberg

- Jack Kerouac

- Lydia Lunch

- Yevgeny Grishkovets

- Yegor Letov

- Jim Morrison

- Lou Reed

- Henry Rollins

- Patti Smith

- Serj Tankian

- Tom Waits

- David Tibet

- Levi The Poet

- Listener

See also

- Declamatory verse

- Melodeclamation

- Recitative

- Artistic reading

Introduction

Humans acquire language in an astonishingly diverse set of circumstances. Nearly everyone learns a spoken language from birth and a majority of individuals then follow this process by learning to read, an extension of their spoken language experience. In contrast to these two tightly-coupled modalities (written words are a visual representation of phonological forms, specific to a given language), there exists another language form that bears no inherent relationship to a spoken form: Sign language. When deaf children are raised by deaf parents and acquire sign as their native language from birth, they develop proficiency within the same time frame and in a similar manner to that of spoken language in hearing individuals (Anderson and Reilly, 2002; Mayberry and Squires, 2006). This is not surprising given that sign languages have sublexical and syntactic complexity similar to spoken languages (Emmorey, 2002; Sandler and Lillo-Martin, 2006). Neural investigations of sign languages have also shown a close correspondence between the processing of signed words in deaf (Petitto et al., 2000; MacSweeney et al., 2008; Mayberry et al., 2011; Leonard et al., 2012) and hearing native signers (MacSweeney et al., 2002, 2006) and spoken words in hearing individuals (many native signers are also fluent in a written language, although the neural basis of reading in deaf individuals is largely unknown). The predominant finding is that left anteroventral temporal, inferior prefrontal, and superior temporal cortex are the main loci of lexico-semantic processing in spoken/written (Marinkovic et al., 2003) and signed languages, as long as the language is learned early or to a high level of proficiency (Mayberry et al., 2011). However, it is unknown whether the same brain areas are used for sign language processing in hearing second language (L2) learners who are beginning to learn sign language. 
This is a key question for understanding the generalizability of L2 proficiency effects, and more broadly for understanding language mechanisms in the brain.

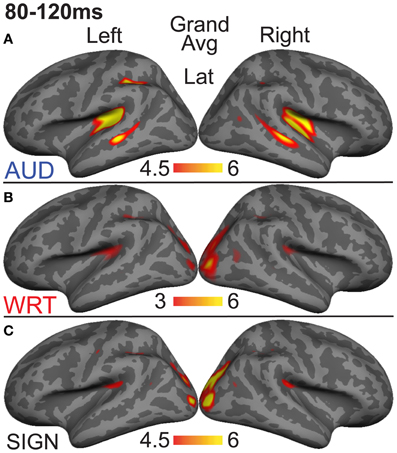

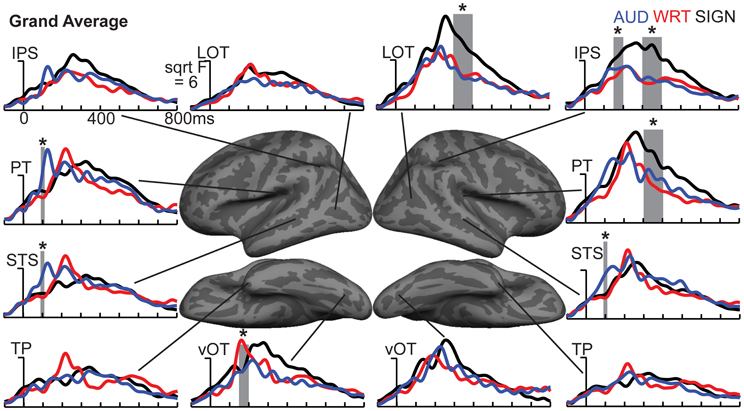

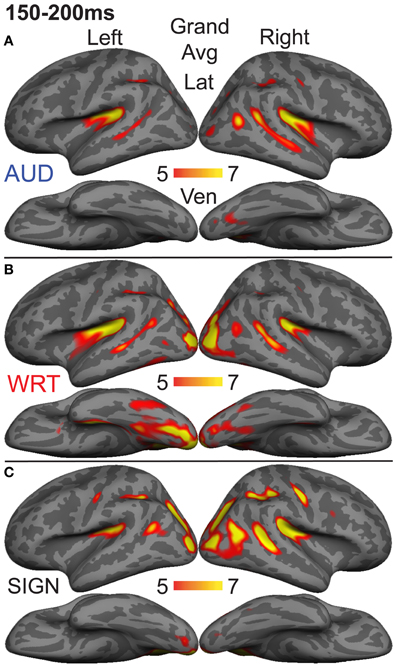

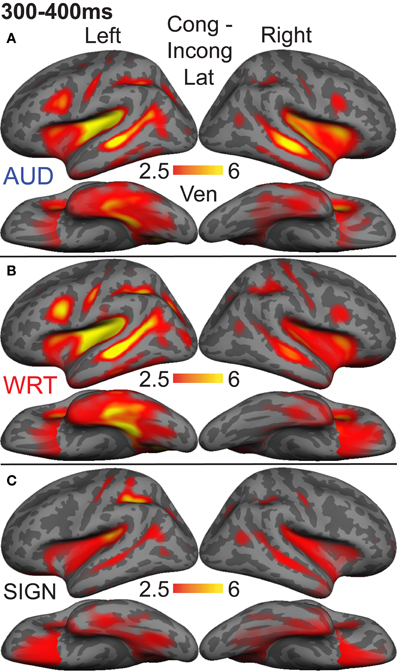

In contrast to the processing of word meaning, which occurs between ~200–400 ms after the word is seen or heard (Kutas and Federmeier, 2011), processing of the word form and sublexical structure appears to be modality-specific. Written words are encoded for their visual form primarily in left ventral occipitotemporal areas (McCandliss et al., 2003; Vinckier et al., 2007; Dehaene and Cohen, 2011; Price and Devlin, 2011). Spoken words are likewise encoded for their acoustic-phonetic and phonemic forms in left-lateralized superior temporal cortex, including the superior temporal gyrus/sulcus and planum temporale (Hickok and Poeppel, 2007; Price, 2010; DeWitt and Rauschecker, 2012; Travis et al., in press). Both of these processes occur within the first ~170 ms after the word is presented. While an analogous form encoding stage presumably exists with similar timing for sign language, no such process has been identified. The findings from monolingual users of spoken/written and signed languages to date suggest at least two primary stages of word processing: An early, modality-specific word form encoding stage (observed for spoken/written words and hypothesized for sign), followed by a longer latency response that converges on the classical left fronto-temporal language network where meaning is extracted and integrated independent of the original spoken, written, or signed form (Leonard et al., 2012).

Much of the world’s population is at least passingly familiar with more than one language, which provides a separate set of circumstances for learning and using words. Often, an L2 is acquired later with ultimately lower proficiency compared to the native language. Fluent, balanced speakers of two or more languages have little difficulty producing words in the contextually correct language, and they understand words as rapidly and efficiently as words in their native language (Duñabeitia et al., 2010). However, prior to fluent understanding, the brain appears to go through a learning process that uses the native language as a scaffold, but diverges in subtle, yet important ways from native language processing. The extent of these differences (both behaviorally and neurally) fluctuates in relation to the age at which L2 learning begins, the proficiency level at any given moment during L2 learning, the amount of time spent using each language throughout the course of the day, and possibly the modality of the newly-learned language (DeKeyser and Larson-Hall, 2005; van Heuven and Dijkstra, 2010). Thus, L2 learning provides a unique opportunity to examine the role of experience in how the brain processes words.

In agreement with many L2 speakers’ intuitive experiences, several behavioral studies using cross-language translation priming have found that proficiency and language dominance impact the extent and direction of priming (Basnight-Brown and Altarriba, 2007; Duñabeitia et al., 2010; Dimitropoulou et al., 2011). The most common finding is that priming is strongest in the dominant to non-dominant direction, although the opposite pattern has been observed (Duyck and Warlop, 2009). These results are consistent with models of bilingual lexical representations, including the Revised Hierarchical Model (Kroll and Stewart, 1994) and the Bilingual Interactive Activation + (BIA+) model (Dijkstra and van Heuven, 2002), both of which posit interactive and asymmetric connections between word (and sublexical) representations in both languages. The BIA+ model is particularly relevant here, in that it explains the proficiency-related differences as levels of activation of the integrated (i.e., shared) lexicon driven by the bottom-up input of phonological/orthographic and word-form representations.

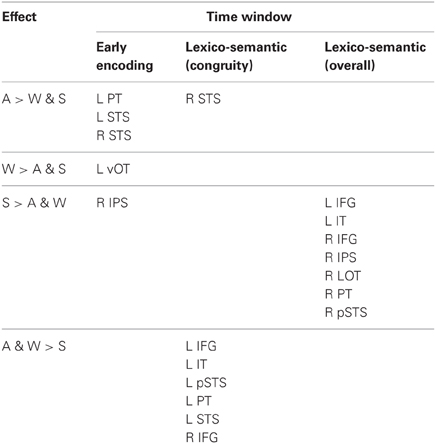

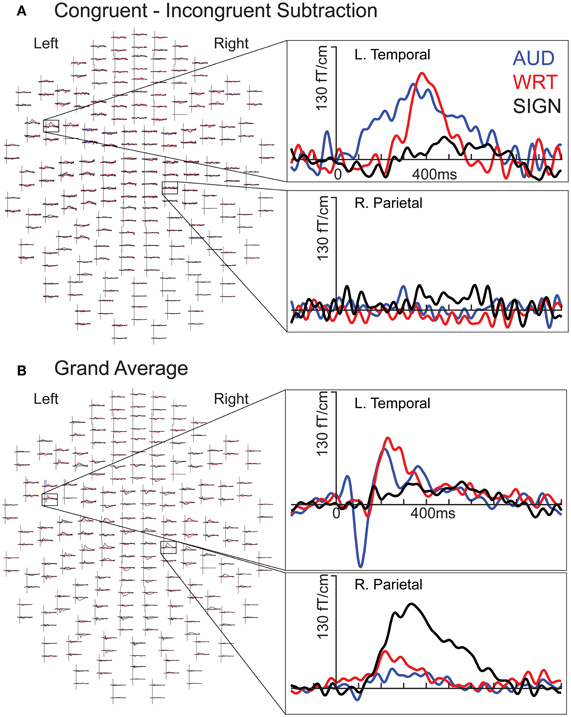

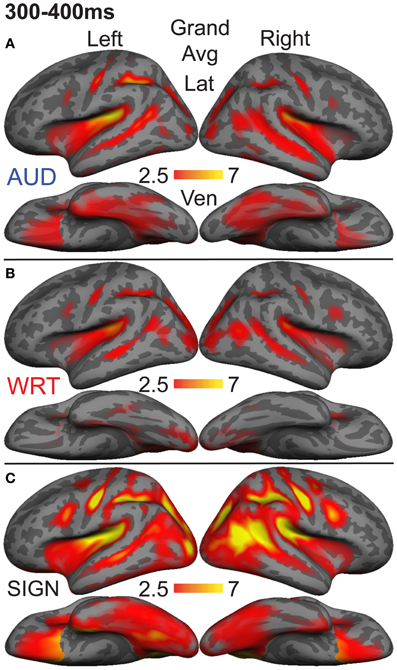

An important question is how these behavioral proficiency effects manifest in neural activity patterns: Does the brain process less proficient words differently from more familiar words? Extensive neuroimaging and neurophysiological evidence supports these models, and shows a particularly strong role for proficiency in cortical organization (van Heuven and Dijkstra, 2010). Two recent studies that measured neural activity with magnetoencephalography (MEG) constrained by individual subject anatomy obtained with magnetic resonance imaging (MRI) found that, while both languages for Spanish-English bilinguals evoked activity in the classical left hemisphere fronto-temporal network, the non-dominant language additionally recruited posterior and right hemisphere regions (Leonard et al., 2010, 2011). These areas showed significant non-dominant > dominant activity during an early stage of word encoding (between ~100–200 ms), continuing through the time period typically associated with lexico-semantic processing (~200–400 ms). Crucially, these and other studies (e.g., van Heuven and Dijkstra, 2010) showed that language proficiency was the main factor in determining the recruitment of non-classical language areas. The order in which the languages were acquired did not greatly affect the activity.

These findings are consistent with the hemodynamic imaging and electrophysiological literatures. In studies using functional MRI (fMRI), proficiency-modulated differences in activity have been observed (Abutalebi et al., 2001; Chee et al., 2001; Perani and Abutalebi, 2005), and there is evidence for greater right hemisphere activity when processing the less proficient L2 (Dehaene et al., 1997; Meschyan and Hernandez, 2006). While fMRI provides spatial resolution on the order of millimeters, the hemodynamic response unfolds over the course of several seconds, far slower than the time course of linguistic processing in the brain. Electroencephalographic methods including event-related potentials (ERPs) are useful for elucidating the timing of activity, and numerous studies have found proficiency-related differences between bilinguals’ two languages. One measure of lexico-semantic processing, the N400 (or N400m in MEG; Kutas and Federmeier, 2011), is delayed by ~40–50 ms in the L2 (Ardal et al., 1990; Weber-Fox and Neville, 1996; Hahne, 2001), and this effect is constrained by language dominance (Moreno and Kutas, 2005), in agreement with the behavioral and MEG studies discussed above. In general, greater occipito-temporal activity in the non-dominant language (particularly on the right), viewed in light of delayed processing, suggests that lower proficiency involves less efficient processing that requires recruitment of greater neural resources. While the exact neural coding mechanism is not known, this is a well-established phenomenon that applies to both non-linguistic (Carpenter et al., 1999) and high-level language tasks (St George et al., 1999) at the neuronal population level.

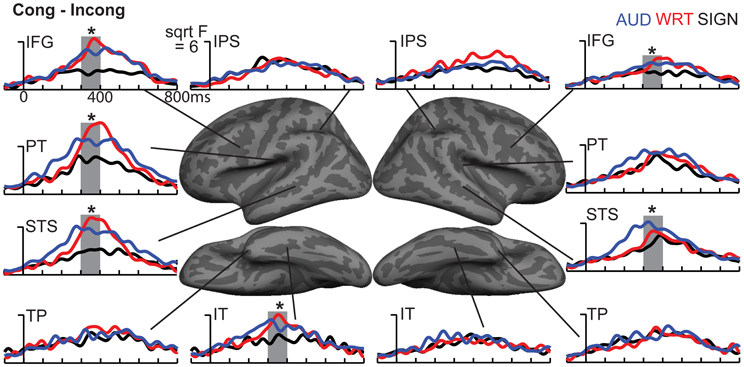

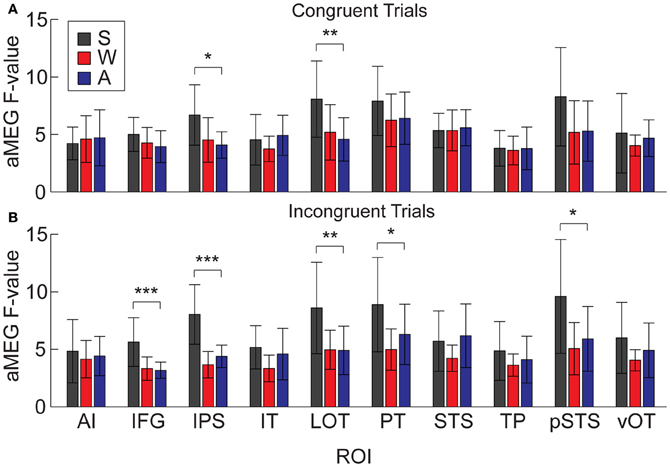

The research to date thus demonstrates two main findings: (1) In nearly all subject populations that have been examined, lexico-semantic processing is largely unaffected by language modality with respect to spoken, written, and signed language, and (2) lower proficiency involves the recruitment of a network of non-classical language regions that likewise appear to be modality-independent. In the present study, we sought to determine whether the effects of language proficiency extend to hearing individuals who are learning sign language as an L2. Although these individuals have extensive experience with a visual language form (written words), their highly limited exposure to dynamic sign language forms allows us to investigate proficiency (English vs. ASL) and modality (spoken vs. written vs. signed) effects in a single subject population. We tested a group of individuals with a unique set of circumstances as they relate to these two factors. The subjects were undergraduate students who were native English speakers who began learning American Sign Language (ASL) as an L2 in college. They had at least 40 weeks of experience, and were the top academic performers in their ASL courses and hence able to understand simple ASL signs and phrases. They were, however, unbalanced bilinguals with respect to English/ASL proficiency. Although there have been a few previous investigations of highly proficient, hearing L2 signers (Neville et al., 1997; Newman et al., 2001), no studies have investigated sign language processing in L2 learners with so little instruction. Likewise, no studies have investigated this question using methods that afford high spatiotemporal resolution to determine both the cortical sources and timing of activity during specific processing stages. Similar to our previous studies on hearing bilinguals with two spoken languages, here we combined MEG and structural MRI to examine neural activity in these subjects while they performed a semantic task in two languages and three modalities: spoken English, visual (written) English, and visual ASL.

While it is not possible to fully disentangle modality and proficiency effects within a single subject population, these factors have been systematically varied separately in numerous studies with cross-language and between-group comparisons (Marinkovic et al., 2003; Leonard et al., 2010, 2011, 2012), and are well-characterized in isolation. It is in this context that we examined both factors in this group of L2 learners. We hypothesized that a comparison between the magnitudes of MEG responses to spoken, written, and signed words would reveal a modality-specific word encoding stage between ~100–200 ms (left superior planar regions for spoken words, left ventral occipitotemporal regions for written words, and an unknown set of regions for signed words), followed by stronger responses for ASL (the lower proficiency language) in a more extended network of brain regions used to process lexico-semantic content between ~200–400 ms post-stimulus onset. These areas have previously been identified in spoken language L2 learners and include bilateral posterior visual and superior temporal areas (Leonard et al., 2010, 2011). Finding similar patterns for beginning ASL L2 learners would provide novel evidence that linguistic proficiency effects are generalizable, a particularly striking result given the vastly different sensory characteristics of spoken English and ASL. We further characterized the nature of lexico-semantic processing in this group by comparing the N400 effect across modalities, which would reveal differences in the loci of contextual integration for relatively inexperienced learners of a visual second language.

Materials and Methods

Participants

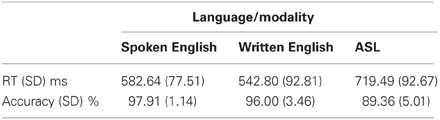

Eleven hearing native English speakers participated in this study (10 F; age range = 19.74–33.16 years, mean = 22.42). All were healthy adults with no history of neurological or psychological impairment, and had normal hearing and vision (or wore corrective lenses that were applied in the MEG). All participants had at least four academic quarters (40 weeks) of instruction in ASL, having reached the highest level of instruction at either UCSD or Mesa College. Participants were either currently enrolled in a course taught in ASL or had been enrolled in such a course in the previous month. One participant had not taken an ASL course in the previous 4 months. Participants completed a self-assessment questionnaire that asked them to rate their ASL proficiency on a scale from 1 to 10. For ASL comprehension, the average score was 7.1 ± 1.2; ASL production was 6.5 ± 1.9; fingerspelling comprehension was 6.4 ± 1.6; and fingerspelling production was 6.8 ± 1.7. Six participants reported using ASL on a daily basis at the time of enrollment in the study, while the remaining participants indicated weekly use, except for one who indicated monthly use.

Participants gave written informed consent to participate in the study, and were paid $20/h for their time. This study was approved by the Institutional Review Board at the University of California, San Diego.

Stimuli and Procedure

In the MEG, participants performed a semantic decision task that involved detecting a match in meaning between a picture and a word. For each trial, subjects saw a photograph of an object for 700 ms, followed by a word that either matched (“congruent”) or mismatched (“incongruent”) the picture in meaning. Participants were instructed to press a button when there was a match; response hand was counterbalanced across blocks within subjects. Words were presented in blocks by language/modality for spoken English, written English, and ASL. Each word appeared once in the congruent and once in the incongruent condition, and did not repeat across modalities. All words were highly imageable concrete nouns that were familiar to the participants in both languages. Since no frequency norms exist for ASL, the stimuli were selected from ASL developmental inventories (Schick, 1997; Anderson and Reilly, 2002) and picture naming data (Bates et al., 2003; Ferjan Ramirez et al., 2013b). The ASL stimuli were piloted with four other subjects who had the same type of ASL instruction to confirm that they were familiar with the words. Stimulus length was the following: Spoken English mean = 473.98 ± 53.17 ms; Written English mean = 4.21 ± 0.86 letters; ASL video clips mean = 467.92 ± 62.88 ms. Written words appeared on the screen for 1500 ms. Auditory stimuli were delivered through earphones at an average amplitude of 65 dB SPL. Written and signed word videos subtended <5 degrees of visual angle on a screen in front of the subjects. For all stimulus types, the total trial duration varied randomly between 2600 and 2800 ms (700 ms picture + 1500 ms word container + 400–600 ms inter-trial interval).
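The trial timing described above can be checked with a short sketch. The durations are taken from the text; the uniform, integer-millisecond jitter of the inter-trial interval and the function name `trial_duration_ms` are assumptions made only for illustration, since the text states only that the total duration "varied randomly."

```python
import random

PICTURE_MS = 700            # picture duration reported in the text
WORD_WINDOW_MS = 1500       # word window ("word container") reported in the text
ITI_RANGE_MS = (400, 600)   # inter-trial interval range reported in the text


def trial_duration_ms(rng: random.Random) -> int:
    """Total duration of one trial, with a uniformly jittered ITI (assumed distribution)."""
    iti = rng.randint(*ITI_RANGE_MS)  # randint is inclusive on both ends
    return PICTURE_MS + WORD_WINDOW_MS + iti


if __name__ == "__main__":
    rng = random.Random(0)
    durations = [trial_duration_ms(rng) for _ in range(1000)]
    # Every simulated trial falls inside the 2600-2800 ms range given in the text.
    print(min(durations) >= 2600 and max(durations) <= 2800)
```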

Each participant completed three blocks of stimuli in each language/modality. Each block had 100 trials (50 stimuli in each of the congruent and incongruent conditions) for a total of 150 congruent and 150 incongruent trials in each language/modality. The order of the languages/modalities was counterbalanced across participants. Prior to starting the first block in each language/modality, participants performed a practice run to ensure they understood the stimuli and task. The practice runs were repeated as necessary until subjects were confident in their performance (no subjects required more than one repetition of the practice blocks).
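As a quick check of the design arithmetic, the sketch below tallies trials per language/modality and shows one possible way to counterbalance modality order. The block and trial counts come from the text; the rotation through all six orders in `assign_orders` is a hypothetical scheme, since the actual assignment procedure is not described.

```python
from itertools import permutations

MODALITIES = ("spoken English", "written English", "ASL")
BLOCKS_PER_MODALITY = 3     # from the text
TRIALS_PER_BLOCK = 100      # from the text
CONGRUENT_PER_BLOCK = 50    # from the text


def trial_counts() -> dict:
    """Trials per language/modality, split by condition."""
    total = BLOCKS_PER_MODALITY * TRIALS_PER_BLOCK
    congruent = BLOCKS_PER_MODALITY * CONGRUENT_PER_BLOCK
    return {"total": total, "congruent": congruent, "incongruent": total - congruent}


def assign_orders(n_participants: int) -> list:
    """Cycle participants through all six modality orders (hypothetical scheme)."""
    orders = list(permutations(MODALITIES))
    return [orders[i % len(orders)] for i in range(n_participants)]


if __name__ == "__main__":
    print(trial_counts())  # {'total': 300, 'congruent': 150, 'incongruent': 150}
    for participant, order in enumerate(assign_orders(11), start=1):
        print(participant, order)
```

With 11 participants and six possible orders, a rotation of this kind cannot be perfectly balanced: five orders are used twice and one order once.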

MEG Recording

Participants sat in a magnetically shielded room (IMEDCO-AG, Switzerland) with the head in a Neuromag Vectorview helmet-shaped dewar containing 102 magnetometers and 204 gradiometers (Elekta AB, Helsinki, Finland). Data were collected at a continuous sampling rate of 1000 Hz with minimal filtering (0.1 to 200 Hz). The positions of four non-magnetic coils affixed to the subjects’ heads were digitized along with the main fiducial points such as the nose, nasion, and preauricular points for subsequent coregistration with high-resolution MR images. The average 3-dimensional Euclidean distance for head movement from the beginning of the session to the end of the session was 7.38 mm (SD = 5.67 mm).
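The head-movement measure is a three-dimensional Euclidean distance between the head position at the start and at the end of the session. A minimal sketch follows; the coordinates are invented for illustration and are not data from this study, and summarizing movement by a single reference point per session is an assumption.

```python
import math
import statistics


def head_displacement_mm(start, end) -> float:
    """3-D Euclidean distance between two (x, y, z) head positions, in mm."""
    return math.dist(start, end)


if __name__ == "__main__":
    # Hypothetical start/end positions (mm) for three participants.
    sessions = [
        ((0.0, 0.0, 0.0), (2.0, 3.0, 6.0)),
        ((1.0, 1.0, 1.0), (1.0, 4.0, 5.0)),
        ((0.0, 0.0, 0.0), (1.0, 2.0, 2.0)),
    ]
    distances = [head_displacement_mm(s, e) for s, e in sessions]
    print(statistics.mean(distances), statistics.stdev(distances))
```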

Anatomically-Constrained MEG (aMEG) Analysis

The data were analyzed using a multimodal imaging approach that constrains the MEG activity to the cortical surface as determined by high-resolution structural MRI (Dale et al., 2000). This noise-normalized linear inverse technique, known as dynamic statistical parametric mapping (dSPM), has been used extensively across a variety of paradigms, particularly language tasks that benefit from a distributed source analysis (Marinkovic et al., 2003; Leonard et al., 2010, 2011, 2012; Travis et al., in press), and has been validated by direct intracranial recordings (Halgren et al., 1994; McDonald et al., 2010).

The cortical surface was obtained in each participant with a T1-weighted structural MRI, and was reconstructed using FreeSurfer. The images were collected at the UCSD Radiology Imaging Laboratory with a 1.5T GE Signa HDx scanner using an eight-channel head coil (TR = 9.8 ms, TE = 4.1 ms, TI = 270 ms, flip angle = 8°, bandwidth = ± 15.63 kHz, FOV = 24 cm, matrix = 192 × 192, voxel size = 1.25 × 1.25 × 1.2 mm). All T1 scans were collected using online prospective motion correction (White et al., 2010). A boundary element method forward solution was derived from the inner skull boundary (Oostendorp and Van Oosterom, 1992), and the cortical surface was downsampled to ~2500 dipole locations per hemisphere (Dale et al., 1999; Fischl et al., 1999). The orientation-unconstrained MEG activity of each dipole was estimated every 4 ms, and the noise sensitivity at each location was estimated from the average pre-stimulus baseline from −190 to −20 ms for the localization of the subtraction for congruent-incongruent trials.
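The idea of scaling a source estimate by baseline noise can be illustrated with a deliberately simplified sketch. This is not the dSPM computation itself, which normalizes by noise projected through the inverse operator. It only shows a single time course being expressed in units of its own pre-stimulus variability, using the −190 to −20 ms window and 4 ms sampling step from the text. The function name and the synthetic time course are assumptions.

```python
import statistics

STEP_MS = 4                  # estimation step reported in the text
BASELINE_MS = (-190, -20)    # baseline window reported in the text


def baseline_normalize(times_ms, amplitudes):
    """Express a time course in units of its baseline standard deviation.

    Simplified stand-in for noise normalization: subtract the baseline mean
    and divide by the baseline standard deviation.
    """
    baseline = [a for t, a in zip(times_ms, amplitudes)
                if BASELINE_MS[0] <= t <= BASELINE_MS[1]]
    noise_sd = statistics.pstdev(baseline)
    if noise_sd == 0:
        raise ValueError("baseline has zero variance; cannot normalize")
    baseline_mean = statistics.fmean(baseline)
    return [(a - baseline_mean) / noise_sd for a in amplitudes]


if __name__ == "__main__":
    times = list(range(-200, 400, STEP_MS))
    # Synthetic time course: small baseline fluctuation, larger response after 200 ms.
    amps = [float((i % 5) - 2) + (10.0 if t >= 200 else 0.0)
            for i, t in enumerate(times)]
    normalized = baseline_normalize(times, amps)
    print(max(normalized))
```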

The data were inspected for bad channels (channels with excessive noise, no signal, or unexplained artifacts), which were excluded from all further analyses. Additionally, trials with large (>3000 fT for gradiometers) transients were rejected. Blink artifacts were removed using independent components analysis (Delorme and Makeig, 2004) by pairing each MEG channel with the electrooculogram (EOG) channel, and rejecting the independent component that contained the blink. On average, fewer than five trials were rejected for each condition.
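The amplitude-based trial rejection step can be sketched as follows. The 3000 fT gradiometer threshold is from the text. Treating a "transient" as a peak-to-peak excursion within any single channel is an assumption, as are the function names and the toy data.

```python
GRAD_THRESHOLD_FT = 3000.0  # gradiometer threshold reported in the text


def is_clean(trial, threshold=GRAD_THRESHOLD_FT) -> bool:
    """True if no channel's peak-to-peak amplitude exceeds the threshold.

    `trial` is a list of channels, each a list of samples in the units
    used by the text (fT).
    """
    return all(max(channel) - min(channel) <= threshold for channel in trial)


def reject_transients(trials, threshold=GRAD_THRESHOLD_FT):
    """Keep only the trials that pass the amplitude criterion."""
    return [trial for trial in trials if is_clean(trial, threshold)]


if __name__ == "__main__":
    trials = [
        [[0.0, 100.0, -100.0], [50.0, -50.0, 20.0]],   # small fluctuations: kept
        [[0.0, 4000.0, 0.0], [10.0, -10.0, 5.0]],      # large transient: rejected
    ]
    print(len(reject_transients(trials)))  # 1
```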

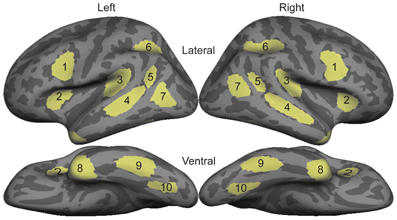

Individual participant dSPMs were constructed from the averaged data in the trial epoch for each condition using only data from the gradiometers, and then these data were combined across subjects by taking the mean activity at each vertex on the cortical surface and plotting it on an average brain. Vertices were matched across subjects by morphing the reconstructed cortical surfaces into a common sphere, optimally matching gyral-sulcal patterns and minimizing shear (Sereno et al., 1996; Fischl et al., 1999).
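Once each participant's map has been morphed to the common surface, the group map is a vertex-wise mean across participants. The sketch below assumes the maps are already aligned, so that index *i* refers to the same vertex in every participant. The function name and the toy values are illustrative only.

```python
from statistics import fmean


def group_mean(subject_maps):
    """Vertex-wise mean across participants.

    `subject_maps` is a list of per-participant lists of source amplitudes,
    all aligned to the same common-surface vertices.
    """
    n_vertices = len(subject_maps[0])
    if any(len(m) != n_vertices for m in subject_maps):
        raise ValueError("all participant maps must have the same number of vertices")
    return [fmean(values) for values in zip(*subject_maps)]


if __name__ == "__main__":
    maps = [
        [1.0, 2.0, 3.0],   # participant 1 (toy values)
        [3.0, 4.0, 5.0],   # participant 2 (toy values)
    ]
    print(group_mean(maps))  # [2.0, 3.0, 4.0]
```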