About

«You Keep Using That Word, I Do Not Think It Means What You Think It Means» is a phrase used to call out someone else’s incorrect use of a word or phrase during online conversations. It is typically iterated as an image macro series featuring the fictional character Inigo Montoya from the 1987 romantic comedy film The Princess Bride.

Origin

The quote “You keep using that word, I do not think it means what you think it means” was said by American actor Mandy Patinkin,[2] who portrayed the swordsman Inigo Montoya[3] in the 1987 romantic comedy The Princess Bride.[1] Throughout the movie, the Sicilian boss Vizzini (portrayed by Wallace Shawn[4]) repeatedly describes the unfolding events as “inconceivable.” After Vizzini attempts to cut a rope the Dread Pirate Roberts is climbing up, he yells out that it is inconceivable that the pirate did not fall. To this, Montoya replies with the quote:

The clip of the scene from The Princess Bride was uploaded by the YouTube channel Bagheadclips on February 4th, 2007. Since its upload, the video has been linked in the comments of Reddit posts since as early as January 2008[20] and had gained more than 644,000 views as of July 2012.

Spread

Though the quote had been used to refute posters on 4chan[17] as early as March 2010, the first advice animal style image macro with the quote over a still photo of Mandy Patinkin as the character was shared on the advice animals subreddit[8] on June 18th, 2011. The caption used the word “decimate” as the example of what was being misused. While the word is defined as “to kill, destroy, or remove a large percentage of”[9], it was originally used in the Roman era[10] to refer to a punishment in which 1 in 10 men were killed. The misuse of the word to mean anything more than ten percent has been blogged about on Listverse[11], personal blog World Wide Words[12] and WikiHow.[13]

More instances of the image macro have appeared on other subreddits, including /r/RonPaul[14] and /r/Anarcho_Capitalism.[15] As of July 2012, the Quickmeme[5] page had 640 submissions and the Memegenerator[6] page had more than 1,800 submissions. Additional instances are posted on Memebase,[18] Reddit[16] and Tumblr[7] with the tag “I do not think it means what you think it means.”


Epigenetics. You keep using that word. I do not think it means what you think it means.

I realize I overuse that little joke, but I can’t help thinking of it virtually every time I see advocates of so-called “complementary and alternative medicine” (CAM) or, as it’s more commonly known now, “integrative medicine” discussing epigenetics. All you have to do to view mass quantities of misinterpretation of the science of epigenetics is to type the word into the “search” box of a website like Mercola.com or NaturalNews.com, and you’ll be treated to large numbers of articles touting the latest discoveries in epigenetics and using them as “evidence” of “mind over matter” and that you can “reprogram your genes.” It all sounds very “science-y” and impressive, but is it true?

Would that it were that easy!

You might recall that last year I discussed a particularly silly article by Joe Mercola entitled How your thoughts can cause or cure cancer, in which Mercola proclaims that “your mind can create or cure disease.” If you’ve been following the hot fashions and trends in quackery, you’ll know that quacks are very good at leaping on the latest bandwagons of science and twisting them to their own ends. The worst part of this whole process is that sometimes there’s a grain of truth at the heart of what they say, but it’s so completely dressed up in exaggerations and pseudoscience that it’s really, really hard for anyone without a solid grounding in the relevant science to recognize it. Such is the case with how purveyors of “alternative health” like Joe Mercola and Mike Adams have latched on to the concept of epigenetics.

Before we can analyze how epigenetics is being used by real scientists and abused by quacks, however, it’s necessary to explain briefly what epigenetics is. To put it succinctly (I know, a difficult and rare thing for me), epigenetics is the study of heritable traits that do not depend upon the primary sequence of DNA. I happen to agree (for once) with P.Z. Myers when he laments that this definition is unsatisfactory because it is rather vague, which is perhaps why quacks have such an easy time abusing concepts in epigenetics. As P.Z. puts it, the term “epigenetics” basically “includes everything. Gene regulation, physiological adaptation, disease responses…they all fall into the catch-all of epigenetics.” Processes considered epigenetic include DNA methylation (in which the cell silences specific genes by attaching methyl groups to bases that make up the DNA sequence) and the wrapping of the primary DNA sequence around protein complexes made of proteins called histones, forming nucleosomes. Indeed, in eukaryotes the whole histone-DNA complex is known as chromatin, and the “tightness” with which DNA is wrapped into chromatin is an important mechanism by which the cell controls gene expression. This “tightness” can be controlled by a process known as histone acetylation, in which acetyl groups are tacked onto histones (or removed from them). Acetylation removes a positive charge on the histones, thereby decreasing their ability to interact with the negatively charged phosphate groups of the DNA backbone. The end result is that the condensed (more tightly packed) histone-DNA complex relaxes into a state associated with greater levels of gene transcription. (I realize that this model has been challenged, but for purposes of this discussion it’s adequate.) This process is reversed by a class of enzymes known as histone deacetylases (HDACs).

In my own field of cancer, HDAC inhibitors are a hot area of research as “targeted” therapies, although I must admit that I have a hard time figuring out how a drug that can affect the expression of hundreds of genes by altering the acetylation of their histones can be considered tightly “targeted.” But that’s just me.

I’ve only just touched upon a couple of the mechanisms of epigenetics, as discussing them all could easily push the length of this post beyond the epic lengths of even a typical post of mine, so I’ll spare you for the moment. Suffice it to say that epigenetic modifications can be viewed as mechanisms that can ensure accurate transmission of chromatin states and gene expression profiles over generations. We now recognize many epigenetic processes and mechanisms that can regulate the expression of genes, and their number seems to grow every year. It’s become a hideously complex field.

The first brand of cranks to abuse epigenetics were, not surprisingly, creationists. In epigenetics, and in the observation that some heritable traits do not directly depend on the primary DNA sequence, they saw what they thought was a “fatal flaw” in Darwin’s theory of evolution. (Never mind that Darwin didn’t even know what DNA was and that nothing in his theory specifies the mediator through which traits are passed from one generation to the next.) Some even saw epigenetics as “proof” of Lamarckian evolution; i.e., the pre-Darwinian theory that acquired traits could be passed on to offspring. The most common example used to illustrate the Lamarckian concept of evolution is the giraffe: successive generations of primordial giraffes, stretching their necks to reach higher branches to feed on, each passed on to their offspring a tendency toward a slightly longer neck, so that over time this acquired trait resulted in today’s giraffes with extremely long necks. In any case, to be fair, one can hardly blame creationists for leaping on this particular concept of epigenetics as support for a form of neo-Lamarckian evolution, as several respectable scientists argued basically the same thing, encouraging credulous journalists to label epigenetics the “death knell of Darwin” in breathless headlines. I even saw just such an article last week, which has the advantage of touting both arguments used to link epigenetics to CAM and arguments linking epigenetics to the “consternation of strict Darwinists.” (More on that later.) It’s an argument that Jerry Coyne has refuted well on more than one occasion. In brief:

Their arguments are unconvincing for a number of reasons. Epigenetic inheritance, like methylated bits of DNA, histone modifications, and the like, constitute temporary “inheritance” that may transcend one or two generations but don’t have the permanence to effect evolutionary change. (Methylated DNA, for instance, is demethylated and reset in every generation.) Further, much epigenetic change, like methylation of DNA, is really coded for in the DNA, so what we have is simply a normal alteration of the phenotype (in this case the “phenotype” is DNA) by garden variety nucleotide mutations in the DNA. There’s nothing new here—certainly no new paradigm. And when you map adaptive evolutionary change, and see where it resides in the genome, you invariably find that it rests on changes in DNA sequence, either structural-gene mutations or nucleotide changes in miRNAs or regulatory regions. I know of not a single good case where any evolutionary change was caused by non-DNA-based inheritance.

Indeed. Moreover, epigenetic changes are not very stably heritable, rarely persisting anywhere near enough generations to be a major force in evolution.

Of course, I only dwelled on evolution briefly because (1) the same sorts of arguments are being made for epigenetic modifications as a “mechanism” through which various CAM modalities “work” and (2) evolution interests me and we don’t talk about it enough in medicine. To boil it down, CAM advocates look to epigenetics as basically magic, a way that you—yes, you!—can reprogram your very own DNA (and all without Toby Alexander and the need to mess with all those messy etheric strands of DNA) and thereby heal yourself of almost anything or even render yourself basically immune to nearly every disease that plagues modern humans. Consequently, you see articles on Mercola.com and similar outlets with titles like How Your Thoughts Can Cause or Cure Cancer (through epigenetic modifications of your genome, of course, which you can supposedly control consciously!), Your Diet Could be More Important Than Your Genes, Can the theory of epigenetics be linked to Naturopathic and Alternative Medicine?, Falling for This Myth Could Give You Cancer (the “myth” being, of course, the central dogma of molecular biology, in which DNA makes RNA, which makes proteins), Epigenetics reinforces theory that positive mind states heal, Epigenetics discoveries challenge outdated medical beliefs about DNA, inheritance and gene expression, and Why Your DNA Isn’t Your Destiny. You also see videos like this interview with Bruce Lipton, one of the foremost promoters of the idea that you can do almost anything to your epigenome (and thus your health) just by thinking happy thoughts:

Can you count the number of straw men in Lipton’s description of biology? Particularly amusing is how Lipton tries to argue that the central dogma of biology was never scientifically proven, which is utter nonsense. Now, I’ve said before that I really never liked using the word “dogma” to describe a scientific concept like the central dogma of molecular biology. In fact, I’ve always hated it, because it does indeed imply that what is being described is a religious concept; so it’s no surprise that Lipton blathers on about how, back when he apparently still “believed in the old thinking,” he was actually “teaching religion.”

Of course, Lipton is a well-known crank, whose central idea seems to be a variant of The Secret, in which wanting something badly enough makes it so and “modern science has bankrupted our souls.” Basically, he questions the “Newtonian vision of the primacy of a physical, mechanical Universe”; that “genes control biology”; that evolution resulted from random genetic mutations; and that evolution is driven by the survival of the fittest. As is the case with epigenetics in evolution, there are some scientists who provide the basis for Lipton’s claims in such a way that he can be off and running into the woo-sphere with claims that start out as reasonable speculations based on the new science of epigenetics. No less a luminary than cancer biologist Robert Weinberg was, after all, quoted in an article entitled Epigenetics: How our experiences affect our offspring as saying that the evidence that epigenetics plays a major role in cancer has become “absolutely rock solid.” And so it has. If it weren’t, HDAC inhibitors wouldn’t be viewed as such a promising new class of drugs to use to treat cancer. Some, however, take a good idea a bit too far and claim that cancer is an “epigenetic disease”; it’s likely that a combination of epigenetic and genetic changes leads to cancer and that the relative contribution of each depends on the cancer. Even so, cancers virtually all have what I like to call (using my favorite scientific term, of course) “messed up genomes,” so complicated that it’s no wonder we haven’t cured cancer yet.

Is it any wonder that a couple of years ago, Der Spiegel did a ten-page feature on epigenetics? The cover of the issue in which this feature was published touted it with a nude blonde (and oh-so-Nordic) female emerging from the water with a DNA double helix-like twist of water covering up her naughty bits, with the headline proclaiming, “The victory over the genes. Smarter, healthier, happier. How we can outwit our genome.”

Then we have books like Happiness Genes: Unlock the Positive Potential Hidden in Your DNA by James D. Baird and Laurie Nadel, in which we are told, “Happiness is at your fingertips, or rather sitting in your DNA, right now! The new science of epigenetics reveals there are reserves of natural happiness within your DNA that can be controlled by you, by your emotions, beliefs and behavioral choices.”

I’m not sure how epigenetics will make you happy, but I’m sure Baird and Nadel are more than happy to explain if you buy their book. Not surprisingly, naturopaths are jumping on the bandwagon, claiming that epigenetics is at the root of how naturopathy “works”:

Generally speaking, if we want to express a gene and turn it into a protein, we would express certain DNA machinery (through histone proteins, promoters, regulators, etc) to make that happen, and vice versa to turn a gene off. So speaking from a naturopathic viewpoint, what we put into our bodies, the type of water that we drink, the way that we adapt to stress influences whether or not a certain gene is going to be turned on. For a more personal example, what I put into my body is going to influence my genetic code to promote or stop transcription and translation of the BRCA1 or BRCA2 genes, which could eventually result into cancer.

I would suggest that any woman with a BRCA mutation, as Ms. Plonski apparently has, who relies on diet to prevent the adverse effects of cancer-causing BRCA mutations is taking an enormous risk.

Whenever I see the hype over epigenetics (which, let’s face it, is not just a quack phenomenon—it’s just that the quacks take it beyond hype into magical thinking), one thing that always strikes me is that there is often a blending (or even confusion) of simple gene regulation with epigenetics. In other words, do the diet and lifestyle changes that, for example, Dean Ornish has implicated in inducing changes in gene expression profiles in prostate epithelium work through short-term gene regulatory mechanisms or through epigenetic mechanisms that persist long term? Certainly, he argues that things start happening in the short term; one of his favorite examples is a graph suggesting that a single high-fat meal transiently impairs endothelial function and decreases blood flow within hours. When you see typical arguments that “lifestyle” or “environment” can overcome genetics, the term “epigenetics” becomes such a broad, wastebasket term as to be meaningless. Basically, anything that changes gene expression is lumped into “epigenetics,” whether those changes are in fact heritable or not. For example, in this brief blog post, we are told that food can cause or cure certain cancers. The reason:

Genes tell our bodies what to do and rebuild new cells so that we can continue to live a normal life. Our bodies have a system outside of our genes that was designed to keep our bodies running well. This system looks to turn off failing genes and activate genes needed to fight diseases. This management system is called epigenetics. We obviously need food, water, and nutrients to live. These things come from our food source. If we constantly eat bad foods we will knock the management system off-key just like putting bad fuel in our cars will eventually destroy the engine.

Yes and no. Again, epigenetics, strictly defined, is about heritable changes in gene expression. What is being described here is any change in gene expression that can be induced by outside influences. They are not the same. Epigenetic changes are long-term changes that are potentially heritable, and, as I pointed out above, most epigenetic changes are not passed on to offspring, certainly not to the point that they have a detectable effect on evolution. The rest is gene regulation, which is often transient but, depending on the process, can continue for as long as the stimulus causing the change in regulation is present. As is frequently pointed out, the quickest way to get an organ to start to return to normal is to stop doing the bad things to it that were causing its dysfunction in the first place. As P.Z. Myers put it:

In part, the root of the problem here is that we’re falling into an artificial dichotomy, that there is the gene as an enumerable, distinct character that can be plucked out and mapped as a fixed sequence of bits in a computer database, and there are all these messy cellular processes that affect what the gene does in the cell, and we try too hard to categorize these as separate. It’s a lot like the nature-nurture controversy, where the real problem is that biology doesn’t fall into these simple conceptual pigeonholes and we strain too hard to distinguish the indistinguishable. Grok the whole, people! You are the product of genes and cellular and environmental interactions.

Moreover, the straw man frequently (and gleefully) torn down by CAM advocates that doctors believe that genes are “destiny” notwithstanding, in reality, as far as I’ve been able to ascertain, doctors have been trying to subvert people’s “genetic destiny” for a long time, perhaps even longer than we have known that there is even such a thing as genes. For example, women with BRCA mutations that produce an alarmingly high lifetime risk of developing breast and/or ovarian cancer are often advised to take Tamoxifen to lower that risk, to undergo frequent screening to try to catch such cancers early, or even to undergo bilateral mastectomies and oophorectomies to remove as much of the tissue at risk of developing cancer as possible. People with a strong family history of heart disease are regularly advised to exercise and switch to a diet that lowers their risk of progression of atherosclerosis. People with type II diabetes similarly are advised to exercise and lose weight, which can in many cases decrease the level of glucose intolerance from which they suffer, sometimes to the point where they no longer fit the diagnostic criteria for type II diabetes. None of this is new or radical. What is new is the realization of the possibility that some of the mechanisms behind these changes involve epigenetic changes. And this is all fine.

Understanding epigenetics is likely to help us to understand certain long-term chronic diseases, but it is not, as you will hear from CAM advocates, some sort of magical panacea that will overcome our genetic predispositions. Nor will it be likely to allow us to “pass the health benefits of your healthy lifestyle…to your children through epigenomes’ reprogramming your DNA,” as is frequently claimed, as much as one might want to do that. Moreover, the science of epigenetics is in its infancy. There are still some serious methodological problems to overcome when doing epidemiological research of the effects of epigenetic changes, as this presentation by Dr. Jonathan Mill explains, an explanation that he echoed in a commentary entitled The seven plagues of epigenetic epidemiology. The worst of the “plagues” include that we do not know what to look for or where; the technology is very imperfect; sample sizes are way too small; whatever we do it won’t be enough to fully account for epigenetic differences between tissues and cells; and we might be trying to find small effect sizes using sub-optimal methods. Note the small effect sizes. Proponents of epigenetics as the heart of all “efficacy” of CAM tend to exaggerate the potential benefits. Again, remember how they claim that epigenetics can completely overcome genetics. There’s really no good evidence that I’m aware of that it can.

In the end, what is most concerning about the hype of epigenetics is how it feeds into what I’ve referred to (ironically, of course) as the “central dogma” of CAM: namely, The Secret. I fear that epigenetics is being grafted onto such mysticism so that not only can “positive thoughts” heal, but they can also induce permanent (or at least long-lasting) changes in our genome through epigenetics. Besides the obvious problem that thinking does not usually make it so (believing otherwise is a dangerous delusion for patients), the embrace of epigenetics as giving us “total control” over our health also produces the flip side of The Secret, which is that if one is ill it is his fault for not doing the right things or thinking happy enough thoughts.

Erick Erickson:

Democrats keep talking about our refusal to compromise. They don’t realize our compromise is defunding Obamacare. We actually want to repeal it.

I guess the next stage is to seek compromise on what ‘compromise’ means. Conservatives want ‘compromise’ to mean: we get almost everything. You get nothing. Erickson’s planning to threaten the dictionary people, maybe? (‘Dat’s a nice language you got ‘der. Be a shame if somethin’ wuz teh happin to it.’)

A kidnapper who asks for $1 million or he shoots the kid is seeking compromise, so long as he would prefer $10 million?

UPDATE: Here’s another use of the new word from Grover Norquist:

The administration asking us to raise taxes is not an offer; that’s not a compromise. That’s just losing. I’m in favor of compromise. When we did the $2.5 trillion spending restraint in the BCA, we wanted $6 trillion. I considered myself very compromised. Overly reasonable.

‘Compromise’ means conservatives getting a lot for nothing, just not absolutely everything you might ever want, for nothing. But bottom line: if you have to give to get, that’s just losing, not compromise.

A diorama of a moon landing graces the magazine’s cover, and the article’s caption reads “We live in an age when all manner of scientific knowledge — from climate change to vaccinations — faces furious opposition. Some even have doubts about the moon landing,” erroneously implying that all scientific “doubt” springs from the same source and is of equal value or validity (or lack thereof), and that “doubting” the validity of science with regard to vaccines and genetically modified organisms is equivalent to “doubting” climate science, evolution, and moon landings.

As a geeky physics major who happens to be quite proud of my father’s contribution to the moon landings (he was part of the team responsible for the lunar module’s antenna and communication system), yet has the temerity to question the science and wisdom behind the current vaccine schedule and widespread dissemination of genetically modified organisms, I finally find myself irritated enough by this journalistic trend to rebut the popular conception of those who question vaccine science as “anti-science.”

I find Achenbach’s piece fascinating – well-written and persuasive, yet built upon logical inconsistencies and false assumptions that, taken together, make a better case against his thesis than for it – at least with regard to vaccine science. He quotes geophysicist Marcia McNutt, editor of Science magazine, as saying, “Science is not a body of facts. Science is a method for deciding whether what we choose to believe has a basis in the laws of nature or not.” And he himself claims, “Scientific results are always provisional, susceptible to being overturned by some future experiment or observation. Scientists rarely proclaim an absolute truth or absolute certainty. Uncertainty is inevitable at the frontiers of knowledge.”

Having studied science pretty intensively at Williams College, including a major in physics and concentrations in astronomy and chemistry (there were semesters it felt like I lived in the science quad), I fully concur with these statements. Sitting through lecture after lecture laying out elaborate scientific theories that were once accepted and used to further scientific knowledge and then discarded when it became clear they did not account for all the available data, it would have been hard not to be aware that scientific results are provisional and subject to change when a more complete picture is developed. And indeed, information gathered by the Hubble Space Telescope and continuing work conducted by people like Stephen Hawking have changed the landscape in astronomy and physics rather dramatically since I graduated in 1983.

The episodic nature of scientific progress

The old paradigm is never given up lightly or easily in the face of new evidence. In fact, an established paradigm is generally not abandoned until overwhelming evidence accumulates that the paradigm cannot account for all the observed phenomena in scientific research and an alternative credible hypothesis has been developed. Wikipedia summarizes it well,

As a paradigm is stretched to its limits, anomalies — failures of the current paradigm to take into account observed phenomena — accumulate. Their significance is judged by the practitioners of the discipline. . . But no matter how great or numerous the anomalies that persist, Kuhn observes, the practicing scientists will not lose faith in the established paradigm until a credible alternative is available; to lose faith in the solvability of the problems would in effect mean ceasing to be a scientist.

When The Structure of Scientific Revolutions was first published in 1962, it garnered some controversy, according to Wikipedia, because of “Kuhn’s insistence that a paradigm shift was a mélange of sociology, enthusiasm and scientific promise, but not a logically determinate procedure.” Since then, though, Kuhn’s theory has become largely accepted, and his book has come to be considered “one of The Hundred Most Influential Books Since the Second World War,” according to the Times Literary Supplement, and is taught in college history of science courses all over the country.

It would seem likely that a journalist writing a high-profile article on science for National Geographic would not only be aware of Kuhn’s work, but would also understand it well. Achenbach seems to understand the evolution of science as inherently provisional and subject to change when new information comes in, but then undercuts that understanding with the claim, “The media would also have you believe that science is full of shocking discoveries made by lone geniuses. Not so. The (boring) truth is that it usually advances incrementally, through the steady accretion of data and insights gathered by many people over many years.”

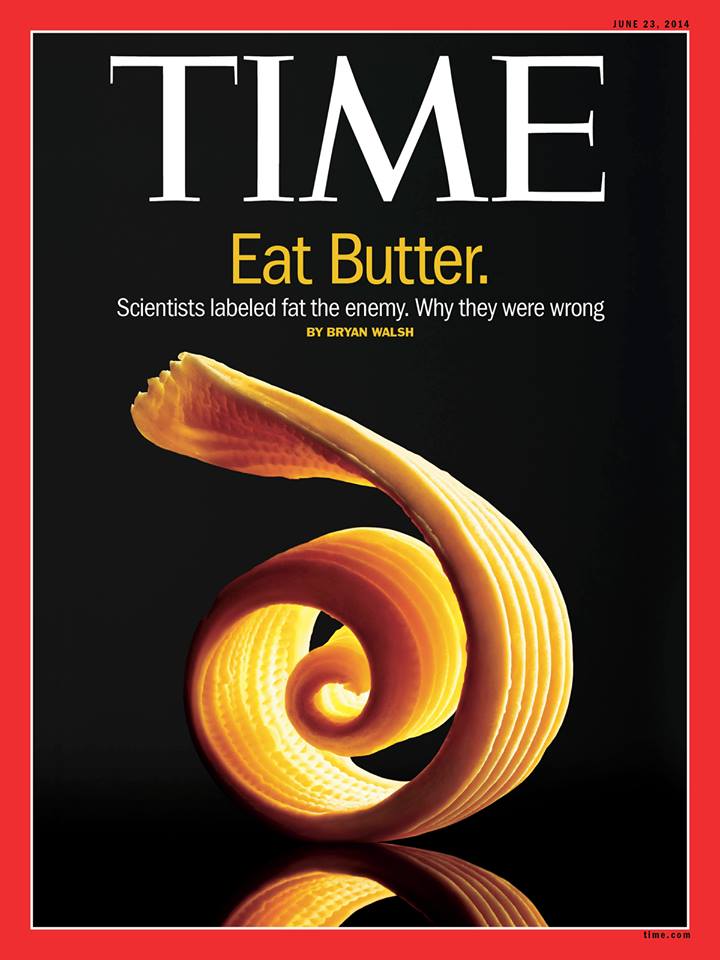

This statement is patently false. First off, the mainstream media tends to downplay, if not completely ignore, any contributions of “lone geniuses” to science, as exemplified by the 2014 Time magazine cover story proclaiming “Eat Butter! Scientists labeled fat the enemy. Why they were wrong.” Suddenly, everyone was reporting that consumption of fat, in general, and saturated fat, in particular, is not the cause of high serum cholesterol levels and is not in fact bad for you. “Lone geniuses” (also known as “quacks” in the parlance of the old paradigm) understood and accepted these facts 25-30 years ago and have been operating under a completely different paradigm ever since, but it wasn’t until 2014 that a tipping point occurred in mainstream medical circles and the mainstream media finally took note.

Secondly, “the steady accretion of data and insights gathered by many people over many years,” what Kuhn calls “normal science,” cannot by its nature bring about the biggest advancements in science – the scientific revolutions. Also from Wikipedia,

In any community of scientists, Kuhn states, there are some individuals who are bolder than most. These scientists, judging that a crisis exists, embark on what Thomas Kuhn calls revolutionary science, exploring alternatives to long-held, obvious-seeming assumptions. Occasionally this generates a rival to the established framework of thought. The new candidate paradigm will appear to be accompanied by numerous anomalies, partly because it is still so new and incomplete. The majority of the scientific community will oppose any conceptual change (emphasis mine), and, Kuhn emphasizes, so they should. To fulfill its potential, a scientific community needs to contain both individuals who are bold and individuals who are conservative. There are many examples in the history of science in which confidence in the established frame of thought was eventually vindicated. It is almost impossible to predict whether the anomalies in a candidate for a new paradigm will eventually be resolved. Those scientists who possess an exceptional ability to recognize a theory’s potential will be the first whose preference is likely to shift in favour of the challenging paradigm (emphasis mine). There typically follows a period in which there are adherents of both paradigms. In time, if the challenging paradigm is solidified and unified, it will replace the old paradigm, and a paradigm shift will have occurred.

That paradigm shift will usher in a scientific revolution resulting in an explosion of new ideas and new directions for research. Achenbach recognizes this tension between the bolder and more conservative scientists to a degree:

Even for scientists, the scientific method is a hard discipline. Like the rest of us, they’re vulnerable to what they call confirmation bias — the tendency to look for and see only evidence that confirms what they already believe. But unlike the rest of us, they submit their ideas to formal peer review before publishing them.

Scientific consensus relies heavily on the flawed process of peer review

Achenbach acknowledges that scientists are human beings and, as such, are subject to the very same biases and stresses to which other human beings are subject, but implies that those biases are somehow held in check by the magical process of peer review. What Achenbach fails to mention, however, is the fact that the process of peer review is hardly a “scientific” discipline itself. In fact, peer review is so imperfect in practice that Richard Smith, former editor of the prestigious British Medical Journal, described it this way in his 2006 article, “Peer Review: A Flawed Process at the Heart of Science and Journals”:

My point is that peer review is impossible to define in operational terms (an operational definition is one whereby if 50 of us looked at the same process we could all agree most of the time whether or not it was peer review). Peer review is thus like poetry, love, or justice. But it is something to do with a grant application or a paper being scrutinized by a third party — who is neither the author nor the person making a judgement (sic) on whether a grant should be given or a paper published. But who is a peer? Somebody doing exactly the same kind of research (in which case he or she is probably a direct competitor)? Somebody in the same discipline? Somebody who is an expert on methodology? And what is review? Somebody saying ‘The paper looks all right to me‘, which is sadly what peer review sometimes seems to be. Or somebody pouring (sic) all over the paper, asking for raw data, repeating analyses, checking all the references, and making detailed suggestions for improvement? Such a review is vanishingly rare.

What is clear is that the forms of peer review are protean. Probably the systems of every journal and every grant giving body are different in at least some detail; and some systems are very different. There may even be some journals using the following classic system. The editor looks at the title of the paper and sends it to two friends whom the editor thinks know something about the subject. If both advise publication the editor sends it to the printers. If both advise against publication the editor rejects the paper. If the reviewers disagree the editor sends it to a third reviewer and does whatever he or she advises. This pastiche—which is not far from systems I have seen used—is little better than tossing a coin, because the level of agreement between reviewers on whether a paper should be published is little better than you’d expect by chance.

That is why Robbie Fox, the great 20th century editor of the Lancet, who was no admirer of peer review, wondered whether anybody would notice if he were to swap the piles marked ‘publish’ and ‘reject’. He also joked that the Lancet had a system of throwing a pile of papers down the stairs and publishing those that reached the bottom. When I was editor of the BMJ I was challenged by two of the cleverest researchers in Britain to publish an issue of the journal comprised only of papers that had failed peer review and see if anybody noticed. I wrote back ‘How do you know I haven’t already done it?’

In the introduction to their book Peerless Science: Peer Review and U.S. Science Policy, Daryl E. Chubin and Edward J. Hackett lament:

Peer review is not a popular subject. Scientists, federal program managers, journal editors, academic administrators and even our social science colleagues, become uneasy when it is discussed. This occurs because the study of peer review challenges the current state of affairs. Most prefer not to question the way things are done – even if at times those ways appear illogical, unfair and detrimental to the collective life of science and the prospects of one’s own career. Instead, it is more comfortable to defer to tradition, place faith in collective wisdom and hope that all shall be well.

In short, exactly what Achenbach does.

Problems with scientific research run much deeper than peer review

Dr. Marcia Angell, former editor-in-chief of the also-prestigious New England Journal of Medicine, makes the case that the problems with scientific research, especially with respect to the pharmaceutical industry, go much deeper than peer review. In May 2000 she wrote an NEJM editorial titled “Is Academic Medicine for Sale?” about the increasingly blurry lines between academic institutions (and their research) and the pharmaceutical companies that pay the bills. The editorial was prompted by a research article whose authors’ conflict-of-interest disclosures were longer than the article itself. In 2004, Angell wrote The Truth About the Drug Companies: How They Deceive Us and What to Do About It, a book that Janet Maslin of The New York Times described as “a scorching indictment of drug companies and their research and business practices . . . tough, persuasive and troubling.”

Marcia Angell, former Editor in Chief of the New England Journal of Medicine

What determines who will be among the bold scientists who usher in a paradigm shift and who will be the more conservative scientists opposing it? I submit that it is those very scientists who can extrapolate from their own experiences and observations (i.e., “anecdotes”) and synthesize them with their understanding of the scientific research to date who possess the “exceptional ability to recognize a theory’s potential.” In other words, it is those who can step back from the “puzzle-solving” of “normal science” far enough to see the bigger picture. Pediatric neurologist and Harvard researcher Dr. Martha Herbert describes this eloquently in her introduction to Robert F. Kennedy Jr.’s book, Thimerosal: Let the Science Speak:

What is an error? Put simply, it is a mismatch between our predictions and the outcomes. Put in systems terms, an “error” is an action that looks like a success when viewed through a narrow lens, but whose disruptive additional effects become apparent when we zoom out.

Why do predictions fail to anticipate major complications? Ironically the exquisite precision of our science may itself promote error generation. This is because precision is usually achieved by ignoring context and all the variation outside of our narrow focus, even though biological systems in particular are intrinsically variable and complex rather than uniform and simple. In fact our brains utilize this subtlety and context to make important distinctions, but our scientific methods mostly do not. The problems that come back to bite us then come from details we didn’t consider.

Once an error is entrenched it can be hard to change course. The initial investment in the error, plus fear of the likely expense (both in terms of time and money) of correcting the error, as well as the threat of damage to the reputations of those involved — these all serve as deterrents to shifting course. Patterns of avoidance then emerge that interfere with free and unbiased conduct of scientific investigations and public discourse. But if the error is not corrected, its negative consequences will continue to accumulate. When change eventually becomes unavoidable, it will be a bigger, more complicated, and expensive problem to correct – with further delay making things still worse.

Personally, I think a large part of the brewing paradigm shift in medical science (which I expect to predominate in the near future) comes from the very tension Herbert describes between the view of bodies (biological systems) as machines that respond predictably and reliably to a particular force or intervention and the view of bodies as “intrinsically variable and complex.” Virtually every area of biological research has identified outliers to every kind of treatment or intervention that cannot be explained in terms of the old paradigm, which argues for a more individualized approach to medicine that takes the whole person into account.

For instance, it is clear that most overweight people will lose weight on a high-protein, very low-carbohydrate diet such as the one Dr. Robert C. Atkins promoted or the Paleo Diet that is currently all the rage. What is not clear, however, is how an individual will feel on that diet, and that feeling will largely determine the overall outcome of the diet strategy. Some will feel fantastic, while others will feel like the cat’s dinner after it has been vomited up on the carpet. Logically, one can see that it doesn’t make sense to make both types of people conform to one type of diet. “One size” does not fit all.

There are those who believe that all we need is more biological information about a particular system in order to reliably predict outcomes, but there is a good deal of evidence to show that this may never be the case, as biological systems appear to be as susceptible to subtle energetic differences as they are to gross chemical and physical interventions. The old paradigm of the body as a predictable machine has no mechanism to account for the effectiveness of acupuncture in easing chronic pain or the difference that group prayer can make in the length of a hospital stay. The biological sciences may be giving way to their own version of quantum theory, just as Newtonian physics had to.

Intuition as a characteristic of scientists who perform “revolutionary science”

The ability to “utilize this subtlety and context to make important distinctions” that Herbert describes is what separates the scientific revolutionaries from those who will continue defending an error long past the point at which it has been well and truly proven to be an error. It is an ability that Albert Einstein possessed to a larger degree than most. Einstein felt that “the true sign of intelligence is not knowledge but imagination” and that “all great achievements of science must start from intuitive knowledge. I believe in intuition and inspiration . . . . At times I feel certain I am right while not knowing the reason.” Interestingly, another well-known scientist whom many consider to have been “revolutionary” placed a great deal of emphasis on intuition. Jonas Salk, the creator of the first inactivated polio vaccine to be licensed, even wrote a book called Anatomy of Reality: Merging of Intuition and Reason.

Albert Einstein

Gavin de Becker, private security expert and author of the 1997 best-selling book The Gift of Fear, upended the prevailing idea that the eruption of violent behavior is inherently unpredictable by explaining how we can and do predict it with the use of intuition. Like Einstein and Salk, de Becker, far from denigrating intuition as an irrational response based on “naïve beliefs,” considers it a valid form of knowledge that does not involve the conscious mind. He teaches people to recognize, honor, and rely upon their intuition in order to keep themselves and their loved ones safe. In fact, if we could not do so and had to rely solely upon our conscious minds to protect us from danger, chances are very good that human beings would no longer walk the earth.

Achenbach argues that our intuition will lead us astray, for instance by encouraging men to get a prostate-specific antigen (PSA) test even though it is no longer recommended, because studies have shown that, at the population level, PSA screening doesn’t increase the overall number of positive outcomes. But there are people whose first indication of prostate cancer was a high PSA result, and those people’s lives might have been saved by that test. Who is to say that the person requesting the test will not be among them? In other words, intuition is not necessarily wrong just because it encourages you to do something that is statistically out of the norm or has yet to be “proven” by science.

With regard to proof that a hazardous waste dump is causing a high rate of cancer, Achenbach says:

To be confident there’s a causal connection between the [hazardous waste] dump and the [local cluster of] cancers, you need statistical analysis showing that there are many more cancers than would be expected randomly, evidence that the victims were exposed to chemicals from the dump, and evidence that the chemicals really can cause cancer.

That’s true, of course, but surely it’s not all that you would – or should – take into account when deciding whether or not to build your house next to the hazardous waste dump. And if you had to wait for the corporation doing the dumping to produce that statistical analysis, something that could presumably be expected to run counter to its own interests, it seems likely that the stronger the correlation between the dumping and the cancers, the longer you would be waiting for that analysis to appear.

Love Canal, site of infamous toxic waste dump

Consider the case of a child growing up in a house with chain smokers in the early 1900s, listening to them hack up phlegm after every cigarette and upon rising every morning. The smokers die youngish, at least one of them riddled with lung cancer that makes every breath a torture. The child has an inkling that the cigarette smoking, the coughing, and the subsequent lung cancer are all related. What would be the best choice for that child to make: to assume that the lung cancer and the smoking were not related until science proved 50 years later that smoking does indeed cause lung cancer, or to listen to that initial intuition and steer clear of cigarettes in the first place? Obviously, in retrospect, the latter would have been the far better choice, as avoiding the hazardous waste dump may be in Achenbach’s example.

Some of you may know that I was on Larry Wilmore’s The Nightly Show in February of this year because it is well known that I do not vaccinate my children. That show also featured Dr. Holly Phillips, a medical contributor for CBS News. What most of you won’t know is that Dr. Phillips also spent her undergraduate years at Williams College, my alma mater, but unlike me, she didn’t major in science; she majored in English literature. (Coincidentally, I was also on the WABC-TV show Up Close with Diana Williams that month with Dr. Richard Besser, who was also at Williams while I was there. He was an economics major.)

I’m quite certain that Dr. Phillips learned the body of facts taught in medical school as well as anyone, but I think it’s very likely she’s deeply entrenched in the old paradigm of the body as predictable machine. I was taken aback and, frankly, horrified to hear her say, “I think it’s one of those things where there’s a mother’s intuition where you don’t necessarily want to put a needle in your [healthy] child, but I think this is one of those times when you have to let science trump intuition.”

Did she actually tell people to ignore their intuition – that ability to utilize subtlety and context to make distinctions extolled by Einstein, Salk and de Becker – in favor of someone else’s interpretation of “scientific consensus”? To quote those fabulously creative geniuses Phineas and Ferb, “Yes. Yes, she did.” I regret not finding an opportunity that night to point out how dangerous Dr. Phillips’s advice was.

“Doubt” of scientific consensus is due to adherence to the “tribe”

Achenbach’s thesis ultimately fails because he relies on the theory of Yale University’s Dan Kahan to explain all science “doubt”:

Americans fall into two basic camps, Kahan says. Those with a more “egalitarian” and “communitarian” mind-set are generally suspicious of industry and apt to think it’s up to something dangerous that calls for government regulation; they’re likely to see the risks of climate change. In contrast, people with a “hierarchical” and “individualistic” mind-set respect leaders of industry and don’t like government interfering in their affairs; they’re apt to reject warnings about climate change, because they know what accepting them could lead to—some kind of tax or regulation to limit emissions.

In the U.S., climate change somehow has become a litmus test that identifies you as belonging to one or the other of these two antagonistic tribes. When we argue about it, Kahan says, we’re actually arguing about who we are, what our crowd is. We’re thinking, People like us believe this. People like that do not believe this. For a hierarchical individualist, Kahan says, it’s not irrational to reject established climate science: Accepting it wouldn’t change the world, but it might get him thrown out of his tribe.

This is crystallized by another quote, from Marcia McNutt:

We’re all in high school. We’ve never left high school. People still have a need to fit in, and that need to fit in is so strong that local values and local opinions are always trumping science. And they will continue to trump science, especially when there is no clear downside to ignoring science.

The problem with this viewpoint is that it is inherently contradictory. For one thing, it pretends that only science that fits in with the prevailing viewpoint is “correct” science or worthy of note, when it is apparent from Kuhn’s work on scientific revolutions that that is not the case. When, then, is it “okay” to “ignore” science? Achenbach makes the case that it is okay to ignore any science that does not fit the “scientific consensus,” or the prevailing paradigm. For instance, he says that “vaccines really do save lives” without ever mentioning the fact that, while that may be true, they maim and kill some people as well, and he says that “people who believe vaccines cause autism . . . are undermining ‘herd immunity’ to such diseases as whooping cough and measles” when science has made it clear that, at least for now, they are doing no such thing. In addition, he pretends that the only science supporting a link between vaccines and autism is the infamous 1998 case series of 12 children written by Andrew Wakefield and twelve of his eminent colleagues, when there are in fact a large number of studies that do so.

Corporate interests may slant scientific findings

Achenbach uses an interesting argument to encourage “ignoring” climate science that opposes the prevailing paradigm: “It’s very clear, however, that organizations funded in part by the fossil fuel industry have deliberately tried to undermine the public’s understanding of the scientific consensus by promoting a few skeptics.” It may surprise you to know that I tend to agree with Achenbach on this point. I don’t have an opinion on climate science because I haven’t read it. What I do have is a healthy distrust of “consensus” – given what I know about paradigm shifts – coupled with an even stronger distrust of science that is conducted by an industry that stands to gain from the outcome of that science. I also have an intuition that leads me to believe that we have been heaping abuse upon the planet and that recent bizarre weather patterns (tornadoes in Brooklyn?) are among the many signs that it will not be long before the Earth can no longer sustain that level of abuse.

But, illogically, Achenbach doesn’t show that same mistrust of science performed or financed by an industry that stands to gain when the industry itself controls the prevailing paradigm. The vast majority of vaccine science, for instance, is conducted by the vaccine manufacturers themselves or by the Centers for Disease Control and Prevention, which is largely staffed by people with tremendous conflicts of interest. Vaccines are one of the fastest-growing sectors in a hugely profitable industry. In fact, according to Marcia Angell, for over two decades the pharmaceutical industry has been far and away the most profitable in the United States. This year, total vaccine sales, which the World Health Organization says tripled from 2000 to 2013, are expected to reach $40 billion, and the WHO predicts that they will rise to $100 billion by 2025 (by the way, is anyone else a little creeped out by all the economic data on vaccine profitability in that WHO report?) – none of which could possibly be finding its way to the people staffing our government agencies or making decisions on which vaccines to “recommend,” could it?

Julie Gerberding, who left her job as the director of the CDC to take over the vaccine division at Merck soon after overseeing most of the research that supposedly “exonerates” vaccines in general, and Merck’s MMR in particular, of any role in rising autism rates was just an anomaly, right? Unfortunately, no. No, she wasn’t. Robert F. Kennedy Jr.’s description of the CDC as a “cesspool of corruption” may be strongly stated, but it is largely borne out by recent studies. And the situation is eerily similar when it comes to safety studies on genetically modified organisms conducted by Monsanto and rubber-stamped by the FDA.

Scientists willing to break with the “tribe” are more likely to be truth tellers

Practically in the same breath that Achenbach tells us we should ignore any science that does not fit the prevailing paradigm, he claims that the scientists who are most dedicated to truth – and therefore presumably the most trustworthy – are the ones who are willing to break with their “tribe” in order to accurately report what they have observed or discovered, despite the risks of censure, loss of prestige, or even loss of career. But what is a scientist’s “tribe” made up of, if not other scientists – the very ones so invested in the prevailing paradigm of “scientific consensus”? It sounds to me as if those truth-telling scientists might even be accurately described as “lone geniuses.” But didn’t Achenbach just imply that we should ignore those people willing to say that the Emperor is in fact naked, despite the inherent risk in doing so, in favor of the “tribe” of “scientific consensus”? Does he truly not see the inherent irony of this position?

Andrew Wakefield with TMR’s B.K.

No one has sacrificed more of his position in the “tribe” by speaking his truth than Andrew Wakefield. Prior to publication of the infamous 1998 case study, he was a well-respected gastroenterologist with a prestigious position at the Royal Free Hospital in London; in other words, a deeply entrenched member of the “tribe” of physicians. As a result of standing by his work and that of his colleagues, he has since had his medical license revoked and almost never sees his name in print without the word “discredited” next to it, yet he still performs and supports work that undercuts the prevailing paradigm because, as he puts it, “this issue is far too important.” By Achenbach’s own argument, Andrew Wakefield is inherently more credible than all the scientists clinging to the “vaccines are (all) safe and effective” “consensus” position. Frankly, I’m inclined to agree.

Achenbach uses this fear of betraying the tribe to explain why people do not put their faith in the prevailing paradigm. That may explain certain aspects of scientific doubt in some quarters, but it certainly does not explain why most of the people who question the safety or wisdom of vaccines or genetically modified organisms, and the science that purports to establish it, do so. Time and time again I hear about people losing friends, loved ones, and even jobs when they question the current vaccine schedule – and heaven forbid they should express active opposition to it! It can be a very lonely position to take indeed. So lonely, in fact, that many people express profound relief at finally finding like-minded people online. (If you peruse the numerous vaccine blog posts on this website, you will see many examples of this in the comments.) In effect, having given up their place in the tribe, they must seek out a new tribe, a tribe of truth tellers. Evangelical Christians and traditional Catholics in particular, the very people one might think of as most likely to be “hierarchical individualists,” may have the loneliest road of all, as many of their periodicals and organizations have come out strongly in support of the vaccine program.

Dr. Bernardine Healy, former head of the National Institutes of Health

Every doctor who publicly raises perfectly rational questions about vaccine reactions in certain subpopulations is vilified by the press and by an increasingly vitriolic group of self-identified “science” bloggers and their followers, even though many of these doctors start out as vocal supporters of, and believers in, the basic premise of vaccines. In other words, any doctor who even dares to question our current vaccine schedule risks his or her membership in the “tribe.” And yet, surprisingly, quite a few have the courage to do so anyway, including Dr. Bernardine Healy, former head of the National Institutes of Health (which makes her a de facto “tribal chief”), who in a 2008 interview with former CBS correspondent Sharyl Attkisson disclosed that “when she began researching autism and vaccines she found credible published, peer-reviewed scientific studies that support the idea of an association. That seemed to counter what many of her colleagues had been saying for years. She dug a little deeper and was surprised to find that the government has not embarked upon some of the most basic research that could help answer the question of a link.”

In order to get at truth, scientific or otherwise, one needs to be able to take a step back and see the whole picture, integrating one’s own observations and experience with those of others, including the subtleties and the context. In addition, when it comes to science, one needs to examine all the evidence presented unflinchingly and critically and be willing to break with the prevailing paradigm if the evidence demands it.

In 1987 a “holistic” doctor put me on a diet to lower my cholesterol. Counter to the prevailing paradigm at the time, she put me on a hypoglycemia diet that was very low in carbohydrates but quite high in saturated fat and cholesterol. Under the prevailing paradigm, that would have been a recipe for disaster, if anything increasing my serum cholesterol, since the proportion of saturated fat in my diet was certainly higher than it had been previously – which is exactly what I feared would happen. So what did happen? My cholesterol dropped from 280 to 140 in a month. Fluke? Could be . . . except that the doctor showed zero surprise at my result, which implied that, while I may have been shocked, she herself had seen many like it before.

Since that time I have read study after study confirming the truth of that doctor’s understanding: serum cholesterol levels can be adequately controlled by diet, but not by a low-cholesterol diet. Also since that time, I have bored the heck out of my older brothers, at least three of whom have had high cholesterol, with lectures about how the low-cholesterol diets their doctors had prescribed were useless and the statins were unnecessary and maybe even dangerous, given that cholesterol performs a protective anti-inflammatory function in the body. (If you bring down the cholesterol level without bringing down the underlying inflammation, you are setting someone up for disaster.) I briefly hoped they would take note when Dr. Barry Sears’s book Enter the Zone became a bestseller in 1995, but they had to find out the hard way. And now that the mainstream has finally caught up with what the “alternative health” folks have known for more than 25 years, it’s a little hard to resist an “I told you so.”

The same is true every time yet another study comes out that supports and confirms the alternative health (a.k.a. “new paradigm”) view of autism as a medical condition with its roots in gut dysbiosis and toxicity, exacerbated by impaired detox pathways, rather than a psychiatric condition.

Technology does not equal science

Dr. Alice Dreger

The biggest problem with Achenbach’s piece, and every other piece that laments the “rejection of science,” is that it conflates rejection of technology with rejection of science. As Alice Dreger, a professor of clinical medical humanities and bioethics at Northwestern University’s Feinberg School of Medicine, beautifully illustrates in an article in The Atlantic titled “The Most Scientific Birth Is Often the Least Technological Birth,” technology does not equal science. “In fact,” says Dreger, “if you look at scientific studies of birth, you find over and over again that many technological interventions increase risk to the mother and child rather than decreasing it.”

Dreger quotes Bernard Ewigman, the chair of family medicine at the University of Chicago and NorthShore University Health System and author of a major U.S. study of over 15,000 pregnancies, who says that our culture has “a real fascination with technology, and we also have a strong desire to deny death. And the technological aspects of medicine really market well to that kind of culture.” Dreger herself adds, “Whereas a low-interventionist approach to medical care – no matter how scientific – does not.” Indeed. What many scientists forget is that just because something “cool” can be done doesn’t mean it should be done.

Which brings me to the “Precautionary Principle.” There is no single accepted wording of the Precautionary Principle, perhaps because it is something that is largely informed by intuition. According to the Science & Environmental Health Network, “All statements of the Precautionary Principle contain a version of this formula: When the health of humans and the environment is at stake, it may not be necessary to wait for scientific certainty to take protective action.” In other words, if there is any uncertainty about the risk of harm, it is better to err on the side of caution. That seems not only intuitively obvious to me, but logical as well. Science sometimes takes quite a long time to prove something is harmful. It certainly takes long enough that many drugs have done tremendous damage before they were withdrawn from the market: Thalidomide, Vioxx, DES, Darvon, and Dexatrim are just a few of the myriad examples.

What the Precautionary Principle isn’t is anti-science. In fact, it supports one of Achenbach’s goals – making efforts to avoid disastrous climate change. It would also support making damned sure that genetically modified organisms can’t do systemic damage to either people or the environment before licensing them for general use (with follow-up studies verifying that is indeed the case after licensing) and testing the vaccines we use against true placebos and in the combinations in which we actually use them before “recommending” them for every newborn in the country, as well as studying the health outcomes of the vaccinated vs. unvaccinated populations after licensing. It would also support investigation into the commonalities of children with regressive autism whose parents claim that their children were harmed by their vaccines, in order to identify possible subpopulations that may be more susceptible to vaccine injury – like, oh, say . . . children who exhibit genes that can cause impairment in detoxification pathways, for instance. Wait a second . . . What’s going on here? It sounds like I’m recommending science!

Josef Mengele

Science should serve humanity over corporations

When it comes down to it, science is a tool. And like any tool, it can be used ethically or unethically, morally or immorally, humanely or inhumanely, in pursuit of ends ranging from the sublime to unquestionably evil. Is it anti-science to deplore the experiments conducted by Josef Mengele on concentration camp captives? Is it anti-science to condemn the ethics of the “Tuskegee Study of Untreated Syphilis in the Negro Male”? Was J. Robert Oppenheimer, known as the “father of the atomic bomb,” anti-science when he said, “ . . . the physicists felt a peculiarly intimate responsibility for suggesting, for supporting, and in the end, in large measure, for achieving the realization of atomic weapons . . . . the physicists have known sin, and this is a knowledge which they cannot lose”? Was Hans Albrecht Bethe, Director of the Theoretical Division of Los Alamos during the Manhattan Project, anti-science when 50 years later he called upon fellow scientists to refuse to make atomic weapons?

Science can serve corporate interests or it can serve the interests of humanity. There will certainly be places where the two will intersect, but there will always be places where they will be in opposition and science cannot serve them both. Is it “anti-science” to insist that, where the interests of the two are opposed, science must serve humanity over corporations? Certainly not. A far better description would be “pro-humanity.” We are not even close to being able to say that science is currently putting humanity’s interests first, however, and while it may be prudent for individual scientists to stick with the tribe in order to further their careers, it cannot be prudent for us as a human collective to let corporate interests govern what that tribe thinks and does.

Until the day we can say that we truly use science in service to humanity first, not only is it prudent for us to question, analyze, and even scrutinize “scientific consensus” from a humanist viewpoint, it is also incumbent upon us to do so.

~ Professor


I’ve discussed on many occasions over the years how antivaccine activists really, really don’t want to be known as “antivaccine.” Indeed, when they are called “antivaccine” (usually quite correctly, given their words and deeds), many of them will clutch their pearls in indignation, rear up in self-righteous anger, and retort that they are “not antivaccine” but rather “pro-vaccine safety,” “pro-health freedom,” “parental rights,” or some other antivaccine dog whistle that sounds superficially reasonable. In the meantime, they continue to do their best to demonize vaccines as dangerous, “toxin”-laden, immune-system destroying, brain-damaging causes of autism, autoimmune diseases, asthma, diabetes, and any number of other health issues. To them, vaccines are “disease matter” that will sap and contaminate their children’s precious bodily DNA. After all, as I like to say, that’s what it means to be “antivaccine.” Well, that combined with the arrogance of ignorance.

If there’s one thing that rivals how much antivaccinationists detest being called «antivaccine,» it’s being called «antiscience.» To deny that they are antiscience, they will frequently invoke ridiculous analogies, such as claiming that being for better car safety does not make one «anti-car.» It is here that the Dunning-Kruger effect comes to the fore: antivaccine activists think that, because of their education and their self-taught Google University courses on vaccines, they understand as much as or more than actual scientists do, and that their pronouncements on vaccines should therefore be taken seriously. If there are two antivaccine blogs that epitomize the Dunning-Kruger effect, they are Age of Autism and, of course, the most hilariously inappropriately named given her history, but nonetheless it’s worth taking a look at her latest post, Anti-science: “You Keep Using That Word. I Do Not Think It Means What You Think It Means.”

Actually, it does. And if The Professor is going to spend nearly 7,000 words riffing on a title derived from a famous quote from The Princess Bride, my retort can only be: «Science. You keep using that word. I do not think it means what you think it means.»

Not surprisingly, «The Professor» feels compelled to begin by asserting her alleged science bona fides. Describing herself as a «geeky physics major» who nonetheless has the temerity to «question» vaccine science, she declares herself «irritated enough by this journalistic trend to rebut to the popular conception of those who question vaccine science as ‘anti-science.'» What appears to have particularly irritated her and sparked this screed is a rather good article by Joel Achenbach from the March issue of National Geographic entitled Why Do Many Reasonable People Doubt Science? «The Professor» is particularly incensed by a passage in which Achenbach makes the case that people who «doubt science» are, as she puts it, «driven by emotion.» Of course, that’s not exactly the argument that Achenbach makes. His argument, as you will see if you read his article, is considerably more nuanced. Rather, Achenbach makes observations that have been discussed here time and time again, such as how the scientific method sometimes leads to findings that are «less than self-evident, often mind-blowing, and sometimes hard to swallow,» citing Galileo and Charles Darwin as two prominent examples of this phenomenon, as well as modern resistance to the climate science concluding that human beings are significantly changing the climate through our production of CO2. Not surprisingly, Darwin’s theory is still doubted by many today, based not on evidence but on its conflict with deeply held fundamentalist religious beliefs.

Achenbach also makes this point:

Even when we intellectually accept these precepts of science, we subconsciously cling to our intuitions—what researchers call our naive beliefs. A recent study by Andrew Shtulman of Occidental College showed that even students with an advanced science education had a hitch in their mental gait when asked to affirm or deny that humans are descended from sea animals or that Earth goes around the sun. Both truths are counterintuitive. The students, even those who correctly marked “true,” were slower to answer those questions than questions about whether humans are descended from tree-dwelling creatures (also true but easier to grasp) or whether the moon goes around the Earth (also true but intuitive). Shtulman’s research indicates that as we become scientifically literate, we repress our naive beliefs but never eliminate them entirely. They lurk in our brains, chirping at us as we try to make sense of the world.

Most of us do that by relying on personal experience and anecdotes, on stories rather than statistics. We might get a prostate-specific antigen test, even though it’s no longer generally recommended, because it caught a close friend’s cancer—and we pay less attention to statistical evidence, painstakingly compiled through multiple studies, showing that the test rarely saves lives but triggers many unnecessary surgeries. Or we hear about a cluster of cancer cases in a town with a hazardous waste dump, and we assume pollution caused the cancers. Yet just because two things happened together doesn’t mean one caused the other, and just because events are clustered doesn’t mean they’re not still random.

We have trouble digesting randomness; our brains crave pattern and meaning. Science warns us, however, that we can deceive ourselves. To be confident there’s a causal connection between the dump and the cancers, you need statistical analysis showing that there are many more cancers than would be expected randomly, evidence that the victims were exposed to chemicals from the dump, and evidence that the chemicals really can cause cancer.

This, of course, is an excellent description of antivaccinationists, except that they no longer accept the precepts of science with respect to vaccines but instead cling to their «naive beliefs.» They rely on personal experience and anecdotes rather than statistics on the question of whether vaccines cause autism, and no amount of science, seemingly, can persuade them otherwise. The Professor herself is a perfect example of this. She believes herself to be «pro-science,» and so she is, when science tells her what she wants to believe. When it does not, as in the case of vaccines and autism, she rejects it, extending her disdain from vaccine science to all of science. Indeed, her entire post is a long diatribe, even more than Orac-ian in length, built mainly on three arguments: the «science was wrong before» trope; the «peer review is shit» trope; and the «pharma shill» gambit.

Achenbach notes that even for scientists the scientific method is a «hard discipline.» And so it is, because, after all, scientists are no less human than any other human being. The only difference between us and the rest of humanity is that we are trained and have made a conscious effort to understand the issues discussed above. We know how easy it is to confuse correlation with causation, to exhibit confirmation bias wherein we tend to remember things that support our world view and forget things that do not, and to let wishful thinking bias us. Even knowing all of that, not infrequently we fall prey to the same errors in thinking that any other human being does. Nowhere is this more true than when we wander outside of our own field, as the inaptly named Professor does when she leaves the world of physics and discusses vaccines or when, for example, a climate scientist discusses vaccines.

The hilarity begins when The Professor cites philosopher Thomas Kuhn’s The Structure of Scientific Revolutions. Even more hilariously, The Professor quotes extensively from the Wikipedia entry on Kuhn’s book rather than from Kuhn himself. Kuhn’s main idea was that science doesn’t progress by the gradual accretion of knowledge but tends to be episodic in nature. According to Kuhn, observations challenging the existing «paradigm» in a field gradually accumulate until the paradigm itself can no longer stand, at which point a new paradigm is formed that completely replaces the old. Kuhn’s view of science is a fascinating topic in and of itself, and I haven’t read that book in many years. However, most scientists tend to dismiss many of Kuhn’s views for several reasons, in particular because Kuhn tends to vastly exaggerate the concept of «paradigm shift.» Particularly galling is his concept of «normal science,» in which, during the interregnum between scientific revolutions, he portrays scientists doing «normal science» (science that is not paradigm-changing) as essentially dotting the i’s and crossing the t’s of the previous revolution. (It’s a very dismissive attitude toward what the vast majority of scientists do.) Indeed, Kuhn’s characterization of the history of science has been referred to as a caricature, and I tend to agree. Certainly, at the very least he exaggerates how completely new paradigms replace the old, when in reality, when a new theory supplants an old one, the new must completely encompass the old and explain everything the old did, plus the new observations that the old theory cannot. As Cormac O’Rafferty puts it, «The new can only replace the old if it explains all the old did, plus a whole lot more (because as new evidence is uncovered, old evidence also remains).» The example of this idea that I like to cite is Albert Einstein’s theory of relativity, which did not replace Newtonian physics but rather expanded on it. Indeed, relativity reduces to Newtonian physics at velocities that are such a small fraction of the speed of light that the relativistic contributions are close enough to zero to be reasonably approximated as zero, at which point they drop out of the equations.

None of these nuances are for The Professor. She misuses and abuses Kuhn to construct a «science was wrong before» argument that is truly risible:

It would seem likely that a journalist writing a high-profile article on science for National Geographic would not only be aware of Kuhn’s work, but would also understand it well. Achenbach seems to understand the evolution of science as inherently provisional and subject to change when new information comes in, but then undercuts that understanding with the claim, “The media would also have you believe that science is full of shocking discoveries made by lone geniuses. Not so. The (boring) truth is that it usually advances incrementally, through the steady accretion of data and insights gathered by many people over many years.”

This statement is patently false. First off, the mainstream media tends to downplay, if not completely ignore, any contributions of “lone geniuses” to science, as exemplified by the 2014 Time magazine cover story proclaiming “Eat Butter! Scientists labeled fat the enemy. Why they were wrong.” Suddenly, everyone was reporting that consumption of fat, in general, and saturated fat, in particular, is not the cause of high serum cholesterol levels and is not in fact bad for you. “Lone geniuses” (also known as “quacks” in the parlance of the old paradigm) understood and accepted these facts 25-30 years ago and have been operating under a completely different paradigm ever since, but it wasn’t until 2014 that a tipping point occurred in mainstream medical circles and the mainstream media finally took note.

Um. No. This change, which The Professor arguably vastly overstates, came about through exactly that accretion of knowledge. Moreover, as much as I castigate David Katz for his nonsense on other issues (such as his embrace of homeopathy «for the good of the patient»), he did get it (mostly) right when he criticized this ridiculous Time magazine article for its many shortcomings and exaggerations, not the least of which were its premise of a «war on dietary fat» (there never was one) and its seeming attitude that it’s now OK to eat all the fat you want. In any case, this is not the «paradigm shift» that The Professor seems to think it is. Rather, it was a correction, which is what science does. The process is often messy, but science does correct itself with time.

Next up, of course, is an attack on peer review, something without which no antivaccine article is complete. Of course, criticism of the peer review process is something many scientists engage in. As I like to paraphrase Winston Churchill quoting a saying about democracy, «It has been said that peer review is the worst way to decide which science is published and funded except all the others that have been tried.» Yes, the peer review process is flawed. However, as is the case with attacks on the very concept of a scientific consensus, when you see general attacks on the concept of peer review, it’s usually a pretty good indication that you’re dealing with a crank. There is little doubt that The Professor is a crank. Naturally, she can’t resist including a section that is nothing more than a big pharma shill argument claiming that the science showing that vaccines are safe and effective must be doubted because everyone’s in the pocket of big pharma. No antivaccine article is complete without a variant of that tired old trope.

Hilariously, The Professor ends by arguing that all that tired, boring, old «normal science» (to borrow Kuhn’s term) is wrong and that True Scientists who perform «Revolutionary Science» (quoting Martha Herbert in the introduction to Robert F. Kennedy, Jr.’s pseudoscientific new anti-thimerosal screed, of all things!) all have «intuition»:

The ability to “utilize this subtlety and context to make important distinctions” that Herbert describes constitutes the difference between the scientific revolutionaries and those who will continue defending an error until long past the point that it has been well and truly proven to be an error. It is an ability that Albert Einstein possessed to a larger degree than most. Einstein felt that “The true sign of intelligence is not knowledge but imagination.” And that “All great achievements of science must start from intuitive knowledge. I believe in intuition and inspiration . . . . At times I feel certain I am right while not knowing the reason.” Interestingly, another well-known scientist whom many consider to have been “revolutionary” was known to place a great deal of emphasis on intuition. Jonas Salk, the creator of the first inactivated polio vaccine to be licensed, even wrote a book called Anatomy of Reality: Merging Intuition and Reason.