When you’re ready to print, just click this button:

Make Print-Friendly

Created by carlita

Can you answer these questions about famous classical composers? Was it one of the three B’s? A composer of the classical era or the romantic era? Test your classical music knowledge with this printable word find puzzle…

Instructions: Look at the hints and/or questions and then search for the composer or musical term in the puzzle. Only the last names are present in the word search for composers’ names.

E

V

K

Y

G

I

R

U

N

M

G

R

C

T

I

I

C

A

T

K

W

Z

V

G

X

X

V

H

W

V

Q

M

B

T

U

S

I

D

M

U

Y

Q

Y

O

U

X

B

A

S

B

Q

N

S

N

U

K

N

A

A

B

N

Y

P

P

T

I

X

I

V

N

Y

U

R

Y

U

Q

E

L

R

P

R

W

V

V

O

B

K

V

J

S

M

F

V

S

M

H

A

Z

L

A

N

E

M

P

H

L

S

K

O

S

A

M

U

I

E

R

B

A

F

H

B

Y

D

R

H

C

N

S

S

M

L

T

G

V

K

A

N

E

E

E

T

C

A

Z

S

D

I

S

R

J

R

G

B

T

B

N

E

S

T

R

A

Z

O

M

E

O

K

K

M

L

U

G

E

K

E

W

B

N

F

K

Q

A

O

D

I

V

S

A

B

S

M

T

X

N

M

U

S

I

F

B

S

E

S

W

I

G

S

C

Z

K

E

K

E

K

C

D

W

S

Y

G

M

W

Z

B

B

Show words that are in the puzzle

Show questions/hints

Words

-

Famous composer of the classical era born in Salzburg and wrote the Magic Flute

Mozart -

German composer known for his 5th Symphony and Für Elise

Beethoven -

This Austrian composer is known for his many waltzes including the Blue Danube

Strauss -

Born in Brooklyn, this 20th century musician composed the music for Porgy and Bess and wrote Rhapsody in Blue

Gershwin -

Known for the Ride of the Valkyries and the ‘Ring’ operas

Wagner -

J. S. Bach was a well-known composer of this musical era

Baroque -

Claude _____ was a French impressionist composer known for La Mer (The Sea)

Debussy -

Composer of the Rite of Spring and the Firebird suite

Stravinsky -

This Hungarian composer was also known for being a virtuoso pianist

Liszt -

Czech composer of the 19th century, known for the opera The Bartered Bride and the symphonic work Má vlast (or «The Moldau»)

Smetana

- Teacher Essentials

- Featured Articles

- Report Card Comments

- Needs Improvement Comments

- Teacher’s Lounge

- New Teachers

- Our Bloggers

- Article Library

- Lesson Plans

- Featured Lessons

- Every-Day Edits

- Lesson Library

- Emergency Sub Plans

- Character Education

- Lesson of the Day

- 5-Minute Lessons

- Learning Games

- Lesson Planning

- Standards

- Subjects Center

- Teaching Grammar

- Admin

- Featured Articles

- Hot Topics

- Leadership Resources

- Parent Newsletter Resources

- Advice from School Leaders

- Programs, Strategies and Events

- Principal Toolbox

- Administrator’s Desk

- Interview Questions

- Professional Learning Communities

- Teachers Observing Teachers

- Tech

- Featured Articles

- Article Library

- Tech Lesson Plans

- Science, Math & Reading Games

- WebQuests

- Tech in the Classroom

- Tech Tools

- Web Site Reviews

- Creating a WebQuest

- Digital Citizenship

- PD

- Featured PD Courses

- Online PD

- Classroom Management

- Responsive Classroom

- School Climate

- Dr. Ken Shore: Classroom Problem Solver

- Professional Development Resources

- Graduate Degrees For Teachers

- Worksheets & Printables

- Worksheet Library

- Highlights for Children

- Venn Diagram Templates

- Reading Games

- Word Search Puzzles

- Math Crossword Puzzles

- Friday Fun

- Geography A to Z

- Holidays & Special Days

- Internet Scavenger Hunts

- Student Certificates

- Tools & Templates

WHAT’S NEW

Lesson Plans

- General Archive

- By Subject

- The Arts

- Health & Safety

- History

- Interdisciplinary

- Language Arts

- Lesson of the Day

- Math

- PE & Sports

- Science

- Social Science

- Special Ed & Guidance

- Special Themes

- Top LP Features

- Article Archive

- User Submitted LPs

- Box Cars Math Games

- Every Day Edits

- Five Minute Fillers

- Holiday Lessons

- Learning Games

- Lesson of the Day

- News for Kids

- ShowBiz Science

- Student Engagers

- Work Sheet Library

- More LP Features

- Calculator Lessons

- Coloring Calendars

- Friday Fun Lessons

- Math Machine

- Month of Fun

- Reading Machine

- Tech Lessons

- Writing Bug

- Work Sheet Library

- All Work Sheets

- Critical Thinking Work Sheets

- Animals A to Z

- Backpacktivities

- Coloring Calendars

- EveryDay Edits

- Geography A to Z

- Hunt the Fact Monster

- Internet Scavenger Hunts

- It All Adds Up Math Puzzles

- Make Your Own Work Sheets

- Math Cross Puzzles

- Mystery State

- Math Practice 4 You

- News for Kids

- Phonics Word Search Puzzles

- Readers Theater Scripts

- Sudoku Puzzles

- Vocabulous!

- Word Search Puzzles

- Writing Bug

- Back to School

- Back to School Archive

- Icebreaker Activities

- Preparing for the First Day

- Ideas for All Year

- The Homework Dilemma

- First Year Teachers

- Don’t Forget the Substitute

- More Great Ideas for the New School Year

- Early Childhood

- Best Books for Educators

- Templates

- Assessments

- Award Certificates

- Bulletin Board Resources

- Calendars

- Classroom Organizers

- Clip Art

- Graphic Organizers

- Newsletters

- Parent Teacher Communications

- More Templates

EW Lesson Plans

EW Professional Development

EW Worksheets

Chatter

Trending

125 Report Card Comments

It’s report card time and you face the prospect of writing constructive, insightful, and original comments on a couple dozen report cards or more. Here are 125 positive report card comments for you to use and adapt!

Struggling Students? Check out our Needs Improvement Report Card Comments for even more comments!

You’ve reached the end of another grading period, and what could be more daunting than the task of composing insightful, original, and unique comments about every child in your class? The following positive statements will help you tailor your comments to specific children and highlight their strengths.

You can also use our statements to indicate a need for improvement. Turn the words around a bit, and you will transform each into a goal for a child to work toward. Sam cooperates consistently with others becomes Sam needs to cooperate more consistently with others, and Sally uses vivid language in writing may instead read With practice, Sally will learn to use vivid language in her writing. Make Jan seeks new challenges into a request for parental support by changing it to read Please encourage Jan to seek new challenges.

Whether you are tweaking statements from this page or creating original ones, check out our Report Card Thesaurus [see bottom of the page] that contains a list of appropriate adjectives and adverbs. There you will find the right words to keep your comments fresh and accurate.

We have organized our 125 report card comments by category. Read the entire list or click one of the category links below to jump to that list.

AttitudeBehaviorCharacterCommunication SkillsGroup WorkInterests and TalentsParticipationSocial SkillsTime ManagementWork Habits

Attitude

The student:

is an enthusiastic learner who seems to enjoy school.

exhibits a positive outlook and attitude in the classroom.

appears well rested and ready for each day’s activities.

shows enthusiasm for classroom activities.

shows initiative and looks for new ways to get involved.

uses instincts to deal with matters independently and in a positive way.

strives to reach their full potential.

is committed to doing their best.

seeks new challenges.

takes responsibility for their learning.

Behavior

The student:

cooperates consistently with the teacher and other students.

transitions easily between classroom activities without distraction.

is courteous and shows good manners in the classroom.

follows classroom rules.

conducts themselves with maturity.

responds appropriately when corrected.

remains focused on the activity at hand.

resists the urge to be distracted by other students.

is kind and helpful to everyone in the classroom.

sets an example of excellence in behavior and cooperation.

Character

The student:

shows respect for teachers and peers.

treats school property and the belongings of others with care and respect.

is honest and trustworthy in dealings with others.

displays good citizenship by assisting other students.

joins in school community projects.

is concerned about the feelings of peers.

faithfully performs classroom tasks.

can be depended on to do what they are asked to do.

seeks responsibilities and follows through.

is thoughtful in interactions with others.

is kind, respectful and helpful when interacting with his/her peers

is respectful of other students in our classroom and the school community

demonstrates responsibility daily by caring for the materials in our classroom carefully and thoughtfully

takes his/her classroom jobs seriously and demonstrates responsibility when completing them

is always honest and can be counted on to recount information when asked

is considerate when interacting with his/her teachers

demonstrates his/her manners on a daily basis and is always respectful

has incredible self-discipline and always gets his/her work done in a timely manner

can be counted on to be one of the first students to begin working on the task that is given

perseveres when faced with difficulty by asking questions and trying his/her best

does not give up when facing a task that is difficult and always does his/her best

is such a caring boy/girl and demonstrates concern for his/her peers

demonstrates his/her caring nature when helping his/her peers when they need the assistance

is a model citizen in our classroom

is demonstrates his/her citizenship in our classroom by helping to keep it clean and taking care of the materials in it

can always be counted on to cooperate with his/her peers

is able to cooperate and work well with any of the other students in the class

is exceptionally organized and takes care of his/her things

is always enthusiastic when completing his/her work

is agreeable and polite when working with others

is thoughtful and kind in his/her interactions with others

is creative when problem solving

is very hardworking and always completes all of his/her work

is patient and kind when working with his/her peers who need extra assistance

trustworthy and can always be counted on to step in and help where needed

Communication Skills

The student:

has a well-developed vocabulary.

chooses words with care.

expresses ideas clearly, both verbally and through writing.

has a vibrant imagination and excels in creative writing.

has found their voice through poetry writing.

uses vivid language in writing.

writes clearly and with purpose.

writes with depth and insight.

can make a logical and persuasive argument.

listens to the comments and ideas of others without interrupting.

Group Work

The student:

offers constructive suggestions to peers to enhance their work.

accepts the recommendations of peers and acts on them when appropriate.

is sensitive to the thoughts and opinions of others in the group.

takes on various roles in the work group as needed or assigned.

welcomes leadership roles in groups.

shows fairness in distributing group tasks.

plans and carries out group activities carefully.

works democratically with peers.

encourages other members of the group.

helps to keep the work group focused and on task.

Interests and Talents

The student:

has a well-developed sense of humor.

holds many varied interests.

has a keen interest that has been shared with the class.

displays and talks about personal items from home when they relate to topics of study.

provides background knowledge about topics of particular interest to them.

has an impressive understanding and depth of knowledge about their interests.

seeks additional information independently about classroom topics that pique interest.

reads extensively for enjoyment.

frequently discusses concepts about which they have read.

is a gifted performer.

is a talented artist.

has a flair for dramatic reading and acting.

enjoys sharing their musical talent with the class.

Participation

The student:

listens attentively to the responses of others.

follows directions.

takes an active role in discussions.

enhances group discussion through insightful comments.

shares personal experiences and opinions with peers.

responds to what has been read or discussed in class and as homework.

asks for clarification when needed.

regularly volunteers to assist in classroom activities.

remains an active learner throughout the school day.

Social Skills

The student:

makes friends quickly in the classroom.

is well-liked by classmates.

handles disagreements with peers appropriately.

treats other students with fairness and understanding.

is a valued member of the class.

has compassion for peers and others.

seems comfortable in new situations.

enjoys conversation with friends during free periods.

chooses to spend free time with friends.

Time Management

The student:

tackles classroom assignments, tasks, and group work in an organized manner.

uses class time wisely.

arrives on time for school (and/or class) every day.

is well-prepared for class each day.

works at an appropriate pace, neither too quickly or slowly.

completes assignments in the time allotted.

paces work on long-term assignments.

sets achievable goals with respect to time.

completes make-up work in a timely fashion.

Work Habits

The student:

is a conscientious, hard-working student.

works independently.

is a self-motivated student.

consistently completes homework assignments.

puts forth their best effort into homework assignments.

exceeds expectations with the quality of their work.

readily grasps new concepts and ideas.

generates neat and careful work.

checks work thoroughly before submitting it.

stays on task with little supervision.

displays self-discipline.

avoids careless errors through attention to detail.

uses free minutes of class time constructively.

creates impressive home projects.

Related: Needs Improvement Report Card Comments for even more comments!

Student Certificates!

Recognize positive attitudes and achievements with personalized student award certificates!

Report Card Thesaurus

Looking for some great adverbs and adjectives to bring to life the comments that you put on report cards? Go beyond the stale and repetitive With this list, your notes will always be creative and unique.

Adjectives

attentive, capable, careful, cheerful, confident, cooperative, courteous, creative, dynamic, eager, energetic, generous, hard-working, helpful, honest, imaginative, independent, industrious, motivated, organized, outgoing, pleasant, polite, resourceful, sincere, unique

Adverbs

always, commonly, consistently, daily, frequently, monthly, never, occasionally, often, rarely, regularly, typically, usually, weekly

Copyright© 2022 Education World

Back to Geography Lesson Plan

Where Did Foods Originate?

(Foods of the New World and Old World)

Subjects

Arts & Humanities

—Language Arts

Educational Technology

Science

—Agriculture

Social Studies

—Economics

—Geography

—History

—-U.S. History

—-World History

—Regions/Cultures

Grade

K-2

3-5

6-8

9-12

Advanced

Brief Description

Students explore how New World explorers helped change the Old World’s diet (and vice versa).

Objectives

Students will

learn about changes that occurred in the New World and Old World as a result of early exploration.

use library and Internet sources to research food origins. (Older students only.)

create a bulletin-board map illustrating the many foods that were shared as a result of exploration.

Keywords

Columbus, explorers, origin, food, timeline, plants, map, New World, Old World, colonies, colonial, crops, media literacy, products, consumer

Materials Needed:

library and/or Internet access (older students only)

outline map of the world (You might print the map on a transparency; then use an overhead projector to project and trace a large outline map of the world onto white paper on a bulletin board.)

magazines (optional)

Lesson Plan

The early explorers to the Americas were exposed to many things they had never seen before. Besides strange people and animals, they were exposed to many foods that were unknown in the Old World. In this lesson, you might post an outline map of the continents on a bulletin board. Have students use library and/or Internet resources (provided below) to research some of the edible items the first explorers saw for the first time in the New World. On the bulletin board, draw an arrow from the New World (the Americas) to the Old World (Europe, Asia, Africa) and post around it drawings or images (from magazines or clip art) of products discovered in the New World and taken back to the Old World.

Soon, the explorers would introduce plants/foods from the Old World to the Americas. You might draw a second arrow on the board — from the Old World to the New World — and post appropriate drawings or images around it.

Adapt the Lesson for Younger Students

Younger students will not have the ability to research foods that originated in the New and Old World. You might adapt the lesson by sharing some of the food items in the Food Lists section below. Have students collect or draw pictures of those items for the bulletin board display.

Resources

In addition to library resources, students might use the following Internet sites as they research the geographic origins of some foods:

Curry, Spice, and All Things Nice: Food Origins

The Food Timeline

Native Foods of the Americas

A Harvest Gathered: Food in the New World

We Are What We Eat Timeline (Note: This resource is an archived resource; the original page is no longer live and updated.)

Food Lists Our research uncovered the Old and New World foods below. Students might find many of those and add them to the bulletin board display. Notice that some items appear on both lists — beans, for example. There are many varieties of beans, some with New World origins and others with their origins in the Old World. In our research, we found sources that indicate onions originated in the New and sources that indicate onions originated in the Old World. Students might create a special question mark symbol to post next to any item for which contradictory sources can be found

Note: The Food Timeline is a resource that documents many Old World products. This resource sets up a number of contradictions. For example:

Many sources note that tomatoes originated in the New World; The Food Timeline indicates that tomatoes were introduced to the New World in 1781.

The Food Timeline indicates that strawberries and raspberries were available in the 1st century in Europe; other sources identify them as New World commodities.

Foods That Originated in the New World: artichokes, avocados, beans (kidney and lima), black walnuts, blueberries, cacao (cocoa/chocolate), cashews, cassava, chestnuts, corn (maize), crab apples, cranberries, gourds, hickory nuts, onions, papayas, peanuts, pecans, peppers (bell peppers, chili peppers), pineapples, plums, potatoes, pumpkins, raspberries, squash, strawberries, sunflowers, sweet potatoes, tobacco, tomatoes, turkey, vanilla, wild cherries, wild rice.

Foods That Originated in the Old World: apples, bananas, beans (some varieties), beets, broccoli, carrots, cattle (beef), cauliflower, celery, cheese, cherries, chickens, chickpeas, cinnamon, coffee, cows, cucumbers, eggplant, garlic, ginger, grapes, honey (honey bees), lemons, lettuce, limes, mangos, oats, okra, olives, onions, oranges, pasta, peaches, pears, peas, pigs, radishes, rice, sheep, spinach, tea, watermelon, wheat, yams.

Extension Activities

Home-school connection. Have students and their parents search their food cupboards at home; ask each student to bring in two food items whose origin can be traced to a specific place (foreign if possible, domestic if not). Labels from those products will be sufficient, especially if the products are in breakable containers. Place those labels/items around a world map; use yarn to connect each label to the location of its origin on the map.

Media literacy. Because students will research many sources, have them list the sources for the information they find about each food item. Have them place an asterisk or checkmark next to the food item each time they find that item in a different source. If students find a food in multiple sources, they might consider it «verified»; those foods they find in only one source might require additional research to verify.

Assessment

Invite students to agree or disagree with the following statement:The early explorers were surprised by many of the foods they saw in the New World.

Have students write a paragraph in support of their opinion.

Lesson Plan Source

Education World

Submitted By

Gary Hopkins

National Standards

LANGUAGE ARTS: EnglishGRADES K — 12NL-ENG.K-12.2 Reading for UnderstandingNL-ENG.K-12.8 Developing Research SkillsNL-ENG.K-12.9 Multicultural UnderstandingNL-ENG.K-12.12 Applying Language Skills

SOCIAL SCIENCES: EconomicsGRADES K — 4NSS-EC.K-4.1 Productive ResourcesNSS-EC.K-4.6 Gain from TradeGRADES 5 — 8NSS-EC.5-8.1 Productive ResourcesNSS-EC.5-8.6 Gain from TradeGRADES 9 — 12NSS-EC.9-12.1 Productive ResourcesNSS-EC.9-12.6 Gain from Trade

SOCIAL SCIENCES: GeographyGRADES K — 12NSS-G.K-12.1 The World in Spatial TermsNSS-G.K-12.2 Places and Regions

SOCIAL SCIENCES: U.S. HistoryGRADES K — 4NSS-USH.K-4.1 Living and Working together in Families and Communities, Now and Long AgoNSS-USH.K-4.3 The History of the United States: Democratic Principles and Values and the People from Many Cultures Who Contributed to Its Cultural, Economic, and Political HeritageNSS-USH.K-4.4 The History of Peoples of Many Cultures Around the WorldGRADES 5 — 12NSS-USH.5-12.1 Era 1: Three Worlds Meet (Beginnings to 1620)NSS-USH.5-12.2 Era 2: Colonization and Settlement (1585-1763)NSS-WH.5-12.6 Global Expansion and Encounter, 1450-1770

TECHNOLOGYGRADES K — 12NT.K-12.1 Basic Operations and ConceptsNT.K-12.5 Technology Research Tools

Find many more great geography lesson ideas and resources in Education World’s Geography Center.

Click here to return to this week’s World of Learning lesson plan page.

Updated 10/11/12

50 «Needs Improvement» Report Card Comments

Having a tough time finding the right words to come up with «areas for improvement» comments on your students’ report cards? Check out our helpful suggestions to find just the right one!

The following statements will help you tailor your comments to specific children and highlight their areas for improvement.

Be sure to check out our 125 Report Card Comments for positive comments!

Needs Improvement- all topics

is a hard worker, but has difficulty staying on task.

has a difficult time staying on task and completing his/her work.

needs to be more respectful and courteous to his/her classmates.

needs to listen to directions fully so that he/she can learn to work more independently.

is not demonstrating responsibility and needs to be consistently reminded of how to perform daily classroom tasks.

works well alone, but needs to learn how to work better cooperatively with peers.

does not have a positive attitude about school and the work that needs to be completed.

struggles with completing his/her work in a timely manner.

gives up easily when something is difficult and needs extensive encouragement to attempt the task.

gets along with his/her classmates well, but is very disruptive during full group instruction.

has a difficult time using the materials in the classroom in a respectful and appropriate manner.

has a difficult time concentrating and gets distracted easily.

is having a difficult time with math. Going over _____ at home would help considerably.

is having a very difficult time understanding math concepts for his/her grade level. He/she would benefit from extra assistance.

could benefit from spending time reading with an adult every day.

is enthusiastic, but is not understanding ____. Additional work on these topics would be incredibly helpful.

is having difficulty concentrating during math lessons and is not learning the material that is being taught because of that.

understands math concepts when using manipulatives, but is having a difficult time learning to ____ without them.

is a very enthusiastic reader. He/she needs to continue to work on _____ to make him/her a better reader.

needs to practice reading at home every day to help make him/her a stronger reader.

needs to practice his/her sight words so that he/she knows them on sight and can spell them.

needs to work on his/her spelling. Practicing at home would be very beneficial.

can read words fluently, but has a difficult time with comprehension. Reading with ______ every day would be helpful.

could benefit from working on his/her handwriting. Slowing down and taking more time would help with this.

is having difficulty writing stories. Encouraging him/her to tell stories at home would help with this.

has a difficult time knowing when it is appropriate to share his/her thoughts. We are working on learning when it is a good time to share and when it is a good time to listen.

needs to work on his/her time management skills. _______is able to complete his/her work, but spends too much time on other tasks and rarely completes his/her work.

needs reminders about the daily classroom routine. Talking through the classroom routine at home would be helpful.

is having a difficult time remembering the difference between short and long vowel sounds. Practicing these at home would be very helpful.

is struggling with reading. He/she does not seem to enjoy it and does not want to do it. Choosing books that he/she like and reading them with him/her at home will help build a love of reading.

frequently turns in incomplete homework or does not hand in any homework. Encouraging _______to complete his/her homework would be very helpful.

does not take pride in his/her work. We are working to help him/her feel good about what he/she accomplishes.

does not actively participate in small group activities. Active participation would be beneficial.

has a difficult time remembering to go back and check his/her work. Because of this, there are often spelling and grammar mistakes in his/her work.

does not much effort into his/her writing. As a result, his/her work is often messy and incomplete.

is struggling to understand new concepts in science. Paying closer attention to the class discussions and the readings that we are doing would be beneficial.

is reading significantly below grade level. Intervention is required.

does not write a clear beginning, middle and end when writing a story. We are working to identify the parts of the stories that he/she is writing.

is struggling to use new reading strategies to help him/her read higher level books.

is wonderful at writing creative stories, but needs to work on writing nonfiction and using facts.

has a difficult time understanding how to solve word problems.

needs to slow down and go back and check his/her work to make sure that all answers are correct.

is not completing math work that is on grade level. Intervention is required.

is struggling to understand place value.

is very enthusiastic about math, but struggles to understand basic concepts.

has a difficult time remembering the value of different coins and how to count them. Practicing this at home would be helpful.

would benefit from practicing math facts at home.

is very engaged during whole group math instruction, but struggles to work independently.

is able to correctly answer word problems, but is unable to explain how he/she got the answer.

is having a difficult time comparing numbers.

Related: 125 Report Card Comments for positive comments!

Student Award Certificates!

Recognize positive attitudes and achievements with personalized student award certificates!

Copyright© 2020 Education World

75 Mill St. Colchester, CT 06415

Receive timely lesson ideas and PD tips

Sitemap

Sign up for our free weekly newsletter and receive

top education news, lesson ideas, teaching tips and more!

No thanks, I don’t need to stay current on what works in education!

COPYRIGHT 1996-2016 BY EDUCATION WORLD, INC. ALL RIGHTS RESERVED.

Introduction

Question Answering is a popular application of NLP. Transformer models trained on big datasets have dramatically improved the state-of-the-art results on Question Answering.

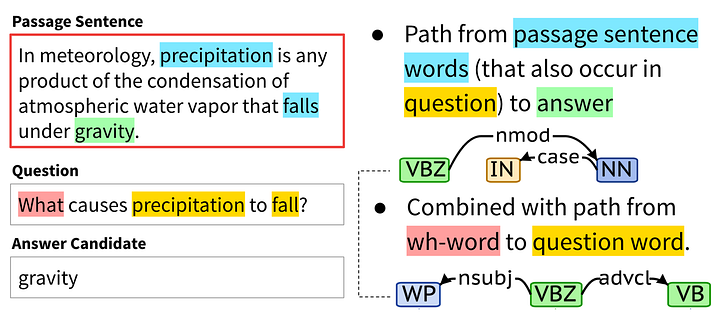

The question answering task can be formulated in many ways. The most common application is an extractive question answering on a small context. The SQuAD dataset is a popular dataset where given a passage and a question, the model selects the word(s) representing the answer. This is illustrated in Fig 1 below.

However, most practical applications of question answering involve very long texts like a full website or many documents in the database. Question Answering with voice assistants like Google Home/Alexa involves searching through a massive set of documents on the web to get the right answer.

In this blog, we build a search and question answering application using Haystack. This application searches through Physics, Biology and Chemistry textbooks from Grades 10, 11 and 12 to answer user questions. The code is made publicly available on Github here. You can also use the Colab notebook here to test the model out.

Search and Ranking

So, how do we retrieve answers from a massive database?

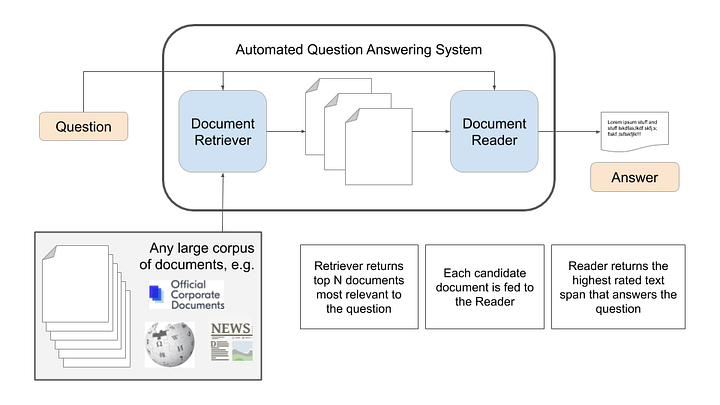

We break the process of Question Answering into two steps:

- Search and Ranking: Retrieving likely passages from our database that may have the answer

- Reading Comprehension: Finding the answer among the retrieved passages

This process is also illustrated in Fig 2 below.

The search and ranking process usually involves indexing the data into a database like ElasticSearch or FAISS. These databases have implemented algorithms for very fast search across millions of records. The search can be done using the words in the query using TFIDF or BM25, or the search can be done considering the semantic meaning of the text by embedding the text or a combination of these can also be used. In our code, we use a TFIDF based retriever from Haystack. The entire database is uploaded to an ElasticSearch database and the search is done using a TFIDF Retriever which sorts results by score. To learn about different types of searches, please check out this blog by Haystack.

The top-k passages from the retriever are then sent to a Question-Answering model to get possible answers. In this blog, we try 2 models — 1. BERT model trained on SQuAD dataset and 2. A Roberta model trained on the SQuAD dataset. The reader in Haystack helps load these models and get the top K answers by running these models on the retrieved passages from the search.

Building our Question-Answering Application

We download PDF files from the NCERT website. There are about 130 PDF chapters spanning 2500 pages. We have created a combined PDF which is available on google drive here. If using Colab upload this PDF to your environment.

We use the HayStack PDF converter to read the PDF to a text file

converter = PDFToTextConverter(remove_numeric_tables=True, valid_languages=["en"])

doc_pdf = converter.convert(file_path="/content/converter_merged.pdf", meta=None)[0]

Then we split the document into many smaller documents using the PreProcessor class in Haystack.

preprocessor = PreProcessor(

clean_empty_lines=True,

clean_whitespace=True,

clean_header_footer=False,

split_by="word",

split_length=100,

split_respect_sentence_boundary=True)

dict1 = preprocessor.process([doc_pdf])

Each document is about 100 words long. The pre-processor creates 10K documents.

Next, we index these documents to an ElasticSearch database and initialize a TFIDF retriever.

retriever = TfidfRetriever(document_store=document_store)

document_store.write_documents(dict1)

The retriever will run a search against the documents in the ElasticSearch database and rank the results.

Next, we initialize the Question-Answering pipeline. We can load any Question Answering model on the HuggingFace hub using the FARM or Transformer Reader.

reader = TransformersReader(model_name_or_path="deepset/bert-large-uncased-whole-word-masking-squad2", tokenizer="bert-base-uncased", use_gpu=-1)

Finally, we combine the retriever and ranker into a pipeline to run them together.

from haystack.pipelines import ExtractiveQAPipeline

pipe = ExtractiveQAPipeline(reader, retriever)

That’s all! We are now ready to test our pipeline.

Testing the Pipeline

To test the pipeline, we run our questions through it setting our preferences for top_k results from retriever and reader.

prediction = pipe.run(query="What is ohm's law?", params={"Retriever": {"top_k": 10}, "Reader": {"top_k": 3}}

When we ask the pipeline to explain Ohm’s law, the top results are quite relevant.

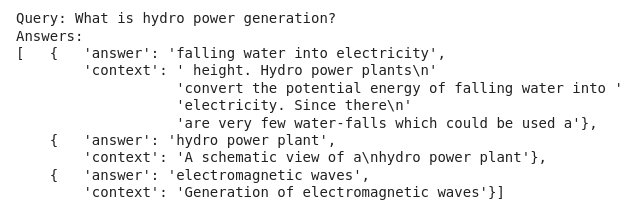

Next, we ask about hydropower generation.

prediction = pipe.run(query="What is hydro power generation?", params={"Retriever": {"top_k": 10}, "Reader": {"top_k": 3}}

We have tested the pipeline on many questions and it performs quite well. It is fun to read on a new topic in the source PDFs and ask a question related to it. Often, I find that the paragraph I read was in the top 3 results.

My experimentation suggested mostly similar results from BERT and Roberta with Roberta being slightly better.

Please use the code on Colab or Github to run your own STEM questions through the model.

Conclusion

This blog shows how question answering can be used for many practical applications involving long texts like a database with PDFs, or through a website containing many articles. We use Haystack here to build a relatively simple application. Haystack has many levers to adjust and fine-tune to further improve performance. I hope you pull down the code and run your own experiments. Please share your experience in the comments below.

At Deep Learning Analytics, we are extremely passionate about using Machine Learning to solve real-world problems. We have helped many businesses deploy innovative AI-based solutions. Contact us through our website here if you see an opportunity to collaborate.

References

Download Article

Download Article

Making a word search for your kids on a rainy day, your students to help them learn vocabulary, or simply for a bored friend can be a fun activity. You can get as creative as you like—just follow these steps to learn how to create your own word search.

-

1

Decide on the theme of your word search. Picking a theme for the words you want to put in your word search will make the word search seem more professional. If you are making this word search for a child, picking a theme will make the puzzle more understandable. Some example themes include: country names, animals, states, flowers, types of food, etc.

- If you do not want to a have a theme for your word search, you don’t have to. It is up to you what you decide to put into your word search.

- If you are making the word search as a gift, you could personalize the word search for the person you are making it for by using themes like, ‘names of relatives’ or ‘favorite things.’

-

2

Select the words you want to use. If you decided to go with a theme, pick words that match that theme. The number of words you choose depends on the size of your grid. Using shorter words will allow you to include more words in your puzzle. Word searches generally have 10-20 words. If you are making a very large puzzle, you could have more than that.

- Examples of words for the theme ‘animals’: dog, cat, monkey, elephant, fox, sloth, horse, jellyfish, donkey, lion, tiger, bear (oh my!), giraffe, panda, cow, chinchilla, meerkat, dolphin, pig, coyote, etc.

Advertisement

-

3

Look up the spelling of words. Do this particularly if you are using more obscure words or the names of foreign countries. Misspelling words will lead to confusion (and someone potentially giving up on your puzzle.)

Advertisement

-

1

Leave space at the top of your page. You will want to add a title to your word search once you have drawn your grid. If you have a theme, you can title your word search accordingly. If you don’t have a theme, simply write ‘Word Search’ across the top of your page.

- You can also make your grid on the computer. To make a grid in versions of word prior to Word 2007: Select ‘View’ at the top of the page. Select ‘Toolbars’ and make sure the ‘Drawing’ toolbar is selected. Click on ‘Draw’ (it looks like an ‘A’ with a cube and a cylinder). Click ‘Draw’ and then click ‘Grid’. A grid option box will pop up—make sure you select ‘Snap to Grid’ and then select any other options you would like for your grid. Click ‘OK’ and make your grid.

- To make a grid in Word 2007: Click ‘Page Layout’ at the top of the page and click the ‘Align’ list within the ‘Arrange’ grouping. Click ‘Grid settings’ and make sure ‘Snap to Grid’ is selected. Select any other options you want for your grid. Click ‘ok’ and draw your grid.

-

2

Draw a grid by hand. It is easiest to make word searches when using graph paper, although you do not have to use graph paper. The standard word search box is 10 squares by 10 squares. Draw a square that is 10 centimeter (3.9 in) by 10 centimeter (3.9 in) and then make a line at each centimeter across the box. Mark each centimeter going down the box as well.

- You do not need to use a 10×10 grid. You can make your grid as big or as small as you like, just remember that you need to be able to draw small squares within your grid. You can make your grid into the shape of a letter (perhaps the letter of the person’s name who you are making it for?) or into an interesting shape.

-

3

Use a ruler to draw lines. Use a pencil to draw the lines evenly and straightly. You need to create small, evenly-sized squares within your grid. The squares can be as big or as small as you like.

- If you are giving the word search to a child, you might consider making the squares larger. Making larger squares will make the puzzle a bit easier because each individual square and letter will be easier to see. To make your puzzle harder, make smaller, closer together squares.

Advertisement

-

1

Make a list of your words. Place the list next to your grid. You can label your words #1, #2 etc. if you want to. Write your words out clearly so that the person doing the word search knows exactly which word he or she is looking for.

-

2

Write all of your words into your grid. Put one letter in each box. You can write them backward, forward, diagonally, and vertically. Try to evenly distribute the words throughout the grid. Get creative with your placements. Make sure to write all of the words that you have listed next to the grid so that they are actually in the puzzle. It would be very confusing to be looking for a word in the word search that isn’t actually there.

- Depending on who you are giving the puzzle to, you may wish to make your letters larger or smaller. If you want your puzzle to be a little less challenging, like if you are giving it to a child, you might consider writing your letters larger. If you want your puzzle to be more challenging, make your letters smaller.

-

3

Create an answer key. Once you have finished writing in all the words, make a photocopy of it and highlight all of the hidden words. This will serve as your answer key so whoever does your puzzle will be able to see if they got everything right (or can get help if they are stuck on one word) without the confusion of the extra, random letters.

-

4

Fill in the rest of the blank squares. Once you have written all of your chosen words into the puzzle, fill the still empty squares with random letters. Doing this distracts the person from finding the words in the search.

- Make sure that you do not accidentally make other words out of your extra letters, especially other words that fit into your theme. This will be very confusing for the person doing the puzzle.

-

5

Make copies. Only do this if you are planning on giving your word search to more than one person.

Advertisement

Add New Question

-

Question

Why do I not use lowercase letters?

Uppercase makes the letters clearer and easier to see.

-

Question

How do I make a crossword?

Rene Teboe

Community Answer

Websites like puzzle-maker.com let you make word searches and crossword puzzles by just typing in the words and clues you want.

-

Question

How do I find a word in a word search?

Look at the beginning letter, then go line by line down the word search until you find the first letter. When you find one, look at the letters surrounding it to see if there is the next letter of the word. If there aren’t any of the next letters there, carry on until you find the word.

See more answers

Ask a Question

200 characters left

Include your email address to get a message when this question is answered.

Submit

Advertisement

Video

-

Write all the letters in capitals so that it doesn’t give away any clues.

-

Make the letters easy to read.

-

If you do not want to take the time to make your word search by hand or in a document on your computer, there are many websites where you can make your own word search online. Type ‘make a word search’ into your search engine and you are guaranteed to find many websites that will generate word searches for you.

Thanks for submitting a tip for review!

Advertisement

Things You’ll Need

- Pen or Pencil

- Paper

- Eraser

- Ruler

About This Article

Article SummaryX

After you’ve decided what words you want in your word search, use a ruler to draw a grid on a piece of graph paper. Fill in your word search by writing your words in the grid, but make sure to spread them out and vary writing them so words are written vertically, diagonally, backwards, and forward. Once you’ve added all of your words, add other letters in the blank squares. Finally, write out a list of your words next to the grid so the person doing the word search knows what they’re looking for. If you want to learn how to make your word search on the computer, keep reading!

Did this summary help you?

Thanks to all authors for creating a page that has been read 141,332 times.

Did this article help you?

7.0 Overview

In January 2011, IBM shocked the world by beating two former Jeopardy! champions with its Watson DeepQA system. The Watson victory was a prominent feature in press reports and the subject of a popular book (Baker, 2013).

This chapter starts by explaining how the IBM Watson system worked. Though it was far from a human-level natural language processing system, it was an amazing accomplishment and is a great example available of how clever programming can make a system appear to be intelligent when nothing could be further from the truth.

Following the discussion of Watson will be a discussion of question-answering research that has taken place since Watson and a description of how AI researchers have attacked the problem of question answering. Question answering research can be divided into five research categories:

(1) Text-to-SQL

Corporations and other organizations have massive amounts of data stored in relational databases. Text-to-SQL systems translate natural language requests for information into SQL commands.

(2) Knowledge Base QA

Knowledge bases store factual information. KB-QA systems translate take natural language factual questions as input and retrieve facts from KBs in response.

(3) Text QA

Text QA systems take natural language questions as input and search document collections such as Wikipedia to find answers. In 2018, both Microsoft and Alibaba announced they had built systems that matched human performance on a standardized reading comprehension test. However, as will be explained below, this reading comprehension test is susceptible to clever Watson-like tricks, and the systems that do well on this and similar tests use these tricks and really don’t understand language like people understand language.

(4) Task-oriented personal assistants and social chatbots

Like KB-QA and text-QA systems, personal assistants (e.g. Alexa and Siri) respond to natural language questions. Additionally, they respond to commands and also keep track of the dialogue state in an effort to provide responses that make sense given the history of the dialogue. Academic research on these types of systems is termed Conversational QA (Zaib et al, 2021).

(5) Commonsense reasoning QA

As will be discussed below, many questions can be answered without requiring any high-level reasoning. Commonsense QA datasets are designed with questions that are difficult to answer without knowledge of the real world and the ability to reason about that knowledge.

This chapter will discuss Text-to-SQL systems, KB-QA, and Text-QA. The chatbots chapter will discuss task-oriented personal assistants and social chatbots.

7.1 IBM’s Watson question answering system

Jeopardy! is a popular question-answering TV show in which a contestant picks one of six categories. Then all three contestants are presented with a question from that category. The first contestant to hit a buzzer is given the opportunity to answer the question. (Actually, the question is given in the form of an answer and the answer must be given in the form of a question). There is a significant penalty for wrong answers. All questions must be answered from “memory”. And no access to the internet is allowed.

PIQUANT: Watson’s question answering predecessor

IBM’s Watson question answering system was developed as a follow-on to research on a question-answering system named PIQUANT that was funded by government research grants and that performed well in conference competitions between 1999 and 2005 (Ferrucci, 2012). The PIQUANT system was oriented toward answering simple factual questions of the type that can be answered using a knowledge base.

The IBM Watson question answering project was kicked off in April 2007 and culminated with the stunning victory in January 2011. The team first considered building on PIQUANT. However, after analyzing the performance of prior Jeopardy! winners, the team determined that Watson would need to buzz in for at least 70% of the questions and get 85% correct. As a baseline, they tested the PIQUANT system and found that when it buzzed in 70% of the time, it only got 16% correct.

The basic approach of PIQUANT was to first determine the knowledge base category of the question. A specialized set of handcrafted information extraction rules were created for each high-level category (e.g. people, organizations, and animals) that found the correct instance of the type. This was possible because of the constrained domain of the conference tasks.

That approach doesn’t work for Jeopardy! or for open-domain question answering (i.e. not restricted to a small subject area) in general because there are just too many possible entity types. For example, the YAGO ontology has 350,000 entity types (i.e. classes). Developing 350,000 separate algorithms would be a big effort.

Documents stored in the IBM Watson system

The Jeopardy! rules do not allow contestants to access the internet, so part of the challenge was to collect and organize a huge body of information. Wikipedia was the primary information source as more than 95.47% of Jeopardy! questions can be answered with Wikipedia titles. The IBM Watson team (Carroll et al, 2012) cited this example:

TENNIS: The first U.S. men’s national singles championship, played right here in 1881, evolved into this New York City tournament. (Answer: US Open)

This clue contains 3 facts about the US Open (1st US men’s championship, first played in 1881, now played in New York City) that are all found in the Wikipedia article titled “US Open (tennis)”. The IBM Watson team noted that Wikipedia articles are also key resources when an entity is mentioned in the question (answer). For example:

Aleksander Kwasniewski became the president of this country in 1995. (Answer: Poland)

The second sentence of Kwasniewski’s Wikipedia article says:

He served as the President of Poland from 1995-2005.

The IBM team then analyzed the 4.53% of questions that couldn’t be answered via Wikipedia titles and found several types of questions. Many questions involved dictionary definitions so they incorporated a modified version of Wiktionary (a crowd-sourced dictionary) which, like Wikipedia, was already title-oriented. Other common sources of questions included Bible quotes, song lyrics, quotes, and book and movie plots and they created title-oriented documents for these categories.

For example, for song lyrics, they created one set of documents with the artist as the title and the lyrics in the article and another set with the song title as the title. As will be explained below, the IBM Watson system used many different strategies for matching questions to documents. Nearly all matching algorithms were lexically-oriented. In other words, the matching algorithms matched words in the questions to words in the documents rather than extracting the meaning of the question into a canonical meaning representation, extracting the meaning of the document into a canonical meaning representation, and matching on the meanings rather than the words.

Since there are many different word combinations for expressing most facts and since each Wikipedia and/or Wiktionary article typically has only a single lexical form for each fact, the team augmented popular articles and definitions with other text found on the web and news wire articles in the hope of capturing other lexical forms of important facts and increasing the likelihood of matching question words or synonyms to documents words or synonyms. These document acquisition methodologies expanded the initial Wikipedia-based knowledge-base from 13 to 59 GB and improved Jeopardy! performance by 11.4%.

Information extraction in IBM Watson

Identifying entities and relations in questions and linking them to Wikipedia titles and other stored documents was a key capability for the IBM Watson DeepQA system.

It used an open-source natural language processing framework that was developed from 2001 to 2006 by IBM named the Unstructured Information Management Architecture (UIMA). UIMA includes tools for language identification, language-specific segmentation, POS tagging, text indexing, sentence boundary detection, tokenization, stemming, regular expressions, and entity detection.

The IBM Watson DeepQA system also used a parsing and representational system named slot grammars (McCord et al, 2012) that captures both surface linguistic information (e.g. POS), morphological information (e.g. tense and number), logical argument structure (subject, object, complement), and dependency information. English, German, French, Spanish, Italian, and Portuguese implementations of the parser were used.

The DeepQA system automatically extracted a set of entities and relations from Wikipedia and used them to create a lexicon that could drive recognition of entities and relations in Jeopardy! questions. To learn lexical entries (i.e. words or phrases) that can be used to identify Wikipedia entities, DeepQA didn’t simply rely on keyword matching to Wikipedia titles. In addition to keywords, they used link information (i.e. words in Wikipedia articles that are hyperlinked to the entity article) to match different surface forms (i.e. words and phrases) to each entity in Wikipedia.

The extracted lexicon included 1.4 million proper noun phrasal entries extracted from Wikipedia, phrasal medical entries extracted from the Unified Medical Language System, and numerous entity and type synonyms extracted using the relationships between Wikipedia, YAGO, and WordNet.

To learn a set of relations and a lexicon to identify each relation, the IBM Watson DeepQA system used Wikipedia infoboxes which frequently contain relations. For example, in describing the IBM Watson relation extraction process, the IBM Watson authors (Wang et al, 2011) note that the infobox in the article for Albert Einstein lists his “alma mater” as “University of Zurich”. Then the first sentence in the article mentioning “alma mater” and “University of Zurich” says

Einstein was awarded a PhD by the University of Zurich

Sentences like this were then used as training sentences for their relations. Classifiers were automatically trained for over 7000 relations based on this type of analysis of Wikipedia text. The IBM Watson team also defined 30 handcrafted patterns to identify common relations with high confidence. An example pattern to identify the authorOf relationship was

[Author] [WriteVerb] [Work]

They also developed a “true case” algorithm to account for the fact that clues are presented in all upper case. This algorithm was trained on thousands of same phrases to transform the upper case clues into mixed case clues and thereby provide algorithms (e.g. NER) with additional information.

How IBM Watson interpreted the questions

When the IBM Watson DeepQA system read a Jeopardy! question, it extracted four key pieces of Jeopardy!-specific information:

Focus: The focus was determined by a set of handcrafted rules such as

A noun phrase with determiner “this” or “these”.

This rule would pick up the correct focus in question such as

THEATRE: A new play based on this Sir Arthur Conan Doyle canine classic opened on the London stage in 2007.

If none of the rules matched, the question (answer) was treated as if it didn’t have a focus.

Lexical answer types (LATs): The DeepQA interprets each question as having one or more Lexical Answer Types (LATs). A LAT is often the Focus of the question. It can also be a class. For example, “dog” would be a LAT in the question

Marmaduke is this breed of dog.

The set of LATs can also include the answer category. For example, “capital” will be a LAT for questions in the category

FOLLOW THE LEADER TO THE CAPITAL

You can think of the LAT as a type of concept. For example, a clue might indicate the response is a type of dog. In this case, “dog” would be the LAT. Here also, we can run into a word matching problem. A clue might reference the concept associated with the word “dog” by many different words and phrases, including “dog,” “canine,” and “man’s best friend.”

Fortunately, there are ontologies that provide this type of mapping. An open-source ontology named YAGO is available online that maps words to concepts, and the IBM Watson team used YAGO to map the LAT word(s) onto YAGO concepts. There is also an open-source mapping from Wikipedia to YAGO named DBPedia, and DeepQA used this to map possible answers to YAGO concepts.

YAGO also contains hierarchical information about concepts. For example, YAGO lists an animal hierarchy (animal->mammal->dog). When the entity and LAT were both available in YAGO, DeepQA used conventionally coded hierarchical rules to determine if the entity matched the LAT concept exactly or matched a subtype or supertype in the YAGO concept hierarchy.

Certain LATs (e.g., countries, US presidents) have a finite list, and the list instances often are matched to the entities in candidate answer documents using Wikipedia lists.

See the IBM Watson team paper on this topic (Lally et al, 2012) for information on how DeepQA determines the LATs.

Question Classification: Factoid questions are the most common type of Jeopardy! question. However, the IBM team found 12 other question categories (e.g. Definition, Multiple-Choice, Puzzle, Common Bonds, Fill-in-the-Blanks, Abbreviation). They developed a separate set of handcrafted rules for recognizing the question class and applied different answering techniques for each type.

Question Sections (QSections): These are annotations placed by the IBM Watson system on the query text that indicate special treatment is required. For example, a phrase like

…this 4-letter word…

is marked as a LEXICAL CONSTRAINT which indicates that it should be used to select the answer but should not be part of the answer. Like Question Classes, these are identified by a set of handcrafted rules.

Using the parsing information discussed earlier, the entities and relations are also extracted from the question.

How IBM Watson identified the candidate answers

The next step is to identify Wikipedia and other documents that contain candidate answers. Consider, for example, the following clue (Carroll et al, 2012b).

MOVIE-ING: Robert Redford and Paul Newman starred in this depression-era grifter flick.

(Answer: The Sting)

Both the focus and LAT are “flick” and two relations are extracted:

actorIn (Robert Redford; flickflick : focus)

actorIn (Paul Newman; flick : focus)

Four types of document searches are performed:

Titled document search: Titled documents (e.g. Wikipedia and Wiktionary) are searched for “Robert Redford”, “Paul Newman”, “star”, “depression”, “era”, “grifter”, and “flick” and rated by several criteria. For example, documents with both the Redford and Newman names are weighted the highest. Documents with the focus word “flick” are weighted next highest. The 50 long documents (e.g. Wikipedia) and 5 short documents (e.g. Wiktionary) that are rated highest are considered candidates. The document titles become the candidate answers.

Passage search: Untitled documents (e.g. news articles) are searched using the same terms in two stages to identify one- or two-sentence passages that contain candidate answers.

First, articles are found for which the words in the headline is contained in the clue. The ten highest-rated candidate passages are retrieved.

Second, all other articles are searched using two different search engines with very different algorithms. The documents are rated based on the similarity of the words in the passage to the words in the query and other factors such as whether the sentence is close to the beginning of the article. Similarity includes exact word matches plus synonym and/or thesaurus matches.

The five highest-rated passages for each search engine are considered candidate documents. For each candidate document, a variety of lexically-oriented techniques are used to extract the candidate answer (Ferrucci et al, 2012).

Structured databases: One might think that simply looking up answers in structured databases such as IMDB (Internet Movie Database) and/or a knowledge base (DBPedia was the knowledge base used by DeepQA) would provide an answer to most factoid questions. The difficulty is that this requires matching the words in the names used in the query to the words in the names in these structured data sources. Moreover, it relies on the words identifying the relations discovered matching the words defining the relations in these data sources. Said another way, the relations might match, but if the words used to express the relation in the question and database are different, the system won’t see the match. As a result, while this technique was found to be effective for some types of relations, especially the ones extracted with handcrafted rules such as actorIn, it was generally a less effective strategy than Title Document Search and Passage Search.

PRISMATIC knowledge base: The IBM Watson team created its own knowledge base named PRISMATIC (Fan et al, 2012). The facts in PRISMATIC are extracted from encyclopedias, dictionaries, news articles, and other sources. The facts include commonsense knowledge such as “scientists publish papers” that can be used to help interpret questions. DeepQA used PRISMATIC as another source of candidate answers.

How IBM Watson scores the candidate answers on LAT match

One of the most important steps in scoring each candidate answer is to determine the likelihood that it matches the LAT. To accomplish this, the IBM watson system canonicalizes entity references using several different techniques including:

- The NER detector from PIQUANT which is used for 100 common named entity types.

- Entity linking by matching the entity mention string to strings in Wikipedia articles that have hyperlinks. Different ways of mentioning the entity in Wikipedia are all linked back to the same Wikipedia page thereby providing a means of canonicalizing the lexical variation in entity references.

- Word co-occurrence algorithms using Wikipedia disambiguation pages and other unstructured and structured resources.

Next, the canonicalized entity reference is matched to the LAT. However, the LAT is just a word or string of words and it also needs to be canonicalized by finding its underlying concept in an ontology. IBM Watson system uses the YAGO ontology and uses WordNet / YAGO links to map words in the mention string to concepts in YAGO. The LAT mention string is looked up in WordNet. In some cases, there are multiple word senses.

The IBM Watson team (Kalyanpur et al, 2012) offered the example of the word “star”. This has two WordNet word senses. The higher ranked word sense is the astronomy sense. The lower-ranked word sense is the movies sense. As a result, the LAT will be associated with two concepts. It will be associated with the astronomy sense with a 75% probability and the movie star sense with a 25% probability. However, if the candidate answer has a named entity that is a movie star, the match will still occur.

The relative probabilities are then adjusted by determining which word senses have more instances in DBPedia and are therefore presumed to be more popular. The IBM Watson system uses several methods to assess each candidate answer against each LAT (Murdoch et al, 2012). Some of these methods are:

Wikipedia Lookup: The type of the entity concept extracted from the candidate answer is looked up in YAGO and compared with the YAGO LAT concept. For example, if the candidate answer matches a link to a Wikipedia title, the entity is looked up in DBPedia which has a cross-reference to YAGO types. Wikipedia articles are also commonly tagged with categories. These categories are matched against the LAT.

Taxonomic Reasoning: When the entity and LAT can both be found in YAGO, it is possible to do taxonomic reasoning and determine if the entity matches the LAT concept exactly or matches a subtype or supertype in the YAGO concept hierarchy.

Gender: A special algorithm is used to compare LAT’s that specify a gender (e.g. “he”) to an entity in the candidate answer.

Closed LATs: Certain LATs (e.g. countries, US presidents) have a finite list and the list instances are matched to the candidate entity.

WordNet: The lexical unit hierarchy is checked for matches between the LAT and candidate answers.

Wikipedia Lists: Wikipedia contains many lists. These lists are stored and matched against question text starting with “List of…”.

Each method also assigns a confidence rating to the type match.

Additional evidence used by IBM Watson

Additional evidence is also gathered for each candidate answer. For example, each candidate answer is merged into the question, and searches are performed looking for text passages that contain some or all the question words in any order. Over 50 separate scoring algorithms are used to create a set of scores that are then used as features in a machine learning algorithm. Some examples:

- The found passages are scored by several different scorers that analyze things like how many question words are matched and how well the word orders match.

- There are multiple temporal answer scorers that determine if the dates for the candidate answer are consistent with the dates in the question. If the question asks about the 1900s, someone who lived in the 1800s is not a plausible answer. Temporal references are normalized and matched against DBPedia which has dates (e.g. birth/death dates of people) and durations of events. Temporal data is also extracted into a DeepQA knowledge base that contains the number of times a date range was mentioned for an entity and this knowledge base is used to score time period references in candidate answers.

- Similarly, location scorers assess the geographic compatibility between the question and candidate answer. Longitude/latitude specifications in a query are normalized using regular expressions and matched against location data stored in DBPedia and Freebase.

- Other scorers check handcrafted rules such as an entity can’t be both a country and a person. There are over 200 such rules.

How IBM Watson computed the final scores

Candidate answers are ultimately scored by applying a logistic regression algorithm (with regularization) to rank candidate answers according to a set of 550 features (Gondek et al, 2012). The classifier is trained to compare each pair of candidate answers and identify the best answer. This produces a ranking of the answers.

A supervised learning algorithm was trained on 25,000 Jeopardy! questions with 5.7 million question-answer pairs (including both correct and incorrect answers). The answer produced by the IBM Watson system is the candidate response with the high rank (converted into a question).

Summary of IBM Watson question answering

The IBM Watson system is an amazing feat of engineering. The result is a system that appears to understand complex English questions. Under the hood, what is really occurring is a massive set of mostly handcrafted rules that extract entities and relations, a set of rules for keyword matches between words in the questions and words in the stored documents and databases, and a massive set of handcrafted rules for identifying the most likely entity answer from a large set of candidate answers.

There is no attempt by the IBM Watson system to understand a question by producing a meaning representation of the question and matching that meaning representation to the meaning representations of documents and knowledge bases. As David Ferrucci, the IBM Watson team leader, said (Ferrucci, 2012),

“DeepQA does not assume that it ever completely understands the question…”

Most of the system is based on brute force lexical matching of questions to documents. The rules used to match the time frame and location specified in the question to the time frame and location specified in the candidate answers and to match the entities and relations in the question to the candidate answers could be considered a form of reasoning if one stretches the definition. However, clever programming is a more apt description of the entire system.

7.2 Text-to-SQL

One of the major early areas of focus of both academic research and commercial system was in the area of natural language interfaces to SQL databases. In the 1970s and 1980s, before business intelligence software revolutionized the way knowledge workers interact with databases, it was thought that natural language interfaces had huge potential for bridging the gap between non-technical business people and the information in structured databases.

Starting in the 1980s, several commercial text-to-SQL systems were created with varying degrees of success. All of these commercial systems used symbolic NLP techniques. Most current research uses machine learning. Both will be discussed below.

7.2.1 Text-to-SQL challenges

Text-to-SQL systems face four major challenges:

(1) Schema encoding

Text-to-SQL systems need to translate natural language into a representation that capture the database schema semantics, i.e. the database tables, columns, and the relationships between the tables. If the text-to-SQL system requires significant manual effort for each new database, the implementation cost can be a barrier to the use of the text-to-SQL system.

(2) Schema linking

Text-to-SQL systems need to correctly interpret references to the tables and columns of the database in the query. Here also, manual effort to create the lexical entries for each new databases is a barrier to use.

(3) Multi-table schemas

Most real-world databases have multiple tables and text-to-SQL systems need to generate SQL that includes joins to link these tables. Text-to-SQL systems developed for single-table databases won’t have this capability.

(4) Syntactically correct but semantically incorrect queries

Many queries contain syntactically correct SQL and, when run against the database, will produce output. However, the output can be meaningless. One example, is SQL with many-to-one-to-many joins. For example, a database may have a table of payments by customer and another table of orders by customer. However, SQL that merges the data from these two tables will generate incorrect data. The data will look like it shows payments for specific orders but that information is not actually contained in the tables.

7.2.2 Datasets for training text-to-SQL systems

Many datasets are available for research on natural language interfaces to databases. These datasets include a SQL database and include questions and correct SQL broken down into training and test sets. Most of these datasets contain databases with a single table. These include

- ATIS (Hemphill et al, 1990), a flight database

- Restaurants (Tang and Mooney, 2000), a database of restaurant locations and food types

- GeoQuery (Zelle and Mooney, 1996), a database of states, cities, and other places

- Academic (Li and Jagadish, 2014), a database of academic publications

- Scholar (Iyer et al, 2017), another database of academic publications

- Yelp, a dataset of Yelp data

- IMDB, a movie database

- Advising (Finegan-Dollak et al, 2017), a database of course information

More recently, researchers have developed datasets that cross a wide range of topics. These include

- WikiSQL (Zhong et al, 2017), a database of questions and answers from a wide range of Wikipedia articles so topic identification is important. Each question references a single table so there is no need for joins. The WikiSQL leaderboard can be found here.

- Spider (Yu et al, 2018), a database of questions and answers cover a wide range of databases extracted from college database classes. Many questions require joins between tables. The Spider leaderboard can be found here.

More importantly, the articles/databases in these last two datasets are not present in the training sets. This forces the system to learn generalized patterns, not ones that are specific to the training data.

7.2.3 Symbolic NLP approaches

The first text-to-SQL system was developed in 1960 by MIT researcher Bert Green (who became my Ph.D. thesis advisor 18 years later). Green and colleagues wrote a computer program (Green et al, 1961) that could answer questions about the 1959 baseball season like:

Who did the Red Sox lose to on July 5? Where did each team play on July 7? What teams won 10 games in July? Did every team play at least once in each park?

Similarly, the LUNAR system (Woods et al, 1972) allowed geologists to ask questions such as:

Give me all lunar samples with magnetite. In which samples has apatite been identified? What is the specific activity of A126 in soil? Analyses of strontium in plagioclase. What are the plag analyses for breccias? What is the average concentration of olivine in breccias? What is the average age of the basalts? What is the average potassiudrubidium ratio in basalts? In which breccias is the average concentration of titanium greater than 6 percent?

In 1971, the LUNAR system was put to a test by actual geologists. Of 111 moon rock questions posed by geologists, 78% were answered correctly. In 1978, Stanford Research Institute developed a system named LADDER (Hendrix et al, 1978) that retrieved Navy ship data.

In 1981, Wendy Lehnert and I (Shwartz, 1982; Lehnert and Shwartz, 1983) developed a natural language interface to oil company well data where one could ask questions like

Show me a map of all tight wells drilled before May I, 1980 but since May I, 1970 by texaco that show oil deeper than 2000, were themselves deeper than 5000, are now operated by shell, are wildcat wells where the operator reported a drilling problem, and have mechanical logs, drill stem tests, and a commercial oil analysis, that were drilled within the area defined by latitude 30 deg 20 min 30 sec to 31:20:30 and 80-81. scale 2000 feet.

These systems all used semantic parsing. Semantic parsers have hand-coded rules that translate natural language queries into a very narrow frame-based meaning representation that only has slots for the information necessary to create a computer program to retrieve the data. These slots primarily fell into three categories:

- A list of fields and/or calculations to be retrieved

- A set of filters that restricted the information

- A set of sorts that defined how the output should be sorted

For example, the EasyTalk commercial text-to-SQL system (Shwartz, 1987) would translate inputs such as

Show me the customer, city, and dollar amount of Q1 sales of men’s blue shirts in the western region sorted by state.

This would result in a frame-based representation:

Display:

customer_name customer_city customer_state sales_$

Filters:

transaction_date >= ‘1/1/1983’ transaction date <= ‘3/31/1983’ product_id in (‘23423’, ‘23424’, ‘24356’) region = ‘west’

Sorts:

customer_state

In order to parse natural language inputs into this frame-based representation, the EasyTalk system used a hand-coded lexicon. A lexicon contains entries for individual words and tokens, phrases, and/or patterns.

So, for example, the word “customer” by itself and the phrase “customer name” both map to the customer_name field. The token “Q1” maps to a transaction date filter. And so on.

From this internal representation, it is a simple matter to generate SQL or some other database query language to retrieve and display the requested data. For example, the Display elements become the SELECT parameters, the Filters become the WHERE clause, and so on.

It was also a simple matter to create paraphrases of the information in order to confirm with the user that the system correctly understood the request. Early natural language interfaces to databases had hand-coded lexicons some of which enabled inferences such as inferring from

Who owes me for over 60 days?

that an outstanding balance is being requested.

However, the amount of hand-coding for each new database and the required expertise made widespread development of this type of interface impractical.