nltk.tokenize package

Submodules

- nltk.tokenize.api module

- nltk.tokenize.casual module

- nltk.tokenize.destructive module

- nltk.tokenize.legality_principle module

- nltk.tokenize.mwe module

- nltk.tokenize.nist module

- nltk.tokenize.punkt module

- nltk.tokenize.regexp module

- nltk.tokenize.repp module

- nltk.tokenize.sexpr module

- nltk.tokenize.simple module

- nltk.tokenize.sonority_sequencing module

- nltk.tokenize.stanford module

- nltk.tokenize.stanford_segmenter module

- nltk.tokenize.texttiling module

- nltk.tokenize.toktok module

- nltk.tokenize.treebank module

- nltk.tokenize.util module

Module contents

NLTK Tokenizer Package

Tokenizers divide strings into lists of substrings. For example, tokenizers can be used to find the words and punctuation in a string:

>>> from nltk.tokenize import word_tokenize
>>> s = '''Good muffins cost $3.88\nin New York.  Please buy me
... two of them.\n\nThanks.'''
>>> word_tokenize(s)
['Good', 'muffins', 'cost', '$', '3.88', 'in', 'New', 'York', '.', 'Please', 'buy', 'me', 'two', 'of', 'them', '.', 'Thanks', '.']

This particular tokenizer requires the Punkt sentence tokenization models to be installed. NLTK also provides a simpler, regular-expression based tokenizer, which splits text on whitespace and punctuation:

>>> from nltk.tokenize import wordpunct_tokenize
>>> wordpunct_tokenize(s)
['Good', 'muffins', 'cost', '$', '3', '.', '88', 'in', 'New', 'York', '.', 'Please', 'buy', 'me', 'two', 'of', 'them', '.', 'Thanks', '.']

We can also operate at the level of sentences, using the sentence tokenizer directly as follows:

>>> from nltk.tokenize import sent_tokenize, word_tokenize
>>> sent_tokenize(s)
['Good muffins cost $3.88\nin New York.', 'Please buy me\ntwo of them.', 'Thanks.']
>>> [word_tokenize(t) for t in sent_tokenize(s)]
[['Good', 'muffins', 'cost', '$', '3.88', 'in', 'New', 'York', '.'], ['Please', 'buy', 'me', 'two', 'of', 'them', '.'], ['Thanks', '.']]

Caution: when tokenizing a Unicode string, make sure you are not using an encoded version of the string (it may be necessary to decode it first, e.g. with s.decode("utf8")).

NLTK tokenizers can produce token-spans, represented as tuples of integers having the same semantics as string slices, to support efficient comparison of tokenizers. (These methods are implemented as generators.)

>>> from nltk.tokenize import WhitespaceTokenizer
>>> list(WhitespaceTokenizer().span_tokenize(s))
[(0, 4), (5, 12), (13, 17), (18, 23), (24, 26), (27, 30), (31, 36), (38, 44), (45, 48), (49, 51), (52, 55), (56, 58), (59, 64), (66, 73)]
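Because spans follow string-slice semantics, you can use each (start, end) pair to slice the original string and get back its token. Here is a minimal sketch, assuming NLTK is installed (WhitespaceTokenizer needs no downloaded models). The sample string is a shortened, hypothetical version of the one above:

```python
from nltk.tokenize import WhitespaceTokenizer

text = "Good muffins cost $3.88\nin New York."

# span_tokenize() yields (start, end) offsets into the original string
spans = list(WhitespaceTokenizer().span_tokenize(text))

# Slicing the string with each span recovers the tokens that tokenize() returns
tokens = [text[start:end] for start, end in spans]

print(spans)
print(tokens)
```

Keeping offsets instead of copies makes it possible to map tokens back to their exact position in the source text.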

There are numerous ways to tokenize text. If you need more control over tokenization, see the other methods provided in this package.

For further information, please see Chapter 3 of the NLTK book.

- nltk.tokenize.sent_tokenize(text, language='english')

Return a sentence-tokenized copy of text, using NLTK's recommended sentence tokenizer (currently PunktSentenceTokenizer for the specified language).

Parameters:

- text – text to split into sentences

- language – the model name in the Punkt corpus

- nltk.tokenize.word_tokenize(text, language='english', preserve_line=False)

Return a tokenized copy of text, using NLTK's recommended word tokenizer (currently an improved TreebankWordTokenizer along with PunktSentenceTokenizer for the specified language).

Parameters:

- text (str) – text to split into words

- language (str) – the model name in the Punkt corpus

- preserve_line (bool) – a flag that decides whether to sentence-tokenize the text first (False) or not (True).
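The following sketch shows what preserve_line changes, assuming a recent NLTK 3.x release. With preserve_line=True, word_tokenize() skips the Punkt sentence splitter, so no Punkt models are needed, and applies the Treebank-style rules to the whole string. Those rules only split off a period at the very end of the input, so a sentence-internal period stays attached to its word. The sample text is hypothetical:

```python
from nltk.tokenize import word_tokenize

text = "I live in New York. Please visit."

# preserve_line=True: the text is treated as a single line (no sentence splitting),
# so this call does not need the Punkt models to be installed.
tokens = word_tokenize(text, preserve_line=True)
print(tokens)  # 'York.' keeps its period; only the final '.' is split off

# With the default preserve_line=False, word_tokenize() first calls sent_tokenize()
# (which needs the Punkt models), so 'York' and '.' would become separate tokens:
# word_tokenize(text)
```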

How is text data processed and cleaned to build a model? To answer this question, let's explore some interesting concepts behind Natural Language Processing (NLP).

Solving an NLP problem is a multi-stage process. First, we must clean the unstructured text data before moving on to the modeling stage. Data cleaning involves several key steps:

- Word tokenization

- Predicting the part of speech for each token

- Text lemmatization

- Identifying and removing stop words, and more.

In this tutorial, we will take a closer look at the most basic of these steps, known as tokenization. We will study what tokenization is and why it is necessary for natural language processing (NLP). We will also look at several methods for performing tokenization in Python.

The concept of tokenization

Tokenization splits a large body of text into smaller fragments known as tokens. These tokens are very useful for finding patterns and are considered a basic step for stemming and lemmatization. In data security, the term "tokenization" also refers to replacing sensitive data elements with non-sensitive equivalents.

Natural language processing (NLP) is used to build applications such as text classification, sentiment analysis, intelligent chatbots, language translation, and many others. To achieve these goals, it is therefore important to understand the patterns in the text.

For now, though, consider stemming and lemmatization as the main steps for cleaning text data in natural language processing (NLP). Tasks such as text classification or spam filtering use NLP together with deep learning libraries such as Keras and TensorFlow.

The importance of tokenization in NLP

To understand the importance of tokenization, let's take English as an example. Pick any sentence and keep it in mind while reading the next section.

Before processing natural language, we must identify the words that make up a string of characters. This makes tokenization the most important first step in natural language processing (NLP).

This step is necessary because the actual meaning of a text can be interpreted by analyzing each word in it.

Now consider the following string as an example:

My name is Jamie Clark.

After tokenizing the string above into words (ignoring punctuation), we get the following result:

['My', 'name', 'is', 'Jamie', 'Clark']

This operation has various uses. We can use the tokenized form to:

- count the total number of words in the text;

- count the frequency of a word, that is, the total number of times a particular word occurs, and more.
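You can sketch both uses with Python's built-in collections.Counter. This minimal example assumes a hypothetical sample sentence and uses a plain whitespace split as the tokenizer. Any of the tokenizers described below could be substituted:

```python
from collections import Counter

# Hypothetical sample text
text = "the cat sat on the mat and the dog sat too"

# A simple whitespace split stands in for a real tokenizer here
tokens = text.split()

total_words = len(tokens)      # total number of word tokens
frequencies = Counter(tokens)  # number of occurrences of each word

print(total_words)
print(frequencies.most_common(2))
```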

Now let's look at several ways to perform tokenization for natural language processing (NLP) in Python.

Methods

There are various methods for tokenizing text data. Some of them are described below.

Tokenization using the split() function

The split() function is one of the basic methods for splitting strings. It returns a list of strings obtained by splitting the given string at a specified separator. By default, split() splits the string at every run of whitespace. However, we can specify a different separator if needed.

Consider the following examples:

Example 1.1: Word tokenization using the split() function

my_text = """Let's play a game, Would You Rather! It's simple, you have to pick one or the other. Let's get started. Would you rather try Vanilla Ice Cream or Chocolate one? Would you rather be a bird or a bat? Would you rather explore space or the ocean? Would you rather live on Mars or on the Moon? Would you rather have many good friends or one very best friend? Isn't it easy though? When we have less choices, it's easier to decide. But what if the options would be complicated? I guess, you pretty much not understand my point, neither did I, at first place and that led me to a Bad Decision."""

print(my_text.split())

Output:

["Let's", 'play', 'a', 'game,', 'Would', 'You', 'Rather!', "It's", 'simple,', 'you', 'have', 'to', 'pick', 'one', 'or', 'the', 'other.', "Let's", 'get', 'started.', 'Would', 'you', 'rather', 'try', 'Vanilla', 'Ice', 'Cream', 'or', 'Chocolate', 'one?', 'Would', 'you', 'rather', 'be', 'a', 'bird', 'or', 'a', 'bat?', 'Would', 'you', 'rather', 'explore', 'space', 'or', 'the', 'ocean?', 'Would', 'you', 'rather', 'live', 'on', 'Mars', 'or', 'on', 'the', 'Moon?', 'Would', 'you', 'rather', 'have', 'many', 'good', 'friends', 'or', 'one', 'very', 'best', 'friend?', "Isn't", 'it', 'easy', 'though?', 'When', 'we', 'have', 'less', 'choices,', "it's", 'easier', 'to', 'decide.', 'But', 'what', 'if', 'the', 'options', 'would', 'be', 'complicated?', 'I', 'guess,', 'you', 'pretty', 'much', 'not', 'understand', 'my', 'point,', 'neither', 'did', 'I,', 'at', 'first', 'place', 'and', 'that', 'led', 'me', 'to', 'a', 'Bad', 'Decision.']

Explanation:

In the example above, we used the split() method to break a paragraph into smaller fragments, or words. In the same way, we can split a paragraph into sentences by passing a separator as the parameter of split(). A sentence usually ends with a period ("."), so we can use the period as the separator.

Let's look at this in the following example:

Example 1.2: Sentence tokenization using the split() function

my_text = """Dreams. Desires. Reality. There is a fine line between dream to become a desire and a desire to become a reality but expectations are way far then the reality. Nevertheless, we live in a world of mirrors, where we always want to reflect the best of us. We all see a dream, a dream of no wonder what; a dream that we want to be accomplished no matter how much efforts it needed but we try."""

print(my_text.split('. '))

Output:

['Dreams', 'Desires', 'Reality', 'There is a fine line between dream to become a desire and a desire to become a reality but expectations are way far then the reality', 'Nevertheless, we live in a world of mirrors, where we always want to reflect the best of us', 'We all see a dream, a dream of no wonder what; a dream that we want to be accomplished no matter how much efforts it needed but we try.']

Explanation:

In the example above, we called split() with '. ' (a period followed by a space) as the parameter to break the paragraph at each period. The main drawback of split() is that it accepts only one separator at a time, so the string can be split on only one delimiter. Moreover, split() does not treat punctuation marks as separate tokens.

Tokenization using RegEx (regular expressions) in Python

Before moving on to the next method, let's briefly look at regular expressions. A regular expression, also known as RegEx, is a special sequence of characters that lets users find or match other strings or sets of strings, using that sequence as a pattern.

To work with RegEx, Python provides a library called re. The re library is part of Python's standard library, so it comes preinstalled with Python.

Example 2.1: Word tokenization using RegEx in Python

import re

my_text = """Joseph Arthur was a young businessman. He was one of the shareholders at Ryan Cloud's Start-Up with James Foster and George Wilson. The Start-Up took its flight in the mid-90s and became one of the biggest firms in the United States of America. The business was expanded in all major sectors of livelihood, starting from Personal Care to Transportation by the end of 2000. Joseph was used to be a good friend of Ryan."""

my_tokens = re.findall(r"[\w']+", my_text)

print(my_tokens)

Output:

['Joseph', 'Arthur', 'was', 'a', 'young', 'businessman', 'He', 'was', 'one', 'of', 'the', 'shareholders', 'at', 'Ryan', "Cloud's", 'Start', 'Up', 'with', 'James', 'Foster', 'and', 'George', 'Wilson', 'The', 'Start', 'Up', 'took', 'its', 'flight', 'in', 'the', 'mid', '90s', 'and', 'became', 'one', 'of', 'the', 'biggest', 'firms', 'in', 'the', 'United', 'States', 'of', 'America', 'The', 'business', 'was', 'expanded', 'in', 'all', 'major', 'sectors', 'of', 'livelihood', 'starting', 'from', 'Personal', 'Care', 'to', 'Transportation', 'by', 'the', 'end', 'of', '2000', 'Joseph', 'was', 'used', 'to', 'be', 'a', 'good', 'friend', 'of', 'Ryan']

Explanation:

In the example above, we imported the re library to use its functions. We then used its findall() function, which finds all substrings matching the pattern passed as a parameter and returns them in a list.

Here, \w matches any word character, that is, a letter, a digit, or the underscore (_), and + means "one or more occurrences". We therefore used the pattern [\w']+, so the program finds every run of word characters and apostrophes and stops as soon as it meets any other character.
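The apostrophe in the character class matters for possessives and contractions. Here is a small sketch comparing the two patterns on a hypothetical sentence adapted from the example text:

```python
import re

text = "Ryan Cloud's Start-Up took off in the mid-90s."

# \w+ stops at the apostrophe, so "Cloud's" becomes two tokens
word_only = re.findall(r"\w+", text)

# Adding the apostrophe to the character class keeps "Cloud's" together
with_apostrophe = re.findall(r"[\w']+", text)

print(word_only)
print(with_apostrophe)
```

In both cases, hyphens and periods are not in the pattern, so "Start-Up" and "mid-90s" are split and the final period is dropped.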

Now let's look at sentence tokenization with RegEx.

Example 2.2: Sentence tokenization using RegEx in Python

import re

my_text = """The Advertisement was telecasted nationwide, and the product was sold in around 30 states of America. The product became so successful among the people that the production was increased. Two new plant sites were finalized, and the construction was started. Now, The Cloud Enterprise became one of America's biggest firms and the mass producer in all major sectors, from transportation to personal care. Director of The Cloud Enterprise, Ryan Cloud, was now started getting interviewed over his success stories. Many popular magazines were started publishing Critiques about him."""

my_sentences = re.compile('[.!?] ').split(my_text)

print(my_sentences)

Output:

['The Advertisement was telecasted nationwide, and the product was sold in around 30 states of America', 'The product became so successful among the people that the production was increased', 'Two new plant sites were finalized, and the construction was started', "Now, The Cloud Enterprise became one of America's biggest firms and the mass producer in all major sectors, from transportation to personal care", 'Director of The Cloud Enterprise, Ryan Cloud, was now started getting interviewed over his success stories', 'Many popular magazines were started publishing Critiques about him.']

Explanation:

In the example above, we used the compile() function of the re library with the pattern '[.!?] ' (one of the characters ".", "!" or "?" followed by a space), and then called the split() method of the compiled pattern to split the string at that separator. As a result, the program splits the text into sentences whenever it encounters one of these characters followed by a space.
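This simple pattern has a limitation: it treats every period followed by a space as a sentence boundary, including the period of an abbreviation. A small sketch with a hypothetical sentence shows the problem. Sentence tokenizers such as NLTK's Punkt, covered next, are designed to handle abbreviations:

```python
import re

text = "Mr. Smith arrived. He sat down."

# Same pattern as above: split at '.', '!' or '?' followed by a space
sentences = re.compile('[.!?] ').split(text)

# The abbreviation "Mr." is wrongly treated as the end of a sentence
print(sentences)
```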

Tokenization with the Natural Language Toolkit

The Natural Language Toolkit, also known as NLTK, is a library written in Python. NLTK is commonly used for symbolic and statistical natural language processing and works well with text data.

The Natural Language Toolkit (NLTK) is a third-party library that can be installed with the following command in a command shell or terminal:

$ pip install --user -U nltk

To verify the installation, import the nltk library in a program and run it, as shown below:

import nltk

If the program does not raise an error, the library has been installed successfully. Otherwise, repeat the installation procedure described above and read the official documentation for more details.

The Natural Language Toolkit (NLTK) has a module named tokenize. Its functions fall into two main categories: word tokenization and sentence tokenization.

- Word Tokenize: the word_tokenize() method is used to split a string into tokens, or words.

- Sentence Tokenize: the sent_tokenize() method is used to split a string or paragraph into sentences.

Both methods rely on NLTK's Punkt sentence tokenization models, which are installed separately from the library itself. Let's look at an example of each of these two methods:

Example 3.1: Word tokenization using the NLTK library in Python

from nltk.tokenize import word_tokenize

my_text = """The Advertisement was telecasted nationwide, and the product was sold in around 30 states of America. The product became so successful among the people that the production was increased. Two new plant sites were finalized, and the construction was started. Now, The Cloud Enterprise became one of America's biggest firms and the mass producer in all major sectors, from transportation to personal care. Director of The Cloud Enterprise, Ryan Cloud, was now started getting interviewed over his success stories. Many popular magazines were started publishing Critiques about him."""

print(word_tokenize(my_text))

Output:

['The', 'Advertisement', 'was', 'telecasted', 'nationwide', ',', 'and', 'the', 'product', 'was', 'sold', 'in', 'around', '30', 'states', 'of', 'America', '.', 'The', 'product', 'became', 'so', 'successful', 'among', 'the', 'people', 'that', 'the', 'production', 'was', 'increased', '.', 'Two', 'new', 'plant', 'sites', 'were', 'finalized', ',', 'and', 'the', 'construction', 'was', 'started', '.', 'Now', ',', 'The', 'Cloud', 'Enterprise', 'became', 'one', 'of', 'America', "'s", 'biggest', 'firms', 'and', 'the', 'mass', 'producer', 'in', 'all', 'major', 'sectors', ',', 'from', 'transportation', 'to', 'personal', 'care', '.', 'Director', 'of', 'The', 'Cloud', 'Enterprise', ',', 'Ryan', 'Cloud', ',', 'was', 'now', 'started', 'getting', 'interviewed', 'over', 'his', 'success', 'stories', '.', 'Many', 'popular', 'magazines', 'were', 'started', 'publishing', 'Critiques', 'about', 'him', '.']

Explanation:

In the program above, we imported the word_tokenize() method from the tokenize module of the NLTK library. The method split the string into separate tokens and stored them in a list, which we then printed. Note that this method also treats periods and other punctuation marks as separate tokens.

Example 3.2: Sentence tokenization using the NLTK library in Python

from nltk.tokenize import sent_tokenize

my_text = """The Advertisement was telecasted nationwide, and the product was sold in around 30 states of America. The product became so successful among the people that the production was increased. Two new plant sites were finalized, and the construction was started. Now, The Cloud Enterprise became one of America's biggest firms and the mass producer in all major sectors, from transportation to personal care. Director of The Cloud Enterprise, Ryan Cloud, was now started getting interviewed over his success stories. Many popular magazines were started publishing Critiques about him."""

print(sent_tokenize(my_text))

Output:

['The Advertisement was telecasted nationwide, and the product was sold in around 30 states of America.', 'The product became so successful among the people that the production was increased.', 'Two new plant sites were finalized, and the construction was started.', "Now, The Cloud Enterprise became one of America's biggest firms and the mass producer in all major sectors, from transportation to personal care.", 'Director of The Cloud Enterprise, Ryan Cloud, was now started getting interviewed over his success stories.', 'Many popular magazines were started publishing Critiques about him.']

Explanation:

In the program above, we imported the sent_tokenize() method from the tokenize module of the NLTK library. The method split the paragraph into separate sentences and stored them in a list, which we then printed.


To tokenize words and sentences with NLTK, use the “nltk.word_tokenize()” and “nltk.sent_tokenize()” functions. NLTK tokenization splits a large amount of textual data into parts so that the character of the text can be analyzed. Tokenization with NLTK can be used when training machine learning models and when cleaning text for natural language processing. The words and sentences tokenized with NLTK can be turned into a data frame and vectorized. Natural Language Toolkit (NLTK) tokenization is part of a pipeline that includes punctuation cleaning, text cleaning, vectorization of the parsed text data, lemmatization and stemming, and machine learning algorithm training.

The Natural Language Toolkit Python library has a tokenization package called “tokenize”. The “tokenize” package of NLTK provides two main types of tokenization functions.

- “word_tokenize” tokenizes text into words.

- “sent_tokenize” tokenizes text into sentences.

How to Tokenize Words with the Natural Language Toolkit (NLTK)?

Tokenizing words with NLTK means splitting a text into words with the Natural Language Toolkit. To tokenize words with NLTK, follow the steps below.

- Import the “word_tokenize” from the “nltk.tokenize”.

- Load the text into a variable.

- Call the “word_tokenize” function on the variable.

- Read the tokenization result.

Below, you can see a tokenization example with NLTK for a text.

from nltk.tokenize import word_tokenize

text = "Search engine optimization is the process of improving the quality and quantity of website traffic to a website or a web page from search engines. SEO targets unpaid traffic rather than direct traffic or paid traffic."

print(word_tokenize(text))

>>>OUTPUT

['Search', 'engine', 'optimization', 'is', 'the', 'process', 'of', 'improving', 'the', 'quality', 'and', 'quantity', 'of', 'website', 'traffic', 'to', 'a', 'website', 'or', 'a', 'web', 'page', 'from', 'search', 'engines', '.', 'SEO', 'targets', 'unpaid', 'traffic', 'rather', 'than', 'direct', 'traffic', 'or', 'paid', 'traffic', '.']

The explanation of the tokenization example above can be seen below.

- The first line is for importing the “word_tokenize” function.

- The second line of code is to provide text data for tokenization.

- The third line of code prints the output of “word_tokenize”.

What are the advantages of word tokenization with NLTK?

Word tokenization comes in several forms: white space tokenization, dictionary-based tokenization, rule-based tokenization, regular expression tokenization, Penn Treebank tokenization, spaCy tokenization, Moses tokenization, and subword tokenization. All types of word tokenization are part of the text normalization process, and normalizing the text with stemming and lemmatization can improve the accuracy of language understanding algorithms. The benefits of word tokenization with NLTK are listed below.

- Removing stop words from the corpora easily once the text has been tokenized.

- Splitting words into sub-words to understand the text better.

- Disambiguating text is faster and requires less code with NLTK.

- Besides white space tokenization, dictionary-based and rule-based tokenization can be implemented easily.

- Subword methods such as Byte Pair Encoding, WordPiece, the Unigram Language Model, and SentencePiece can be applied to the word tokens produced by NLTK (NLTK itself does not ship these subword tokenizers).

- NLTK has TweetTokenizer for tokenizing tweets that include emojis and other Twitter conventions.

- NLTK's PunktSentenceTokenizer has pre-trained models for sentence tokenization in multiple European languages.

- NLTK has the Multi-Word Expression Tokenizer (MWETokenizer) for merging multi-word expressions such as “in spite of” into a single token.

- NLTK has RegexpTokenizer for tokenizing text based on regular expressions.

How to Tokenize Sentences with the Natural Language Toolkit (NLTK)?

To tokenize sentences with the Natural Language Toolkit, follow the steps below.

- Import the “sent_tokenize” from “nltk.tokenize”.

- Load the text for sentence tokenization into a variable.

- Call “sent_tokenize” on the variable.

- Print the output.

Below, you can see an example of NLTK Tokenization for sentences.

from nltk.tokenize import sent_tokenize

text = "God is Great! I won a lottery."

print(sent_tokenize(text))

Output: ['God is Great!', 'I won a lottery.']

In the code block above, the text is tokenized into sentences. Collecting all of the sentences into a list with NLTK sentence tokenization makes it possible to see which sentence is connected to which, the average number of words per sentence, and the number of unique sentences.
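You can compute the statistics mentioned above from the list that sent_tokenize() returns. This minimal sketch assumes a hypothetical list of already-tokenized sentences, so it runs without the Punkt models, and counts words with a plain whitespace split. sentence_stats is an illustrative helper, not an NLTK function:

```python
from statistics import mean


def sentence_stats(sentences):
    """Return (sentence count, unique sentence count, average words per sentence).

    `sentences` is assumed to be a list like the one sent_tokenize() returns.
    Words are counted with a simple whitespace split, for illustration only.
    """
    word_counts = [len(sentence.split()) for sentence in sentences]
    return len(sentences), len(set(sentences)), mean(word_counts)


# Hypothetical sent_tokenize() output with one repeated sentence
sentences = ["God is Great!", "I won a lottery.", "God is Great!"]

count, unique_count, avg_words = sentence_stats(sentences)
print(count, unique_count, round(avg_words, 2))
```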

What are the advantages of sentence tokenization with NLTK?

The advantages of sentence tokenization with NLTK are listed below.

- NLTK makes it possible to perform text data mining at the sentence level.

- NLTK sentence tokenization makes it possible to compare different text corpora at the sentence level.

- Sentence tokenization with NLTK shows how many sentences are used in different sources of text, such as websites, books, and papers.

- Thanks to NLTK's “sent_tokenize” function, it is possible to see how sentences are connected to each other and through which bridge words.

- With the NLTK sentence tokenizer, sentiment analysis can be performed sentence by sentence.

- Sentence tokenization with NLTK also makes it possible to perform Semantic Role Labeling on each sentence to understand how the sentences are connected to each other.

How to perform Regex Tokenization with NLTK?

Regex tokenization with NLTK performs tokenization based on regex rules. It can be used to extract certain phrase patterns from a corpus. To perform regex tokenization with NLTK, use the “RegexpTokenizer” class (or the “regexp_tokenize()” function) from “nltk.tokenize”. An example of regex tokenization with NLTK is below.

from nltk.tokenize import RegexpTokenizer

# gaps=True: the pattern describes the separators (an escaped "?"), not the tokens

regex_tokenizer = RegexpTokenizer(r'\?', gaps=True)

text = "How to perform Regex Tokenization with NLTK? To perform regex tokenization with NLTK, the regex pattern should be chosen."

regex_tokenization = regex_tokenizer.tokenize(text)

print(regex_tokenization)

OUTPUT >>>

['How to perform Regex Tokenization with NLTK', ' To perform regex tokenization with NLTK, the regex pattern should be chosen.']

The regex tokenization example above shows how to separate a question from the sentence that follows it. By splitting the text at question marks and taking the sentence after each question, it is possible to match questions with their answers, or to extract question formats from a corpus.
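With the default gaps=False, the pattern describes the tokens themselves rather than the separators. The sketch below reuses the pattern and sample string from NLTK's own RegexpTokenizer documentation. It keeps words, dollar amounts, and any other run of non-whitespace characters as tokens:

```python
from nltk.tokenize import RegexpTokenizer

s = "Good muffins cost $3.88\nin New York.  Please buy me\ntwo of them.\n\nThanks."

# Alternatives are tried left to right: a word, a dollar amount, or any other non-space run
tokenizer = RegexpTokenizer(r"\w+|\$[\d\.]+|\S+")
tokens = tokenizer.tokenize(s)
print(tokens)
```

Unlike word_tokenize(), this keeps "$3.88" as a single token, which shows how the choice of pattern decides what counts as a token.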

How to perform Rule-based Tokenization with NLTK?

Rule-based tokenization is tokenization based on rules derived from certain conditions. NLTK includes several rule-based tokenizers, such as TweetTokenizer for tweets, MWETokenizer for multi-word expressions, and TreebankWordTokenizer for the rules of the English language. Rule-based tokenization helps to tokenize text according to the conditions that best fit the nature of the textual data.

An example of rule-based tokenization with MWETokenizer for multi-word expressions can be seen below.

from nltk.tokenize import MWETokenizer, word_tokenize

sentence = "I have sent Steven Nissen to the new reserch center for the nutritional value of the coffee. This sentence will be tokenized while Mr. Steven Nissen is on the journey. The #truth will be learnt. And, it's will be well known thanks to this tokenization example."

tokenizer = MWETokenizer()

tokenizer.add_mwe(("Steven", "Nissen"))

result = tokenizer.tokenize(word_tokenize(sentence))

result

OUTPUT >>>

['I',

'have',

'sent',

'Steven_Nissen',

'to',

'the',

'new',

'reserch',

'center',

'for',

'the',

'nutritional',

'value',

'of',

'the',

'coffee',

'.',

'This',

'sentence',

'will',

'be',

'tokenized',

'while',

'Mr.',

'Steven_Nissen',

'is',

'on',

'the',

'journey',

'.',

'The',

'#',

'truth',

'will',

'be',

'learnt',

'.',

'And',

',',

'it',

"'s",

'will',

'be',

'well',

'known',

'thanks',

'to',

'this',

'tokenization',

'example',

'.']

An example of rule-based tokenization with TreebankWordTokenizer for English text can be seen below.

from nltk.tokenize import TreebankWordTokenizer

sentence = "I have sent Steven Nissen to the new reserch center for the nutritional value of the coffee. This sentence will be tokenized while Mr. Steven Nissen is on the journey. The #truth will be learnt. And, it's will be well known thanks to this tokenization example."

tokenizer = TreebankWordTokenizer()

result = tokenizer.tokenize(sentence)

result

OUTPUT >>>

['I',

'have',

'sent',

'Steven',

'Nissen',

'to',

'the',

'new',

'reserch',

'center',

'for',

'the',

'nutritional',

'value',

'of',

'the',

'coffee.',

'This',

'sentence',

'will',

'be',

'tokenized',

'while',

'Mr.',

'Steven',

'Nissen',

'is',

'on',

'the',

'journey.',

'The',

'#',

'truth',

'will',

'be',

'learnt.',

'And',

',',

'it',

"'s",

'will',

'be',

'well',

'known',

'thanks',

'to',

'this',

'tokenization',

'example',

'.']

An example of rule-based tokenization with TweetTokenizer for tweets can be seen below.

from nltk.tokenize import TweetTokenizer

sentence = "I have sent Steven Nissen to the new reserch center for the nutritional value of the coffee. This sentence will be tokenized while Mr. Steven Nissen is on the journey. The #truth will be learnt. And, it's will be well known thanks to this tokenization example."

tokenizer = TweetTokenizer()

result = tokenizer.tokenize(sentence)

result

OUTPUT>>>

['I',

'have',

'sent',

'Steven',

'Nissen',

'to',

'the',

'new',

'reserch',

'center',

'for',

'the',

'nutritional',

'value',

'of',

'the',

'coffee',

'.',

'This',

'sentence',

'will',

'be',

'tokenized',

'while',

'Mr',

'.',

'Steven',

'Nissen',

'is',

'on',

'the',

'journey',

'.',

'The',

'#truth',

'will',

'be',

'learnt',

'.',

'And',

',',

"it's",

'will',

'be',

'well',

'known',

'thanks',

'to',

'this',

'tokenization',

'example',

'.']

The most standard rule-based type of word tokenization is white space tokenization, which simply splits the text at the spaces between words. It can be performed with the “split(" ")” method, as below.

sentence = "I have sent Steven Nissen to the new reserch center for the nutritional value of the coffee. This sentence will be tokenized while Mr. Steven Nissen is on the journey. The #truth will be learnt. And, it's will be well known thanks to this tokenization example."

result = sentence.split(" ")

result

OUTPUT >>>

['I',

'have',

'sent',

'Steven',

'Nissen',

'to',

'the',

'new',

'reserch',

'center',

'for',

'the',

'nutritional',

'value',

'of',

'the',

'coffee.',

'This',

'sentence',

'will',

'be',

'tokenized',

'while',

'Mr.',

'Steven',

'Nissen',

'is',

'on',

'the',

'journey.',

'The',

'#truth',

'will',

'be',

'learnt.',

'And,',

"it's",

'will',

'be',

'well',

'known',

'thanks',

'to',

'this',

'tokenization',

'example.']

NLTK also provides other tokenization methods, such as PunktSentenceTokenizer for detecting sentence boundaries and punctuation-based tokenization for splitting punctuation from words, along with tokenizers that handle multi-word expressions properly.
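One example of punctuation-based tokenization is NLTK's WordPunctTokenizer, the class behind the wordpunct_tokenize() function. It splits text into runs of word characters and runs of punctuation, using the pattern \w+|[^\w\s]+. The sample sentence is a shortened, hypothetical variant of the one used above. Compare how "#truth" and "it's" are handled here with the outputs of the other tokenizers:

```python
from nltk.tokenize import WordPunctTokenizer

text = "The #truth will be learnt. And, it's known."

# Every run of punctuation becomes its own token, including the apostrophe in "it's"
tokens = WordPunctTokenizer().tokenize(text)
print(tokens)
```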

How to use Lemmatization with NLTK Tokenization?

To use lemmatization with NLTK tokenization, use “nltk.stem.wordnet.WordNetLemmatizer”, which lemmatizes the words within the text. Lemmatization is the process of turning a word into its dictionary form (its lemma). Unlike stemming, which strips suffixes with heuristic rules, lemmatization relies on a dictionary (WordNet) to map inflected forms to a real base word. NLTK lemmatization is useful for seeing a word's context and for understanding which words are actually the same during word tokenization. Below is an example of word tokenization and lemmatization with NLTK.

from collections import Counter

import pandas as pd

from nltk.stem.wordnet import WordNetLemmatizer

# Requires the WordNet data, e.g. nltk.download("wordnet")

lemmatize = WordNetLemmatizer()

lemmatized_words = []

# `tokens` is assumed to be the list of words produced earlier with word_tokenize()
for w in tokens:
    rootWord = lemmatize.lemmatize(w)
    lemmatized_words.append(rootWord)

counts_lemmatized_words = Counter(lemmatized_words)

df_tokenized_lemmatized_words = pd.DataFrame.from_dict(counts_lemmatized_words, orient="index").reset_index()

df_tokenized_lemmatized_words.sort_values(by=0, ascending=False, inplace=True)

df_tokenized_lemmatized_words[:50]

The NLTK tokenization and lemmatization code block is explained below.

- WordNetLemmatizer is imported from “nltk.stem.wordnet”.

- An instance of it is assigned to the variable “lemmatize”.

- An empty list is created for the “lemmatized_words”.

- A for loop lemmatizes every word within the words tokenized with NLTK.

- The lemmatized words are appended to the “lemmatized_words” list.

- A Counter object counts how often each lemma occurs.

- A data frame is created from the lemma counts, sorted, and displayed.

You can see the result of the NLTK tokenization and lemmatization example below.

The counts produced by NLTK tokenization and lemmatization will differ from those produced by NLTK tokenization and stemming. These differences reflect how differently the two methods normalize words, which matters for the statistical analysis of tokenized textual data.

How to use Stemming with NLTK Tokenization?

To use stemming with NLTK tokenization, import “PorterStemmer” from “nltk.stem”. Stemming reduces words to their stems by stripping suffixes with rule-based heuristics, so a stem is not always a dictionary word (in the output below, “Developer” becomes “develop” but “Theoretical” becomes “theoret”). Since many words carry suffixes, stemming can make NLTK word tokenization analysis more useful: words that come from the same root are counted as the same token. Seeing which words are used once their suffixes are removed gives a more comprehensive picture of the statistical counts of the concepts and phrases within a text. An example of stemming with NLTK tokenization is below.

from collections import Counter

import pandas as pd

from nltk.stem import PorterStemmer

ps = PorterStemmer()

stemmed_words = []

for w in tokens:  # tokens: the word_tokenize() output of the website content

    rootWord = ps.stem(w)

    stemmed_words.append(rootWord)

stemmed_words

OUTPUT>>>

['think', 'like', 'seo', ',', 'code', 'like', 'develop', 'python', 'seo', 'techseo', 'theoret', 'seo', 'on-pag', 'seo', 'pagespe', 'UX', 'market', 'think', 'like', 'seo', ',', 'code', 'like', 'develop', 'main', ..., 'in', 'bulk', 'with', 'python', '.', ...]

counts_stemmed_words = Counter(stemmed_words)

df_tokenized_stemmed_words = pd.DataFrame.from_dict(counts_stemmed_words, orient="index").reset_index()

df_tokenized_stemmed_words.sort_values(by=0, ascending=False)  # returns a sorted copy; pass inplace=True to sort the data frame itself

df_tokenized_stemmed_words

| | index | 0 |
|---|---|---|
| 0 | think | 529 |
| 1 | like | 1059 |
| 2 | seo | 5389 |
| 3 | , | 22564 |
| 4 | code | 1128 |
| … | … | … |
| 10342 | pixel. | 1 |
| 10343 | success. | 1 |
| 10344 | pages. | 1 |
| 10345 | free… | 1 |
| 10346 | almost. | 1 |

10347 rows × 2 columns
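To see why stemming changes the counts, here is a minimal sketch on a few hypothetical words: PorterStemmer maps several surface forms to one stem, so a Counter over the stems adds them together. The word list is made up for illustration and is not taken from the website data.

```python
from collections import Counter

from nltk.stem import PorterStemmer

ps = PorterStemmer()

# Hypothetical sample: several surface forms of two root words.
words = ["connect", "connected", "connecting", "connection", "connections",
         "running", "runs"]

# Stem every word; forms that share a stem are then counted together.
stems = [ps.stem(w) for w in words]
stem_counts = Counter(stems)

print(stems)
print(stem_counts)
```

In recent NLTK versions, stem() also lowercases its input by default, which is consistent with the lowercase stems in the output above.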

How to Tokenize Content of a Website via NLTK?

To tokenize the content of a website at the word and sentence level with NLTK, follow the steps below.

- Crawling the website’s content.

- Extracting the website’s content from the crawl output.

- Using the “word_tokenize” of NLTK for word tokenization.

- Using “sent_tokenize” of NLTK for sentence tokenization.

Interpreting and comparing the tokenization output of a website helps with the overall evaluation of its content. Below, you will see an example of tokenizing a website's content. To perform NLTK tokenization on a website's content, the Python libraries below are used.

- Advertools

- Pandas

- NLTK

- Collections

- String

Below, you will see the importing process of the necessary libraries and functions for NLTK tokenization from Python for SEO.

import advertools as adv

import pandas as pd

from nltk.tokenize import word_tokenize

from nltk.tokenize import sent_tokenize

from collections import Counter

from nltk.tokenize import RegexpTokenizer

import string

adv.crawl("https://www.holisticseo.digital", "output.jl", custom_settings={"LOG_FILE":"output.log", "DOWNLOAD_DELAY":0.5}, follow_links=True)

df = pd.read_json("output.jl", lines=True)

for i in df.columns:

if i.__contains__("text"):

print(i)

word_tokenize(df["body_text"].explode())

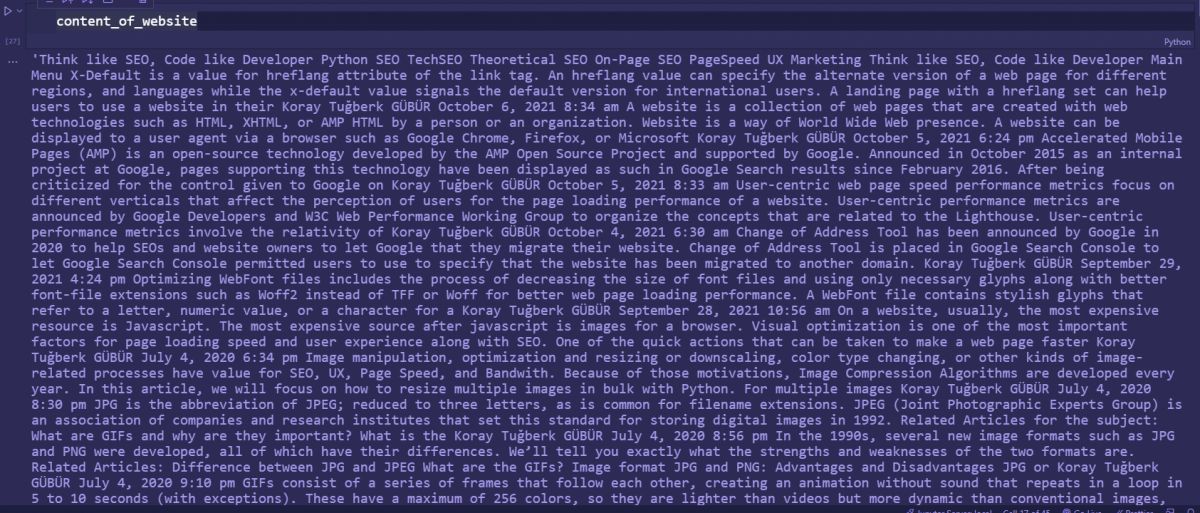

content_of_website = df["body_text"].str.split().explode().str.cat(sep=" ")

tokens = word_tokenize(content_of_website)

tokenized_counts = Counter(tokens)

df_tokenized = pd.DataFrame.from_dict(tokenized_counts, orient="index").reset_index()

df_tokenized.nunique()

df_tokenizedTo crawl the website’s content to perform an NLTK word and sentence tokenization, the Advertools’ “crawl” function will be used to take all of the content of the website into a “jl” extension file. Below, you will see an example of crawling a website with Python.

adv.crawl("https://www.holisticseo.digital", "output.jl", custom_settings={"LOG_FILE":"output.log", "DOWNLOAD_DELAY":0.5}, follow_links=True)

df = pd.read_json("output.jl", lines=True)

The first line starts the crawl, and the second line reads the resulting “jl” file into a data frame. Below, you can find the crawl output (“output.jl”) produced by the code block.

In the third step, the column that holds the website's content has to be found within the data frame. To do that, a for loop prints every column name that contains “text” (such as “body_text”), using the “__contains__” string method of Python.

for i in df.columns:

    if i.__contains__("text"):

        print(i)

At the next step of NLTK tokenization for website content, the “str.cat” method of Pandas unites all of the content pieces across the different web pages into one string.

content_of_website = df["body_text"].str.split().explode().str.cat(sep=" ")

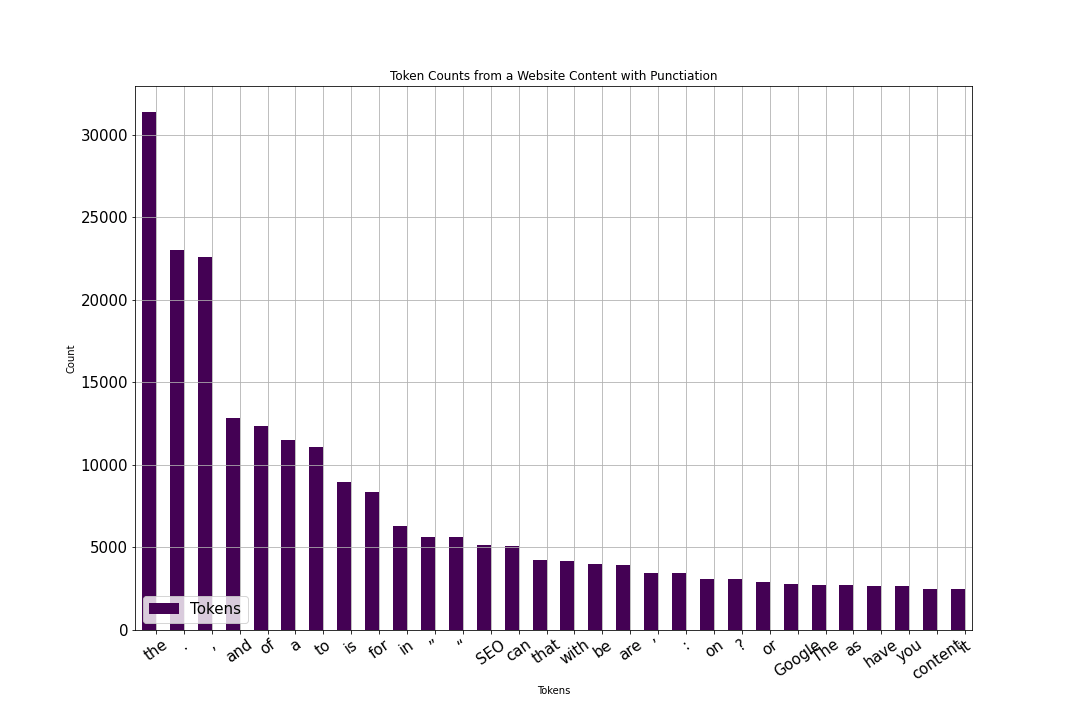

The “content_of_website” variable holds the united content corpus of the website; the “sep” parameter with a space value keeps the words of neighboring pages apart. Tokenizing this single string once, instead of tokenizing every web page's content separately and then uniting the tokenized outputs, keeps the code simpler and is meant to save time. At the next step, “nltk.word_tokenize” is applied and its output is assigned to a variable.

tokens = word_tokenize(content_of_website)

To see the tokenized words and their counts, the “Counter” class from the “collections” module can be used as below.

tokenized_counts = Counter(tokens)

df_tokenized = pd.DataFrame.from_dict(tokenized_counts, orient="index").reset_index()

“tokenized_counts = Counter(tokens)” counts how many times each token occurs. In the second line, the “from_dict” and “reset_index” methods of Pandas turn those counts into a data frame with an “index” column for the tokens and a “0” column for their counts. Thanks to NLTK tokenization, the unique word count of a website can be found as below.

df_tokenized.nunique()

OUTPUT>>>

index 18056

0 471

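dtype: int64

The content of “holisticseo.digital” contains 18056 unique tokens (the “index” column); this figure also includes punctuation marks, because they are tokens too. The 471 in the “0” column is the number of distinct count values. These tokens can be seen below.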

df_tokenized.sort_values(by=0, ascending=False, inplace=True)

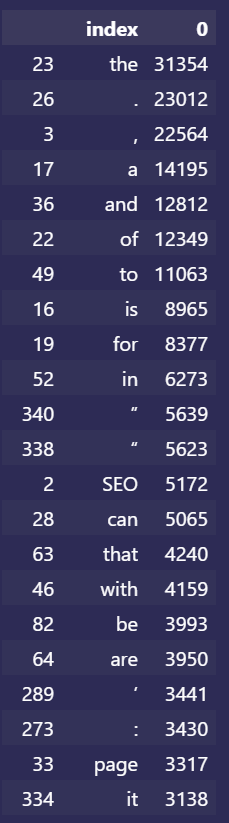

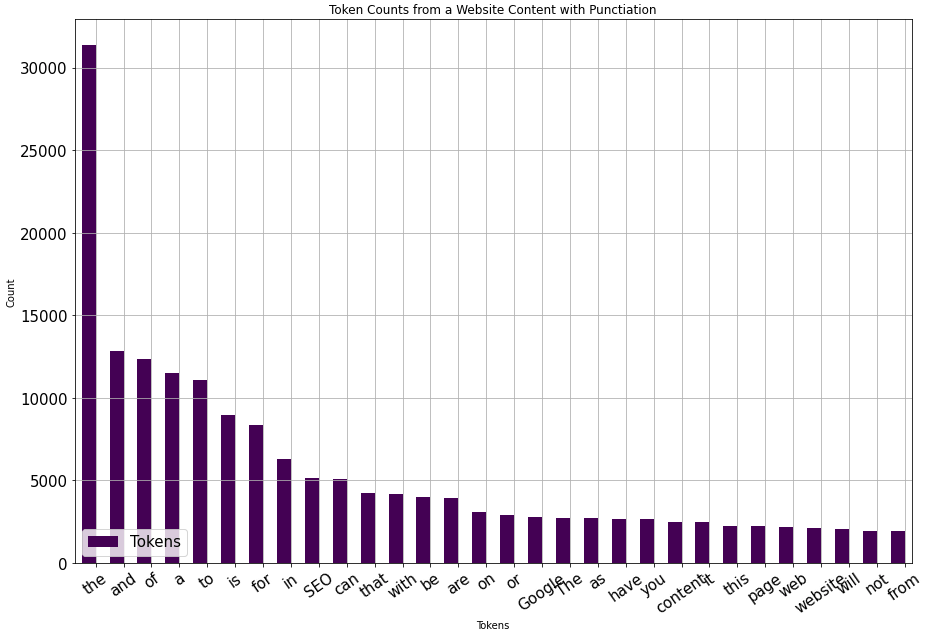

df_tokenized

Below, you can see the word tokenization output as an image.

If the image of the word tokenization output is not clear, you can check the table of the word tokenization output below.

| | index | 0 |
|---|---|---|
| 23 | the | 31354 |
| 26 | . | 23012 |
| 3 | , | 22564 |
| 36 | and | 12812 |
| 22 | of | 12349 |
| … | … | … |
| 14747 | NEL | 1 |
| 14748 | CSE | 1 |
| 14749 | recipe-related | 1 |
| 17753 | Plan | 1 |
| 18055 | almost. | 1 |

18056 rows × 2 columns
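The join, tokenize, count, and “nunique” steps above can be tried on a tiny, self-contained example. In this sketch, the two-row data frame is a hypothetical stand-in for the crawl output, and “wordpunct_tokenize” replaces “word_tokenize” so that no Punkt model is needed. With this regular-expression tokenizer, tokenizing the joined text gives exactly the same tokens as tokenizing each page and concatenating the results; with “word_tokenize”, which also splits sentences, page boundaries may occasionally be handled differently.

```python
from collections import Counter

import pandas as pd
from nltk.tokenize import wordpunct_tokenize

# Hypothetical stand-in for the crawl output: one row per page.
df = pd.DataFrame({"body_text": [
    "SEO is content.  Content is SEO!",
    "Tokenize the content.",
]})

# Same join as in the article: split on whitespace, one word per row,
# then concatenate everything with single spaces.
content_of_website = df["body_text"].str.split().explode().str.cat(sep=" ")

# Tokenize once on the joined text, and per page for comparison.
tokens = wordpunct_tokenize(content_of_website)
per_page = [t for text in df["body_text"] for t in wordpunct_tokenize(text)]

# Count the tokens and turn the Counter into an "index" / 0 data frame.
tokenized_counts = Counter(tokens)
df_tokenized = pd.DataFrame.from_dict(tokenized_counts, orient="index").reset_index()
df_tokenized.sort_values(by=0, ascending=False, inplace=True)

print(content_of_website)
print(df_tokenized)
# Distinct values per column: unique tokens, and distinct count values.
print(df_tokenized.nunique())
```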

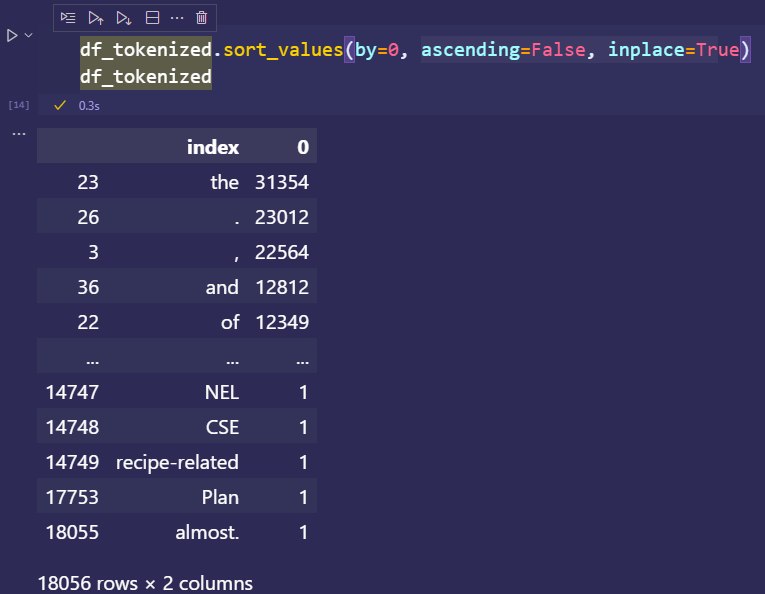

After NLTK word tokenization of the website content, we see that words from the header and footer appear frequently, along with the stop words. The counted word tokens can be visualized as below.

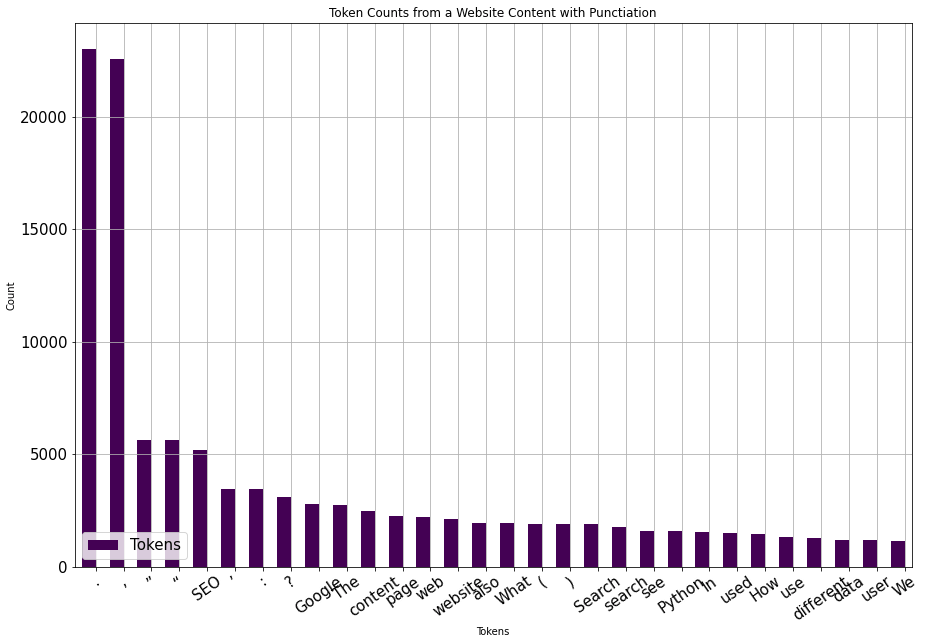

df_tokenized.sort_values(by=0, ascending=False, inplace=True)

df_tokenized[:30].plot(kind="bar",x="index", orientation="vertical", figsize=(15,10), xlabel="Tokens", ylabel="Count", colormap="viridis", table=False, grid=True, fontsize=15, rot=35, position=1, title="Token Counts from a Website Content with Punctuation", legend=True).legend(["Tokens"], loc="lower left", prop={"size":15})

Below, you can see the output of the code block for visualizing the word tokenization output.

To save the word tokenization output’s barplot as a PNG, you can use the code block below.

df_tokenized[:30].plot(kind="bar",x="index", orientation="vertical", figsize=(15,10), xlabel="Tokens", ylabel="Count", colormap="viridis", table=False, grid=True, fontsize=15, rot=35, position=1, title="Token Counts from a Website Content with Punctuation", legend=True).legend(["Tokens"], loc="lower left", prop={"size":15}).figure.savefig("word-tokenization-2.png")

The word “the” appears more than 30000 times, and punctuation marks are also among the most frequent tokens. Apart from punctuation characters and stop words, “content” and “Google” appear to be the most frequent words. To gain more insight from word tokenization, a “TF-IDF Analysis with Python” can help to understand a word's weight within a corpus. For better SEO and content analysis insights via NLTK tokenization, the stop words should be removed.

How to Filter out the Stop Words for Tokenization with NLTK?

To remove the stop words from the NLTK tokenization output, the tokens should be filtered with a list comprehension or a regular for loop. An example of NLTK tokenization with the stop words removed can be seen below.

from nltk.corpus import stopwords  # requires nltk.download("stopwords")

stop_words_english = set(stopwords.words("english"))

df_tokenized["filtered_tokens"] = df_tokenized["index"].where(~df_tokenized["index"].str.lower().isin(stop_words_english))

To filter out the stop words after word tokenization, text-cleaning methods should be used. The “stopwords.words("english")” method of NLTK returns the English stop-word list. In the code block above, the first line assigns the English stop words to the “stop_words_english” set. The second line creates a new “filtered_tokens” column in the “df_tokenized” data frame: every token whose lowercase form is in the stop-word set is replaced with NaN, and every other token stays on the same row as its count. Building the column from a boolean mask, rather than from a new pd.Series made from a list, matters here because “df_tokenized” was sorted in place, so its index labels are no longer in 0, 1, 2 order and a list-based Series would be aligned to the wrong rows. The “filtered_tokens” column therefore doesn't include any of the stop words.
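A minimal sketch of mask-based stop-word filtering on a token-count frame. The four-row frame and the three-word stop-word set are hypothetical, chosen so that no NLTK stopwords download is needed; the non-sequential index mimics a frame that has been sorted in place.

```python
import pandas as pd

# Hypothetical token-count frame shaped like df_tokenized after an in-place
# sort: the index labels are no longer in 0, 1, 2, ... order.
df_tokenized = pd.DataFrame(
    {"index": ["the", "SEO", "and", "content"], 0: [31, 5, 12, 2]},
    index=[23, 2, 36, 280],
)

# Hypothetical stop-word subset (NLTK's English list is all lowercase).
stop_words_english = {"the", "and", "of"}

# The mask is computed row by row, so it shares df_tokenized's index labels.
is_stop_word = df_tokenized["index"].str.lower().isin(stop_words_english)

# Keep only the rows whose token is not a stop word, together with their counts.
df_without_stop_words = df_tokenized[~is_stop_word]
print(df_without_stop_words)
```

Each kept token stays next to its own count because the mask and the frame share the same index labels.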

How to Count Tokenized Words with NLTK without Stop Words?

To count the tokenized words with NLTK without the stop words, a list comprehension that removes the stop words should be applied to the tokenized output. Below, you will see a specific example for this NLTK tokenization tutorial.

To count the tokenized words with NLTK without the English stop words, the “Counter” class is applied to the “tokens_without_stop_words” list, which is created with a list comprehension that keeps every word of “tokens” that is not in “stopwords.words("english")”.

Below, you can see a code block to count the tokenized words and their output.

stop_words_english = set(stopwords.words("english"))

tokens_without_stop_words = [word for word in tokens if word not in stop_words_english]

tokenized_counts_without_stop_words = Counter(tokens_without_stop_words)

tokenized_counts_without_stop_words

OUTPUT>>>

Counter({'Think': 381, 'like': 989, 'SEO': 5172, ',': 22564, 'Code': 467, 'Developer': 405, 'Python': 1583, 'TechSEO': 733, 'Theoretical': 700, 'On-Page': 670, 'PageSpeed': 645, 'UX': 699, 'Marketing': 1086, 'Main': 231, 'Menu': 192, 'X-Default': 232, 'value': 370, 'hreflang': 219, 'attribute': 178, 'link': 509, 'tag': 314, '.': 23012, 'An': 198, 'specify': 42, 'alternate': 109, ..., 'plan': 6, 'reward': 3, 'high-quality': 34, 'due': 82, 'back': 49, ...})

The next step is creating a data frame from the Counter object via the “from_dict” method of “pd.DataFrame”.

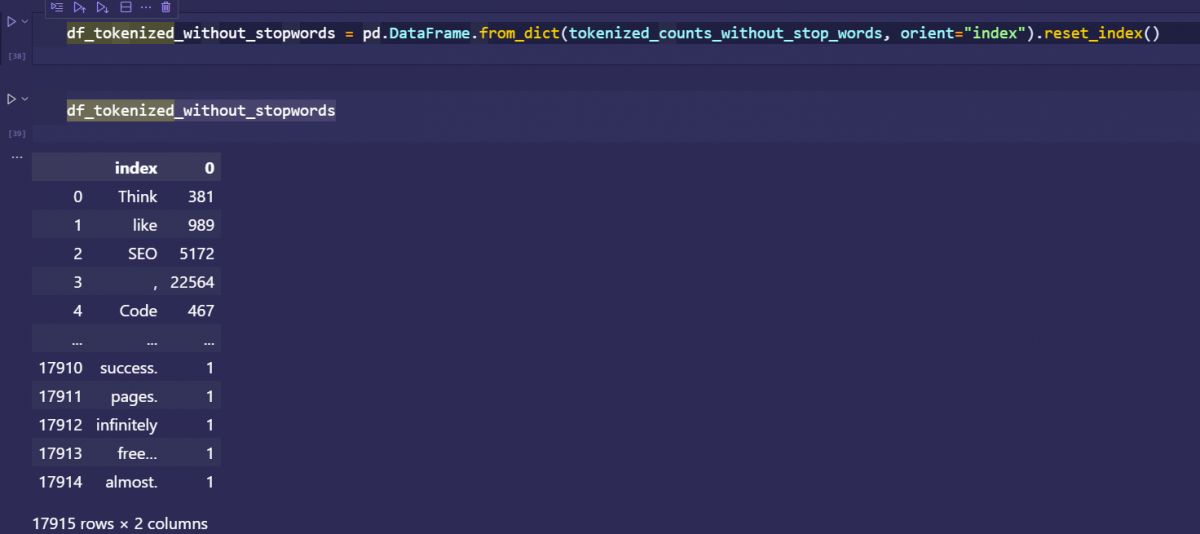

df_tokenized_without_stopwords = pd.DataFrame.from_dict(tokenized_counts_without_stop_words, orient="index").reset_index()

df_tokenized_without_stopwords

Below, you can see the output.

Below, you can see the table output of the tokenization with NLTK without stop words in English.

| | index | 0 |
|---|---|---|
| 0 | Think | 381 |
| 1 | like | 989 |
| 2 | SEO | 5172 |
| 3 | , | 22564 |
| 4 | Code | 467 |
| … | … | … |
| 17910 | success. | 1 |
| 17911 | pages. | 1 |
| 17912 | infinitely | 1 |
| 17913 | free… | 1 |
| 17914 | almost. | 1 |

17915 rows × 2 columns

Even after the stop words are removed, the punctuation marks are still there. To clean the textual data completely for a healthier word tokenization process with NLTK, the punctuation should be removed as well. Below, you will see the sorted version of the NLTK word tokenization output without stop words.

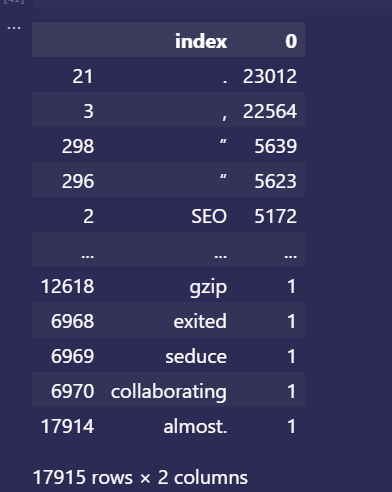

df_tokenized_without_stopwords.sort_values(by=0, ascending=False, inplace=True)

df_tokenized_without_stopwords

You can see the output of word tokenization with NLTK as an image.

The table output of the word tokenization with NLTK without stop words and sorted values is below.

| | index | 0 |
|---|---|---|
| 21 | . | 23012 |
| 3 | , | 22564 |
| 298 | ” | 5639 |
| 296 | “ | 5623 |
| 2 | SEO | 5172 |
| … | … | … |
| 12618 | gzip | 1 |
| 6968 | exited | 1 |
| 6969 | seduce | 1 |
| 6970 | collaborating | 1 |
| 17914 | almost. | 1 |

17915 rows × 2 columns

How to visualize the Word Tokenization with NLTK without the Stop Words?

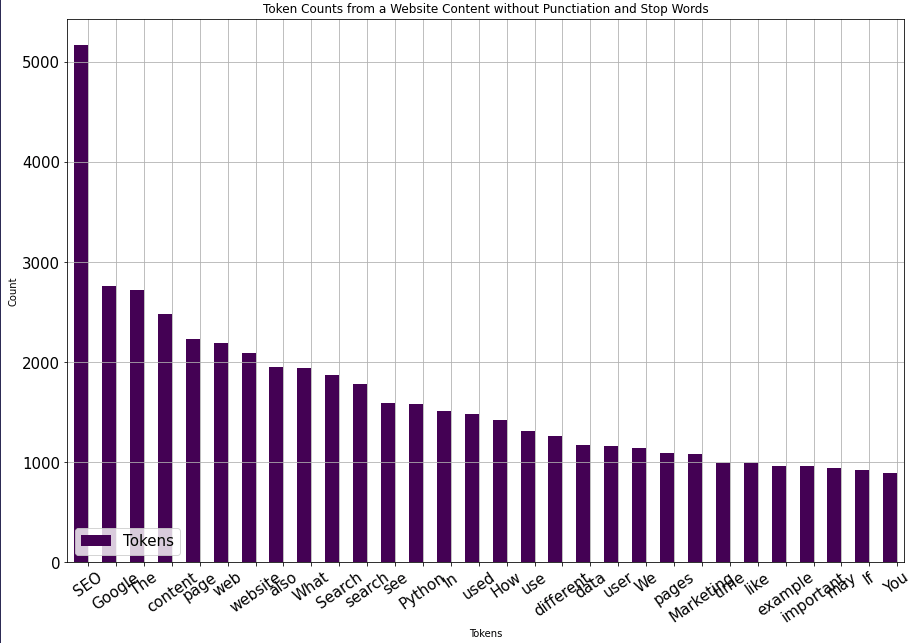

To visualize the NLTK Word Tokenization without the stop words, the “plot” method of Pandas Python Library should be used. Below, you can see an example visualization of the word tokenization with NLTK without stop words.

df_tokenized_without_stopwords[:30].plot(kind="bar",x="index", orientation="vertical", figsize=(15,10), xlabel="Tokens", ylabel="Count", colormap="viridis", table=False, grid=True, fontsize=15, rot=35, position=1, title="Token Counts from a Website Content without Stop Words", legend=True).legend(["Tokens"], loc="lower left", prop={"size":15})

You can see the output as an image below.

Once the stop words are removed, the punctuation marks dominate the visualization of the NLTK word tokenization output even more clearly.

How to Calculate the Effect of the Stop Words on the Length of the Corpus?

The corpus length is the total word count of the textual data. To calculate the effect of the stop words on the length of the corpus, subtract the number of tokens left after stop-word removal from the total token count. Below, you can see an example of this calculation.

stop_words_english = set(stopwords.words("english"))

tokens_without_stop_words = [word for word in tokens if word not in stop_words_english]

print(len(tokens))  # total number of tokens in the corpus

print(len(tokens_without_stop_words))  # tokens left after removing the stop words

len(tokens) - len(tokens_without_stop_words)  # number of stop-word tokens removed

The difference between the two lengths is the number of stop-word tokens, which shows how much of the corpus the stop words make up. The website's content also has 18056 unique tokens, as the “nunique()” output above shows. The unique word count can hint at a website's potential query coverage, since every distinct word is another possible signal of relevance to a topic or concept. Every unique word and n-gram may give a better chance of being relevant to a concept or a phrase typed into the search bar.
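A minimal worked example of the calculation on a hypothetical ten-token list with a hypothetical stop-word subset, so the arithmetic can be checked by hand. The filter here lowercases each token before the lookup; the article's own filter is case-sensitive, which is why tokens such as “An” remain in its output.

```python
# Hypothetical token list and stop-word subset, for illustration only.
tokens = ["The", "page", "is", "about", "the", "SEO", "of", "a", "site", "."]
stop_words_english = {"the", "is", "about", "of", "a"}

# Case-insensitive filter: "The" and "the" are both treated as stop words.
tokens_without_stop_words = [w for w in tokens if w.lower() not in stop_words_english]

total = len(tokens)                       # corpus length in tokens
remaining = len(tokens_without_stop_words)
removed = total - remaining               # stop-word tokens
share = removed / total                   # stop words' share of the corpus

print(total, remaining, removed, f"{share:.0%}")
```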

How to Remove the Punctuation from Word Tokenization with NLTK?

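To remove the punctuation from NLTK word tokenization output, use the “isalnum()” string method in a list comprehension. In this NLTK word tokenization tutorial, the tokens for which “isalnum()” returns True are collected into the “content_of_website_removed_punct” list, as below. Note that “isalnum()” also drops tokens such as “On-Page” or “X-Default”, because the hyphen is not an alphanumeric character.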

content_of_website_removed_punct = [word for word in tokens if word.isalnum()]

content_of_website_removed_punct

OUTPUT >>>

['Think', 'like', 'SEO', 'Code', 'like', 'Developer', 'Python', 'SEO', 'TechSEO', 'Theoretical', 'SEO', 'SEO', 'PageSpeed', 'UX', 'Marketing', 'Think', 'like', 'SEO', 'Code', 'like', 'Developer', 'Main', 'Menu', 'is', 'a', ..., 'server', 'needs', 'to', 'return', '304', ...]

As you can see, all of the punctuation tokens are removed from the words tokenized with NLTK, while stop words such as “is” and “a” are still present. The next step is counting the tokens with the Counter object and creating a data frame, so that the NLTK tokenization output can be used for analysis and machine learning.

content_of_website_removed_punct_counts = Counter(content_of_website_removed_punct)

content_of_website_removed_punct_counts

OUTPUT >>>

Counter({'Think': 381, 'like': 989, 'SEO': 5172, 'Code': 467, 'Developer': 405, 'Python': 1583, 'TechSEO': 733, 'Theoretical': 700, 'PageSpeed': 645, 'UX': 699, 'Marketing': 1086, 'Main': 231, 'Menu': 192, 'is': 8965, 'a': 11483, 'value': 370, 'for': 8377, 'hreflang': 219, 'attribute': 178, 'of': 12349, 'the': 31354, 'link': 509, 'tag': 314, 'An': 198, 'can': 5065, ..., 'natural': 56, 'Due': 20, 'inaccuracies': 1, 'calculation': 63, 'always': 241, ...})

The Counter object has been created for the NLTK word tokenization output without punctuation. Below, you will see how to create a data frame with “from_dict” from the result of the NLTK word tokenization without punctuation.

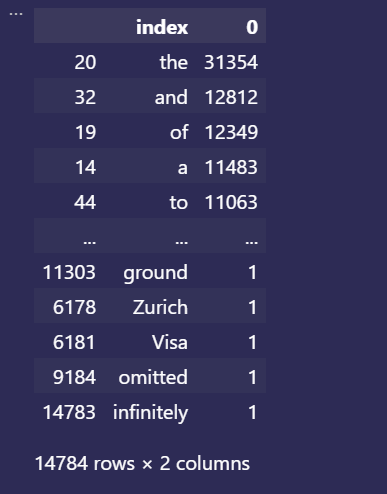

content_of_website_removed_punct_counts_df = pd.DataFrame.from_dict(content_of_website_removed_punct_counts, orient="index").reset_index()

content_of_website_removed_punct_counts_df.sort_values(by=0, ascending=False, inplace=True)

content_of_website_removed_punct_counts_df

Below, you can see the image output of the NLTK word tokenization with the punctuation removed.

Below, you can see the table output of the NLTK word tokenization by removing the punctuations.

| | index | 0 |
|---|---|---|
| 20 | the | 31354 |
| 32 | and | 12812 |
| 19 | of | 12349 |
| 14 | a | 11483 |
| 44 | to | 11063 |
| … | … | … |
| 11303 | ground | 1 |
| 6178 | Zurich | 1 |
| 6181 | Visa | 1 |
| 9184 | omitted | 1 |
| 14783 | infinitely | 1 |

14784 rows × 2 columns
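Because “isalnum()” rejects any token that contains a non-alphanumeric character, it removes hyphenated words and decimals together with the punctuation. A minimal sketch of one alternative, keeping a token if it contains at least one letter or digit, on a hypothetical token list modeled on the output above:

```python
# Hypothetical tokens resembling the word_tokenize output above.
tokens = ["Think", ",", "On-Page", "SEO", "“", "3.88", "X-Default", "."]

# isalnum() is False as soon as one character is not a letter or digit,
# so "On-Page", "3.88", and "X-Default" are dropped with the punctuation.
alnum_only = [t for t in tokens if t.isalnum()]

# Alternative: drop a token only if it has no letter or digit at all.
has_alnum = [t for t in tokens if any(ch.isalnum() for ch in t)]

print(alnum_only)
print(has_alnum)
```

Which rule is better depends on the analysis: the second keeps compound terms such as “On-Page” in the counts.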

How to visualize the NLTK Word Tokenization result without punctuation?

To visualize the NLTK word tokenization result without punctuation from a data frame, use the “plot” method of Pandas. An example visualization of the NLTK word tokenization without punctuation can be seen below.

content_of_website_removed_punct_counts_df[:30].plot(kind="bar",x="index", orientation="vertical", figsize=(15,10), xlabel="Tokens", ylabel="Count", colormap="viridis", table=False, grid=True, fontsize=15, rot=35, position=1, title="Token Counts from a Website Content without Punctuation", legend=True).legend(["Tokens"], loc="lower left", prop={"size":15})

The output of the visualization of the NLTK word tokenization result without punctuation is below.

How to Remove stop words and punctuations from text data for a better NLTK Word Tokenization?

To remove the stop words and punctuation for a cleaner NLTK word tokenization result, use a list comprehension with conditions: one condition drops the stop words and another keeps only alphanumeric tokens, so both cleaning steps happen in a single pass over the tokens. An example of removing punctuation and stop words can be seen below.

stop_words_english = set(stopwords.words("english"))  # build the set once instead of once per token

content_of_website_filtered_stopwords_and_punctuation = [w for w in tokens if w not in stop_words_english and w.isalnum()]

content_of_website_filtered_stopwords_and_punctuation_counts = Counter(content_of_website_filtered_stopwords_and_punctuation)

content_of_website_filtered_stopwords_and_punctuation_counts_df = pd.DataFrame.from_dict(content_of_website_filtered_stopwords_and_punctuation_counts, orient="index").reset_index()

content_of_website_filtered_stopwords_and_punctuation_counts_df.sort_values(by=0, ascending=False, inplace=True)

content_of_website_filtered_stopwords_and_punctuation_counts_df[:30].plot(kind="bar",x="index", orientation="vertical", figsize=(15,10), xlabel="Tokens", ylabel="Count", colormap="viridis", table=False, grid=True, fontsize=15, rot=35, position=1, title="Token Counts from a Website Content without Stop Words and Punctuation", legend=True).legend(["Tokens"], loc="lower left", prop={"size":15})

The explanation of the code block that removes the punctuation and the English stop words from the words tokenized with NLTK is below.

- Remove the stop words and the punctuation via “stopwords.words("english")” and “isalnum()”.

- Count the remaining words with the “Counter” class from the built-in Collections module.

- Create the data frame with the “from_dict” method of “pd.DataFrame” and the orient="index" parameter.

- Sort the values from high to low.

- Use the plot method of the Pandas Python library for the visualization with the “kind”, “x”, “orientation”, “figsize”, “xlabel”, “ylabel”, “colormap”, “table”, “grid”, “fontsize”, “rot”, “position”, “title”, and “legend” parameters.

The output of the removal of the punctuation and the stop words from the tokenized words with NLTK for visualization can be seen below.

The table version of the NLTK Tokenization for words without punctuations and stop words in English can be seen below.

| | index | 0 |
|---|---|---|
| 2 | SEO | 5172 |
| 63 | | 2762 |
| 160 | The | 2716 |
| 280 | content | 2477 |
| 23 | page | 2230 |
| … | … | … |
| 9600 | estimations | 1 |
| 9595 | Politic | 1 |
| 9594 | 396 | 1 |
| 9593 | Politics | 1 |
| 14642 | infinitely | 1 |

14643 rows × 2 columns
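A minimal sketch of the combined filter on hypothetical tokens with a hypothetical stop-word subset. It also illustrates why “The” still appears in the table above: the stop-word lookup is case-sensitive and NLTK's English list is lowercase, so capitalized stop words pass the filter unless the tokens are lowercased first.

```python
from collections import Counter

# Hypothetical tokens and stop-word subset; in the article these come from
# word_tokenize() and set(stopwords.words("english")).
tokens = ["The", "SEO", "of", "the", "page", ",", "SEO", "content", "."]
stop_words_english = {"the", "of", "a"}  # built once, not per token

# One pass: drop stop words (case-sensitive) and non-alphanumeric tokens.
filtered = [w for w in tokens if w not in stop_words_english and w.isalnum()]
filtered_counts = Counter(filtered)

print(filtered)
print(filtered_counts.most_common(2))
```

Lowercasing inside the condition (w.lower() not in stop_words_english) would remove “The” as well.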

How to perform sentence Tokenization with NLTK?

To perform the sentence tokenization with NLTK, the “sent_tokenize” method of the NLTK should be used. The steps below can be used for NLTK Sentence Tokenization.

- Extract the text and assign it to a variable.

- Import NLTK and the “sent_tokenize” function.

- Use “sent_tokenize” on the extracted text.

- Use the Counter from Collections to count the sentences.

- Create a data frame from the values of the counting output.

- Display the data frame.

| | index | 0 |
|---|---|---|
| 104 | required fields are marked * name* email* webs… | 175 |
| 105 | python seo techseo theoretical seo on-page seo… | 175 |
| 103 | post navigation ← → your email address will no… | 156 |
| 3259 | you may see the result below. | 43 |
| 383 | you can see the result below. | 42 |
| 867 | 2 | 34 |
| 879 | 3 | 29 |
| 885 | 4 | 24 |
| 857 | 1 | 21 |
| 908 | 6 | 15 |
| 891 | 5 | 15 |
| 917 | 7 | 13 |
| 165 | reply your email address will not be published. | 13 |
| 924 | 8 | 12 |
| 14947 | below, you can see the result. | 11 |
| 3450 | you also may want to read our some of the rela… | 11 |
| 942 | 10 | 8 |
| 932 | 9 | 8 |
| 16 | in this article, we will focus on how to resiz… | 7 |
| 3146 | you can see an example below. | 7 |
| 15 | because of those motivations, image compressio… | 7 |
| 15040 | below, you will see an example. | 7 |
| 3204 | you may see the output below. | 6 |
| 3451 | return of investment definition and importance… | 6 |
| 3452 | what is conversion rate optimization? | 6 |
| 11046 | you may see an example below. | 6 |
| 62 | what is conversion funnel? | 6 |
| 3147 | you can see the output below. | 6 |
| 1 | an hreflang value can specify the alternate ve… | 5 |
| 38 | the click path plays a role above all in terms… | 5 |
| 24100 | read more » python seo techseo theoretical seo… | 5 |
| 34 | for get and head methods, the server will retu… | 5 |
| 35 | if the resource’s etag is not on the list, the… | 5 |
| 8357 | what is a news sitemap? | 5 |
| 32 | trust elements are called by definition all th… | 5 |
| 31 | trust elements are used in this context. | 5 |
| 28 | translating a pandas data frame with python ca… | 5 |
| 3 | website is a way of world wide web presence. | 5 |
| 5 | announced in october 2015 as an internal proje… | 5 |
| 7 | user-centric performance metrics are announced… | 5 |
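The steps above can be sketched on a small hypothetical text. To avoid downloading the pretrained Punkt model that “sent_tokenize” uses, this sketch swaps in an untrained “PunktSentenceTokenizer”; it splits simple text the same way, but it has no learned abbreviation list, so it is less reliable than “sent_tokenize” on real web pages.

```python
from collections import Counter

import pandas as pd
from nltk.tokenize import PunktSentenceTokenizer

# Hypothetical page text containing a repeated boilerplate sentence.
text = ("You can see the result below. Python is useful. "
        "You can see the result below.")

# Untrained Punkt: no model download, default sentence-boundary rules only.
sentence_tokenizer = PunktSentenceTokenizer()
sentences = sentence_tokenizer.tokenize(text)

# Lowercase before counting, as in the table above.
sentence_counts = Counter(s.lower() for s in sentences)
df_sentences = pd.DataFrame.from_dict(sentence_counts, orient="index").reset_index()
df_sentences.sort_values(by=0, ascending=False, inplace=True)
print(df_sentences)
```

Repeated sentences with high counts, like the “you can see the result below.” rows in the table, usually point to boilerplate or template text.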

How to perform sentence tokenization with NLTK without the stop words?

To remove the stop words from the NLTK sentence tokenization output, the stop words have to be removed from the tokens first; the remaining tokens are then joined back into one text with the “join()” method, and that text is split into sentences. The steps for sentence tokenization with NLTK without the stop words can be seen below.

- Remove the stop words from the tokenized text data.

- Join the tokens with a space via the “ ".join()” method call.

- Use “sent_tokenize()” on the joined tokens without stop words.

An example of sentence tokenization with NLTK without the stop words can be found below.

stop_words_english = set(stopwords.words("english"))

tokens_without_stop_words = [word for word in tokens if word not in stop_words_english]

sent_tokens = sent_tokenize(" ".join(tokens_without_stop_words))

sent_tokens_counts = Counter([sent.lower() for sent in sent_tokens])

sent_tokens_counts_df = pd.DataFrame.from_dict(sent_tokens_counts, orient="index").reset_index()

sent_tokens_counts_df.sort_values(by=0, ascending=False)[0:40]
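A minimal sketch of the three steps on a hypothetical two-sentence text with a hypothetical stop-word subset. It uses “wordpunct_tokenize” and an untrained “PunktSentenceTokenizer” instead of “word_tokenize” and “sent_tokenize” so that no model download is needed. Joining the tokens with spaces leaves a space before each period, and Punkt still treats that standalone period as a sentence end.

```python
from nltk.tokenize import PunktSentenceTokenizer, wordpunct_tokenize

# Hypothetical text and stop-word subset, for illustration only.
text = "Python is useful. Tokens are counted."
stop_words_english = {"is", "are"}

# 1. Tokenize into words and drop the stop words.
tokens = wordpunct_tokenize(text)
tokens_without_stop_words = [w for w in tokens if w.lower() not in stop_words_english]

# 2. Join the remaining tokens with spaces; periods become " ." here.
joined = " ".join(tokens_without_stop_words)

# 3. Split the joined text into sentences (untrained Punkt, no download).
sentences = PunktSentenceTokenizer().tokenize(joined)

print(joined)
print(sentences)
```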

How to Interpret the Tokenized Text with NLTK?

To interpret the tokenized text with NLTK for SEO, or NLP and text quality understanding, the metrics and dimensions below can be used.

- The unique word count within the text data.

- The unique word count within the headings of a website.

- The unique word count within the anchor texts.

- The sentence count per article of a website.

- The unique sentence count per article of a website.

- The unique word count per article of a website.

- The most used words within the headings

- The most used words within the text

- The percentage of the stop words to the unique words.

- The impressions, clicks, and rankings for the unique groups of words from different website sections such as the footer, header, main content area, sidebar, or headings.

In terms of Search Engine Optimization, and understanding the text’s quality, the interpretation methods above can be used.
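Three of the metrics above (the unique word count, the most used words, and the percentage of stop words among the unique words) can be sketched on a short text. The text and the stop-word subset are hypothetical, and “wordpunct_tokenize” stands in for “word_tokenize” to avoid a model download.

```python
from collections import Counter

from nltk.tokenize import wordpunct_tokenize

# Hypothetical article text and stop-word subset, for illustration only.
text = "SEO is a practice. The SEO practice is about content and the users."
stop_words_english = {"is", "a", "the", "about", "and"}

# Keep word tokens only (drop tokens with no letter or digit), lowercased.
words = [t.lower() for t in wordpunct_tokenize(text) if any(ch.isalnum() for ch in t)]

unique_words = set(words)
unique_stop_words = unique_words & stop_words_english

print("unique words:", len(unique_words))
print("stop-word share of unique words:", f"{len(unique_stop_words) / len(unique_words):.0%}")
print("most used word:", Counter(words).most_common(1))
```

Running the same calculation per article, or per website section such as headings or anchor texts, gives the comparative metrics listed above.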

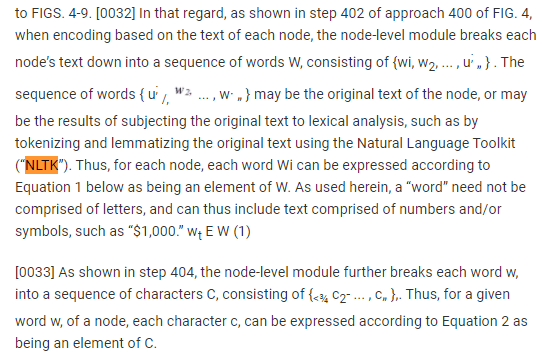

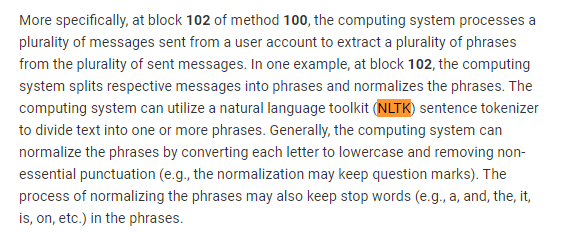

How can a Search Engine Use Tokenization?

A search engine can use tokenization to split text into “tokens” so that information retrieval can match queries with documents. Tokenization is also a step of text normalization: a search engine uses word tokenization and sentence tokenization to normalize text, which can decrease the computation cost of its algorithms. Pairing words from different contexts with different prefixes and suffixes, recognizing word pairs, vectorizing the n-grams within sentences, and supporting part-of-speech tagging with tokenized word data from a corpus are among the uses of word tokenization for a search engine. For tokenization, a search engine can use NLTK or other NLP libraries such as Gensim, Keras, or TensorFlow, and the Natural Language Toolkit (NLTK) can be used by Google and other search engines for the same purposes. Below, you will see two Google patents, by Max Benjamin Braun and Ying Sheng, that include the usage of NLTK.

Below, you will see another example that shows how a search engine can use NLTK and tokenization from Google Search Engine.

Do search engines use NLTK for tokenization? Search engines certainly use tokenization. Search engines such as Microsoft Bing, Google, and DuckDuckGo can use word and sentence tokenization to create indexes of words and documents, and to understand the contextual connection between queries and documents. A word's position and the words surrounding it can help a search engine understand how words relate to each other and to a topic. Word tokenization and sentence tokenization are the basis for lemmatization, stemming, word grouping, and textual data aggregation in search engines. To learn more about how a search engine can use Natural Language Processing and its sub-practices such as tokenization, you can read the following articles.

- Named Entity Recognition

- Semantic Search

- Semantic SEO

- Semantic Role Labeling

- Lexical Semantics

- Sentiment Analysis
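The search engine section mentions recognizing word pairs and n-grams. How a search engine does this internally is not described here; this minimal sketch only shows the basic step of counting word pairs (bigrams) with NLTK's “ngrams” helper on a hypothetical phrase.

```python
from collections import Counter

from nltk.tokenize import wordpunct_tokenize
from nltk.util import ngrams

# Hypothetical phrase, for illustration only.
text = "semantic search and semantic SEO use semantic search signals"

# Tokenize, then count every pair of adjacent tokens.
tokens = wordpunct_tokenize(text.lower())
bigram_counts = Counter(ngrams(tokens, 2))

print(bigram_counts.most_common(1))
```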

Last Thoughts on NLTK Tokenize and Holistic SEO

NLTK Word Tokenization is important to interpret a website’s content or a book’s text. Word Tokenization is an important and basic step for Natural Language Processing. It can be used for analyzing the SEO Performance of a website or cleaning a text for NLP Algorithm training. Using lemmatization, stemming, stop word cleaning, punctuation cleaning, and visualizing the NLTK Tokenization outputs are beneficial to perform statistical analysis for a text. Filtering certain documents that mention a word, or filtering certain documents based on their content, content length, and unique word count can be beneficial to perform a faster and scaled analysis.

The NLTK Tutorials and NLTK Tokenize Guideline will be updated over time.


Owner and Founder at Holistic SEO & Digital

Koray Tuğberk GÜBÜR is the CEO and Founder of Holistic SEO & Digital where he provides SEO Consultancy, Web Development, Data Science, Web Design, and Search Engine Optimization services with strategic leadership for the agency’s SEO Client Projects. Koray Tuğberk GÜBÜR performs SEO A/B Tests regularly to understand the Google, Microsoft Bing, and Yandex like search engines’ algorithms, and internal agenda. Koray uses Data Science to understand the custom click curves and baby search engine algorithms’ decision trees. Tuğberk used many websites for writing different SEO Case Studies. He published more than 10 SEO Case Studies with 20+ websites to explain the search engines. Koray Tuğberk started his SEO Career in 2015 in the casino industry and moved into the white-hat SEO industry. Koray worked with more than 700 companies for their SEO Projects since 2015. Koray used SEO to improve the user experience, and conversion rate along with brand awareness of the online businesses from different verticals such as retail, e-commerce, affiliate, and b2b, or b2c websites. He enjoys examining websites, algorithms, and search engines.

Python NLTK | nltk.tokenize.word_tokenize()


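The nltk.tokenize.word_tokenize() function splits a string into a list of word and punctuation tokens. It does not split words into syllables: for that, NLTK provides SyllableTokenizer (in nltk.tokenize, implemented in the nltk.tokenize.sonority_sequencing module), whose tokenize() method splits a single word into syllables. A single word can contain one or more syllables. The examples below use SyllableTokenizer.

Syntax :

word_tokenize(text, language='english', preserve_line=False)
SyllableTokenizer().tokenize(token)

Return : word_tokenize() returns a list of word and punctuation tokens; SyllableTokenizer.tokenize() returns a list of the syllables of one word.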
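Because the two tokenizers work at different levels, they can be chained: split a sentence into words first, then split each word into syllables. The sketch below is adapted from the usage example in NLTK's SyllableTokenizer docstring; the lowercase sample sentence is an illustrative assumption. Passing preserve_line=True makes word_tokenize() treat the whole string as one sentence, so this particular call does not need the Punkt models.

```python
from nltk.tokenize import SyllableTokenizer, word_tokenize

# Illustrative sample sentence.
text = "this is a foobar-like sentence."

# preserve_line=True skips sentence splitting, so no Punkt download is needed here.
words = word_tokenize(text, preserve_line=True)

# Syllabify each word token separately.
ssp = SyllableTokenizer()
syllables = [ssp.tokenize(w) for w in words]

print(words)
print(syllables)
```

Each inner list holds the syllables of one word token; single-vowel words and punctuation come back unchanged as one-element lists.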

Example #1 :

In this example, SyllableTokenizer.tokenize() splits the single word "Antidisestablishmentarianism" into its syllables.

from nltk.tokenize import SyllableTokenizer

tk = SyllableTokenizer()
gfg = "Antidisestablishmentarianism"

# Split the single word into its syllables
geek = tk.tokenize(gfg)
print(geek)

Output :

['An', 'ti', 'dis', 'es', 'ta', 'blish', 'men', 'ta', 'ria', 'nism']

Example #2 :

from nltk.tokenize import SyllableTokenizer

tk = SyllableTokenizer()
gfg = "Gametophyte"

# Split the single word into its syllables
geek = tk.tokenize(gfg)
print(geek)

Output :

['Ga', 'me', 'to', 'phy', 'te']


To run the Python program below, the Natural Language Toolkit (NLTK) must be installed on your system.

NLTK is a large toolkit intended to help with the entire Natural Language Processing (NLP) workflow.

To install NLTK, run pip install nltk in your terminal. Then start a Python shell, run import nltk, and download the data packages with nltk.download('all').

Downloading everything takes quite some time because it includes a large number of tokenizers, chunkers, and other algorithms, plus all of the corpora. The sent_tokenize() and word_tokenize() functions used below only need the Punkt sentence tokenizer models.
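If you only need a few packages, you can download them on demand instead of fetching everything. The sketch below is one way to do that: it checks for a resource with nltk.data.find() and calls nltk.download() only when the resource is missing. The helper names is_installed and ensure_resource are hypothetical, not part of NLTK.

```python
import nltk


def is_installed(resource_path):
    """Return True if nltk.data.find() can locate the resource locally."""
    try:
        nltk.data.find(resource_path)
        return True
    except LookupError:
        return False


def ensure_resource(package_id, resource_path):
    """Download package_id only if resource_path is missing (requires network access)."""
    if not is_installed(resource_path):
        nltk.download(package_id, quiet=True)
    return is_installed(resource_path)
```

For example, ensure_resource('punkt', 'tokenizers/punkt') would fetch the Punkt models on first use and do nothing afterwards. Newer NLTK releases load the Punkt data from a package named punkt_tab instead, so check which name your installed version expects.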

Tokenizing means splitting a body of text into sentences and words.

from nltk.tokenize import sent_tokenize, word_tokenize

# Adjacent string literals inside parentheses are joined into one string
text = ("Natural language processing (NLP) is a field "
        "of computer science, artificial intelligence "
        "and computational linguistics concerned with "
        "the interactions between computers and human "
        "(natural) languages, and, in particular, "
        "concerned with programming computers to "
        "fruitfully process large natural language "
        "corpora. Challenges in natural language "
        "processing frequently involve natural "
        "language understanding, natural language "
        "generation (frequently from formal, machine"
        "-readable logical forms), connecting language "
        "and machine perception, managing human-"
        "computer dialog systems, or some combination "
        "thereof.")

# Split into sentences, then into word and punctuation tokens
print(sent_tokenize(text))
print(word_tokenize(text))

OUTPUT

['Natural language processing (NLP) is a field of computer science, artificial intelligence and computational linguistics concerned with the interactions between computers and human (natural) languages, and, in particular, concerned with programming computers to fruitfully process large natural language corpora.', 'Challenges in natural language processing frequently involve natural language understanding, natural language generation (frequently from formal, machine-readable logical forms), connecting language and machine perception, managing human-computer dialog systems, or some combination thereof.']

['Natural', 'language', 'processing', '(', 'NLP', ')', 'is', 'a', 'field', 'of', 'computer', 'science', ',', 'artificial', 'intelligence', 'and', 'computational', 'linguistics', 'concerned', 'with', 'the', 'interactions', 'between', 'computers', 'and', 'human', '(', 'natural', ')', 'languages', ',', 'and', ',', 'in', 'particular', ',', 'concerned', 'with', 'programming', 'computers', 'to', 'fruitfully', 'process', 'large', 'natural', 'language', 'corpora', '.', 'Challenges', 'in', 'natural', 'language', 'processing', 'frequently', 'involve', 'natural', 'language', 'understanding', ',', 'natural', 'language', 'generation', '(', 'frequently', 'from', 'formal', ',', 'machine-readable', 'logical', 'forms', ')', ',', 'connecting', 'language', 'and', 'machine', 'perception', ',', 'managing', 'human-computer', 'dialog', 'systems', ',', 'or', 'some', 'combination', 'thereof', '.']

The text has now been tokenized at two levels: first into sentences, then into words.
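The same sentences-then-words structure can be kept as a nested list, with one inner list of word tokens per sentence. The sketch below swaps in two tokenizers that need no downloaded data: an untrained PunktSentenceTokenizer (which, unlike the pretrained model behind sent_tokenize(), has learned no abbreviations, so strings like "Dr." may be treated as sentence ends) and TreebankWordTokenizer. The function name tokenize_nested and the sample text are hypothetical.

```python
from nltk.tokenize import PunktSentenceTokenizer, TreebankWordTokenizer

# Untrained Punkt model: no download needed, but no learned abbreviations either.
sentence_splitter = PunktSentenceTokenizer()
word_splitter = TreebankWordTokenizer()


def tokenize_nested(text):
    """Split text into sentences, then split each sentence into word tokens."""
    return [word_splitter.tokenize(s) for s in sentence_splitter.tokenize(text)]


sample = "Tokenizers split text. Sentences come first, words later."
for words in tokenize_nested(sample):
    print(len(words), words)
```

Keeping the nesting preserves sentence boundaries, which is useful when later steps (for example, per-sentence word counts) need them; flatten the lists only when they do not.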

This article is contributed by Pratima Upadhyay.