Improve Article

Save Article

Like Article

Improve Article

Save Article

Like Article

It is not always possible to get the dataset in CSV format. So, Pandas provides us the functions to convert datasets in other formats to the Data frame. An excel file has a ‘.xlsx’ format.

Before we get started, we need to install a few libraries.

pip install pandas pip install xlrd

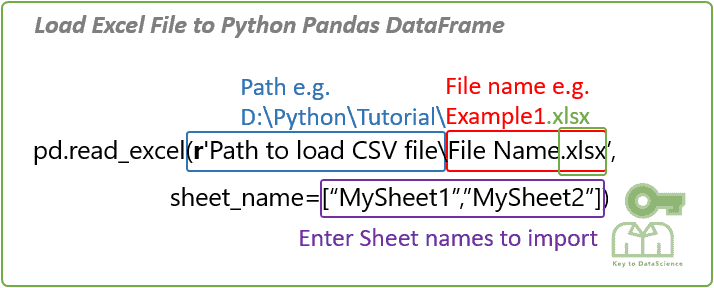

For importing an Excel file into Python using Pandas we have to use pandas.read_excel() function.

Syntax: pandas.read_excel(io, sheet_name=0, header=0, names=None,….)

Return: DataFrame or dict of DataFrames.

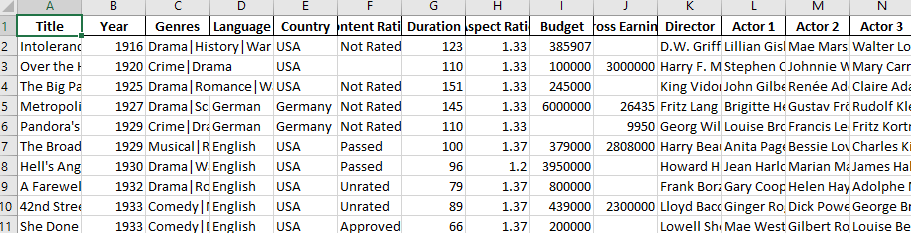

Let’s suppose the Excel file looks like this:

Now, we can dive into the code.

Example 1: Read an Excel file.

Python3

import pandas as pd

df = pd.read_excel("sample.xlsx")

print(df)

Output:

Example 2: To select a particular column, we can pass a parameter “index_col“.

Python3

import pandas as pd

df = pd.read_excel("sample.xlsx",

index_col = 0)

print(df)

Output:

Example 3: In case you don’t prefer the initial heading of the columns, you can change it to indexes using the parameter “header”.

Python3

import pandas as pd

df = pd.read_excel('sample.xlsx',

header = None)

print(df)

Output:

Example 4: If you want to change the data type of a particular column you can do it using the parameter “dtype“.

Python3

import pandas as pd

df = pd.read_excel('sample.xlsx',

dtype = {"Products": str,

"Price":float})

print(df)

Output:

Example 5: In case you have unknown values, then you can handle it using the parameter “na_values“. It will convert the mentioned unknown values into “NaN”

Python3

import pandas as pd

df = pd.read_excel('sample.xlsx',

na_values =['item1',

'item2'])

print(df)

Output:

Like Article

Save Article

Why learn to work with Excel with Python? Excel is one of the most popular and widely-used data tools; it’s hard to find an organization that doesn’t work with it in some way. From analysts, to sales VPs, to CEOs, various professionals use Excel for both quick stats and serious data crunching.

With Excel being so pervasive, data professionals must be familiar with it. Working with data in Python or R offers serious advantages over Excel’s UI, so finding a way to work with Excel using code is critical. Thankfully, there’s a great tool already out there for using Excel with Python called pandas.

Pandas has excellent methods for reading all kinds of data from Excel files. You can also export your results from pandas back to Excel, if that’s preferred by your intended audience. Pandas is great for other routine data analysis tasks, such as:

- quick Exploratory Data Analysis (EDA)

- drawing attractive plots

- feeding data into machine learning tools like scikit-learn

- building machine learning models on your data

- taking cleaned and processed data to any number of data tools

Pandas is better at automating data processing tasks than Excel, including processing Excel files.

In this tutorial, we are going to show you how to work with Excel files in pandas. We will cover the following concepts.

- setting up your computer with the necessary software

- reading in data from Excel files into pandas

- data exploration in pandas

- visualizing data in pandas using the matplotlib visualization library

- manipulating and reshaping data in pandas

- moving data from pandas into Excel

Note that this tutorial does not provide a deep dive into pandas. To explore pandas more, check out our course.

System Prerequisites

We will use Python 3 and Jupyter Notebook to demonstrate the code in this tutorial.In addition to Python and Jupyter Notebook, you will need the following Python modules:

- matplotlib — data visualization

- NumPy — numerical data functionality

- OpenPyXL — read/write Excel 2010 xlsx/xlsm files

- pandas — data import, clean-up, exploration, and analysis

- xlrd — read Excel data

- xlwt — write to Excel

- XlsxWriter — write to Excel (xlsx) files

There are multiple ways to get set up with all the modules. We cover three of the most common scenarios below.

- If you have Python installed via Anaconda package manager, you can install the required modules using the command

conda install. For example, to install pandas, you would execute the command —conda install pandas. - If you already have a regular, non-Anaconda Python installed on the computer, you can install the required modules using

pip. Open your command line program and execute commandpip install <module name>to install a module. You should replace<module name>with the actual name of the module you are trying to install. For example, to install pandas, you would execute command —pip install pandas. - If you don’t have Python already installed, you should get it through the Anaconda package manager. Anaconda provides installers for Windows, Mac, and Linux Computers. If you choose the full installer, you will get all the modules you need, along with Python and pandas within a single package. This is the easiest and fastest way to get started.

The Data Set

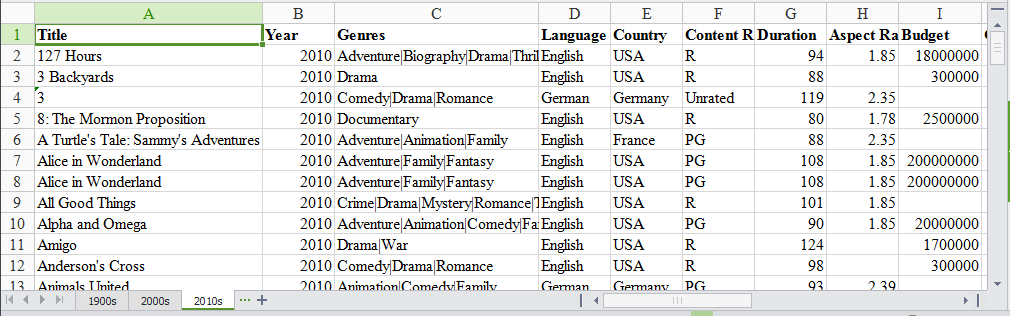

In this tutorial, we will use a multi-sheet Excel file we created from Kaggle’s IMDB Scores data. You can download the file here.

Our Excel file has three sheets: ‘1900s,’ ‘2000s,’ and ‘2010s.’ Each sheet has data for movies from those years.

We will use this data set to find the ratings distribution for the movies, visualize movies with highest ratings and net earnings and calculate statistical information about the movies. We will be analyzing and exploring this data using Python and pandas, thus demonstrating pandas capabilities for working with Excel data in Python.

Read data from the Excel file

We need to first import the data from the Excel file into pandas. To do that, we start by importing the pandas module.

import pandas as pdWe then use the pandas’ read_excel method to read in data from the Excel file. The easiest way to call this method is to pass the file name. If no sheet name is specified then it will read the first sheet in the index (as shown below).

excel_file = 'movies.xls'

movies = pd.read_excel(excel_file)Here, the read_excel method read the data from the Excel file into a pandas DataFrame object. Pandas defaults to storing data in DataFrames. We then stored this DataFrame into a variable called movies.

Pandas has a built-in DataFrame.head() method that we can use to easily display the first few rows of our DataFrame. If no argument is passed, it will display first five rows. If a number is passed, it will display the equal number of rows from the top.

movies.head()| Title | Year | Genres | Language | Country | Content Rating | Duration | Aspect Ratio | Budget | Gross Earnings | … | Facebook Likes — Actor 1 | Facebook Likes — Actor 2 | Facebook Likes — Actor 3 | Facebook Likes — cast Total | Facebook likes — Movie | Facenumber in posters | User Votes | Reviews by Users | Reviews by Crtiics | IMDB Score | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | Intolerance: Love’s Struggle Throughout the Ages | 1916 | Drama|History|War | NaN | USA | Not Rated | 123 | 1.33 | 385907.0 | NaN | … | 436 | 22 | 9.0 | 481 | 691 | 1 | 10718 | 88 | 69.0 | 8.0 |

| 1 | Over the Hill to the Poorhouse | 1920 | Crime|Drama | NaN | USA | NaN | 110 | 1.33 | 100000.0 | 3000000.0 | … | 2 | 2 | 0.0 | 4 | 0 | 1 | 5 | 1 | 1.0 | 4.8 |

| 2 | The Big Parade | 1925 | Drama|Romance|War | NaN | USA | Not Rated | 151 | 1.33 | 245000.0 | NaN | … | 81 | 12 | 6.0 | 108 | 226 | 0 | 4849 | 45 | 48.0 | 8.3 |

| 3 | Metropolis | 1927 | Drama|Sci-Fi | German | Germany | Not Rated | 145 | 1.33 | 6000000.0 | 26435.0 | … | 136 | 23 | 18.0 | 203 | 12000 | 1 | 111841 | 413 | 260.0 | 8.3 |

| 4 | Pandora’s Box | 1929 | Crime|Drama|Romance | German | Germany | Not Rated | 110 | 1.33 | NaN | 9950.0 | … | 426 | 20 | 3.0 | 455 | 926 | 1 | 7431 | 84 | 71.0 | 8.0 |

5 rows × 25 columns

Excel files quite often have multiple sheets and the ability to read a specific sheet or all of them is very important. To make this easy, the pandas read_excel method takes an argument called sheetname that tells pandas which sheet to read in the data from. For this, you can either use the sheet name or the sheet number. Sheet numbers start with zero. If the sheetname argument is not given, it defaults to zero and pandas will import the first sheet.

By default, pandas will automatically assign a numeric index or row label starting with zero. You may want to leave the default index as such if your data doesn’t have a column with unique values that can serve as a better index. In case there is a column that you feel would serve as a better index, you can override the default behavior by setting index_col property to a column. It takes a numeric value for setting a single column as index or a list of numeric values for creating a multi-index.

In the below code, we are choosing the first column, ‘Title’, as index (index=0) by passing zero to the index_col argument.

movies_sheet1 = pd.read_excel(excel_file, sheetname=0, index_col=0)

movies_sheet1.head()| Year | Genres | Language | Country | Content Rating | Duration | Aspect Ratio | Budget | Gross Earnings | Director | … | Facebook Likes — Actor 1 | Facebook Likes — Actor 2 | Facebook Likes — Actor 3 | Facebook Likes — cast Total | Facebook likes — Movie | Facenumber in posters | User Votes | Reviews by Users | Reviews by Crtiics | IMDB Score | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Title | |||||||||||||||||||||

| Intolerance: Love’s Struggle Throughout the Ages | 1916 | Drama|History|War | NaN | USA | Not Rated | 123 | 1.33 | 385907.0 | NaN | D.W. Griffith | … | 436 | 22 | 9.0 | 481 | 691 | 1 | 10718 | 88 | 69.0 | 8.0 |

| Over the Hill to the Poorhouse | 1920 | Crime|Drama | NaN | USA | NaN | 110 | 1.33 | 100000.0 | 3000000.0 | Harry F. Millarde | … | 2 | 2 | 0.0 | 4 | 0 | 1 | 5 | 1 | 1.0 | 4.8 |

| The Big Parade | 1925 | Drama|Romance|War | NaN | USA | Not Rated | 151 | 1.33 | 245000.0 | NaN | King Vidor | … | 81 | 12 | 6.0 | 108 | 226 | 0 | 4849 | 45 | 48.0 | 8.3 |

| Metropolis | 1927 | Drama|Sci-Fi | German | Germany | Not Rated | 145 | 1.33 | 6000000.0 | 26435.0 | Fritz Lang | … | 136 | 23 | 18.0 | 203 | 12000 | 1 | 111841 | 413 | 260.0 | 8.3 |

| Pandora’s Box | 1929 | Crime|Drama|Romance | German | Germany | Not Rated | 110 | 1.33 | NaN | 9950.0 | Georg Wilhelm Pabst | … | 426 | 20 | 3.0 | 455 | 926 | 1 | 7431 | 84 | 71.0 | 8.0 |

5 rows × 24 columns

As you noticed above, our Excel data file has three sheets. We already read the first sheet in a DataFrame above. Now, using the same syntax, we will read in rest of the two sheets too.

movies_sheet2 = pd.read_excel(excel_file, sheetname=1, index_col=0)

movies_sheet2.head()| Year | Genres | Language | Country | Content Rating | Duration | Aspect Ratio | Budget | Gross Earnings | Director | … | Facebook Likes — Actor 1 | Facebook Likes — Actor 2 | Facebook Likes — Actor 3 | Facebook Likes — cast Total | Facebook likes — Movie | Facenumber in posters | User Votes | Reviews by Users | Reviews by Crtiics | IMDB Score | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Title | |||||||||||||||||||||

| 102 Dalmatians | 2000 | Adventure|Comedy|Family | English | USA | G | 100.0 | 1.85 | 85000000.0 | 66941559.0 | Kevin Lima | … | 2000.0 | 795.0 | 439.0 | 4182 | 372 | 1 | 26413 | 77.0 | 84.0 | 4.8 |

| 28 Days | 2000 | Comedy|Drama | English | USA | PG-13 | 103.0 | 1.37 | 43000000.0 | 37035515.0 | Betty Thomas | … | 12000.0 | 10000.0 | 664.0 | 23864 | 0 | 1 | 34597 | 194.0 | 116.0 | 6.0 |

| 3 Strikes | 2000 | Comedy | English | USA | R | 82.0 | 1.85 | 6000000.0 | 9821335.0 | DJ Pooh | … | 939.0 | 706.0 | 585.0 | 3354 | 118 | 1 | 1415 | 10.0 | 22.0 | 4.0 |

| Aberdeen | 2000 | Drama | English | UK | NaN | 106.0 | 1.85 | 6500000.0 | 64148.0 | Hans Petter Moland | … | 844.0 | 2.0 | 0.0 | 846 | 260 | 0 | 2601 | 35.0 | 28.0 | 7.3 |

| All the Pretty Horses | 2000 | Drama|Romance|Western | English | USA | PG-13 | 220.0 | 2.35 | 57000000.0 | 15527125.0 | Billy Bob Thornton | … | 13000.0 | 861.0 | 820.0 | 15006 | 652 | 2 | 11388 | 183.0 | 85.0 | 5.8 |

5 rows × 24 columns

movies_sheet3 = pd.read_excel(excel_file, sheetname=2, index_col=0)

movies_sheet3.head()| Year | Genres | Language | Country | Content Rating | Duration | Aspect Ratio | Budget | Gross Earnings | Director | … | Facebook Likes — Actor 1 | Facebook Likes — Actor 2 | Facebook Likes — Actor 3 | Facebook Likes — cast Total | Facebook likes — Movie | Facenumber in posters | User Votes | Reviews by Users | Reviews by Crtiics | IMDB Score | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Title | |||||||||||||||||||||

| 127 Hours | 2010.0 | Adventure|Biography|Drama|Thriller | English | USA | R | 94.0 | 1.85 | 18000000.0 | 18329466.0 | Danny Boyle | … | 11000.0 | 642.0 | 223.0 | 11984 | 63000 | 0.0 | 279179 | 440.0 | 450.0 | 7.6 |

| 3 Backyards | 2010.0 | Drama | English | USA | R | 88.0 | NaN | 300000.0 | NaN | Eric Mendelsohn | … | 795.0 | 659.0 | 301.0 | 1884 | 92 | 0.0 | 554 | 23.0 | 20.0 | 5.2 |

| 3 | 2010.0 | Comedy|Drama|Romance | German | Germany | Unrated | 119.0 | 2.35 | NaN | 59774.0 | Tom Tykwer | … | 24.0 | 20.0 | 9.0 | 69 | 2000 | 0.0 | 4212 | 18.0 | 76.0 | 6.8 |

| 8: The Mormon Proposition | 2010.0 | Documentary | English | USA | R | 80.0 | 1.78 | 2500000.0 | 99851.0 | Reed Cowan | … | 191.0 | 12.0 | 5.0 | 210 | 0 | 0.0 | 1138 | 30.0 | 28.0 | 7.1 |

| A Turtle’s Tale: Sammy’s Adventures | 2010.0 | Adventure|Animation|Family | English | France | PG | 88.0 | 2.35 | NaN | NaN | Ben Stassen | … | 783.0 | 749.0 | 602.0 | 3874 | 0 | 2.0 | 5385 | 22.0 | 56.0 | 6.1 |

5 rows × 24 columns

Since all the three sheets have similar data but for different recordsmovies, we will create a single DataFrame from all the three DataFrames we created above. We will use the pandas concat method for this and pass in the names of the three DataFrames we just created and assign the results to a new DataFrame object, movies. By keeping the DataFrame name same as before, we are over-writing the previously created DataFrame.

movies = pd.concat([movies_sheet1, movies_sheet2, movies_sheet3])We can check if this concatenation by checking the number of rows in the combined DataFrame by calling the method shape on it that will give us the number of rows and columns.

movies.shape(5042, 24)Using the ExcelFile class to read multiple sheets

We can also use the ExcelFile class to work with multiple sheets from the same Excel file. We first wrap the Excel file using ExcelFile and then pass it to read_excel method.

xlsx = pd.ExcelFile(excel_file)

movies_sheets = []

for sheet in xlsx.sheet_names:

movies_sheets.append(xlsx.parse(sheet))

movies = pd.concat(movies_sheets)If you are reading an Excel file with a lot of sheets and are creating a lot of DataFrames, ExcelFile is more convenient and efficient in comparison to read_excel. With ExcelFile, you only need to pass the Excel file once, and then you can use it to get the DataFrames. When using read_excel, you pass the Excel file every time and hence the file is loaded again for every sheet. This can be a huge performance drag if the Excel file has many sheets with a large number of rows.

Exploring the data

Now that we have read in the movies data set from our Excel file, we can start exploring it using pandas. A pandas DataFrame stores the data in a tabular format, just like the way Excel displays the data in a sheet. Pandas has a lot of built-in methods to explore the DataFrame we created from the Excel file we just read in.

We already introduced the method head in the previous section that displays few rows from the top from the DataFrame. Let’s look at few more methods that come in handy while exploring the data set.

We can use the shape method to find out the number of rows and columns for the DataFrame.

movies.shape(5042, 25)This tells us our Excel file has 5042 records and 25 columns or observations. This can be useful in reporting the number of records and columns and comparing that with the source data set.

We can use the tail method to view the bottom rows. If no parameter is passed, only the bottom five rows are returned.

movies.tail()| Title | Year | Genres | Language | Country | Content Rating | Duration | Aspect Ratio | Budget | Gross Earnings | … | Facebook Likes — Actor 1 | Facebook Likes — Actor 2 | Facebook Likes — Actor 3 | Facebook Likes — cast Total | Facebook likes — Movie | Facenumber in posters | User Votes | Reviews by Users | Reviews by Crtiics | IMDB Score | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1599 | War & Peace | NaN | Drama|History|Romance|War | English | UK | TV-14 | NaN | 16.00 | NaN | NaN | … | 1000.0 | 888.0 | 502.0 | 4528 | 11000 | 1.0 | 9277 | 44.0 | 10.0 | 8.2 |

| 1600 | Wings | NaN | Comedy|Drama | English | USA | NaN | 30.0 | 1.33 | NaN | NaN | … | 685.0 | 511.0 | 424.0 | 1884 | 1000 | 5.0 | 7646 | 56.0 | 19.0 | 7.3 |

| 1601 | Wolf Creek | NaN | Drama|Horror|Thriller | English | Australia | NaN | NaN | 2.00 | NaN | NaN | … | 511.0 | 457.0 | 206.0 | 1617 | 954 | 0.0 | 726 | 6.0 | 2.0 | 7.1 |

| 1602 | Wuthering Heights | NaN | Drama|Romance | English | UK | NaN | 142.0 | NaN | NaN | NaN | … | 27000.0 | 698.0 | 427.0 | 29196 | 0 | 2.0 | 6053 | 33.0 | 9.0 | 7.7 |

| 1603 | Yu-Gi-Oh! Duel Monsters | NaN | Action|Adventure|Animation|Family|Fantasy | Japanese | Japan | NaN | 24.0 | NaN | NaN | NaN | … | 0.0 | NaN | NaN | 0 | 124 | 0.0 | 12417 | 51.0 | 6.0 | 7.0 |

5 rows × 25 columns

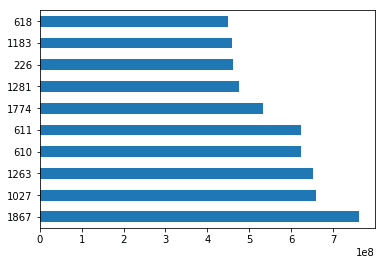

In Excel, you’re able to sort a sheet based on the values in one or more columns. In pandas, you can do the same thing with the sort_values method. For example, let’s sort our movies DataFrame based on the Gross Earnings column.

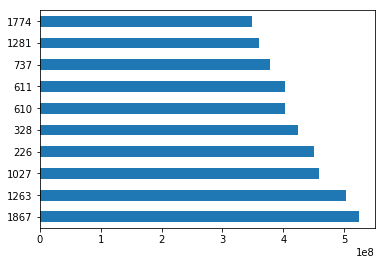

sorted_by_gross = movies.sort_values(['Gross Earnings'], ascending=False)Since we have the data sorted by values in a column, we can do few interesting things with it. For example, we can display the top 10 movies by Gross Earnings.

sorted_by_gross["Gross Earnings"].head(10)1867 760505847.0

1027 658672302.0

1263 652177271.0

610 623279547.0

611 623279547.0

1774 533316061.0

1281 474544677.0

226 460935665.0

1183 458991599.0

618 448130642.0

Name: Gross Earnings, dtype: float64We can also create a plot for the top 10 movies by Gross Earnings. Pandas makes it easy to visualize your data with plots and charts through matplotlib, a popular data visualization library. With a couple lines of code, you can start plotting. Moreover, matplotlib plots work well inside Jupyter Notebooks since you can displace the plots right under the code.

First, we import the matplotlib module and set matplotlib to display the plots right in the Jupyter Notebook.

import matplotlib.pyplot as plt%matplotlib inlineWe will draw a bar plot where each bar will represent one of the top 10 movies. We can do this by calling the plot method and setting the argument kind to barh. This tells matplotlib to draw a horizontal bar plot.

sorted_by_gross['Gross Earnings'].head(10).plot(kind="barh")

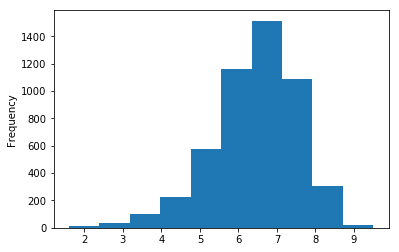

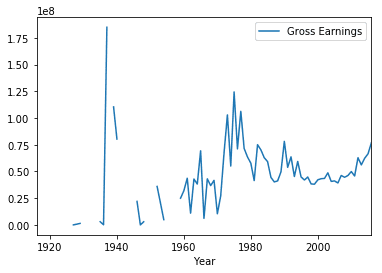

plt.show()Let’s create a histogram of IMDB Scores to check the distribution of IMDB Scores across all movies. Histograms are a good way to visualize the distribution of a data set. We use the plot method on the IMDB Scores series from our movies DataFrame and pass it the argument.

movies['IMDB Score'].plot(kind="hist")

plt.show()This data visualization suggests that most of the IMDB Scores fall between six and eight.

Getting statistical information about the data

Pandas has some very handy methods to look at the statistical data about our data set. For example, we can use the describe method to get a statistical summary of the data set.

movies.describe()| Year | Duration | Aspect Ratio | Budget | Gross Earnings | Facebook Likes — Director | Facebook Likes — Actor 1 | Facebook Likes — Actor 2 | Facebook Likes — Actor 3 | Facebook Likes — cast Total | Facebook likes — Movie | Facenumber in posters | User Votes | Reviews by Users | Reviews by Crtiics | IMDB Score | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| count | 4935.000000 | 5028.000000 | 4714.000000 | 4.551000e+03 | 4.159000e+03 | 4938.000000 | 5035.000000 | 5029.000000 | 5020.000000 | 5042.000000 | 5042.000000 | 5029.000000 | 5.042000e+03 | 5022.000000 | 4993.000000 | 5042.000000 |

| mean | 2002.470517 | 107.201074 | 2.220403 | 3.975262e+07 | 4.846841e+07 | 686.621709 | 6561.323932 | 1652.080533 | 645.009761 | 9700.959143 | 7527.457160 | 1.371446 | 8.368475e+04 | 272.770808 | 140.194272 | 6.442007 |

| std | 12.474599 | 25.197441 | 1.385113 | 2.061149e+08 | 6.845299e+07 | 2813.602405 | 15021.977635 | 4042.774685 | 1665.041728 | 18165.101925 | 19322.070537 | 2.013683 | 1.384940e+05 | 377.982886 | 121.601675 | 1.125189 |

| min | 1916.000000 | 7.000000 | 1.180000 | 2.180000e+02 | 1.620000e+02 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 0.000000 | 5.000000e+00 | 1.000000 | 1.000000 | 1.600000 |

| 25% | 1999.000000 | 93.000000 | 1.850000 | 6.000000e+06 | 5.340988e+06 | 7.000000 | 614.500000 | 281.000000 | 133.000000 | 1411.250000 | 0.000000 | 0.000000 | 8.599250e+03 | 65.000000 | 50.000000 | 5.800000 |

| 50% | 2005.000000 | 103.000000 | 2.350000 | 2.000000e+07 | 2.551750e+07 | 49.000000 | 988.000000 | 595.000000 | 371.500000 | 3091.000000 | 166.000000 | 1.000000 | 3.437100e+04 | 156.000000 | 110.000000 | 6.600000 |

| 75% | 2011.000000 | 118.000000 | 2.350000 | 4.500000e+07 | 6.230944e+07 | 194.750000 | 11000.000000 | 918.000000 | 636.000000 | 13758.750000 | 3000.000000 | 2.000000 | 9.634700e+04 | 326.000000 | 195.000000 | 7.200000 |

| max | 2016.000000 | 511.000000 | 16.000000 | 1.221550e+10 | 7.605058e+08 | 23000.000000 | 640000.000000 | 137000.000000 | 23000.000000 | 656730.000000 | 349000.000000 | 43.000000 | 1.689764e+06 | 5060.000000 | 813.000000 | 9.500000 |

The describe method displays below information for each of the columns.

- the count or number of values

- mean

- standard deviation

- minimum, maximum

- 25%, 50%, and 75% quantile

Please note that this information will be calculated only for the numeric values.

We can also use the corresponding method to access this information one at a time. For example, to get the mean of a particular column, you can use the mean method on that column.

movies["Gross Earnings"].mean()48468407.526809327Just like mean, there are methods available for each of the statistical information we want to access. You can read about these methods in our free pandas cheat sheet.

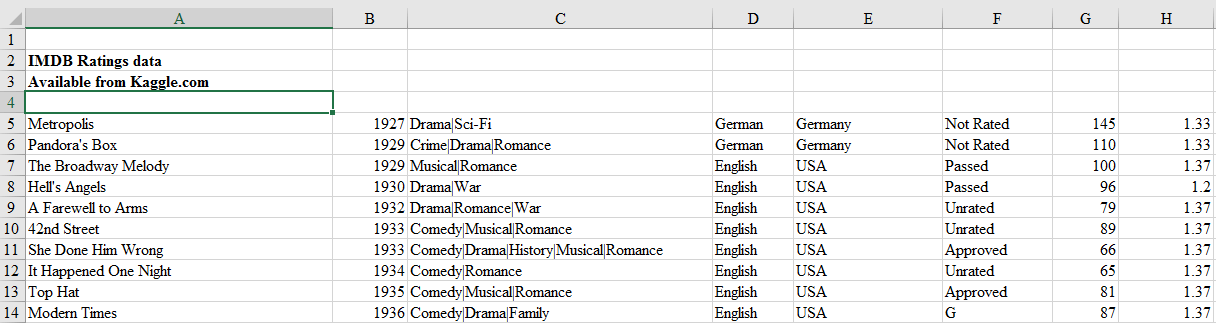

Reading files with no header and skipping records

Earlier in this tutorial, we saw some ways to read a particular kind of Excel file that had headers and no rows that needed skipping. Sometimes, the Excel sheet doesn’t have any header row. For such instances, you can tell pandas not to consider the first row as header or columns names. And If the Excel sheet’s first few rows contain data that should not be read in, you can ask the read_excel method to skip a certain number of rows, starting from the top.

For example, look at the top few rows of this Excel file.

This file obviously has no header and first four rows are not actual records and hence should not be read in. We can tell read_excel there is no header by setting argument header to None and we can skip first four rows by setting argument skiprows to four.

movies_skip_rows = pd.read_excel("movies-no-header-skip-rows.xls", header=None, skiprows=4)

movies_skip_rows.head(5)| 0 | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | … | 15 | 16 | 17 | 18 | 19 | 20 | 21 | 22 | 23 | 24 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | Metropolis | 1927 | Drama|Sci-Fi | German | Germany | Not Rated | 145 | 1.33 | 6000000.0 | 26435.0 | … | 136 | 23 | 18.0 | 203 | 12000 | 1 | 111841 | 413 | 260.0 | 8.3 |

| 1 | Pandora’s Box | 1929 | Crime|Drama|Romance | German | Germany | Not Rated | 110 | 1.33 | NaN | 9950.0 | … | 426 | 20 | 3.0 | 455 | 926 | 1 | 7431 | 84 | 71.0 | 8.0 |

| 2 | The Broadway Melody | 1929 | Musical|Romance | English | USA | Passed | 100 | 1.37 | 379000.0 | 2808000.0 | … | 77 | 28 | 4.0 | 109 | 167 | 8 | 4546 | 71 | 36.0 | 6.3 |

| 3 | Hell’s Angels | 1930 | Drama|War | English | USA | Passed | 96 | 1.20 | 3950000.0 | NaN | … | 431 | 12 | 4.0 | 457 | 279 | 1 | 3753 | 53 | 35.0 | 7.8 |

| 4 | A Farewell to Arms | 1932 | Drama|Romance|War | English | USA | Unrated | 79 | 1.37 | 800000.0 | NaN | … | 998 | 164 | 99.0 | 1284 | 213 | 1 | 3519 | 46 | 42.0 | 6.6 |

5 rows × 25 columns

We skipped four rows from the sheet and used none of the rows as the header. Also, notice that one can combine different options in a single read statement. To skip rows at the bottom of the sheet, you can use option skip_footer, which works just like skiprows, the only difference being the rows are counted from the bottom upwards.

The column names in the previous DataFrame are numeric and were allotted as default by the pandas. We can rename the column names to descriptive ones by calling the method columns on the DataFrame and passing the column names as a list.

movies_skip_rows.columns = ['Title', 'Year', 'Genres', 'Language', 'Country', 'Content Rating', 'Duration', 'Aspect Ratio', 'Budget', 'Gross Earnings', 'Director', 'Actor 1', 'Actor 2', 'Actor 3', 'Facebook Likes - Director', 'Facebook Likes - Actor 1', 'Facebook Likes - Actor 2', 'Facebook Likes - Actor 3', 'Facebook Likes - cast Total', 'Facebook likes - Movie', 'Facenumber in posters', 'User Votes', 'Reviews by Users', 'Reviews by Crtiics', 'IMDB Score']

movies_skip_rows.head()| Title | Year | Genres | Language | Country | Content Rating | Duration | Aspect Ratio | Budget | Gross Earnings | … | Facebook Likes — Actor 1 | Facebook Likes — Actor 2 | Facebook Likes — Actor 3 | Facebook Likes — cast Total | Facebook likes — Movie | Facenumber in posters | User Votes | Reviews by Users | Reviews by Crtiics | IMDB Score | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | Metropolis | 1927 | Drama|Sci-Fi | German | Germany | Not Rated | 145 | 1.33 | 6000000.0 | 26435.0 | … | 136 | 23 | 18.0 | 203 | 12000 | 1 | 111841 | 413 | 260.0 | 8.3 |

| 1 | Pandora’s Box | 1929 | Crime|Drama|Romance | German | Germany | Not Rated | 110 | 1.33 | NaN | 9950.0 | … | 426 | 20 | 3.0 | 455 | 926 | 1 | 7431 | 84 | 71.0 | 8.0 |

| 2 | The Broadway Melody | 1929 | Musical|Romance | English | USA | Passed | 100 | 1.37 | 379000.0 | 2808000.0 | … | 77 | 28 | 4.0 | 109 | 167 | 8 | 4546 | 71 | 36.0 | 6.3 |

| 3 | Hell’s Angels | 1930 | Drama|War | English | USA | Passed | 96 | 1.20 | 3950000.0 | NaN | … | 431 | 12 | 4.0 | 457 | 279 | 1 | 3753 | 53 | 35.0 | 7.8 |

| 4 | A Farewell to Arms | 1932 | Drama|Romance|War | English | USA | Unrated | 79 | 1.37 | 800000.0 | NaN | … | 998 | 164 | 99.0 | 1284 | 213 | 1 | 3519 | 46 | 42.0 | 6.6 |

5 rows × 25 columns

Now that we have seen how to read a subset of rows from the Excel file, we can learn how to read a subset of columns.

Reading a subset of columns

Although read_excel defaults to reading and importing all columns, you can choose to import only certain columns. By passing parse_cols=6, we are telling the read_excel method to read only the first columns till index six or first seven columns (the first column being indexed zero).

movies_subset_columns = pd.read_excel(excel_file, parse_cols=6)

movies_subset_columns.head()| Title | Year | Genres | Language | Country | Content Rating | Duration | |

|---|---|---|---|---|---|---|---|

| 0 | Intolerance: Love’s Struggle Throughout the Ages | 1916 | Drama|History|War | NaN | USA | Not Rated | 123 |

| 1 | Over the Hill to the Poorhouse | 1920 | Crime|Drama | NaN | USA | NaN | 110 |

| 2 | The Big Parade | 1925 | Drama|Romance|War | NaN | USA | Not Rated | 151 |

| 3 | Metropolis | 1927 | Drama|Sci-Fi | German | Germany | Not Rated | 145 |

| 4 | Pandora’s Box | 1929 | Crime|Drama|Romance | German | Germany | Not Rated | 110 |

Alternatively, you can pass in a list of numbers, which will let you import columns at particular indexes.

Applying formulas on the columns

One of the much-used features of Excel is to apply formulas to create new columns from existing column values. In our Excel file, we have Gross Earnings and Budget columns. We can get Net earnings by subtracting Budget from Gross earnings. We could then apply this formula in the Excel file to all the rows. We can do this in pandas also as shown below.

movies["Net Earnings"] = movies["Gross Earnings"] - movies["Budget"]Above, we used pandas to create a new column called Net Earnings, and populated it with the difference of Gross Earnings and Budget. It’s worth noting the difference here in how formulas are treated in Excel versus pandas. In Excel, a formula lives in the cell and updates when the data changes — with Python, the calculations happen and the values are stored — if Gross Earnings for one movie was manually changed, Net Earnings won’t be updated.

Let’s use the sort_values method to sort the data by the new column we created and visualize the top 10 movies by Net Earnings.

sorted_movies = movies[['Net Earnings']].sort_values(['Net Earnings'], ascending=[False])sorted_movies.head(10)['Net Earnings'].plot.barh()

plt.show()Pivot Table in pandas

Advanced Excel users also often use pivot tables. A pivot table summarizes the data of another table by grouping the data on an index and applying operations such as sorting, summing, or averaging. You can use this feature in pandas too.

We need to first identify the column or columns that will serve as the index, and the column(s) on which the summarizing formula will be applied. Let’s start small, by choosing Year as the index column and Gross Earnings as the summarization column and creating a separate DataFrame from this data.

movies_subset = movies[['Year', 'Gross Earnings']]

movies_subset.head()| Year | Gross Earnings | |

|---|---|---|

| 0 | 1916.0 | NaN |

| 1 | 1920.0 | 3000000.0 |

| 2 | 1925.0 | NaN |

| 3 | 1927.0 | 26435.0 |

| 4 | 1929.0 | 9950.0 |

We now call pivot_table on this subset of data. The method pivot_table takes a parameter index. As mentioned, we want to use Year as the index.

earnings_by_year = movies_subset.pivot_table(index=['Year'])

earnings_by_year.head()| Gross Earnings | |

|---|---|

| Year | |

| 1916.0 | NaN |

| 1920.0 | 3000000.0 |

| 1925.0 | NaN |

| 1927.0 | 26435.0 |

| 1929.0 | 1408975.0 |

This gave us a pivot table with grouping on Year and summarization on the sum of Gross Earnings. Notice, we didn’t need to specify Gross Earnings column explicitly as pandas automatically identified it the values on which summarization should be applied.

We can use this pivot table to create some data visualizations. We can call the plot method on the DataFrame to create a line plot and call the show method to display the plot in the notebook.

earnings_by_year.plot()

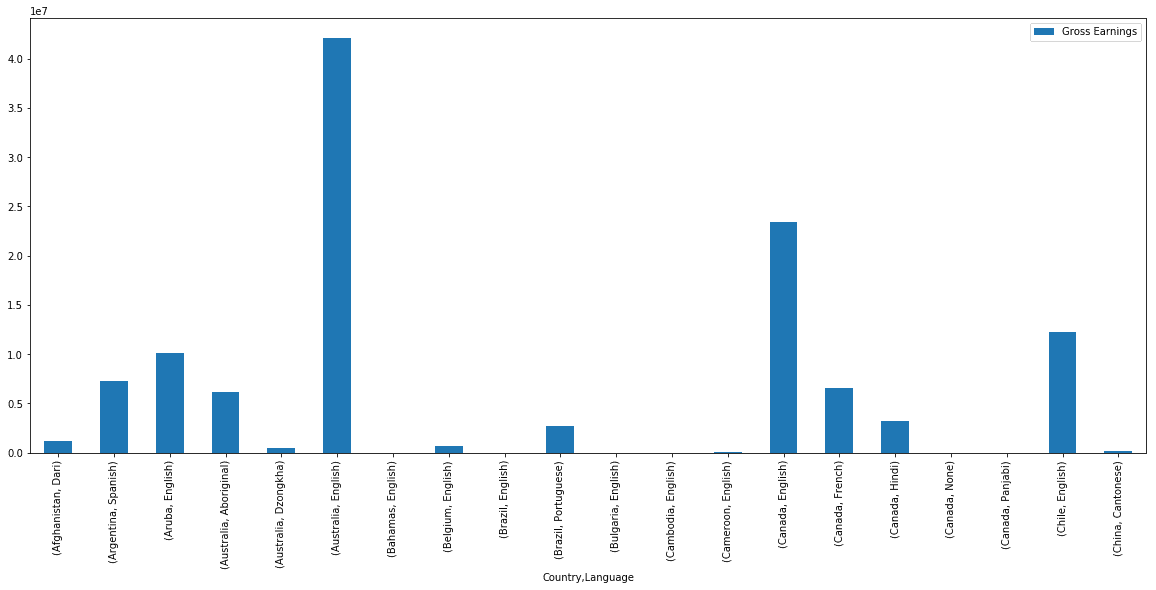

plt.show()We saw how to pivot with a single column as the index. Things will get more interesting if we can use multiple columns. Let’s create another DataFrame subset but this time we will choose the columns, Country, Language and Gross Earnings.

movies_subset = movies[['Country', 'Language', 'Gross Earnings']]

movies_subset.head()| Country | Language | Gross Earnings | |

|---|---|---|---|

| 0 | USA | NaN | NaN |

| 1 | USA | NaN | 3000000.0 |

| 2 | USA | NaN | NaN |

| 3 | Germany | German | 26435.0 |

| 4 | Germany | German | 9950.0 |

We will use columns Country and Language as the index for the pivot table. We will use Gross Earnings as summarization table, however, we do not need to specify this explicitly as we saw earlier.

earnings_by_co_lang = movies_subset.pivot_table(index=['Country', 'Language'])

earnings_by_co_lang.head()| Gross Earnings | ||

|---|---|---|

| Country | Language | |

| Afghanistan | Dari | 1.127331e+06 |

| Argentina | Spanish | 7.230936e+06 |

| Aruba | English | 1.007614e+07 |

| Australia | Aboriginal | 6.165429e+06 |

| Dzongkha | 5.052950e+05 |

Let’s visualize this pivot table with a bar plot. Since there are still few hundred records in this pivot table, we will plot just a few of them.

earnings_by_co_lang.head(20).plot(kind='bar', figsize=(20,8))

plt.show()Exporting the results to Excel

If you’re going to be working with colleagues who use Excel, saving Excel files out of pandas is important. You can export or write a pandas DataFrame to an Excel file using pandas to_excel method. Pandas uses the xlwt Python module internally for writing to Excel files. The to_excel method is called on the DataFrame we want to export.We also need to pass a filename to which this DataFrame will be written.

movies.to_excel('output.xlsx')By default, the index is also saved to the output file. However, sometimes the index doesn’t provide any useful information. For example, the movies DataFrame has a numeric auto-increment index, that was not part of the original Excel data.

movies.head()| Title | Year | Genres | Language | Country | Content Rating | Duration | Aspect Ratio | Budget | Gross Earnings | … | Facebook Likes — Actor 2 | Facebook Likes — Actor 3 | Facebook Likes — cast Total | Facebook likes — Movie | Facenumber in posters | User Votes | Reviews by Users | Reviews by Crtiics | IMDB Score | Net Earnings | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | Intolerance: Love’s Struggle Throughout the Ages | 1916.0 | Drama|History|War | NaN | USA | Not Rated | 123.0 | 1.33 | 385907.0 | NaN | … | 22.0 | 9.0 | 481 | 691 | 1.0 | 10718 | 88.0 | 69.0 | 8.0 | NaN |

| 1 | Over the Hill to the Poorhouse | 1920.0 | Crime|Drama | NaN | USA | NaN | 110.0 | 1.33 | 100000.0 | 3000000.0 | … | 2.0 | 0.0 | 4 | 0 | 1.0 | 5 | 1.0 | 1.0 | 4.8 | 2900000.0 |

| 2 | The Big Parade | 1925.0 | Drama|Romance|War | NaN | USA | Not Rated | 151.0 | 1.33 | 245000.0 | NaN | … | 12.0 | 6.0 | 108 | 226 | 0.0 | 4849 | 45.0 | 48.0 | 8.3 | NaN |

| 3 | Metropolis | 1927.0 | Drama|Sci-Fi | German | Germany | Not Rated | 145.0 | 1.33 | 6000000.0 | 26435.0 | … | 23.0 | 18.0 | 203 | 12000 | 1.0 | 111841 | 413.0 | 260.0 | 8.3 | -5973565.0 |

| 4 | Pandora’s Box | 1929.0 | Crime|Drama|Romance | German | Germany | Not Rated | 110.0 | 1.33 | NaN | 9950.0 | … | 20.0 | 3.0 | 455 | 926 | 1.0 | 7431 | 84.0 | 71.0 | 8.0 | NaN |

5 rows × 26 columns

You can choose to skip the index by passing along index-False.

movies.to_excel('output.xlsx', index=False)We need to be able to make our output files look nice before we can send it out to our co-workers. We can use pandas ExcelWriter class along with the XlsxWriter Python module to apply the formatting.

We can do use these advanced output options by creating a ExcelWriter object and use this object to write to the EXcel file.

writer = pd.ExcelWriter('output.xlsx', engine='xlsxwriter')

movies.to_excel(writer, index=False, sheet_name='report')

workbook = writer.bookworksheet = writer.sheets['report']We can apply customizations by calling add_format on the workbook we are writing to. Here we are setting header format as bold.

header_fmt = workbook.add_format({'bold': True})

worksheet.set_row(0, None, header_fmt)Finally, we save the output file by calling the method save on the writer object.

writer.save()As an example, we saved the data with column headers set as bold. And the saved file looks like the image below.

Like this, one can use XlsxWriter to apply various formatting to the output Excel file.

Conclusion

Pandas is not a replacement for Excel. Both tools have their place in the data analysis workflow and can be very great companion tools. As we demonstrated, pandas can do a lot of complex data analysis and manipulations, which depending on your need and expertise, can go beyond what you can achieve if you are just using Excel. One of the major benefits of using Python and pandas over Excel is that it helps you automate Excel file processing by writing scripts and integrating with your automated data workflow. Pandas also has excellent methods for reading all kinds of data from Excel files. You can export your results from pandas back to Excel too if that’s preferred by your intended audience.

On the other hand, Excel is a such a widely used data tool, it’s not a wise to ignore it. Acquiring expertise in both pandas and Excel and making them work together gives you skills that can help you stand out in your organization.

If you’d like to learn more about this topic, check out Dataquest’s interactive Pandas and NumPy Fundamentals course, and our Data Analyst in Python, and Data Scientist in Python paths that will help you become job-ready in around 6 months.

Learn how to import an Excel file (having .xlsx extension) using python pandas.

Pandas is the most popular data manipulation package in Python, and DataFrames are the Pandas data type for storing tabular 2D data. Reading data from excel files or CSV files, and writing data to Excel files or CSV files using Python Pandas is a necessary skill for any analyst or data scientist.

Table of Contents

- Python Pandas read_excel() Syntax

- Import Excel file using Python Pandas (Example)

- read_excel Important Parameters Examples

- Import Specific Excel Sheet using sheet name

- Import Multiple Excel Sheets Pandas

- Import only n Rows of Excel Sheet

- Import specific columns of Excel Sheet

- Import Specific Excel Sheet using sheet name

- Common Errors and Troubleshooting

1. Pandas read_excel() Syntax

The syntax of DataFrame to_excel() function and some of the important parameters are:

pandas.read_excel(io='filepath', sheet_name=0, header=0, usecols=None, nrows=None)| Sr.No | Parameters Description |

|---|---|

| 1 | io the file path from where you want to read the data. This could be a URL path or, could be a local system file path. Valid URL schemes include http, ftp, s3, and file. |

| 2 | sheet_name: str, int, list, or None, default 0 Available cases: ~Default is 0: 1st sheet as a DataFrame~Use 1: To read 2nd sheet as a DataFrame~Use Specific Sheet Name: "Sheet1" to load sheet with name “Sheet1”~Load Multiple Sheets using dict: [0, 2, "MySheet"] will load first, third and sheet named “MySheet” as a dictionary of DataFrame~None: Load All sheets |

| 3 | header default is 0. Pass Header = 1 to consider the second line of the dataset as a header. Use None if there is no header. |

| 4 | usecols ~Default is None, then parse all columns.~If str, then provide a comma-separated list of Excel columns (“A, B, D, E”) or range of Excel columns (e.g. “A:F” or “A, B,E:F”). Ranges are inclusive of both sides.~If list of int, indicates list of column numbers to be parsed e.g. [1,2,5].~If list of string, provide list of column names to be parsed e.g. [“A, B, D, E”]. |

| 5 | nrows: Default is NoneNumber of rows to parse (provide int). |

For complete list of read_excel parameters refer to official documentation.

2. Import Excel file using Python Pandas

Let’s review a full example:

- Create a DataFrame from scratch and save it as Excel

- Import (or load) the DataFrame from above saved Excel file

import pandas as pd

# Create a dataframe

raw_data = {'first_name': ['Sam','Ziva','Kia','Robin'],

'degree': ['PhD','MBA','','MS'],

'age': [25, 29, 19, 21]}

df = pd.DataFrame(raw_data)

df

#Save the dataframe to the current directory

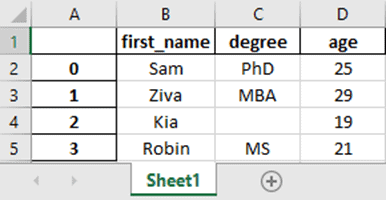

df.to_excel(r'Example1.xlsx')We have the following data about students:

| first_name | degree | age | |

|---|---|---|---|

| 0 | Sam | PhD | 25 |

| 1 | Ziva | MBA | 29 |

| 2 | Kia | 19 | |

| 3 | Robin | MS | 21 |

Read Excel file into Pandas DataFrame (Explained)

Now, let’s see the steps to import the Excel file into a DataFrame.

Step 1: Enter the path and filename where the Excel file is stored. The could be a local system file path or URL path.

For example,

pd.read_excel(r‘D:PythonTutorialExample1.csv‘)

Notice that path is highlighted with 3 different colors:

- The blue part represents the path where the Excel file is saved.

- The green part is the name of the file you want to import.

- The purple part represents the file type or Excel file extension. Use ‘.xlsx’ in case of an Excel file.

Modify the Python above code to reflect the path where the Excel file is stored on your computer.

Note: You can save or read an Excel file without explicitly providing a file path (blue part) by placing the file in the current working directory. To find current directory path use below code:

# Current working directory

import os

print(os.getcwd())

# Display all files present in the current working directory

print(os.listdir(os.getcwd()))D:PythonTutorial Example1.xlsx

Find out how to read multiple files in a folder(directory) here.

Step 2: Enter the following code and make the necessary changes to your path to read the Excel file.

import pandas as pd

# Read the excel file

df = pd.read_excel(r'D:PythonTutorialExample1.xlsx')

dfSnapshot of Data Representation in Excel files

On the left side of the image Excel file is opened in Microsoft Excel. On the right side same Excel file is opened in Juptyter Notebook using pandas read_excel.

3. Pandas read_excel Important Parameters Examples

3.1 Import Specific Excel Sheet using Python Pandas

There may be Multiple Sheets in an Excel file. Pandas provide various methods to import one or multiple excel sheets in sheet_name parameter.

- Default is

0: Read the 1st sheet in Excel as a DataFrame - Use

1: To read 2nd sheet as a DataFrame - Use Specific Sheet Name:

"Sheet1"to load sheet with name “Sheet1” - Load Multiple Sheets using dict:

[0, 2, "MySheet"]will load first, third and sheet named “MySheet” as a dictionary of DataFrame - None: Load All sheets

1. Import Excel Sheet using Integer

By default sheet_name = 0 imports the 1st sheet in Excel as a DataFrame. To import Second Excel Sheet i.e. “Salary Info” in our case as a Pandas DataFrame use sheet_name = 1

import pandas as pd

# Read "Salary Info" Sheet from Excel file (2nd Sheet)

df = pd.read_excel(r'D:PythonTutorialExample1.xlsx',sheet_name=1)

df| first_name | salary | |

| 0 | Sam | 120000 |

| 1 | Ziva | 80000 |

| 2 | Kia | 110000 |

| 3 | Robin | 150000 |

2. Import Specific Excel Sheet using Sheet Name

To import Specific Excel Sheet i.e. “Personal Info” as a Pandas DataFrame using sheet_name = "Personal Info"

import pandas as pd

# Read excel file sheet "Personal Info" using sheetname

df = pd.read_excel(r'D:PythonTutorialExample1.xlsx',sheet_name="Personal Info")

df| first_name | degree | age | |

| 0 | Sam | PhD | 25 |

| 1 | Ziva | MBA | 29 |

| 2 | Kia | NaN | 19 |

| 3 | Robin | MS | 21 |

3. Import Multiple Excel Sheet into Pandas DataFrame

Multiple Excel Sheets can be read into Pandas DataFrame by passing list in the sheet_name parameter e.g. [0, “Salary Info”] will load the first sheet and sheet named “Salary Info” as a dictionary of DataFrame.

import pandas as pd

# Read multiple excel file sheets as dictionary of DataFrame

df = pd.read_excel(r'D:PythonTutorialExample1.xlsx',sheet_name=[0, "Salary Info"])

df

Now to store different sheets into different DataFrames use Dictionary Key Value.

import pandas as pd

# Read multiple excel file sheets as dictionary of DataFrame

df = pd.read_excel(r'D:PythonTutorialExample1.xlsx',sheet_name=[0, "Salary Info"])

# As seen in the output above Keys are 0 and "Salary_Info"

Personal_Info = df[0]

Salary_Info = df["Salary Info"]

print(Personal_Info)

print(Salary_Info)3.2 Import only n Rows of Excel Sheet using Pandas

Sometimes Excel file is quite big or our system has memory constraints. In this case, we can import only the top n rows of Excel Sheet using Pandas read_excel nrows parameter. For example, to import only top 2 rows use nrows=2

import pandas as pd

# Load top 2 rows of Excel sheets as Pandas DataFrame

df = pd.read_excel(r'D:PythonTutorialExample1.xlsx',nrows=2)

df| first_name | degree | age | |

| 0 | Sam | PhD | 25 |

| 1 | Ziva | MBA | 29 |

3.3 Import specific columns of Excel Sheet

There may be hundreds of columns in excel sheet, but while importing we need only few columns. In this case, we can pass usecols parameter. Different ways to use usecols parameter are below:

- Default is

None, parse all columns. - If

str, then provide a comma-separated list of Excel columns (“A, B, D, E”) or range of Excel columns (e.g. “A:F” or “A, B,E:F”). Ranges are inclusive of both sides. - If

list of int, indicates list of column numbers to be parsed e.g. [0,2,5]. - If

list of string, provide list of column names to be parsed e.g. [“A, B, D, E”].

import pandas as pd

# Import 1st and 3rd columns of Execl sheet as Pandas DataFrame

df = pd.read_excel(r'D:PythonTutorialExample1.xlsx',usecols=[0,2])

df| first_name | age | |

| 0 | Sam | 25 |

| 1 | Ziva | 29 |

| 2 | Kia | 19 |

| 3 | Robin | 21 |

4. Common Errors and Troubleshooting

Listing down the common error you can face while loading data from CSV files into Pandas dataframe will be:

FileNotFoundError: File b'filename.csv' does not exist- Reason: File Not Found error typically occurs when there is an issue with the file path (or directory) or file name.

- Fix: Check file path, file name, and file extension.

SyntaxError: (unicode error) 'unicodeescape' codec can't decode bytes in position 2-3: truncated UXXXXXXXX escape- Reason: In

Ustarts an eight-character Unicode escape, such asU00014321. In the code, the escape is followed by the character ‘s’, which is invalid. - Fix:

- Use the prefix string with

r(to produce a raw string)pd.read_excel(r'D:PythonTutorialfilename.xlsx')or, - You either need to duplicate all backslashes

pd.read_excel(r'D:\Python\Tutorial\filename.xlsx')

- Use the prefix string with

- Reason: In

ImportError: Install xlrd >= 1.0.0 for Excel support.- Reason: xlrd package is not available in the python environment

- Fix: Install xlrd package if you get the above error

pip install xlrd

Conclusion

We have covered the steps needed to read an Excel file in python using pandas read_excel function.

Go to read data from csv files, and write data to CSV files using Python.

В Python данные из файла Excel считываются в объект DataFrame. Для этого используется функция read_excel() модуля pandas.

Лист Excel — это двухмерная таблица. Объект DataFrame также представляет собой двухмерную табличную структуру данных.

- Пример использования Pandas read_excel()

- Список заголовков столбцов листа Excel

- Вывод данных столбца

- Пример использования Pandas to Excel: read_excel()

- Чтение файла Excel без строки заголовка

- Лист Excel в Dict, CSV и JSON

- Ресурсы

Предположим, что у нас есть документ Excel, состоящий из двух листов: «Employees» и «Cars». Верхняя строка содержит заголовок таблицы.

Ниже приведен код, который считывает данные листа «Employees» и выводит их.

import pandas

excel_data_df = pandas.read_excel('records.xlsx', sheet_name='Employees')

# print whole sheet data

print(excel_data_df)

Вывод:

EmpID EmpName EmpRole 0 1 Pankaj CEO 1 2 David Lee Editor 2 3 Lisa Ray Author

Первый параметр, который принимает функция read_excel ()— это имя файла Excel. Второй параметр (sheet_name) определяет лист для считывания данных.

При выводе содержимого объекта DataFrame мы получаем двухмерные таблицы, схожие по своей структуре со структурой документа Excel.

Чтобы получить список заголовков столбцов таблицы, используется свойство columns объекта Dataframe. Пример реализации:

print(excel_data_df.columns.ravel())

Вывод:

['Pankaj', 'David Lee', 'Lisa Ray']

Мы можем получить данные из столбца и преобразовать их в список значений. Пример:

print(excel_data_df['EmpName'].tolist())

Вывод:

['Pankaj', 'David Lee', 'Lisa Ray']

Можно указать имена столбцов для чтения из файла Excel. Это потребуется, если нужно вывести данные из определенных столбцов таблицы.

import pandas

excel_data_df = pandas.read_excel('records.xlsx', sheet_name='Cars', usecols=['Car Name', 'Car Price'])

print(excel_data_df)

Вывод:

Car Name Car Price 0 Honda City 20,000 USD 1 Bugatti Chiron 3 Million USD 2 Ferrari 458 2,30,000 USD

Если в листе Excel нет строки заголовка, нужно передать его значение как None.

excel_data_df = pandas.read_excel('records.xlsx', sheet_name='Numbers', header=None)

Если вы передадите значение заголовка как целое число (например, 3), тогда третья строка станет им. При этом считывание данных начнется со следующей строки. Данные, расположенные перед строкой заголовка, будут отброшены.

Объект DataFrame предоставляет различные методы для преобразования табличных данных в формат Dict , CSV или JSON.

excel_data_df = pandas.read_excel('records.xlsx', sheet_name='Cars', usecols=['Car Name', 'Car Price'])

print('Excel Sheet to Dict:', excel_data_df.to_dict(orient='record'))

print('Excel Sheet to JSON:', excel_data_df.to_json(orient='records'))

print('Excel Sheet to CSV:n', excel_data_df.to_csv(index=False))

Вывод:

Excel Sheet to Dict: [{'Car Name': 'Honda City', 'Car Price': '20,000 USD'}, {'Car Name': 'Bugatti Chiron', 'Car Price': '3 Million USD'}, {'Car Name': 'Ferrari 458', 'Car Price': '2,30,000 USD'}]

Excel Sheet to JSON: [{"Car Name":"Honda City","Car Price":"20,000 USD"},{"Car Name":"Bugatti Chiron","Car Price":"3 Million USD"},{"Car Name":"Ferrari 458","Car Price":"2,30,000 USD"}]

Excel Sheet to CSV:

Car Name,Car Price

Honda City,"20,000 USD"

Bugatti Chiron,3 Million USD

Ferrari 458,"2,30,000 USD"

- Документы API pandas read_excel()

Дайте знать, что вы думаете по этой теме материала в комментариях. Мы крайне благодарны вам за ваши комментарии, дизлайки, подписки, лайки, отклики!

Время на прочтение

7 мин

Количество просмотров 172K

Если Вы только начинаете свой путь знакомства с возможностями Python, ваши познания еще имеют начальный уровень — этот материал для Вас. В статье мы опишем, как можно извлекать информацию из данных, представленных в Excel файлах, работать с ними используя базовый функционал библиотек. В первой части статьи мы расскажем про установку необходимых библиотек и настройку среды. Во второй части — предоставим обзор библиотек, которые могут быть использованы для загрузки и записи таблиц в файлы с помощью Python и расскажем как работать с такими библиотеками как pandas, openpyxl, xlrd, xlutils, pyexcel.

В какой-то момент вы неизбежно столкнетесь с необходимостью работы с данными Excel, и нет гарантии, что работа с таким форматами хранения данных доставит вам удовольствие. Поэтому разработчики Python реализовали удобный способ читать, редактировать и производить иные манипуляции не только с файлами Excel, но и с файлами других типов.

Отправная точка — наличие данных

ПЕРЕВОД

Оригинал статьи — www.datacamp.com/community/tutorials/python-excel-tutorial

Автор — Karlijn Willems

Когда вы начинаете проект по анализу данных, вы часто сталкиваетесь со статистикой собранной, возможно, при помощи счетчиков, возможно, при помощи выгрузок данных из систем типа Kaggle, Quandl и т. д. Но большая часть данных все-таки находится в Google или репозиториях, которыми поделились другие пользователи. Эти данные могут быть в формате Excel или в файле с .csv расширением.

Данные есть, данных много. Анализируй — не хочу. С чего начать? Первый шаг в анализе данных — их верификация. Иными словами — необходимо убедиться в качестве входящих данных.

В случае, если данные хранятся в таблице, необходимо не только подтвердить качество данных (нужно быть уверенным, что данные таблицы ответят на поставленный для исследования вопрос), но и оценить, можно ли доверять этим данным.

Проверка качества таблицы

Чтобы проверить качество таблицы, обычно используют простой чек-лист. Отвечают ли данные в таблице следующим условиям:

- данные являются статистикой;

- различные типы данных: время, вычисления, результат;

- данные полные и консистентные: структура данных в таблице — систематическая, а присутствующие формулы — работающие.

Ответы на эти простые вопросы позволят понять, не противоречит ли ваша таблица стандарту. Конечно, приведенный чек-лист не является исчерпывающим: существует много правил, на соответствие которым вы можете проверять данные в таблице, чтобы убедиться, что таблица не является “гадким утенком”. Однако, приведенный выше чек-лист наиболее актуален, если вы хотите убедиться, что таблица содержит качественные данные.

Бест-практикс табличных данных

Читать данные таблицы при помощи Python — это хорошо. Но данные хочется еще и редактировать. Причем редактирование данных в таблице, должно соответствовать следующим условиям:

- первая строка таблицы зарезервирована для заголовка, а первый столбец используется для идентификации единицы выборки;

- избегайте имен, значений или полей с пробелами. В противном случае, каждое слово будет интерпретироваться как отдельная переменная, что приведет к ошибкам, связанным с количеством элементов в строке в наборе данных. Лучше использовать подчеркивания, регистр (первая буква каждого раздела текста — заглавная) или соединительные слова;

- отдавайте предпочтение коротким названиям;

- старайтесь избегать использования названий, которые содержат символы ?, $,%, ^, &, *, (,),-,#, ?,,,<,>, /, |, , [ ,] ,{, и };

- удаляйте любые комментарии, которые вы сделали в файле, чтобы избежать дополнительных столбцов или полей со значением NA;

- убедитесь, что любые недостающие значения в наборе данных отображаются как NA.

После внесения необходимых изменений (или когда вы внимательно просмотрите свои данные), убедитесь, что внесенные изменения сохранены. Это важно, потому что позволит еще раз взглянуть на данные, при необходимости отредактировать, дополнить или внести изменения, сохраняя формулы, которые, возможно, использовались для расчета.

Если вы работаете с Microsoft Excel, вы наверняка знаете, что есть большое количество вариантов сохранения файла помимо используемых по умолчанию расширения: .xls или .xlsx (переходим на вкладку “файл”, “сохранить как” и выбираем другое расширение (наиболее часто используемые расширения для сохранения данных с целью анализа — .CSV и.ТХТ)). В зависимости от варианта сохранения поля данных будут разделены знаками табуляции или запятыми, которые составляют поле “разделитель”. Итак, данные проверены и сохранены. Начинаем готовить рабочее пространство.

Подготовка рабочего пространства

Подготовка рабочего пространства — одна из первых вещей, которую надо сделать, чтобы быть уверенным в качественном результате анализа.

Первый шаг — проверка рабочей директории.

Когда вы работаете в терминале, вы можете сначала перейти к директории, в которой находится ваш файл, а затем запустить Python. В таком случае необходимо убедиться, что файл находится в директории, из которой вы хотите работать.

Для проверки дайте следующие команды:

# Import `os`

import os

# Retrieve current working directory (`cwd`)

cwd = os.getcwd()

cwd

# Change directory

os.chdir("/path/to/your/folder")

# List all files and directories in current directory

os.listdir('.')

Эти команды важны не только для загрузки данных, но и для дальнейшего анализа. Итак, вы прошли все проверки, вы сохранили данные и подготовили рабочее пространство. Уже можно начать чтение данных в Python?

Установка пакетов для чтения и записи Excel файлов

Несмотря на то, что вы еще не знаете, какие библиотеки будут нужны для импорта данных, нужно убедиться, что у все готово для установки этих библиотек. Если у вас установлен Python 2> = 2.7.9 или Python 3> = 3.4, нет повода для беспокойства — обычно, в этих версиях уже все подготовлено. Поэтому просто убедитесь, что вы обновились до последней версии

Для этого запустите в своем компьютере следующую команду:

# For Linux/OS X

pip install -U pip setuptools

# For Windows

python -m pip install -U pip setuptoolsВ случае, если вы еще не установили pip, запустите скрипт python get-pip.py, который вы можете найти здесь (там же есть инструкции по установке и help).

Установка Anaconda

Установка дистрибутива Anaconda Python — альтернативный вариант, если вы используете Python для анализа данных. Это простой и быстрый способ начать работу с анализом данных — ведь отдельно устанавливать пакеты, необходимые для data science не придется.

Это особенно удобно для новичков, однако даже опытные разработчики часто идут этим путем, ведь Anakonda — удобный способ быстро протестировать некоторые вещи без необходимости устанавливать каждый пакет отдельно.

Anaconda включает в себя 100 наиболее популярных библиотек Python, R и Scala для анализа данных в нескольких средах разработки с открытым исходным кодом, таких как Jupyter и Spyder. Если вы хотите начать работу с Jupyter Notebook, то вам сюда.

Чтобы установить Anaconda — вам сюда.

Загрузка файлов Excel как Pandas DataFrame

Ну что ж, мы сделали все, чтобы настроить среду! Теперь самое время начать импорт файлов.

Один из способов, которым вы будете часто пользоваться для импорта файлов с целью анализа данных — импорт с помощью библиотеки Pandas (Pandas — программная библиотека на языке Python для обработки и анализа данных). Работа Pandas с данными происходит поверх библиотеки NumPy, являющейся инструментом более низкого уровня. Pandas — мощная и гибкая библиотека и она очень часто используется для структуризации данных в целях облегчения анализа.

Если у вас уже есть Pandas в Anaconda, вы можете просто загрузить файлы в Pandas DataFrames с помощью pd.Excelfile ():

# Import pandas

import pandas as pd

# Assign spreadsheet filename to `file`

file = 'example.xlsx'

# Load spreadsheet

xl = pd.ExcelFile(file)

# Print the sheet names

print(xl.sheet_names)

# Load a sheet into a DataFrame by name: df1

df1 = xl.parse('Sheet1')Если вы не установили Anaconda, просто запустите pip install pandas, чтобы установить пакет Pandas в вашей среде, а затем выполните команды, приведенные выше.

Для чтения .csv-файлов есть аналогичная функция загрузки данных в DataFrame: read_csv (). Вот пример того, как вы можете использовать эту функцию:

# Import pandas

import pandas as pd

# Load csv

df = pd.read_csv("example.csv") Разделителем, который эта функция будет учитывать, является по умолчанию запятая, но вы можете, если хотите, указать альтернативный разделитель. Перейдите к документации, если хотите узнать, какие другие аргументы можно указать, чтобы произвести импорт.

Как записывать Pandas DataFrame в Excel файл

Предположим, после анализа данных вы хотите записать данные в новый файл. Существует способ записать данные Pandas DataFrames (с помощью функции to_excel ). Но, прежде чем использовать эту функцию, убедитесь, что у вас установлен XlsxWriter, если вы хотите записать свои данные на несколько листов в файле .xlsx:

# Install `XlsxWriter`

pip install XlsxWriter

# Specify a writer

writer = pd.ExcelWriter('example.xlsx', engine='xlsxwriter')

# Write your DataFrame to a file

yourData.to_excel(writer, 'Sheet1')

# Save the result

writer.save()

Обратите внимание, что в фрагменте кода используется объект ExcelWriter для вывода DataFrame. Иными словами, вы передаете переменную writer в функцию to_excel (), и указываете имя листа. Таким образом, вы добавляете лист с данными в существующую книгу. Также можно использовать ExcelWriter для сохранения нескольких разных DataFrames в одной книге.

То есть если вы просто хотите сохранить один файл DataFrame в файл, вы можете обойтись без установки библиотеки XlsxWriter. Просто не указываете аргумент, который передается функции pd.ExcelWriter (), остальные шаги остаются неизменными.

Подобно функциям, которые используются для чтения в .csv-файлах, есть также функция to_csv () для записи результатов обратно в файл с разделителями-запятыми. Он работает так же, как когда мы использовали ее для чтения в файле:

# Write the DataFrame to csv

df.to_csv("example.csv")Если вы хотите иметь отдельный файл с вкладкой, вы можете передать a t аргументу sep. Обратите внимание, что существуют различные другие функции, которые можно использовать для вывода файлов. Их можно найти здесь.

Использование виртуальной среды

Общий совет по установке библиотек — делать установку в виртуальной среде Python без системных библиотек. Вы можете использовать virtualenv для создания изолированных сред Python: он создает папку, содержащую все необходимое для использования библиотек, которые потребуются для Python.

Чтобы начать работу с virtualenv, сначала нужно его установить. Потом перейти в директорию, где будет находится проект. Создать virtualenv в этой папке и загрузить, если нужно, в определенную версию Python. После этого активируете виртуальную среду. Теперь можно начинать загрузку других библиотек и начинать работать с ними.

Не забудьте отключить среду, когда вы закончите!

# Install virtualenv

$ pip install virtualenv

# Go to the folder of your project

$ cd my_folder

# Create a virtual environment `venv`

$ virtualenv venv

# Indicate the Python interpreter to use for `venv`

$ virtualenv -p /usr/bin/python2.7 venv

# Activate `venv`

$ source venv/bin/activate

# Deactivate `venv`

$ deactivate

Обратите внимание, что виртуальная среда может показаться сначала проблематичной, если вы делаете первые шаги в области анализа данных с помощью Python. И особенно, если у вас только один проект, вы можете не понимать, зачем вообще нужна виртуальная среда.

Но что делать, если у вас несколько проектов, работающих одновременно, и вы не хотите, чтобы они использовали одну и ту же установку Python? Или если у ваших проектов есть противоречивые требования. В таких случаях виртуальная среда — идеальное решение.

Во второй части статьи мы расскажем об основных библиотеках для анализа данных.

Продолжение следует…

The read_excel() method can read Excel 2003 (.xls) and

Excel 2007+ (.xlsx) files using the xlrd Python

module. The to_excel() instance method is used for

saving a DataFrame to Excel. Generally the semantics are

similar to working with csv data. See the cookbook for some

advanced strategies

10.5.1 Reading Excel Files

In the most basic use-case, read_excel takes a path to an Excel

file, and the sheetname indicating which sheet to parse.

# Returns a DataFrame read_excel('path_to_file.xls', sheetname='Sheet1')

10.5.1.1 ExcelFile class

To facilitate working with multiple sheets from the same file, the ExcelFile

class can be used to wrap the file and can be be passed into read_excel

There will be a performance benefit for reading multiple sheets as the file is

read into memory only once.

xlsx = pd.ExcelFile('path_to_file.xls) df = pd.read_excel(xlsx, 'Sheet1')

The ExcelFile class can also be used as a context manager.

with pd.ExcelFile('path_to_file.xls') as xls: df1 = pd.read_excel(xls, 'Sheet1') df2 = pd.read_excel(xls, 'Sheet2')

The sheet_names property will generate

a list of the sheet names in the file.

The primary use-case for an ExcelFile is parsing multiple sheets with

different parameters

data = {} # For when Sheet1's format differs from Sheet2 with pd.ExcelFile('path_to_file.xls') as xls: data['Sheet1'] = pd.read_excel(xls, 'Sheet1', index_col=None, na_values=['NA']) data['Sheet2'] = pd.read_excel(xls, 'Sheet2', index_col=1)

Note that if the same parsing parameters are used for all sheets, a list

of sheet names can simply be passed to read_excel with no loss in performance.

# using the ExcelFile class data = {} with pd.ExcelFile('path_to_file.xls') as xls: data['Sheet1'] = read_excel(xls, 'Sheet1', index_col=None, na_values=['NA']) data['Sheet2'] = read_excel(xls, 'Sheet2', index_col=None, na_values=['NA']) # equivalent using the read_excel function data = read_excel('path_to_file.xls', ['Sheet1', 'Sheet2'], index_col=None, na_values=['NA'])

New in version 0.12.

ExcelFile has been moved to the top level namespace.

New in version 0.17.

read_excel can take an ExcelFile object as input

10.5.1.2 Specifying Sheets

Note

The second argument is sheetname, not to be confused with ExcelFile.sheet_names

Note

An ExcelFile’s attribute sheet_names provides access to a list of sheets.

- The arguments

sheetnameallows specifying the sheet or sheets to read. - The default value for

sheetnameis 0, indicating to read the first sheet - Pass a string to refer to the name of a particular sheet in the workbook.

- Pass an integer to refer to the index of a sheet. Indices follow Python

convention, beginning at 0. - Pass a list of either strings or integers, to return a dictionary of specified sheets.

- Pass a

Noneto return a dictionary of all available sheets.

# Returns a DataFrame read_excel('path_to_file.xls', 'Sheet1', index_col=None, na_values=['NA'])

Using the sheet index:

# Returns a DataFrame read_excel('path_to_file.xls', 0, index_col=None, na_values=['NA'])

Using all default values:

# Returns a DataFrame read_excel('path_to_file.xls')

Using None to get all sheets:

# Returns a dictionary of DataFrames read_excel('path_to_file.xls',sheetname=None)

Using a list to get multiple sheets:

# Returns the 1st and 4th sheet, as a dictionary of DataFrames. read_excel('path_to_file.xls',sheetname=['Sheet1',3])

New in version 0.16.

read_excel can read more than one sheet, by setting sheetname to either

a list of sheet names, a list of sheet positions, or None to read all sheets.

New in version 0.13.

Sheets can be specified by sheet index or sheet name, using an integer or string,

respectively.

10.5.1.3 Reading a MultiIndex

New in version 0.17.

read_excel can read a MultiIndex index, by passing a list of columns to index_col

and a MultiIndex column by passing a list of rows to header. If either the index

or columns have serialized level names those will be read in as well by specifying

the rows/columns that make up the levels.

For example, to read in a MultiIndex index without names:

In [1]: df = pd.DataFrame({'a':[1,2,3,4], 'b':[5,6,7,8]}, ...: index=pd.MultiIndex.from_product([['a','b'],['c','d']])) ...: In [2]: df.to_excel('path_to_file.xlsx') In [3]: df = pd.read_excel('path_to_file.xlsx', index_col=[0,1]) In [4]: df Out[4]: a b a c 1 5 d 2 6 b c 3 7 d 4 8

If the index has level names, they will parsed as well, using the same

parameters.

In [5]: df.index = df.index.set_names(['lvl1', 'lvl2']) In [6]: df.to_excel('path_to_file.xlsx') In [7]: df = pd.read_excel('path_to_file.xlsx', index_col=[0,1]) In [8]: df Out[8]: a b lvl1 lvl2 a c 1 5 d 2 6 b c 3 7 d 4 8

If the source file has both MultiIndex index and columns, lists specifying each

should be passed to index_col and header

In [9]: df.columns = pd.MultiIndex.from_product([['a'],['b', 'd']], names=['c1', 'c2']) In [10]: df.to_excel('path_to_file.xlsx') In [11]: df = pd.read_excel('path_to_file.xlsx', ....: index_col=[0,1], header=[0,1]) ....: In [12]: df Out[12]: c1 a c2 b d lvl1 lvl2 a c 1 5 d 2 6 b c 3 7 d 4 8

Warning

Excel files saved in version 0.16.2 or prior that had index names will still able to be read in,

but the has_index_names argument must specified to True.

10.5.1.4 Parsing Specific Columns

It is often the case that users will insert columns to do temporary computations

in Excel and you may not want to read in those columns. read_excel takes

a parse_cols keyword to allow you to specify a subset of columns to parse.

If parse_cols is an integer, then it is assumed to indicate the last column

to be parsed.

read_excel('path_to_file.xls', 'Sheet1', parse_cols=2)

If parse_cols is a list of integers, then it is assumed to be the file column

indices to be parsed.

read_excel('path_to_file.xls', 'Sheet1', parse_cols=[0, 2, 3])

10.5.1.5 Cell Converters

It is possible to transform the contents of Excel cells via the converters

option. For instance, to convert a column to boolean:

read_excel('path_to_file.xls', 'Sheet1', converters={'MyBools': bool})

This options handles missing values and treats exceptions in the converters

as missing data. Transformations are applied cell by cell rather than to the

column as a whole, so the array dtype is not guaranteed. For instance, a

column of integers with missing values cannot be transformed to an array

with integer dtype, because NaN is strictly a float. You can manually mask

missing data to recover integer dtype:

cfun = lambda x: int(x) if x else -1 read_excel('path_to_file.xls', 'Sheet1', converters={'MyInts': cfun})

10.5.2 Writing Excel Files

10.5.2.1 Writing Excel Files to Disk

To write a DataFrame object to a sheet of an Excel file, you can use the

to_excel instance method. The arguments are largely the same as to_csv

described above, the first argument being the name of the excel file, and the

optional second argument the name of the sheet to which the DataFrame should be

written. For example:

df.to_excel('path_to_file.xlsx', sheet_name='Sheet1')

Files with a .xls extension will be written using xlwt and those with a

.xlsx extension will be written using xlsxwriter (if available) or

openpyxl.

The DataFrame will be written in a way that tries to mimic the REPL output. One

difference from 0.12.0 is that the index_label will be placed in the second

row instead of the first. You can get the previous behaviour by setting the

merge_cells option in to_excel() to False:

df.to_excel('path_to_file.xlsx', index_label='label', merge_cells=False)

The Panel class also has a to_excel instance method,

which writes each DataFrame in the Panel to a separate sheet.

In order to write separate DataFrames to separate sheets in a single Excel file,

one can pass an ExcelWriter.

with ExcelWriter('path_to_file.xlsx') as writer: df1.to_excel(writer, sheet_name='Sheet1') df2.to_excel(writer, sheet_name='Sheet2')

Note

Wringing a little more performance out of read_excel

Internally, Excel stores all numeric data as floats. Because this can

produce unexpected behavior when reading in data, pandas defaults to trying

to convert integers to floats if it doesn’t lose information (1.0 -->). You can pass

1convert_float=False to disable this behavior, which

may give a slight performance improvement.

10.5.2.2 Writing Excel Files to Memory

New in version 0.17.

Pandas supports writing Excel files to buffer-like objects such as StringIO or

BytesIO using ExcelWriter.

New in version 0.17.

Added support for Openpyxl >= 2.2

# Safe import for either Python 2.x or 3.x try: from io import BytesIO except ImportError: from cStringIO import StringIO as BytesIO bio = BytesIO() # By setting the 'engine' in the ExcelWriter constructor. writer = ExcelWriter(bio, engine='xlsxwriter') df.to_excel(writer, sheet_name='Sheet1') # Save the workbook writer.save() # Seek to the beginning and read to copy the workbook to a variable in memory bio.seek(0) workbook = bio.read()

Note

engine is optional but recommended. Setting the engine determines

the version of workbook produced. Setting engine='xlrd' will produce an

Excel 2003-format workbook (xls). Using either 'openpyxl' or

'xlsxwriter' will produce an Excel 2007-format workbook (xlsx). If

omitted, an Excel 2007-formatted workbook is produced.

10.5.3 Excel writer engines

New in version 0.13.

pandas chooses an Excel writer via two methods:

- the

enginekeyword argument - the filename extension (via the default specified in config options)

By default, pandas uses the XlsxWriter for .xlsx and openpyxl

for .xlsm files and xlwt for .xls files. If you have multiple

engines installed, you can set the default engine through setting the

config options io.excel.xlsx.writer and

io.excel.xls.writer. pandas will fall back on openpyxl for .xlsx

files if Xlsxwriter is not available.

To specify which writer you want to use, you can pass an engine keyword

argument to to_excel and to ExcelWriter. The built-in engines are:

openpyxl: This includes stable support for Openpyxl from 1.6.1. However,

it is advised to use version 2.2 and higher, especially when working with

styles.xlsxwriterxlwt

# By setting the 'engine' in the DataFrame and Panel 'to_excel()' methods. df.to_excel('path_to_file.xlsx', sheet_name='Sheet1', engine='xlsxwriter') # By setting the 'engine' in the ExcelWriter constructor. writer = ExcelWriter('path_to_file.xlsx', engine='xlsxwriter') # Or via pandas configuration. from pandas import options options.io.excel.xlsx.writer = 'xlsxwriter' df.to_excel('path_to_file.xlsx', sheet_name='Sheet1')