Improve Article

Save Article

Like Article

Improve Article

Save Article

Like Article

MS Excel is a powerful tool for handling huge amounts of tabular data. It can be particularly useful for sorting, analyzing, performing complex calculations and visualizing data. In this article, we will discuss how to extract a table from a webpage and store it in Excel format.

Step #1: Converting to Pandas dataframe

Pandas is a Python library used for managing tables. Our first step would be to store the table from the webpage into a Pandas dataframe. The function read_html() returns a list of dataframes, each element representing a table in the webpage. Here we are assuming that the webpage contains a single table.

Output

0 1 2 3 4 0 ROLL_NO NAME ADDRESS PHONE AGE 1 1 RAM DELHI 9455123451 18 2 2 RAMESH GURGAON 9652431543 18 3 3 SUJIT ROHTAK 9156253131 20 4 4 SURESH DELHI 9156768971 18

Step #2: Storing the Pandas dataframe in an excel file

For this, we use the to_excel() function of Pandas, passing the filename as a parameter.

Output:

In case of multiple tables on the webpage, we can change the index number from 0 to that of the required table.

Like Article

Save Article

What will we cover in this tutorial?

Yes, you can do it manually. Copy from an HTML table and paste into an Excel spread sheet. Or you can dive into how to pull data directly from the internet into Excel. Sometimes it is not convenient, as some data needs to be transformed and you need to do it often.

In this tutorial we will show how this can be easily automated with Python using Pandas.

That is we go from data that needs to be transformed, like, $102,000 into 102000. Also, how to join (or merge) different datasources before we create a Excel spread sheet.

Step 1: The first data source: Revenue of Microsoft

There are many sources where you can get this data, but Macrotrends has it nicely in a table and for more than 10 years old data.

First thing first, let’s try to take a look at the data. You can use Pandas read_html to get the data from the tables given a URL.

import pandas as pd url = "https://www.macrotrends.net/stocks/charts/MSFT/microsoft/revenue" tables = pd.read_html(url) revenue = tables[0] print(revenue)

Where we know it is in the first table on the page. A first few lines of the output is given here.

Microsoft Annual Revenue(Millions of US $) Microsoft Annual Revenue(Millions of US $).1 0 2020 $143,015 1 2019 $125,843 2 2018 $110,360 3 2017 $96,571 4 2016 $91,154

First thing to manage are the column names and setting the year to the index.

import pandas as pd

url = "https://www.macrotrends.net/stocks/charts/MSFT/microsoft/revenue"

tables = pd.read_html(url)

revenue = tables[0]

revenue.columns = ['Year', 'Revenue']

revenue = revenue.set_index('Year')

print(revenue)

A first few lines.

Revenue Year 2020 $143,015 2019 $125,843 2018 $110,360 2017 $96,571 2016 $91,154

That helped. But then we need to convert the Revenue column to integers. This is a bit tricky and can be done in various ways. We first need to remove the $-sign, then the comma-sign, before we convert it.

revenue['Revenue'] = pd.to_numeric(revenue['Revenue'].str[1:].str.replace(',',''), errors='coerce')

And that covers it.

Step 2: Getting another data source: Free Cash Flow for Microsoft

We want to combine this data with the Free Cash Flow (FCF) of Microsoft.

The data can be gathered the same way and column and index can be set similar.

import pandas as pd

url = "https://www.macrotrends.net/stocks/charts/MSFT/microsoft/free-cash-flow"

tables = pd.read_html(url)

fcf = tables[0]

fcf.columns = ['Year', 'FCF']

fcf = fcf.set_index('Year')

print(fcf)

The first few lines are.

FCF Year 2020 45234.0 2019 38260.0 2018 32252.0 2017 31378.0 2016 24982.0

All ready to be joined with the other data.

import pandas as pd

url = "https://www.macrotrends.net/stocks/charts/MSFT/microsoft/revenue"

tables = pd.read_html(url)

revenue = tables[0]

revenue.columns = ['Year', 'Revenue']

revenue = revenue.set_index('Year')

revenue['Revenue'] = pd.to_numeric(revenue['Revenue'].str[1:].str.replace(',',''), errors='coerce')

# print(revenue)

url = "https://www.macrotrends.net/stocks/charts/MSFT/microsoft/free-cash-flow"

tables = pd.read_html(url)

fcf = tables[0]

fcf.columns = ['Year', 'FCF']

fcf = fcf.set_index('Year')

data = revenue.join(fcf)

# Let's reorder it

data = data.iloc[::-1].copy()

Where we also reorder it, to have it from the early ears in the top. Notice the copy(), which is not strictly necessary, but makes a hard-copy of the data and not just a view.

Revenue FCF Year 2005 39788 15793.0 2006 44282 12826.0 2007 51122 15532.0 2008 60420 18430.0 2009 58437 15918.0

Wow. Ready to export.

Step 3: Exporting it to Excel

This is too easy to have an entire section for it.

data.to_excel('Output.xlsx')

Isn’t it beautiful. Of course you need to execute this after all the lines above.

In total.

import pandas as pd

url = "https://www.macrotrends.net/stocks/charts/MSFT/microsoft/revenue"

tables = pd.read_html(url)

revenue = tables[0]

revenue.columns = ['Year', 'Revenue']

revenue = revenue.set_index('Year')

revenue['Revenue'] = pd.to_numeric(revenue['Revenue'].str[1:].str.replace(',',''), errors='coerce')

# print(revenue)

url = "https://www.macrotrends.net/stocks/charts/MSFT/microsoft/free-cash-flow"

tables = pd.read_html(url)

fcf = tables[0]

fcf.columns = ['Year', 'FCF']

fcf = fcf.set_index('Year')

data = revenue.join(fcf)

# Let's reorder it

data = data.iloc[::-1].copy()

# Export to Excel

data.to_excel('Output.xlsx')

Which will result in an Excel spread sheet called Output.xlsx.

There are many things you might find easier in Excel, like playing around with different types of visualization. On the other hand, there might be many aspects you find easier in Python. I know, I do. Almost all of them. Not kidding. Still, Excel is a powerful tool which is utilized by many specialists. Still it seems like the skills of Python are in request in connection with Excel.

Python Circle

Do you know what the 5 key success factors every programmer must have?

How is it possible that some people become programmer so fast?

While others struggle for years and still fail.

Not only do they learn python 10 times faster they solve complex problems with ease.

What separates them from the rest?

I identified these 5 success factors that every programmer must have to succeed:

- Collaboration: sharing your work with others and receiving help with any questions or challenges you may have.

- Networking: the ability to connect with the right people and leverage their knowledge, experience, and resources.

- Support: receive feedback on your work and ask questions without feeling intimidated or judged.

- Accountability: stay motivated and accountable to your learning goals by surrounding yourself with others who are also committed to learning Python.

- Feedback from the instructor: receiving feedback and support from an instructor with years of experience in the field.

I know how important these success factors are for growth and progress in mastering Python.

That is why I want to make them available to anyone struggling to learn or who just wants to improve faster.

With the Python Circle community, you can take advantage of 5 key success factors every programmer must have.

Be part of something bigger and join the Python Circle community.

I’m trying to convert the table in the following site to an xls table:

http://www.dekel.co.il/madad-lazarchan

The following is the code I came up with from researching:

from bs4 import BeautifulSoup

import pandas as pd

from urllib2 import urlopen

import requests

import csv

url='http://www.dekel.co.il/madad-lazarchan'

table = pd.read_html(requests.get(url).text, attrs={"class" : "medadimborder"})

print table</code>

How can I get it to display the headers properly and output to a csv or xls file?

If I add the following:

table.to_csv('test.csv')

instead of the print row I get this error:

'list' object has no attribute 'to_csv'

Thanks in Advance!

Okay based on the comments maybe I shouldn’t use panda or read_html as I want a table and not a list. I wrote the following code but now the printout has delimiters and looks like I lost the header row. Also still not sure how to export it to csv file.

from bs4 import BeautifulSoup

import urllib2

import csv

soup = BeautifulSoup(urllib2.urlopen('http://www.dekel.co.il/madad-lazarchan').read(), 'html')

data = []

table = soup.find("table", attrs={"class" : "medadimborder"})

table_body = table.find('tbody')

rows = table_body.findAll('tr')

for row in rows:

cols = row.findAll('td')

cols = [ele.text.strip() for ele in cols]

print cols

[u’01/16′, u’130.7915′, u’122.4640′, u’117.9807′, u’112.2557′, u’105.8017′, u’100.5720′, u’98.6′]

[u’12/15′, u’131.4547′, u’123.0850′, u’118.5790′, u’112.8249′, u’106.3383′, u’101.0820′, u’99.1′]

[u’11/15′, u’131.5874′, u’123.2092′, u’118.6986′, u’112.9387′, u’106.4456′, u’101.1840′, u’99.2′]

Парсинг данных. Эта штука может быть настолько увлекательной, что порой затягивает очень сильно. Ведь всегда интересно найти способ, с помощью которого можно получить те или иные данные, да еще и структурировать их в нужном виде. В статье «Простой пример работы с Excel в Python» уже был рассмотрен один из способов получить данные из таблиц и сохранить их в формате Excel на разных листах. Для этого мы искали на странице все теги, которые так или иначе входят в содержимое таблицы и вытаскивали из них данные. Но, есть способ немного проще. И, давайте, о нем поговорим.

А состоит этот способ в использовании библиотеки pandas. Конечно же, ее простой не назовешь. Это очень мощный инструмент для аналитики самых разнообразных данных. И в рассмотренном ниже случае мы лишь коснемся небольшого фрагмента из того, что вообще умеет делать эта библиотека.

Что понадобиться?

Для того, чтобы написать данный скрипт нам понадобиться конечно же сам pandas. Библиотеки requests, BeautifulSoup и lxml. А также модуль для записи файлов в формате xlsx – xlsxwriter. Установить их все можно одной командой:

pip install requests bs4 lxml pandas xlsxwriter

А после установки импортировать в скрипт для дальнейшей работы с функциями, которые они предоставляют:

Python:

import requests

from bs4 import BeautifulSoup

import pandas as pdТак же с сайта, на котором расположены целевые таблицы нужно взять заголовки для запроса. Данные заголовки не нужны для pandas, но нужны для requests. Зачем вообще использовать в данном случае запросы? Тут все просто. Можно и не использовать вовсе. А полученные таблицы при сохранении называть какими-нибудь составными именами, вроде «Таблица 1» и так далее, но гораздо лучше и понятнее, все же собрать данные о том, как называется данная таблица в оригинале. Поэтому, с помощью запросов и библиотеки BeautifulSoup мы просто будем искать название таблицы.

Но, вернемся к заголовкам. Взял я их в инструментах разработчика на вкладке сеть у первого попавшегося запроса.

Python:

headers = {

'user-agent': 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/96.0.4664.174 '

'YaBrowser/22.1.3.942 Yowser/2.5 Safari/537.36',

'accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,'

'application/signed-exchange;v=b3;q=0.9 '

}Теперь нужен список, в котором будут перечисляться года, которые представлены в виде таблиц на сайте. Эти года получаются из псевдовыпадающего списка. Я не стал использовать selenium для того, чтобы получить их со страницы. Так как обычный запрос не может забрать эти данные. Они подгружаются с помощью JS скриптов. В данном случае не так уж много данных, которые надо обработать руками. Поэтому я создал список, в которые эти данные и внес вручную:

Python:

num_year_dict = ['443', '442', '441', '440', '439', '438', '437', '436', '435', '434', '433', '432', '431', '426',

'425', '1', '2', '165', '884', '1851', '3226', '4385', '4959', '5582', '6297', '6886', '7371',

'8071', '8671']Теперь нам нужно будет создать пустой словарь вне всяких циклов. Именно, чтобы он был глобальной переменной. Этот словарь мы и будем наполнять полученными данными, а также сохранять их него данные в таблицу Excel. Поэтому, я подумал, что проще сделать его глобальной переменной, чем тасовать из функции в функцию.

df = {}

Назвал я его df, потому как все так называют. И увидев данное название в нужном контексте становиться понятно, что используется pandas. df – это сокращение от DataFrame, то есть, определенный набор данных.

Ну вот, предварительная подготовка закончена. Самое время получать данные. Давайте для начала сходим на одну страницу с таблицей и попробуем получить оттуда данные с помощью pandas.

tables = pd.read_html('https://www.sports.ru/rfpl/table/?s=443&table=0&sub=table')

Здесь была использована функция read_html. Pandas использует библиотеку для парсинга lxml. То есть, примерно это все работает так. Получаются данные со страницы, а затем в коде выполняется поиск с целью найти все таблицы, у которых есть тэг <table>, а далее, внутри таблиц ищутся заголовки и данные под тэгами <tr> и <td>, которые и возвращаются в виде списка формата DataFrame.

Давайте выполним запрос. Но вот печатать данные пока не будем. Нужно для начала понять, сколько таблиц нашлось в запросе. Так как на странице их может быть несколько. Помимо той, что на виду, в виде таблиц может быть оформлен подзаголовок или еще какая информация. Поэтому, давайте узнаем, сколько элементов списка содержится в запросе, а соответственно, столько и таблиц. Выполняем:

print(len(tables))

И видим, что найденных таблиц две. Если вывести по очереди элементы списка, то мы увидим, что нужная нам таблица, в данном случае, находиться под индексом 1. Вот ее и распечатаем для просмотра:

print(tables[1])

И вот она полученная таблица:

Как видим, в данной таблице помимо нужных нам данных, содержится так же лишний столбец, от которого желательно избавиться. Это, скажем так, можно назвать сопутствующим мусором. Поэтому, полученные данные иногда надо «причесать». Давайте вызовем метод drop и удалим ненужный нам столбец.

tables[1].drop('Unnamed: 0', axis=1, inplace=True)

На то, что нужно удалить столбец указывает параметр axis, который равен 1. Если бы нужно было удалить строку, он был бы равен 0. Ну и указываем название столбца, который нужно удалить. Параметр inplace в значении True указывает на то, что удалить столбец нужно будет в исходных данных, а не возвращать нам их копию с удаленным столбцом.

А теперь нужно получить заголовок таблицы. Поэтому, делаем запрос к странице, получаем ее содержимое и отправляем для распарсивания в BeautifulSoup. После чего выполняем поиск названия и обрезаем из него все лишние данные.

Python:

url = f'https://www.sports.ru/rfpl/table/?s={num}&table=0&sub=table'

req = requests.get(url=url, headers=headers)

soup = BeautifulSoup(req.text, 'lxml')

title_table = soup.find('h2', class_='titleH3').text.split("-")[2].strip().replace("/", "_")Теперь, когда у нас есть таблица и ее название, отправим полученные значения в ранее созданный глобально словарь.

df[title_table] = tables[1]

Вот и все. Мы получили данные по одной таблице. Но, не будем забывать, что их больше тридцати. А потому, нужен цикл, чтобы формировать ссылки из созданного ранее списка и делать запросы уже к страницам по ссылке. Давайте полностью оформим код функции. Назовем мы ее, к примеру, get_pd_table(). Ее полный код состоит из всех тех элементов кода, которые мы рассмотрели выше, плюс они запущены в цикле.

Python:

def get_pd_table():

for num in num_year_dict:

url = f'https://www.sports.ru/rfpl/table/?s={num}&table=0&sub=table'

req = requests.get(url=url, headers=headers)

soup = BeautifulSoup(req.text, 'lxml')

title_table = soup.find('h2', class_='titleH3').text.split("-")[2].strip().replace("/", "_")

print(f'Получаю данные из таблицы: "{title_table}"...')

tables = pd.read_html(url)

tables[1].drop('Unnamed: 0', axis=1, inplace=True)

df[title_table] = tables[1]Итак, когда цикл пробежится по всем ссылкам у нас будет готовый словарь с данными турниров, которые желательно бы записать на отдельные листы. На каждом листе по таблице. Давайте сразу создадим для этого функцию pd_save().

writer = pd.ExcelWriter('./Турнирная таблица ПЛ РФ.xlsx', engine='xlsxwriter')

Создаем объект писателя, в котором указываем имя записываемой книги, и инструмент, с помощью которого будем производить запись в параметре engine=’xlsxwriter’.

После запускаем цикл, в котором создаем объекты, то есть листы для записи из ключей списка с таблицами df, указываем, с помощью какого инструмента будет производиться запись, на какой лист. Имя листа берется из ключа словаря. А также указывается параметр index=False, чтобы не сохранялись индексы автоматически присваиваемые pandas.

df[df_name].to_excel(writer, sheet_name=df_name, index=False)

Ну и после всего сохраняем книгу:

writer.save()

Полный код функции сохранения значений:

Python:

def pd_save():

writer = pd.ExcelWriter('./Турнирная таблица ПЛ РФ.xlsx', engine='xlsxwriter')

for df_name in df.keys():

print(f'Записываем данные в лист: {df_name}')

df[df_name].to_excel(writer, sheet_name=df_name, index=False)

writer.save()Вот и все. Для того, чтобы было не скучно ждать, пока будет произведен парсинг таблиц, добавим принты с информацией о получаемой таблице в первую функцию.

print(f'Получаю данные из таблицы: "{title_table}"...')

И во вторую функцию, с сообщением о том, данные на какой лист записываются в данный момент.

print(f'Записываем данные в лист: {df_name}')

Ну, а дальше идет функция main, в которой и вызываются вышеприведенные функции. Все остальное, в виде принтов, это просто декорации, для того чтобы пользователь видел, что происходят какие-то процессы.

Python:

import requests

from bs4 import BeautifulSoup

import pandas as pd

headers = {

'user-agent': 'Mozilla/5.0 (X11; Linux x86_64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/96.0.4664.174 '

'YaBrowser/22.1.3.942 Yowser/2.5 Safari/537.36',

'accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,image/avif,image/webp,image/apng,*/*;q=0.8,'

'application/signed-exchange;v=b3;q=0.9 '

}

num_year_dict = ['443', '442', '441', '440', '439', '438', '437', '436', '435', '434', '433', '432', '431', '426',

'425', '1', '2', '165', '884', '1851', '3226', '4385', '4959', '5582', '6297', '6886', '7371',

'8071', '8671']

df = {}

def get_pd_table():

for num in num_year_dict:

url = f'https://www.sports.ru/rfpl/table/?s={num}&table=0&sub=table'

req = requests.get(url=url, headers=headers)

soup = BeautifulSoup(req.text, 'lxml')

title_table = soup.find('h2', class_='titleH3').text.split("-")[2].strip().replace("/", "_")

print(f'Получаю данные из таблицы: "{title_table}"...')

tables = pd.read_html(url)

tables[1].drop('Unnamed: 0', axis=1, inplace=True)

df[title_table] = tables[1]

def pd_save():

writer = pd.ExcelWriter('./Турнирная таблица ПЛ РФ.xlsx', engine='xlsxwriter')

for df_name in df.keys():

print(f'Записываем данные в лист: {df_name}')

df[df_name].to_excel(writer, sheet_name=df_name, index=False)

writer.save()

def main():

get_pd_table()

print(' ')

pd_save()

print('n[+] Данные записаны!')

if __name__ == '__main__':

main()И ниже результат работы скрипта с уже полученными и записанными таблицами:

Как видите, использовать библиотеку pandas, по крайней мере в данном контексте, не очень сложно. Конечно же, это только самая малая часть того, что она умеет. А умеет она собирать и анализировать данные из самых разных форматов, включая такие распространенные, как: cvs, txt, HTML, XML, xlsx.

Ну и думаю, что не всегда данные будут прилетать «чистыми». Скорее всего, периодически будут попадаться мусорные столбцы или строки. Но их не особо то трудно удалить. Нужно только понимать, что и откуда.

В общем, для себя я сделал однозначный вывод – если мне понадобиться парсить табличные значения, то лучше, чем использование pandas, пожалуй и не придумаешь. Можно просто на лету формировать данные из одного формата и переводить тут же в другой без утомительного перебора. К примеру, из формата csv в json.

Спасибо за внимание. Надеюсь, что данная информация будет вам полезна

Python is a popular tool for all kind of automation needs and therefore a great candidate for your reporting tasks.

There is a wealth of techniques and libraries available and we’re going to introduce five popular options here. After reading this blog post, you should be able to pick the right library for your next reporting project according to your needs and skill set.

Table of Contents

- Overview

- Pandas

- xlwings

- Plotly Dash

- Datapane

- ReportLab

- Conclusion

Overview

Before we begin, here is a high level comparison of the libraries presented in this post:

| Library | Technology | Summary |

|---|---|---|

| Pandas + HTML | HTML | You can generate beautiful reports in the form of static web pages if you know your way around HTML + CSS. The HTML report can also be turned into a PDF for printing. |

| Pandas + Excel | Excel | This is a great option if the report has to be in Excel. It can be run on a server where Excel is not installed, i.e. it’s an ideal candidate for a “download to Excel” button in a web app. The Excel file can be exported to PDF. |

| xlwings | Excel | xlwings allows the use of an Excel template so the formatting can be done by users without coding skills. It requires, however, an installation of Excel so it’s a good option when the report can be generated on a desktop, e.g. for ad-hoc reports. The Excel file can be exported to PDF. |

| Plotly Dash | HTML | Dash allows you to easily spin up a great looking web dashboard that is interactive without having to write any JavaScript code. If formatted properly, it can be used as a source for PDFs, too. Like Pandas + HTML, it requires good HTML + CSS skills to make it look the way you want. |

| Datapane | HTML | Datapane allows you to create HTML reports with interactive elements. It also offers a hosted solution so end users can change the input parameters that are used to create these reports. |

| ReportLab | ReportLab creates direct PDF files without going through HTML or Excel first. It’s very fast and powerful but comes with a steep learning curve. Used by Wikipedia for their PDF export. |

Pandas

I am probably not exaggerating when I claim that almost all reporting in Python starts with Pandas. It’s incredibly easy to create Pandas DataFrames with data from databases, Excel and csv files or json responses from a web API. Once you have the raw data in a DataFrame, it only requires a few lines of code to clean the data and slice & dice it into a digestible form for reporting. Accordingly, Pandas will be used in all sections of this blog post, but we’ll start by leveraging the built-in capabilities that Pandas offers for reports in Excel and HTML format.

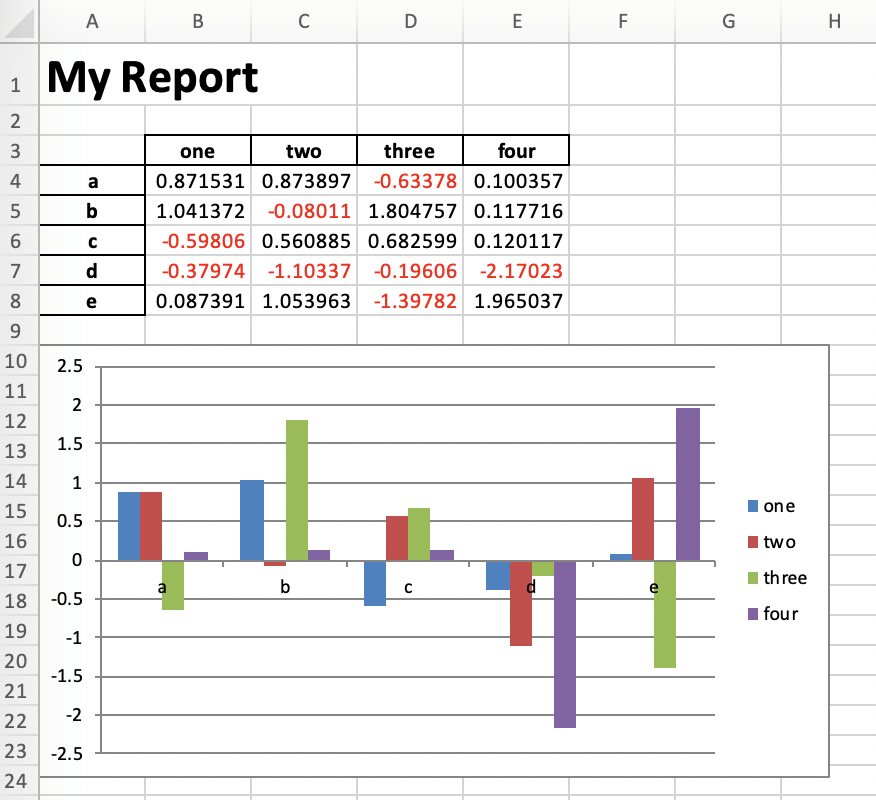

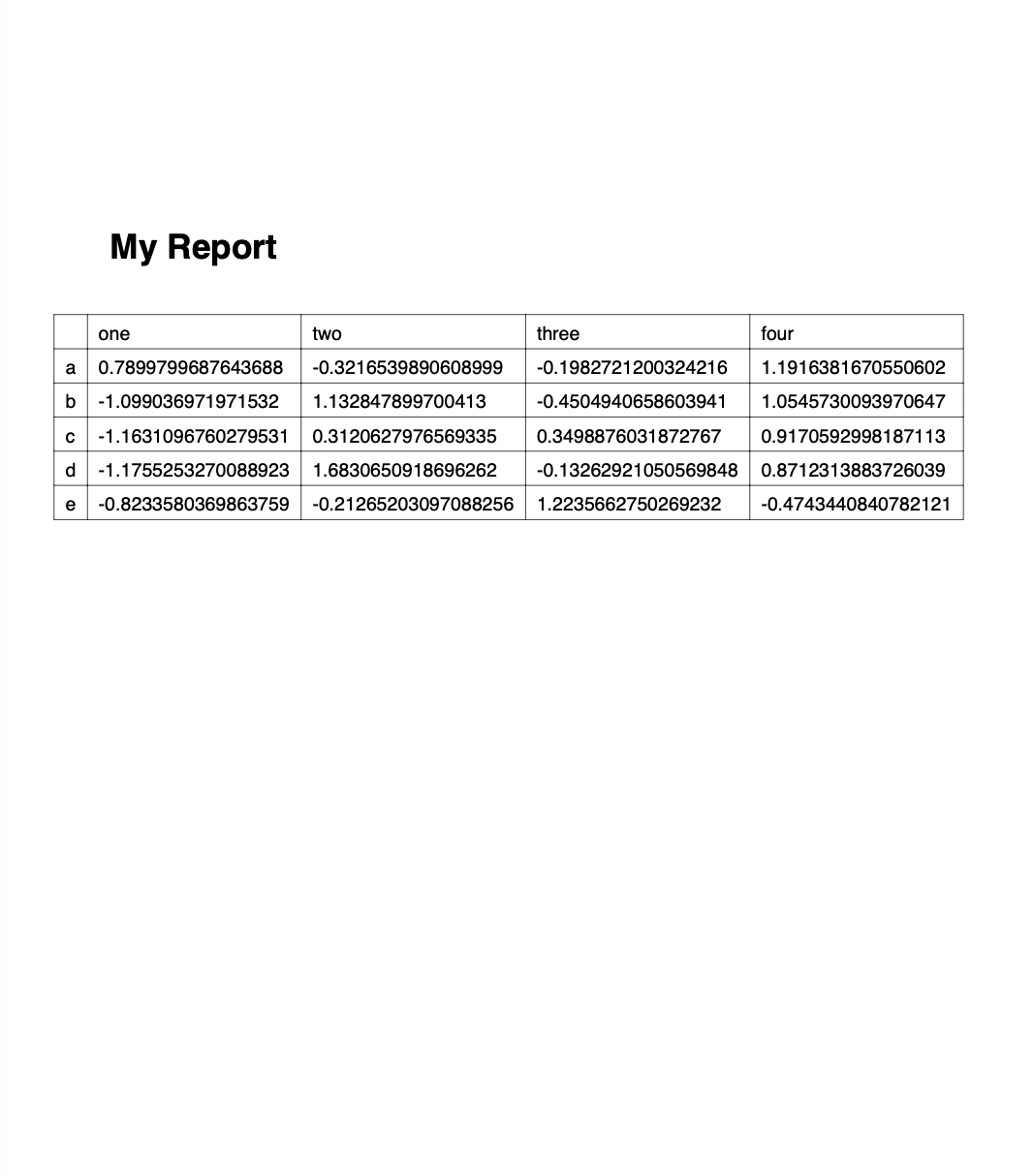

Pandas + Excel

Required libraries: pandas, xlsxwriter

If you want to do something slightly more sophisticated than just dumping a DataFrame into an Excel spreadsheet, I found that Pandas and XlsxWriter is the easiest combination, but others may prefer OpenPyXL. In that case you should be able to easily adopt this snippet by replacing engine='xlsxwriter' with engine='openpyxl' and changing the book/sheet syntax so it works with OpenPyXL:

import pandas as pd

import numpy as np

# Sample DataFrame

df = pd.DataFrame(np.random.randn(5, 4), columns=['one', 'two', 'three', 'four'],

index=['a', 'b', 'c', 'd', 'e'])

# Dump Pandas DataFrame to Excel sheet

writer = pd.ExcelWriter('myreport.xlsx', engine='xlsxwriter')

df.to_excel(writer, sheet_name='Sheet1', startrow=2)

# Get book and sheet objects for futher manipulation below

book = writer.book

sheet = writer.sheets['Sheet1']

# Title

bold = book.add_format({'bold': True, 'size': 24})

sheet.write('A1', 'My Report', bold)

# Color negative values in the DataFrame in red

format1 = book.add_format({'font_color': '#E93423'})

sheet.conditional_format('B4:E8', {'type': 'cell', 'criteria': '<=', 'value': 0, 'format': format1})

# Chart

chart = book.add_chart({'type': 'column'})

chart.add_series({'values': '=Sheet1!B4:B8', 'name': '=Sheet1!B3', 'categories': '=Sheet1!$A$4:$A$8'})

chart.add_series({'values': '=Sheet1!C4:C8', 'name': '=Sheet1!C3'})

chart.add_series({'values': '=Sheet1!D4:D8', 'name': '=Sheet1!D3'})

chart.add_series({'values': '=Sheet1!E4:E8', 'name': '=Sheet1!E3'})

sheet.insert_chart('A10', chart)

writer.save()

Running this will produce the following report:

Of course, we could now go back to the script and add more code to style it a bit nicer, but I leave this as an exercise to the reader…

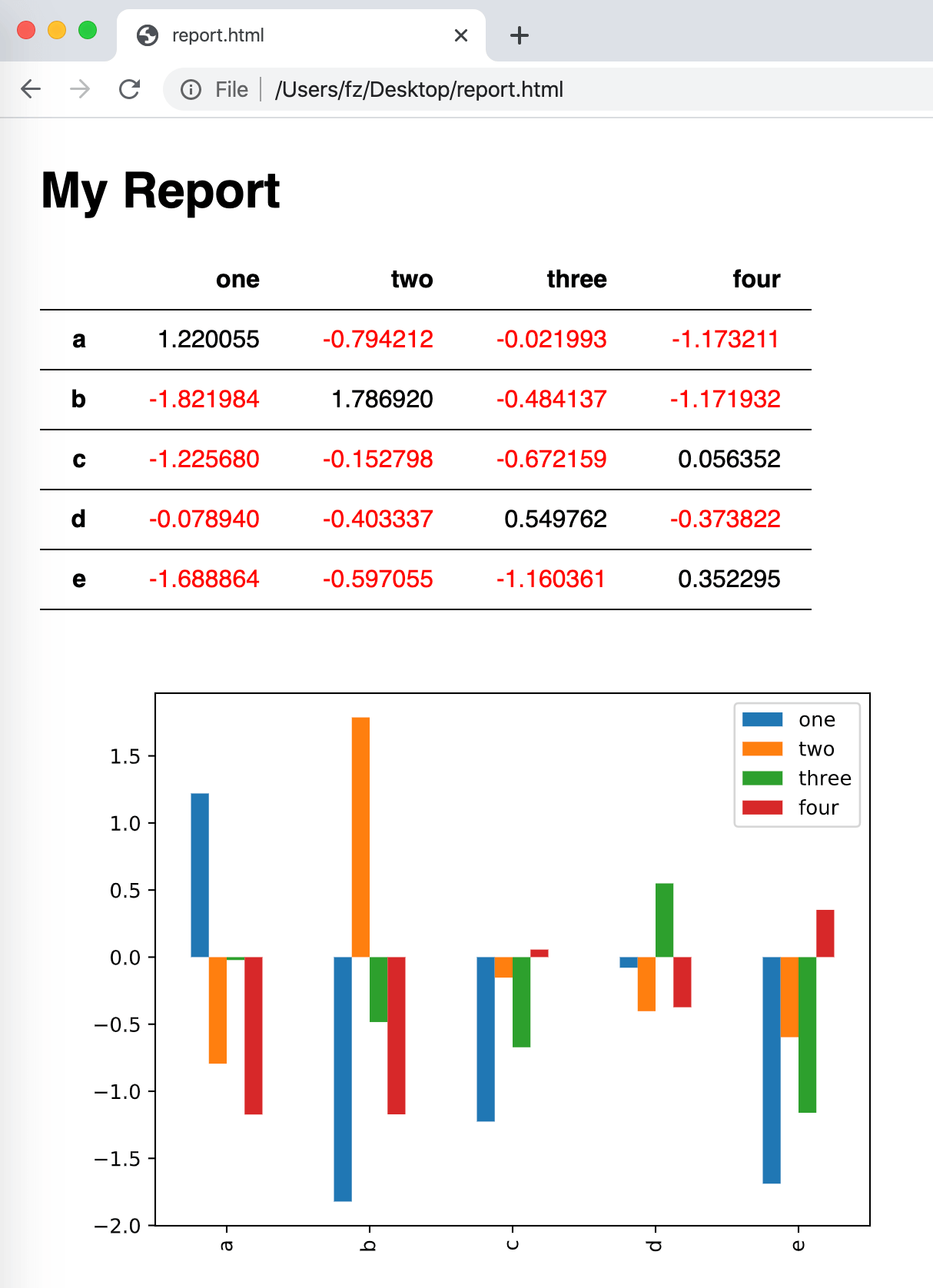

Pandas + HTML

Required libraries: pandas, jinja2

Creating an HTML report with pandas works similar to what’ve just done with Excel: If you want a tiny bit more than just dumping a DataFrame as a raw HTML table, then you’re best off by combining Pandas with a templating engine like Jinja:

First, let’s create a file called template.html:

<html>

<head>

<style>

* {

font-family: sans-serif;

}

body {

padding: 20px;

}

table {

border-collapse: collapse;

text-align: right;

}

table tr {

border-bottom: 1px solid

}

table th, table td {

padding: 10px 20px;

}

</style>

</head>

<body>

<h1>My Report</h1>

{{ my_table }}

<img src='plot.svg' width="600">

</body>

</html>

Then, in the same directory, let’s run the following Python script that will create our HTML report:

import pandas as pd

import numpy as np

import jinja2

# Sample DataFrame

df = pd.DataFrame(np.random.randn(5, 4), columns=['one', 'two', 'three', 'four'],

index=['a', 'b', 'c', 'd', 'e'])

# See: https://pandas.pydata.org/pandas-docs/stable/user_guide/style.html#Building-styles

def color_negative_red(val):

color = 'red' if val < 0 else 'black'

return f'color: {color}'

styler = df.style.applymap(color_negative_red)

# Template handling

env = jinja2.Environment(loader=jinja2.FileSystemLoader(searchpath=''))

template = env.get_template('template.html')

html = template.render(my_table=styler.render())

# Plot

ax = df.plot.bar()

fig = ax.get_figure()

fig.savefig('plot.svg')

# Write the HTML file

with open('report.html', 'w') as f:

f.write(html)

The result is a nice looking HTML report that could also be printed as a PDF by using something like WeasyPrint:

Note that for such an easy example, you wouldn’t necessarily need to use a Jinja template. But when things start to become more complex, it’ll definitely come in very handy.

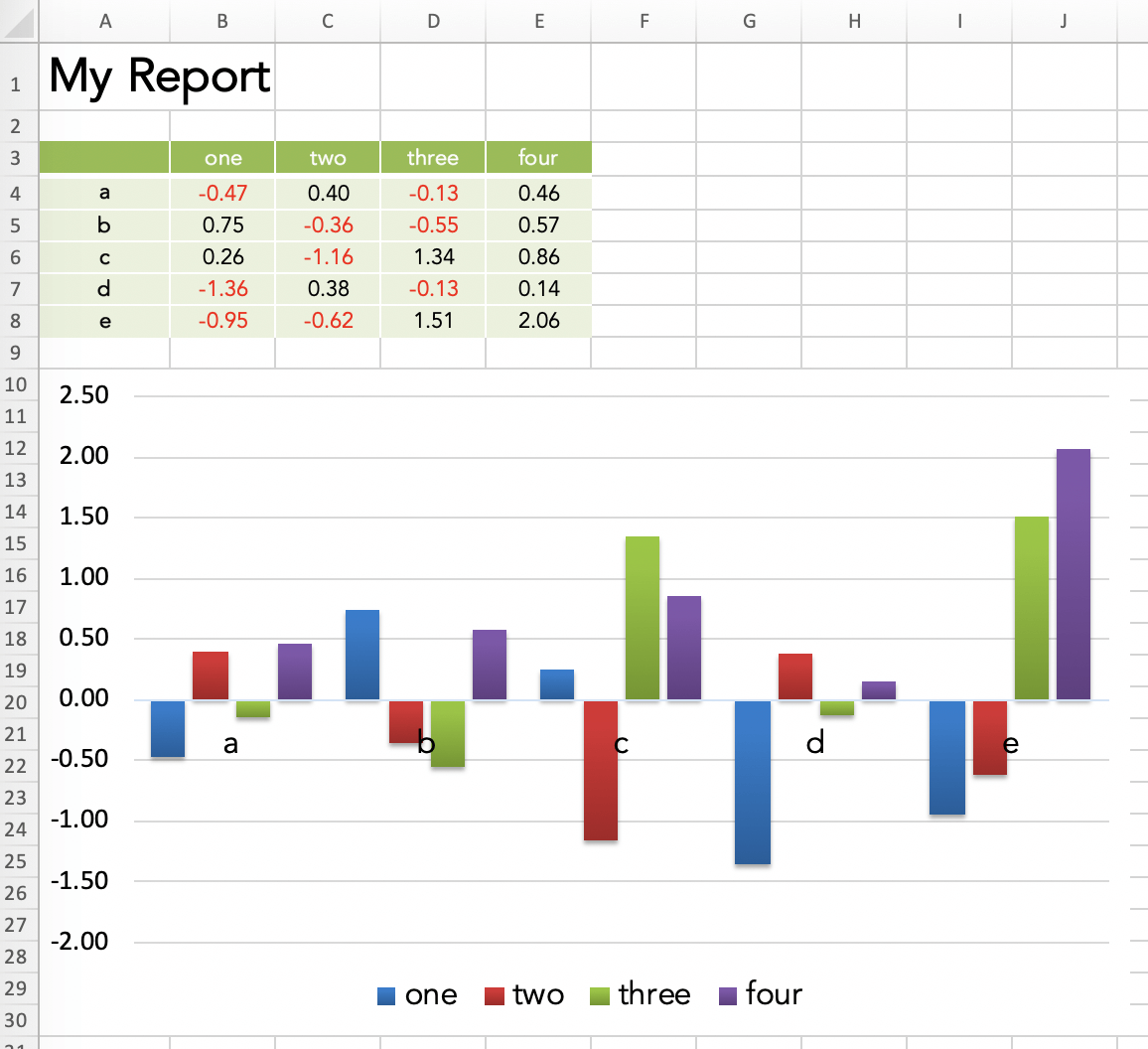

xlwings

xlwings allows you to program and automate Excel with Python instead of VBA. The difference to XlsxWriter or OpenPyXL (used above in the Pandas section) is the following: XlsxWriter and OpenPyXL write Excel files directly on disk. They work wherever Python works and don’t require an installation of Microsoft Excel.

xlwings, on the other hand, can write, read and edit Excel files via the Excel application, i.e. a local installation of Microsoft Excel is required. xlwings also allows you to create macros and user-defined functions in Python rather than in VBA, but for reporting purposes, we won’t really need that.

While XlsxWriter/OpenPyXL are the best choice if you need to produce reports in a scalable way on your Linux web server, xlwings does have the advantage that it can edit pre-formatted Excel files without losing or destroying anything. OpenPyXL on the other hand (the only writer library with xlsx editing capabilities) will drop some formatting and sometimes leads to Excel raising errors during further manual editing.

xlwings (Open Source)

Replicating the sample we had under Pandas is easy enough with the open-source version of xlwings:

import xlwings as xw

import pandas as pd

import numpy as np

# Open a template file

wb = xw.Book('mytemplate.xlsx')

# Sample DataFrame

df = pd.DataFrame(np.random.randn(5, 4), columns=['one', 'two', 'three', 'four'],

index=['a', 'b', 'c', 'd', 'e'])

# Assign data to cells

wb.sheets[0]['A1'].value = 'My Report'

wb.sheets[0]['A3'].value = df

# Save under a new file name

wb.save('myreport.xlsx')

Running this will produce the following report:

So where does all the formatting come from? The formatting is done directly in the Excel template before running the script. This means that instead of having to program tens of lines of code to format a single cell with the proper font, colors and borders, I can just make a few clicks in Excel. xlwings then merely opens the template file and inserts the values.

This allows us to create a good looking report in your corporate design very fast. The best part is that the Python developer doesn’t necessarily have to do the formatting but can leave it to the business user who owns the report.

Note that you could instruct xlwings to run the report in a separate and hidden instance of Excel so it doesn’t interfere with your other work.

xlwings PRO

The Pandas + Excel as well as the xlwings (open source) sample both have a few issues:

- If, for example, you insert a few rows below the title, you will have to adjust the cell references accordingly in the Python code. Using named ranges could help but they have other limitations (like the one mentioned at the end of this list).

- The number of rows in the table might be dynamic. This leads to two issues: (a) data rows might not be formatted consistently and (b) content below the table might get overwritten if the table is too long.

- Placing the same value in a lot of different cells (e.g. a date in the source note of every table or chart) will cause duplicated code or unnecessary loops.

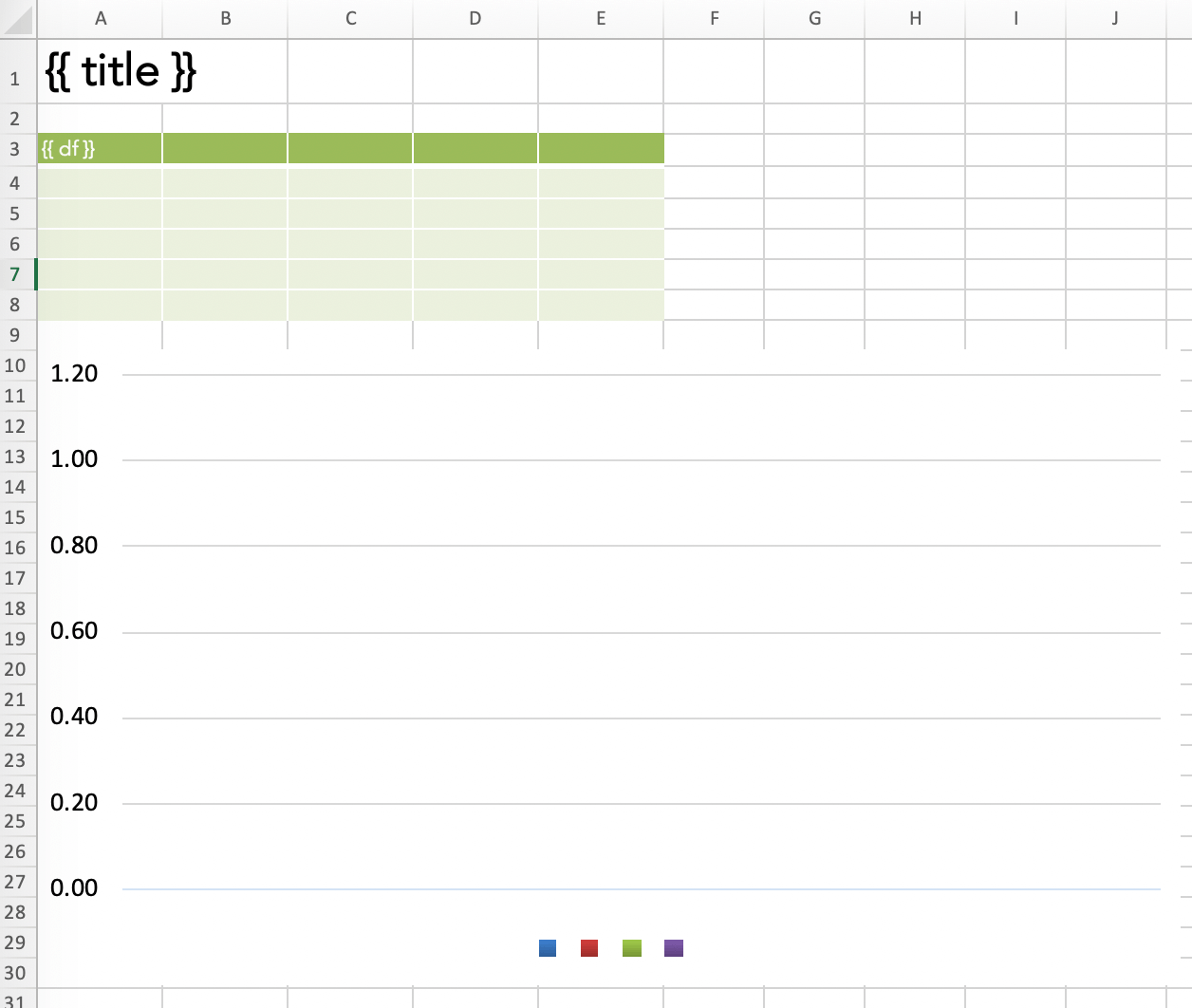

To fix these issues, xlwings PRO comes with a dedicated reports package:

- Separation of code and design: Users without coding skills can change the template on their own without having to touch the Python code.

- Template variables: Python variables (between double curly braces) can be directly used in cells , e.g.

{{ title }}. They act as placeholders that will be replaced by the values of the variables. - Frames for dynamic tables: Frames are vertical containers that dynamically align and style tables that have a variable number of rows. To see how Frames work, have a look at the documentation.

You can get a free trial for xlwings PRO here. When using the xlwings PRO reports package, your code simplifies to the following:

import pandas as pd

import numpy as np

from xlwings.pro.reports import create_report # part of xlwings PRO

# Sample DataFrame

df = pd.DataFrame(np.random.randn(5, 4), columns=['one', 'two', 'three', 'four'],

index=['a', 'b', 'c', 'd', 'e'])

# Create the report by passing in all variables as kwargs

wb = create_report('mytemplate.xlsx',

'myreport.xlsx',

title='My Report',

df=df)

All that’s left is to create a template with the placeholders for title and df:

Running the script will produce the same report that we generated with the open source version of xlwings above. The beauty of this approach is that there are no hard coded cell references anymore in your Python code. This means that the person who is responsible for the layout can move the placeholders around and change the fonts and colors without having to bug the Python developer anymore.

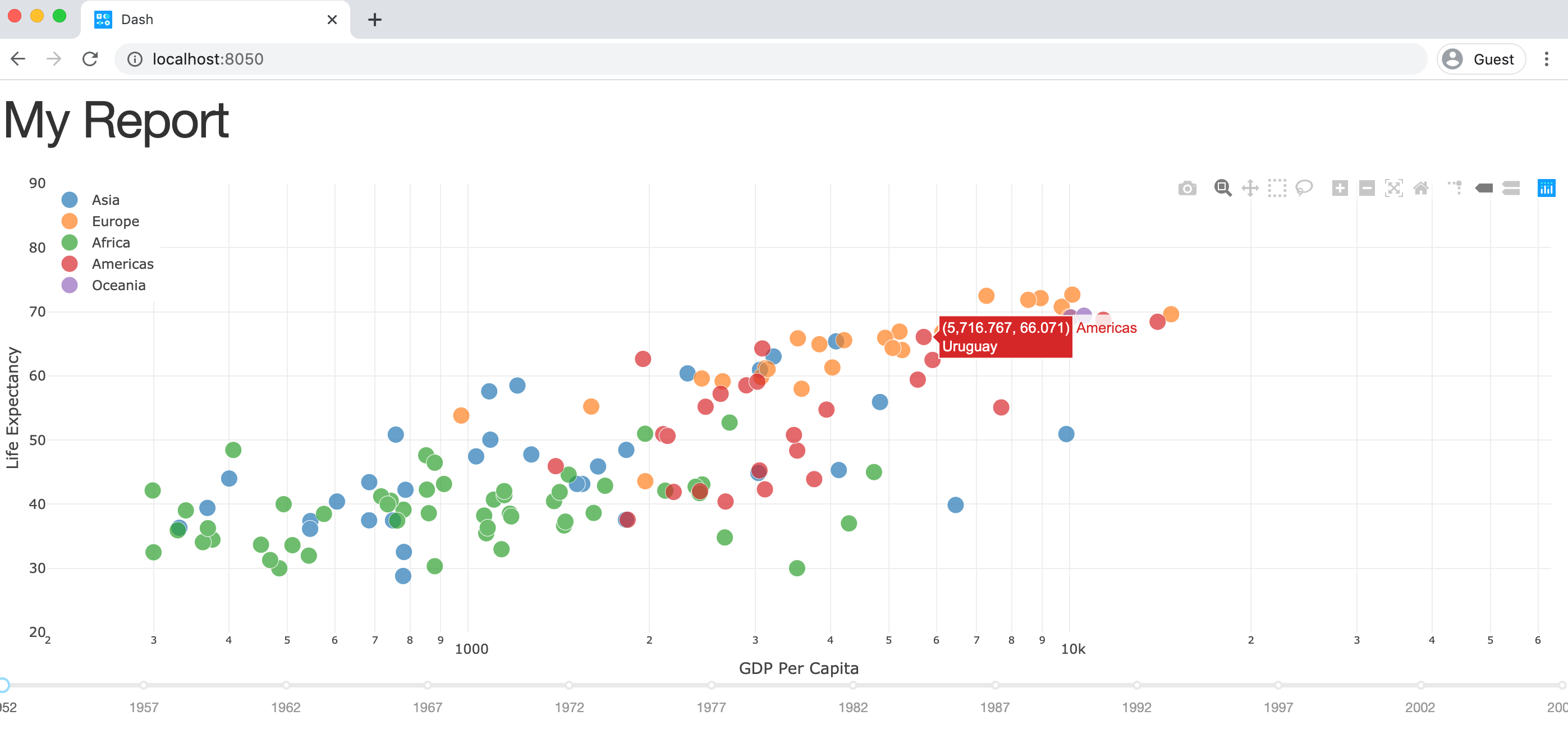

Plotly Dash

Required libraries: pandas, dash

Plotly is best known for their beautiful and open-source JavaScript charting library which builds the core of Chart Studio, a platform for collaboratively designing charts (no coding required).

To create a report though, we’re using their latest product Plotly Dash, an open-source framework that allows the creation of interactive web dashboards with Python only (no need to write JavaScript code). Plotly Dash is also available as Enterprise plan.

How it works is best explained by looking at some code, adopted with minimal changes from the official getting started guide:

import pandas as pd

import dash

import dash_core_components as dcc

import dash_html_components as html

from dash.dependencies import Input, Output

# Sample DataFrame

df = pd.read_csv('https://raw.githubusercontent.com/plotly/datasets/master/gapminderDataFiveYear.csv')

# Dash app - The CSS code is pulled in from an external file

app = dash.Dash(__name__, external_stylesheets=['https://codepen.io/chriddyp/pen/bWLwgP.css'])

# This defines the HTML layout

app.layout = html.Div([

html.H1('My Report'),

dcc.Graph(id='graph-with-slider'),

dcc.Slider(

id='year-slider',

min=df['year'].min(),

max=df['year'].max(),

value=df['year'].min(),

marks={str(year): str(year) for year in df['year'].unique()},

step=None

)

])

# This code runs every time the slider below the chart is changed

@app.callback(Output('graph-with-slider', 'figure'), [Input('year-slider', 'value')])

def update_figure(selected_year):

filtered_df = df[df.year == selected_year]

traces = []

for i in filtered_df.continent.unique():

df_by_continent = filtered_df[filtered_df['continent'] == i]

traces.append(dict(

x=df_by_continent['gdpPercap'],

y=df_by_continent['lifeExp'],

text=df_by_continent['country'],

mode='markers',

opacity=0.7,

marker={'size': 15, 'line': {'width': 0.5, 'color': 'white'}},

name=i

))

return {

'data': traces,

'layout': dict(

xaxis={'type': 'log', 'title': 'GDP Per Capita', 'range': [2.3, 4.8]},

yaxis={'title': 'Life Expectancy', 'range': [20, 90]},

margin={'l': 40, 'b': 40, 't': 10, 'r': 10},

legend={'x': 0, 'y': 1},

hovermode='closest',

transition={'duration': 500},

)

}

if __name__ == '__main__':

app.run_server(debug=True)

Running this script and navigating to http://localhost:8050 in your browser will give you this dashboard:

The charts look great by default and it’s very easy to make your dashboard interactive by writing simple callback functions in Python: You can choose the year by clicking on the slider below the chart. In the background, every change to our year-slider will trigger the update_figure callback function and hence update the chart.

By arranging your documents properly, you could create an interactive web dashboard that can also act as the source for your PDF factsheet, see for example their financial factsheet demo together with it’s source code.

Alternatives to Plotly Dash

If you are looking for an alternative to Plotly Dash, make sure to check out Panel. Panel was originally developed with the support of Anaconda Inc., and is now maintained by Anaconda developers and community contributors. Unlike Plotly Dash, Panel is very inclusive and supports a wide range of plotting libraries including: Bokeh, Altair, Matplotlib and others (including also Plotly).

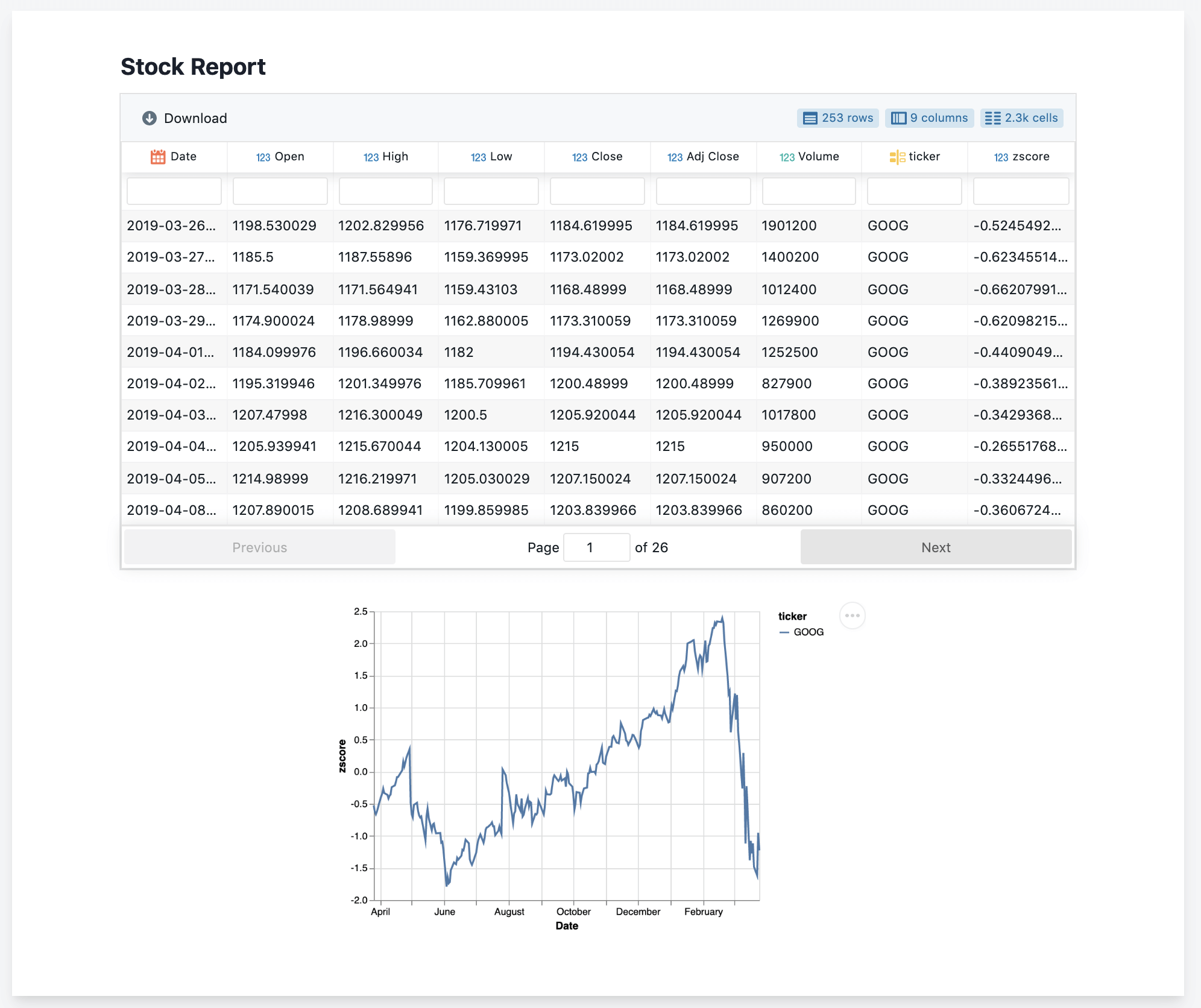

Datapane

Required libraries: datapane

Datapane is a framework for reporting which allows you to generate interactive reports from pandas DataFrames, Python visualisations (such as Bokeh and Altair), and Markdown. Unlike solutions such as Dash, Datapane allows you to generate standalone reports which don’t require a running Python server—but it doesn’t require any HTML coding either.

Using Datapane, you can either generate one-off reports, or deploy your Jupyter Notebook or Python script so others can generate reports dynamically by entering parameters through an automatically generated web app.

Datapane (open-source library)

Datapane’s open-source library allows you to create reports from components, such as a Table component, a Plot component, etc. These components are compatible with Python objects such as pandas DataFrames, and many visualisation libraries, such as Altair:

import datapane as dp

import pandas as pd

import altair as alt

df = pd.read_csv('https://query1.finance.yahoo.com/v7/finance/download/GOOG?period2=1585222905&interval=1mo&events=history')

chart = alt.Chart(df).encode(

x='Date:T',

y='Open'

).mark_line().interactive()

r = dp.Report(dp.Table(df), dp.Plot(chart))

r.save(path='report.html')

This code renders a standalone HTML document with an interactive, searchable table and plot component.

If you want to publish your report, you can login to Datapane (via $ datapane login) and use the publish method, which will give you a URL such as this which you can share or embed.

r.publish(name='my_report')

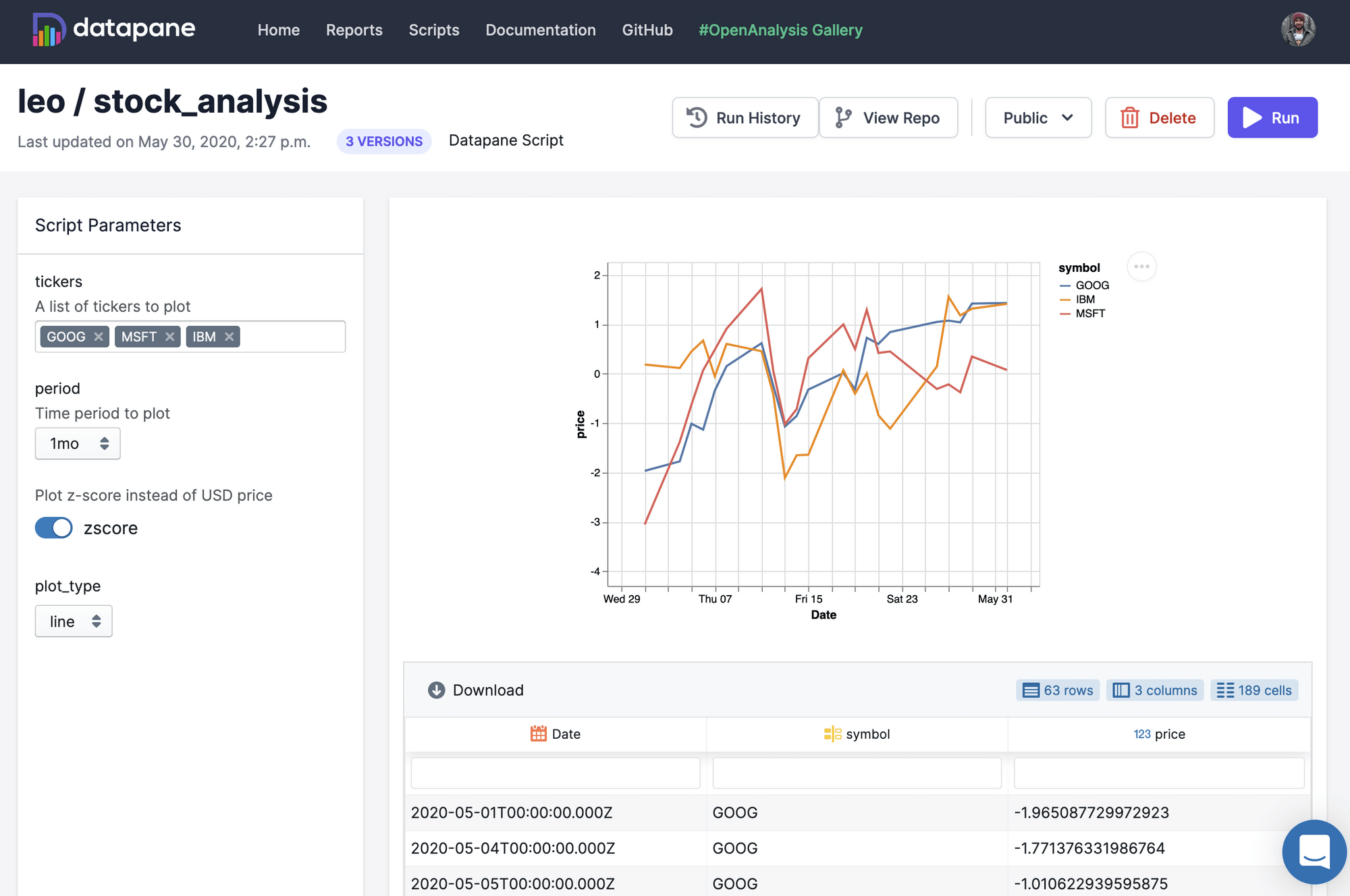

Hosted Reporting Apps

Datapane can also be used to deploy Jupyter Notebooks and Python scripts so that other people who are not familiar with Python can generate custom reports. By adding a YAML file to your folder, you can specify input parameters as well as dependencies (through pip, Docker, or local folders). Datapane also has support for managing secret variables, such as database passwords, and for storing and persisting files. Here is a sample script (stocks.py) and YAML file (stocks.yaml):

# stocks.py

import datapane as dp

import altair as alt

import yfinance as yf

dp.Params.load_defaults('./stocks.yaml')

tickers = dp.Params.get('tickers')

plot_type = dp.Params.get('plot_type')

period = dp.Params.get('period')

data = yf.download(tickers=' '.join(tickers), period=period, groupby='ticker').Close

df = data.reset_index().melt('Date', var_name='symbol', value_name='price')

base_chart = alt.Chart(df).encode(x='Date:T', y='price:Q', color='symbol').interactive()

chart = base_chart.mark_line() if plot_type == 'line' else base_chart.mark_bar()

dp.Report(dp.Plot(chart), dp.Table(df)).publish(name=f'stock_report', headline=f'Report on {" ".join(tickers)}')

# stocks.yaml

name: stock_analysis

script: stocks.py

# Script parameters

parameters:

- name: tickers

description: A list of tickers to plot

type: list

default: ['GOOG', 'MSFT', 'IBM']

- name: period

description: Time period to plot

type: enum

choices: ['1d','5d','1mo','3mo','6mo','1y','2y','5y','10y','ytd','max']

default: '1mo'

- name: plot_type

type: enum

default: line

choices: ['bar', 'line']

## Python packages required for the script

requirements:

- yfinance

Publishing this into a reporting app is easy as running $ datapane script deploy. For a full example see this example GitHub repository or read the docs.

ReportLab

Required libraries: pandas, reportlab

ReportLab writes PDF files directly. Most prominently, Wikipedia uses ReportLab to generate their PDF exports. One of the key strength of ReportLab is that it builds PDF reports “at incredible speeds”, to cite their homepage. Let’s have a look at some sample code for both the open-source and the commercial version!

ReportLab OpenSource

In its most basic functionality, ReportLab uses a canvas where you can place objects using a coordinate system:

from reportlab.pdfgen import canvas

c = canvas.Canvas("hello.pdf")

c.drawString(50, 800, "Hello World")

c.showPage()

c.save()

ReportLab also offers an advanced mode called PLATYPUS (Page Layout and Typography Using Scripts), which is able to define dynamic layouts based on templates at the document and page level. Within pages, Frames would then arrange Flowables (e.g. text and pictures) dynamically according to their height. Here is a very basic example of how you put PLATYPUS at work:

import pandas as pd

import numpy as np

from reportlab.pdfgen.canvas import Canvas

from reportlab.lib import colors

from reportlab.lib.styles import getSampleStyleSheet

from reportlab.lib.units import inch

from reportlab.platypus import Paragraph, Frame, Table, Spacer, TableStyle

# Sample DataFrame

df = pd.DataFrame(np.random.randn(5, 4), columns=['one', 'two', 'three', 'four'],

index=['a', 'b', 'c', 'd', 'e'])

# Style Table

df = df.reset_index()

df = df.rename(columns={"index": ""})

data = [df.columns.to_list()] + df.values.tolist()

table = Table(data)

table.setStyle(TableStyle([

('INNERGRID', (0, 0), (-1, -1), 0.25, colors.black),

('BOX', (0, 0), (-1, -1), 0.25, colors.black)

]))

# Components that will be passed into a Frame

story = [Paragraph("My Report", getSampleStyleSheet()['Heading1']),

Spacer(1, 20),

table]

# Use a Frame to dynamically align the compents and write the PDF file

c = Canvas('report.pdf')

f = Frame(inch, inch, 6 * inch, 9 * inch)

f.addFromList(story, c)

c.save()

Running this script will produce the following PDF:

ReportLab PLUS

In comparison to the open-source version of ReportLab, the most prominent features of Reportlab PLUS are

- a templating language

- the ability to include vector graphics

The templating language is called RML (Report Markup Language), an XML dialect. Here is a sample of how it looks like, taken directly from the official documentation:

<!DOCTYPE document SYSTEM "rml.dtd">

<document filename="example.pdf">

<template>

<pageTemplate id="main">

<frame id="first" x1="72" y1="72" width="451" height="698" />

</pageTemplate>

</template>

<stylesheet>

</stylesheet>

<story>

<para>

This is the "story". This is the part of the RML document where

your text is placed.

</para>

<para>

It should be enclosed in "para" and "/para" tags to turn it into

paragraphs.

</para>

</story>

</document>

The idea here is that you can have any program produce such an RML document, not just Python, which can then be transformed into a PDF document by ReportLab PLUS.

Conclusion

Python offers various libraries to create professional reports and factsheets. If you are a good at HTML + CSS have a look at Plotly Dash or Panel or write your HTML documents directly with the help of the to_html method in Pandas.

If you need your report as Excel file (or if you hate CSS), Pandas + XlsxWriter/OpenPyXL or xlwings might be the right choice — you can still export your Excel document as PDF file. xlwings is the better choice if you want to split the design and code work. XlsxWriter/OpenPyxl is the better choice if it needs to be scalable and run on a server.

If you need to generate PDF files at high speed, check out ReportLab. It has a steep learning curve and requires to write quite some code but once the code has been written, it works at high speed.

guys — this is life-changing. AUTOMATE ALL YOUR EXCEL REPORTS WITH PYTHON!!

1) Basic writing of dataframe from pandas into an excel sheet

see here: https://pandas.pydata.org/pandas-docs/stable/generated/pandas.DataFrame.to_excel.html AND http://xlsxwriter.readthedocs.io/example_pandas_multiple.html

# writing one dataframe to one excel file

df.to_excel()

# writing multiple dataframas into different sheets

writer = pd.ExcelWriter('pandas_multiple.xlsx', engine='xlsxwriter')

df1.to_excel(writer, sheet_name='Sheet1')

df2.to_excel(writer, sheet_name='Sheet2')

df3.to_excel(writer, sheet_name='Sheet3')

writer.save()

2) Write dataframe from pandas into excel sheet with number and cell color formatting:

# write to excel

writer = pd.ExcelWriter(destination_filepath,engine='xlsxwriter')

workbook=writer.book

worksheet=workbook.add_worksheet('sheetname')

writer.sheets['sheetname'] = worksheet

# define formats

format_num = workbook.add_format({'num_format': '_(* #,##0_);_(* (#,##0);_(* "-"??_);_(@_)'})

format_perc = workbook.add_format({'num_format': '0%;-0%;"-"'})

format_header = workbook.add_format({'bold': True, 'bg_color': '#C6EFCE'})

# write individual cells

worksheet.write(0, 2, "sheetname", format_header)

worksheet.write(1, 2, sum(df['abc']))

worksheet.write(1, 3, sum(df['def']))

worksheet.write(1, 4, sum(df['ghi']))

# write dataframe in

df.to_excel(writer,sheet_name='sheetname', startrow=2, startcol=0, index=False)

worksheet.set_column(1, 1, 45)

worksheet.set_column(2, 4, 15, format_num)

worksheet.set_column(5, 5, 15, format_perc)

writer.save

3) Write your dataframe into pre-formatted Excel sheets

see the docs for more info: https://docs.xlwings.org/en/stable/datastructures.html

import xlwings as xw

list_of_values = [1, 2, 3] # this pastes as a row. to paste as a column, use [[1], [2], [3]]

workbook_path = 'C:/abc.xlsx' # make sure it's the FULL path, otherwise you will hit a pop-up prompt (manual input required) while overwriting existing files when saving

wb = xw.Book(workbook_path)

ws = wb.sheets['sheet1']

ws.range('E35').value = list_of_values # this can be a list or dataframe - just pick the top left cell to paste

wb.save()

wb.close()

see here for an alternative method (which also throws an error for me — strange): https://stackoverflow.com/questions/9920935/easily-write-formatted-excel-from-python-start-with-excel-formatted-use-it-in

3) Creating a PivotTable in Excel

see here: https://stackoverflow.com/questions/22532019/creating-pivot-table-in-excel-using-python

import win32com.client

Excel = win32com.client.gencache.EnsureDispatch('Excel.Application') # Excel = win32com.client.Dispatch('Excel.Application')

win32c = win32com.client.constants

wb = Excel.Workbooks.Add()

Sheet1 = wb.Worksheets("Sheet1")

TestData = [['Country','Name','Gender','Sign','Amount'],

['CH','Max' ,'M','Plus',123.4567],

['CH','Max' ,'M','Minus',-23.4567],

['CH','Max' ,'M','Plus',12.2314],

['CH','Max' ,'M','Minus',-2.2314],

['CH','Sam' ,'M','Plus',453.7685],

['CH','Sam' ,'M','Minus',-53.7685],

['CH','Sara','F','Plus',777.666],

['CH','Sara','F','Minus',-77.666],

['DE','Hans','M','Plus',345.088],

['DE','Hans','M','Minus',-45.088],

['DE','Paul','M','Plus',222.455],

['DE','Paul','M','Minus',-22.455]]

for i, TestDataRow in enumerate(TestData):

for j, TestDataItem in enumerate(TestDataRow):

Sheet1.Cells(i+2,j+4).Value = TestDataItem

cl1 = Sheet1.Cells(2,4)

cl2 = Sheet1.Cells(2+len(TestData)-1,4+len(TestData[0])-1)

PivotSourceRange = Sheet1.Range(cl1,cl2)

PivotSourceRange.Select()

Sheet2 = wb.Worksheets(2)

cl3=Sheet2.Cells(4,1)

PivotTargetRange= Sheet2.Range(cl3,cl3)

PivotTableName = 'ReportPivotTable'

PivotCache = wb.PivotCaches().Create(SourceType=win32c.xlDatabase, SourceData=PivotSourceRange, Version=win32c.xlPivotTableVersion14)

PivotTable = PivotCache.CreatePivotTable(TableDestination=PivotTargetRange, TableName=PivotTableName, DefaultVersion=win32c.xlPivotTableVersion14)

PivotTable.PivotFields('Name').Orientation = win32c.xlRowField

PivotTable.PivotFields('Name').Position = 1

PivotTable.PivotFields('Gender').Orientation = win32c.xlPageField

PivotTable.PivotFields('Gender').Position = 1

PivotTable.PivotFields('Gender').CurrentPage = 'M'

PivotTable.PivotFields('Country').Orientation = win32c.xlColumnField

PivotTable.PivotFields('Country').Position = 1

PivotTable.PivotFields('Country').Subtotals = [False, False, False, False, False, False, False, False, False, False, False, False]

PivotTable.PivotFields('Sign').Orientation = win32c.xlColumnField

PivotTable.PivotFields('Sign').Position = 2

DataField = PivotTable.AddDataField(PivotTable.PivotFields('Amount'))

DataField.NumberFormat = '#'##0.00'

Excel.Visible = 1

wb.SaveAs('ranges_and_offsets.xlsx')

Excel.Application.Quit()

Special feature for xlwings

see here: http://docs.xlwings.org/en/stable/quickstart.html

Bonus: Datables

Not excel but get interactive tables on your webpage!! Simple, impressive and FREE lol.

https://datatables.net/

Update (2018-10-08):

So I’ve been approached to share this link here: https://www.pyxll.com/blog/tools-for-working-with-excel-and-python/

Mostly it introduces PyXLL, by comparing against various tools for working with python and excel.

It’s always helpful to know about alternative solutions out there and their pros and cons. PyXLL seems like there’s lots of features and seems fairly powerful — if you can convince your company to fork out $250 a year, per user.

For me… I think I will stick to the free and open source alternatives for now :p

Содержание

- Input/output#

- Pickling#

- Flat file#

- Clipboard#

- Excel#

- Latex#

- HDFStore: PyTables (HDF5)#

- Feather#

- Parquet#

- Google BigQuery#

- STATA#

- Input/output#

- Pickling#

- Flat file#

- Clipboard#

- Excel#

- Latex#

- HDFStore: PyTables (HDF5)#

- Feather#

- Parquet#

- Google BigQuery#

- STATA#

- Статья Парсим данные таблиц сайта в Excel с помощью Pandas

Input/output#

Pickling#

Load pickled pandas object (or any object) from file.

Pickle (serialize) object to file.

Flat file#

read_table (filepath_or_buffer,В *[,В sep,В . ])

Read general delimited file into DataFrame.

read_csv (filepath_or_buffer,В *[,В sep,В . ])

Read a comma-separated values (csv) file into DataFrame.

Write object to a comma-separated values (csv) file.

read_fwf (filepath_or_buffer,В *[,В colspecs,В . ])

Read a table of fixed-width formatted lines into DataFrame.

Clipboard#

Read text from clipboard and pass to read_csv.

Copy object to the system clipboard.

Excel#

read_excel (io[,В sheet_name,В header,В names,В . ])

Read an Excel file into a pandas DataFrame.

Write object to an Excel sheet.

Parse specified sheet(s) into a DataFrame.

Write Styler to an Excel sheet.

ExcelWriter (path[,В engine,В date_format,В . ])

Class for writing DataFrame objects into excel sheets.

read_json (path_or_buf,В *[,В orient,В typ,В . ])

Convert a JSON string to pandas object.

Normalize semi-structured JSON data into a flat table.

Convert the object to a JSON string.

Create a Table schema from data .

read_html (io,В *[,В match,В flavor,В header,В . ])

Read HTML tables into a list of DataFrame objects.

Render a DataFrame as an HTML table.

Write Styler to a file, buffer or string in HTML-CSS format.

read_xml (path_or_buffer,В *[,В xpath,В . ])

Read XML document into a DataFrame object.

Render a DataFrame to an XML document.

Latex#

Render object to a LaTeX tabular, longtable, or nested table.

Write Styler to a file, buffer or string in LaTeX format.

HDFStore: PyTables (HDF5)#

read_hdf (path_or_buf[,В key,В mode,В errors,В . ])

Read from the store, close it if we opened it.

HDFStore.put (key,В value[,В format,В index,В . ])

Store object in HDFStore.

Append to Table in file.

Retrieve pandas object stored in file.

Retrieve pandas object stored in file, optionally based on where criteria.

Print detailed information on the store.

Return a list of keys corresponding to objects stored in HDFStore.

Return a list of all the top-level nodes.

Walk the pytables group hierarchy for pandas objects.

One can store a subclass of DataFrame or Series to HDF5, but the type of the subclass is lost upon storing.

Feather#

read_feather (path[,В columns,В use_threads,В . ])

Load a feather-format object from the file path.

Write a DataFrame to the binary Feather format.

Parquet#

Load a parquet object from the file path, returning a DataFrame.

Write a DataFrame to the binary parquet format.

Load an ORC object from the file path, returning a DataFrame.

Write a DataFrame to the ORC format.

read_sas (filepath_or_buffer,В *[,В format,В . ])

Read SAS files stored as either XPORT or SAS7BDAT format files.

read_spss (path[,В usecols,В convert_categoricals])

Load an SPSS file from the file path, returning a DataFrame.

Read SQL database table into a DataFrame.

Read SQL query into a DataFrame.

Read SQL query or database table into a DataFrame.

Write records stored in a DataFrame to a SQL database.

Google BigQuery#

read_gbq (query[,В project_id,В index_col,В . ])

Load data from Google BigQuery.

STATA#

Read Stata file into DataFrame.

Export DataFrame object to Stata dta format.

Return data label of Stata file.

Return a nested dict associating each variable name to its value and label.

Return a dict associating each variable name with corresponding label.

Export DataFrame object to Stata dta format.

Источник

Input/output#

Pickling#

Load pickled pandas object (or any object) from file.

Pickle (serialize) object to file.

Flat file#

read_table (filepath_or_buffer,В *[,В sep,В . ])

Read general delimited file into DataFrame.

read_csv (filepath_or_buffer,В *[,В sep,В . ])

Read a comma-separated values (csv) file into DataFrame.

Write object to a comma-separated values (csv) file.

read_fwf (filepath_or_buffer,В *[,В colspecs,В . ])

Read a table of fixed-width formatted lines into DataFrame.

Clipboard#

Read text from clipboard and pass to read_csv.

Copy object to the system clipboard.

Excel#

read_excel (io[,В sheet_name,В header,В names,В . ])

Read an Excel file into a pandas DataFrame.

Write object to an Excel sheet.

Class for parsing tabular Excel sheets into DataFrame objects.

Parse specified sheet(s) into a DataFrame.

Write Styler to an Excel sheet.

ExcelWriter (path[,В engine,В date_format,В . ])

Class for writing DataFrame objects into excel sheets.

read_json (path_or_buf,В *[,В orient,В typ,В . ])

Convert a JSON string to pandas object.

Normalize semi-structured JSON data into a flat table.

Convert the object to a JSON string.

Create a Table schema from data .

read_html (io,В *[,В match,В flavor,В header,В . ])

Read HTML tables into a list of DataFrame objects.

Render a DataFrame as an HTML table.

Write Styler to a file, buffer or string in HTML-CSS format.

read_xml (path_or_buffer,В *[,В xpath,В . ])

Read XML document into a DataFrame object.

Render a DataFrame to an XML document.

Latex#

Render object to a LaTeX tabular, longtable, or nested table.

Write Styler to a file, buffer or string in LaTeX format.

HDFStore: PyTables (HDF5)#

read_hdf (path_or_buf[,В key,В mode,В errors,В . ])

Read from the store, close it if we opened it.

HDFStore.put (key,В value[,В format,В index,В . ])

Store object in HDFStore.

Append to Table in file.

Retrieve pandas object stored in file.

Retrieve pandas object stored in file, optionally based on where criteria.

Print detailed information on the store.

Return a list of keys corresponding to objects stored in HDFStore.

Return a list of all the top-level nodes.

Walk the pytables group hierarchy for pandas objects.

One can store a subclass of DataFrame or Series to HDF5, but the type of the subclass is lost upon storing.

Feather#

read_feather (path[,В columns,В use_threads,В . ])

Load a feather-format object from the file path.

Write a DataFrame to the binary Feather format.

Parquet#

Load a parquet object from the file path, returning a DataFrame.

Write a DataFrame to the binary parquet format.

read_orc (path[,В columns,В dtype_backend,В . ])

Load an ORC object from the file path, returning a DataFrame.

Write a DataFrame to the ORC format.

read_sas (filepath_or_buffer,В *[,В format,В . ])

Read SAS files stored as either XPORT or SAS7BDAT format files.

Load an SPSS file from the file path, returning a DataFrame.

Read SQL database table into a DataFrame.

Read SQL query into a DataFrame.

Read SQL query or database table into a DataFrame.

Write records stored in a DataFrame to a SQL database.

Google BigQuery#

read_gbq (query[,В project_id,В index_col,В . ])

Load data from Google BigQuery.

STATA#

Read Stata file into DataFrame.

Export DataFrame object to Stata dta format.

Return data label of Stata file.

Return a nested dict associating each variable name to its value and label.

Return a dict associating each variable name with corresponding label.

Export DataFrame object to Stata dta format.

Источник

Статья Парсим данные таблиц сайта в Excel с помощью Pandas

Парсинг данных. Эта штука может быть настолько увлекательной, что порой затягивает очень сильно. Ведь всегда интересно найти способ, с помощью которого можно получить те или иные данные, да еще и структурировать их в нужном виде. В статье «Простой пример работы с Excel в Python» уже был рассмотрен один из способов получить данные из таблиц и сохранить их в формате Excel на разных листах. Для этого мы искали на странице все теги, которые так или иначе входят в содержимое таблицы и вытаскивали из них данные. Но, есть способ немного проще. И, давайте, о нем поговорим.

А состоит этот способ в использовании библиотеки pandas. Конечно же, ее простой не назовешь. Это очень мощный инструмент для аналитики самых разнообразных данных. И в рассмотренном ниже случае мы лишь коснемся небольшого фрагмента из того, что вообще умеет делать эта библиотека.

Для того, чтобы написать данный скрипт нам понадобиться конечно же сам pandas. Библиотеки requests, BeautifulSoup и lxml. А также модуль для записи файлов в формате xlsx – xlsxwriter. Установить их все можно одной командой:

pip install requests bs4 lxml pandas xlsxwriter

А после установки импортировать в скрипт для дальнейшей работы с функциями, которые они предоставляют:

Так же с сайта, на котором расположены целевые таблицы нужно взять заголовки для запроса. Данные заголовки не нужны для pandas, но нужны для requests. Зачем вообще использовать в данном случае запросы? Тут все просто. Можно и не использовать вовсе. А полученные таблицы при сохранении называть какими-нибудь составными именами, вроде «Таблица 1» и так далее, но гораздо лучше и понятнее, все же собрать данные о том, как называется данная таблица в оригинале. Поэтому, с помощью запросов и библиотеки BeautifulSoup мы просто будем искать название таблицы.

Но, вернемся к заголовкам. Взял я их в инструментах разработчика на вкладке сеть у первого попавшегося запроса.

Теперь нужен список, в котором будут перечисляться года, которые представлены в виде таблиц на сайте. Эти года получаются из псевдовыпадающего списка. Я не стал использовать selenium для того, чтобы получить их со страницы. Так как обычный запрос не может забрать эти данные. Они подгружаются с помощью JS скриптов. В данном случае не так уж много данных, которые надо обработать руками. Поэтому я создал список, в которые эти данные и внес вручную:

Теперь нам нужно будет создать пустой словарь вне всяких циклов. Именно, чтобы он был глобальной переменной. Этот словарь мы и будем наполнять полученными данными, а также сохранять их него данные в таблицу Excel. Поэтому, я подумал, что проще сделать его глобальной переменной, чем тасовать из функции в функцию.

Назвал я его df, потому как все так называют. И увидев данное название в нужном контексте становиться понятно, что используется pandas. df – это сокращение от DataFrame, то есть, определенный набор данных.

Ну вот, предварительная подготовка закончена. Самое время получать данные. Давайте для начала сходим на одну страницу с таблицей и попробуем получить оттуда данные с помощью pandas.

Здесь была использована функция read_html. Pandas использует библиотеку для парсинга lxml. То есть, примерно это все работает так. Получаются данные со страницы, а затем в коде выполняется поиск с целью найти все таблицы, у которых есть тэг , а далее, внутри таблиц ищутся заголовки и данные под тэгами и , которые и возвращаются в виде списка формата DataFrame.

Давайте выполним запрос. Но вот печатать данные пока не будем. Нужно для начала понять, сколько таблиц нашлось в запросе. Так как на странице их может быть несколько. Помимо той, что на виду, в виде таблиц может быть оформлен подзаголовок или еще какая информация. Поэтому, давайте узнаем, сколько элементов списка содержится в запросе, а соответственно, столько и таблиц. Выполняем:

И видим, что найденных таблиц две. Если вывести по очереди элементы списка, то мы увидим, что нужная нам таблица, в данном случае, находиться под индексом 1. Вот ее и распечатаем для просмотра:

И вот она полученная таблица:

Как видим, в данной таблице помимо нужных нам данных, содержится так же лишний столбец, от которого желательно избавиться. Это, скажем так, можно назвать сопутствующим мусором. Поэтому, полученные данные иногда надо «причесать». Давайте вызовем метод drop и удалим ненужный нам столбец.

tables[1].drop(‘Unnamed: 0’, axis=1, inplace=True)

На то, что нужно удалить столбец указывает параметр axis, который равен 1. Если бы нужно было удалить строку, он был бы равен 0. Ну и указываем название столбца, который нужно удалить. Параметр inplace в значении True указывает на то, что удалить столбец нужно будет в исходных данных, а не возвращать нам их копию с удаленным столбцом.

А теперь нужно получить заголовок таблицы. Поэтому, делаем запрос к странице, получаем ее содержимое и отправляем для распарсивания в BeautifulSoup. После чего выполняем поиск названия и обрезаем из него все лишние данные.

Теперь, когда у нас есть таблица и ее название, отправим полученные значения в ранее созданный глобально словарь.

Вот и все. Мы получили данные по одной таблице. Но, не будем забывать, что их больше тридцати. А потому, нужен цикл, чтобы формировать ссылки из созданного ранее списка и делать запросы уже к страницам по ссылке. Давайте полностью оформим код функции. Назовем мы ее, к примеру, get_pd_table(). Ее полный код состоит из всех тех элементов кода, которые мы рассмотрели выше, плюс они запущены в цикле.

Итак, когда цикл пробежится по всем ссылкам у нас будет готовый словарь с данными турниров, которые желательно бы записать на отдельные листы. На каждом листе по таблице. Давайте сразу создадим для этого функцию pd_save().

writer = pd.ExcelWriter(‘./Турнирная таблица ПЛ РФ.xlsx’, engine=’xlsxwriter’)

Создаем объект писателя, в котором указываем имя записываемой книги, и инструмент, с помощью которого будем производить запись в параметре engine=’xlsxwriter’.

После запускаем цикл, в котором создаем объекты, то есть листы для записи из ключей списка с таблицами df, указываем, с помощью какого инструмента будет производиться запись, на какой лист. Имя листа берется из ключа словаря. А также указывается параметр index=False, чтобы не сохранялись индексы автоматически присваиваемые pandas.

df[df_name].to_excel(writer, sheet_name=df_name, index=False)

Ну и после всего сохраняем книгу:

Полный код функции сохранения значений:

Вот и все. Для того, чтобы было не скучно ждать, пока будет произведен парсинг таблиц, добавим принты с информацией о получаемой таблице в первую функцию.

print(f’Получаю данные из таблицы: ««. ‘)

И во вторую функцию, с сообщением о том, данные на какой лист записываются в данный момент.

print(f’Записываем данные в лист: ‘)

Ну, а дальше идет функция main, в которой и вызываются вышеприведенные функции. Все остальное, в виде принтов, это просто декорации, для того чтобы пользователь видел, что происходят какие-то процессы.

И ниже результат работы скрипта с уже полученными и записанными таблицами:

Как видите, использовать библиотеку pandas, по крайней мере в данном контексте, не очень сложно. Конечно же, это только самая малая часть того, что она умеет. А умеет она собирать и анализировать данные из самых разных форматов, включая такие распространенные, как: cvs, txt, HTML, XML, xlsx.

Ну и думаю, что не всегда данные будут прилетать «чистыми». Скорее всего, периодически будут попадаться мусорные столбцы или строки. Но их не особо то трудно удалить. Нужно только понимать, что и откуда.

В общем, для себя я сделал однозначный вывод – если мне понадобиться парсить табличные значения, то лучше, чем использование pandas, пожалуй и не придумаешь. Можно просто на лету формировать данные из одного формата и переводить тут же в другой без утомительного перебора. К примеру, из формата csv в json.

Спасибо за внимание. Надеюсь, что данная информация будет вам полезна

Источник

.. currentmodule:: pandas

IO tools (text, CSV, HDF5, …)

The pandas I/O API is a set of top level reader functions accessed like

:func:`pandas.read_csv` that generally return a pandas object. The corresponding

writer functions are object methods that are accessed like

:meth:`DataFrame.to_csv`. Below is a table containing available readers and

writers.

| Format Type | Data Description | Reader | Writer |

|---|---|---|---|

| text | CSV | :ref:`read_csv<io.read_csv_table>` | :ref:`to_csv<io.store_in_csv>` |

| text | Fixed-Width Text File | :ref:`read_fwf<io.fwf_reader>` | |

| text | JSON | :ref:`read_json<io.json_reader>` | :ref:`to_json<io.json_writer>` |

| text | HTML | :ref:`read_html<io.read_html>` | :ref:`to_html<io.html>` |

| text | LaTeX | :ref:`Styler.to_latex<io.latex>` | |

| text | XML | :ref:`read_xml<io.read_xml>` | :ref:`to_xml<io.xml>` |

| text | Local clipboard | :ref:`read_clipboard<io.clipboard>` | :ref:`to_clipboard<io.clipboard>` |

| binary | MS Excel | :ref:`read_excel<io.excel_reader>` | :ref:`to_excel<io.excel_writer>` |

| binary | OpenDocument | :ref:`read_excel<io.ods>` | |

| binary | HDF5 Format | :ref:`read_hdf<io.hdf5>` | :ref:`to_hdf<io.hdf5>` |

| binary | Feather Format | :ref:`read_feather<io.feather>` | :ref:`to_feather<io.feather>` |

| binary | Parquet Format | :ref:`read_parquet<io.parquet>` | :ref:`to_parquet<io.parquet>` |

| binary | ORC Format | :ref:`read_orc<io.orc>` | :ref:`to_orc<io.orc>` |

| binary | Stata | :ref:`read_stata<io.stata_reader>` | :ref:`to_stata<io.stata_writer>` |

| binary | SAS | :ref:`read_sas<io.sas_reader>` | |

| binary | SPSS | :ref:`read_spss<io.spss_reader>` | |

| binary | Python Pickle Format | :ref:`read_pickle<io.pickle>` | :ref:`to_pickle<io.pickle>` |

| SQL | SQL | :ref:`read_sql<io.sql>` | :ref:`to_sql<io.sql>` |

| SQL | Google BigQuery | :ref:`read_gbq<io.bigquery>` | :ref:`to_gbq<io.bigquery>` |

:ref:`Here <io.perf>` is an informal performance comparison for some of these IO methods.

Note

For examples that use the StringIO class, make sure you import it

with from io import StringIO for Python 3.

CSV & text files

The workhorse function for reading text files (a.k.a. flat files) is

:func:`read_csv`. See the :ref:`cookbook<cookbook.csv>` for some advanced strategies.

Parsing options

:func:`read_csv` accepts the following common arguments:

Basic

- filepath_or_buffer : various

- Either a path to a file (a :class:`python:str`, :class:`python:pathlib.Path`,

or :class:`py:py._path.local.LocalPath`), URL (including http, ftp, and S3

locations), or any object with aread()method (such as an open file or

:class:`~python:io.StringIO`). - sep : str, defaults to

','for :func:`read_csv`,tfor :func:`read_table` - Delimiter to use. If sep is

None, the C engine cannot automatically detect

the separator, but the Python parsing engine can, meaning the latter will be

used and automatically detect the separator by Python’s builtin sniffer tool,

:class:`python:csv.Sniffer`. In addition, separators longer than 1 character and

different from's+'will be interpreted as regular expressions and

will also force the use of the Python parsing engine. Note that regex

delimiters are prone to ignoring quoted data. Regex example:'\r\t'. - delimiter : str, default

None - Alternative argument name for sep.

- delim_whitespace : boolean, default False

- Specifies whether or not whitespace (e.g.

' 'or't')

will be used as the delimiter. Equivalent to settingsep='s+'.

If this option is set toTrue, nothing should be passed in for the

delimiterparameter.

Column and index locations and names

- header : int or list of ints, default

'infer' -

Row number(s) to use as the column names, and the start of the

data. Default behavior is to infer the column names: if no names are

passed the behavior is identical toheader=0and column names

are inferred from the first line of the file, if column names are

passed explicitly then the behavior is identical to

header=None. Explicitly passheader=0to be able to replace

existing names.The header can be a list of ints that specify row locations

for a MultiIndex on the columns e.g.[0,1,3]. Intervening rows

that are not specified will be skipped (e.g. 2 in this example is

skipped). Note that this parameter ignores commented lines and empty

lines ifskip_blank_lines=True, so header=0 denotes the first

line of data rather than the first line of the file. - names : array-like, default

None - List of column names to use. If file contains no header row, then you should

explicitly passheader=None. Duplicates in this list are not allowed. - index_col : int, str, sequence of int / str, or False, optional, default

None -

Column(s) to use as the row labels of the

DataFrame, either given as

string name or column index. If a sequence of int / str is given, a

MultiIndex is used.Note

index_col=Falsecan be used to force pandas to not use the first

column as the index, e.g. when you have a malformed file with delimiters at

the end of each line.The default value of

Noneinstructs pandas to guess. If the number of

fields in the column header row is equal to the number of fields in the body

of the data file, then a default index is used. If it is larger, then

the first columns are used as index so that the remaining number of fields in

the body are equal to the number of fields in the header.The first row after the header is used to determine the number of columns,

which will go into the index. If the subsequent rows contain less columns

than the first row, they are filled withNaN.This can be avoided through

usecols. This ensures that the columns are

taken as is and the trailing data are ignored. - usecols : list-like or callable, default

None -

Return a subset of the columns. If list-like, all elements must either

be positional (i.e. integer indices into the document columns) or strings

that correspond to column names provided either by the user innamesor

inferred from the document header row(s). Ifnamesare given, the document

header row(s) are not taken into account. For example, a valid list-like

usecolsparameter would be[0, 1, 2]or['foo', 'bar', 'baz'].Element order is ignored, so

usecols=[0, 1]is the same as[1, 0]. To

instantiate a DataFrame fromdatawith element order preserved use

pd.read_csv(data, usecols=['foo', 'bar'])[['foo', 'bar']]for columns

in['foo', 'bar']order or

pd.read_csv(data, usecols=['foo', 'bar'])[['bar', 'foo']]for

['bar', 'foo']order.If callable, the callable function will be evaluated against the column names,

returning names where the callable function evaluates to True:.. ipython:: python import pandas as pd from io import StringIO data = "col1,col2,col3na,b,1na,b,2nc,d,3" pd.read_csv(StringIO(data)) pd.read_csv(StringIO(data), usecols=lambda x: x.upper() in ["COL1", "COL3"])

Using this parameter results in much faster parsing time and lower memory usage

when using the c engine. The Python engine loads the data first before deciding

which columns to drop.

General parsing configuration

- dtype : Type name or dict of column -> type, default

None -

Data type for data or columns. E.g.

{'a': np.float64, 'b': np.int32, 'c': 'Int64'}

Usestrorobjecttogether with suitablena_valuessettings to preserve

and not interpret dtype. If converters are specified, they will be applied INSTEAD

of dtype conversion... versionadded:: 1.5.0 Support for defaultdict was added. Specify a defaultdict as input where the default determines the dtype of the columns which are not explicitly listed.

- dtype_backend : {«numpy_nullable», «pyarrow»}, defaults to NumPy backed DataFrames

-

Which dtype_backend to use, e.g. whether a DataFrame should have NumPy

arrays, nullable dtypes are used for all dtypes that have a nullable

implementation when «numpy_nullable» is set, pyarrow is used for all

dtypes if «pyarrow» is set.The dtype_backends are still experimential.

.. versionadded:: 2.0

- engine : {

'c','python','pyarrow'} -

Parser engine to use. The C and pyarrow engines are faster, while the python engine

is currently more feature-complete. Multithreading is currently only supported by

the pyarrow engine... versionadded:: 1.4.0 The "pyarrow" engine was added as an *experimental* engine, and some features are unsupported, or may not work correctly, with this engine.

- converters : dict, default

None - Dict of functions for converting values in certain columns. Keys can either be

integers or column labels. - true_values : list, default

None - Values to consider as

True. - false_values : list, default

None - Values to consider as

False. - skipinitialspace : boolean, default

False - Skip spaces after delimiter.

- skiprows : list-like or integer, default

None -

Line numbers to skip (0-indexed) or number of lines to skip (int) at the start

of the file.If callable, the callable function will be evaluated against the row

indices, returning True if the row should be skipped and False otherwise:.. ipython:: python data = "col1,col2,col3na,b,1na,b,2nc,d,3" pd.read_csv(StringIO(data)) pd.read_csv(StringIO(data), skiprows=lambda x: x % 2 != 0)

- skipfooter : int, default

0 - Number of lines at bottom of file to skip (unsupported with engine=’c’).

- nrows : int, default

None - Number of rows of file to read. Useful for reading pieces of large files.

- low_memory : boolean, default

True - Internally process the file in chunks, resulting in lower memory use

while parsing, but possibly mixed type inference. To ensure no mixed

types either setFalse, or specify the type with thedtypeparameter.

Note that the entire file is read into a singleDataFrameregardless,

use thechunksizeoriteratorparameter to return the data in chunks.

(Only valid with C parser) - memory_map : boolean, default False

- If a filepath is provided for

filepath_or_buffer, map the file object

directly onto memory and access the data directly from there. Using this

option can improve performance because there is no longer any I/O overhead.

NA and missing data handling

- na_values : scalar, str, list-like, or dict, default

None - Additional strings to recognize as NA/NaN. If dict passed, specific per-column

NA values. See :ref:`na values const <io.navaluesconst>` below

for a list of the values interpreted as NaN by default. - keep_default_na : boolean, default

True -

Whether or not to include the default NaN values when parsing the data.

Depending on whetherna_valuesis passed in, the behavior is as follows:- If

keep_default_naisTrue, andna_valuesare specified,na_values

is appended to the default NaN values used for parsing. - If

keep_default_naisTrue, andna_valuesare not specified, only

the default NaN values are used for parsing. - If

keep_default_naisFalse, andna_valuesare specified, only

the NaN values specifiedna_valuesare used for parsing. - If

keep_default_naisFalse, andna_valuesare not specified, no

strings will be parsed as NaN.

Note that if

na_filteris passed in asFalse, thekeep_default_naand

na_valuesparameters will be ignored. - If

- na_filter : boolean, default

True - Detect missing value markers (empty strings and the value of na_values). In

data without any NAs, passingna_filter=Falsecan improve the performance

of reading a large file. - verbose : boolean, default

False - Indicate number of NA values placed in non-numeric columns.

- skip_blank_lines : boolean, default

True - If

True, skip over blank lines rather than interpreting as NaN values.