No matter what aspect of language

a scholar is looking into, he or she will adhere to the principles of

scientific method: observation, classification, generalization,

predictions, and verification. “The procedures by which researchers

go about their work of describing, explaining and predicting

phenomena are called research methodology. It is also defined as the

study of methods by which knowledge is gained” (Rajasekar,

Philominathan, & Chinnathambi, 2006, p.2). Observations do not

always give a clear sense of what data may be relevant and what data

are not. Accurate observation is especially critical in lexicology

when the phenomena under investigation appear to be anomalous.

Classification includes an orderly arrangement of data obtained

through observation. It also includes arrangement of some anomalous

data.

The next principle includes

generalization. The collected data are analyzed, and on this basis,

certain hypotheses are offered to explain the phenomena. Theory and

hypothesis are two concepts that are associated with explanations in

lexicology. A theory may be a well-developed and well-confirmed body

of explanatory material. Some

scholars believe that predictions are a part of the hypothesis, and

once predictions are made, the hypothesis can be tested (Perry, p.

17). Verification involves

confirmation or rejection of the hypothesis.

R. S. Ginzburg , S. S. Khidekel,

G. Y. Knyazeva, and A. A. Sankin (1979)

propose the following methods and procedures of linguistic

analyses:

contrastive analysis; statistical methods of analysis; immediate

constituents analysis; distributional analysis and co-occurrence;

transformational analysis; componential analysis; and method of

semantic differential.

Comparative and contrastive

analysis involves the

systematic comparison of two or more philogenically-related and

non-related languages with the aim of finding the similarities and

differences between or among them. The term ‘comparative’ was

first used by Sir William Jones in 1786, in a speech at the Royal

Asiatic Society in Calcutta, India. In his speech, he compared

Sanskrit, Greek, Latin, and Gothic and pointed out that some words

shared similarities. “The comparative method is a technique of

linguistic analysis that compares lists of related words in a

selection of languages” (Denham & Lobeck, 2011, p.361). This

method also helps individuals study the structure of a target

language by comparing it to the structure of their native language.

Specialists

in the field of applied linguistics believe that the most effective

teaching materials are those that are based upon a scientific

description of the target language, carefully compared with a

parallel description of the native language of the learner (Fries,

1957). The

contrastive analysis

is the prediction that a contrastive analysis of structural

differences between two or more languages will allow individuals to

identify areas of contrast and predict where there will be some

difficulty and errors on the part of a second-language learner. The

method helps to predict and explain difficulties individuals may

experience while learning a second language.

The second approach is

statistical,

or quantitative.

It has originated mainly from “the field of psychology where there

has been heavy emphasis on the use of statistics to make

generalizations from samples to populations” (Perry, 2011, p. 79).

A quantitative method is used to represent data in numbers; it is a

study that uses numerical data with emphasis on statistics to answer

the research questions. A quantitative

method differs from a

qualitative method.

Qualitative research does not highlight statistical data. It is a

research done in “a natural setting, involving intensive holistic

data collection through observation at a very close personal level

without the influence of prior theory and contains mostly verbal

analysis” (Perry, 2011, p. 257). A qualitative method may be used

in case studies and discourse analysis.

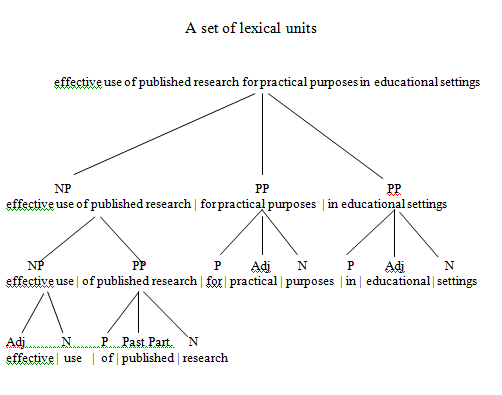

The term immediate

constituents (IC) was

first used by Leonard Bloomfield in his book, Language.

“The principle of immediate constituents leads us to observe the

structural order, which may differ from their actual sequence”

(Bloomfield, 1935, p. 210). Ginzburg et

al. (1979)

further develop the theory of immediate constituents, and they try to

determine

the ways in which lexical units are relevantly related to one

another. For example, the phrase effective

use of published research for practical purposes in educational

settings

may be divided into

the following successive layers – immediate constituents, which in

turn are subdivided into further immediate constituents:

The

purpose of IC analysis is to segment a set of lexical units into

independent sequences, or ICs, thus revealing the hierarchical

structure of this set. “Successive segmentation results in Ultimate

Constituents (UC), i.e. two-facet units that cannot be segmented into

smaller units having both sound-form and meaning” (Ginzburg,

Khidekel,

Knyazeva,

& Sankin,

1979, p. 246).

In the distributional

analysis and

co-occurrence, the term ‘distribution’ means “the occurrence of

a lexical unit relative to other lexical units of the same level

(words relative to words / morphemes relative to morphemes, etc.)

(1979,

p. 246). Lexemes occupy certain positions in a sentence, and if words

are polysemous, they realize their meanings in the context, in

different distributional patterns; for example, compare the verb

chase

in

the phrases chase

around after someone

(to seek someone or something in different places), chase

after someone or something

(to pursue or hunt for someone), chase

someone or something away from some place

(to drive someone or something out of some place), chase

someone or something down

(to track down and seize someone or something), chase

someone in(to) some place

(to drive someone or some creature into a place), and chase

someone and something up

(to seek someone or something out; to look high and low for someone

or something. So, the term ‘distribution’ is “the aptness of a

word in one of its meanings to collocate or to co-occur with a

certain group, or certain groups of words having some common semantic

component” (1979, p. 249). V.

Fromkin, R. Rodman, N. Hyams, and K. M. Hummel (2010)

term this analysis as collocation

analysis when the

presence of one word in the text affects the occurrence of other

words, so a collocation is “the occurrence of two or more words

within a short space of each other in a corpus” (p.432).

Transformational

analysis

in lexicological investigations is the “re-patterning of various

distributional structures in order to discover difference or

similarity of meaning of practically identical distributional

patterns” (Ginzburg,

Khidekel,

Knyazeva,

& Sankin,

1979, p.251). In a number of cases, distributional patterns are

polysemous; therefore, transformational procedures will help to

analyze the semantic similarity

or difference

of the lexemes under examination and the factors that account for

their polysemy. If we compare the compound words highball,

highland, highlight,

and high-rise,

we see that the distributional pattern of stems is identical, and it

may be represented as adj+n

pattern. The first part of the stems modifies or describes the second

part, and these compounds may be assumed as ‘a kind of ball,’ ‘a

kind of land,’ ‘a kind of light,’ and ‘a kind of rise.’

However, this assumption may be wrong because the semantic

relationship between the stems is different; therefore, the lexical

meanings of the words are also different. A transformational

procedure shows that highland

is semantically equivalent to ‘an area of high ground’; highball

is

not a kind of ball but corresponds to “whiskey

and water or soda with ice, served in a tall glass’ or ‘a

railroad signal for a train to proceed at full speed’;

highlight semantically

equals to ‘the part of the surface that catches more light’; and

high-rise

is semantically the same as ‘a building with many stories (Br.

storeys).’ Although distributional structure of these compound

words is similar, the transformational analysis of these words shows

that the semantic relationship between the stems and the lexical

meanings of the words is different. This same approach may be applied

to the analysis of the word-groups, and the result will be the same:

“word-groups of identical distributional structure when

re-patterned also show that the semantic relationship between words

and consequently the meaning of word-groups may be different”

(Ibid,

p.252).

Structural

linguists take a seemingly different approach to the analysis of

lexemes, known as componential

analysis—that

is an analysis in terms of components. This view to the depiction of

meaning of words and phrases is based upon “the thesis that the

sense of every lexeme can be analyzed in terms of a set of more

general sense components (or semantic features)” (Lyons, 1987,

p.317). This term was first used by N.Trubetzkoi (1939) who

introduced componential analysis to phonology. Later, Hjelmslev and

Jakobson applied it to grammar and semantics. In America,

componential analysis was proposed by “anthropologists as a

technique for describing and comparing the vocabulary of kinship in

various languages (as cited in Lyons, 1978, p. 318), and only later

American linguists adopted the method and integrated it into

semantics and syntax. Componential analysis is useful when

identifying similarities and differences among words with related

meanings. F.R. Palmer suggests that sex provides a set of components

for kinship terms. We can add here other components as well:

|

Human |

Male |

Female |

Adult |

Child |

Lineality |

Generation |

|

|

grandmother |

+ |

— |

+ |

+ |

— |

direct |

G1 |

|

grandfather |

+ |

+ |

— |

+ |

— |

direct |

G1 |

|

mother |

+ |

— |

+ |

+ |

— |

direct |

G2 |

|

father |

+ |

+ |

— |

+ |

— |

direct |

G2 |

|

aunt |

+ |

— |

+ |

+ |

— |

collineal |

G2 |

|

uncle |

+ |

+ |

— |

+ |

— |

collineal |

G2 |

|

daughter |

+ |

— |

+ |

+ |

+ |

direct |

G4 |

|

son |

+ |

+ |

— |

+ |

+ |

direct |

G4 |

|

granddaughter |

+ |

— |

+ |

+ |

+ |

direct |

G5 |

|

grandson |

+ |

+ |

— |

+ |

+ |

direct |

G5 |

|

sister |

+ |

— |

+ |

+ |

+ |

collineal |

G3 |

|

brother |

+ |

+ |

— |

+ |

+ |

collineal |

G3 |

|

niece |

+ |

— |

+ |

+ |

+ |

collineal |

G4 |

|

nephew |

+ |

+ |

— |

+ |

+ |

collineal |

G4 |

|

cousin |

+ |

+ |

+ |

+ |

+ |

ablineal |

G3 |

If

we apply componential analysis to living creatures that represent

some kind of ‘proportional’ relationship, the most characteristic

is a three-fold division with many words that refer to living

creatures (Palmer, 1990, p. 109):

|

Man |

Woman |

Child |

Man |

Woman |

Child |

|

cock |

hen |

chick |

gander |

goose |

gosling |

|

bull |

cow |

calf |

lion |

lioness |

cub |

|

boar |

sow |

piglet |

peacock |

peahen |

chick, |

|

buck |

doe |

fawn |

ram |

ewe |

lamb |

The

characteristic of componential analysis is that it attempts “as far

as possible to treat components in terms of binary oppositions”

(Palmer, 1990, p.111), e.g., between an adult and a child, a male and

female, parent and child, animate and inanimate, and other

oppositions.

Words are

related to semantic meaning not only in denotation, as the above

analyses show, but also in connotation. The analysis of the

connotational meanings of words is difficult to do because their

nuances are slight and difficult to grasp, and they do not yield

themselves easily to objective investigation and verification.

Semantic differential, the technique introduced by psycholinguists

Charles Egerton

Osgood, George J. Suci, and Percy H. Tannenbaum,

has been proven to be very effective in establishing and displaying

these differences. “The semantic differential is a combination of

controlled association and scaling procedures” (1957, p. 20). The

participants are provided “a concept to be differentiated and a set

of bipolar adjectival scales against which to do it” (1957, p.20),

and they are tasked to rate a concept, or a term, on a 7-scale

semantic differential for the concept, with the poles described by

two antonymic adjectives (e.g. ‘beautiful’ and ‘ugly’). The

following example illustrates this technique:

Woman

Although

the participants will apply their subjective evaluation, this method

proves to be objective because the procedures are explicit and can be

replicated (1957, p.125).

Соседние файлы в предмете [НЕСОРТИРОВАННОЕ]

- #

- #

- #

- #

- #

- #

- #

- #

- #

- #

- #

Linguistic analysis refers to the scientific analysis of a language sample. It involves at least one of the five main branches of linguistics, which are phonology, morphology, syntax, semantics, and pragmatics. Linguistic analysis can be used to describe the unconscious rules and processes that speakers of a language use to create spoken or written language, and this can be useful to those who want to learn a language or translate from one language to another. Some argue that it can also provide insight into the minds of the speakers of a given language, although this idea is controversial.

The discipline of linguistics is defined as the scientific study of language. People who have an education in linguistics and practice linguistic analysis are called linguists. The drive behind linguistic analysis is to understand and describe the knowledge that underlies the ability to speak a given language, and to understand how the human mind processes and creates language.

The five main branches of linguistics are phonology, morphology, syntax, semantics, and pragmatics. An extended language analysis may cover all five of the branches, or it may focus on only one aspect of the language being analyzed. Each of the five branches focuses on a single area of language.

Phonology refers to the study of the sounds of a language. Every language has its own inventory of sounds and logical rules for combining those sounds to create words. The phonology of a language essentially refers to its sound system and the processes used to combine sounds in spoken language.

Morphology refers to the study of the internal structure of the words of a language. In any given language, there are many words to which a speaker can add a suffix, prefix, or infix to create a new word. In some languages, these processes are more productive than others. The morphology of a language refers to the word-building rules speakers use to create new words or alter the meaning of existing words in their language.

Syntax is the study of sentence structure. Every language has its own rules for combining words to create sentences. Syntactic analysis attempts to define and describe the rules that speakers use to put words together to create meaningful phrases and sentences.

Semantics is the study of meaning in language. Linguists attempt to identify not only how speakers of a language discern the meanings of words in their language, but also how the logical rules speakers apply to determine the meaning of phrases, sentences, and entire paragraphs. The meaning of a given word can depend on the context in which it is used, and the definition of a word may vary slightly from speaker to speaker.

Pragmatics is the study of the social use of language. All speakers of a language use different registers, or different conversational styles, depending on the company in which they find themselves. A linguistic analysis that focuses on pragmatics may describe the social aspects of the language sample being analyzed, such as how the status of the individuals involved in the speech act could affect the meaning of a given utterance.

Linguistic analysis has been used to determine historical relationships between languages and people from different regions of the world. Some governmental agencies have used linguistic analysis to confirm or deny individuals’ claims of citizenship. This use of linguistic analysis remains controversial, because language use can vary greatly across geographical regions and social class, which makes it difficult to accurately define and describe the language spoken by the citizens of a particular country.

What Is Linguistic Analysis?

Linguistic analysis refers back to the scientific analysis of a language pattern. It includes not less than one of the 5 most important branches of linguistics, that are phonology, morphology, syntax, semantics, and pragmatics. Linguistic analysis can be utilized to explain the unconscious guidelines and processes that audio system of a language use to create spoken or written language, and this may be helpful to those that need to be taught a language or translate from one language to a different. Some argue that it may additionally present perception into the minds of the audio system of a given language, though this concept is controversial. Levels of linguistic analysisThe self-discipline of linguistics is outlined because the scientific research of language. Individuals who have an schooling in linguistics and follow linguistic analysis are known as linguists. The drive behind linguistic analysis is to know and describe the data that underlies the flexibility to talk a given language, and to know how the human thoughts processes and creates languageThe 5 most important branches of linguistics are phonology, morphology, syntax, semantics, and pragmatics. An prolonged language analysis might cowl all 5 of the branches, or it could deal with just one side of the language being analyzed. Every of the 5 branches focuses on a single space of language. Levels of linguistic analysis

Phonology refers back to the research of the sounds of a language. Each language has its personal stock of sounds and logical guidelines for combining these sounds to create phrases. The phonology of a language basically refers to its sound system and the processes used to mix sounds in spoken language.

Morphology refers back to the research of the interior construction of the phrases of a language. In any given language, there are lots of phrases to which a speaker can add a suffix, prefix, or infix to create a brand new phrase. In some languages, these processes are extra productive than others. The morphology of a language refers back to the word-building guidelines audio system use to create new phrases or alter the which means of present phrases of their language.

Syntax is the research of sentence construction. Each language has its personal guidelines for combining phrases to create sentences. Syntactic analysis makes an attempt to outline and describe the foundations that audio system use to place phrases collectively to create significant phrases and sentences.

Semantics is the research of which means in language. Linguists try to establish not solely how audio system of a language discern the meanings of phrases of their language, but additionally how the logical guidelines audio system apply to find out the which means of phrases, sentences, and whole paragraphs. The which means of a given phrase can rely upon the context during which it’s used, and the definition of a phrase might fluctuate barely from speaker to speaker. Levels of linguistic analysis

Pragmatics is the research of the social use of language. All audio system of a language use completely different registers, or completely different conversational types, relying on the corporate during which they discover themselves. A linguistic analysis that focuses on pragmatics might describe the social elements of the language pattern being analyzed, comparable to how the standing of the people concerned within the speech act may have an effect on the which means of a given utterance.

Linguistic analysis has been used to find out historic relationships between languages and folks from completely different areas of the world. Some governmental companies have used linguistic analysis to verify or deny people’ claims of citizenship. This use of linguistic analysis stays controversial, as a result of language use can fluctuate significantly throughout geographical areas and social class, which makes it troublesome to precisely outline and describe the language spoken by the residents of a selected nation.

Text or speech in natural language can be analyzed at different levels, language levels. Each language level is determined by the main language element or the class of elements that are typical for a particular level. Each plane has an input and output view.

1-phonetic Level

Phonetics is a science on the border of linguistics, anatomy, physiology and physics. This level is concerned with signal processing, ie their sorting and classification. The basic unit is the so-called “ telephone ”.

Phones can be further divided into:

- articulatory ie according to the place where they are formed (position of the tongue, teeth, opening of the oral cavity, etc.),

- acoustic i.e. transmission of sounds by frequency,

- perceptual ie the way the listener receives sounds.

Phonetics determine the formation of vowels and consonants (long / short, tone high / low / descending, voiced / voiceless, nasal / non-nasal). The output of the phonetic level is the processing of the array of phones in the phonetic alphabet. Levels of linguistic analysis

2-Phonological level

Phonology deals with the function of sounds. Like phonetics, this level deals with the study of the sound side of natural language, specifically the sound differences that have the ability to discern meaning in a particular language. Phonology is concerned with the function of sounds. The basic unit is the so-called “ phoneme ”, that is, a sound instrument used to distinguish morphemes, words and word forms of the same language, with different meanings (lexical, grammatical). The phoneme itself can only be recognized by the realization of a “voice“.

The method of articulating a particular phoneme is called “ allophone ” and denotes one of the possible sounds, both in phonetics and phonology. An example of sounds that are given a phonological function (eg “j”) in Czech, namely – chin – gin. The content of the phonological level also includes distinguishing features. This means that there are differences between individual phonemes and higher-level sound phenomena, which have the ability to discern the meaning of words. For example, in the Czech language, this characteristic is solidity (three – three, fifth – fifth) and the differentiation of several sounds (t / d). Another important and indivisible unit in linguistics is the so-called ” grapheme “† The chart shows the letter, characters, icons, numbers and punctuation marks. Usually one phoneme corresponds to one phoneme. [Where? ] It is the recording of a sound with a graphic symbol. The output from the phonological level is a series of symbols of the abstract alphabet, usable at the phonological level. Levels of linguistic analysis

3-Morphology

Morphology is a linguistics that studies inflection, that is, inflection and timing. It also examines the regular derivation of words using prefixes, suffixes, and suffixes. Morphology studies the relationships between different parts of words. The basic unit is the so-called “morpheme“. It is the smallest unit that carries meaning, it is the unit of the language system. A morph is a superficial realization of a morpheme, for example it is a unit of speech – there are specific morphs “ber-” and “br-“, which are the realization of one morpheme. Different morphs that are realizations of the same morpheme are called allomorphs .

There are two types of morphemes:

- lexical morpheme – is a stem of a word that has meaning

- grammatical morpheme – determines the grammatical role of a word form

From a morphological point of view, words are divided into flexible (inflection and timing) and inflexible.

- Morphological Level – Entering this plane is a sequence of phonemes written in the abstract alphabet. The basic element is morphonemes, the composition of the elements are the so-called Morphs. The output is a series of morphones divided into morphs.

- morphematic Level – The input is a series of morphs. The basic element is the so-called “seed” and the compound elements are “morphemes” and “Form”. The output is a series of word forms, including semantic (lexical) and grammatical information. The form corresponds to the word form. Morphemes are lexical (for example, the stem “healthy”) and grammatical (for example, the ending “more”). The topics are lexical, such as part of speech and grammar. The output of morphology is the processing of sentence structure. [source? †

4-syntactic Level

Syntax is a linguistic discipline that deals with the relationships between words in a sentence, as well as the correct formation of sentence structures and word order. The syntax does not describe the meaning of individual words and phrases. The basic unit is a sentence. Natural language syntax then describes the language that arose from natural evolution. Natural language is typically (syntactically) ambiguous. Levels of linguistic analysis

The input to the syntactic plane is a series of morphemes. The basic element is the so-called “Day”, that is, a member of the sentence. It can be not just a word, but for example more words such as “in the house”, “I did”, etc. The compound element is the so-called “syntagmém”, or a sentence. Syntactic categories are then understood, for example subject, predicate, subject, proverbial clause, complement. The output of the syntactic level is a sentence structure (a tree denoting sentence relationships).

5-Semantic level

Semantics is part of semiotics. It deals with the meaning of expressions from different structural levels of language, morphemes, words, idioms and sentences, or even higher units of text. The relationships of these expressions with reality then give meaning. The access to the semantic plane is a sentence tree denoting sentence relations. The basic element is the so-called “Semantic”, which corresponds to the tagmen.

The semantic level is discussed further :

-

- coordination – ie. merging (a, i, ani, nebo), where the sentences are equivalent in content, – resistance (but, but, but), where the second sentence expresses a fact contrary to the fact of the first sentence, separation (or -or), when when the two sentences are combined, their contents are mutually exclusive.

- coreference – it is a coincidence of a subject with a predicate at so-called long distances, – it is a relation of two or more expressions in the text to one object, even if this object is replaced by a pronoun in the previous sentence,

- deep x surface features

Sentence division: The sentence is divided into a theme, which is the basis and premise (what we already know) and a rhyme, which has the function of a core and a focus (what we say new about what we already know). Within the starting point or focus, the members of the sentence are included in the system word order. It’s an in-depth word order. Levels of linguistic analysis

The output of the semantic level is a sentence structure with determining sentence relationships.

6-pragmatic Level

Pragmatics as a scientific discipline, it falls into the field of linguistics and philosophy, which deals with oral expression, that is, speeches and utterances. At this level, the assignment of real world objects (it does not fall into the linguistic content) to specific so-called nodes of the sentence structure is realized.

The pragmatic level deals with practical communication problems, in particular the individual interpretation of the text. If a character is interpreted, then only in relation to other characters, objects and users. Through language it is possible to understand and describe specific objects of our thinking.

This level touches on the choice, use and effect of all spoken or written characters in a given communication situation and assesses whether the speaker has chosen the right strategy so that the receiver comes to understanding. Interpretation can also be influenced by the performer’s own set of knowledge and his attitude to the acquired knowledge.

At the pragmatic level, but also beyond, there is also the so-called conversation , which in ordinary communication can be understood as a discussion, dissertation or as an explanation of a certain topic, in the form of a dialogue of several speakers or just a monologue.

The pragmatic level output is a logical form of text that can be judged true or false.

Bloomfield, Léonard (1887–1949)

M. Bierwisch, in International Encyclopedia of the Social & Behavioral Sciences, 2001

5 Roots of a Mentalistic Conception of Language

Thus, Bloomfield insisted on a strictly antimentalistic program of linguistic analysis, according to his opinion that ‘Non-linguists … constantly forget that a speaker is making noise, and credit him, instead, with the possession of impalpable “ideas”.’ It remains for the linguist to show, in detail, that the speaker has no ‘ideas’ and that the noise is sufficient (1936). But while he defended this dogma by practically excluding semantics from the actual linguistic research agenda, he was, surprisingly, not able to exclude mentalistic concepts from the domain of his very central concern—the analysis of linguistic form: underlying forms, derivational rules, precedence order of operations, and even phonemes as parts of underlying forms are concepts that go beyond the mechanistic elements extracted from noise. This conflict between rigid methodological principles on the one side and explanatory insights on the other mirrors one aspect of the above mentioned tension between Bloomfield and Sapir, in the following sense: although both scholars agreed on the general principles of linguistics as a scientific enterprise, including insights in relevant detail such as the description of Indian languages, or the nature of sound change, Sapir always insisted on the psychological nature of linguistic facts. This is clear from his Sound patterns in language (1925), and even more programmatically from The psychological reality of phonemes (1933). As a matter of fact, the orientation of Sapir’s inspiring essays amounted to a mentalistic research strategy.

Thus, although Bloomfield in 1940 was Sapir’s successor as Sterling Professor of Linguistics at Yale University, it was Sapir’s notion of language as a psychological reality that dominated the subsequent period of post-Bloomfieldian linguistics, as shown, e.g., by the title and orientation of The Sound Pattern of Russian by Halle (1959) and The Sound Pattern of English by Chomsky and Halle (1968).

But the tension persists. While Sapir and Jakobson provided ideas and orientation of this development, it is still Bloomfield’s radical clarification of the conceptual framework and descriptive technology that is the indispensable foundation of modern linguistics. And perhaps more importantly, his Menomini morphophonemics is the paradigm case not only of a complete descriptive analysis given in terms of an explicit, complex rule system, but also of an impressive victory of clear insight over methodological orthodoxy. Conceding the amount of mentalistic machinery implied in this analysis of sound structure, strong theories of syntax and even semantics have in the meanwhile been shown to be possible and productive—in spite of Bloomfield’s antimentalistic bias.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B0080430767002187

Logic and Linguistics

T. Zimmermann, in International Encyclopedia of the Social & Behavioral Sciences, 2001

Logical concepts and techniques are widely used (a) as tools for linguistic analysis and (b) in the study of the structure of grammatical theory. As to (a), the classical field of applying logic to linguistics is the analysis of meaning. The key concepts of linguistic semantics, including the very notion of meaning, are frequently defined in terms of, or in analogy with, concepts originating in formal logic. Moreover, algorithms translating natural language into logical symbolism are used to give precise accounts of, and allow comparison with, the semantic structure of natural language. Concerning (b), a central idea of generative grammar has been to specify logical characteristics of linguistic theory and study their repercussions on the complexity of the object under investigation, i.e., language.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B0080430767029910

Multimodal discourse trees for health management and security

Boris Galitsky, in Artificial Intelligence for Healthcare Applications and Management, 2022

1.1 Forensic linguistics

The number of computational approaches to forensic linguistics has increased significantly over the last decades because of increasing computer processing power as well as growing interest of computer scientists in forensic applications of Natural Language Processing (NLP). At the same time, forensic linguists faced the need to use computer resources in both their research and their casework, especially when dealing with large volumes of data (Coulthard, 2010).

Forensic linguistics has attracted significant attention ever since Svartvik (1968) published The Evans Statements: A Case for Forensic Linguistics Svartvik (1968). The true potential of linguistic analysis in forensic contexts has been demonstrated. Since then, the corpus of research on forensic linguistics methods and techniques has been growing along with the spectrum of potential applications. Indeed, the three subareas identified by forensic linguistics in a broad sense are:

- (1)

-

the written language of the law

- (2)

-

interaction in legal contexts

- (3)

-

language as evidence (Coulthard et al., 2017; Coulthard and Sousa-Silva, 2016)

These directions have been further advanced and extended to several other applications worldwide.

The written language of the law came to include applications other than studying the complexity of legal language; interaction in legal contexts has significantly evolved and now focuses on any kind of interaction in legal contexts. This also includes attempts to identify the use of language with misinterpretation (Gales, 2015), prove appropriate interpreting (Kredens, 2016), and manage language as evidence. Other research foci are on disputed meanings (Butters, 2012), the application of methods of authorship detection for cybercriminal investigations, and an attempt to develop authorship synthesis (Grant and MacLeod, 2018).

One of the main goals of forensic linguistics is to provide a careful and systemic analysis of syntactic and semantic features of legal language. The results of this analysis can be used by many different professionals. For example, police officers can use this evidence not only to interview witnesses and suspects more effectively but also to solve crimes more reliably. Lawyers, judges, and jury members can use these analyses to help evaluate questions of guilt and innocence more fairly. And translators and interpreters can use this research to communicate with greater accuracy. Forensic linguistics serves justice and helps people to find the truth when a crime has been committed.

In this chapter, we build a foundation for what one would call discourse forensic linguistics, that is, how legal writers organize their thoughts describing criminal intents and evidence, involving data items of various natures. Legal texts have peculiar discourse structures (Aboul-Enein, 1999); moreover, these texts are accompanied by structured numerical data like maps, logs, and various types of transaction records. Legal texts have DTs different from other written genres and a special form of logical implications. The role of argumentation-based discourse features in legal texts is important as well (Galitsky et al., 2009).

More than 40 years ago, Jan Svartvik showed dramatically just how helpful forensic linguistics could be. His analysis involved the transcript of a police interview with Timothy Evans, a man who had been found guilty of murdering his wife and his baby daughter in 1949. Svartvik demonstrated that parts of the transcript differed considerably in their grammatical style when he compared them to the rest of the recorded interview. Based on this research and other facts, the courts ruled that Evans had been wrongly accused. Unfortunately, Evans had already been executed in 1950. However, thanks to Svartvik’s work, 16 years later, he was formally acquitted. The study of Svartvik is considered today to be one of the first major cases in which forensic linguistics was used to achieve justice in a court of law. Today, forensic linguistics is a well-established, internationally recognized independent field of study.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B9780128245217000107

Cognitive Computing: Theory and Applications

S. Jothilakshmi, V.N. Gudivada, in Handbook of Statistics, 2016

7.2.1 Text Analysis

Text analysis transforms the input text into speakable forms using text normalization, linguistic and phonetic analysis. Text normalization converts numbers and symbols into words, replaces abbreviations by their corresponding whole phrases, among others. The goal of Linguistic analysis is to understand the content of the text. Statistical methods are used to find the most probable meaning of the text. A grapheme is a letter or a sequence of letters that represent a sound (i.e., phoneme) in a word. Phonetic analysis converts graphemes into phonemes. Generation of sequence of phonetic units for a given standard word is referred to as letter to phoneme or text to phoneme rule. The complexity of these rules and their derivation depends on the nature of the language.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/S0169716116300463

Concepts of Health and Disease

Christopher Boorse, in Philosophy of Medicine, 2011

Analysis

Fulford, like all other writers in this section except Boorse, aims to analyze an ‘everyday usage’ [1989, 27] of medical concepts largely common to physicians and the lay public. Unlike other writers, he identifies his method as the ‘linguistic analysis’ (22) practiced by such figures as Wittgenstein, Austin, Urmson, and Hare (xv, 22–3, 54, 121–22). One major goal is to illuminate conceptual problems in, specifically, psychiatry. Hence, Fulford begins by relating his project to the controversy over Szasz’s claim that mental illness, as a conceptual impossibility, is a myth. In his first chapter, he argues that we need to recast that controversy by rejecting two assumptions and a form of argument shared by both sides in this dispute, antipsychiatrists and psychiatrists. The assumptions are that mental illness is conceptually problematic, but that physical illness is not. The form of argument is to test alleged mental illnesses against properties thought essential to physical ones (5).

Fulford next seeks to undermine the ‘conventional’ view of medical concepts, as represented by Boorse. A brief identifies features of common medical usage that the BST allegedly fails to capture. Then chapter 3 offers a debate between a metaethical descriptivist and a nondescriptivist over ‘Boorse’s version of the medical model’ (37). One of the main conclusions of this debate is that ‘dysfunction’ is a ‘value term,’ i.e., has evaluative as well as descriptive content. The main argument for this thesis, in chapter 6, proceeds by comparing functions in artifacts and organisms. In artifacts, functioning is doing something in a special sense, ‘functional doing’ (92 ff). Fulford argues by a rich array of examples that the function of an artifact depends on its designer’s purposes in two ways, both as to end and as to means: ‘for a functional object to be functioning, not only must it be serving its particular ‘designed-for’ purpose, it must be serving that purpose by its particular ‘designed-for’ means’ (98). Moreover, ‘purpose’ is ‘an evaluative concept,’ i.e., ‘evaluation is … part of the very meaning of the term’ (106). From this Fulford concludes that claims of biological function also rest on value judgments.

Fulford’s own positive account can be summarized as follows. Not only are ‘illness’ and the various ‘malady’ terms such as ‘disease’ negative value terms, but they express a specifically medical kind of negative value involving action failure. As the ‘reverse-view’ strategy requires, ‘illness’ is the primary concept, from which all the others are definable. To motivate his account of illness, Fulford uses the point that bodies are dysfunctional, yet persons ill. To what, he asks, does illness of a person stand in the same relation as bodily, or artifact, dysfunction stands to functional doing? Since artifacts and their parts function by moving or not moving, Fulford considers illnesses consisting of movement or lack of movement. Using action theory’s standard example of arm-raising, he suggests that the concept of illness has ‘its origins in the experience of a particular kind of action failure’: failure of ordinary action ‘in the apparent absence of obstruction and/or opposition’ (109). By ordinary action, he means the ‘everyday’ kind of action which, as Austin said, we ‘just get on and do’ (116). He next suggests that this analysis also suits illnesses consisting in sensations or lack of sensations. Pain and other unpleasant sensations, when symptoms of illness, also involve action failure. That is because ‘pain-as-illness,’ unlike normal pain, is ‘pain from which one is unable to withdraw in the (perceived) absence of obstruction and/or opposition’ (138). Finally, the analysis covers mental illness as naturally as physical illness. As physical illness involves failure of ordinary physical actions, like arm-raising, so mental illness involves failure of ordinary mental actions, like thinking and remembering.

From this basic illness concept, Fulford suggests, all the more technical medical concepts are defined, and in particular a family of concepts of disease. He begins by separating, within the set I of conditions that ‘may’ be viewed as illness, a subset Id of conditions ‘widely’ viewed as such (59). ‘Disease’, he thinks, has various possible meanings in relation to Id. In its narrowest meaning (HDv), ‘disease’ may merely express the same value judgment as ‘illness’, but in relation only to the subset Id, not to all of I (61). A variant of this idea is a descriptive meaning of ‘disease’ (HDf1) as ‘condition widely viewed as illness,’ which, of course, is not an evaluation, as is HDv, but a description of one (63). Two other broader descriptive senses of ‘disease’ are then derivable from HDf1: condition causally [HDf2] or statistically [HDf3] associated with an HDf1 disease (65, 69). Fulford provides no comparable analyses for other ‘malady’ terms such as ‘wound’ and ‘disability’, nor for ‘dysfunction’. Nevertheless, he seems to hold that their meaning depends on the concept of illness in some fashion.

Fulford judges his account of illness a success by two outcome criteria. The first is that it find, if possible, a neutral general concept of illness, then explain the similarities and differences of ‘physical illness’ and ‘mental illness’ in ordinary usage (24). The main difference is that people disagree much more in evaluating mental features, e.g., anxiety compared with pain. Using the example of alcoholism, he tries to show how clinical difficulty in diagnosing mental illness results from the nature of the phenomena, which the concept, far from being defective, faithfully reflects (85,153).

Fulford’s account succeeds equally, he thinks, by his second criterion, clinical usefulness. In psychiatric nosology, it suggests several improvements: (1) to make explicit the evaluative, as well as the factual, elements defining any disease for which the former are clinically important; (2) to make psychiatric use of nondisease categories of physical medicine, such as wound and disability; and to be open to the possibilities that (3) mental-disease theory will look quite different from physical-disease theory and (4) in psychiatry, a second taxonomy of kinds of illness will also be required (182–3). Fulford claims three specific advantages for his view over conventional psychiatry. By revealing the essence of delusions, it vindicates the category of psychosis and explains the ethics of involuntary medical treatment. First, delusions can be seen as defective ‘reasons for action,’ whether cognitive or evaluative (215). Second, since this defect is structural, psychosis is the most radical and paradigmatic type of illness (239) — ‘not a difficulty in doing something, but a failure in the very definition of what is done’ (238). Third, that is what explains, as rival views cannot, both why psychotics escape criminal responsibility and why only mental, not physical, illness justifies involuntary medical treatment (240–3). Finally, Fulford thinks his theory promotes improvement in primary health care, as well as closer relations both between somatic and psychological medicine and between medicine and philosophy (244–54). He pursues the themes of “values-based” medicine in his [2004] and in Woodbridge and Fulford [2004].

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B9780444517876500027

An Assessment Analytics Framework (AAF) for Enhancing Students’ Progress

Z. Papamitsiou, A.A. Economides, in Formative Assessment, Learning Data Analytics and Gamification, 2016

4.3.3 The “process” block: how the collected data are analyzed and interpreted?

The heart of every AA system is its processing engine, the mechanisms that are employed in analyzing the input data and producing the exploitable results. The literature review revealed the rich variety of different methods, algorithms and resources involved in the process of analysis. Examples of these components are linguistic analysis, text mining, speech recognition, classification techniques, association rule mining, process mining, machine learning techniques, affect recognition, and many more. Different instruments are adopted for the data processing, including algorithms and modeling methods. Table 7.1 summarizes the cases (application scenarios) according to the learning setting, the data-types and the analysis methods that have been employed.

Table 7.1. Summary of Application Scenarios With Learning Setting, Data-Types, Analysis Methods

| Authors and Year | Learning Setting | Data-Type (Resources) | Data Analysis Method |

|---|---|---|---|

| Barla et al. (2010) | Web-based education—adaptive testing | Question repository—consider test questions as a part of educational material and improve adaptation by considering their difficulty and other attributes | Combination of item response theory with course structure annotations for recommendation purposes (classification) |

| Caballé et al. (2011) | Computer supported collaborative learning (CSCL) for assessing participation behavior, knowledge building and performance | Asynchronous discussion within virtual learning environment; post tagging, assent, and rating | Statistical analysis |

| Chen and Chen (2009) | Mobile learning and assessment (mobile devices used as the primary learning mediator) | Web-based learning portfolios—personalized e-learning system and PDA; attendance rate, accumulated score, concentration degree (reverse degree of distraction) | Data mining: statistic correlation analysis, fuzzy clustering analysis, gray relational analysis, K-means clustering, fuzzy association rule mining and fuzzy inference, classification; clustering |

| Davies et al. (2015) | Computer-based learning (spreadsheets) for detection of systematic student errors as knowledge gaps and misconceptions | Activity-trace logged data; processes students take to arrive at their final solution | Discovery with models; pattern recognition |

| Leeman-Munk et al. (2014) | Intelligent tutoring systems (ITS) for problem-solving and analyzing student-composed text | Digital science notebook; responses to constructed response questions; grades | Text analytics; text similarity technique; semantic analysis technique |

| Moridis and Economides (2009) | Online self-assessment tests | Multiple choice questions | Students’ mood models—pattern recognition |

| Okada and Tada (2014) | Real-world learning for context-aware support | Wearable devices; sound devices; audio-visual records; field notes and activity maps; body postures; spatiotemporal behavior information | 3D classification; probabilistic modeling; ubiquitous computing techniques |

| Papamitsiou et al. (2014) | Web-based education—computer-based testing | Question repository—temporal traces | Partial Least Squares—statistical methods |

| Perera et al. (2009) | CSCL to improve the teaching of the group work skills and facilitation of effective team work by small groups | Open source, professional software development tracking system that supports collaboration; traces of users’ actions (create a wiki page or modify it; create a new ticket, or modify an existing, etc.) | Clustering; sequential patterns mining |

| Sao Pedro et al. (2013) | ITS—for inquiry skill assessment | Fine-grained actions were timestamped; interactions with the inquiry, changing simulation variable values and running/pausing/resetting the simulation, and transitioning between inquiry tasks | Traditional data mining, iterative search, and domain expertise |

| Segedy et al. (2013) | Open-ended learning environments (OELEs) for problem-solving tasks and assess students’ cognitive skill levels | Cognitive tutors; causal map edits; quizzes, question evaluations, and explanations; link annotations | Model-driven assessments; model of relevant cognitive and metacognitive processes; classification |

| Shibani et al. (2015) | Teamwork assessment for measuring teamwork competency | Custom-made chat program | Text mining; classification |

| Tempelaar et al. (2013) | Learning management systems (LMS) for formative assessment | Generic digital learning environment; LMS; demographics, cultural differences; learning styles; behaviors; emotions | Statistical analysis |

| Vozniuk et al. (2014) | MOOCS—peer assessment | Social media platform—consensus, agreement, correlations | Statistical analysis |

| Xing et al. (2014) | CSCL—virtual learning environments (VLE) for participatory learning assessment | Log files about actions, time, duration, space, tasks, objects, chat | Clustering enhanced with activity theory |

In addition, the underlying pedagogical usefulness acts as a strong criterion that drives the whole analysis process in order to produce valid and useful results. However, most cases draw the attention to boundaries and limitations related mostly to ethics and security issues on the data manipulation.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B9780128036372000075

24th European Symposium on Computer Aided Process Engineering

Javier Farreres, … Antonio Espuña, in Computer Aided Chemical Engineering, 2014

2.1.3 Pattern 3: such as

This pattern is proposed by Hearst (1992) as “such NP as NP”, but in the text of the ISA88 standard no occurrence of this pattern can be observed. Alternatively, a lot of “NP such as NP” are found. Thus, the pattern was adapted to this second case.

No linguistic analysis could be performed for this case because the analyser didn’t properly detect the “such as” construct to subsequently detect the two noun phrases surrounding it. A tagging process (Carreras et al., 2004) was performed in order to know the grammar category of each word and then nouns were detected before and after the “such as” construct. For example, in the phrase “Example 8: Process Management events such as allocation of equipment to a batch, creation of a control recipe, etc.”, allocation of equipment to a batch and creation of a control recipe are given as examples of Process Management events, thus the “Process Management event” concept is the parent of former concepts. Pattern 1.3 resulted in 305 candidate relations.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B9780444634566501460

Dialogue management based on forcing a user through a discourse tree of a text

Boris Galitsky, in Artificial Intelligence for Healthcare Applications and Management, 2022

5 User intent recognizer

One of the essential capabilities of a chatbot is to discriminate between a request to commit a transaction (such as a patient treatment request) and a question to obtain some information. Usually, these forms of user activity follow each other.

Before a user wants a chatbot to perform an action (such as open a new bank account), the user would want to know the rules and conditions for this account. Once the user knowledge request is satisfied, the user makes a decision and orders a transaction. Once this transaction is completed by the chatbot, the user might want to know a list of options available and asks a question (such as how to fund this new account). Hence user questions and transactional requests are intermittent and need to be recognized reliably.

Errors in recognizing questions vs transactional requests are severe. If a question is misinterpreted and an answer to a different question is returned, the user can reformulate it and ask again. If a transactional request is recognized as a different (wrong) transaction, the user will understand it when the chatbot issues a request to specify inappropriate parameters. Then the user would cancel their request, attempt to reformulate it, and issue it again. Hence chatbot errors associated with wrongly understood questions and transactional requests can be naturally rectified. At the same time, chatbot errors recognizing questions vs transactional requests would break the whole conversation and the user would be confused as how to continue a conversation. Therefore, the chatbot needs to avoid these kinds of errors by any means.

Recognizing questions vs transactional requests must be domain independent. In any domain a user might want to ask a question or to request a transaction, and this recognition should not depend on the subject. Whereas a chatbot might need training data from a chatbot developer to operate in a specific domain (such as flu), recognizing questions vs transactional requests must be a capability built in advance by a chatbot vendor, before this chatbot will be adjusted to a particular domain.

We also target recognition of questions vs transactional requests to be in a context-independent manner. Potentially there could be any order in which questions are asked and requests are made. A user may switch from information access to a request to do something and back to information access, although this should be discouraged. Even a human customer support agent prefers a user to first receive information, make a decision, and then request an action (make an order).

A request can be formulated explicitly or implicitly. Could you do this may mean both a question about the chatbot capability as well as an implicit request to do this. Even a simple question like “what is my account balance?” may be a transactional request to select an account and execute a database query. Another way to express a request is via mentioning of a desired state instead of explicit action to achieve it. For example, the utterance “I am too cold” indicates not a question but rather a desired state that can be achieved by turning on the heater. If no available action is associated with “cold” this utterance is classified as a question related to “coldness.” To handle this ambiguity in a domain-independent manner, we differentiate between questions and transactional requests linguistically, not pragmatically.

Although a vast training dataset for each class is available, it turns out that the rule-based approach provides an adequate performance. For an utterance, classification into a request or question is done by a rule-based system on two levels:

- (1)

-

Keyword level

- (2)

-

linguistic analysis of phrases level

The algorithm chart includes four major components (Fig. 16):

Fig. 16. The architecture for question vs transaction request recognition. The class labels on the bottom correspond to the decision rules above.

- •

-

Data, vocabularies, configuration

- •

-

Rule engine

- •

-

Linguistic processor

- •

-

Decision former

Data, vocabularies, and configuration components include leading verbs indicating that an utterance is a request. They also include expressions used by an utterance author to indicate the author wants something from a peer, such as “Please do … for me.” These expressions also refer to information requests such as “Give me MY …,” for example, account information. For a question, this vocabulary includes the ways people address questions, such as “please tell me….”

Rule engine applies a sequence of rules, both keyword-based, vocabulary-based, and linguistic. The rules are applied in certain order, oriented to find indication of a transaction. If main cases of transactions are not identified, only then does the rule engine apply question rules. Finally, if question rules did not fire, we classify the utterance as unknown, but nevertheless treat it as default, a question. Most rules are specific to the class of requests; if none of them fire, then the decision is also a question.

Linguistic processor targets two cases: imperative leading verb and a reference to “my” object. Once parsing is done, the first word should be a regular verb in present tense and active voice, and be neither modal, mental, or a form of be. These constraints assure this verb is in the imperative form, for example, “Drop the temperature in the room.” The second case addresses utterances related to an object the author owns or is associated to, such as “my account balance” and “my car.” These utterances are connected with an intent to perform an action with these objects or a request for information on them (versus a question that expresses a request to share general knowledge, not about my object).

Decision former takes an output of the rule engine and outputs one out of three decisions, along with an explanation for each of them. Each fired keyword-based rule provides an explanation, as well as each linguistic rule. Thus when a resultant decision is produced, there is always a detailed backup of it. If any of the components failed while applying a rule, the resultant decision is unknown.

If no decision is made, the chatbot comes back to the user asking for explicit clarification: “Please be clearer if you are asking a question or requesting a transaction.”

We present examples for each class together with the rules that fired and delivered the decision (shown in brackets).

Questions

If I do not have my Internet Banking User ID and Password, how can I login? [if and how can I—prefix].

I am anxious about spending my money [mental verb].

I am worried about my spending [mental verb].

I am concerned about how much I used [mental verb].

I am interested how much money I lost on stock [mental verb].

How can my saving account be funded [How + my].

Domestic wire transfer [no transactional rule fired therefore question].

order replacement/renewal card not received [no transactional rule fired therefore question].

Requests from the chatbot to do something

Tell me… [leading imperative verb].

Confirm that…

Help me to … [leading imperative verb].

5.1 Nearest neighbor-based learning for user intent recognition

If a chatbot developer intends to overwrite the intent recognition rules, they need to supply a balanced training set that includes samples for both classes. To implement a nearest-neighbor functionality, we rely on information extraction and search library Lucene (Erenel and Altınçay, 2012). The training needs to be conducted in advance, but in real time when a new utterance arrives the following happens:

- (1)

-

An instant index is created from the current utterance.

- (2)

-

We iterate through all samples from both classes. For each sample, a query is built and a search issued against the instant index.

- (3)

-

We collect the set of queries that delivered non-empty search results with its class, and aggregate this set by the classes.

- (4)

-

We verify that a certain class is highly represented by the aggregated results and the other class has significantly lower presentation. Then we select this highly represented class as a recognition result. Otherwise, the system should refuse to accept a recognition result and issue Unknown.

Lucene default TF*IDF model will assure that the training set elements are the closest in terms of most significant keywords (from the frequency perspective (Salton and Yang, 1973)). Trstenjak et al. (2013) presented the possibility of using a k-nearest neighbor (KNN) algorithm with TF*IDF method for text classification. This method enables classification according to various parameters, measurement, and analysis of results. Evaluation of framework was focused on the speed and quality of classification, and testing results showed positive and negative characteristics of the TF*IDF-KNN algorithm. Our evaluation was performed on several categories of documents in the online environment and showed stable and reliable performance. Tests shed light on the quality of classification and determined which factors have an impact on performance of classification.

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B9780128245217000041

The digital curation toolkit: strategies for adding value to work-related social systems

Abby Clobridge, in Trends, Discovery, and People in the Digital Age, 2013

The Semantic Web

The underlying theme connecting most of the current work within information management is that of the Semantic Web. The general idea behind the Semantic Web is to create a system in which machines are able to ‘understand’ information presented on the Internet in the same way that humans can understand or comprehend information – thus allowing machines to better support standard processes such as finding, storing, translating or combining information from disparate sources. In short, the Semantic Web is a vision for the Internet in which more complex human requests are able to be intelligently interpreted by search engines and computers based on the semantics of the request – the relationships between words and phrases, their context and their intended meaning.

Three types of Semantic Web applications starting to emerge that are of particular importance to digital curation for work-related systems are as follows:

- ■

-

Automated tag extraction. Automated tag extraction tools are designed to automate the process of extracting concepts, terms and ideas from a specified web page, document or other type of knowledge resource. While many such scripts, programs and tools have existed for years, several more advanced tools have been released that incorporate parts-of-speech (POS) tagging and linguistic analysis.

- ■

-

Vocabulary prompting. Vocabulary prompting applications provide suggestions for other tags/terms for users to consider adding to a particular web page, document or other type of knowledge resource. They can be extremely helpful for users who are new to tagging or are pressed for time. Vocabulary prompting functionality can also suggest alternate preferred versions of tags – for example, ‘did you mean “human resources” instead of “HR”?’ and cutting down on duplicate and redundant tags.

- ■

-

Data visualisation. Data visualisation tools enable users to create diagrams or other visual representations of concepts, tags or terms within a data set as well as the relationship between terms. Clustering is one such method – individual tags, terms and concepts are displayed in ‘clusters’ based on their similarities. Concept maps focus on the specific relationships between individual terms rather than groups of terms. Concept maps and clustering techniques are both more advanced versions of tag clouds – the popular Web 2.0 mechanism for displaying tags in a ‘cloud’ with more often used tags being weighted higher and therefore appearing larger in terms of font size. Clustering and concept maps both provide a more detailed view of a data set rather than a snapshot view.

Table 11.1 provides an overview of selected tools that are currently available.

Table 11.1. Selected Semantic Web tools

| Tool | Notes |

|---|---|

| AlchemyAPI1 |

|

| OpenCalais2 |

|

| TerMine3 |

|

| Topia. TermExtract4 |

|

- 1.

- http://alchemyapi.com

- 2.

- http://opencalais.com

- 3.

- http://nactem.ac.uk/software/termine/

- 4.

- http://pypi.python.org/pypi/topia.termextract/

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B9781843347231500115

Sapir–Whorf Hypothesis

J.A. Lucy, in International Encyclopedia of the Social & Behavioral Sciences, 2001

3 Empirical Research on the Hypothesis

Although the Sapir–Whorf proposal has had wide impact on thinking in the humanities and social sciences, it has not been extensively investigated empirically. Indeed, some believe it is too difficult, if not impossible in principle, to investigate. Further, a good deal of the empirical work that was first developed was quite narrowly confined to attacking Whorf’s analyses, documenting particular cases of language diversity, or exploring the implications in domains such as color terms that represent somewhat marginal aspects of language structure. In large part, therefore, acceptance or rejection of the proposal for many years depended more on personal and professional predilections than on solid evidence. Nonetheless, a variety of modern initiatives have stimulated renewed interest in mounting empirical assessments of the hypothesis.

Contemporary empirical efforts can be classed into three broad types, depending on which of the three key terms in the hypothesis they take as their point of departure: language, reality, or thought (Lucy 1997).

A structure-centered approach begins with an observed difference between languages, elaborates the interpretations of reality implicit in them, and then seeks evidence for their influence on thought. The approach remains open to unexpected interpretations of reality but often has difficulty establishing a neutral basis for comparison. The classic example of a language-centered approach is Whorf’s pioneering comparison of Hopi and English described above. The most extensive contemporary effort to extend and improve the comparative fundamentals in a structure-centered approach has sought to establish a relation between variations in grammatical number marking and attentiveness to number and shape (Lucy 1992b). This research remedies some of the traditional difficulties of structure-centered approaches by framing the linguistic analysis typologically so as to enhance comparison, and by supplementing ethnographic observation with a rigorous assessment of individual thought. This then makes possible the realization of the benefits of the structure-centered approach: placing the languages at issue on an equal footing, exploring semantically significant lexical and grammatical patterns, and developing connections to interrelated semantic patterns in each language.

A domain-centered approach begins with a domain of experienced reality, typically characterized independently of language(s), and asks how various languages select from, encode, and organize it. Typically, speakers of different languages are asked to refer to ‘the same’ materials or situations so the different linguistic construals become clear. The approach facilitates controlled comparison, but often at the expense of regimenting the linguistic data rather narrowly. The classic example of this approach, developed by Roger Brown and Eric Lenneberg in the 1950s, showed that some colors are more lexically encodable than others, and that more codable colors are remembered better. This line of research was later extended by Brent Berlin, Paul Kay, and their colleagues, but to argue instead that there are cross-linguistic universals in the encoding of the color domain such that a small number of ‘basic’ color terms emerge in languages as a function of biological constraints. Although this research has been widely accepted as evidence against the validity of linguistic relativity hypothesis, it actually deals largely with constraints on linguistic diversity rather than with relativity as such. Subsequent research has challenged Berlin and Kay’s universal semantic claim, and shown that different color-term systems do in fact influence color categorization and memory. (For discussions and references, see Lucy 1992a, Hardin and Maffi 1997, Roberson et al. 2000.) The most successful effort to improve the quality of the linguistic comparison in a domain-centered approach has sought to show cognitive differences in the spatial domain between languages favoring the use of body coordinates to describe arrangements of objects (e.g., ‘the man is left of the tree’) and those favoring systems anchored in cardinal direction terms or topographic features (e.g., ‘the man is east/uphill of the tree’) (Pederson et al. 1998, Levinson in press). This research on space remedies some of the traditional difficulties of domain-centered approaches by developing a more rigorous and substantive linguistic analysis to complement the ready comparisons facilitated by this approach.

A behavior-centered approach begins with a marked difference in behavior which the researcher comes to believe has its roots in and provides evidence for a pattern of thought arising from language practices. The behavior at issue typically has clear practical consequences (either for theory or for native speakers), but since the research does not begin with an intent to address the linguistic relativity question, the theoretical and empirical analyses of language and reality are often weakly developed. The most famous example of a behavior-centered approach is the effort to account for differences in Chinese and English speakers’ facility with counterfactual or hypothetical reasoning by reference to the marking of counterfactuals in the two languages (Bloom 1981). The interpretation of these results remains controversial (Lucy 1992a).

Read full chapter

URL:

https://www.sciencedirect.com/science/article/pii/B0080430767030424