Consider two propositions. First, word processors have improved by leaps and bounds since their advent more than a dozen years ago. Second, what we actually do with word processors has not changed very much in the last decade. Both these statements may be true, but they create a tension: if the technology has been so revolutionary, why should things remain pretty much as they always were?

One resolution to this slight conundrum is that the revolution has not yet begun in earnest. So far we have been watching a dress rehearsal: word processing is going to change radically in the next decade, and will make a huge difference to the way we write and think about writing. The improvements to the technology of word processing witnessed so far are preparatory to the really significant changes which will make us look at language and computing in a new light.

These changes are unlikely to come about through the gradual accretion of even more features to the already excellent packages on the market. Several software innovations which are already hovering on the edge of the mainstream will blossom over the next five or ten years. These innovations will create niches for newcomers and simultaneously force the major packages to focus on the parts of the language software which they are best at. We will recognize that there is much more to language processing software than the rather similar functions covered by the best contemporary word processing packages.

SPEECH PROCESSING. It seems highly likely that speech processing will move more into the mainstream of personal computing. As ever with innovation, it may not happen in quite the way we first imagined. When speech processing was first mooted five years ago, it was seen as a way of circumventing the keyboard. Some of us have trouble with basic typing, and nearly all of us have difficulties with rarely-used command sequences or macros. While we look forward to the day we can instruct our machines to “print three copies”, significant applications for speech processing are likely to appear before we get reliable automatic dictation machines. We will incorporate speech messaging well before we crack the problem of unconstrained speech recognition.

Most of the documentation that moves around corporations and between businesses is remarkably standard. The average document comes in about five almost-identical versions: five copies, agenda, schedules, minutes, ‘blind’ copies sent to colleagues, action copies sent to subordinates and so on. The five copies generally differ only in their destination and minor detail. A problem with such documentation is that it tends to be regarded as dross by its recipients even if it is in fact important. An ideal way of giving apparent dross a high ‘impact factor’ is to attach a voice message to some e-mail.

Incorporating sound bites in documents may seem a superficial addition to the basic word processing function. But it is in line with the tendency towards object-oriented programs and user interfaces, hypertexts and multi-media documents. And all the powerful trends in the technology will encourage us to think of word processing as much broader than the typing or typesetting functions predominant in today’s word processing and desktop publishing programs. While word processing will encompass voice and graphics, we can also count on an equally strong tendency towards a more abstract and artificial view of the document as ‘structured program’.

The drive to uncover structure in documentation is already very strong in the defense industries. We have all heard the story about the documentation for the Boeing 737 weighing more than the airplane itself, or the American frigates which carry more tons of paper than they do tons of missiles. But this mass of paper is no joke for the industries which build these machines, and they are consequently taking the lead in developing powerful tools for the automatic processing and interpreting of documentation.

SGML. One of the keys is the development of Standard Generalised Markup Language (SGML) schemes: SGML allows for documentation manipulation which is independent of the particular way in which the document has been processed or represented: on paper, screen, or magnetic tape, for example. SGML has a real pay-off when you are dealing with large amounts of text. In typesetting or desktop publishing systems there is no way of distinguishing the italic into which you cast the title of a book from the italic you use for a foreign word. But in SGML systems these differences are marked. The basic idea is that if you can treat the structure of documentation as abstract and declarative, rather than procedural and dependent on the manner of its representation, it should be much easier to make large-scale comparisons of documents. SGML markup is not easily intelligible to the human eye; quite the reverse, as it tends to look like a jumble of brackets and codes. But new software will use SGML while concealing it from the user, in much the same way as our word processors now use and exchange ASCII without our needing to notice it. SGML will come into its own when users are able to incorporate documents, standard form contracts or advertising brochures into their databases, agreement files or catalogues without needing to consciously translate the structure to the formats they prefer to use.
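
The difference between a book title and a foreign word, both set in italic, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the element names booktitle and foreign are hypothetical, the sample sentence is invented, and XML syntax (parsed with Python's standard library) stands in for SGML proper.

```python
import xml.etree.ElementTree as ET

# Hypothetical element names; XML syntax is used here as a stand-in for SGML.
SAMPLE = (
    "<para>She read <booktitle>Middlemarch</booktitle> with a certain "
    "<foreign>joie de vivre</foreign>.</para>"
)

# Presentation is decided separately from the markup. In this assumed
# style sheet both kinds of element happen to be printed in italic.
STYLE = {"booktitle": "italic", "foreign": "italic"}


def collect(root, tag):
    """Return the text of every element with the given tag."""
    return [el.text for el in root.iter(tag)]


def render(root):
    """Flatten the paragraph to plain text, writing italic spans as *...*."""
    parts = [root.text or ""]
    for el in root:
        text = el.text or ""
        parts.append(f"*{text}*" if STYLE.get(el.tag) == "italic" else text)
        parts.append(el.tail or "")
    return "".join(parts)


root = ET.fromstring(SAMPLE)
book_titles = collect(root, "booktitle")    # queries the structure
foreign_terms = collect(root, "foreign")    # not the appearance
rendered = render(root)                     # appearance is applied last
```

Once rendered, the two italic spans are indistinguishable, which is the typesetting situation the text describes; the marked-up source still lets a program list book titles and foreign terms separately.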

- What’s the tension implied in the two propositions? You could begin your answer with: On the one hand, …
- To what extent is the word processing revolution under way?
- What may result from the software developments in word processing?
- What was the original idea behind the development of speech processing?
- What is seen as the major difficulty with the use of standard documentation?
- What is thought to be the solution to this problem?
- Which two examples of the documentation problems facing industries are given in the text?
- Name the ways in which documentation can be represented.
- Describe the differences in textual representation which are said to be ‘marked’ in SGML.
- Is SGML markup easily understood by the user?
- Explain the comparison that is made between SGML and ASCII.
- Have we witnessed any new developments in the field of word processing recently? If so, provide some comments.

Task 4. SO. Read and translate the text with a dictionary.

About Writing

- The writing process has four stages: prewriting, drafting, revising and editing.
- In the prewriting stage you gather information for your writing project by:
  a. Writing down what you know about your subject.
  b. Interviewing people who know about your subject.
  c. Researching your subject in books, articles, and other materials.
- Your rough draft is the first version of your writing project, in which you outline your ideas and decide on a central idea.
- You then revise your draft several times, adding details and making your writing clearer and better organized.
- In the editing process you correct errors in grammar, spelling, punctuation, and so on, and you proofread your final version to make sure it is free of errors.
- As you write, you will discover what you know about your subject (perhaps more than you thought), what you still need to find out, and what is important to you.

Prewriting consists of the following steps:

1. Write down what you already know about your subject by:
  a. Listing a few broad ideas that come quickly to mind.
  b. Recording information through focused freewriting, writing on a chosen subject nonstop for five or ten minutes.
  c. Brainstorming by asking questions that bring to mind details you can use.
2. Gather any additional information you need by:
  a. Summarizing another writer’s ideas.
  b. Interviewing people who know about your subject.
3. Express your essay’s central (or main) idea in a thesis statement, a clear sentence expressing the point you want to make.
4. Decide or focus on a central idea:
  a. Determine your purpose in writing about the topic you have chosen.
  b. Find the main point you want to make.
  c. Select the details you want to include.
5. Limit your discussion so that you can support your thesis with enough details to be convincing.
6. Revise your central idea as often as you need.

II. Drafting

- Review your information, dropping and adding details to keep your thesis clear and convincing.
- Write a scratch outline, keeping your working thesis in mind and grouping details under headings that organize them.
- Prepare a rough draft:
  a. Write as much information as you can about each section of your outline.
  b. Don’t be concerned about spelling, paragraph structure or other such details at this point.

III. Revising

1. As you review your rough draft, ask yourself:
  a. Does my thesis statement still express my main point?
  b. Are all the details I have included necessary?
  c. Should I rearrange paragraphs?
  d. Should I move material from one paragraph to another?
  e. Does any paragraph need more details?
  f. Are my introduction and conclusion interesting?
2. Revise your rough draft in the light of your answers to these questions.
3. Now ask your questions again and revise, revise, revise.


Today in Tedium: Word processors are generally kind of boring—they do their job, and that’s about it. Most people don’t put a ton of second thought into their word processors. As you might have noticed from my rant from a couple of months ago, I’m not that kind of person. That rant drew up a lot of conversation, most of it good and interesting. (It did have its critics, but even their comments were interesting!) But it hit me that, in a lot of ways, I was spending more time complaining than discussing ways to move text editing forward. Today’s issue of Tedium attempts to look at the future of word processing. — Ernie @ Tedium

«It’s as though people cannot see text, they see only through text. Some people want to talk about knowledge, some people want to talk about collaboration … very few want to talk about text.»

— Frode Hegland, the founder and an organizer of The Future of Text Symposium, an annual event that’s designed to highlight discussions around better tying computing to the written word, discussing the way that text gets short shrift in revamps of the word processor. «The analogy that really stayed with me is, it’s as though people want to talk about making a better window, but they don’t talk about the view,» he told me of the most recent event, held in August at Google’s Mountain View, California headquarters.


Embracing text for the sake of text

The thoughts that our brain generates come out best when they work together consistently, in a single waveform.

The problem is, when you write stuff in a word processor, you’re constantly pulled away from your ideas—you keep having to refer to your research, to keep looking stuff up, and on a computer, you’re constantly switching between windows.

This creates a lot of friction and, mentally, you’re stuck trying to sort between four or five different layers of messy thoughts. Things get chopped into pieces and connections fail to get made.

I do my best to avoid that kind of friction, but it’s tough. So it was nice to find a kindred spirit on this issue in the form of Hegland, who has long spent time obsessed with this idea of «liquid information,» or how words and ideas can flow together in one fluid swoop.

The British developer and academic, who has been focused on big-picture issues regarding information for decades, has developed an app specifically designed to solve this problem, a macOS add-on called Liquid | Flow that allows users to use key commands to select a piece of text and quickly perform an action on it without even thinking. (I’ve tried it; it’s smooth like butter.)

Hegland’s annual The Future of Text Symposium—which this year covered both interesting approaches to word processing like Jesse Grosjean’s ultra-minimalist WriteRoom and outré concepts like Robert Scoble pondering text in the era of the Magic Leap—has a nice throw-out-all-the-cards kind of feel.

There were a couple of living legends in the room that day, including Vint Cerf, a co-inventor of the internet, and Ted Nelson, who came up with the word «hypertext.» But in many ways, the direction of Hegland’s career and his focus on the written word has been defined by a mentor who wasn’t even at the August event: Douglas Engelbart, the early tech innovator whose «Mother of all Demos» helped shape the functionality of the desktop computer. (Engelbart died in 2013.)


Hegland said that his focus on the written word has in part been defined by his personal relationship with Engelbart, and he’s trying to bring some of Engelbart’s thinking to his word processor project Liquid | Author. The app, designed for both macOS and iOS and publicly released for the latter, has an interestingly narrow focus—rather than trying to revamp word processing all at once, it attempts to tackle how teachers and students interact with documents, an interaction that many people are familiar with.

«It should be instant for students to create a citation—web, book, article, whatever—and it should be equally instant for the teacher to check that citation,» Hegland explained of the app’s strategy.

Another goal with Liquid | Author, he says, is to use his Future of Text gatherings almost as a skunkworks project for new ideas that can be implemented in the project, which he’ll likely focus on as he works on his Ph.D.

It certainly won’t be a hugely commercial project—something Hegland is quick to admit—but it will be a fascinating one to watch.

«As the legend goes, when Steve Jobs ‘borrowed’ the ideas behind personal computing from Xerox PARC, many subtle, but crucial, differences were lost.»

— A page on the website for the document editing tool Notion, explaining in part why the company built the tool, which combines word processing and document collaboration. The company talks at length on its website about trying to «bring back some of the ideas of those early pioneers.» That isn’t really an argument being made by a lot of companies making word processors these days. (FWIW, Notion is probably the most forward-thinking of the up-and-comers out there, a list that includes Dropbox Paper and Gingko.)

A modern take on the word processor that isn’t really a word processor at all

Maybe the issue with our word processors isn’t that there’s too much crap. It’s just that neither you nor your company cares about anyone else’s.

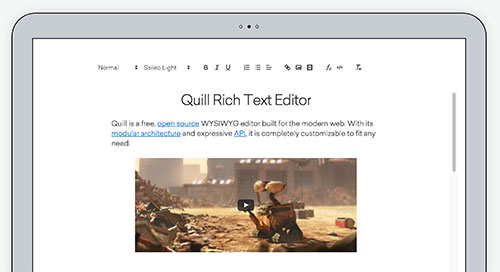

The ambitious, GitHub-famous text editing tool Quill is designed to consider the process of text editing from this perspective. Rather than treating word processing as a single monolithic beast, it’s an open-source project: hack it however you need it, thanks. Author and lead maintainer Jason Chen, who helped start the project while at Salesforce, suggests the strategy is intended to allow companies and end users that rely on the platform to create something that more specifically suits their needs.

«It was a common saying that 95 percent of features in Word are never used, but the problem is that different groups of people use a different 5 percent,» Chen explained in an interview. «I think these big companies are looking for a tool that just does the 5 percent that they care about and skip the rest.»

So far, the approach (which he says is inspired by both Medium’s graceful, opinionated design and Etherpad’s strong, hackable foundation) has won some prominent fans, such as Vox Media, Gannett, and HubSpot. And the hits keep coming. Last month, LinkedIn revamped its Medium-competing publishing platform to use Quill, with the engineering manager of the publishing platform praising the tool’s technical power and flexibility.

«As LinkedIn publishing evolves, Quill’s underlying technology opens the door for rich features, such as collaborative editing and custom rich media types,» the company’s Jake Dejno wrote.

The strategy, Chen says, reflects a reframing of the place of word processing in the modern day. He argues that word processors generally haven’t kept up with the interactivity of the internet (stuff like embeds, you know the deal); simultaneously, though, we have what Chen characterized as a «diminishing need for their strengths.»

«The reasons we have traditionally used word processors has slowly been eroded away,» he explained. «LinkedIn is replacing the resume, Github is replacing documentation, and blogging (and respective tools) have chipped into journalism. Even documents that are meant to be printed are largely being standardized and automated. Most letters in your physical mailbox today are probably from some bank that generated and printed it without touching Word.»

By putting the rendering of words into the hands of the end user—and doing so, it should be said, with state-of-the-art technical standards—projects like Quill could redefine our relationship with the word processor … by, in some ways, removing it from the equation entirely.

So, where does the future stand for word processing in the long run? Are we still going to be using an all-in-one tool for handling the written word down the line? When I posed that question to Chen, he suggested that in the shorter run, companies will begin to use more specialized tools, but further down the line, he believes we may see significant changes in the way we interact with written words.

«In 15 years I think the input interface and the idea of a ‘document’ [will] be entirely reimagined,» Chen suggests. «I don’t think we’ll be at telepathy in that timeframe but I can see a lot more speech and audio playing a larger role for input. Video is already augmenting the document, but VR could be ubiquitous by then.»

But thinking of audio and video as a replacement for a written word, while an interesting idea, gives me pause—for a few reasons. One issue comes up as I click through the recording of my Skype conversation with Frode Hegland: I find the sound of my own voice terrible, because I tend not to speak as cleanly as I write. (Man, how do people deal with the fact that I’m a 35-year-old guy who repeatedly says «like» and «you know» in the middle of a conversation? No wonder this isn’t a podcast.)

Hegland, who is much more eloquent than I am, suggested that natural language processing was potentially going to change the way that we write—particularly real-time summarization techniques that could tell you whether your story makes sense on the fly. He specifically pointed to the work of Bruce Horn, an early Macintosh developer who now works for Intel, as well as Stanford linguistics professor Livia Polanyi.

But, ultimately, Hegland still saw a place for the written word, as well as the tools that traditionally put those words there.

«Over the past few years I’ve come to appreciate that freedom of [mental] movement is the key,» he said, highlighting the nature of liquidity in putting thoughts to the page. «When you look about the freedom of your own hands moving, you have such incredible freedom of movement.»

(Hegland isn’t a Markdown fan like myself, but hey, pobody’s nerfect.)

As long as the freedom of mental movement in my hands goes faster than my own voice, I’m probably going to have a need for a keyboard. But what about the next generation? Will word processors eventually get thrown out into the annals of history like a stream of old Motorola RAZRs and BlackBerry phones?

God, I hope not. I still have a lot of words to write.


A version of this post originally appeared on Tedium, a twice-weekly newsletter that hunts for the end of the long tail.


Word processing has become such an essential part of our daily lives, particularly for those of us who write for a living, that we tend to take its presence there, and indeed its existence as an activity in itself, for granted. Yet the tool we use for this activity, namely word processing software, is, like the personal computer on which it depends, a very recent innovation in the story of the written word. Indeed, the modern incarnation of the word processor, such as Microsoft Word, was by no means an inevitability, and could, in principle at least, have taken a different form.

The essential component of what we would now call a word processor is a WYSIWYG (What You See Is What You Get) editor, which displays your work on screen as it will look on the printed page, in real time. In this way, the word processor brings together meaning and form in much the same way that we would if we sat in front of a piece of paper and began writing and/or drawing. In so doing, the word processor gives us the illusion of an experience akin to physically writing.

The arrival of the WYSIWYG paradigm for word processing in the 1980s represented a revolution in the way that we interact with the process of writing. However, before its arrival, software for writing would generally separate meaning and form. This is to say that text would be composed independently of the specification of its final appearance. In considerable measure this was because it was simply not possible technologically at that stage in time to bring the two together in real time. Nevertheless, the separation of meaning and form has advantages in the writing process. It enables the writer to focus on what s/he wants to say first, without needing to worry about its final appearance, which can be decided later. Many writers have found this helpful, to the extent that some, including, notably, George R. R. Martin, still use 1980s software written for MS-DOS for their writing.[1]

While the look and feel of word processors today may be state-of-the-art, the essential paradigm has not in fact changed much at all since the late 1980s, as a comparison of the following screenshots shows:

This paradigm may well be with us for some time to come. It is striking, for instance, that despite the ready availability of e-readers, the printed book has remained an attractive proposition. Sales of physical books have hardly been affected by those of e-books. Indeed, they still represent by far the majority of book sales, at least in the US.[4] From another perspective, it is striking that we still tend to view official or academic electronic documents (as opposed to e.g. works of fiction) as facsimiles of what they would look like on the printed page, even if they never exist, or at least we never see them, as such.

However, the history of our interaction with writing shows that at some point the paradigm will change. So, for example, writing moved from the clay tablet to papyrus and parchment, and the format moved from the scroll to the codex. And it is the latter that, albeit now baptised ‘book’, we have preserved to this day.

Furthermore, given that the events of 2020 have catalysed changes to our lives that were already in the offing, a change in the paradigm(s) of our interaction with writing may come sooner rather than later. What might one of those new paradigms look like?

We have all become more (over-)familiar with online collaboration tools and video conferencing software than we might have expected at the year’s beginning, and it seems unlikely this will change any time soon. What has also become clear, however, is the extent to which, in the perception of many, these online tools are currently significantly inferior to face-to-face interaction, hence the notion of ‘Zoom fatigue’. This situation is, however, likely to improve in the coming years. As bandwidths become greater, and VR and AR technology becomes more affordable, it seems reasonable to suppose that the ‘Zoom meetings’ in which we currently interact with one another will be replaced by meetings in virtual 3D space, rather than the 2D one most of us inhabit at the moment. What will our interaction with the written word in that space be like?

It is probably easier to say what it could be like than what it will be like. For example, no limits would be set by the geometrical parameters of a (virtual) piece of paper. Thus, writing could be projected on to any number of surfaces, such as a cube, a sphere or a cylinder. Indeed, there would be no need for a surface at all: with AR or VR, text could simply be suspended in mid-‘air’. Thus one could imagine a meeting where the participants were discussing a text, all looking at a virtual text suspended in the middle of the group.

Of course, none of this means that we will necessarily dispense with reading from (the illusion of) pieces of paper, and our interaction with the written word may simply continue to imitate the physical reality of the printed word that we currently enjoy. Bold experiments in how we interact with the (virtual) written word have been attempted before, and not really gone anywhere.[9] However, the possibilities of VR and AR mean that we need not continue to read in the way to which we have become accustomed, and the history of writing shows that the format our interaction with the written word takes will change at some point. In a virtual world, the possibilities are boundless.

References / Further reading

Kirschenbaum, Matthew G. 2016. Track changes: A literary history of word processing. Cambridge, Mass.: The Belknap Press of Harvard University Press.

Hegland, Frode A. (ed.) 2020. The future of text. Future Text Publishing. https://futuretextpublishing.com/future-of-text-2020-download/

~ Robert Crellin (Research Associate on the CREWS project)

[1] https://www.cnet.com/news/george-r-r-martin-writes-with-a-dos-word-processor/

[2] Built from sources provided by the Computer History Museum (https://computerhistory.org/blog/microsoft-word-for-windows-1-1a-source-code/). Used with permission from Microsoft. (https://www.microsoft.com/en-us/legal/intellectualproperty/permissions/default)

[3] Used with permission from Microsoft. (https://www.microsoft.com/en-us/legal/intellectualproperty/permissions/default)

[4] See, e.g. https://www.cnbc.com/2019/09/19/physical-books-still-outsell-e-books-and-heres-why.html

[5] https://commons.wikimedia.org/wiki/File:Letter_Luenna_Louvre_AO4238.jpg

[6] https://commons.wikimedia.org/wiki/File:Scroll.jpg

[7] Picture taken by Leszek Jańczuk (https://commons.wikimedia.org/wiki/File:Codex_Vaticanus,_XXI_Targi_Wydawc%C3%B3w_Katolickich_2015-05-03_0019.JPG) licenced under https://creativecommons.org/licenses/by-sa/4.0/deed.en.

[8] Created with Vectary (vectary.com). Virtual person from: https://lh3.googleusercontent.com/BGAswBlvR6Qc713HVSsJAa0DyPZ5LuEpTF2GbsSRePYQ70nvpVvDLvxnfqAi9Cpg

[9] See e.g. https://archive.nytimes.com/www.nytimes.com/library/cyber/surf/111997mind.html

I will try to tackle this a bit, feeling a little audacious. Word processing has been around as long as I can remember (and it wasn’t that good). Technology, however, is now moving at an ever-increasing rate; we see computers in almost all electronics now, i.e. transistors and processors. Moore’s Law says that the number of transistors on a chip doubles roughly every two years. So far the prediction has broadly held, but you can only shrink a silicon chip so far, right?

This is where nanotechnology comes in: for Moore’s Law to keep holding, we need to make the chip smaller without it giving off more heat, because heat wastes energy and shortens the life of our computers, see? (We are currently doing this.) Once we have done this, we get smaller and smarter devices, i.e. ultra-slim phones, laptops and the iPad.

Back to your original question, what will word processing be like in the future? Well, it’s really hard to predict. But going with current trends, we can expect devices to be smaller, with better word processing and voice-driven word processing. Think of it as a smart AI that monitors «hot key words» that you use often. While it does this, it could even search for things based on your interests; for example, it could pool information from Google or text messages.

Maybe in the far future we could even have implants with nano-sized «smart transistors» for a better vocabulary. Effectively, we would be merging man and machine.

Just my take on it, hope you like it.