Import data from the web

Get started with Power Query and take your data transformation skills to the next level. First, let’s import some data.

Note: Although the videos in this training are based on Excel for Microsoft 365, we’ve added instructions as video labels if you are using Excel 2016.

- Download the template tutorial that accompanies this training, and then open it.

- On the Import Data from Web worksheet, copy the URL, which is a Wikipedia page for the FIFA World Cup standings.

- Select Data > Get & Transform > From Web.

- Press CTRL+V to paste the URL into the text box, and then select OK.

- In the Navigator pane, under Display Options, select the Results table.

Power Query will preview it for you in the Table View pane on the right.

- Select Load. Power Query transforms the data and loads it as an Excel table.

- Double-click the sheet tab name and then rename it “World Cup Results”.

Tip

To get updates to this World Cup data, select the table, and then select Query > Refresh.
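The import steps above boil down to "find a table in the page's HTML and read its rows". As a rough illustration outside Excel, the sketch below does that with Python's standard `html.parser` module. The `TableParser` class, the `read_tables` helper, and the sample page are assumptions made for this example; this is not how Power Query works internally, and nested tables are not handled.

```python
from html.parser import HTMLParser


class TableParser(HTMLParser):
    """Collect the rows of every <table> in an HTML document."""

    def __init__(self):
        super().__init__()
        self.tables = []   # each table is a list of rows
        self._row = None   # cells of the row being read, or None
        self._cell = None  # text fragments of the cell being read, or None

    def handle_starttag(self, tag, attrs):
        if tag == "table":
            self.tables.append([])
        elif tag == "tr" and self.tables:
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_data(self, data):
        # Keep text only while we are inside a cell.
        if self._cell is not None:
            self._cell.append(data)

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.tables[-1].append(self._row)
            self._row = None


def read_tables(html):
    """Return every table in the page as a list of rows of cell text."""
    parser = TableParser()
    parser.feed(html)
    return parser.tables


# Small sample page standing in for the downloaded web page (illustrative only).
SAMPLE_HTML = """
<table>
  <tr><th>Year</th><th>Winner</th></tr>
  <tr><td>2014</td><td>Germany</td></tr>
  <tr><td>2018</td><td>France</td></tr>
</table>
"""

tables = read_tables(SAMPLE_HTML)
results = tables[0]  # comparable to picking one table in the Navigator pane
```

Choosing `tables[0]` plays the role of selecting a single table from the list; a real page usually contains several tables, so you would inspect each one first.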


In this tutorial, we will show you different ways to fetch data from a website into Excel automatically. This is one of the most-used Excel features among people who use Excel for data analysis.

Follow the step-by-step procedure below to learn how to fetch data directly and skip the hassle of typing in website data by hand.

Extracting data automatically from a webpage into your Excel worksheet, both to collect it and to keep it updated, can be important for some jobs. Let’s see how you can do it.

Method 1: Copy – Paste (One time + Manual)

This method is the easiest: you copy the data straight from the page.

To do so:

- Open the website that holds the information you want.

- Copy the data that you want to have in your Excel sheet.

- Select the target column (or cell) and paste to get the web data into your Excel sheet.

Because this method fetches the data only once, its drawback is that the worksheet does not update when the website changes. To keep the worksheet current, you have to repeat the steps above each time.

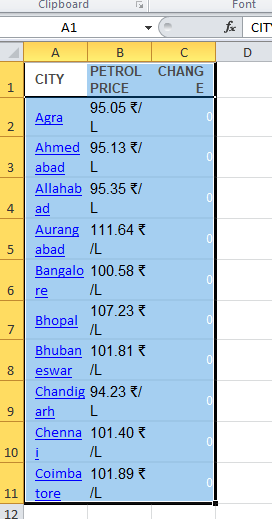

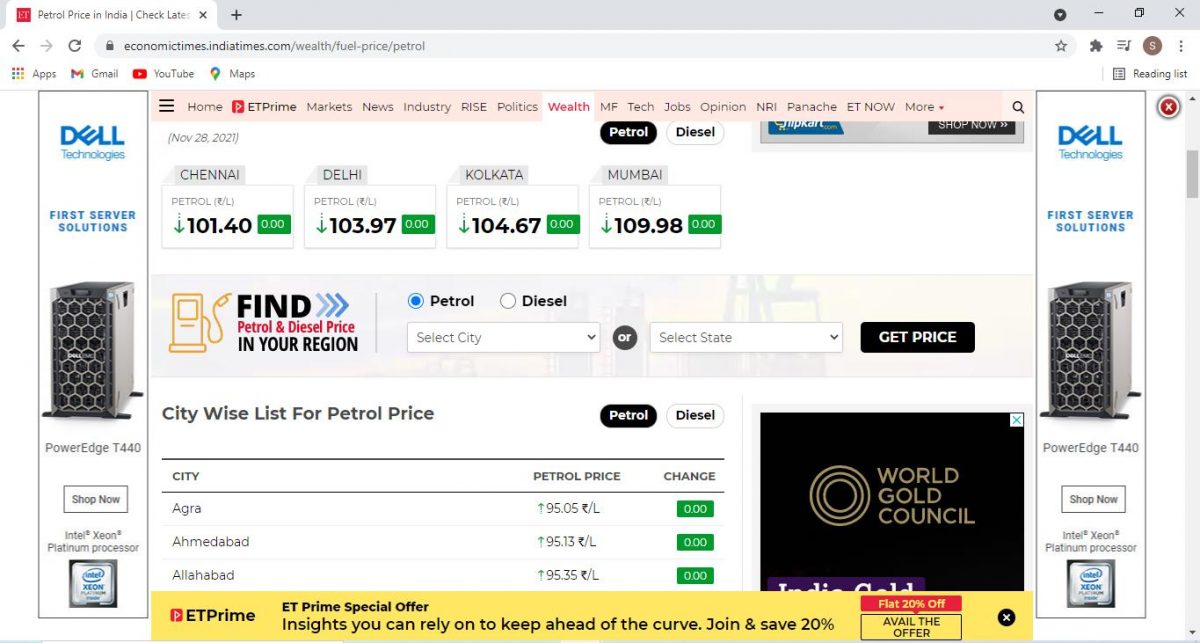

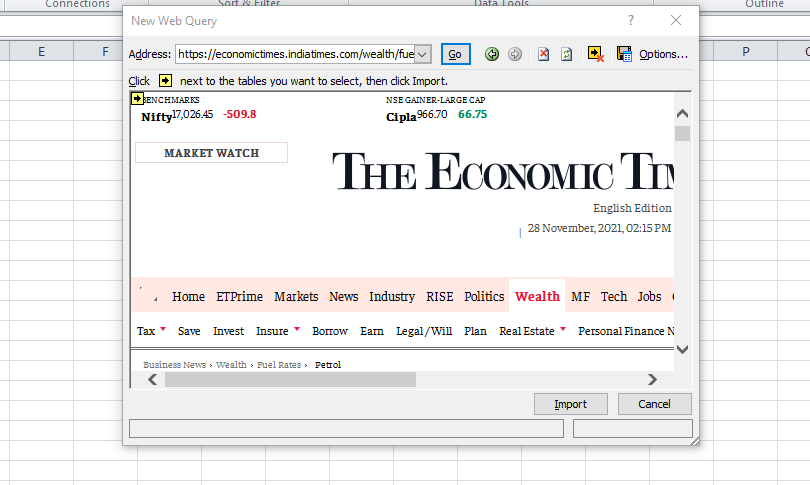

Method 2: Realtime Data Extraction

We will use Excel’s From Web command on the Data ribbon to gather data from a website. Say I would like to gather data from this page: https://economictimes.indiatimes.com/wealth/fuel-price/petrol

It shows the daily petrol prices for cities all over India. To fetch the data directly into our Excel sheet, we follow the steps below:

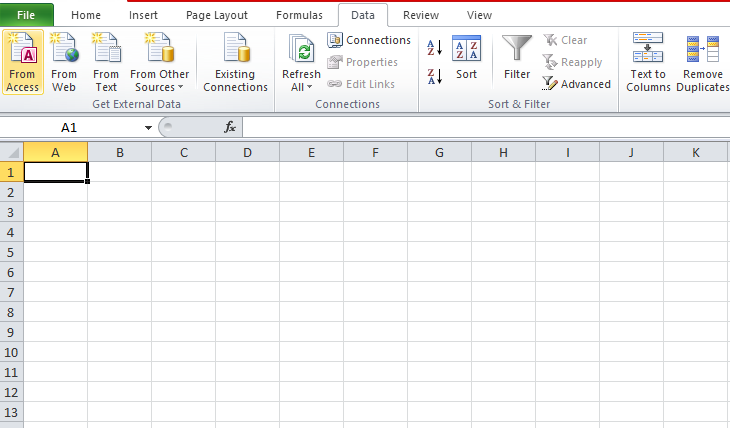

- In the Excel worksheet, open the Data ribbon and click on the From Web command.

- A New Web Query dialog box appears

- In the address bar, I have pasted the address URL of our desired webpage (you must paste accordingly): https://economictimes.indiatimes.com/wealth/fuel-price/petrol.

- Then Click on the Go button, placed right after the address bar

- The same website loads up within the new web query panel as a preview.

- Now spot the yellow arrows near the query box.

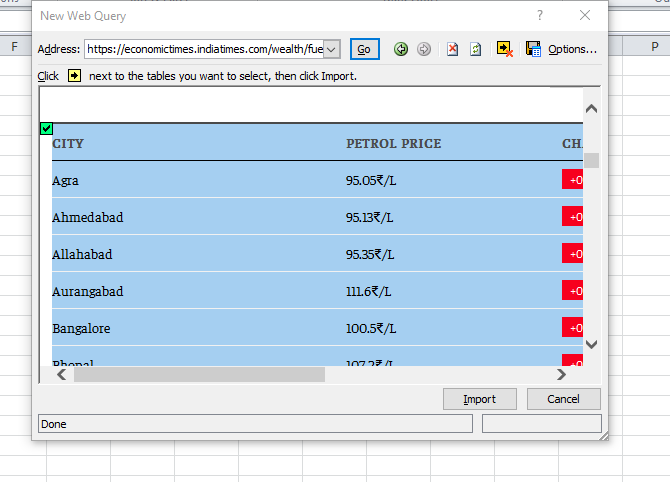

- Move your mouse pointer over the yellow arrows. You see a zone is highlighted with a blue border and therefore the yellow arrow becomes green on hover.

In our example, I have chosen the City wise petrol prices all over India.

- Now, Click on the Import button. Import Data panel appears. It asks about the location of importing the data, currently, I choose to reserve it in cell A1, but you may reserve it anywhere, in any cell of the worksheet.

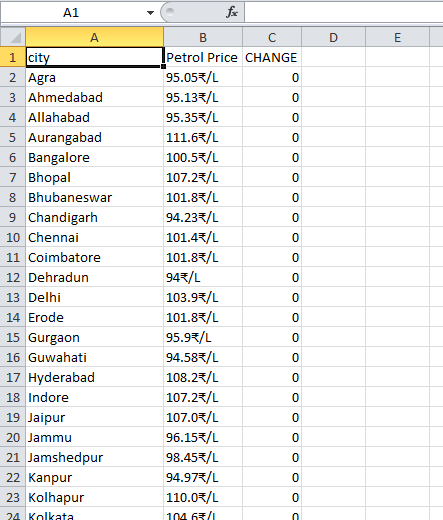

The data we need is now inserted into the Excel worksheet, and we can change it to suit our requirements.

NOTE: The website should present its data in a table or preformatted layout. Otherwise you first have to convert the data into a format Excel can read, which is tedious. Text crammed into columns is not your ally, so we will assume the website provides its data in a format that Excel can read directly.

The other important benefit is that you do not have to update the data by hand from time to time.

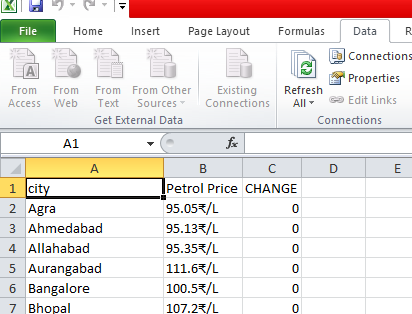

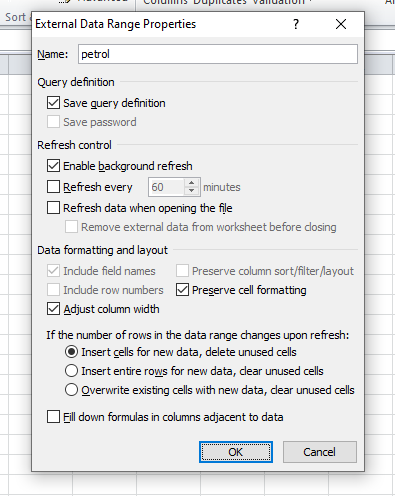

Refresh Excel data for Update:

You can refresh the data manually or automatically. To do so, click the drop-down button of the Refresh All command.

Click Refresh to update only the query connected to the selected cell, or Refresh All to re-fetch every connection in the workbook.

You can also set an interval for refreshing the data automatically. Click the Connection Properties option in the list.

You can name the connection and add a description too.

Under Refresh Control, the Refresh every option (60 minutes by default) sets the interval, which you can change.

Conclusion

That’s it for this tutorial. We hope you now understand how to pull data from a website into an Excel sheet.

Power Query is an extremely useful feature that allows us to:

- connect to different data sources, like text files, Excel files, databases, websites, etc…

- transform the data based on report prerequisites.

- save the data into an Excel table, data model, or simply connect to the data for later loading.

The best part is that if the source data changes, you can update the destination results with a single click; something that is ideal for data that changes frequently.

This is analogous to recording and executing a macro. But unlike a macro, the creation and execution of the back-end code happen automatically.

If you can click buttons, you can create automated Power Query solutions.

Example #1

Import Spot Prices for Petroleum from a Website to Excel

The first step is to connect to the data source. For this example, we will connect to the U.S. Energy Information Administration.

https://www.eia.gov/petroleum

The information we need is in a table that is part of the overall webpage.

In the “old days”, we would transfer the information from the website into Excel by highlighting the webpage table, then copying and pasting the data into Excel.

If you’ve ever done this, you know what a hit-or-miss proposition this can be. It’s the 50/50/90 Rule: if you have a 50/50 chance of winning, you’ll lose 90% of the time.

Even if you were to successfully transfer the information into Excel, the information is not linked to the webpage. When the webpage changes, you will need to recopy/paste (and potentially fix) the updated information.

- Begin by copying the URL from the webpage (assuming you are previewing the page in a browser). If not, you can type it into the next step’s URL prompt.

- Select Data (tab) -> Get & Transform (group) -> From Web.

- In the From Web dialog box, paste the URL into the URL field and click OK.

The Navigator window displays the components of the webpage in the left panel.

If you want to ensure you are on the correct webpage, click the tab labeled Web View to get a preview of the page in a traditional HTML format.

It is unlikely that the listed components on the left will be presented with obvious names as to which item goes to which webpage component. You may need to click from one item to the next, previewing each item in the right-side preview panel, in order to determine which belongs to the desired table.

If the data does not require any further transformations, you can click Load/Load To… to send the data directly to Excel. This will allow you to select the destination of the results data, such as a table on a new or existing worksheet, the Data Model, or create a “Connection Only” to the source data.

- In the Navigator dialog box, select the arrow next to Load and click Load To…

- In the Import Data dialog box, select “Existing worksheet” and point to a cell on your desired destination worksheet (like cell A1 on “Sheet1”).

The result is a table that is connected to a query. The Queries & Connections panel (right) lists all existing queries in this file.

If you hover over a query, an information window will appear giving you the following information:

- a preview of the data

- the number of imported columns

- the last refresh date/time

- how the data was loaded or connected to the Excel file

- the location of the source data

Although the data looks correct, we have some structural issues with the results that will cause problems with further analysis.

The empty cells in the Product column will cause problems when sorting, filtering, charting, or pivoting the data.

We need to make some adjustments to the data.

- Double-click (or right-click and choose Edit) the listed query to activate the Power Query Editor.

We want to fill down the listed products into the lower, empty cells of the Product column.

- In the Power Query Editor, select the Product column and click Transform (tab) -> Any Column (group) -> Fill -> Down.

Nothing happened.

The reason the product names failed to repeat down through the empty cells is that Power Query did not interpret the cells as empty. There may be some artifact from the webpage that exists in the cell that we can’t see.

We will replace all the “fake empty” cells with null values. This should allow the Fill Down operation to work as expected.

- Select the Changed Type step in the Query Settings panel (right).

- Select the Product column and click Transform (tab) -> Any Column (group) -> Replace Values.

- Tell Power Query that you wish to insert this new step into the existing query by clicking Insert.

- In the Replace Values dialog box, leave the “Value To Find” field empty and type “null” (no quotes) in the “Replace With” field. Click OK when finished.

If you select the previously created “Filled Down” step at the bottom of the Query Settings panel you will see the updated results of the query.

- Update the query name to “Spot Prices”.

- Click the top part of the Close & Load button at the far-left of the Home tab.

If we graph the data and the data later changes, we can refresh the graph by clicking Data (tab) -> Queries & Connections (group) -> Refresh All, or by right-clicking the data and selecting Refresh.
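The “fake empty” problem from the Fill Down steps above is easy to reproduce in code. The sketch below is a Python analogy, not Power Query’s own M code: `fill_down` only fills true nulls (`None`), so blank strings block it until `blank_to_none` converts them. The function names and sample values are assumptions for illustration, and the sketch also treats whitespace-only cells as blank, which goes slightly beyond the Replace Values step in the text.

```python
def blank_to_none(values):
    """Replace empty or whitespace-only strings with None (the 'null' step)."""
    return [None if isinstance(v, str) and v.strip() == "" else v for v in values]


def fill_down(values):
    """Copy the last non-None value into each None below it."""
    filled = []
    last = None
    for value in values:
        if value is not None:
            last = value
        filled.append(last)
    return filled


# Hypothetical Product column: the blanks are strings, not None.
product = ["Crude Oil", "", "", "Gasoline", " ", "Heating Oil"]

# Blanks count as real values, so filling down changes nothing.
unchanged = fill_down(product)

# Convert the blanks to None first, then fill down.
fixed = fill_down(blank_to_none(product))
```

The order matters for the same reason it does in the query: the replacement has to run before the fill, which is why the text inserts the Replace Values step ahead of the existing Filled Down step.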

Query Options

There are some controllable options available by selecting Data (tab) -> Queries & Connections (group) -> Refresh All -> Connection Properties…

Some of the more popular options include:

- Refresh the data every N number of minutes

- Refresh the data when opening the file

- Choosing whether the query takes part in a Refresh All operation
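To make the three options above concrete, here is a small Python model of them. It is purely illustrative: `ConnectionOptions`, its field names, and the “Archive” connection are assumptions invented for this sketch and do not correspond to an Excel API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ConnectionOptions:
    """Hypothetical model of three Connection Properties settings."""

    name: str
    refresh_every_minutes: Optional[int] = None  # None: no timed refresh
    refresh_on_open: bool = False                # refresh when the file opens
    refresh_with_refresh_all: bool = True        # take part in Refresh All


def names_for_refresh_all(connections):
    """Return the names of the connections a Refresh All would update."""
    return [c.name for c in connections if c.refresh_with_refresh_all]


connections = [
    ConnectionOptions("Spot Prices", refresh_every_minutes=60, refresh_on_open=True),
    ConnectionOptions("Archive", refresh_with_refresh_all=False),
]

refreshed = names_for_refresh_all(connections)
```

Opting a slow or rarely changing query out of Refresh All, as “Archive” does here, keeps routine refreshes fast while still allowing that query to be refreshed on its own.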

Example #2

Import Weather Forecast for the Next 10 Days

Imagine you work at the front desk of a popular hotel in New York City. As a service to your guests, you want to give them a printout of the weather forecast for the next 10 days.

This needs to be printed every day, with each day’s printout covering the following 10 days.

- We start by searching for a website that can supply a 10-day forecast for Seattle.

- We take the first offer in the search results that takes us to weather.com.

- Now that we have the web link to the 10-day forecast for New York City, we will copy and paste it into a web query in Excel (Data (tab) -> Get & Transform (group) -> From Web).

- Select the table from the left side of the Navigator window.

We can see from the preview that there are some columns towards the right that we are not interested in and the column headers have shifted.

- In the Navigator window, select Transform Data to load the forecast into Power Query.

- Begin editing the data by right-clicking the header of the “Day” column and selecting Remove.

- Select the last 3 columns (“Wind”, “Humidity”, and “Column7”) and remove them as well.

- Rename the remaining 3 columns.

- Description -> Day

- High / Low -> Description

- Precip -> High/Low

- Rename the query “SeattleWeather”.

- On the Home tab, select the lower-part of the Close & Load button and click Close & Load To… and select Existing Worksheet from the Import Data dialog box. Click OK when complete.

We now have the weather forecast for the next 10 days.

Each day, we only need to right-click the table and select Refresh to update the information.
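The column clean-up above (drop the unwanted columns, then rename the three that remain because the headers are shifted) can be sketched in Python as two small helpers over rows stored as dictionaries. The helper names and the sample row values are assumptions for illustration; only the column names come from the steps above.

```python
def drop_columns(rows, names):
    """Return the rows without the listed columns."""
    return [{k: v for k, v in row.items() if k not in names} for row in rows]


def rename_columns(rows, mapping):
    """Return the rows with column names replaced according to mapping."""
    return [{mapping.get(k, k): v for k, v in row.items()} for row in rows]


# Hypothetical imported row: the headers sit one column too far to the left.
raw = [
    {
        "Day": "",
        "Description": "Mon 16",
        "High / Low": "Rain",
        "Precip": "48/41",
        "Wind": "80%",
        "Humidity": "NE 10 mph",
        "Column7": "75%",
    }
]

# Remove the original "Day" column first so that the rename cannot collide with it.
trimmed = drop_columns(raw, {"Day", "Wind", "Humidity", "Column7"})

forecast = rename_columns(
    trimmed,
    {"Description": "Day", "High / Low": "Description", "Precip": "High/Low"},
)
```

Dropping before renaming mirrors the order of the steps in the text: if the old “Day” column were still present, renaming “Description” to “Day” would produce a duplicate name.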

Impressing the Boss

We really like the idea seen on the original weather.com website that displays a raincloud emoji for days that are expecting rain.

To bring a bit of fun to our report, we will have Excel display an umbrella emoji for any day that is forecasting rain or showers.

NOTE: This creative bit of Excel trickery is brought to us by our good friends Frédéric Le Guen and Oz du Soleil. Links to their blog and video, which detail various uses of this trick, can be found at the end of this post.

The first step is to add the umbrella emoji. We will access the built-in Windows emoji library.

- On the keyboard, press the Windows key and the period to display the emoji library.

- Type the word “rain” to filter the emoji library to rain-related emojis.

- Select the umbrella with raindrops.

- Highlight the umbrella emoji in the Formula Bar and press Copy (CTRL-C) then Enter.

- Double-click the “SeattleWeather” query to launch the Power Query Editor.

The next step is to create a new column that adds the umbrella emoji for any row that contains the words “rain” or “shower” in the Description column.

- Select Add Column (tab) -> General (group) -> Conditional Column.

- The name of the new column is “Be Equipped” and the logic is “if the column named ‘Description’ contains the word ‘rain’ then display the umbrella emoji”. (Paste the umbrella emoji into the Output)

- Click the Add Clause button to create a second condition.

- The second description’s logic is “else if the column named ‘Description’ contains the word ‘shower’ then display the umbrella emoji”. (Paste the umbrella emoji into the Output)

- Click OK to add the new conditional column.

- Drag the header for “Be Equipped” so the new column lies between the “Description” and “High/Low” columns.

- Close & Load the updated query back into Excel.

Tomorrow, you only need to right-click the table and select Refresh to load the updated 10-day weather forecast.
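The logic of the “Be Equipped” conditional column can be written out as a short Python function. This is a sketch of the rule described above, not the M code that Power Query generates. One deliberate assumption: the text is lowercased before the test so that “Rain” and “rain” both match; the steps above do not say how the dialog treats letter case. The sample rows are made up.

```python
UMBRELLA = "\u2614"  # the "umbrella with rain drops" emoji


def be_equipped(description):
    """Return the umbrella emoji for rainy descriptions, otherwise None."""
    text = description.lower()  # assumption: match regardless of letter case
    if "rain" in text:          # first clause of the conditional column
        return UMBRELLA
    elif "shower" in text:      # second clause, added with Add Clause
        return UMBRELLA
    return None                 # no clause matched: leave the cell empty


# Hypothetical forecast rows.
forecast = [
    {"Day": "Mon", "Description": "Rain"},
    {"Day": "Tue", "Description": "AM Showers"},
    {"Day": "Wed", "Description": "Sunny"},
]

for row in forecast:
    row["Be Equipped"] = be_equipped(row["Description"])
```

Both clauses return the same output, so they could be merged into one test; they are kept separate here to match the two clauses created in the dialog.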

Interesting Links

Frédéric Le Guen’s blog post on adding emojis to your reports

Oz’s video – Emojis, Excel, Power Query & Dynamic Arrays

Practice Workbook

Feel free to Download the Workbook HERE.

Published on: December 15, 2019

Last modified: March 10, 2023

Leila Gharani

I’m a 5x Microsoft MVP with over 15 years of experience implementing and professionals on Management Information Systems of different sizes and nature.

My background is Masters in Economics, Economist, Consultant, Oracle HFM Accounting Systems Expert, SAP BW Project Manager. My passion is teaching, experimenting and sharing. I am also addicted to learning and enjoy taking online courses on a variety of topics.


Contents

- 1 Can you import data from a website into Excel?

- 2 How do I import data from HTML to Excel?

- 3 How do I scrape data from a website in Excel?

- 4 How do you pull data from a website into Excel using macro?

- 5 How do you collect data from a website?

- 6 How do I extract a CSV file from a website?

- 7 Is it legal to scrape data from websites?

- 8 How do I extract text from a website?

- 9 What are the 4 methods of data collection?

- 10 How do you pull data from a website using python?

- 11 How do I convert HTML to csv?

- 12 How do I export all URL from a website?

- 13 How do I display csv data on a website?

- 14 Is web crawling illegal?

- 15 Why is web scraping bad?

- 16 Is API web scraping?

- 17 What is Web scraping?

- 18 What are the 5 types of data?

- 19 What are the 3 data gathering techniques?

- 20 What are the 5 methods of collecting data?

Can you import data from a website into Excel?

You can easily import a table of data from a web page into Excel, and regularly update the table with live data. Open a worksheet in Excel. From the Data menu, select either Import External Data or Get External Data. Choose the table of data you wish to import, then click the Import button.

How do I import data from HTML to Excel?

On the File menu, click Import. In the Import dialog box, click the option for the type of file that you want to import, and then click Import. In the Choose a File dialog box, locate and click the CSV, HTML, or text file that you want to use as an external data range, and then click Get Data.

How do I scrape data from a website in Excel?

Excel Web Scraping Explained

- Select the cell in which you want the data to appear.

- Click on Data > From Web.

- The New Web query box will pop up as shown below.

- Enter the web page URL you need to extract data from in the Address bar and hit the Go button.

How do you pull data from a website into Excel using macro?

Using Excel Web Queries to Get Web Data

- Go to Data -> Get External Data -> From Web.

- A window named “New Web Query” will pop up.

- Enter the web address into the “Address” text box.

- The page will load and show yellow icons against data/tables.

- Click the yellow arrow icon next to each table you want to select.

How do you collect data from a website?

- Review your website traffic reports.

- Ask your website designer to add a data form to your business website.

- Retrieve data from your merchant processor.

- Create an email registration form.

- Use cookies data.

How do I extract a CSV file from a website?

Click the button that says “Select an element to inspect it”. Hover over or click the HTML table. The highlighted source code corresponds to this table. Right-click the highlighted source code and click “Copy XPath”.
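Once you have an XPath for the table, a script can use it to locate the table and write its rows as CSV. The sketch below uses only Python’s standard library and rests on several assumptions: the page fragment, the XPath, and the prices are made up, and `xml.etree.ElementTree` supports only a subset of XPath and requires well-formed markup, which many real pages are not.

```python
import csv
import io
import xml.etree.ElementTree as ET

# Hypothetical, well-formed page fragment with made-up values.
PAGE = """
<html><body>
  <div id="main">
    <table>
      <tr><th>City</th><th>Price</th></tr>
      <tr><td>Delhi</td><td>96.72</td></tr>
      <tr><td>Mumbai</td><td>106.31</td></tr>
    </table>
  </div>
</body></html>
"""

# A path of the kind the browser copies, written relative to the root <html>.
TABLE_XPATH = "./body/div[@id='main']/table"


def table_to_csv(page, xpath):
    """Find the table at xpath and return its rows as CSV text."""
    root = ET.fromstring(page.strip())
    table = root.find(xpath)
    if table is None:
        raise ValueError("no element matches " + xpath)
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    for tr in table.iter("tr"):
        # Each child of <tr> is a <th> or <td> cell.
        writer.writerow([(cell.text or "").strip() for cell in tr])
    return buffer.getvalue()


csv_text = table_to_csv(PAGE, TABLE_XPATH)
```

Writing `csv_text` to a file with a `.csv` extension gives a file that Excel can open directly.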

Is it legal to scrape data from websites?

It is perfectly legal if you scrape data from websites for public consumption and use it for analysis. However, it is not legal if you scrape confidential information for profit. For example, scraping private contact information without permission and selling it to a third party for profit is illegal.

How do I extract text from a website?

Click and drag to select the text on the Web page you want to extract and press “Ctrl-C” to copy the text. Open a text editor or document program and press “Ctrl-V” to paste the text from the Web page into the text file or document window. Save the text file or document to your computer.

What are the 4 methods of data collection?

Data may be grouped into four main types based on methods for collection: observational, experimental, simulation, and derived.

How do you pull data from a website using python?

To extract data using web scraping with python, you need to follow these basic steps:

- Find the URL that you want to scrape.

- Inspecting the Page.

- Find the data you want to extract.

- Write the code.

- Run the code and extract the data.

- Store the data in the required format.
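The steps above can be sketched with Python’s standard library alone. Everything specific here is an assumption for illustration: the choice to extract `<h2>` headings, the helper names, the sample page, and JSON as the “required format”. The `fetch` function is defined but not called, so the example runs without network access.

```python
import json
from html.parser import HTMLParser
from urllib.request import urlopen


def fetch(url):
    """Download a page and return its HTML as text."""
    with urlopen(url, timeout=10) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


class HeadingParser(HTMLParser):
    """Collect the text of every <h2> element."""

    def __init__(self):
        super().__init__()
        self.headings = []
        self._buffer = None  # text fragments of the current <h2>, or None

    def handle_starttag(self, tag, attrs):
        if tag == "h2":
            self._buffer = []

    def handle_data(self, data):
        if self._buffer is not None:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == "h2" and self._buffer is not None:
            self.headings.append("".join(self._buffer).strip())
            self._buffer = None


def extract_headings(html):
    """Run the parser over the page and return the headings found."""
    parser = HeadingParser()
    parser.feed(html)
    return parser.headings


def to_json(headings):
    """Store the result in the required format (JSON in this sketch)."""
    return json.dumps({"headings": headings}, indent=2)


# Sample page standing in for fetch("https://...") so no network is needed.
SAMPLE = "<html><body><h2>Petrol</h2><p>text</p><h2>Diesel</h2></body></html>"

headings = extract_headings(SAMPLE)
stored = to_json(headings)
```

For a live page you would replace `SAMPLE` with the result of `fetch(url)`, after checking that the site permits automated access.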

How do I convert HTML to csv?

How to convert HTML to CSV

- Upload html-file(s) Select files from Computer, Google Drive, Dropbox, URL or by dragging it on the page.

- Choose “to csv” Choose csv or any other format you need as a result (more than 200 formats supported)

- Download your csv.

How do I export all URL from a website?

Usage

- Go to Tools > Export All URLs to export URLs of your website.

- Select Post Type.

- Choose Data (e.g Post ID, Title, URLs, Categories)

- Apply Filters (e.g Post Status, Author, Post Range)

- Configure advance options (e.g exclude domain url, number of posts)

- Finally Select Export type and click on Export Now.

How do I display csv data on a website?

To display the data from a CSV file in a web browser, we can use PHP’s fgetcsv() function. A Comma Separated Values (CSV) file is a text file with a .csv extension that stores data in a tabular format, with the values in each row separated by commas.
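The same idea, reading CSV rows and emitting an HTML table, can be sketched in Python with the standard `csv` and `html` modules. This is an equivalent illustration rather than the PHP approach named above; the function name and sample data are assumptions, and the first CSV row is assumed to be the header.

```python
import csv
import html
import io


def csv_to_html_table(csv_text):
    """Render CSV text as an HTML table, treating the first row as the header."""
    rows = list(csv.reader(io.StringIO(csv_text)))
    if not rows:
        return "<table></table>"
    header, body = rows[0], rows[1:]
    lines = ["<table>"]
    # Escape every cell so characters such as & and < display correctly.
    lines.append("  <tr>" + "".join(f"<th>{html.escape(c)}</th>" for c in header) + "</tr>")
    for row in body:
        lines.append("  <tr>" + "".join(f"<td>{html.escape(c)}</td>" for c in row) + "</tr>")
    lines.append("</table>")
    return "\n".join(lines)


# Made-up sample data; the quoted field contains a character that needs escaping.
SAMPLE_CSV = 'City,Note\nDelhi,"A & B"\n'

table_html = csv_to_html_table(SAMPLE_CSV)
```

Escaping each cell with `html.escape` matters whenever the CSV comes from an untrusted source, because raw cell text could otherwise inject markup into the page.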

Is web crawling illegal?

Web scraping and crawling aren’t illegal by themselves. Web scraping started in a legal grey area where the use of bots to scrape a website was simply a nuisance. Not much could be done about the practice until in 2000 eBay filed a preliminary injunction against Bidder’s Edge.

Why is web scraping bad?

Site scraping can be a powerful tool. In the right hands, it automates the gathering and dissemination of information. In the wrong hands, it can lead to theft of intellectual property or an unfair competitive edge.

Is API web scraping?

Web scraping allows you to extract data from any website through the use of web scraping software. On the other hand, APIs give you direct access to the data you’d want. In these scenarios, web scraping would allow you to access the data as long as it is available on a website.

What is Web scraping?

Web scraping is the process of using bots to extract content and data from a website. Unlike screen scraping, which only copies pixels displayed onscreen, web scraping extracts underlying HTML code and, with it, data stored in a database. The scraper can then replicate entire website content elsewhere.

What are the 5 types of data?

Common data types include:

- Integer.

- Floating-point number.

- Character.

- String.

- Boolean.

What are the 3 data gathering techniques?

Under the main three basic groups of research methods (quantitative, qualitative and mixed), there are different tools that can be used to collect data.

What are the 5 methods of collecting data?

Here are the top six data collection methods:

- Interviews.

- Questionnaires and surveys.

- Observations.

- Documents and records.

- Focus groups.

- Oral histories.