This article is about the worldwide computer network. For the global system of pages accessed via URLs, see World Wide Web. For other uses, see Internet (disambiguation).

The Internet (or internet)[a] is the global system of interconnected computer networks that uses the Internet protocol suite (TCP/IP)[b] to communicate between networks and devices. It is a network of networks that consists of private, public, academic, business, and government networks of local to global scope, linked by a broad array of electronic, wireless, and optical networking technologies. The Internet carries a vast range of information resources and services, such as the interlinked hypertext documents and applications of the World Wide Web (WWW), electronic mail, telephony, and file sharing.

The origins of the Internet date back to the development of packet switching and research commissioned by the United States Department of Defense in the late 1960s to enable time-sharing of computers.[2] The primary precursor network, the ARPANET, initially served as a backbone for the interconnection of regional academic and military networks in the 1970s to enable resource sharing. The funding of the National Science Foundation Network as a new backbone in the 1980s, as well as private funding for other commercial extensions, led to worldwide participation in the development of new networking technologies, and the merger of many networks.[3] The linking of commercial networks and enterprises by the early 1990s marked the beginning of the transition to the modern Internet,[4] and generated a sustained exponential growth as generations of institutional, personal, and mobile computers were connected to the network. Although the Internet was widely used by academia in the 1980s, commercialization incorporated its services and technologies into virtually every aspect of modern life.

Most traditional communication media, including telephone, radio, television, paper mail, and newspapers, are reshaped, redefined, or even bypassed by the Internet, giving birth to new services such as email, Internet telephone, Internet television, online music, digital newspapers, and video streaming websites. Newspaper, book, and other print publishing have adapted to website technology or have been reshaped into blogging, web feeds, and online news aggregators. The Internet has enabled and accelerated new forms of personal interaction through instant messaging, Internet forums, and social networking services. Online shopping has grown exponentially for major retailers, small businesses, and entrepreneurs, as it enables firms to extend their «brick and mortar» presence to serve a larger market or even sell goods and services entirely online. Business-to-business and financial services on the Internet affect supply chains across entire industries.

The Internet has no single centralized governance in either technological implementation or policies for access and usage; each constituent network sets its own policies.[5] The overarching definitions of the two principal name spaces on the Internet, the Internet Protocol address (IP address) space and the Domain Name System (DNS), are directed by a maintainer organization, the Internet Corporation for Assigned Names and Numbers (ICANN). The technical underpinning and standardization of the core protocols is an activity of the Internet Engineering Task Force (IETF), a non-profit organization of loosely affiliated international participants that anyone may associate with by contributing technical expertise.[6] In November 2006, the Internet was included on USA Today’s list of New Seven Wonders.[7]

Terminology

The word internetted was used as early as 1849, meaning interconnected or interwoven.[8] The word Internet was used in 1945 by the United States War Department in a radio operator’s manual,[9] and in 1974 as the shorthand form of Internetwork.[10] Today, the term Internet most commonly refers to the global system of interconnected computer networks, though it may also refer to any group of smaller networks.[11]

When it came into common use, most publications treated the word Internet as a capitalized proper noun; this has become less common.[11] This reflects the tendency in English to capitalize new terms and move to lowercase as they become familiar.[11][12] The word is sometimes still capitalized to distinguish the global internet from smaller networks, though many publications, including the AP Stylebook since 2016, recommend the lowercase form in every case.[11][12] In 2016, the Oxford English Dictionary found that, based on a study of around 2.5 billion printed and online sources, «Internet» was capitalized in 54% of cases.[13]

The terms Internet and World Wide Web are often used interchangeably; it is common to speak of «going on the Internet» when using a web browser to view web pages. However, the World Wide Web or the Web is only one of a large number of Internet services,[14] a collection of documents (web pages) and other web resources, linked by hyperlinks and URLs.[15]

History

In the 1960s, the Advanced Research Projects Agency (ARPA) of the United States Department of Defense (DoD) funded research into time-sharing of computers.[16][17][18] J. C. R. Licklider proposed the idea of a universal network while leading the Information Processing Techniques Office (IPTO) at ARPA. Research into packet switching, one of the fundamental Internet technologies, started in the work of Paul Baran in the early 1960s and, independently, Donald Davies in 1965.[2][19] After the Symposium on Operating Systems Principles in 1967, packet switching from the proposed NPL network was incorporated into the design for the ARPANET and other resource sharing networks such as the Merit Network and CYCLADES, which were developed in the late 1960s and early 1970s.[20]

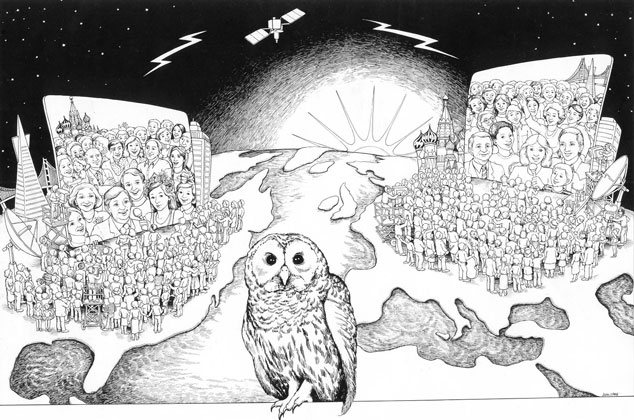

ARPANET development began with two network nodes which were interconnected between the University of California, Los Angeles (UCLA) and SRI International (SRI) on 29 October 1969.[21] The third site was at the University of California, Santa Barbara, followed by the University of Utah. In a sign of future growth, 15 sites were connected to the young ARPANET by the end of 1971.[22][23] These early years were documented in the 1972 film Computer Networks: The Heralds of Resource Sharing.[24] Thereafter, the ARPANET gradually developed into a decentralized communications network, connecting remote centers and military bases in the United States.[25]

Early international collaborations for the ARPANET were rare. Connections were made in 1973 to the Norwegian Seismic Array (NORSAR),[26] and to University College London which provided a gateway to British academic networks forming the first international resource sharing network.[27] ARPA projects, international working groups and commercial initiatives led to the development of various protocols and standards by which multiple separate networks could become a single network or «a network of networks».[28] In 1974, Bob Kahn at DARPA and Vint Cerf at Stanford University published their ideas for «A Protocol for Packet Network Intercommunication».[29] They used the term internet as a shorthand for internetwork in RFC 675,[10] and later RFCs repeated this use.[30] Kahn and Cerf credit Louis Pouzin with important influences on the resulting TCP/IP design.[31] National PTTs and commercial providers developed the X.25 standard and deployed it on public data networks.[32]

Access to the ARPANET was expanded in 1981 when the National Science Foundation (NSF) funded the Computer Science Network (CSNET). In 1982, the Internet Protocol Suite (TCP/IP) was standardized, which permitted worldwide proliferation of interconnected networks. TCP/IP network access expanded again in 1986 when the National Science Foundation Network (NSFNet) provided access to supercomputer sites in the United States for researchers, first at speeds of 56 kbit/s and later at 1.5 Mbit/s and 45 Mbit/s.[33] The NSFNet expanded into academic and research organizations in Europe, Australia, New Zealand and Japan in 1988–89.[34][35][36][37] Although other network protocols such as UUCP and PTT public data networks had global reach well before this time, this marked the beginning of the Internet as an intercontinental network. Commercial Internet service providers (ISPs) emerged in 1989 in the United States and Australia.[38] The ARPANET was decommissioned in 1990.[39]

Steady advances in semiconductor technology and optical networking created new economic opportunities for commercial involvement in the expansion of the network in its core and for delivering services to the public. In mid-1989, MCI Mail and Compuserve established connections to the Internet, delivering email and public access products to the half million users of the Internet.[40] Just months later, on 1 January 1990, PSInet launched an alternate Internet backbone for commercial use, one of the networks that added to the core of the commercial Internet of later years. In March 1990, the first high-speed T1 (1.5 Mbit/s) link between the NSFNET and Europe was installed between Cornell University and CERN, allowing much more robust communications than were possible with satellites.[41] Six months later Tim Berners-Lee would begin writing WorldWideWeb, the first web browser, after two years of lobbying CERN management. By Christmas 1990, Berners-Lee had built all the tools necessary for a working Web: the HyperText Transfer Protocol (HTTP) 0.9,[42] the HyperText Markup Language (HTML), the first Web browser (which was also an HTML editor and could access Usenet newsgroups and FTP files), the first HTTP server software (later known as CERN httpd), the first web server,[43] and the first Web pages that described the project itself. In 1991 the Commercial Internet eXchange was founded, allowing PSInet to communicate with the other commercial networks CERFnet and Alternet. Stanford Federal Credit Union was the first financial institution to offer online Internet banking services to all of its members in October 1994.[44] In 1996, OP Financial Group, also a cooperative bank, became the second online bank in the world and the first in Europe.[45] By 1995, the Internet was fully commercialized in the U.S. when the NSFNet was decommissioned, removing the last restrictions on use of the Internet to carry commercial traffic.[46]

| Users | 2005 | 2010 | 2017 | 2019 | 2021 |
|---|---|---|---|---|---|
| World population[48] | 6.5 billion | 6.9 billion | 7.4 billion | 7.75 billion | 7.9 billion |
| Worldwide | 16% | 30% | 48% | 53.6% | 63% |
| In developing world | 8% | 21% | 41.3% | 47% | 57% |
| In developed world | 51% | 67% | 81% | 86.6% | 90% |

As technology advanced and commercial opportunities fueled reciprocal growth, the volume of Internet traffic started experiencing similar characteristics as that of the scaling of MOS transistors, exemplified by Moore’s law, doubling every 18 months. This growth, formalized as Edholm’s law, was catalyzed by advances in MOS technology, laser light wave systems, and noise performance.[49]

Since 1995, the Internet has tremendously impacted culture and commerce, including the rise of near instant communication by email, instant messaging, telephony (Voice over Internet Protocol or VoIP), two-way interactive video calls, and the World Wide Web[50] with its discussion forums, blogs, social networking services, and online shopping sites. Increasing amounts of data are transmitted at higher and higher speeds over fiber optic networks operating at 1 Gbit/s, 10 Gbit/s, or more. The Internet continues to grow, driven by ever greater amounts of online information and knowledge, commerce, entertainment and social networking services.[51] During the late 1990s, it was estimated that traffic on the public Internet grew by 100 percent per year, while the mean annual growth in the number of Internet users was thought to be between 20% and 50%.[52] This growth is often attributed to the lack of central administration, which allows organic growth of the network, as well as the non-proprietary nature of the Internet protocols, which encourages vendor interoperability and prevents any one company from exerting too much control over the network.[53] As of 31 March 2011, the estimated total number of Internet users was 2.095 billion (30.2% of world population).[54] It is estimated that in 1993 the Internet carried only 1% of the information flowing through two-way telecommunication. By 2000 this figure had grown to 51%, and by 2007 more than 97% of all telecommunicated information was carried over the Internet.[55]

Governance

The Internet is a global network that comprises many voluntarily interconnected autonomous networks. It operates without a central governing body. The technical underpinning and standardization of the core protocols (IPv4 and IPv6) is an activity of the Internet Engineering Task Force (IETF), a non-profit organization of loosely affiliated international participants that anyone may associate with by contributing technical expertise. To maintain interoperability, the principal name spaces of the Internet are administered by the Internet Corporation for Assigned Names and Numbers (ICANN). ICANN is governed by an international board of directors drawn from across the Internet technical, business, academic, and other non-commercial communities. ICANN coordinates the assignment of unique identifiers for use on the Internet, including domain names, IP addresses, application port numbers in the transport protocols, and many other parameters. Globally unified name spaces are essential for maintaining the global reach of the Internet. This role of ICANN distinguishes it as perhaps the only central coordinating body for the global Internet.[56]

Regional Internet registries (RIRs) were established for five regions of the world. The African Network Information Center (AfriNIC) for Africa, the American Registry for Internet Numbers (ARIN) for North America, the Asia-Pacific Network Information Centre (APNIC) for Asia and the Pacific region, the Latin American and Caribbean Internet Addresses Registry (LACNIC) for Latin America and the Caribbean region, and the Réseaux IP Européens – Network Coordination Centre (RIPE NCC) for Europe, the Middle East, and Central Asia were delegated to assign IP address blocks and other Internet parameters to local registries, such as Internet service providers, from a designated pool of addresses set aside for each region.

The National Telecommunications and Information Administration, an agency of the United States Department of Commerce, had final approval over changes to the DNS root zone until the IANA stewardship transition on 1 October 2016.[57][58][59][60] The Internet Society (ISOC) was founded in 1992 with a mission to «assure the open development, evolution and use of the Internet for the benefit of all people throughout the world».[61] Its members include individuals (anyone may join) as well as corporations, organizations, governments, and universities. Among other activities ISOC provides an administrative home for a number of less formally organized groups that are involved in developing and managing the Internet, including: the IETF, Internet Architecture Board (IAB), Internet Engineering Steering Group (IESG), Internet Research Task Force (IRTF), and Internet Research Steering Group (IRSG). On 16 November 2005, the United Nations-sponsored World Summit on the Information Society in Tunis established the Internet Governance Forum (IGF) to discuss Internet-related issues.

Infrastructure

2007 map showing submarine fiberoptic telecommunication cables around the world

The communications infrastructure of the Internet consists of its hardware components and a system of software layers that control various aspects of the architecture. As with any computer network, the Internet physically consists of routers, media (such as cabling and radio links), repeaters, modems, and similar equipment. However, as an example of internetworking, many of the network nodes are not necessarily internet equipment per se: the internet packets are carried by other full-fledged networking protocols, with the Internet acting as a homogeneous networking standard running across heterogeneous hardware, and the packets are guided to their destinations by IP routers.

Service tiers

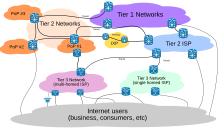

Packet routing across the Internet involves several tiers of Internet service providers.

Internet service providers (ISPs) establish the worldwide connectivity between individual networks at various levels of scope. End-users who only access the Internet when needed to perform a function or obtain information represent the bottom of the routing hierarchy. At the top of the routing hierarchy are the tier 1 networks, large telecommunication companies that exchange traffic directly with each other via very high speed fibre optic cables under peering agreements. Tier 2 and lower-level networks buy Internet transit from other providers to reach at least some parties on the global Internet, though they may also engage in peering. An ISP may use a single upstream provider for connectivity, or implement multihoming to achieve redundancy and load balancing. Internet exchange points are major traffic exchanges with physical connections to multiple ISPs. Large organizations, such as academic institutions, large enterprises, and governments, may perform the same function as ISPs, engaging in peering and purchasing transit on behalf of their internal networks. Research networks tend to interconnect with large subnetworks such as GEANT, GLORIAD, Internet2, and the UK’s national research and education network, JANET.

Access

Common methods of Internet access by users include dial-up with a computer modem via telephone circuits, broadband over coaxial cable, fiber optics or copper wires, Wi-Fi, satellite, and cellular telephone technology (e.g. 3G, 4G). The Internet may often be accessed from computers in libraries and Internet cafes. Internet access points exist in many public places such as airport halls and coffee shops. Various terms are used, such as public Internet kiosk, public access terminal, and Web payphone. Many hotels also have public terminals that are usually fee-based. These terminals are widely accessed for various usages, such as ticket booking, bank deposit, or online payment. Wi-Fi provides wireless access to the Internet via local computer networks. Hotspots providing such access include Wi-Fi cafes, where users need to bring their own wireless devices such as a laptop or PDA. These services may be free to all, free to customers only, or fee-based.

Grassroots efforts have led to wireless community networks. Commercial Wi-Fi services that cover large areas are available in many cities, such as New York, London, Vienna, Toronto, San Francisco, Philadelphia, Chicago and Pittsburgh, where the Internet can then be accessed from places such as a park bench.[62] Experiments have also been conducted with proprietary mobile wireless networks like Ricochet, various high-speed data services over cellular networks, and fixed wireless services. Modern smartphones can also access the Internet through the cellular carrier network. For Web browsing, these devices provide applications such as Google Chrome, Safari, and Firefox and a wide variety of other Internet software may be installed from app-stores. Internet usage by mobile and tablet devices exceeded desktop worldwide for the first time in October 2016.[63]

Mobile communication

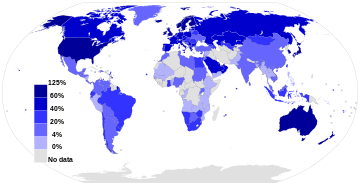

Number of mobile cellular subscriptions 2012–2016

The International Telecommunication Union (ITU) estimated that, by the end of 2017, 48% of individual users regularly connect to the Internet, up from 34% in 2012.[64] Mobile Internet connectivity has played an important role in expanding access in recent years, especially in Asia and the Pacific and in Africa.[65] The number of unique mobile cellular subscriptions increased from 3.89 billion in 2012 to 4.83 billion in 2016, two-thirds of the world’s population, with more than half of subscriptions located in Asia and the Pacific. The number of subscriptions is predicted to rise to 5.69 billion users in 2020.[66] As of 2016, almost 60% of the world’s population had access to a 4G broadband cellular network, up from almost 50% in 2015 and 11% in 2012.[66] The limits that users face on accessing information via mobile applications coincide with a broader process of fragmentation of the Internet. Fragmentation restricts access to media content and tends to affect poorest users the most.[65]

Zero-rating, the practice of Internet service providers allowing users to access specific content or applications without cost, has offered opportunities to surmount economic hurdles, but has also been accused by its critics of creating a two-tiered Internet. To address the issues with zero-rating, an alternative model has emerged in the concept of ‘equal rating’ and is being tested in experiments by Mozilla and Orange in Africa. Equal rating prevents prioritization of one type of content and zero-rates all content up to a specified data cap. According to a study published by Chatham House, 15 out of 19 countries researched in Latin America had some kind of hybrid or zero-rated product offered. Some countries in the region had a handful of plans to choose from (across all mobile network operators) while others, such as Colombia, offered as many as 30 pre-paid and 34 post-paid plans.[67]

A study of eight countries in the Global South found that zero-rated data plans exist in every country, although there is a great range in the frequency with which they are offered and actually used in each.[68] The study looked at the top three to five carriers by market share in Bangladesh, Colombia, Ghana, India, Kenya, Nigeria, Peru and Philippines. Across the 181 plans examined, 13 per cent were offering zero-rated services. Another study, covering Ghana, Kenya, Nigeria and South Africa, found Facebook’s Free Basics and Wikipedia Zero to be the most commonly zero-rated content.[69]

Internet Protocol Suite

The Internet standards describe a framework known as the Internet protocol suite (also called TCP/IP, based on its first two components). This is a suite of protocols that are ordered into a set of four conceptual layers by the scope of their operation, originally documented in RFC 1122 and RFC 1123. At the top is the application layer, where communication is described in terms of the objects or data structures most appropriate for each application. For example, a web browser operates in a client–server application model and exchanges information with the Hypertext Transfer Protocol (HTTP) and an application-germane data structure, such as the Hypertext Markup Language (HTML).

Below this top layer, the transport layer connects applications on different hosts with a logical channel through the network. It provides this service with a variety of possible characteristics, such as ordered, reliable delivery (TCP), and an unreliable datagram service (UDP).

Underlying these layers are the networking technologies that interconnect networks at their borders and exchange traffic across them. The Internet layer implements the Internet Protocol (IP) which enables computers to identify and locate each other by IP address, and route their traffic via intermediate (transit) networks.[70] The internet protocol layer code is independent of the type of network that it is physically running over.

At the bottom of the architecture is the link layer, which connects nodes on the same physical link, and contains protocols that do not require routers for traversal to other links. The protocol suite does not explicitly specify hardware methods to transfer bits, or protocols to manage such hardware, but assumes that appropriate technology is available. Examples of that technology include Wi-Fi, Ethernet, and DSL.

As user data is processed through the protocol stack, each abstraction layer adds encapsulation information at the sending host. Data is transmitted over the wire at the link level between hosts and routers. Encapsulation is removed by the receiving host. Intermediate relays update link encapsulation at each hop, and inspect the IP layer for routing purposes.
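The encapsulation steps described above can be illustrated with a toy model. This is only a sketch: the bracketed text tags stand in for real protocol headers, and the names `encapsulate`, `decapsulate`, and `relay` are invented for this illustration.

```python
# Toy illustration of encapsulation. The bracketed tags are invented
# placeholders, not real TCP, IP, or Ethernet header formats.
LAYERS = ["TCP", "IP", "ETH"]  # transport, internet, link (top to bottom)


def encapsulate(payload: bytes) -> bytes:
    """Sending host: each layer prepends its own header on the way down."""
    for layer in LAYERS:
        payload = f"[{layer}]".encode() + payload
    return payload


def decapsulate(frame: bytes) -> bytes:
    """Receiving host: strip headers from the outermost layer inward."""
    for layer in reversed(LAYERS):
        header = f"[{layer}]".encode()
        if not frame.startswith(header):
            raise ValueError(f"expected {layer} header")
        frame = frame[len(header):]
    return frame


def relay(frame: bytes, new_link_header: bytes = b"[ETH2]") -> bytes:
    """Intermediate router: swap only the link-layer header for the next hop."""
    old_link_header = b"[ETH]"
    if not frame.startswith(old_link_header):
        raise ValueError("expected link-layer header")
    return new_link_header + frame[len(old_link_header):]


frame = encapsulate(b"GET /")
print(frame)               # b'[ETH][IP][TCP]GET /'
print(relay(frame))        # b'[ETH2][IP][TCP]GET /'
print(decapsulate(frame))  # b'GET /'
```

The relay touches only the outermost tag, mirroring how routers rewrite link encapsulation at each hop while leaving the transport payload untouched.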

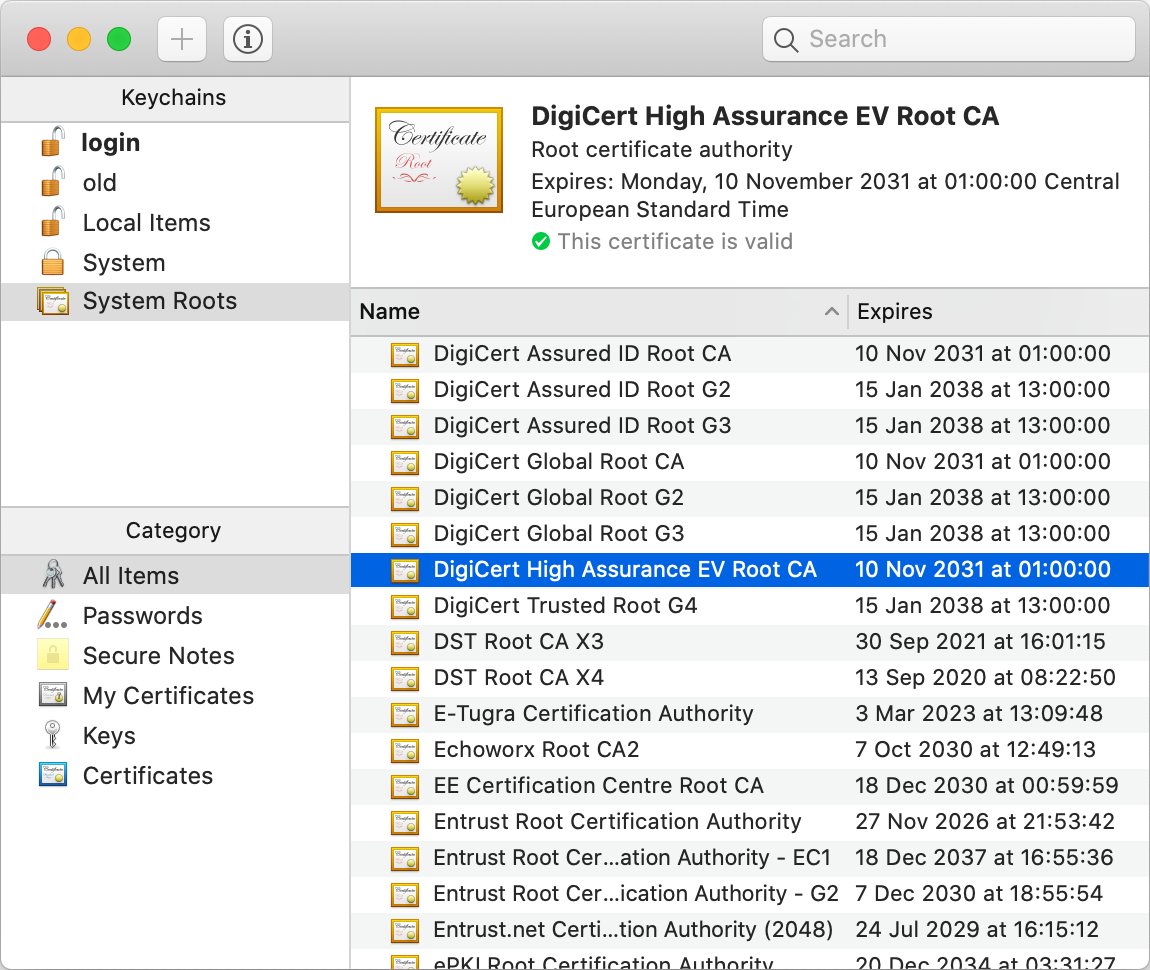

Internet protocol

Conceptual data flow in a simple network topology of two hosts (A and B) connected by a link between their respective routers. The application on each host executes read and write operations as if the processes were directly connected to each other by some kind of data pipe. After the establishment of this pipe, most details of the communication are hidden from each process, as the underlying principles of communication are implemented in the lower protocol layers. In analogy, at the transport layer the communication appears as host-to-host, without knowledge of the application data structures and the connecting routers, while at the internetworking layer, individual network boundaries are traversed at each router.

The most prominent component of the Internet model is the Internet Protocol (IP). IP enables internetworking and, in essence, establishes the Internet itself. Two versions of the Internet Protocol exist, IPv4 and IPv6.

IP Addresses

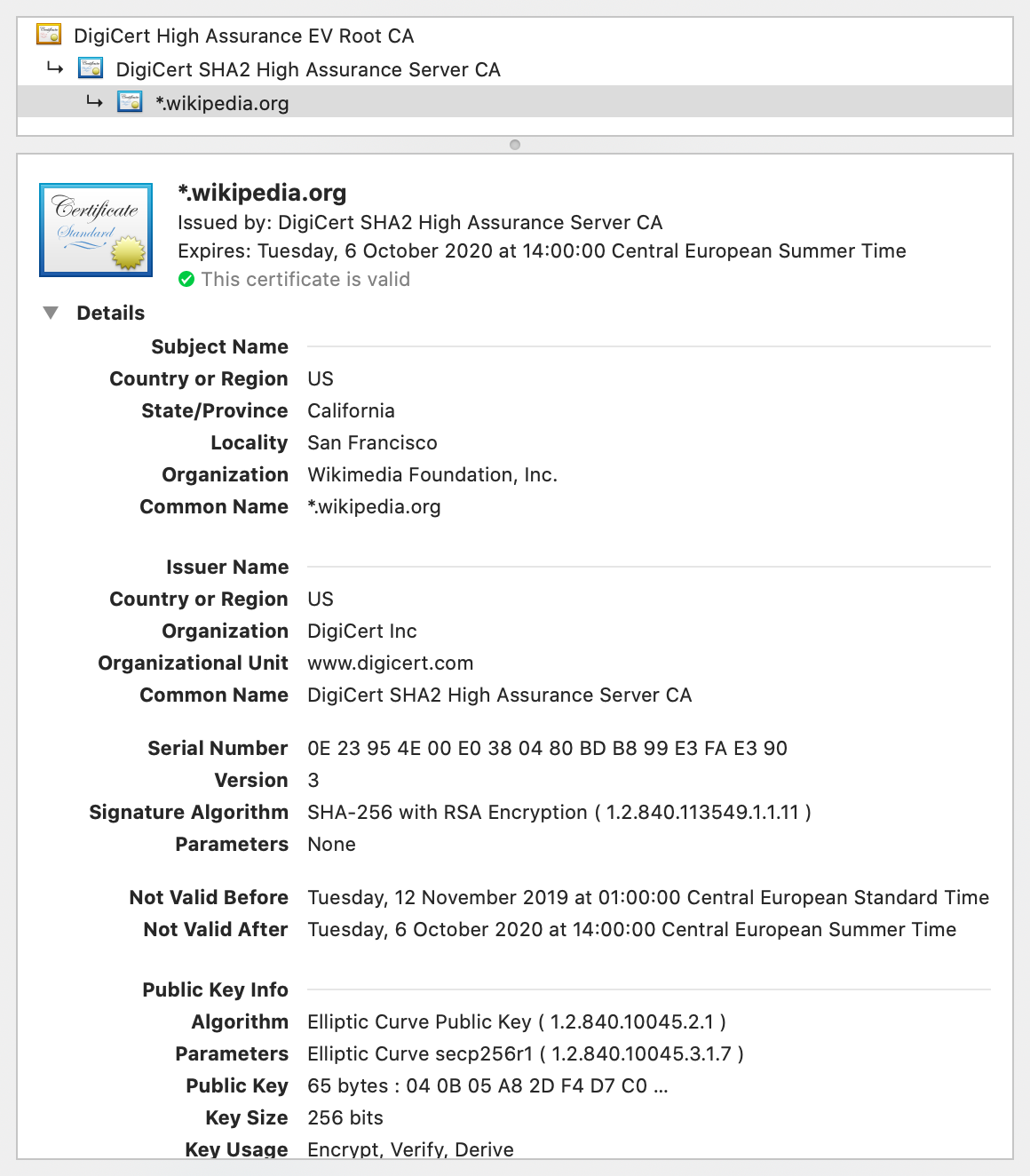

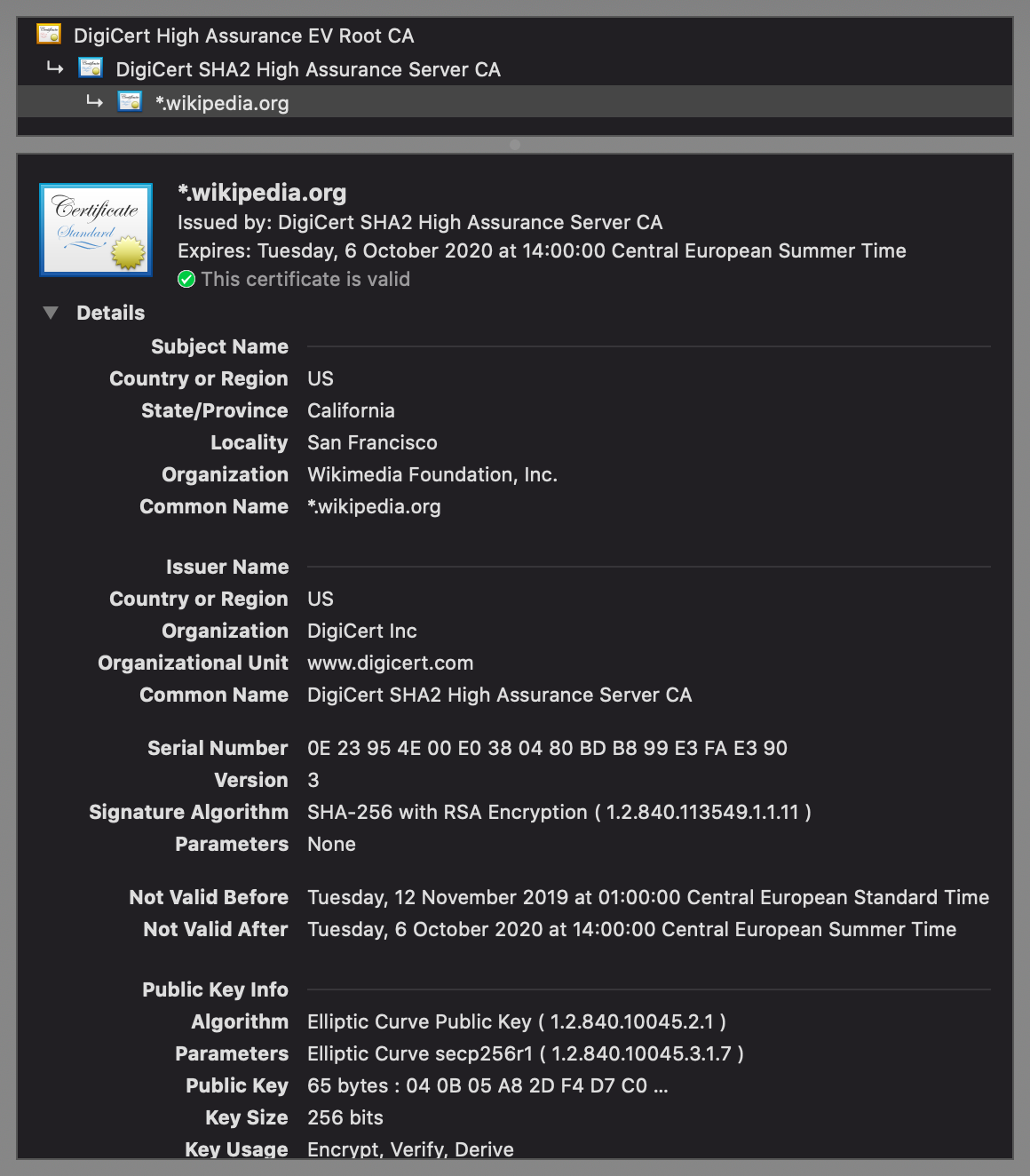

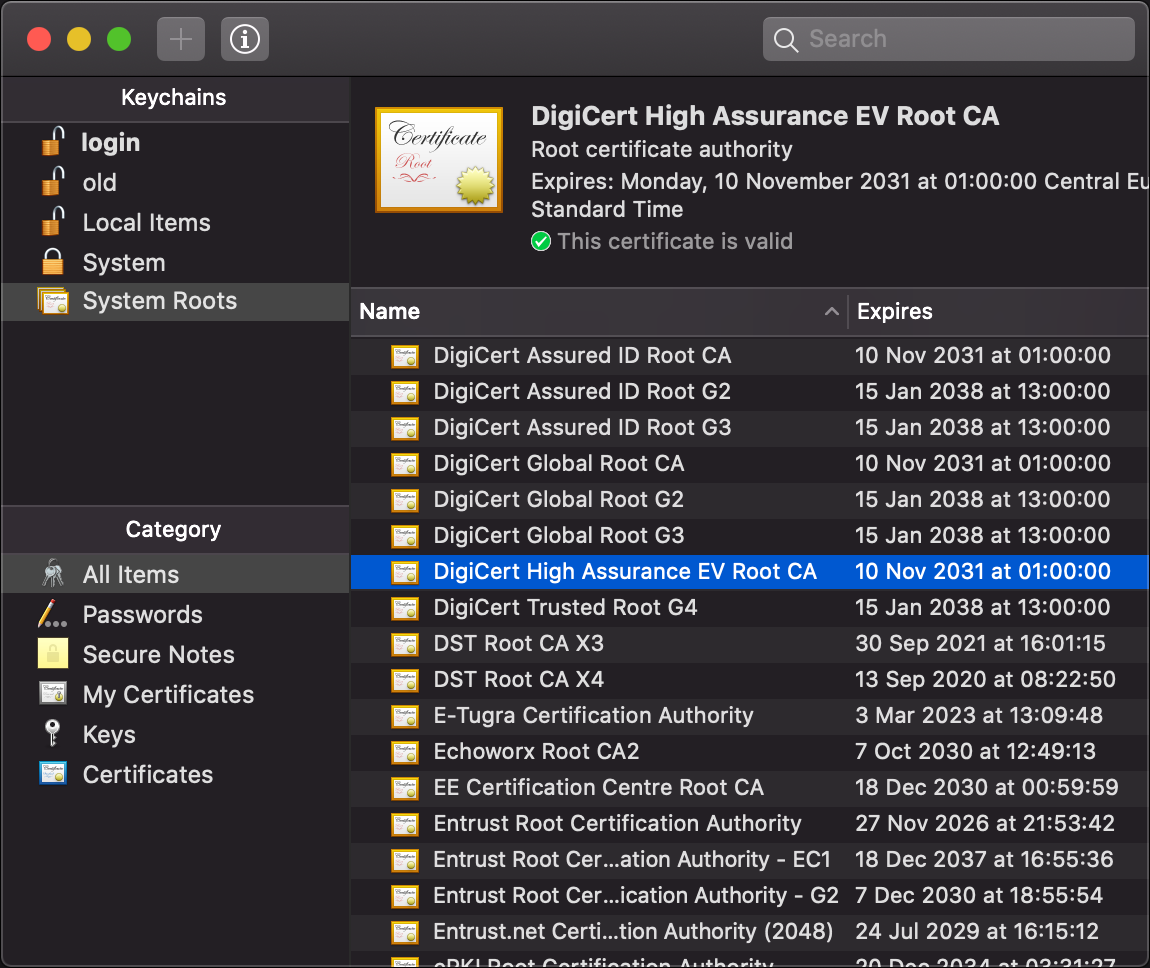

A DNS resolver consults three name servers to resolve the user-visible domain name «www.wikipedia.org» to the IPv4 address 207.142.131.234.

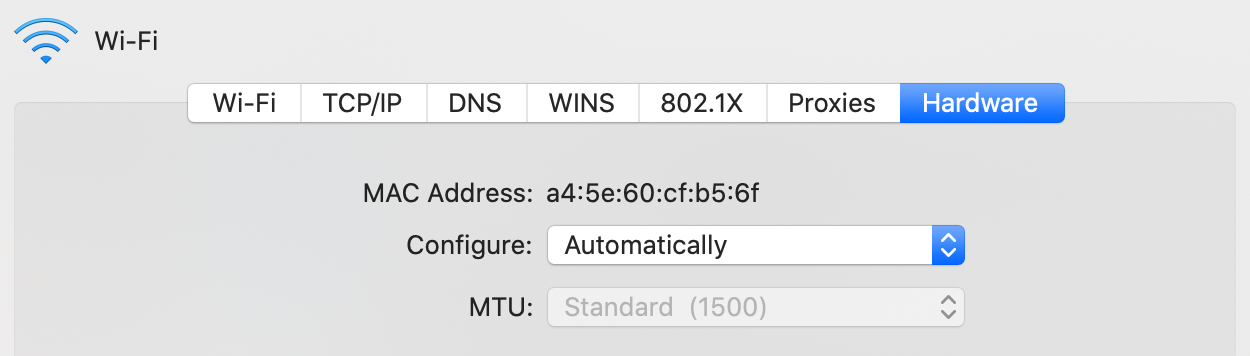

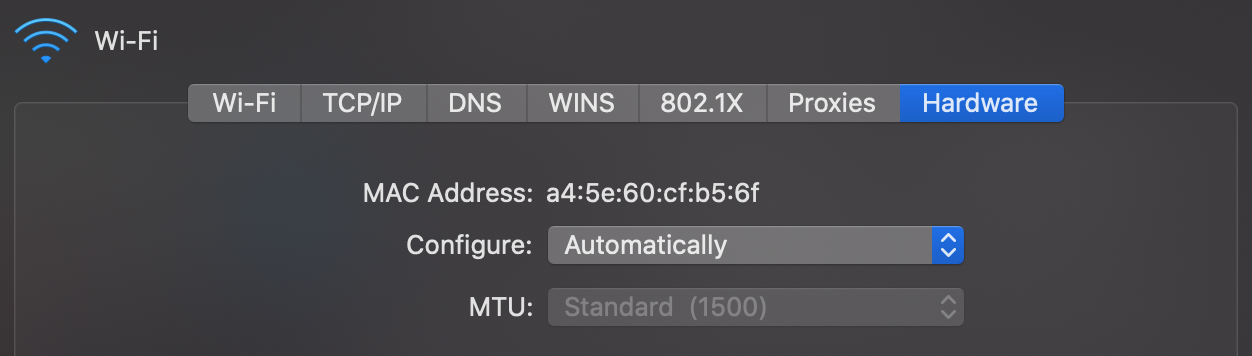

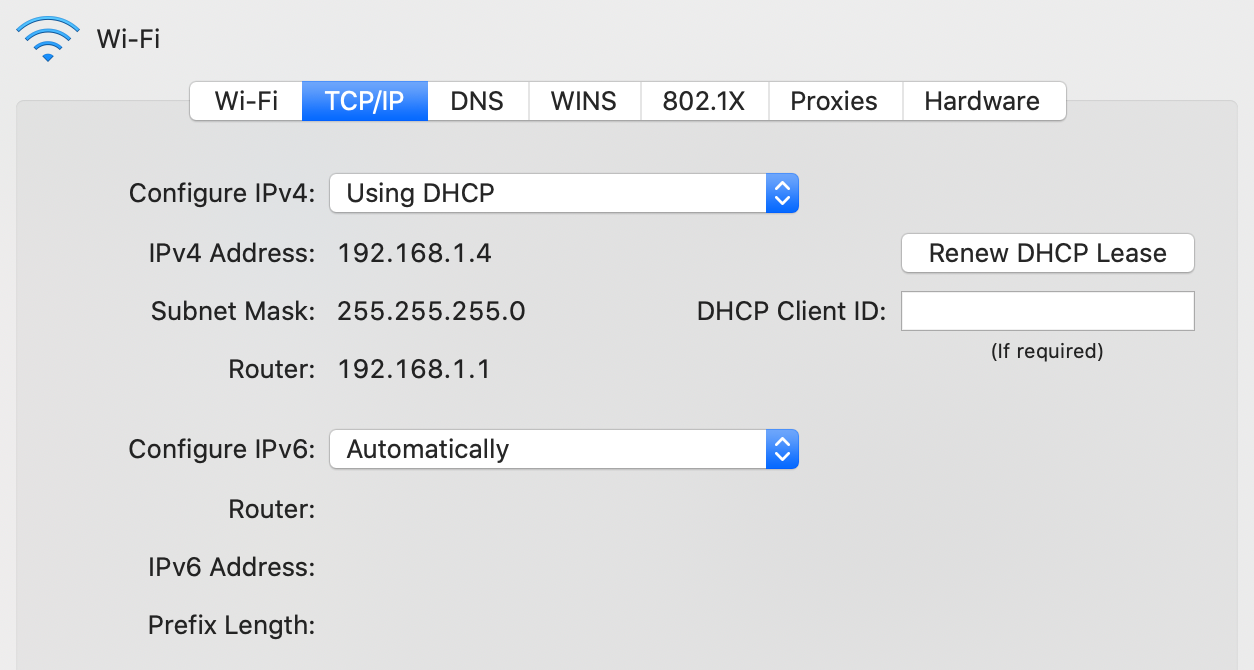

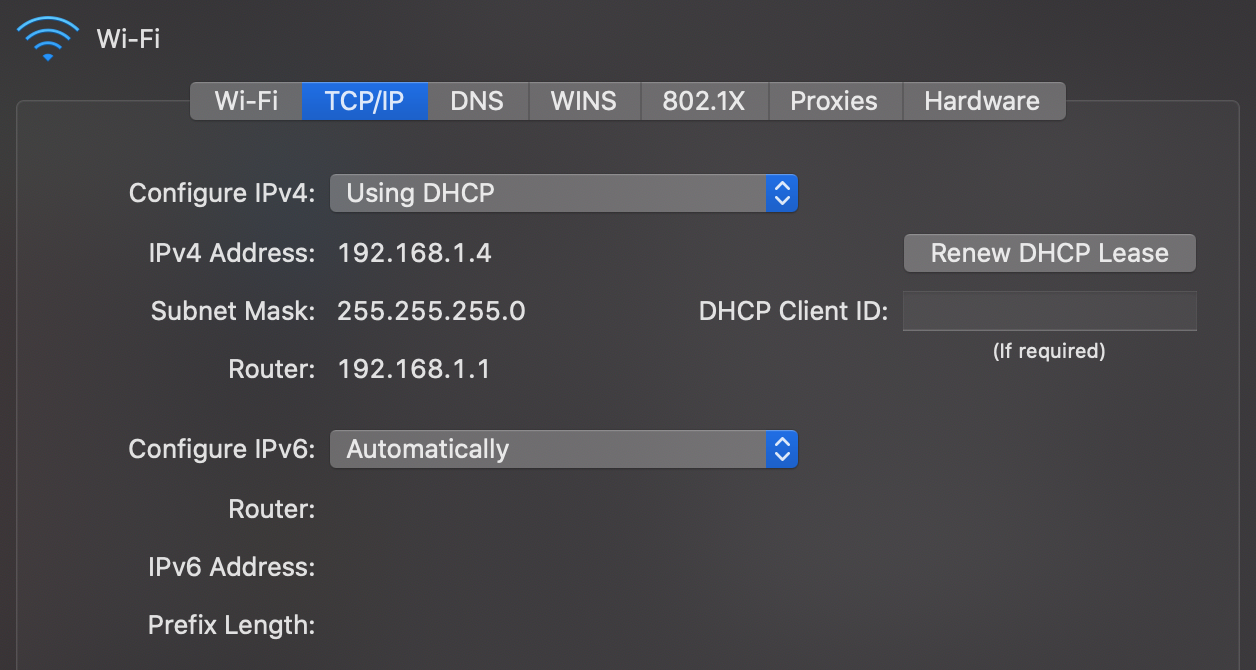

For locating individual computers on the network, the Internet provides IP addresses. IP addresses are used by the Internet infrastructure to direct internet packets to their destinations. They consist of fixed-length numbers, which are found within the packet. IP addresses are generally assigned to equipment either automatically via DHCP, or are configured.

However, the network also supports other addressing systems. Users generally enter domain names (e.g. «en.wikipedia.org») instead of IP addresses because they are easier to remember; the Domain Name System (DNS) converts them into IP addresses, which are more efficient for routing purposes.

IPv4

Internet Protocol version 4 (IPv4) defines an IP address as a 32-bit number.[70] IPv4 is the initial version used on the first generation of the Internet and is still in dominant use. It was designed to address up to ≈4.3 billion (10^9) hosts. However, the explosive growth of the Internet has led to IPv4 address exhaustion, which entered its final stage in 2011,[71] when the global IPv4 address allocation pool was exhausted.
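To make the 32-bit representation concrete, the following sketch uses Python's standard `ipaddress` module to convert between dotted-decimal notation and the underlying integer. The host address 198.51.100.7 is an arbitrary example chosen from a documentation range.

```python
import ipaddress

# Arbitrary example address from a range reserved for documentation.
addr = ipaddress.IPv4Address("198.51.100.7")

as_int = int(addr)                    # the address as a single 32-bit number
print(as_int)
print(f"{as_int:032b}")               # the same value as 32 binary digits
print(ipaddress.IPv4Address(as_int))  # back to dotted-decimal notation

# A 32-bit field allows 2**32 distinct values, roughly 4.3 billion.
print(2 ** 32)                        # 4294967296
```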

IPv6

Because of the growth of the Internet and the depletion of available IPv4 addresses, a new version of IP, IPv6, was developed in the mid-1990s, which provides vastly larger addressing capabilities and more efficient routing of Internet traffic. IPv6 uses 128 bits for the IP address and was standardized in 1998.[72][73][74] IPv6 deployment has been ongoing since the mid-2000s and continues to grow around the world, since Internet address registries (RIRs) began to urge all resource managers to plan rapid adoption and conversion.[75]
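The difference in address width can be seen with Python's standard `ipaddress` module. This is a small sketch; the address 2001:db8::1 is an assumed example taken from the IPv6 documentation prefix.

```python
import ipaddress

# Assumed example address inside the IPv6 documentation prefix.
addr6 = ipaddress.IPv6Address("2001:db8::1")

print(addr6.exploded)       # 2001:0db8:0000:0000:0000:0000:0000:0001
print(addr6.max_prefixlen)  # 128

# Each extra bit doubles the address space, so moving from 32 to 128 bits
# multiplies the number of possible addresses by 2**96.
print(2 ** 128 // 2 ** 32 == 2 ** 96)  # True
```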

IPv6 is not directly interoperable by design with IPv4. In essence, it establishes a parallel version of the Internet not directly accessible with IPv4 software. Thus, translation facilities must exist for internetworking or nodes must have duplicate networking software for both networks. Essentially all modern computer operating systems support both versions of the Internet Protocol. Network infrastructure, however, has been lagging in this development. Aside from the complex array of physical connections that make up its infrastructure, the Internet is facilitated by bi- or multi-lateral commercial contracts, e.g., peering agreements, and by technical specifications or protocols that describe the exchange of data over the network. Indeed, the Internet is defined by its interconnections and routing policies.

Subnetwork

Creating a subnet by dividing the host identifier

A subnetwork or subnet is a logical subdivision of an IP network.[76]: 1, 16 The practice of dividing a network into two or more networks is called subnetting.

Computers that belong to a subnet are addressed with an identical most-significant bit-group in their IP addresses. This results in the logical division of an IP address into two fields, the network number or routing prefix and the rest field or host identifier. The rest field is an identifier for a specific host or network interface.

The routing prefix may be expressed in Classless Inter-Domain Routing (CIDR) notation written as the first address of a network, followed by a slash character (/), and ending with the bit-length of the prefix. For example, 198.51.100.0/24 is the prefix of the Internet Protocol version 4 network starting at the given address, having 24 bits allocated for the network prefix, and the remaining 8 bits reserved for host addressing. Addresses in the range 198.51.100.0 to 198.51.100.255 belong to this network. The IPv6 address specification 2001:db8::/32 is a large address block with 2^96 addresses, having a 32-bit routing prefix.
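The two prefixes mentioned above can be checked with Python's standard `ipaddress` module, as a quick sketch of how CIDR notation maps to an address range:

```python
import ipaddress

net4 = ipaddress.ip_network("198.51.100.0/24")
print(net4.prefixlen)          # 24
print(net4.network_address)    # 198.51.100.0
print(net4.broadcast_address)  # 198.51.100.255 (last address in the range)
print(net4.num_addresses)      # 256, i.e. 2**8 for the 8 host bits

net6 = ipaddress.ip_network("2001:db8::/32")
print(net6.num_addresses == 2 ** 96)  # True: 128 - 32 = 96 host bits
```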

For IPv4, a network may also be characterized by its subnet mask or netmask, which is the bitmask that when applied by a bitwise AND operation to any IP address in the network, yields the routing prefix. Subnet masks are also expressed in dot-decimal notation like an address. For example, 255.255.255.0 is the subnet mask for the prefix 198.51.100.0/24.
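The bitwise AND described above can be written out directly. In this sketch, `routing_prefix` and `netmask_from_prefix_len` are illustrative helper names, and the host address 198.51.100.37 is an arbitrary example.

```python
import ipaddress


def routing_prefix(address: str, netmask: str) -> ipaddress.IPv4Address:
    """Apply the netmask to an address with a bitwise AND."""
    addr_bits = int(ipaddress.IPv4Address(address))
    mask_bits = int(ipaddress.IPv4Address(netmask))
    return ipaddress.IPv4Address(addr_bits & mask_bits)


def netmask_from_prefix_len(prefix_len: int) -> ipaddress.IPv4Address:
    """Build the mask: prefix_len one-bits followed by zero-bits."""
    mask_bits = (0xFFFFFFFF << (32 - prefix_len)) & 0xFFFFFFFF
    return ipaddress.IPv4Address(mask_bits)


print(netmask_from_prefix_len(24))                       # 255.255.255.0
print(routing_prefix("198.51.100.37", "255.255.255.0"))  # 198.51.100.0
```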

Traffic is exchanged between subnetworks through routers when the routing prefixes of the source address and the destination address differ. A router serves as a logical or physical boundary between the subnets.
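A host can decide whether a packet has to pass through a router by comparing routing prefixes. The sketch below assumes both addresses use the same prefix length; `needs_router` is a hypothetical helper name and the addresses are arbitrary documentation-range examples.

```python
import ipaddress


def needs_router(src: str, dst: str, prefix_len: int) -> bool:
    """Return True when source and destination routing prefixes differ."""
    src_net = ipaddress.ip_interface(f"{src}/{prefix_len}").network
    dst_net = ipaddress.ip_interface(f"{dst}/{prefix_len}").network
    return src_net != dst_net


# Same /24 prefix: the traffic stays inside the subnet.
print(needs_router("198.51.100.10", "198.51.100.200", 24))  # False
# Different prefixes: the traffic must cross a router.
print(needs_router("198.51.100.10", "203.0.113.5", 24))     # True
```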

The benefits of subnetting an existing network vary with each deployment scenario. In the address allocation architecture of the Internet using CIDR and in large organizations, it is necessary to allocate address space efficiently. Subnetting may also enhance routing efficiency, or have advantages in network management when subnetworks are administratively controlled by different entities in a larger organization. Subnets may be arranged logically in a hierarchical architecture, partitioning an organization’s network address space into a tree-like routing structure.
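The tree-like partitioning can be sketched with the `subnets` method of Python's standard `ipaddress` module. The split into "departments" and "teams" is an invented scenario for illustration only.

```python
import ipaddress

# Hypothetical organization holding a single /24 block.
org = ipaddress.ip_network("198.51.100.0/24")

# First level: four /26 subnets of 64 addresses each.
departments = list(org.subnets(new_prefix=26))
for dept in departments:
    print(dept, dept.num_addresses)

# Second level: split the first department into two /27 subnets.
teams = list(departments[0].subnets(prefixlen_diff=1))
print(teams)

# Every leaf is still contained in the organization's block.
print(all(team.subnet_of(org) for team in teams))  # True
```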

Routing

Computers and routers use routing tables in their operating system to direct IP packets to reach a node on a different subnetwork. Routing tables are maintained by manual configuration or automatically by routing protocols. End-nodes typically use a default route that points toward an ISP providing transit, while ISP routers use the Border Gateway Protocol to establish the most efficient routing across the complex connections of the global Internet. The default gateway is the node that serves as the forwarding host (router) to other networks when no other route specification matches the destination IP address of a packet.[77][78]
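A routing table lookup can be sketched as a longest-prefix match, with the default route (0.0.0.0/0) acting as the fallback when nothing more specific matches. The table contents and interface names below are invented for illustration, and real operating systems use far more efficient data structures than a linear scan.

```python
import ipaddress

# Hypothetical routing table: (destination prefix, next hop or interface).
ROUTES = [
    (ipaddress.ip_network("0.0.0.0/0"), "default gateway"),
    (ipaddress.ip_network("198.51.100.0/24"), "eth0"),
    (ipaddress.ip_network("198.51.100.128/25"), "eth1"),
]


def next_hop(destination: str) -> str:
    """Pick the matching route with the longest (most specific) prefix."""
    dest = ipaddress.IPv4Address(destination)
    matches = [(net, hop) for net, hop in ROUTES if dest in net]
    # The default route matches every IPv4 address, so matches is never empty.
    _, hop = max(matches, key=lambda route: route[0].prefixlen)
    return hop


print(next_hop("198.51.100.200"))  # eth1 (the /25 is more specific than the /24)
print(next_hop("198.51.100.5"))    # eth0
print(next_hop("203.0.113.9"))     # default gateway
```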

IETF

While the hardware components in the Internet infrastructure can often be used to support other software systems, it is the design and the standardization process of the software that characterizes the Internet and provides the foundation for its scalability and success. The responsibility for the architectural design of the Internet software systems has been assumed by the Internet Engineering Task Force (IETF).[79] The IETF conducts standard-setting work groups, open to any individual, about the various aspects of Internet architecture. The resulting contributions and standards are published as Request for Comments (RFC) documents on the IETF web site. The principal methods of networking that enable the Internet are contained in specially designated RFCs that constitute the Internet Standards. Other less rigorous documents are simply informative, experimental, or historical, or document the best current practices (BCP) when implementing Internet technologies.

Applications and services

The Internet carries many applications and services, most prominently the World Wide Web, including social media, electronic mail, mobile applications, multiplayer online games, Internet telephony, file sharing, and streaming media services.

Most servers that provide these services are today hosted in data centers, and content is often accessed through high-performance content delivery networks.

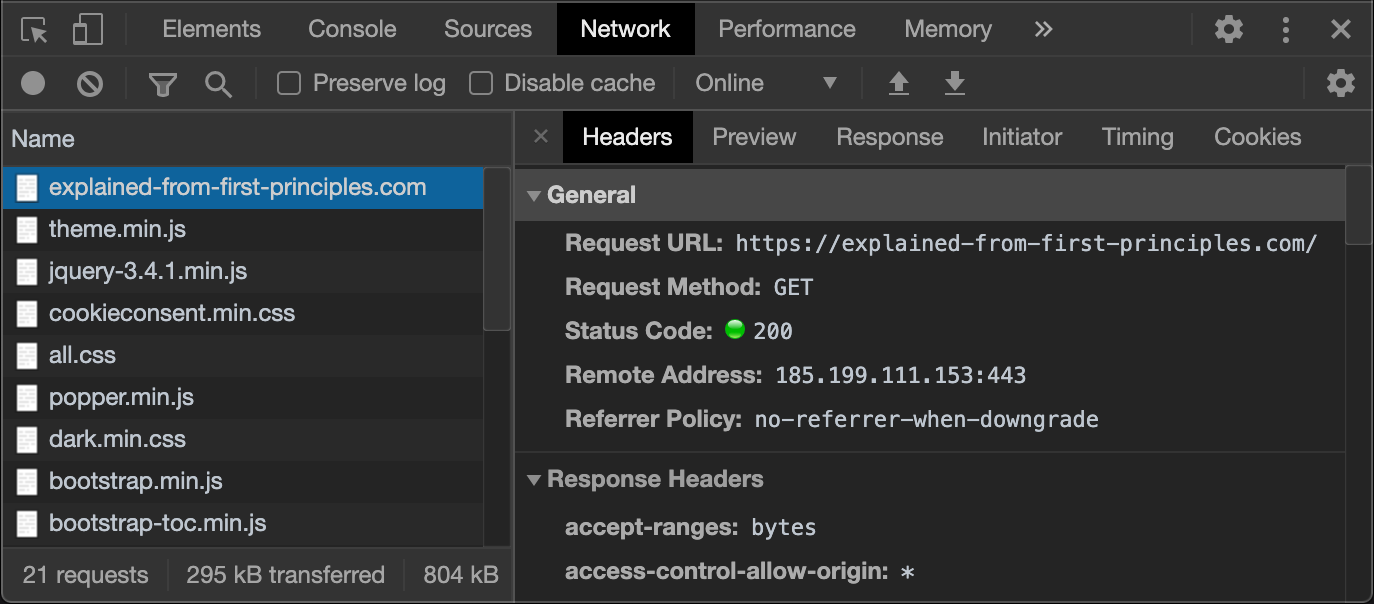

World Wide Web

The World Wide Web is a global collection of documents, images, multimedia, applications, and other resources, logically interrelated by hyperlinks and referenced with Uniform Resource Identifiers (URIs), which provide a global system of named references. URIs symbolically identify services, web servers, databases, and the documents and resources that they can provide. Hypertext Transfer Protocol (HTTP) is the main access protocol of the World Wide Web. Web services also use HTTP for communication between software systems, for example to share and exchange business and logistics data, and HTTP is one of many languages or protocols that can be used for communication on the Internet.[80]
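To make the relationship between a URI and an HTTP request more concrete, the sketch below splits a URI into its components and builds the text of a minimal HTTP/1.1 GET request from them. The URI and the helper name `build_get_request` are illustrative assumptions; no network connection is made, and real clients send additional headers.

```python
from urllib.parse import urlsplit

def build_get_request(uri: str) -> str:
    """Build the text of a minimal HTTP/1.1 GET request for an http(s) URI."""
    parts = urlsplit(uri)
    target = parts.path or "/"          # an empty path means the root resource
    if parts.query:
        target += "?" + parts.query
    return (
        f"GET {target} HTTP/1.1\r\n"
        f"Host: {parts.netloc}\r\n"     # names the server the URI refers to
        "Connection: close\r\n"
        "\r\n"                          # blank line ends the header section
    )

uri = "https://www.example.org/wiki/Internet?action=view"  # hypothetical URI
parts = urlsplit(uri)
print(parts.scheme, parts.netloc, parts.path, parts.query)
print(build_get_request(uri))
```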

World Wide Web browser software, such as Microsoft’s Internet Explorer/Edge, Mozilla Firefox, Opera, Apple’s Safari, and Google Chrome, lets users navigate from one web page to another via the hyperlinks embedded in the documents. These documents may also contain any combination of computer data, including graphics, sounds, text, video, multimedia and interactive content that runs while the user is interacting with the page. Client-side software can include animations, games, office applications and scientific demonstrations. Through keyword-driven Internet research using search engines like Yahoo!, Bing and Google, users worldwide have easy, instant access to a vast and diverse amount of online information. Compared to printed media, books, encyclopedias and traditional libraries, the World Wide Web has enabled the decentralization of information on a large scale.

The Web has enabled individuals and organizations to publish ideas and information to a potentially large audience online at greatly reduced expense and time delay. Publishing a web page, a blog, or building a website involves little initial cost and many cost-free services are available. However, publishing and maintaining large, professional web sites with attractive, diverse and up-to-date information is still a difficult and expensive proposition. Many individuals and some companies and groups use web logs or blogs, which are largely used as easily updatable online diaries. Some commercial organizations encourage staff to communicate advice in their areas of specialization in the hope that visitors will be impressed by the expert knowledge and free information, and be attracted to the corporation as a result.

Advertising on popular web pages can be lucrative, and e-commerce, which is the sale of products and services directly via the Web, continues to grow. Online advertising is a form of marketing and advertising which uses the Internet to deliver promotional marketing messages to consumers. It includes email marketing, search engine marketing (SEM), social media marketing, many types of display advertising (including web banner advertising), and mobile advertising. In 2011, Internet advertising revenues in the United States surpassed those of cable television and nearly exceeded those of broadcast television.[81]: 19 Many common online advertising practices are controversial and increasingly subject to regulation.

When the Web developed in the 1990s, a typical web page was stored in completed form on a web server, formatted in HTML, complete for transmission to a web browser in response to a request. Over time, the process of creating and serving web pages has become dynamic, creating a flexible design, layout, and content. Websites are often created using content management software with, initially, very little content. Contributors to these systems, who may be paid staff, members of an organization or the public, fill underlying databases with content using editing pages designed for that purpose while casual visitors view and read this content in HTML form. There may or may not be editorial, approval and security systems built into the process of taking newly entered content and making it available to the target visitors.
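The difference between a stored static page and a dynamically generated one can be sketched as follows. The in-memory dictionary stands in for the underlying database of a content management system, and the names `ARTICLES` and `render_page` are hypothetical; this is a simplified illustration, not how any particular system is implemented.

```python
from html import escape

# Hypothetical content store standing in for a CMS database.
ARTICLES = {
    "welcome": {"title": "Welcome", "body": "Edited by <staff> & members."},
}

def render_page(slug: str) -> str:
    """Build the HTML for one article at request time from stored content."""
    article = ARTICLES[slug]
    return (
        "<html><head><title>{title}</title></head>"
        "<body><h1>{title}</h1><p>{body}</p></body></html>"
    ).format(
        # Escaping keeps contributor text from being interpreted as markup.
        title=escape(article["title"]),
        body=escape(article["body"]),
    )

print(render_page("welcome"))
```

Because the page is assembled on each request, a contributor who changes the stored entry changes what the next visitor sees without anyone editing an HTML file.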

Communication

Email is an important communications service available via the Internet. The concept of sending electronic text messages between parties, analogous to mailing letters or memos, predates the creation of the Internet.[82][83] Pictures, documents, and other files are sent as email attachments. Email messages can be cc-ed to multiple email addresses.

Internet telephony is a common communications service realized with the Internet. The principal internetworking protocol, the Internet Protocol, lends its name to voice over Internet Protocol (VoIP). The idea began in the early 1990s with walkie-talkie-like voice applications for personal computers. VoIP systems now dominate many markets, and are as easy to use and as convenient as a traditional telephone. The benefit has been substantial cost savings over traditional telephone calls, especially over long distances. Cable, ADSL, and mobile data networks provide Internet access in customer premises,[84] and inexpensive VoIP network adapters provide the connection for traditional analog telephone sets. The voice quality of VoIP often exceeds that of traditional calls. Remaining problems for VoIP are that emergency services may not be universally available and that devices rely on a local power supply, whereas older traditional phones are powered from the local loop and typically keep operating during a power failure.

Data transfer

File sharing is an example of transferring large amounts of data across the Internet. A computer file can be emailed to customers, colleagues and friends as an attachment. It can be uploaded to a website or File Transfer Protocol (FTP) server for easy download by others. It can be put into a «shared location» or onto a file server for instant use by colleagues. The load of bulk downloads to many users can be eased by the use of «mirror» servers or peer-to-peer networks. In any of these cases, access to the file may be controlled by user authentication, the transit of the file over the Internet may be obscured by encryption, and money may change hands for access to the file. The price can be paid by the remote charging of funds from, for example, a credit card whose details are also passed—usually fully encrypted—across the Internet. The origin and authenticity of the file received may be checked by digital signatures or by MD5 or other message digests. These simple features of the Internet, over a worldwide basis, are changing the production, sale, and distribution of anything that can be reduced to a computer file for transmission. This includes all manner of print publications, software products, news, music, film, video, photography, graphics and the other arts. This in turn has caused seismic shifts in each of the existing industries that previously controlled the production and distribution of these products.
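Checking a received file against a published message digest can be sketched with Python's standard `hashlib` module. This example substitutes SHA-256 for the MD5 mentioned above, since MD5 is no longer considered collision-resistant; the function names are hypothetical. A digest on its own only detects changes to the file, so confirming its origin also requires obtaining the digest through a trusted channel or verifying a digital signature.

```python
import hashlib
import hmac

def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of data as a lowercase hex string."""
    return hashlib.sha256(data).hexdigest()

def matches_published_digest(data: bytes, published_hex: str) -> bool:
    """Check received data against a digest published by the distributor."""
    # compare_digest avoids leaking, through timing, where the strings differ.
    return hmac.compare_digest(sha256_hex(data), published_hex.lower())

received = b"contents of the downloaded file"   # placeholder for file bytes
published = sha256_hex(received)                # stands in for the published value

print(matches_published_digest(received, published))
print(matches_published_digest(received + b"tampered", published))
```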

Streaming media is the real-time delivery of digital media for the immediate consumption or enjoyment by end users. Many radio and television broadcasters provide Internet feeds of their live audio and video productions. They may also allow time-shift viewing or listening such as Preview, Classic Clips and Listen Again features. These providers have been joined by a range of pure Internet «broadcasters» who never had on-air licenses. This means that an Internet-connected device, such as a computer or something more specific, can be used to access online media in much the same way as was previously possible only with a television or radio receiver. The range of available types of content is much wider, from specialized technical webcasts to on-demand popular multimedia services. Podcasting is a variation on this theme, where—usually audio—material is downloaded and played back on a computer or shifted to a portable media player to be listened to on the move. These techniques using simple equipment allow anybody, with little censorship or licensing control, to broadcast audio-visual material worldwide.

Digital media streaming increases the demand for network bandwidth. For example, standard image quality needs 1 Mbit/s link speed for SD 480p, HD 720p quality requires 2.5 Mbit/s, and the top-of-the-line HDX quality needs 4.5 Mbit/s for 1080p.[85]
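To put these link speeds in perspective, the following sketch converts each quoted bit rate into the approximate volume of data transferred during one hour of continuous streaming. It assumes a constant bit rate and decimal megabytes (1 MB = 8 Mbit), which simplifies how real adaptive streams behave.

```python
# Bit rates quoted in the text, in megabits per second.
BITRATES_MBIT_S = {"SD 480p": 1.0, "HD 720p": 2.5, "HDX 1080p": 4.5}

def megabytes_per_hour(mbit_per_s: float) -> float:
    """Convert a sustained bit rate to data volume per hour (decimal MB)."""
    seconds_per_hour = 3600
    bits_per_byte = 8
    return mbit_per_s * seconds_per_hour / bits_per_byte

for quality, rate in BITRATES_MBIT_S.items():
    print(f"{quality}: {megabytes_per_hour(rate):.0f} MB per hour")
```

Under these assumptions an hour of 1080p viewing moves roughly 2 GB, four and a half times the volume of the same hour at SD quality.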

Webcams are a low-cost extension of this phenomenon. While some webcams can give full-frame-rate video, the picture is usually either small or slow to update. Internet users can watch animals around an African waterhole, ships in the Panama Canal, traffic at a local roundabout or monitor their own premises, live and in real time. Video chat rooms and video conferencing are also popular, with many uses being found for personal webcams, with and without two-way sound. YouTube was founded on 15 February 2005 and is now the leading website for free streaming video with more than two billion users.[86] It uses an HTML5 based web player by default to stream and show video files.[87] Registered users may upload an unlimited amount of video and build their own personal profile. YouTube claims that its users watch hundreds of millions of videos, and upload hundreds of thousands, daily.

The Internet has enabled new forms of social interaction, activities, and social associations. This phenomenon has given rise to the scholarly study of the sociology of the Internet.

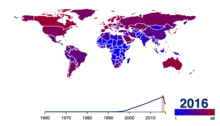

Users

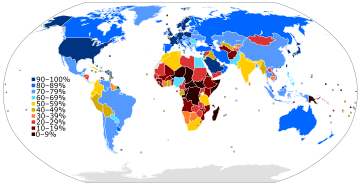

Share of population using the Internet.[88]

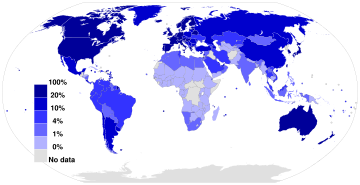

Internet users per 100 population members and GDP per capita for selected countries

From 2000 to 2009, the number of Internet users globally rose from 394 million to 1.858 billion.[91] By 2010, 22 percent of the world’s population had access to computers, with 1 billion Google searches made every day, 300 million Internet users reading blogs, and 2 billion videos viewed daily on YouTube.[92] In 2014 the world’s Internet users surpassed 3 billion, or 43.6 percent of the world population, but two-thirds of the users came from the richest countries, with 78.0 percent of the population of European countries using the Internet, followed by 57.4 percent of the Americas.[93] However, by 2018, Asia alone accounted for 51% of all Internet users, with 2.2 billion out of the 4.3 billion Internet users in the world coming from that region. The number of China’s Internet users surpassed a major milestone in 2018, when the country’s Internet regulatory authority, China Internet Network Information Centre, announced that China had 802 million Internet users.[94] By 2019, China was the world’s leading country in terms of Internet users, with more than 800 million users, followed closely by India, with some 700 million users, with the United States a distant third with 275 million users. However, in terms of penetration, China has a 38.4% penetration rate compared to India’s 40% and the United States’s 80%.[95] As of 2020, it was estimated that 4.5 billion people use the Internet, more than half of the world’s population.[96][97]

The prevalent language for communication via the Internet has always been English. This may be a result of the origin of the Internet, as well as the language’s role as a lingua franca and as a world language. Early computer systems were limited to the characters in the American Standard Code for Information Interchange (ASCII), a subset of the Latin alphabet.

After English (27%), the most requested languages on the World Wide Web are Chinese (25%), Spanish (8%), Japanese (5%), Portuguese and German (4% each), Arabic, French and Russian (3% each), and Korean (2%).[98] By region, 42% of the world’s Internet users are based in Asia, 24% in Europe, 14% in North America, 10% in Latin America and the Caribbean taken together, 6% in Africa, 3% in the Middle East and 1% in Australia/Oceania.[99] The Internet’s technologies have developed enough in recent years, especially in the use of Unicode, that good facilities are available for development and communication in the world’s widely used languages. However, some glitches such as mojibake (incorrect display of some languages’ characters) still remain.

In an American study in 2005, the percentage of men using the Internet was very slightly ahead of the percentage of women, although this difference reversed in those under 30. Men logged on more often, spent more time online, and were more likely to be broadband users, whereas women tended to make more use of opportunities to communicate (such as email). Men were more likely to use the Internet to pay bills, participate in auctions, and for recreation such as downloading music and videos. Men and women were equally likely to use the Internet for shopping and banking.[100]

More recent studies indicate that in 2008, women significantly outnumbered men on most social networking services, such as Facebook and Myspace, although the ratios varied with age.[101] In addition, women watched more streaming content, whereas men downloaded more.[102] In terms of blogs, men were more likely to blog in the first place; among those who blog, men were more likely to have a professional blog, whereas women were more likely to have a personal blog.[103]

Splitting by country, in 2012 Iceland, Norway, Sweden, the Netherlands, and Denmark had the highest Internet penetration by the number of users, with 93% or more of the population with access.[104]

Several neologisms exist that refer to Internet users: Netizen (as in «citizen of the net»)[105] refers to those actively involved in improving online communities, the Internet in general or surrounding political affairs and rights such as free speech;[106][107] Internaut refers to operators or technically highly capable users of the Internet;[108][109] and digital citizen refers to a person using the Internet in order to engage in society, politics, and government participation.[110]

Usage

The Internet allows greater flexibility in working hours and location, especially with the spread of unmetered high-speed connections. The Internet can be accessed almost anywhere by numerous means, including through mobile Internet devices. Mobile phones, datacards, handheld game consoles and cellular routers allow users to connect to the Internet wirelessly. Within the limitations imposed by small screens and other limited facilities of such pocket-sized devices, the services of the Internet, including email and the web, may be available. Service providers may restrict the services offered and mobile data charges may be significantly higher than other access methods.

Educational material at all levels from pre-school to post-doctoral is available from websites. Examples range from CBeebies, through school and high-school revision guides and virtual universities, to access to top-end scholarly literature through the likes of Google Scholar. For distance education, help with homework and other assignments, self-guided learning, whiling away spare time or just looking up more detail on an interesting fact, it has never been easier for people to access educational information at any level from anywhere. The Internet in general and the World Wide Web in particular are important enablers of both formal and informal education. Further, the Internet allows researchers (especially those from the social and behavioral sciences) to conduct research remotely via virtual laboratories, with profound changes in reach and generalizability of findings as well as in communication between scientists and in the publication of results.[114]

The low cost and nearly instantaneous sharing of ideas, knowledge, and skills have made collaborative work dramatically easier, with the help of collaborative software. Not only can a group cheaply communicate and share ideas but the wide reach of the Internet allows such groups more easily to form. An example of this is the free software movement, which has produced, among other things, Linux, Mozilla Firefox, and OpenOffice.org (later forked into LibreOffice). Internet chat, whether using an IRC chat room, an instant messaging system, or a social networking service, allows colleagues to stay in touch in a very convenient way while working at their computers during the day. Messages can be exchanged even more quickly and conveniently than via email. These systems may allow files to be exchanged, drawings and images to be shared, or voice and video contact between team members.

Content management systems allow collaborating teams to work on shared sets of documents simultaneously without accidentally destroying each other’s work. Business and project teams can share calendars as well as documents and other information. Such collaboration occurs in a wide variety of areas including scientific research, software development, conference planning, political activism and creative writing. Social and political collaboration is also becoming more widespread as both Internet access and computer literacy spread.

The Internet allows computer users to remotely access other computers and information stores easily from any access point. Access may be with computer security, i.e. authentication and encryption technologies, depending on the requirements. This is encouraging new ways of remote work, collaboration and information sharing in many industries. An accountant sitting at home can audit the books of a company based in another country, on a server situated in a third country that is remotely maintained by IT specialists in a fourth. These accounts could have been created by home-working bookkeepers, in other remote locations, based on information emailed to them from offices all over the world. Some of these things were possible before the widespread use of the Internet, but the cost of private leased lines would have made many of them infeasible in practice. An office worker away from their desk, perhaps on the other side of the world on a business trip or a holiday, can access their emails, access their data using cloud computing, or open a remote desktop session into their office PC using a secure virtual private network (VPN) connection on the Internet. This can give the worker complete access to all of their normal files and data, including email and other applications, while away from the office. It has been referred to among system administrators as the Virtual Private Nightmare,[115] because it extends the secure perimeter of a corporate network into remote locations and its employees’ homes.

By the late 2010s, the Internet had been described as «the main source of scientific information for the majority of the global North population».[116]: 111

Social networking and entertainment

Many people use the World Wide Web to access news, weather and sports reports, to plan and book vacations and to pursue their personal interests. People use chat, messaging and email to make and stay in touch with friends worldwide, sometimes in the same way as some previously had pen pals. Social networking services such as Facebook have created new ways to socialize and interact. Users of these sites are able to add a wide variety of information to pages, pursue common interests, and connect with others. It is also possible to find existing acquaintances, to allow communication among existing groups of people. Sites like LinkedIn foster commercial and business connections. YouTube and Flickr specialize in users’ videos and photographs. Social networking services are also widely used by businesses and other organizations to promote their brands, to market to their customers and to encourage posts to «go viral». «Black hat» social media techniques are also employed by some organizations, such as spam accounts and astroturfing.

A risk for both individuals and organizations writing posts (especially public posts) on social networking services is that especially foolish or controversial posts occasionally lead to an unexpected and possibly large-scale backlash on social media from other Internet users. This is also a risk in relation to controversial offline behavior, if it is widely made known. The nature of this backlash can range widely from counter-arguments and public mockery, through insults and hate speech, to, in extreme cases, rape and death threats. The online disinhibition effect describes the tendency of many individuals to behave more stridently or offensively online than they would in person. A significant number of feminist women have been the target of various forms of harassment in response to posts they have made on social media, and Twitter in particular has been criticised in the past for not doing enough to aid victims of online abuse.[117]

For organizations, such a backlash can cause overall brand damage, especially if reported by the media. However, this is not always the case, as any brand damage in the eyes of people with an opposing opinion to that presented by the organization could sometimes be outweighed by strengthening the brand in the eyes of others. Furthermore, if an organization or individual gives in to demands that others perceive as wrong-headed, that can then provoke a counter-backlash.

Some websites, such as Reddit, have rules forbidding the posting of personal information of individuals (also known as doxxing), due to concerns about such postings leading to mobs of large numbers of Internet users directing harassment at the specific individuals thereby identified. In particular, the Reddit rule forbidding the posting of personal information is widely understood to imply that all identifying photos and names must be censored in Facebook screenshots posted to Reddit. However, the interpretation of this rule in relation to public Twitter posts is less clear, and in any case, like-minded people online have many other ways they can use to direct each other’s attention to public social media posts they disagree with.

Children also face dangers online such as cyberbullying and approaches by sexual predators, who sometimes pose as children themselves. Children may also encounter material which they may find upsetting, or material that their parents consider to be not age-appropriate. Due to naivety, they may also post personal information about themselves online, which could put them or their families at risk unless warned not to do so. Many parents choose to enable Internet filtering or supervise their children’s online activities in an attempt to protect their children from inappropriate material on the Internet. The most popular social networking services, such as Facebook and Twitter, commonly forbid users under the age of 13. However, these policies are typically trivial to circumvent by registering an account with a false birth date, and a significant number of children aged under 13 join such sites anyway. Social networking services for younger children, which claim to provide better levels of protection for children, also exist.[118]

The Internet has been a major outlet for leisure activity since its inception, with entertaining social experiments such as MUDs and MOOs being conducted on university servers, and humor-related Usenet groups receiving much traffic.[citation needed] Many Internet forums have sections devoted to games and funny videos.[citation needed] The Internet pornography and online gambling industries have taken advantage of the World Wide Web. Although many governments have attempted to restrict both industries’ use of the Internet, in general, this has failed to stop their widespread popularity.[119]

Another area of leisure activity on the Internet is multiplayer gaming.[120] This form of recreation creates communities, where people of all ages and origins enjoy the fast-paced world of multiplayer games. These range from MMORPG to first-person shooters, from role-playing video games to online gambling. While online gaming has been around since the 1970s, modern modes of online gaming began with subscription services such as GameSpy and MPlayer.[121] Non-subscribers were limited to certain types of game play or certain games. Many people use the Internet to access and download music, movies and other works for their enjoyment and relaxation. Free and fee-based services exist for all of these activities, using centralized servers and distributed peer-to-peer technologies. Some of these sources exercise more care with respect to the original artists’ copyrights than others.

Internet usage has been correlated to users’ loneliness.[122] Lonely people tend to use the Internet as an outlet for their feelings and to share their stories with others, such as in the «I am lonely will anyone speak to me» thread.

A 2017 book claimed that the Internet consolidates most aspects of human endeavor into singular arenas of which all of humanity are potential members and competitors, with fundamentally negative impacts on mental health as a result. While successes in each field of activity are pervasively visible and trumpeted, they are reserved for an extremely thin sliver of the world’s most exceptional, leaving everyone else behind. Whereas, before the Internet, expectations of success in any field were supported by reasonable probabilities of achievement at the village, suburb, city or even state level, the same expectations in the Internet world are virtually certain to bring disappointment today: there is always someone else, somewhere on the planet, who can do better and take the now one-and-only top spot.[123]

Cybersectarianism is a new organizational form which involves: «highly dispersed small groups of practitioners that may remain largely anonymous within the larger social context and operate in relative secrecy, while still linked remotely to a larger network of believers who share a set of practices and texts, and often a common devotion to a particular leader. Overseas supporters provide funding and support; domestic practitioners distribute tracts, participate in acts of resistance, and share information on the internal situation with outsiders. Collectively, members and practitioners of such sects construct viable virtual communities of faith, exchanging personal testimonies and engaging in the collective study via email, online chat rooms, and web-based message boards.»[124] In particular, the British government has raised concerns about the prospect of young British Muslims being indoctrinated into Islamic extremism by material on the Internet, being persuaded to join terrorist groups such as the so-called «Islamic State», and then potentially committing acts of terrorism on returning to Britain after fighting in Syria or Iraq.

Cyberslacking can become a drain on corporate resources; the average UK employee spent 57 minutes a day surfing the Web while at work, according to a 2003 study by Peninsula Business Services.[125] Internet addiction disorder is excessive computer use that interferes with daily life. Nicholas G. Carr believes that Internet use has other effects on individuals, for instance improving skills of scan-reading and interfering with the deep thinking that leads to true creativity.[126]

Electronic business

Electronic business (e-business) encompasses business processes spanning the entire value chain: purchasing, supply chain management, marketing, sales, customer service, and business relationship. E-commerce seeks to add revenue streams using the Internet to build and enhance relationships with clients and partners. According to International Data Corporation, the size of worldwide e-commerce, when global business-to-business and business-to-consumer transactions are combined, equates to $16 trillion for 2013. A report by Oxford Economics added those two together to estimate the total size of the digital economy at $20.4 trillion, equivalent to roughly 13.8% of global sales.[127]

While much has been written of the economic advantages of Internet-enabled commerce, there is also evidence that some aspects of the Internet such as maps and location-aware services may serve to reinforce economic inequality and the digital divide.[128] Electronic commerce may be responsible for consolidation and the decline of mom-and-pop, brick and mortar businesses resulting in increases in income inequality.[129][130][131]

Author Andrew Keen, a long-time critic of the social transformations caused by the Internet, has focused on the economic effects of consolidation from Internet businesses. Keen cites a 2013 Institute for Local Self-Reliance report saying brick-and-mortar retailers employ 47 people for every $10 million in sales while Amazon employs only 14. Similarly, the 700-employee room rental start-up Airbnb was valued at $10 billion in 2014, about half as much as Hilton Worldwide, which employs 152,000 people. At that time, Uber employed 1,000 full-time employees and was valued at $18.2 billion, about the same valuation as Avis Rent a Car and The Hertz Corporation combined, which together employed almost 60,000 people.[132]

Remote work

Remote work is facilitated by tools such as groupware, virtual private networks, conference calling, videotelephony, and VoIP so that work may be performed from any location, most conveniently the worker’s home. It can be efficient and useful for companies as it allows workers to communicate over long distances, saving significant amounts of travel time and cost. More workers have adequate bandwidth at home to use these tools to link their home to their corporate intranet and internal communication networks.

Collaborative publishing

Wikis have also been used in the academic community for sharing and dissemination of information across institutional and international boundaries.[133] In those settings, they have been found useful for collaboration on grant writing, strategic planning, departmental documentation, and committee work.[134] The United States Patent and Trademark Office uses a wiki to allow the public to collaborate on finding prior art relevant to examination of pending patent applications. Queens, New York has used a wiki to allow citizens to collaborate on the design and planning of a local park.[135] The English Wikipedia has the largest user base among wikis on the World Wide Web[136] and ranks in the top 10 among all Web sites in terms of traffic.[137]

Politics and political revolutions

Banner in Bangkok during the 2014 Thai coup d’état, informing the Thai public that ‘like’ or ‘share’ activities on social media could result in imprisonment (observed 30 June 2014)

The Internet has achieved new relevance as a political tool. The presidential campaign of Howard Dean in 2004 in the United States was notable for its success in soliciting donations via the Internet. Many political groups use the Internet as a new way of organizing to carry out their mission, which has given rise to Internet activism, most notably practiced by rebels in the Arab Spring.[138][139] The New York Times suggested that social media websites, such as Facebook and Twitter, helped people organize the political revolutions in Egypt, by helping activists organize protests, communicate grievances, and disseminate information.[140]

Many have understood the Internet as an extension of the Habermasian notion of the public sphere, observing how network communication technologies provide something like a global civic forum. However, incidents of politically motivated Internet censorship have now been recorded in many countries, including western democracies.[141][142]

Philanthropy

The spread of low-cost Internet access in developing countries has opened up new possibilities for peer-to-peer charities, which allow individuals to contribute small amounts to charitable projects for other individuals. Websites, such as DonorsChoose and GlobalGiving, allow small-scale donors to direct funds to individual projects of their choice. A popular twist on Internet-based philanthropy is the use of peer-to-peer lending for charitable purposes. Kiva pioneered this concept in 2005, offering the first web-based service to publish individual loan profiles for funding. Kiva raises funds for local intermediary microfinance organizations that post stories and updates on behalf of the borrowers. Lenders can contribute as little as $25 to loans of their choice, and receive their money back as borrowers repay. Kiva falls short of being a pure peer-to-peer charity, in that loans are disbursed before being funded by lenders and borrowers do not communicate with lenders themselves.[143][144]

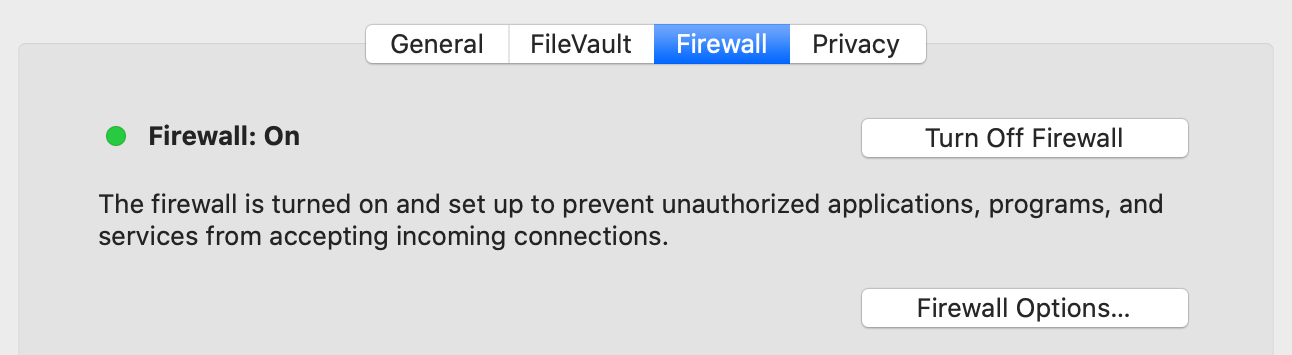

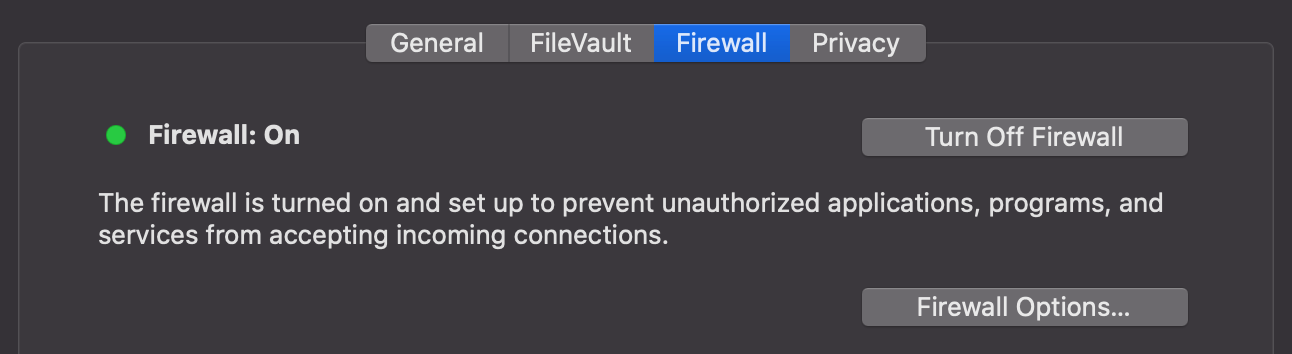

Security

Internet resources, hardware, and software components are the target of criminal or malicious attempts to gain unauthorized control to cause interruptions, commit fraud, engage in blackmail or access private information.

Malware

Malware is malicious software used and distributed via the Internet. It includes computer viruses, which are copied with the help of humans; computer worms, which copy themselves automatically; software for denial-of-service attacks; ransomware; botnets; and spyware that reports on the activity and typing of users. Usually, these activities constitute cybercrime. Defense theorists have also speculated about the possibility of hackers waging cyber warfare using similar methods on a large scale.[145]

Surveillance

The vast majority of computer surveillance involves the monitoring of data and traffic on the Internet.[146] In the United States, for example, under the Communications Assistance for Law Enforcement Act, all phone calls and broadband Internet traffic (emails, web traffic, instant messaging, etc.) are required to be available for unimpeded real-time monitoring by Federal law enforcement agencies.[147][148][149] Packet capture is the monitoring of data traffic on a computer network. Computers communicate over the Internet by breaking up messages (emails, images, videos, web pages, files, etc.) into small chunks called «packets», which are routed through a network of computers until they reach their destination, where they are assembled back into a complete «message». A packet capture appliance intercepts these packets as they travel through the network so that their contents can be examined using other programs. Packet capture is an information-gathering tool, not an analysis tool: it gathers «messages» but does not analyze them or work out what they mean. Other programs are needed to perform traffic analysis and sift through intercepted data looking for important or useful information. Under the Communications Assistance for Law Enforcement Act, all U.S. telecommunications providers are required to install packet sniffing technology to allow Federal law enforcement and intelligence agencies to intercept all of their customers’ broadband Internet and VoIP traffic.[150]
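The packet mechanism described above can be illustrated with a minimal Python sketch. Everything in it is an assumption made for illustration: the `Packet` fields, the 8-byte chunk size, and the function names are hypothetical and do not correspond to any real protocol header or capture product. It shows a message being split into numbered chunks, arriving out of order, being copied by a capture step that does no interpretation, and being reassembled afterwards.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    seq: int        # position of this chunk in the original message
    total: int      # number of packets that make up the whole message
    payload: bytes  # the chunk itself


def packetize(message: bytes, size: int = 8) -> list[Packet]:
    """Break a message into fixed-size chunks, each tagged with its order."""
    chunks = [message[i:i + size] for i in range(0, len(message), size)]
    return [Packet(seq, len(chunks), chunk) for seq, chunk in enumerate(chunks)]


def reassemble(packets: list[Packet]) -> bytes:
    """Rebuild the message at the destination, whatever the arrival order."""
    ordered = sorted(packets, key=lambda p: p.seq)
    if not ordered or len(ordered) != ordered[0].total:
        raise ValueError("missing packets; cannot reassemble")
    return b"".join(p.payload for p in ordered)


def capture(packets_in_transit: list[Packet]) -> list[Packet]:
    """Capture only copies what passes by; it does not interpret anything."""
    return list(packets_in_transit)


message = b"Meet at the library at noon."
in_transit = packetize(message)
random.shuffle(in_transit)        # packets may arrive in any order
captured = capture(in_transit)    # the gathering step
recovered = reassemble(captured)  # interpretation is left to a separate step
```

Keeping `capture` separate from `reassemble` mirrors the distinction drawn in the text between gathering traffic and analyzing it.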

The large amount of data gathered from packet capturing requires surveillance software that filters and reports relevant information, such as the use of certain words or phrases, the access of certain types of web sites, or communicating via email or chat with certain parties.[151] Agencies, such as the Information Awareness Office, NSA, GCHQ and the FBI, spend billions of dollars per year to develop, purchase, implement, and operate systems for interception and analysis of data.[152] Similar systems are operated by the Iranian secret police to identify and suppress dissidents. The required hardware and software were allegedly installed by German Siemens AG and Finnish Nokia.[153]
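The filtering step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the `Message` record, the `flag_messages` function, and the sample data are hypothetical, and the sketch does not represent how any actual agency system works. It selects messages that contain a watched word or involve a watched party.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Message:
    sender: str
    recipient: str
    text: str


def flag_messages(messages, watch_words, watch_parties):
    """Return messages that mention a watched word or involve a watched party."""
    words = {w.lower() for w in watch_words}
    flagged = []
    for msg in messages:
        # Split the text into lowercase word tokens, ignoring punctuation.
        tokens = set(re.findall(r"\w+", msg.text.lower()))
        involves_party = msg.sender in watch_parties or msg.recipient in watch_parties
        if tokens & words or involves_party:
            flagged.append(msg)
    return flagged


# Hypothetical sample data.
messages = [
    Message("alice", "bob", "Lunch at noon?"),
    Message("carol", "dave", "The protest starts at nine."),
    Message("erin", "frank", "See you tomorrow"),
]
flagged = flag_messages(messages, {"protest"}, {"frank"})
```

Exact word matching of this kind is the simplest possible filter; it illustrates why large volumes of captured data need a separate reduction step before anyone can review them.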

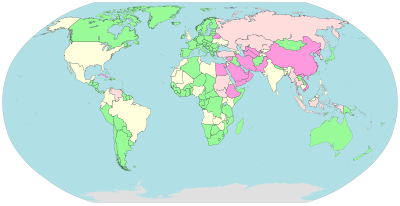

Censorship

Map legend (level of Internet censorship): pervasive; substantial; selective; little or none.

Some governments, such as those of Burma, Iran, North Korea, Mainland China, Saudi Arabia and the United Arab Emirates, restrict access to content on the Internet within their territories, especially to political and religious content, with domain name and keyword filters.[159]

In Norway, Denmark, Finland, and Sweden, major Internet service providers have voluntarily agreed to restrict access to sites listed by authorities. While this list of forbidden resources is supposed to contain only known child pornography sites, the content of the list is secret.[160] Many countries, including the United States, have enacted laws against the possession or distribution of certain material, such as child pornography, via the Internet, but do not mandate filter software. Many free or commercially available software programs, called content-control software, are available to users to block offensive websites on individual computers or networks, in order to limit access by children to pornographic material or depictions of violence.

Performance

As the Internet is a heterogeneous network, its physical characteristics, including, for example, the data transfer rates of connections, vary widely. It exhibits emergent phenomena that depend on its large-scale organization.[161]

Traffic volume

Global Internet Traffic as of 2018

The volume of Internet traffic is difficult to measure, because no single point of measurement exists in the multi-tiered, non-hierarchical topology. Traffic data may be estimated from the aggregate volume through the peering points of the Tier 1 network providers, but traffic that stays local in large provider networks may not be accounted for.

Outages

An Internet blackout or outage can be caused by local signalling interruptions. Disruptions of submarine communications cables may cause blackouts or slowdowns to large areas, such as in the 2008 submarine cable disruption. Less-developed countries are more vulnerable due to a small number of high-capacity links. Land cables are also vulnerable, as in 2011 when a woman digging for scrap metal severed most connectivity for the nation of Armenia.[162] Internet blackouts affecting almost entire countries can be achieved by governments as a form of Internet censorship, as in the blockage of the Internet in Egypt, whereby approximately 93%[163] of networks were without access in 2011 in an attempt to stop mobilization for anti-government protests.[164]

Energy use

Estimates of the Internet’s electricity usage have been the subject of controversy, according to a 2014 peer-reviewed research paper that found claims differing by a factor of 20,000 published in the literature during the preceding decade, ranging from 0.0064 kilowatt hours per gigabyte transferred (kWh/GB) to 136 kWh/GB.[165] The researchers attributed these discrepancies mainly to the year of reference (i.e. whether efficiency gains over time had been taken into account) and to whether «end devices such as personal computers and servers are included» in the analysis.[165]
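The size of the discrepancy can be checked with simple arithmetic. The Python sketch below uses only the two figures quoted above; the 5 GB transfer size is a hypothetical value chosen for illustration. Dividing the highest estimate by the lowest gives about 21,250, which is consistent with the rounded «factor of 20,000».

```python
LOW_KWH_PER_GB = 0.0064   # lowest published estimate quoted in the text
HIGH_KWH_PER_GB = 136.0   # highest published estimate quoted in the text

# Ratio between the two extremes (about 21,250).
spread = HIGH_KWH_PER_GB / LOW_KWH_PER_GB


def transfer_energy_kwh(gigabytes: float, kwh_per_gb: float) -> float:
    """Energy attributed to moving a given amount of data at a given intensity."""
    return gigabytes * kwh_per_gb


file_gb = 5.0  # hypothetical transfer size
low = transfer_energy_kwh(file_gb, LOW_KWH_PER_GB)    # about 0.032 kWh
high = transfer_energy_kwh(file_gb, HIGH_KWH_PER_GB)  # about 680 kWh
```

For the same transfer, the two estimates differ between a few hundredths of a kilowatt hour and several hundred kilowatt hours, which shows how strongly any conclusion depends on the intensity figure chosen.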

In 2011, academic researchers estimated the overall energy used by the Internet to be between 170 and 307 GW, less than two percent of the energy used by humanity. This estimate included the energy needed to build, operate, and periodically replace the estimated 750 million laptops, a billion smartphones and 100 million servers worldwide, as well as the energy that routers, cell towers, optical switches, Wi-Fi transmitters and cloud storage devices use when transmitting Internet traffic.[166][167] According to a non-peer-reviewed study published in 2018 by The Shift Project (a French think tank funded by corporate sponsors), nearly 4% of global CO2 emissions could be attributed to global data transfer and the necessary infrastructure.[168] The study also said that online video streaming alone accounted for 60% of this data transfer and therefore contributed to over 300 million tons of CO2 emissions per year, and argued for new «digital sobriety» regulations restricting the use and size of video files.[169]
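Because the 2011 estimate is stated in gigawatts, a unit of power, it can help to convert it into energy per year. The following Python sketch does so under two assumptions that are not in the source: the power draw is treated as constant, and a year is taken to be 365 days. The function name is hypothetical.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours, assuming a non-leap year


def gw_to_twh_per_year(gigawatts: float) -> float:
    """Convert a constant power draw in GW to annual energy in TWh."""
    gwh_per_year = gigawatts * HOURS_PER_YEAR
    return gwh_per_year / 1000  # 1 TWh = 1,000 GWh


low_twh = gw_to_twh_per_year(170)   # about 1,489 TWh per year
high_twh = gw_to_twh_per_year(307)  # about 2,689 TWh per year
```

Under these assumptions, the quoted range corresponds to roughly 1,500 to 2,700 TWh per year.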

See also

- Crowdfunding

- Crowdsourcing

- Darknet

- Deep web

- Freenet

- Internet industry jargon

- Index of Internet-related articles

- Internet metaphors

- Internet video

- «Internets»

- Open Systems Interconnection

- Outline of the Internet

Notes

- ^ See Capitalization of Internet.

- ^ Despite the name, TCP/IP also includes UDP traffic, which is significant.[1]

References

- ^ Amogh Dhamdhere. «Internet Traffic Characterization». Retrieved 6 May 2022.

- ^ a b «A Flaw in the Design». The Washington Post. 30 May 2015. Archived from the original on 8 November 2020. Retrieved 20 February 2020.

The Internet was born of a big idea: Messages could be chopped into chunks, sent through a network in a series of transmissions, then reassembled by destination computers quickly and efficiently. Historians credit seminal insights to Welsh scientist Donald W. Davies and American engineer Paul Baran. … The most important institutional force … was the Pentagon’s Advanced Research Projects Agency (ARPA) … as ARPA began work on a groundbreaking computer network, the agency recruited scientists affiliated with the nation’s top universities.

- ^ Stewart, Bill (January 2000). «Internet History – One Page Summary». The Living Internet. Archived from the original on 2 July 2014.

- ^ «#3 1982: the ARPANET community grows» in 40 maps that explain the internet Archived 6 March 2017 at the Wayback Machine, Timothy B. Lee, Vox Conversations, 2 June 2014. Retrieved 27 June 2014.

- ^ Strickland, Jonathan (3 March 2008). «How Stuff Works: Who owns the Internet?». Archived from the original on 19 June 2014. Retrieved 27 June 2014.

- ^ Hoffman, P.; Harris, S. (September 2006). The Tao of IETF: A Novice’s Guide to Internet Engineering Task Force. IETF. doi:10.17487/RFC4677. RFC 4677.

- ^ «New Seven Wonders panel». USA Today. 27 October 2006. Archived from the original on 15 July 2010. Retrieved 31 July 2010.

- ^ «Internetted». Oxford English Dictionary (Online ed.). Oxford University Press. (Subscription or participating institution membership required.) nineteenth-century use as an adjective.

- ^ «United States Army Field Manual FM 24-6 Radio Operator’s Manual Army Ground Forces June 1945». United States War Department.

- ^ a b Cerf, Vint; Dalal, Yogen; Sunshine, Carl (December 1974). Specification of Internet Transmission Control Protocol. IETF. doi:10.17487/RFC0675. RFC 675.

- ^ a b c d Corbett, Philip B. (1 June 2016). «It’s Official: The ‘Internet’ Is Over». The New York Times. ISSN 0362-4331. Archived from the original on 14 October 2020. Retrieved 29 August 2020.

- ^ a b Herring, Susan C. (19 October 2015). «Should You Be Capitalizing the Word ‘Internet’?». Wired. ISSN 1059-1028. Archived from the original on 31 October 2020. Retrieved 29 August 2020.

- ^ Coren, Michael J. (2 June 2016). «One of the internet’s inventors thinks it should still be capitalized». Quartz. Archived from the original on 27 September 2020. Retrieved 8 September 2020.

- ^ «World Wide Web Timeline». Pew Research Center. 11 March 2014. Archived from the original on 29 July 2015. Retrieved 1 August 2015.

- ^ «HTML 4.01 Specification». World Wide Web Consortium. Archived from the original on 6 October 2008. Retrieved 13 August 2008.

[T]he link (or hyperlink, or Web link) [is] the basic hypertext construct. A link is a connection from one Web resource to another. Although a simple concept, the link has been one of the primary forces driving the success of the Web.

- ^ Hauben, Michael; Hauben, Ronda (1997). «5 The Vision of Interactive Computing And the Future». Netizens: On the History and Impact of Usenet and the Internet (PDF). Wiley. ISBN 978-0-8186-7706-9. Archived (PDF) from the original on 3 January 2021. Retrieved 2 March 2020.

- ^ Zelnick, Bob; Zelnick, Eva (1 September 2013). The Illusion of Net Neutrality: Political Alarmism, Regulatory Creep and the Real Threat to Internet Freedom. Hoover Press. ISBN 978-0-8179-1596-4. Archived from the original on 10 January 2021. Retrieved 7 May 2020.

- ^ Peter, Ian (2004). «So, who really did invent the Internet?». The Internet History Project. Archived from the original on 3 September 2011. Retrieved 27 June 2014.

- ^ «Inductee Details — Paul Baran». National Inventors Hall of Fame. Archived from the original on 6 September 2017. Retrieved 6 September 2017; «Inductee Details — Donald Watts Davies». National Inventors Hall of Fame. Archived from the original on 6 September 2017. Retrieved 6 September 2017.

- ^ Kim, Byung-Keun (2005). Internationalising the Internet the Co-evolution of Influence and Technology. Edward Elgar. pp. 51–55. ISBN 978-1-84542-675-0.

- ^ Gromov, Gregory (1995). «Roads and Crossroads of Internet History». Archived from the original on 27 January 2016.

- ^ Hafner, Katie (1998). Where Wizards Stay Up Late: The Origins of the Internet. Simon & Schuster. ISBN 978-0-684-83267-8.

- ^ Hauben, Ronda (2001). «From the ARPANET to the Internet». Archived from the original on 21 July 2009. Retrieved 28 May 2009.

- ^ «Internet Pioneers Discuss the Future of Money, Books, and Paper in 1972». Paleofuture. 23 July 2013. Archived from the original on 17 October 2020. Retrieved 31 August 2020.

- ^ Townsend, Anthony (2001). «The Internet and the Rise of the New Network Cities, 1969–1999». Environment and Planning B: Planning and Design. 28 (1): 39–58. doi:10.1068/b2688. ISSN 0265-8135. S2CID 11574572.

- ^ «NORSAR and the Internet». NORSAR. Archived from the original on 21 January 2013.

- ^ Kirstein, P.T. (1999). «Early experiences with the Arpanet and Internet in the United Kingdom» (PDF). IEEE Annals of the History of Computing. 21 (1): 38–44. doi:10.1109/85.759368. ISSN 1934-1547. S2CID 1558618. Archived from the original (PDF) on 7 February 2020.

- ^ Leiner, Barry M. «Brief History of the Internet: The Initial Internetting Concepts». Internet Society. Archived from the original on 9 April 2016. Retrieved 27 June 2014.

- ^ Cerf, V.; Kahn, R. (1974). «A Protocol for Packet Network Intercommunication» (PDF). IEEE Transactions on Communications. 22 (5): 637–648. doi:10.1109/TCOM.1974.1092259. ISSN 1558-0857. Archived (PDF) from the original on 13 September 2006.

The authors wish to thank a number of colleagues for helpful comments during early discussions of international network protocols, especially R. Metcalfe, R. Scantlebury, D. Walden, and H. Zimmerman; D. Davies and L. Pouzin who constructively commented on the fragmentation and accounting issues; and S. Crocker who commented on the creation and destruction of associations.

- ^ Leiner, Barry M.; Cerf, Vinton G.; Clark, David D.; Kahn, Robert E.; Kleinrock, Leonard; Lynch, Daniel C.; Postel, Jon; Roberts, Larry G.; Wolff, Stephen (2003). «A Brief History of Internet». Internet Society. p. 1011. arXiv:cs/9901011. Bibcode:1999cs........1011L. Archived from the original on 4 June 2007. Retrieved 28 May 2009.

- ^ «The internet’s fifth man». The Economist. 30 November 2013. ISSN 0013-0613. Archived from the original on 19 April 2020. Retrieved 22 April 2020.