The Stroop effect is named after John Ridley Stroop. His research showed that people respond more slowly to a word when the color in which the word is printed differs from the color the word names.

The online Stroop test offered on this page does not fully match the original Stroop test. It uses a formula that takes into account the respondent's response delays in two situations: under normal conditions, and when correct and incorrect color names are mixed. From these delays, the formula yields a coefficient describing how much the person's responses slow down. Broadly speaking, the higher the resulting coefficient, the faster the person reacts and the more flexible their cognitive functions.

The rules of this online Stroop test are very simple. The test is divided into three parts.

In the first part of the test, a word naming a color (red, green, …) is shown in black in the middle of the screen. Below it are buttons of different colors. The respondent must press the button of the color the word names (if the word is RED, press the red button).

In the second part of the test, a square painted in some color is shown in the middle. The respondent must click the button below that has the same color.

In the third and final part of the test, a color word (red, green, …) is shown in the middle, printed in one of five colors. In most cases, the color of the word and the color it names do not match. Below the word are buttons of different colors. The respondent must press the button of the color the word NAMES (if the word RED is printed in green, press the RED button).
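To make the three response rules above concrete, here is a minimal Python sketch. The page does not publish its implementation or its scoring formula, so everything here is hypothetical:

- the five-color list;
- the function names `make_trial` and `expected_button`;
- the choice to make every part-3 item incongruent.

Note that part 3 of this online version is answered by the word's meaning. In the classic task, by contrast, the ink color must be named.

```python
import random

# Assumption: the page says part 3 uses five colors but does not list them;
# these five are placeholders.
COLORS = ("red", "green", "blue", "yellow", "purple")


def make_trial(part, rng):
    """Build one hypothetical trial as a (word, ink) pair for parts 1-3."""
    target = rng.choice(COLORS)
    if part == 1:
        return target, "black"  # color word printed in black
    if part == 2:
        return None, target  # colored square, no word
    if part == 3:
        # The page says word and ink differ "in most cases";
        # this sketch always makes them differ.
        ink = rng.choice([c for c in COLORS if c != target])
        return target, ink
    raise ValueError(f"unknown part: {part}")


def expected_button(part, word, ink):
    """Color of the button the respondent should press on this page."""
    if part in (1, 3):
        return word  # parts 1 and 3: the color the word names (ink ignored)
    if part == 2:
        return ink  # part 2: the color of the square
    raise ValueError(f"unknown part: {part}")


rng = random.Random(42)
word, ink = make_trial(3, rng)
print(word, ink, "->", expected_button(3, word, ink))
```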

After the final part, the page displays the coefficient obtained in the current attempt. It also shows comparative figures for the other respondents who have taken the test.

The Stroop test (also called the Stroop color-word test, Stroop word-color test or color-word interference test) is a classic neuropsychological test. It assesses how easily a person processes information in a situation of cognitive conflict.

Methodology

In 1935, the American psychologist J. R. Stroop published an article reporting the results of several of his own experiments (Stroop. 1935).

In the second experiment, he used a card with words printed in "wrong" colors and, as a control, a card with colored squares of the same colors. On the control card, participants had to name the color of the squares. On the experimental card, they had to name the color of the ink without reading the words themselves.

Naming took significantly longer when the verbal and visual information did not match. Such conditions are also called incongruent or conflicting. This regularity became known as the Stroop effect.

Stroop concluded that the extra time is needed to overcome interference, that is, to suppress the automated process of reading. He also argued that the speed at which this automatic process is suppressed is an indicator of cognitive flexibility. Other explanatory models of the effect exist, including some that question whether reading is automatic (Algom. 2019; Besner. 1999). The existence of the effect itself, however, is not in doubt.

The Stroop effect is used in experiments either in its original form or with modifications concerning:

- the number and names of the colors: besides the original combination (red, blue, green, brown and purple), popular variants include red, blue, green and yellow (Hammes. 1971; Spreen. 1998; Bayard. 2011) or only red, blue and green (Golden. 1978); more exotic combinations also occur, for example the "Greek" variant with red, blue, green and tan (Zalonis. 2009) or the "Brazilian" variant with blue, green and pink (Zimmermann. 2015);

- the number and arrangement of words on the page: 10 columns of 10 words (Hammes. 1971), 5 columns of 20 words (Golden. 1978), 4 columns of 6 words (Spreen. 1998; Bayard. 2011), 4 columns of 28 words (Zalonis. 2009);

- the background of the cards on which the stimuli are presented (white, black);

- the shape of the colored symbols: the letters XXXX (Golden. 1978), rectangles (Comalli. 1962; Hammes. 1971), dots (Spreen. 1998), polygons;

- additional stimulus material: for example, the Victoria Stroop Test uses a card with neutral words printed in colored ink (Spreen. 1998), and some experiments use a card with nonwords or letter strings (Kindt. 1996);

- the presentation format (paper, computer);

- the type of response requested: choosing among several options on the screen, reading aloud, choosing an option on the screen plus reading aloud, etc. (Booth D. Test review. Vienna Test System: STROOP (STROOP) / D. Booth, D. Hughes, S. Waters, P. Lindley // The British Psychological Society Psychological Testing Centre. – 2014).
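Several of the modifications listed above are simply parameters of the stimulus card: the color set, the number of columns and the number of words per column. The following Python sketch generates a fully incongruent color-word card for a given color set and layout.

This is an illustration under our own assumptions. The function name and the uniform random sampling are hypothetical. Published test forms may also constrain item order in ways this sketch ignores.

```python
import random


def make_incongruent_card(colors, columns, rows, seed=None):
    """Return a hypothetical incongruent card as a list of columns.

    Each item is a (word, ink) pair: the word names a color from `colors`
    and is printed in a different color from the same set.
    """
    if len(colors) < 2:
        raise ValueError("at least two colors are needed for incongruent items")
    rng = random.Random(seed)
    card = []
    for _ in range(columns):
        column = []
        for _ in range(rows):
            word = rng.choice(colors)
            # Pick any ink color except the one the word names.
            ink = rng.choice([c for c in colors if c != word])
            column.append((word, ink))
        card.append(column)
    return card


# Two of the configurations listed above.
golden_layout = make_incongruent_card(["red", "blue", "green"], columns=5, rows=20, seed=1)
hammes_layout = make_incongruent_card(["red", "blue", "green", "yellow"], columns=10, rows=10, seed=1)
print(golden_layout[0][:3])
```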

Researchers often call their experimental procedures a Stroop test. In most cases, however, these are not psychometrically grounded tests but experimental procedures based on the Stroop effect. There are nonetheless several psychometric instruments with established reliability, validity and test norms.

Structure of the test, administration and versions

The paper version was proposed by C. Golden (Golden. 1978) and is called the Stroop Color Word Test (figure: Stroop Color Word Test).

The commercial computerized version of the Stroop test is called STROOP. It is distributed by the Austrian company Schuhfried as part of the Vienna Test System battery. It is available in 20 languages, including Russian, and comes with documentation on its psychometric properties (Booth. 2014). In this version, words and symbols are not presented all at once on a page. Instead, they appear one at a time, as separate test items with response options.

Scoring and interpretation

In the paper version of the test, the score is the difference between the number of correct answers on the third card (interference) and on the second card (neutral).

The computerized version yields two scores: naming interference and reading interference.

- Naming interference is the difference in speed between naming colored squares and naming the ink color of words printed in incongruent colors.

- Reading interference is the difference in speed between reading words printed in black and reading words printed in incongruent colors.
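Below is a minimal Python sketch of the two scoring rules described above. The function names are hypothetical.

We also assume that "difference in speed" means a difference of mean reaction times. The commercial test may use another statistic, such as medians, which the text does not specify.

```python
from statistics import mean


def paper_interference(correct_card3, correct_card2):
    """Paper version: correct answers on card 3 (interference) minus card 2 (neutral)."""
    return correct_card3 - correct_card2


def computer_interference(rt_squares, rt_black_words, rt_incong_naming, rt_incong_reading):
    """Computerized version: return (naming_interference, reading_interference).

    Each argument is a list of reaction times in the same unit (e.g. ms).
    Assumption: "difference in speed" is a difference of mean reaction times,
    incongruent minus baseline, so positive values mean the incongruent
    items were answered more slowly.
    """
    naming = mean(rt_incong_naming) - mean(rt_squares)
    reading = mean(rt_incong_reading) - mean(rt_black_words)
    return naming, reading


# Hypothetical data for one respondent.
print(paper_interference(correct_card3=38, correct_card2=70))
print(computer_interference([600, 640], [500, 520], [780, 820], [540, 560]))
```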

Psychometric properties

Paper version

There is evidence of high test-retest reliability when the test is readministered after 1–2 weeks (Franzen. 1987), 6 months (Neyens. 1997) and 11 months (Dikmen. 1999). The convergent validity of the Stroop test is supported by high correlations with other neuropsychological tests that require selective attention and inhibitory processes. These include the Trail Making Test (Aloia. 1997; Weinstein. 1999) and the Embedded Figures Test (Weinstein. 1999).

Computerized version

Internal consistency, measured with Cronbach's alpha, exceeds 0.9. The test has several forms, which differ in input method (keyboard, stylus or touch screen). For these forms, split-half reliability ranges from 0.85 to 0.99. This is to be expected for a test that measures a specific, narrow construct.

Data on the convergent (discriminant) validity of the test are very scarce. The only study mentioned in the manual used a very small sample of 37 people. It showed a correlation between signal detection time and mean reaction time in the congruent condition of the Stroop test. In the incongruent condition the correlations are lower. This is taken as evidence of discriminant validity, since speed and the ability to suppress interference are assumed to be largely independent (Booth. 2014).
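The two reliability coefficients quoted above have standard textbook definitions. This Python sketch computes them from a respondents × items score matrix.

It is not the procedure from the test manual, which the text does not describe. The odd/even split is one common choice among several possible ways to halve a test.

```python
from math import sqrt
from statistics import mean, variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a respondents x items score matrix (list of rows)."""
    k = len(scores[0])  # number of items
    item_vars = [variance([row[j] for row in scores]) for j in range(k)]
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def split_half(scores):
    """Odd/even split-half reliability with the Spearman-Brown correction."""
    half_a = [sum(row[0::2]) for row in scores]  # items 1, 3, 5, ...
    half_b = [sum(row[1::2]) for row in scores]  # items 2, 4, 6, ...
    r = pearson(half_a, half_b)
    return 2 * r / (1 + r)  # step the half-test correlation up to full length


print(round(cronbach_alpha([[1, 2, 3], [2, 2, 4], [3, 5, 5]]), 3))  # 0.923
```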

Applications

The Stroop test is used as part of neuropsychological test batteries designed to diagnose various disorders, mainly those associated with the frontal lobes of the brain. In particular, it can be useful in diagnosing neuropsychological impairments caused by:

- drug use (Gottschalk. 2000);

- frontal lobe damage (Wildgruber. 2000);

- closed head injuries (Shum. 1990);

- Parkinson's disease (Mahieux. 1995);

- mild dementia (Nathan. 2001);

- Alzheimer's disease (Bondi. 2002).

There is also evidence that the Stroop test can help diagnose mixed anxiety-depressive disorders, depression and anxiety (Dozois. 2001). It is important to understand the limits of the test. It can detect symptoms that indicate the presence of a disorder, but it rarely allows one disorder to be distinguished from another.

Tasks based on the Stroop effect

A number of experimental procedures apply the Stroop effect to material other than the original. For example, in the picture-word Stroop task, words are written inside images (drawings of objects or geometric shapes). If the word and the image match in meaning, the image is identified faster than when the word does not match it (Hentschel. 1973).

In the directional Stroop test, words indicating a direction ("right", "left", "up", "down") are placed inside arrows. Participants are asked to move in the direction the arrow points. Interference appears when the word inside the arrow does not match the direction the arrow points (Shor. 1970).

In the counting Stroop test, participants are asked to count the number of words on the screen and then press the corresponding button. In the control series, the sets consist of words from a single semantic category (e.g., animals). In the experimental series, the words themselves are numerals (Seymour. 1974).

Emotional Stroop test

The so-called emotional Stroop task deserves special attention (Williams. 1996). As in the classic Stroop test, it measures the reaction time needed to name the color in which certain words are written. The words, however, are not color names. Some have a neutral meaning (e.g., "clock", "bottle", "sky", "wind", "pencil"); others are related to particular emotional states or disorders (figure: Emotional Stroop task).

This experimental procedure is used in diagnosing and studying:

- anxiety disorders and social phobia (Becker. 2001; Bar-Haim. 2007);

- fear of death (Lundh. 1998);

- seasonal affective disorder (Spinks. 2001);

- post-traumatic stress disorder (Cisler. 2011);

- alcohol dependence (Sharma. 2001);

- obsessive-compulsive disorder (Kyrios. 1998).
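The text does not say how the emotional Stroop task is scored. One common summary, assumed here, is an interference score: the mean color-naming time for emotional words minus the mean time for neutral words, computed on correct trials only. The trial format and the function name below are hypothetical.

```python
from statistics import mean


def emotional_interference(trials):
    """Mean color-naming RT for emotional words minus that for neutral words.

    `trials` holds hypothetical (word_type, rt_ms, correct) tuples, where
    word_type is "emotional" or "neutral". Error trials are excluded.
    """
    rts = {"emotional": [], "neutral": []}
    for word_type, rt_ms, correct in trials:
        if correct:
            rts[word_type].append(rt_ms)
    return mean(rts["emotional"]) - mean(rts["neutral"])


trials = [
    ("neutral", 600, True), ("neutral", 640, True),
    ("emotional", 700, True), ("emotional", 680, True),
    ("emotional", 900, False),  # error trial, ignored
]
print(emotional_interference(trials))
```

A positive value means the respondent was slower to name the ink color of emotional words than of neutral ones.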

Published: 11 July 2022, 13:44 (GMT+3)

The Stroop Color and Word Test is widely used in school and neuropsychological settings. It measures a person's attention, executive functions, and inhibitory behavioral control.

The Stroop Color and Word Test has been used since 1935. It measures people's attentional control using interference. For example, one task asks the person to say the ink color of a series of color names printed in a color other than the one they designate. It seems simple, but in practice it is difficult.

This is one of the most common and popular psychological tests. It is quite old and has been extensively studied. In fact, J. M. Cattell noticed the relevance of this phenomenon as early as 1886, when he observed that people read words aloud faster than they name colors. Through practice, reading becomes a highly automated process, whereas naming a color requires more attention and concentration.

However, it was experimental psychologist John Ridley Stroop who delved much deeper into the relationship between cognition and interference. The phenomenon he described now bears his name: the "Stroop effect". It summarizes the difficulty people have in naming the ink color of the word "green" when it is printed in red. This task is surprisingly hard, and clinicians use it to evaluate neuropsychological problems.

What does the Stroop Color and Word Test measure?

It is an attentional test that evaluates a person's ability to classify information from the environment and to respond selectively to it.

- It’s a common instrument in neuropsychological practice to identify students, hyperactive or not, with attention deficits. It’s also helpful in the evaluation of people with dementia and brain damage and can even help measure how stress affects a person’s attentional processes.

- Similarly, studies such as one conducted at the University of Minnesota reveal that the brain areas linked to the Stroop task are the anterior cingulate cortex and the dorsolateral prefrontal cortex.

- These structures support processes related to memory, attention, reading, and color discrimination.

How to apply the Stroop Color and Word Test

This test is simple to administer.

- Professionals administer it individually.

- It takes less than 15 minutes.

- It is given only to people who can read, so in practice it is used with people between the ages of 7 and 70.

- The test consists of three sheets, each containing 100 items.

- The aim is for the subject to inhibit their impulses, that is, the first automatic response that arises when looking at the items. The most common mistake is to read the word instead of naming its ink color, for example saying "red" when the word "red" is printed in green.

- Finally, the test measures selective attention as well as processing speed.

The three phases of the Stroop Color and Word Test

This test consists of three specific yet simple phases. There are three different sheets, each with five columns of items built from three elements (red, blue, and green). The subject must do the following.

Read in the first phase

The first task is the simplest. It is intended to break the ice while introducing the person to the test. The person gets a list of three words repeated in arbitrary order: red, blue, and green. All the words are printed in black, and the objective is to read them quickly without making mistakes.

The second phase is about accuracy in color vs. figure

In this phase, the person gets a second card with a series of figures in different colors. The objective is simple, although not without difficulty for those who aren’t paying attention. The person must first identify the color of each figure. Then, they must state what symbol or figure it is (a square, cross, star, etc.).

The third phase is about interference

This is the best-known phase. The card contains a set of color words (red, blue, and green) printed in different colors. The person must name the ink color of each word quickly and without making mistakes. The difficulty, of course, lies in the fact that the words are names of colors, and the ink in which they are printed does not match their meaning.

How to evaluate the Stroop Color and Word Test

Two variables are used to score this test: the number of correct responses and the response time. Both are important because some people may spend an excessive amount of time on a single task. The professional must therefore take all the data into account, including any difficulty in understanding the instructions (common in cases of dementia or drug abuse).

The Stroop Color and Word Test is particularly useful for assessing executive functions in patients with Alzheimer's disease, schizophrenia, and Huntington's chorea. In these conditions, damage to the anterior cingulate cortex and prefrontal cortex is usually progressive and noticeable. The instrument is as simple as it is useful: despite its age, it remains valid and, above all, remarkably effective.


Introduction

The Stroop Color and Word Test (SCWT) is a neuropsychological test extensively used for both experimental and clinical purposes. It assesses the ability to inhibit cognitive interference, which occurs when the processing of one stimulus feature affects the simultaneous processing of another attribute of the same stimulus (Stroop, 1935).

In the most common version of the SCWT, originally proposed by Stroop in 1935, subjects are required to read three different tables as fast as possible. Two of them represent the "congruous condition." In one, participants read names of colors (henceforth referred to as color-words) printed in black ink (W). In the other, they name different color patches (C). Conversely, in the third table, named the color-word (CW) condition, color-words are printed in an inconsistent ink color (for instance, the word "red" is printed in green ink). In this incongruent condition, participants are required to name the color of the ink instead of reading the word. In other words, they must perform a less automated task (i.e., naming the ink color) while inhibiting the interference arising from a more automated task (i.e., reading the word; MacLeod and Dunbar, 1988; Ivnik et al., 1996). This difficulty in inhibiting the more automated process is called the Stroop effect (Stroop, 1935).

While the SCWT is widely used to measure the ability to inhibit cognitive interference, previous literature also reports its use to measure other cognitive functions. These include attention, processing speed, cognitive flexibility (Jensen and Rohwer, 1966), and working memory (Kane and Engle, 2003). Thus, it may be possible to use the SCWT to measure multiple cognitive functions.

In the present article, we present a systematic review of the SCWT literature to assess the theoretical adequacy of the different scoring methods proposed to measure the Stroop effect (Stroop, 1935). We focus on the Italian literature, which reports several versions of the SCWT that vary in terms of stimuli, administration protocol, and scoring methods. Finally, we attempt to identify a scoring method that measures the ability to inhibit cognitive interference from the subjects' performance on the SCWT.

Methods

We looked for normative studies of the SCWT. All studies included a healthy adult population. Since our aim was to understand the various available scoring methods, no studies were excluded on the basis of participants' age, gender, and/or education, or of the specific version of the SCWT used (e.g., short or long, computerized or paper). Studies were identified using electronic databases and citations from a selection of relevant articles. The electronic databases searched were PubMed (all years), Scopus (all years), and Google Scholar (all years). The last search was run on 22 February 2017, using the search terms "Stroop; test; normative." All studies written in English or Italian were included.

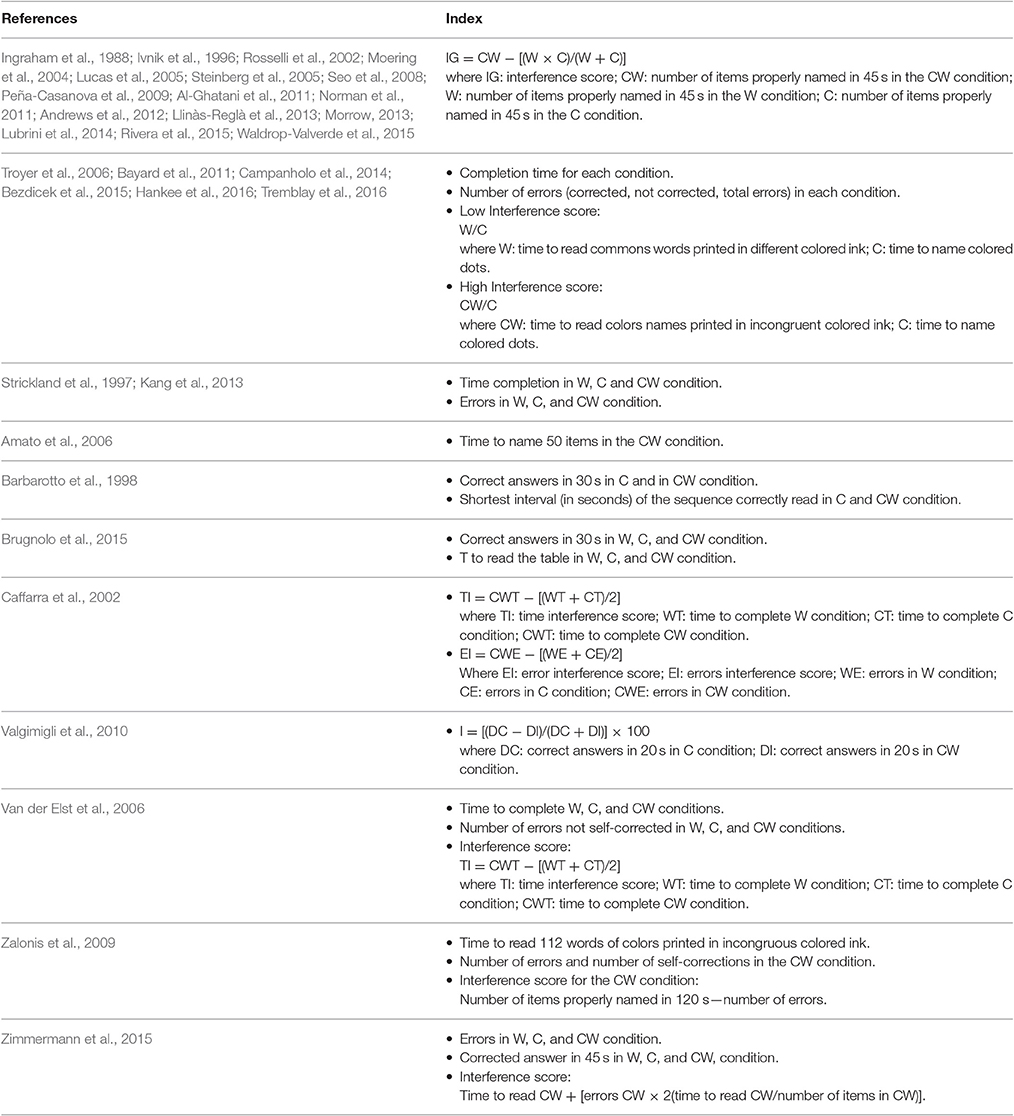

Two independent reviewers screened the papers by title and abstract; no disagreements about the suitability of the studies were recorded. A summary chart was then prepared listing the mandatory information to be extracted from each report (see Table 1).

Table 1. Summary of data extracted from reviewed articles; those related to the Italian normative data are in bold.

One author extracted data from the papers while the second author provided supervision. No disagreements about the extracted data emerged. We did not seek additional information from the original reports, with one exception. The full text of Caffarra et al. (2002) was not available, so the relevant information was extracted from Barletta-Rodolfi et al. (2011).

We extracted the following information from each article:

• Year of publication.

• Indexes whose normative data were provided.

Finally, regarding the variables of interest, we focused on the scores used in the reviewed studies to assess SCWT performance.

Results

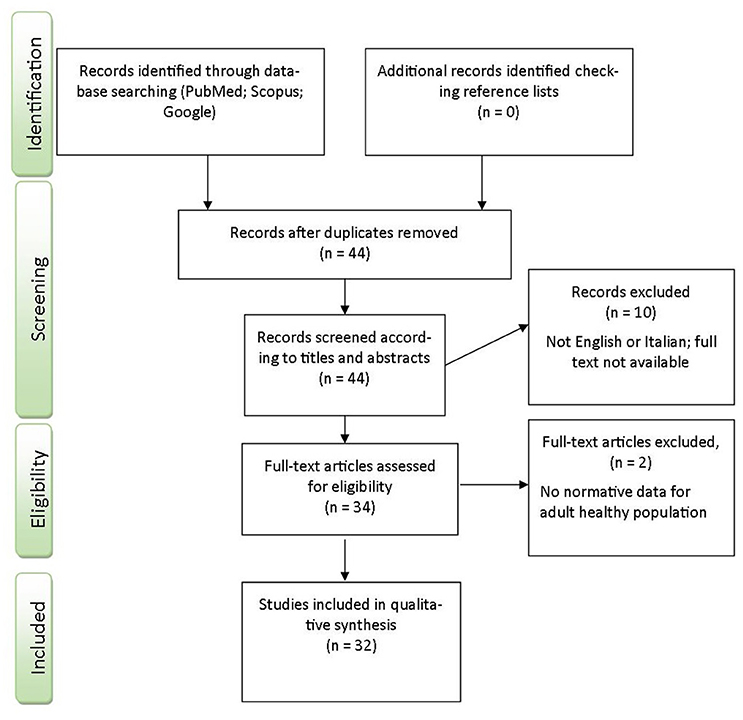

We identified 44 articles through our electronic search and screening process. Twelve of them were judged inadequate for our purpose and excluded:

- four papers were written in languages other than English or Italian (Bast-Pettersen, 2006; Duncan, 2006; Lopez et al., 2013; Rognoni et al., 2013);

- two included children (Oliveira et al., 2016) and a clinical population (Venneri et al., 1992), respectively;

- six were Stroop Test manuals that could not be obtained in full (Trenerry et al., 1989; Artiola and Fortuny, 1999; Delis et al., 2001; Golden and Freshwater, 2002; Mitrushina et al., 2005; Strauss et al., 2006a).

At the end of the selection process, 32 articles were suitable for review (Figure 1).

Figure 1. Flow diagram of the study selection process.

From the systematic review, we extracted five studies with Italian normative data. Details are reported in Table 1. The remaining 27 studies provide normative data for non-Italian populations. Of these, 16 studies adopted the scoring method proposed by Golden (1978) (Ivnik et al., 1996; Ingraham et al., 1988; Rosselli et al., 2002; Moering et al., 2004; Lucas et al., 2005; Steinberg et al., 2005; Seo et al., 2008; Peña-Casanova et al., 2009; Al-Ghatani et al., 2011; Norman et al., 2011; Andrews et al., 2012; Llinàs-Reglà et al., 2013; Morrow, 2013; Lubrini et al., 2014; Rivera et al., 2015; Waldrop-Valverde et al., 2015). In this method, the number of items correctly named in 45 s is counted in each condition (i.e., W, C, CW). The predicted CW score (Pcw) is then calculated using the following formula:

$$P_{cw} = \frac{W \times C}{W + C}$$

equivalent to:

$$P_{cw} = \frac{45}{\frac{45}{W} + \frac{45}{C}}$$

Next, the Pcw value is subtracted from the actual number of items correctly named in the incongruous condition (CW), i.e., IG = CW − Pcw. This procedure yields an interference score (IG) based on performance in both the W and C conditions. A negative IG value indicates a pathological ability to inhibit interference: the lower the score, the greater the difficulty in inhibiting interference.
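Golden's scoring can be sketched in a few lines of Python. The predicted score is Pcw = (W × C)/(W + C), and the interference score is IG = CW − Pcw. The function name and the check that W and C are positive are our own additions, not part of the published method.

```python
def golden_interference(w, c, cw):
    """Golden (1978) interference score from items correctly named in 45 s.

    w, c, cw: correct items in the word (W), color (C) and color-word (CW)
    conditions. Returns (pcw, ig), where pcw = W*C / (W + C) is the
    predicted CW score and ig = CW - pcw.
    """
    if w <= 0 or c <= 0:
        raise ValueError("W and C must be positive counts")
    pcw = (w * c) / (w + c)
    return pcw, cw - pcw


pcw, ig = golden_interference(w=120, c=80, cw=45)
print(pcw, ig)  # 48.0 -3.0
```

One way to read the formula: if naming one CW item took as long as reading one word plus naming one color, the expected count in 45 s would be 45 / (45/120 + 45/80) = 45 / 0.9375 = 48. That matches Pcw above.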

Six articles (Troyer et al., 2006; Bayard et al., 2011; Campanholo et al., 2014; Bezdicek et al., 2015; Hankee et al., 2016; Tremblay et al., 2016) adopted the Victoria Stroop Test. In this version, three conditions are assessed: the C and the CW correspond to the equivalent conditions of the original version of the test (Stroop, 1935), while the W condition includes common words which do not refer to colors. This condition represents an intermediate inhibition condition, as the interference effect between the written word and the color name is not present. In this SCWT form (Strauss et al., 2006b), for each condition, the completion time and the number of errors (corrected, non-corrected, and total errors) are recorded and two interference scores are computed:

Five studies (Strickland et al., 1997; Van der Elst et al., 2006; Zalonis et al., 2009; Kang et al., 2013; Zimmermann et al., 2015) adopted other SCWT versions. Three of them (Strickland et al., 1997; Van der Elst et al., 2006; Kang et al., 2013) computed the completion time and the number of errors independently for each condition. Additionally, Van der Elst et al. (2006) computed an interference score based on speed performance only:

where WT, CT, and CWT represent the time to complete the W, C, and CW tables, respectively.

Zalonis et al. (2009) recorded (i) the time, (ii) the number of errors, and (iii) the number of self-corrections in the CW condition. They also computed an interference score by subtracting the number of errors in the CW condition from the number of items correctly named in 120 s in the same table.

Lastly, Zimmermann et al. (2015) counted the number of errors and the number of correct answers given in 45 s in each condition. They also calculated an interference score derived from the original scoring method provided by Stroop (1935).

Five studies provide normative data for the Italian population (Barbarotto et al., 1998; Caffarra et al., 2002; Amato et al., 2006; Valgimigli et al., 2010; Brugnolo et al., 2015). Two were originally written in Italian (Caffarra et al., 2002; Valgimigli et al., 2010), and the others in English (Barbarotto et al., 1998; Amato et al., 2006; Brugnolo et al., 2015). An English translation of the title and abstract of Caffarra et al. (2002) is available. Three of the studies consider performance on the SCWT only (Caffarra et al., 2002; Valgimigli et al., 2010; Brugnolo et al., 2015). The others also include other neuropsychological tests in the experimental assessment (Barbarotto et al., 1998; Amato et al., 2006).

The studies are heterogeneous: they differ in the conditions administered, scoring procedures, number of items, and colors used.

- Three studies adopted a 100-item version of the SCWT, similar to the original version proposed by Stroop (1935) (Barbarotto et al., 1998; Amato et al., 2006; Brugnolo et al., 2015). In this version, the items in every condition (i.e., W, C, CW) are arranged in a 10 × 10 matrix, and the colors are red, green, blue, brown, and purple. Two of these studies administered the W, C, and CW conditions once (Amato et al., 2006; Brugnolo et al., 2015).

- Barbarotto et al. (1998) administered the CW table twice: participants first read the words and then, in the second administration, named the ink color. They also administered a computerized version of the SCWT with 40 stimuli per condition, using red, blue, green, and yellow.

- Valgimigli et al. (2010) and Caffarra et al. (2002) administered shorter paper versions of the SCWT with only three colors (i.e., red, blue, green). Valgimigli et al. (2010) administered only the C and CW conditions, with 60 items each arranged in six columns of 10 items. Caffarra et al. (2002) used 30 items per condition (i.e., W, C, CW), arranged in three columns of 10 items each.

Only two of the five studies assessed and provided normative data for all the conditions of the SCWT (i.e., W, C, CW; Caffarra et al., 2002; Brugnolo et al., 2015). The others provide only partial results. Valgimigli et al. (2010) provided normative data only for the C and CW conditions. Amato et al. (2006) and Barbarotto et al. (1998) administered all the SCWT conditions (i.e., W, C, CW). However, they provide normative data only for the CW condition and for the C and CW conditions, respectively.

These studies use different methods to compute subjects’ performance. Some studies record the time needed, independently in each condition, to read all (Amato et al., 2006) or a fixed number (Valgimigli et al., 2010) of presented stimuli. Others consider the number of correct answers produced in a fixed time (30 s; Amato et al., 2006; Brugnolo et al., 2015). Caffarra et al. (2002) and Valgimigli et al. (2010) provide a more complex interference index that relates the subject’s performance in the incongruous condition with the performance in the others. In Caffarra et al. (2002), two interference indexes based on reading speed and accuracy, respectively, are computed using the following formula:

Furthermore, in Valgimigli et al. (2010) an interference score is computed using the formula:

where DC represents the correct answers produced in 20 s in naming colors and DI corresponds to the correct answers achieved in 20 s in the interference condition. However, they do not take into account the performance on the word reading condition.

Discussion

According to the present review, multiple SCWT scoring methods are available in the literature, with Golden's (1978) version being the most widely used. In the Italian literature, the heterogeneity in SCWT scoring methods increases dramatically. The speed and accuracy of performance are both essential for proper detection of the Stroop Effect, yet they are scored differently across studies. This reveals methodological inconsistencies:

- Some of the reviewed studies score solely the speed of the performance (Amato et al., 2006; Valgimigli et al., 2010).

- Others measure both the accuracy and speed of performance (Barbarotto et al., 1998; Brugnolo et al., 2015), but they provide no comparisons between subjects' performance on the different SCWT conditions.

- Caffarra et al. (2002) compared performance in the W, C, and CW conditions, but they computed speed and accuracy independently.

- Only Valgimigli et al. (2010) present a scoring method in which an index merging speed and accuracy is computed for the performance in all the conditions. However, the authors assessed only the C and CW conditions, neglecting the subject's performance in the W condition.

In our opinion, the reported scoring methods do not allow the exhaustive description of SCWT performance that clinical practice requires. For instance, suppose only the reading time is scored, while accuracy is either not computed (Amato et al., 2006) or computed independently (Caffarra et al., 2002). In that case, the consequences of possible inhibition difficulties on processing speed cannot be assessed.

Indeed, consider a patient who does not apply the rule "name the ink color" and simply reads the word. In the CW condition, when the stimulus is the word "red" printed in green ink, this patient says "Red" instead of "Green." Such a patient could show a non-pathological reading speed in the incongruous condition despite extremely poor performance. This behavior indicates a failure to maintain consistent activation of the intended response in the incongruent Stroop condition, even when the participant properly understands the task.

Such scenarios are often reported in different clinical populations. For example, in the incongruous condition, two groups showed significant impairments in accuracy, but not in processing speed:

- patients with frontal lesions (Vendrell et al., 1995; Stuss et al., 2001; Swick and Jovanovic, 2002);

- patients with Parkinson's Disease (Fera et al., 2007; Djamshidian et al., 2011).

Counting the number of correct answers in a fixed time (Amato et al., 2006; Valgimigli et al., 2010; Brugnolo et al., 2015) may be a plausible solution.
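The argument above can be illustrated with invented numbers. In this Python sketch, a patient who ignores the rule and reads every word is faster than a control, so a time-only score looks better. Counting correct answers within a fixed time exposes the failure. The limit is 30 s here, the limit reported for two of the reviewed studies. The data and function names are hypothetical.

```python
def total_time(responses):
    """Time-only score: total seconds needed to respond to every item."""
    return sum(t for t, _ in responses)


def correct_in_fixed_time(responses, limit_s):
    """Number of correct responses completed within `limit_s` seconds."""
    elapsed, correct = 0.0, 0
    for t, ok in responses:
        elapsed += t
        if elapsed > limit_s:
            break  # this item was not finished in time
        if ok:
            correct += 1
    return correct


# Hypothetical CW performance on 30 items, as (seconds, correct) pairs.
control = [(0.9, True)] * 30   # names every ink color, more slowly
patient = [(0.6, False)] * 30  # simply reads every word: fast but always wrong

print(total_time(patient) < total_time(control))  # True: patient looks "faster"
print(correct_in_fixed_time(control, 30), correct_in_fixed_time(patient, 30))  # 30 0
```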

Moreover, it must be noted that error rate (and not speed) is an index of inhibitory control (McDowd et al., 1995), or of the ability to keep the task goal temporarily in a highly retrievable state (Kane and Engle, 2003). Nevertheless, computing only the error rate (i.e., the accuracy of performance) without measuring speed would be insufficient for an extensive evaluation of SCWT performance.

In fact, behavior in the incongruous condition (i.e., CW) may be affected by difficulties unrelated to an impaired ability to suppress interference, which may lead to misinterpretation of the patient's performance. People affected by color-blindness or dyslexia represent the extreme case. More ordinarily, slowness due to clinical circumstances may irremediably affect SCWT performance. Examples include dysarthria, mood disorders such as depression, and medication side effects. In Parkinson's Disease, ideomotor slowness (Gardner et al., 1959; Jankovic et al., 1990) affects processing speed in all SCWT conditions. This produces a global difficulty in response execution rather than a specific impairment in the CW condition (Stacy and Jankovic, 1992; Hsieh et al., 2008).

Consequently, when inhibition capability has to be assessed, it seems necessary to relate performance in the incongruous condition to word reading and color naming abilities. This is what Caffarra et al. (2002) propose: in their method, the W and C scores are subtracted from the CW score. However, as previously mentioned, their scoring method computes errors and speed separately. Thus, so far, none of the proposed Italian normative scoring methods seems adequate to assess patients' SCWT performance properly and informatively.

Examples of more suitable interference scores can be found in the non-Italian literature. Stroop (1935) proposed that the ability to inhibit cognitive interference can be measured in the SCWT using the formula:

$$\text{Score} = \text{total time} + \text{mean time per word} \times \text{number of uncorrected errors}$$

where total time is the overall time for reading; mean time per word is the overall time for reading divided by the number of items; and the number of uncorrected errors is the number of errors not spontaneously corrected. Gardner et al. (1959) also proposed a similar formula:

$$\text{Score} = \text{total time} + \frac{\text{total time}}{100} \times \text{number of errors}$$

where 100 refers to the number of stimuli used in this version of the SCWT. When speed and errors are computed together, patients who have difficulty inhibiting interference despite a non-pathological reading time are more likely to be correctly identified. However, both of these scores (Stroop, 1935; Mitrushina et al., 2005) may be open to criticism (Jensen and Rohwer, 1966). In fact, even though accuracy and speed are merged into a global score in these studies (Stroop, 1935; Mitrushina et al., 2005), they are not computed independently. In Gardner et al. (1959), the number of errors is weighted by the mean time per item and then added to the total time, which may be redundant and lead to miscomputation.
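Both scores discussed above add an error penalty, scaled by the mean time per item, to the total time. The Python sketch below (function names are hypothetical) shows that the two coincide on a 100-item version, and that the same number of errors costs more for a slower participant, which is the sense in which errors and speed are not computed independently.

```python
def stroop_score(total_time_s: float, n_items: int, uncorrected_errors: int) -> float:
    """Total time plus one mean item time for every uncorrected error (Stroop, 1935)."""
    mean_time_per_word = total_time_s / n_items
    return total_time_s + mean_time_per_word * uncorrected_errors


def gardner_score(total_time_s: float, errors: int, n_stimuli: int = 100) -> float:
    """Same structure, with the mean item time written as total time / 100 stimuli."""
    return total_time_s + (total_time_s / n_stimuli) * errors


# Identical results on a 100-item card.
print(stroop_score(100.0, 100, 5), gardner_score(100.0, 5))  # 105.0 105.0
# Same 5 errors, slower participant: the penalty grows from 5 s to 7.5 s.
print(stroop_score(150.0, 100, 5))                            # 157.5
```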

The most widely adopted scoring method internationally is that of Golden (1978). Lansbergen et al. (2007) point out that the index IG might not be adequately corrected for inter-individual differences in reading ability, despite its effective adjustment for color naming. The Authors highlight that the reading process is more automated in expert readers, who may consequently be more susceptible to interference (Lansbergen et al., 2007); the score would therefore need to be weighted according to individual reading ability. However, experimental data suggest that increased reading practice does not affect susceptibility to interference in the SCWT (Jensen and Rohwer, 1966). The article by Chafetz and Matthews (2004) may be useful for a deeper understanding of the relationship between reading words and naming colors, but the debate about the role of reading ability in the inhibition process is still open. Nor can the role of reading ability in SCWT performance be adequately addressed with the Victoria Stroop Test scoring method (Strauss et al., 2006b), since that version lacks the standard W condition.
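For reference, Golden's index is commonly described as the observed CW score minus the CW score predicted from the W and C scores, where W, C, and CW are the numbers of items completed in 45 seconds in each condition: IG = CW - (W × C) / (W + C). A minimal Python sketch (the function name is hypothetical):

```python
def golden_interference(w: float, c: float, cw: float) -> float:
    """Golden (1978) interference index: observed CW minus CW predicted from W and C.

    w, c, cw: items completed in the fixed time in the Word, Color and
    Color-Word conditions. Negative values mean more interference than predicted.
    """
    if w + c == 0:
        raise ValueError("W and C cannot both be zero")
    predicted_cw = (w * c) / (w + c)
    return cw - predicted_cw


print(golden_interference(120, 80, 42))   # -6.0  (predicted CW = 48.0)
print(golden_interference(100, 100, 55))  # 5.0   (predicted CW = 50.0)
```

Because the prediction is built from W as well as C, the word reading score enters the index only through this single ratio, which is the adjustment whose adequacy Lansbergen et al. (2007) question.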

In light of the previous considerations, we recommend that a scoring method for the SCWT fulfill two main requirements. First, both accuracy and speed must be computed for all SCWT conditions. Second, a global index must be calculated to relate performance in the incongruous condition to word reading and color naming abilities. The first requirement can be met by counting the number of correct answers in each condition within a fixed time (Amato et al., 2006; Valgimigli et al., 2010; Brugnolo et al., 2015). The second requirement can be met by subtracting the W and C scores from the CW score, as suggested by Caffarra et al. (2002). None of the studies reviewed satisfies both requirements.
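The two requirements can be sketched in Python as follows. This is an illustration under stated assumptions, not a validated norm: the function name is hypothetical, the inputs are counts of correct answers within the same fixed time in each condition, and the index is a literal reading of "subtracting the W score and C score from the CW score". The `subtract` parameter (also hypothetical) allows W alone or C alone to be subtracted instead, since the exact combination is not fixed here.

```python
def scwt_profile(w_correct: int, c_correct: int, cw_correct: int,
                 subtract: tuple[str, ...] = ("W", "C")) -> dict[str, int]:
    """Requirement (i): correct answers within a fixed time in W, C and CW.
    Requirement (ii): a global index relating CW to reading and/or color naming,
    computed here (an assumption) as CW minus the selected baseline counts."""
    baselines = {"W": w_correct, "C": c_correct}
    unknown = set(subtract) - set(baselines)
    if unknown:
        raise ValueError(f"unknown baseline condition(s): {sorted(unknown)}")
    index = cw_correct - sum(baselines[name] for name in subtract)
    return {"W": w_correct, "C": c_correct, "CW": cw_correct, "index": index}


print(scwt_profile(60, 45, 25))                   # index = 25 - 60 - 45 = -80
print(scwt_profile(60, 45, 25, subtract=("C",)))  # index = 25 - 45 = -20
```

Keeping the three per-condition counts in the output alongside the index preserves the first requirement, so the index never replaces the raw accuracy-in-time scores.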

According to the review, the studies with Italian normative data present different theoretical interpretations of the SCWT scores. Amato et al. (2006) and Caffarra et al. (2002) describe the SCWT score as a measure of fronto-executive functioning, while others use it as an index of attentional functioning (Barbarotto et al., 1998; Valgimigli et al., 2010) or of general cognitive efficiency (Brugnolo et al., 2015). Slowing in response to conflict would be due to a failure of selective attention or a lack of cognitive efficiency rather than a failure of response inhibition (Chafetz and Matthews, 2004); however, performance in the SCWT is not exclusively related to concentration, attention, or cognitive efficiency, but relies on a more specific executive-frontal domain. Indeed, to solve the task correctly, participants have to selectively process a specific visual feature while continuously blocking out the automatic processing of reading (Zajano and Gorman, 1986; Shum et al., 1990). The specific involvement of executive processes is supported by clinical data. Patients with anterior frontal lesions, but not those with posterior cerebral damage, show significant difficulties in maintaining consistent activation of the intended response (Valgimigli et al., 2010). Furthermore, patients with Parkinson’s Disease, characterized by executive dysfunction due to the disruption of dopaminergic pathways (Fera et al., 2007), showed difficulties in the SCWT despite unimpaired attentional abilities (Fera et al., 2007; Djamshidian et al., 2011).

Conclusion

According to the present review, the heterogeneity of SCWT scoring methods in the international literature, and most dramatically in the Italian literature, seems to call for an innovative, alternative, and shared scoring system to achieve a more proper interpretation of performance in the SCWT. We propose a scoring method in which (i) the number of correct answers within a fixed time in each SCWT condition (W, C, CW) and (ii) a global index given by CW performance minus reading and/or color naming abilities are computed. Further studies are required to collect normative data for this scoring method and to study its applicability in clinical settings.

Author Contributions

Conception of the work: FS. Acquisition of data: ST. Analysis and interpretation of data for the work: FS and ST. Writing: ST. Revising the work: FS. Final approval of the version to be published and agreement to be accountable for all aspects of the work: FS and ST.

Conflict of Interest Statement

The authors declare that the research was conducted in the absence of any commercial or financial relationships that could be construed as a potential conflict of interest.

Acknowledgments

The Authors thank Prerana Sabnis for her careful proofreading of the manuscript.

References

Al-Ghatani, A. M., Obonsawin, M. C., Binshaig, B. A., and Al-Moutaery, K. R. (2011). Saudi normative data for the Wisconsin Card Sorting test, Stroop test, test of non-verbal intelligence-3, picture completion and vocabulary (subtest of the wechsler adult intelligence scale-revised). Neurosciences 16, 29–41.

Amato, M. P., Portaccio, E., Goretti, B., Zipoli, V., Ricchiuti, L., De Caro, M. F., et al. (2006). The Rao’s brief repeatable battery and stroop test: normative values with age, education and gender corrections in an Italian population. Mult. Scler. 12, 787–793. doi: 10.1177/1352458506070933

Andrews, K., Shuttleworth-Edwards, A., and Radloff, S. (2012). Normative indications for Xhosa speaking unskilled workers on the Trail Making and Stroop Tests. J. Psychol. Afr. 22, 333–341. doi: 10.1080/14330237.2012.10820538

Artiola, L., and Fortuny, L. A. I. (1999). Manual de Normas Y Procedimientos Para la Bateria Neuropsicolog. Tucson, AZ: Taylor & Francis.

Barbarotto, R., Laiacona, M., Frosio, R., Vecchio, M., Farinato, A., and Capitani, E. (1998). A normative study on visual reaction times and two Stroop colour-word tests. Neurol. Sci. 19, 161–170. doi: 10.1007/BF00831566

Barletta-Rodolfi, C., Gasparini, F., and Ghidoni, E. (2011). Kit del Neuropsicologo Italiano. Bologna: Società Italiana di Neuropsicologia.

Bast-Pettersen, R. (2006). The Hugdahl Stroop Test: A normative study involving male industrial workers. J. Norwegian Psychol. Assoc. 43, 1023–1028.

Bayard, S., Erkes, J., and Moroni, C. (2011). Collège des psychologues cliniciens spécialisés en neuropsychologie du languedoc roussillon (CPCN Languedoc Roussillon). Victoria Stroop Test: normative data in a sample group of older people and the study of their clinical applications in the assessment of inhibition in Alzheimer’s disease. Arch. Clin. Neuropsychol. 26, 653–661. doi: 10.1093/arclin/acr053

Bezdicek, O., Lukavsky, J., Stepankova, H., Nikolai, T., Axelrod, B. N., Michalec, J., et al. (2015). The Prague Stroop Test: normative standards in older Czech adults and discriminative validity for mild cognitive impairment in Parkinson’s disease. J. Clin. Exp. Neuropsychol. 37, 794–807. doi: 10.1080/13803395.2015.1057106

Brugnolo, A., De Carli, F., Accardo, J., Amore, M., Bosia, L. E., Bruzzaniti, C., et al. (2015). An updated Italian normative dataset for the Stroop color word test (SCWT). Neurol. Sci. 37, 365–372. doi: 10.1007/s10072-015-2428-2

Caffarra, P., Vezzaini, G., Dieci, F., Zonato, F., and Venneri, A. (2002). Una versione abbreviata del test di Stroop: dati normativi nella popolazione italiana. Nuova Rivis. Neurol. 12, 111–115.

Campanholo, K. R., Romão, M. A., Machado, M. A. D. R., Serrao, V. T., Coutinho, D. G. C., Benute, G. R. G., et al. (2014). Performance of an adult Brazilian sample on the Trail Making Test and Stroop Test. Dement. Neuropsychol. 8, 26–31. doi: 10.1590/S1980-57642014DN81000005

Delis, D. C., Kaplan, E., and Kramer, J. H. (2001). Delis-Kaplan Executive Function System (D-KEFS). San Antonio, TX: Psychological Corporation.

Djamshidian, A., O’Sullivan, S. S., Lees, A., and Averbeck, B. B. (2011). Stroop test performance in impulsive and non impulsive patients with Parkinson’s disease. Parkinsonism Relat. Disord. 17, 212–214. doi: 10.1016/j.parkreldis.2010.12.014

Duncan, M. T. (2006). Assessment of normative data of Stroop test performance in a group of elementary school students Niterói. J. Bras. Psiquiatr. 55, 42–48. doi: 10.1590/S0047-20852006000100006

Fera, F., Nicoletti, G., Cerasa, A., Romeo, N., Gallo, O., Gioia, M. C., et al. (2007). Dopaminergic modulation of cognitive interference after pharmacological washout in Parkinson’s disease. Brain Res. Bull. 74, 75–83. doi: 10.1016/j.brainresbull.2007.05.009

Gardner, R. W., Holzman, P. S., Klein, G. S., Linton, H. P., and Spence, D. P. (1959). Cognitive control: a study of individual consistencies in cognitive behaviour. Psychol. Issues 1, 1–186.

Golden, C. J. (1978). Stroop Color and Word Test: A Manual for Clinical and Experimental Uses. Chicago, IL: Stoelting Co.

Golden, C. J., and Freshwater, S. M. (2002). The Stroop Color and Word Test: A Manual for Clinical and Experimental Uses. Chicago, IL: Stoelting.

Hankee, L. D., Preis, S. R., Piers, R. J., Beiser, A. S., Devine, S. A., Liu, Y., et al. (2016). Population normative data for the CERAD word list and Victoria Stroop Test in younger-and middle-aged adults: cross-sectional analyses from the framingham heart study. Exp. Aging Res. 42, 315–328. doi: 10.1080/0361073X.2016.1191838

Hsieh, Y. H., Chen, K. J., Wang, C. C., and Lai, C. L. (2008). Cognitive and motor components of response speed in the Stroop test in Parkinson’s disease patients. Kaohsiung J. Med. Sci. 24, 197–203. doi: 10.1016/S1607-551X(08)70117-7

Ivnik, R. J., Malec, J. F., Smith, G. E., Tangalos, E. G., and Petersen, R. C. (1996). Neuropsychological tests’ norms above age 55: COWAT, BNT, MAE token, WRAT-R reading, AMNART, STROOP, TMT, and JLO. Clin. Neuropsychol. 10, 262–278. doi: 10.1080/13854049608406689

Jankovic, J., McDermott, M., Carter, J., Gauthier, S., Goetz, C., Golbe, L., et al. (1990). Parkinson Study Group. Variable expression of Parkinson’s disease: a base-line analysis of DATATOP cohort. Neurology 40, 1529–1534.

Jensen, A. R., and Rohwer, W. D. (1966). The Stroop Color-Word Test: a Review. Acta Psychol. 25, 36–93. doi: 10.1016/0001-6918(66)90004-7

Kane, M. J., and Engle, R. W. (2003). Working-memory capacity and the control of attention: the contributions of goal neglect, response competition, and task set to Stroop interference. J. Exp. Psychol. Gen. 132, 47–70. doi: 10.1037/0096-3445.132.1.47

Kang, C., Lee, G. J., Yi, D., McPherson, S., Rogers, S., Tingus, K., et al. (2013). Normative data for healthy older adults and an abbreviated version of the Stroop test. Clin. Neuropsychol. 27, 276–289. doi: 10.1080/13854046.2012.742930

Lansbergen, M. M., Kenemans, J. L., and van Engeland, H. (2007). Stroop interference and attention-deficit/hyperactivity disorder: a review and meta-analysis. Neuropsychology 21:251. doi: 10.1037/0894-4105.21.2.251

Llinàs-Reglà, J., Vilalta-Franch, J., López-Pousa, S., Calvó-Perxas, L., and Garre-Olmo, J. (2013). Demographically adjusted norms for Catalan older adults on the Stroop Color and Word Test. Arch. Clin. Neuropsychol. 28, 282–296. doi: 10.1093/arclin/act003

Lopez, E., Salazar, X. F., Villasenor, T., Saucedo, C., and Pena, R. (2013). “Validez y datos normativos de las pruebas de nominación en personas con educación limitada,” in Poster Presented at The Congress of the “Sociedad Latinoamericana de Neuropsicología” (Montreal, QC).

Lubrini, G., Periañez, J. A., Rios-Lago, M., Viejo-Sobera, R., Ayesa-Arriola, R., Sanchez-Cubillo, I., et al. (2014). Clinical Spanish norms of the Stroop test for traumatic brain injury and schizophrenia. Span. J. Psychol. 17:E96. doi: 10.1017/sjp.2014.90

Lucas, J. A., Ivnik, R. J., Smith, G. E., Ferman, T. J., Willis, F. B., Petersen, R. C., et al. (2005). Mayo’s older african americans normative studies: norms for boston naming test, controlled oral word association, category fluency, animal naming, token test, wrat-3 reading, trail making test, stroop test, and judgment of line orientation. Clin. Neuropsychol. 19, 243–269. doi: 10.1080/13854040590945337

MacLeod, C. M., and Dunbar, K. (1988). Training and Stroop-like interference: evidence for a continuum of automaticity. J. Exp. Psychol. Learn. Mem. Cogn. 14, 126–135. doi: 10.1037/0278-7393.14.1.126

McDowd, J. M., Oseas-Kreger, D. M., and Filion, D. L. (1995). “Inhibitory processes in cognition and aging,” in Interference and Inhibition in Cognition, eds F. N. Dempster and C. J. Brainerd (San Diego, CA: Academic Press), 363–400.

Mitrushina, M., Boone, K. B., Razani, J., and D’Elia, L. F. (2005). Handbook of Normative Data for Neuropsychological Assessment. New York, NY: Oxford University Press.

Moering, R. G., Schinka, J. A., Mortimer, J. A., and Graves, A. B. (2004). Normative data for elderly African Americans for the Stroop color and word test. Arch. Clin. Neuropsychol. 19, 61–71. doi: 10.1093/arclin/19.1.61

Norman, M. A., Moore, D. J., Taylor, M., Franklin, D. Jr., Cysique, L., Ake, C., et al. (2011). Demographically corrected norms for African Americans and Caucasians on the hopkins verbal learning test–revised, brief visuospatial memory test–revised, stroop color and word test, and wisconsin card sorting test 64-card version. J. Clin. Exp. Neuropsychol. 33, 793–804. doi: 10.1080/13803395.2011.559157

Oliveira, R. M., Mograbi, D. C., Gabrig, I. A., and Charchat-Fichman, H. (2016). Normative data and evidence of validity for the Rey Auditory Verbal Learning Test, Verbal Fluency Test, and Stroop Test with Brazilian children. Psychol. Neurosci. 9, 54–67. doi: 10.1037/pne0000041

Peña-Casanova, J., Quiñones-Ubeda, S., Gramunt-Fombuena, N., Quintana, M., Aguilar, M., Molinuevo, J. L., et al. (2009). Spanish multicenter normative studies (NEURONORMA Project): norms for the Stroop color-word interference test and the Tower of London-Drexel. Arch. Clin. Neuropsychol. 24, 413–429. doi: 10.1093/arclin/acp043

Rivera, D., Perrin, P. B., Stevens, L. F., Garza, M. T., Weil, C., Saracho, C. P., et al. (2015). Stroop color-word interference test: normative data for the Latin American Spanish speaking adult population. Neurorehabilitation 37, 591–624. doi: 10.3233/NRE-151281

Rognoni, T., Casals-Coll, M., Sánchez-Benavides, G., Quintana, M., Manero, R. M., Calvo, L., et al. (2013). Spanish normative studies in a young adult population (NEURONORMA young adults Project): norms for the Boston Naming Test and the Token Test. Neurología 28, 73–80. doi: 10.1016/j.nrl.2012.02.009

Rosselli, M., Ardila, A., Santisi, M. N., Arecco Mdel, R., Salvatierra, J., Conde, A., et al. (2002). Stroop effect in Spanish–English bilinguals. J. Int. Neuropsychol. Soc. 8, 819–827. doi: 10.1017/S1355617702860106

Seo, E. H., Lee, D. Y., Kim, S. G., Kim, K. W., Youn, J. C., Jhoo, J. H., et al. (2008). Normative study of the Stroop Color and Word Test in an educationally diverse elderly population. Int. J. Geriatr. Psychiatry 23, 1020–1027. doi: 10.1002/gps.2027

Shum, D. H. K., McFarland, K. A., and Brain, J. D. (1990). Construct validity of eight tests of attention: comparison of normal and closed head injured samples. Clin. Neuropsychol. 4, 151–162. doi: 10.1080/13854049008401508

Stacy, M., and Jankovic, J. (1992). Differential diagnosis of parkinson’s disease and the parkinsonism plus syndrome. Neurol. Clin. 10, 341–359.

Steinberg, B. A., Bieliauskas, L. A., Smith, G. E., and Ivnik, R. J. (2005). Mayo’s older Americans normative studies: age-and IQ-adjusted norms for the trail-making test, the stroop test, and MAE controlled oral word association test. Clin. Neuropsychol. 19, 329–377. doi: 10.1080/13854040590945210

Strauss, E., Sherman, E. M., and Spreen, O. (2006a). A Compendium of Neuropsychological Tests: Administration, Norms, and Commentary. Oxford: Oxford University Press.

Strauss, E., Sherman, E. M. S., and Spreen, O. (2006b). A Compendium of Neuropsychological Tests, 3rd Edn. New York, NY: Oxford University Press.

Strickland, T. L., D’Elia, L. F., James, R., and Stein, R. (1997). Stroop color-word performance of African Americans. Clin. Neuropsychol. 11, 87–90. doi: 10.1080/13854049708407034

Stroop, J. R. (1935). Studies of interference in serial verbal reactions. J. Exp. Psychol. 18, 643–662. doi: 10.1037/h0054651

Stuss, D. T., Floden, D., Alexander, M. P., Levine, B., and Katz, D. (2001). Stroop performance in focal lesion patients: dissociation of processes and frontal lobe lesion location. Neuropsychologia 39, 771–786. doi: 10.1016/S0028-3932(01)00013-6

Swick, D., and Jovanovic, J. (2002). Anterior cingulate cortex and the Stroop task: neuropsychological evidence for topographic specificity. Neuropsychologia 40, 1240–1253. doi: 10.1016/S0028-3932(01)00226-3

Tremblay, M. P., Potvin, O., Belleville, S., Bier, N., Gagnon, L., Blanchet, S., et al. (2016). The victoria stroop test: normative data in Quebec-French adults and elderly. Arch. Clin. Neuropsychol. 31, 926–933. doi: 10.1093/arclin/acw029

Trenerry, M. R., Crosson, B., DeBoe, J., and Leber, W. R. (1989). Stroop Neuropsychological Screening Test. Odessa, FL: Psychological Assessment Resources.

Troyer, A. K., Leach, L., and Strauss, E. (2006). Aging and response inhibition: normative data for the Victoria Stroop Test. Aging Neuropsychol. Cogn. 13, 20–35. doi: 10.1080/138255890968187

Valgimigli, S., Padovani, R., Budriesi, C., Leone, M. E., Lugli, D., and Nichelli, P. (2010). The Stroop test: a normative Italian study on a paper version for clinical use. G. Ital. Psicol. 37, 945–956. doi: 10.1421/33435

Van der Elst, W., Van Boxtel, M. P., Van Breukelen, G. J., and Jolles, J. (2006). The Stroop Color-Word Test influence of age, sex, and education; and normative data for a large sample across the adult age range. Assessment 13, 62–79. doi: 10.1177/1073191105283427

Vendrell, P., Junqué, C., Pujol, J., Jurado, M. A., Molet, J., and Grafman, J. (1995). The role of prefrontal regions in the Stroop task. Neuropsychologia 33, 341–352. doi: 10.1016/0028-3932(94)00116-7

Venneri, A., Molinari, M. A., Pentore, R., Cotticelli, B., Nichelli, P., and Caffarra, P. (1992). Shortened Stroop color-word test: its application in normal aging and Alzheimer’s disease. Neurobiol. Aging 13, S3–S4. doi: 10.1016/0197-4580(92)90135-K

Waldrop-Valverde, D., Ownby, R. L., Jones, D. L., Sharma, S., Nehra, R., Kumar, A. M., et al. (2015). Neuropsychological test performance among healthy persons in northern India: development of normative data. J. Neurovirol. 21, 433–438. doi: 10.1007/s13365-015-0332-4

Zajano, M. J., and Gorman, A. (1986). Stroop interference as a function of percentage of congruent items. Percept. Mot. Skills 63, 1087–1096. doi: 10.2466/pms.1986.63.3.1087

Zalonis, I., Christidi, F., Bonakis, A., Kararizou, E., Triantafyllou, N. I., Paraskevas, G., et al. (2009). The stroop effect in Greek healthy population: normative data for the Stroop Neuropsychological Screening Test. Arch. Clin. Neuropsychol. 24, 81–88. doi: 10.1093/arclin/acp011

Zimmermann, N., Cardoso, C. D. O., Trentini, C. M., Grassi-Oliveira, R., and Fonseca, R. P. (2015). Brazilian preliminary norms and investigation of age and education effects on the Modified Wisconsin Card Sorting Test, Stroop Color and Word test and Digit Span test in adults. Dement. Neuropsychol. 9, 120–127. doi: 10.1590/1980-57642015DN92000006

Stroop Test—The color-word-interference test (“Stroop Task”) is an instrument that is indicative of interference control when participants are presented with expected or unexpected tasks (Stroop, 1935).

From: Progress in Brain Research, 2021

Clinical Applications of Principle 1

Warren W. Tryon, in Cognitive Neuroscience and Psychotherapy, 2014

Emotional Stroop (e-Stroop)

The standard Stroop Test (Stroop, 1935) consists of color words printed in different colors of ink. Initially, the time taken for participants to read all of the color names is measured. Then participants are asked to name the color of ink that each word is printed in. Typically it takes longer for participants to say the ink colors than it does to read the words. Cohen et al. (1990) provided a connectionist model that explains why this is so.

The emotional Stroop, also known as the e-Stroop test (Williams et al., 1996), uses target and control lists of words printed in various colors of ink. For example, the words on the target list would be anxiety-related if the study was about anxiety, or depression-related if the study was about depression. The control list typically consists of neutral words that appear with the same frequency in the English language. The standard Stroop test is administered to control participants. They typically take longer to name the color of ink that the words are printed in than to read the names of the colors. Anxious persons show an exaggerated Stroop effect when the anxiety-related target list is used. Depressed persons show an exaggerated Stroop effect when the depression-related target list is used. Williams et al. (1996) have shown that the exaggerated Stroop effect can be used to assess a wide variety of psychopathology. The e-Stroop test is sensitive enough to detect differences between neutral and emotionally charged words in normal college students. Teachman et al. (2010) reviewed more recent clinical applications of the e-Stroop test.
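One common way to quantify the e-Stroop effect is the difference between mean color-naming times for the target list and for the matched neutral control list; a positive difference indicates slowing on the emotionally charged words. The Python sketch below assumes this difference score, hypothetical function and variable names, toy reaction times, and that only correctly named trials are averaged.

```python
from statistics import mean


def estroop_effect(target_trials, control_trials):
    """Mean color-naming time on target words minus mean time on neutral words.

    Each trial is a (reaction_time_ms, correct) pair; errors are excluded
    before averaging (an assumption, not a rule stated in the text above).
    """
    target_rts = [rt for rt, correct in target_trials if correct]
    control_rts = [rt for rt, correct in control_trials if correct]
    return mean(target_rts) - mean(control_rts)


# Toy data: anxiety-related target words vs. frequency-matched neutral words.
target = [(700, True), (720, True), (740, True), (900, False)]
control = [(650, True), (660, True), (670, True)]
print(estroop_effect(target, control))  # 60 ms slower on the target words
```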

URL: https://www.sciencedirect.com/science/article/pii/B9780124200715000090

History of Research into the Acute Exercise–Cognition Interaction

Terry McMorris, in Exercise-Cognition Interaction, 2016

Stroop Color Task

The Stroop color test (Stroop, 1935) requires inhibiting prepotent responses and selecting relevant sensory information. It has several variations. Sibley, Etnier, and Le Masurier (2006) provided a good example of a fairly common version of the task. In the first condition, the color naming condition, participants were presented with a string of the letter “X” (e.g., XXXXX) written in red, blue, yellow, or green ink. Participants were required to state verbally, as quickly as possible, the ink color in which the letters were written. In a second condition, the color-word interference condition, participants were shown the words “red,” “blue,” “yellow,” or “green,” but each word was written in an ink color different from the color it named. The participants were told to state the color of the ink as quickly and accurately as possible. In a third condition, the negative priming condition, the ink color of each word was the same as the color word presented on the previous item. For example, if the color word on the previous item was “blue,” the ink color of the current item would be blue. The color-word interference condition measures inhibition to some extent, because reading the word is more habitual than stating the ink color, but it is more a test of the individual’s ability to select the relevant sensory stimuli. As a result, the Stroop test is often described as a test of selective attention. The negative priming condition requires the same selective attention as the color-word interference condition but places an extra burden on inhibitory processes, as the correct response to the new stimulus is the color that the person has just inhibited in the previous answer. Speed and accuracy are the normal dependent variables.
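The three conditions described above can be generated programmatically. The Python sketch below is one hypothetical way to do it, using the four colors named in the text and a seeded random generator; the function names and seed are illustrative. The negative priming rule is implemented as described (each item's ink is the previous item's word), and each word is additionally chosen to differ from its own ink so that every item stays incongruent, which is an assumption.

```python
import random

COLORS = ("red", "blue", "yellow", "green")


def color_naming_items(n: int, rng: random.Random) -> list[tuple[str, str]]:
    """Condition 1: a string of Xs printed in a random ink color."""
    return [("XXXXX", rng.choice(COLORS)) for _ in range(n)]


def interference_items(n: int, rng: random.Random) -> list[tuple[str, str]]:
    """Condition 2: a color word printed in an ink of a different color."""
    items = []
    for _ in range(n):
        word = rng.choice(COLORS)
        ink = rng.choice([c for c in COLORS if c != word])
        items.append((word, ink))
    return items


def negative_priming_items(n: int, rng: random.Random) -> list[tuple[str, str]]:
    """Condition 3: each item's ink equals the previous item's word, i.e. the
    response the participant had to suppress on the preceding item."""
    items = []
    ink = rng.choice(COLORS)  # the first item has no predecessor
    for _ in range(n):
        word = rng.choice([c for c in COLORS if c != ink])  # keep the item incongruent
        items.append((word, ink))
        ink = word  # the next item is printed in the color just read
    return items


rng = random.Random(2006)
for word, ink in negative_priming_items(5, rng):
    print(f"{word.upper():7} in {ink}")
```

On every item after the first, the correct answer is the word the participant had just ignored, which is where the extra inhibitory load of this condition comes from.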

URL: https://www.sciencedirect.com/science/article/pii/B9780128007785000013

Three Faces of Cognitive Processes

J.P. Das, in Cognition, Intelligence, and Achievement, 2015

Construct Fidelity vs. Factor Purity

Constructs, not individual differences, define a theory. The value of individual differences is in prediction of behavior, not in explaining the construct. On the other hand, in scientific theories, explanation is the object of inquiry. Prediction is secondary; it is a bonus. “The explanatory part of a scientific theory, [is] supposedly distinct from its predictive … part” (Deutsch, 2011, p. 324). A balance between the two is hard to achieve, as we see if we consider the Attention and Planning tests.

Attention and Planning were the two additional constructs for which tests had to be selected on the basis of each construct. However, traditional psychometric principles would dictate that individual differences in Attention tests must correlate strongly with one another. The same holds for tests chosen as measures of Planning. The essential criterion for test selection has been factor purity: all attention tests should preferably load on the same factor and be distinguishable from those with high loadings on the Planning factor, although the two factors may be allowed to correlate, as long as not too highly.

The relative weight given to construct fidelity and factorial purity has constrained the choice of tasks. In regard to CAS, as discussed in detail in the foundations of PASS theory (Das et al., 1994), measures of attention should include Vigilance for sustained attention and Stroop-like tests for selective attention, in which selection of the response occurs at the point of “expression” in contrast to “receptive” attention. The auditory vigilance test that was included in earlier versions of the CAS was excluded from the final version, mainly because of a weak correlation with the other tests finally selected for the battery. Construct-wise, vigilance has a strong claim as a known test of attention. Expressive Attention (Stroop, 1935) is a test that was retained although it showed a weak correlation with the two other subtests in the Attention scale.

Planning tests should have a qualitative part: strategies observed by the examiner, and self-reported by the participants, in addition to the quantitative score (time and accuracy). A failure to consider both in determining an integrated score for the test is a mistake. But how to integrate the qualitative strategy score with the quantitative has remained problematic, especially for purposes of factor analysis.

After the CAS was commercially published (Naglieri & Das, 1997), Kranzler, Keith, and Flanagan (2000) criticized it based on the factor analysis of the tests that were included in the battery. The main point in their paper is a frequently cited criticism: are Attention and Planning two distinct factors? Kranzler et al. found only a marginal fit for the four-factor model; the Attention and Planning factors were indistinguishable.

It is the validity of constructs that is the central point in a theory. Any set of tests can replace CAS insofar as these represent the four constructs and, of course, provided the battery of tests is fairly well standardized and normed on a large sample. This was not the case in Kranzler et al. (2000). The usefulness of considering planning and attention as separate constructs in distinguishing clinical groups, as well as in application of Planning apart from Attention in management decision making has not been repudiated.

I think a high correlation between Attention and Planning factors (without input from strategy observations) is at the center of the criticism of the entire CAS (see Kranzler et al., 2000), and specifically of the suggestion that Planning and Attention are not separable. I wonder whether Kranzler et al. would have been more circumspect had they considered attention and planning as valid constructs. However, the CAS did not help in separating the two types of tests, inasmuch as some of the tests were similar.

CAS did not really have a test of an important part of the attention construct: a basic component of attention is the Orienting Response; it cannot be easily categorized as a planning behavior. Inclusion of vigilance would have strong elements of the Orienting Response that can be manipulated by instruction for arousal or inhibition (see the preceding section on history, especially our experiments on the Orienting Response). That would have added to the Stroop (Expressive Attention) as an instantiation of inhibiting a response.

Tests of attention such as the Stroop test and Vigilance, in contrast to Planning tests such as Trail Making and Crack the Code, have been found useful in diagnosis of special populations such as individuals with attention deficits and fetal alcohol syndrome. I conclude that by maintaining a distinction between Planning and Attention, we can achieve conceptual clarity and better understand special populations. In other words, we should establish their construct validity and pragmatic validity. As Dietrich (2007) said,

Their brain localizations, or neural networks, can further separate the two constructs. Early on, Luria (1966) suggested that planning and the basic attention processes are separable both at the level of cognition and at the level of the brain. Current research agrees with this. For instance, Executive Attention, which is characterized as maintaining focus and direction and reflects intention, is closer to Planning. Planning includes executive functions, judgments, decision-making, and evaluations, as we have discussed in the previous chapters. Contrast Planning with automatic attention, and selective attention that filters information even before it is perceived. These are controlled by posterior attentional network based primarily in the parietal cortex. Executive attention, on the other hand, depends on anterior attentional network located in the prefrontal cortex. (Dietrich, 2007, p. 201)

To reiterate, it is the validity of constructs that is the central point in a theory: any set of tests that represents the four constructs, and that is fairly well standardized and normed on a large sample, can replace CAS. In spite of its limitations, CAS is still a valid assessment battery. Supportive neuropsychological evidence, and the use of CAS in exploring basic psychological processes fundamental to reading and in the differential diagnosis of special populations, are reviewed briefly in the next section.


URL: https://www.sciencedirect.com/science/article/pii/B9780124103887000038

Executive Function

Martha Ann Bell, Tatiana Garcia Meza, in Encyclopedia of Infant and Early Childhood Development (Second Edition), 2020

Inhibitory Control

A classic adult task used to assess inhibitory control is the color-word Stroop test, in which participants respond to the color of the ink rather than to the written word. The task is difficult because of trials with incongruence between the word and the color of the ink, such as the word BLUE written in yellow ink. This requires adults to inhibit the natural response of “blue” and instead say “yellow”.
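For a computer-administered version, the logic of congruent and incongruent trials can be sketched as below. This is a minimal illustration, not a procedure from the chapter: the four-color set, the 50% proportion of incongruent trials, and the names `make_trial`, `make_block`, and `interference_effect` are all hypothetical. Interference is operationalized here as the mean response time on correct incongruent trials minus that on correct congruent trials, which is one common scoring choice among several.

```python
import random
from statistics import mean

# Hypothetical response set; the text's example uses blue and yellow.
COLORS = ("red", "green", "blue", "yellow")


def make_trial(word, ink):
    """One color-word trial; the correct response is always the ink color."""
    return {"word": word, "ink": ink, "congruent": word == ink, "correct_response": ink}


def make_block(n_trials, p_incongruent=0.5, seed=None):
    """Generate trials, making each one incongruent with probability p_incongruent."""
    rng = random.Random(seed)
    trials = []
    for _ in range(n_trials):
        ink = rng.choice(COLORS)
        if rng.random() < p_incongruent:
            # Incongruent: the word names a color other than the ink.
            word = rng.choice([c for c in COLORS if c != ink])
        else:
            word = ink
        trials.append(make_trial(word, ink))
    return trials


def interference_effect(results):
    """Mean RT (ms) on correct incongruent trials minus correct congruent trials.

    `results` holds (trial, response, rt_ms) tuples; error trials are dropped.
    Raises statistics.StatisticsError if either condition has no correct trials.
    """
    rts = {True: [], False: []}
    for trial, response, rt_ms in results:
        if response == trial["correct_response"]:
            rts[trial["congruent"]].append(rt_ms)
    return mean(rts[False]) - mean(rts[True])


# Made-up response times, for illustration only.
results = [
    (make_trial("red", "red"), "red", 600),
    (make_trial("blue", "yellow"), "yellow", 750),
    (make_trial("blue", "yellow"), "blue", 500),  # word was read: an error, dropped
]
print(interference_effect(results))  # 150
```

Saying “blue” to BLUE in yellow ink is scored as an error and excluded from the response-time comparison, which is where the inhibition failure described above shows up.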

This is akin to the more complex inhibitory control tasks used with children aged 3 and older, such as the day/night Stroop-like task (Diamond et al., 1997), in which children must respond “night” when shown a picture of the sun and “day” when shown a picture of the moon. Variations on the child Stroop-like tasks include grass/snow (responding to green and white cards) and the hand game (responding to a fist and a pointing finger). For these more complex inhibitory control tasks, young children are required to learn an easy rule (e.g., say “day” when shown the sun card and “night” when shown the moon card), play by the specified rule, and then learn a new rule and play by the new “silly” rule. These tasks require the child both to inhibit a prepotent response and, at the same time, to remember a set of cognitively taxing rules that tap into working memory.
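The rule reversal in the day/night task can be made explicit as two stimulus-to-response mappings. The sketch below is illustrative only: the card labels, the function `score_block`, and the definition of a “prepotent error” (giving the easy-rule answer while the silly rule is in force) are assumptions, not the published scoring scheme. The same structure would apply to grass/snow or the hand game by swapping the mappings.

```python
# Stimulus-to-response mappings for the day/night task (card names are hypothetical labels).
STANDARD_RULE = {"sun": "day", "moon": "night"}  # prepotent, "easy" mapping
SILLY_RULE = {"sun": "night", "moon": "day"}     # reversed mapping the child must use


def score_block(trials, rule):
    """Score (card, spoken_response) pairs under `rule`.

    Returns (proportion correct, prepotent errors), where a prepotent error is
    giving the standard answer on a trial where the active rule demands otherwise.
    """
    correct = 0
    prepotent_errors = 0
    for card, response in trials:
        expected = rule[card]
        prepotent = STANDARD_RULE[card]
        if response == expected:
            correct += 1
        elif expected != prepotent and response == prepotent:
            prepotent_errors += 1
    return correct / len(trials), prepotent_errors


trials = [("sun", "night"), ("moon", "day"), ("sun", "day"), ("moon", "night")]
print(score_block(trials, SILLY_RULE))     # (0.5, 2)
print(score_block(trials, STANDARD_RULE))  # (0.5, 0)
```

Separating prepotent errors from other errors reflects the point made above: a child may remember the silly rule yet still fail to inhibit the easy response.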

Complex inhibitory control tasks for toddlers include the shape Stroop test. The child is shown pictures of big and little fruits, which are labeled (big apple, little apple). Then, when shown a small fruit embedded in an incongruent big fruit, with a big version of the small fruit on the same card, toddlers are asked to point to the small version of that fruit (Kochanska et al., 1996). The reverse categorization task requires toddlers to sort big and small objects into big and small containers, respectively. They are then asked to reverse the rule and play the “silly” way, sorting big objects into the small container and small objects into the big container (Carlson et al., 2004).

It is difficult to know which complex inhibitory control task would be appropriate for infants, but the A-not-B task might be a good candidate (Cuevas et al., 2012; Cuevas et al., 2018). In this task, infants manually retrieve an object hidden at location A over successive trials and then, after the object is hidden at location B, must inhibit the prepotent response to search at location A. In this work, the classic version of the task, which involves infant manual search, was modified to be a visual search task: the infant is required to hold a rule in mind (look where the toy was hidden) and to inhibit looks to a location where a toy was previously hidden in order to find the toy in the new location (Bell, 2012). Conversely, a simple inhibitory control task during infancy would be the Freeze-Frame task (Holmboe et al., 2008), which is much like the adult antisaccade task used by Miyake et al. (2000). In the antisaccade task, adults are instructed to inhibit looking at an initial cue on one side of a computer screen in order to correctly identify a quickly disappearing target on the other side of the screen. In Freeze-Frame, the infant must inhibit looking to a distractor in the periphery so that a colorful animation remains at the central location on the computer screen.
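The A-not-B trial structure and its characteristic error can be sketched as follows. This is a hypothetical illustration: the function names, the single B trial by default, and the three-way labeling are assumptions made for clarity. The `searched` values can stand for either where the infant reaches (manual version) or where the infant first looks (visual version).

```python
def a_not_b_sequence(n_a_trials, n_b_trials=1):
    """Hiding locations: A on successive trials, then B."""
    return ["A"] * n_a_trials + ["B"] * n_b_trials


def classify_searches(hidden, searched):
    """Label each trial as 'correct', 'perseverative', or 'other error'.

    A perseverative error is a search at the previous hiding location
    after the object has been moved to a new location.
    """
    labels = []
    previous = None
    for hidden_at, searched_at in zip(hidden, searched):
        if searched_at == hidden_at:
            labels.append("correct")
        elif previous is not None and previous != hidden_at and searched_at == previous:
            labels.append("perseverative")
        else:
            labels.append("other error")
        previous = hidden_at
    return labels


sequence = a_not_b_sequence(3)  # ['A', 'A', 'A', 'B']
print(classify_searches(sequence, ["A", "A", "A", "A"]))
# ['correct', 'correct', 'correct', 'perseverative']
```

The perseverative label captures exactly the failure to inhibit the prepotent search at A once the object is hidden at B.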

Simple inhibitory control tasks for toddlers and preschoolers include delay tasks. The best-known simple inhibitory control task is the delay of gratification task, or “marshmallow task”. The child is instructed to wait for a specified amount of time to receive a reward stimulus, such as a snack treat or a prize, and is given the option to ring a bell to receive the smaller reward if unable to wait (Mischel et al., 1973). Other tasks used to measure simple inhibitory control include gift delay (Kochanska et al., 2000), in which the child is instructed not to look while the experimenter wraps an attractive gift and then leaves the room to get a bow for the gift. In snack delay (Kochanska et al., 1996), a snack is placed under a clear cup and the child is instructed to wait to eat until the bell is rung. Because simple inhibitory control tasks do not require working memory (the reward remains in view), they can be considered pure inhibition tasks.


URL: https://www.sciencedirect.com/science/article/pii/B9780128093245237486

Dementias and the Frontal Lobes

Michał Harciarek, … Anna Barczak, in Executive Functions in Health and Disease, 2017

Assessment of Executive Function in Dementia

More than 60% of patients with dementia cannot complete standard executive measures such as the Stroop test or the TMT-B (Enright et al., 2015). Executive tasks for use in a dementia clinic are chosen for their simplicity and their minimal reliance on basic cognitive processes, such as language, visuospatial, and memory functions. The task instructions should be short, straightforward, and easy to remember, and the test material needs to be easy to handle. In patients with movement disorders, accuracy scores should not be time-dependent. Examples of tests/tasks of executive processes feasible in a dementia clinic are provided in Table 19.2. Of note, whereas most traditional executive tasks engage mostly dorsolateral frontal pathways, the presence of environmental dependency syndrome (EDS), frequently seen in both bvFTD (Ghosh, Dutt, Bhargava, & Snowden, 2013) and PSP (Ghika, Tennis, Growdon, Hoffman, & Johnson, 1995), may alert the clinician to the potential involvement of other frontal areas (i.e., mesial, orbitofrontal, frontostriatal, or frontothalamic tracts) (Archibald, Mateer, & Kerns, 2001). Importantly, both EDS and disinhibition may be examples of environmentally driven rather than internally generated patterns of behavior.

Table 19.2. Assessment of Executive Function in Dementia

| Observed/Assessed Aspect of Executive Function | Observable Sign/Psychometric Test/Clinical Task |
|---|---|
| Inhibition | |
| Initiation and generation | |
| Mental set-shifting | |
| Planning and sequencing | |
| Executive screening tasks | |
| Proxy report of executive/behavioral symptoms in daily life | |

BRIEF-2, Behavior Rating Inventory of Executive Function-2; CBI, Cambridge Behavioural Inventory; DEX, Dysexecutive Questionnaire; D-KEFS, Delis–Kaplan Executive Function System; FBI, Frontal Behavioral Inventory; FrSBe, The Frontal Systems Behavior Scale; INECO, The Institute of Cognitive Neurology; IRSPC, Iowa Rating Scale for Personality Change; NPI, Neuropsychiatric Inventory; TMT, Trail Making Test; WCST, Wisconsin Card Sorting Test.

Assessment of motor impersistence can also sometimes be useful for differential diagnosis. For instance, inability to sustain tongue protrusion (>10 s) is one of the typical signs of HD. In the context of predominant psychiatric symptoms and a lack of family history, the presence of this sign should alert the clinician to look for other HD symptoms.


URL: https://www.sciencedirect.com/science/article/pii/B9780128036761000192

Neuropsychological Functioning, Assessment of

J. Uekermann, I. Daum, in International Encyclopedia of the Social & Behavioral Sciences, 2001

2.6 Executive Functions

Frequently used tests of executive functions are the ‘Wisconsin Card Sorting Test,’ the ‘Stroop test’ (see Lezak 1995), and the recently developed ‘Hayling Test’ (Burgess and Shallice 1997). The ‘Wisconsin Card Sorting Test’ requires the detection of sorting rules (color, shape, and number of elements) and the modification of such rules in response to feedback. The ‘Stroop Test’ and the ‘Hayling Test’ assess the ability to suppress habitual responses. In the Stroop test, the ink color of color words printed in a different color has to be named aloud, and the habitual tendency to read the written word has to be inhibited (e.g., saying ‘green’ for the word ‘red’ printed in green). The Hayling Test requires the completion of sentences with unrelated words; obvious, semantically related completions have to be suppressed. Further tests of executive function involve planning or problem solving within the context of complex puzzles such as the ‘Tower of London Task’ (see Lezak 1995).
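The feedback loop behind the ‘Wisconsin Card Sorting Test’ can be illustrated with a small examiner object that says “right” or “wrong” and silently changes the sorting rule. This is a sketch under stated assumptions, not the published administration procedure: the class and method names are hypothetical, the rule is assumed to cycle through color, shape, and number in a fixed order, and the default of 10 consecutive correct sorts before a shift is an assumption chosen for illustration.

```python
from dataclasses import dataclass

# Sorting dimensions named in the text.
RULES = ("color", "shape", "number")


@dataclass
class Card:
    color: str
    shape: str
    number: int


class CardSortingExaminer:
    """Gives right/wrong feedback and silently shifts the sorting rule.

    Assumptions: the rule changes after `shift_after` consecutive correct sorts
    and cycles through RULES in a fixed order.
    """

    def __init__(self, shift_after=10):
        self.shift_after = shift_after
        self.rule_index = 0
        self.run = 0  # current streak of consecutive correct sorts

    @property
    def rule(self):
        return RULES[self.rule_index % len(RULES)]

    def sort(self, card, key_card):
        """Return True ('right') if the card matches key_card on the active rule."""
        correct = getattr(card, self.rule) == getattr(key_card, self.rule)
        self.run = self.run + 1 if correct else 0
        if self.run == self.shift_after:
            self.rule_index += 1  # shift without telling the participant
            self.run = 0
        return correct


examiner = CardSortingExaminer(shift_after=2)
card, key = Card("red", "star", 1), Card("red", "circle", 2)
print([examiner.sort(card, key) for _ in range(3)])  # [True, True, False]
print(examiner.rule)  # shape
```

A participant who keeps sorting by color after the hidden shift receives “wrong” feedback; detecting that and switching dimensions is the rule modification the test is designed to probe.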


URL: https://www.sciencedirect.com/science/article/pii/B008043076701295X

Frontal Lobe

Lada A. Kemenoff, … Joel H. Kramer, in Encyclopedia of the Human Brain, 2002

II.C Response Inhibition

Another important aspect of executive functioning is the ability to inhibit responses to established patterns of behavior. The Stroop Test is considered a measure of a person’s ability to inhibit a habitual response in favor of an unusual one. During the interference condition of the Stroop, subjects are presented with a list of color words (blue, green, red, etc.) printed in nonmatching colored ink. For example, the word blue may be printed in red ink, and the word green may be printed in blue ink. The subject’s task is to name the ink color in which the words are printed as quickly as possible. This challenge involves suppressing the strong inclination to read the color name. Many patients with frontal lobe damage are unable to inhibit reading the words and thus show impairment on this task.


URL: https://www.sciencedirect.com/science/article/pii/B0122272102001485

Varieties of Self-Control

Michael Beran, in Self-Control in Animals and People, 2018

Cognitive Control Tasks

On the “cognitive” side of things, tasks of so-called executive functions, which reflect control mechanisms, involve inhibition. Perhaps the most famous is the Stroop test (Stroop, 1935), in which subjects must respond to stimuli on the basis of properties other than those that are typically most relevant and more readily apparent, such as trying to name the color of the letters in a word rather than read the color name that the word spells (e.g., responding “Green” when seeing the word RED printed in green). Stroop tests have been used in thousands of studies with humans (see MacLeod, 1991), and in some studies with animals (e.g., Beran, Washburn, & Rumbaugh, 2007; Washburn, 1994), to measure control processes and inhibition. Similar tests such as Go/No-Go tasks involve stopping oneself from making a typical response when a specific kind of cue is experienced (Nosek & Banaji, 2001). Flanker tests involve ignoring cues that are highly salient but distract from the response goal (e.g., having to respond “right” when the central arrow in a row of arrows points right but all the others point to the left; Eriksen & Eriksen, 1974). Even performing mazes or the Tower of Hanoi task (Simon & Hayes, 1976; Simon, 1975), which require anticipating future moves and recognizing points where one must move away from what appears to be a closer approximation of the goal in order to actually get closer to it, does not require self-control, but does require self-regulation and inhibition. Thus, cognitive tasks such as these also tap into basic mechanisms that are relevant to self-control choices, even if they are not self-control tasks themselves. The important point here is that one must be careful not to conflate all of these simply because a subject in one of these tests inhibits responding at the right moment in pursuit of a goal.
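The flanker example in the passage (respond “right” to a central right-pointing arrow surrounded by left-pointing arrows) and the Go/No-Go logic can both be sketched as simple trial functions. Everything here is hypothetical scaffolding for illustration: the `<`/`>` characters, the five-arrow row, the function names, and the hit/miss/commission-error labels for Go/No-Go outcomes are assumptions rather than details given in the source.

```python
ARROWS = {"left": "<", "right": ">"}


def flanker_stimulus(target, flanker, n_per_side=2):
    """Build an arrow row such as '<<><<' with the target in the center."""
    side = ARROWS[flanker] * n_per_side
    return side + ARROWS[target] + side


def flanker_correct_response(stimulus):
    """Only the central arrow matters; the salient flankers must be ignored."""
    return "right" if stimulus[len(stimulus) // 2] == ">" else "left"


def is_congruent(stimulus):
    """Congruent rows point all one way; incongruent rows mix directions."""
    return len(set(stimulus)) == 1


def go_nogo_outcome(is_go_trial, responded):
    """Classify one Go/No-Go trial; responding on a no-go trial is the inhibition failure."""
    if is_go_trial:
        return "hit" if responded else "miss"
    return "commission error" if responded else "correct rejection"


stim = flanker_stimulus("right", "left")
print(stim, flanker_correct_response(stim), is_congruent(stim))  # <<><< right False
```

In both sketches the correct behavior depends on withholding the response that the most salient cue invites, which is the shared inhibitory component the passage cautions against treating as self-control itself.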